This article is an interpretation of Datadog's report on serverless (Source: Datadog)

Every new technology attracts its fair share of advocates and skeptics, and serverless is no exception. As its name suggests, the main goal of serverless technology is to abstract server management away from cloud computing, allowing users to focus fully on application development and produce more business value. In addition, customers pay only for what they use rather than for computing resources that are kept resident.

Of course, there is no "one-size-fits-all" technology for every business. For instance, serverless technology can make testing and debugging more challenging because of the lack of visibility into backend processes. A poor implementation of serverless can also introduce new security concerns. Despite these concerns, more and more businesses are adopting serverless in their architecture. Furthermore, as serverless offerings mature, the technical barrier will fall, allowing businesses to adopt the technology more easily.

In this blog post, we will analyze Datadog's report on serverless to better understand how this technology is being applied in the real world.

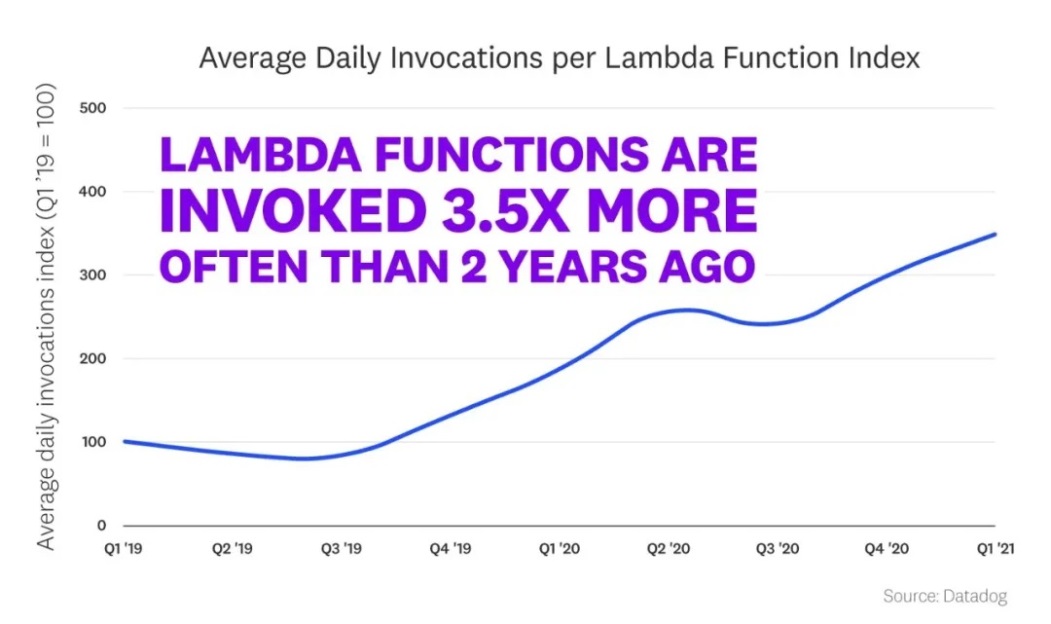

Lambda is called 3.5 times more frequently than two years ago and runs for an average of 900 hours per day.

Enterprises that use Lambda have greatly increased their usage since 2019. By the beginning of 2021, these companies were invoking functions an average of 3.5 times more often per day than two years earlier. In addition, within the same group of Lambda users, each enterprise's functions ran for a combined average of 900 hours per day.

Cloud servers in ordinary cloud environments are billed based on the leased configuration and the lease duration. The configuration is priced by vCPU and memory.

By contrast, Alibaba Cloud Function Compute is charged based on the number of calls and the function execution duration. These two metrics therefore measure how much customers use Serverless. The report does not provide the absolute number of daily calls, but we can estimate the range of customers' Serverless consumption from the 900 hours of running time per day.

Based on the billing standards of Alibaba Cloud Function Compute, the estimate under the pre-pay method is as follows:

For an instance with 1 GB of memory, the cost is 0.00003167 RMB per second. Running 900 hours per day with 1 GB of memory costs about 102.6 RMB (900 × 3,600 seconds × 0.00003167 RMB), or roughly 37,000 RMB per year. Together with spending on storage, network, security, database, and other cloud products, this is comparable to the cloud expenditure of a medium-sized enterprise. The cost generated by the number of function calls is usually not high, especially for applications related to AI modeling, such as those written in Python. The cost of 1 million calls a day in Alibaba Cloud Function Compute is 13.3 RMB.
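The estimate above can be reproduced with a few lines of Python. This is only a sketch of the arithmetic: the price and the 900 hours come from the text, while the function name and the assumption that every instance uses exactly 1 GB of memory are illustrative.

```python
# Figures taken from the paragraph above; the 1 GB instance size is an assumption.
PRICE_PER_GB_SECOND_RMB = 0.00003167   # quoted price for 1 GB of memory
RUNNING_HOURS_PER_DAY = 900            # average running time from the report
MEMORY_GB = 1                          # assumed memory per instance


def daily_duration_cost(hours: float, memory_gb: float, price: float) -> float:
    """Return the execution-duration cost in RMB for one day."""
    seconds = hours * 3600
    return seconds * memory_gb * price


daily = daily_duration_cost(RUNNING_HOURS_PER_DAY, MEMORY_GB, PRICE_PER_GB_SECOND_RMB)
annual = daily * 365

print(f"daily:  {daily:.2f} RMB")
print(f"annual: {annual:.0f} RMB")
```

Doubling the assumed memory size doubles the result, so the same function can be used to bracket a range of likely spending.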

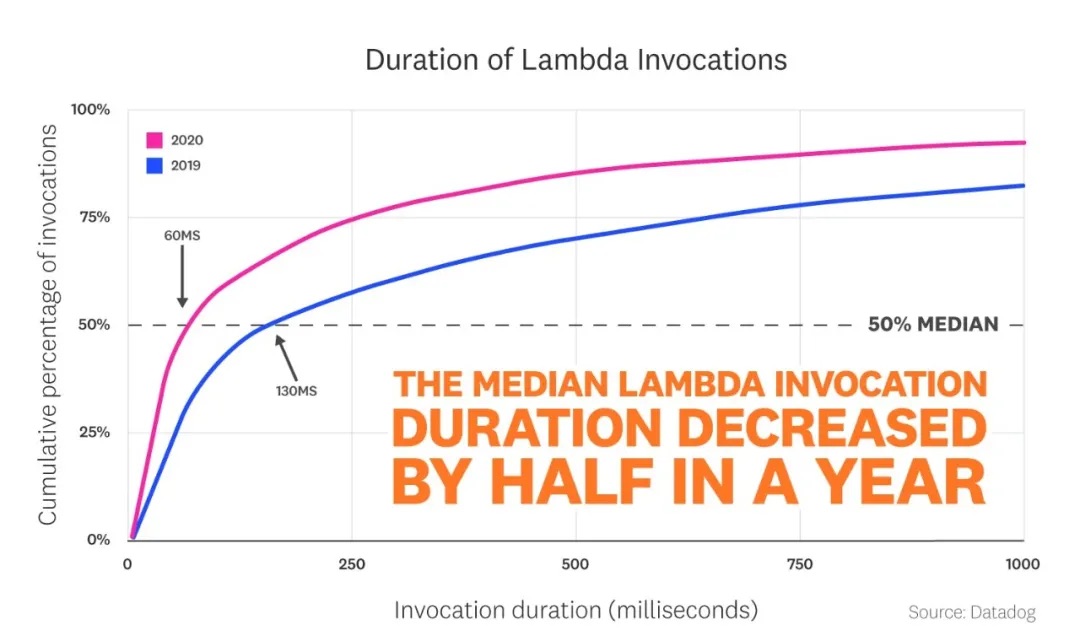

The median Lambda execution time is 60 ms, half of what it was a year earlier.

Function execution time is a new concept in the FaaS field. Because FaaS is an event-driven architecture, computing resources are allocated to run a function, and fees are incurred, only when the function is actually triggered. The longer the function runs, the higher the cost. Cloud servers, unlike Function Compute, are billed based on the number of servers rented. Although cloud servers provide auto scaling, they are not event-driven, which restricts how their scaling rules can be defined.

Cloud servers are typically billed by the second, while industry FaaS products such as Lambda and Alibaba Cloud Function Compute support millisecond-based billing. The finer the billing granularity, the more room there is to optimize computing cost.
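To see why granularity matters, consider a function that runs for the 60 ms median mentioned in this article. The sketch below assumes that billed time is simply rounded up to the next billing increment; the three increments are illustrative and are not taken from any vendor's price list.

```python
import math


def billed_ms(duration_ms: float, granularity_ms: int) -> int:
    """Round an execution time up to the next billing increment."""
    return math.ceil(duration_ms / granularity_ms) * granularity_ms


MEDIAN_DURATION_MS = 60  # median Lambda execution time cited in this article

for granularity in (1000, 100, 1):  # per second, per 100 ms, per millisecond
    billed = billed_ms(MEDIAN_DURATION_MS, granularity)
    overhead = billed / MEDIAN_DURATION_MS
    print(f"granularity {granularity:>4} ms -> billed {billed:>4} ms ({overhead:.1f}x actual)")
```

Under these assumptions, shaving a few milliseconds off a function only lowers the bill when the billing increment is small enough to notice the difference.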

The data shows that more and more AWS customers are applying cost-optimization best practices to shorten function execution time and further reduce computing cost, maximizing the cost advantage of Serverless.

In the following figure, the tail of the function execution time distribution is very long, indicating that Lambda supports not only short-running jobs but also compute-intensive use cases.

Although the report does not describe any compute-intensive business scenarios where Lambda is used, the showcase cases published by cloud vendors in China suggest that the main scenarios are audio and video processing, AI modeling, and so on.

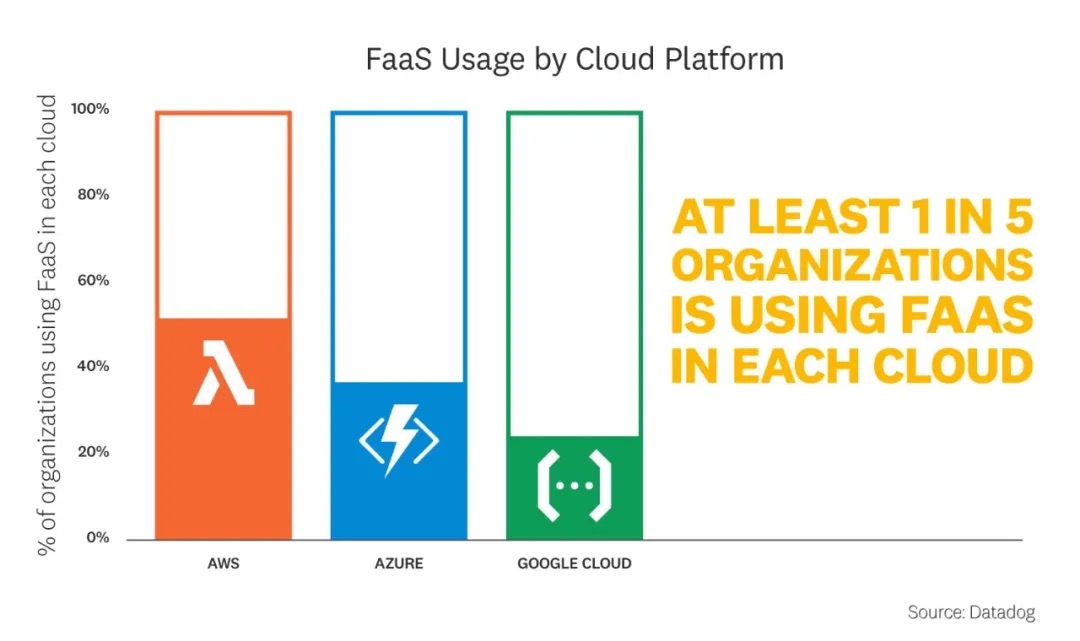

In addition to AWS Lambda, Azure Functions and Google Cloud Functions are also growing rapidly.

AWS Lambda is one of the earliest FaaS products; Azure and Google introduced their FaaS products later. Their growth may benefit from the maturity of the entire industry: the share of Azure customers who use Azure Functions rose from 20% a year ago to 36% now, while 25% of Google Cloud customers already use Cloud Functions.

In this section, the report cites the case of Vercel:

Vercel is a very practical website management and hosting tool. It can quickly deploy websites and apps without purchasing servers or domain names, installing and configuring Nginx, uploading website files, adding DNS records, or configuring web certificates. Most importantly, personal use is permanently free of charge.

Vercel manages applications with low coupling. Its business model clearly makes full use of the cost advantage of Serverless technology to minimize the server cost of free individual users. Functions are used to handle server-side rendering and API routing requests. In the past year, the number of Vercel function invocations per month increased from 262 million to 7.4 billion, a 28-fold increase.

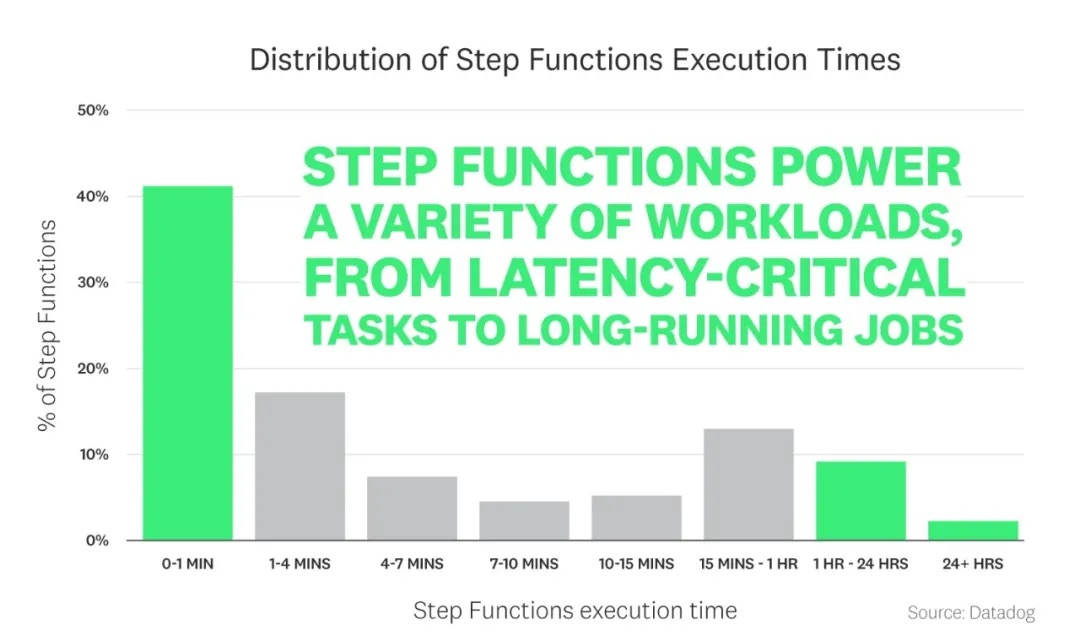

AWS Step Functions is the best companion of Lambda. On average, each workflow contains 4 functions, and this number is increasing month by month.

A complete piece of business logic requires multiple functions, and sometimes other computing units such as elastic computing and batch computing. Workflow orchestration allows distributed computing tasks to be arranged in sequence, in branches, or in parallel, which simplifies tedious coding. It also provides visual monitoring of the status of each step in the entire business process, killing two birds with one stone. AWS Step Functions provides exactly this capability.

The report shows that the average Step Functions workflow contains 4 Lambda functions, and that this number is growing month by month. This shows that more and more customers are using workflows to handle increasingly complex business logic. Workflows with an execution time of 1 minute account for 40%, but some workflows run for more than 1 hour or even more than 1 day, mainly for AI modeling.
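As an illustration of sequential, branching, and parallel orchestration, the following sketch builds a small Step Functions definition in Amazon States Language as a Python dictionary. The order-processing scenario, state names, function names, and the ARN prefix (account ID and region) are all hypothetical; the example only aims to show the shape of a four-function workflow, not a production design.

```python
import json

# Placeholder ARN prefix: the account ID, region, and function names are hypothetical.
ARN = "arn:aws:lambda:us-east-1:123456789012:function:"


def task(function_name, next_state=None):
    """Build a Task state that invokes one Lambda function."""
    state = {"Type": "Task", "Resource": ARN + function_name}
    if next_state:
        state["Next"] = next_state
    else:
        state["End"] = True
    return state


workflow = {
    "Comment": "Illustrative workflow: sequence, branch, and parallel steps",
    "StartAt": "ValidateOrder",
    "States": {
        "ValidateOrder": task("validate-order", "IsValid"),          # sequence
        "IsValid": {                                                  # branch
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.valid", "BooleanEquals": True, "Next": "Fulfil"}
            ],
            "Default": "Rejected",
        },
        "Fulfil": {                                                   # parallel
            "Type": "Parallel",
            "Branches": [
                {"StartAt": "ChargeCard", "States": {"ChargeCard": task("charge-card")}},
                {"StartAt": "ReserveStock", "States": {"ReserveStock": task("reserve-stock")}},
            ],
            "Next": "SendReceipt",
        },
        "SendReceipt": task("send-receipt"),
        "Rejected": {"Type": "Fail", "Error": "InvalidOrder"},
    },
}


def count_tasks(states):
    """Count Task states, descending into Parallel branches."""
    total = 0
    for state in states.values():
        if state["Type"] == "Task":
            total += 1
        elif state["Type"] == "Parallel":
            total += sum(count_tasks(branch["States"]) for branch in state["Branches"])
    return total


print(json.dumps(workflow, indent=2))
print("Lambda tasks:", count_tasks(workflow["States"]))
```

The printed JSON is the kind of document a workflow service consumes; the branching and parallelism live in the definition rather than in the function code.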

In this section, the report refers to Stedi, an enterprise that provides a structured messaging service for B2B transactions, such as pushing marketing emails. This type of business scenario is event-triggered and can reach a large number of target mailboxes in a short period of time. Therefore, Serverless combined with workflows meets customers' requirements for cost optimization, development efficiency, and O&M efficiency.

A quarter of AWS CloudFront customers use Lambda@Edge.

Lambda@Edge is a feature of Amazon CloudFront that allows customers to run code close to application users, improving performance and reducing latency. With Lambda@Edge, customers do not need to provision or manage infrastructure in multiple locations around the world. They pay only for the computing time used, and no cost is incurred when the code is not running.
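For readers who have not seen one, a Lambda@Edge function is an ordinary handler that receives a CloudFront event. The following minimal sketch shows a viewer-request handler in Python that tags the request with a header and passes it on. The header name is hypothetical, and the sample event is heavily trimmed for local testing; a real CloudFront event carries many more fields.

```python
def handler(event, context):
    """Viewer-request handler: tag the request and pass it on to CloudFront."""
    request = event["Records"][0]["cf"]["request"]
    # CloudFront headers are keyed by lowercase name; each value is a list of
    # key/value pairs. "X-Edge-Processed" is a hypothetical header name.
    request["headers"]["x-edge-processed"] = [
        {"key": "X-Edge-Processed", "value": "true"}
    ]
    return request


# Local smoke test with a trimmed-down event
sample_event = {
    "Records": [
        {"cf": {"request": {"uri": "/index.html", "method": "GET", "headers": {}}}}
    ]
}
result = handler(sample_event, None)
print(result["headers"]["x-edge-processed"][0]["value"])
```

Because the handler returns the request object, CloudFront continues processing it; returning a response object instead would answer the viewer directly from the edge.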

This means that in edge scenarios, Serverless computing capabilities are delivered to customers from the network itself, without calling computing power in the cloud, which improves the customer experience for latency-sensitive edge services. Examples include video monitoring, intelligent analysis, and business monitoring and analysis in IoT scenarios.

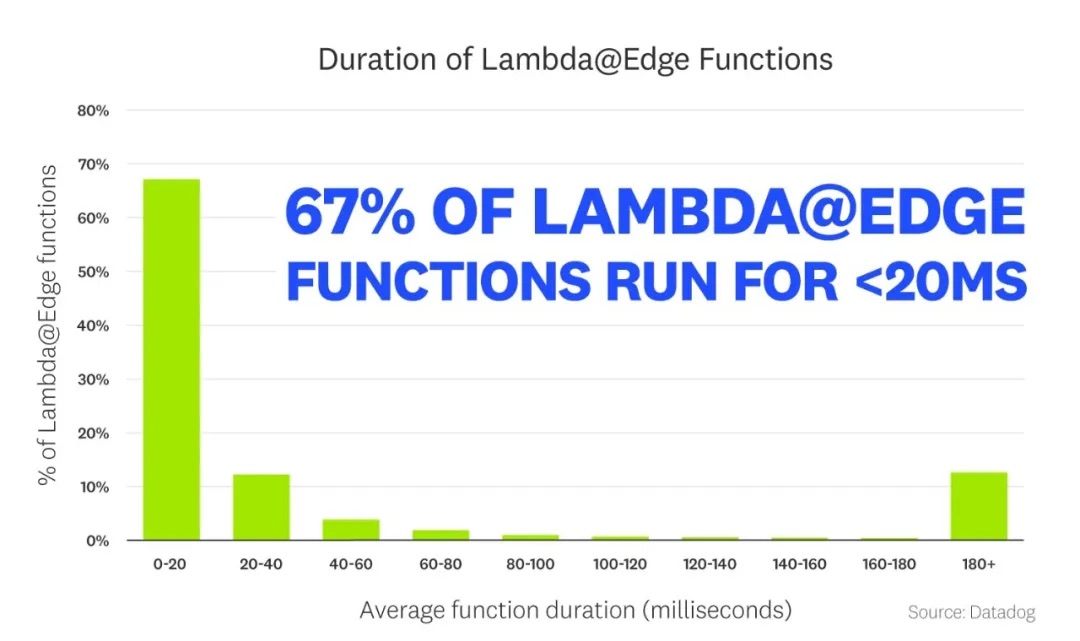

The report shows that a quarter of Amazon CloudFront customers use Lambda@Edge, with function execution times of less than 20 milliseconds in 67% of cases, indicating that these applications are sensitive to latency. The more such services are needed, the greater the potential of Serverless at the edge.

For more than half of customers' function reserved instances, less than 80% of the resources are used.

In Java and .NET runtimes, application startup is slower because, in the case of Java, the virtual machine (JVM) must be initialized and a large number of classes loaded into memory before user code executes. There are many possible approaches to cold start optimization, such as reserving function instances, or speeding up container image pulls through on-demand loading and more efficient storage and algorithms.
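The cold start effect can be imitated locally. The sketch below is a Python analogue rather than a JVM measurement: a module-level variable stands in for state that survives while an instance stays warm, and a 0.2-second sleep (an arbitrary value) stands in for runtime and class loading. All names are hypothetical.

```python
import time

_model = None  # stays in memory for as long as the function instance is kept warm


def _load_model():
    """Stand-in for slow start-up work such as loading classes or a large model."""
    time.sleep(0.2)  # simulated initialization delay (arbitrary value)
    return {"ready": True}


def handler(event, context=None):
    """Report whether this invocation had to pay the initialization cost."""
    global _model
    cold = _model is None
    start = time.perf_counter()
    if cold:
        _model = _load_model()  # paid only on the first call to this instance
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {"cold_start": cold, "init_ms": round(elapsed_ms)}


first = handler({})
second = handler({})
print(first)
print(second)
```

A reserved instance corresponds to keeping `_model` loaded before the first request arrives, which is why reservation hides the delay without removing the underlying initialization work.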

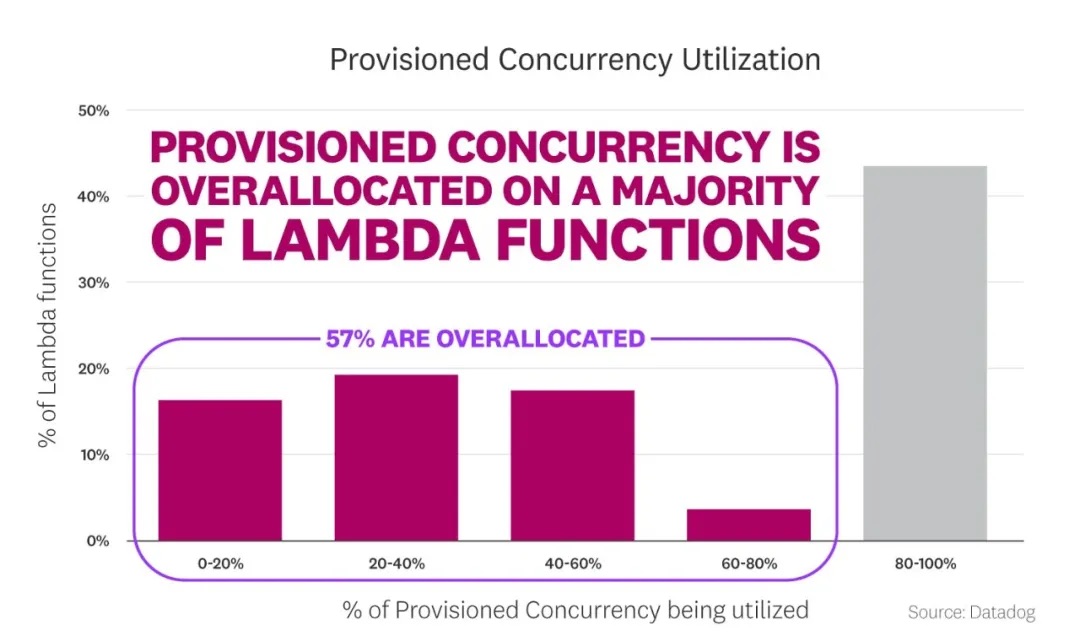

In essence, function reservation is a very effective way to avoid cold starts, but it does not solve the cold start problem at its root. Reserving more resources means more waste, which runs counter to the on-demand usage that Serverless advocates. Therefore, this year's report pays close attention to the usage of function reserved instances.

The report shows that 57% of customers who use function reserved instances use less than 80% of them, and more than 30% use less than 40%. More than 40% use 80% to 100%, which means they may still be troubled by the cold start problem. Therefore, optimizing the design of reserved instances remains a challenge for vendors and customers, and the relevant best practices offer valuable guidance.
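A team can apply the same lens to its own functions by comparing how many reserved instances are actually in use with how many are reserved. The sketch below groups utilization into the ranges discussed above; the function names and instance counts are made-up sample data, not figures from the report.

```python
def utilization_bucket(used: float, reserved: float) -> str:
    """Classify reserved-instance utilization into the ranges the report discusses."""
    if reserved <= 0:
        raise ValueError("reserved must be positive")
    ratio = used / reserved
    if ratio < 0.4:
        return "under 40%"
    if ratio < 0.8:
        return "40% to 80%"
    return "80% to 100%"


# Hypothetical functions: (average instances in use, instances reserved)
fleet = {"thumbnailer": (3, 10), "checkout-api": (6, 10), "search": (9, 10)}

for name, (used, reserved) in fleet.items():
    idle = reserved - used
    print(f"{name}: {utilization_bucket(used, reserved)}, {idle} idle reserved instances")
```

Functions in the lowest range are candidates for a smaller reservation, while those near 100% may still hit cold starts during traffic peaks.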

If you would like to learn more, you can refer to our best practices for minimizing Function Compute costs.

The open-source Serverless Framework is the main way to deploy function applications.

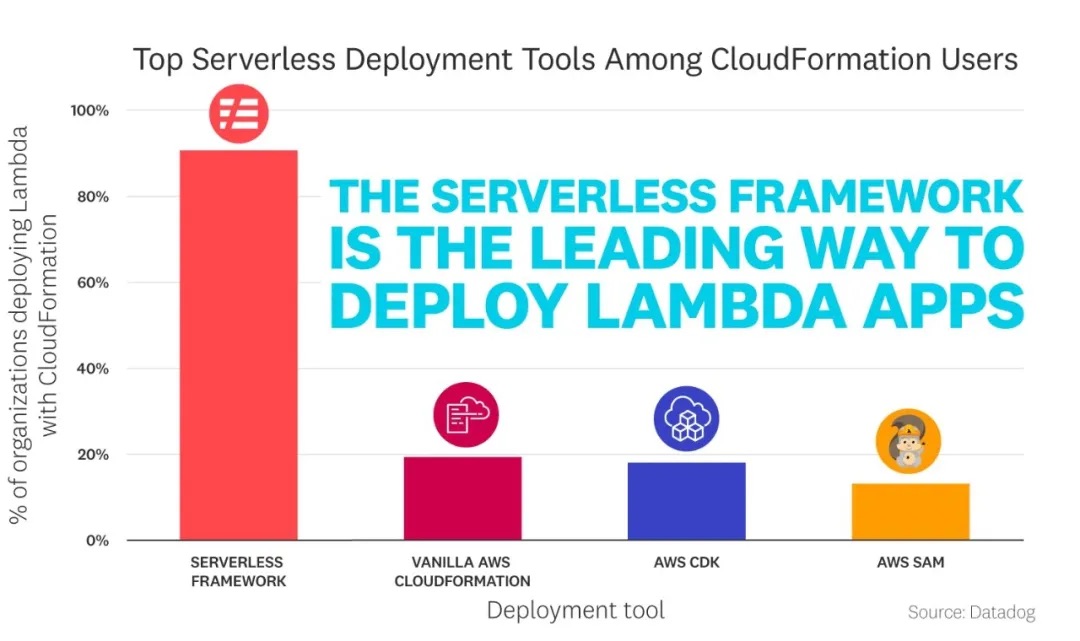

In a Serverless architecture, manually deploying a few functions is not very complex. When an application grows to dozens or hundreds of functions, however, deployment becomes many times more difficult. Deployment tools improve efficiency here, just as Kubernetes, now an essential tool for managing containers, is used to automatically deploy, scale, and manage containerized applications.

The report shows that more than 80% of customers use the Serverless Framework to deploy and manage function applications. Although the report does not give a reason, it is probably due to the usability, openness, and community of the Serverless Framework.

The report also predicts that infrastructure-as-code deployment tools will play a more important role in the large-scale deployment of Serverless applications. AWS has three deployment tools of its own: vanilla CloudFormation, the AWS CDK, and AWS SAM, with usage rates of 19%, 18%, and 13% respectively. (Some customers use two or more tools at the same time, so the combined usage across all tools exceeds 100%.)

In China, Alibaba Cloud and its peers have all provided their own closed-source deployment tools. For instance, Tencent Cloud has worked with the Serverless Framework to develop a Serverless application center, and Alibaba Cloud released Serverless Devs last year to support the deployment, O&M, and monitoring of function applications. Midway, an open-source project that provides a JavaScript development framework, has won more than 4,000 stars. As more developers take part in Serverless development, they will bring new energy to the domestic ecosystem of open-source tools.

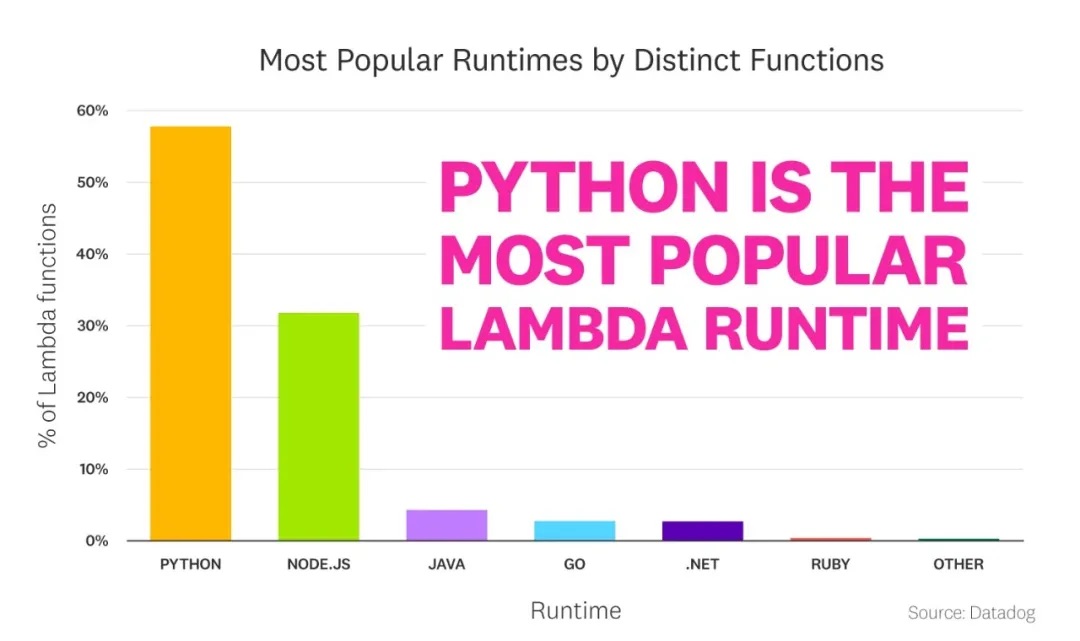

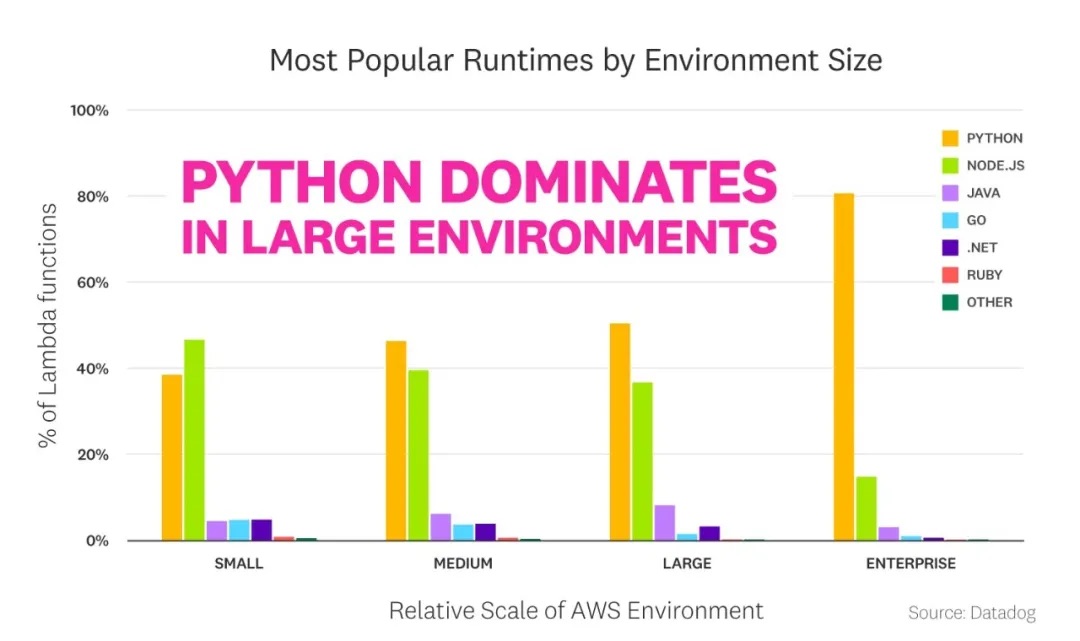

Python is the most popular Lambda runtime, especially in large environments.

The report shows that 58% of users use Python, 31% use Node.js, and less than 10% use Java, Go, .NET Core, or Ruby. However, given the characteristics of different vendors, the share of Java on Alibaba Cloud may be higher, and .NET is likely to be more popular among Azure customers.

What's interesting is that in small Lambda environments, Node.js is more popular than Python. As the number of functions grows, Python becomes more and more popular, to the point where it is used four times as frequently as Node.js.

Leijuan shared an analysis of the report's programming language findings on the Alibaba Cloud intranet: large enterprises use Python more for big data and AI, which require a large number of Lambda functions, so Python has an absolute advantage in the number of functions. Node.js applications do not need such heavy runtime resources (multi-core CPU and large memory). They usually run on small instances or belong to individual Node.js developers, who tend to choose a small Lambda environment as well.

Furthermore, the usage distribution of programming language versions is as follows, in decreasing order: Python 3.x, Node.js 12, Node.js 10, Python 2.7, Java 8, Go 1.x, .NET Core 2.1, and .NET Core 3.1.

On the whole, the use of Serverless outside China has expanded rapidly compared with last year. Total function running time keeps increasing, usage patterns are becoming more mature, and the tools for developers are becoming more open.

In China, Serverless is no longer restricted to offline tasks or low-coupling applications. Many enterprise customers have applied Serverless to core procedures in production. For example, Century Mart has deployed core applications such as its transaction system, membership system, inventory system, backend system, and promotion modules on Function Compute to reduce its investment in infrastructure. Xianyu has already carried out a Serverless-based transformation of traditional giant applications, overcoming industry challenges such as code reuse between functions and unified upgrades of function dependencies.

Although it takes time to transform applications, I believe that more and more enterprise customers will still choose Serverless for more convenience.
