Machine learning has developed rapidly in recent years and is now used across many industries, bringing both challenges and opportunities for practitioners. Deep learning frameworks such as TensorFlow and PyTorch let developers build and deploy machine learning applications quickly. At the same time, advances in cloud computing have led many companies to migrate their development and production services to the cloud, which offers advantages over traditional platforms in computing cost and scalability.

Cloud platforms usually separate compute from storage to achieve elasticity and cost savings. For example, building a data lake on object storage allows enterprises to store large amounts of data cost-effectively. A data lake is especially well suited to storing training data for machine learning.

Storing training data in a data lake has the following benefits:

1) There is no need to synchronize data to the training node in advance. Traditionally, the data must first be copied to the local disk of the computing node. With Alibaba Cloud Object Storage Service (OSS), training jobs can read the data directly, which minimizes preparatory work.

2) A data lake can store a larger amount of training data. The storage capacity is no longer limited to the local disk of the computing node. For deep learning, in fact, more training data may contribute to better training results.

3) You can elastically scale computing resources to save costs. Machine learning generally relies on CPUs with many cores or on high-end GPUs, both of which are relatively expensive, whereas OSS storage is relatively cheap. Storing training data in a data lake decouples the data from the computing resources, so you pay only for the computing resources you actually use and can release them when they are no longer needed.

However, this method still faces many problems and challenges:

1) The latency and bandwidth of remote data pulls do not scale with computing resources. Hardware computing capabilities keep improving, and GPUs provide ever faster training. Alibaba Cloud's ECS and container services can schedule large-scale computing resources quickly. Access to OSS, however, goes over the network. Although high-speed networks are now available for accessing object storage, its network latency and bandwidth do not grow in step with the computing resources and can become the bottleneck for training speed. Accessing data efficiently in a compute-storage separated environment is therefore a significant challenge.

2) More convenient and widely supported data access methods are required. TensorFlow and other deep learning frameworks support GCS and HDFS, but their support for many third-party object storage services is insufficient. A POSIX (Portable Operating System Interface) file interface is a more natural way to access data because it behaves like a local disk, which greatly simplifies adapting training code to the storage system.
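To make the first challenge concrete, here is a back-of-the-envelope sketch. It assumes that compute time divides evenly across GPUs while the link to object storage stays fixed. The 8,000 GPU-seconds per epoch and the 5 Gbit/s link are made-up figures for illustration; only the 144 GB dataset size comes from the job described later in this article.

```python
def epoch_seconds(compute_gpu_seconds, num_gpus, dataset_gb, bandwidth_gbit_s):
    """Estimate one epoch as the slower of compute and remote data reading.

    Assumes compute scales linearly with GPU count and that reading
    overlaps perfectly with compute (both are simplifications).
    """
    compute_time = compute_gpu_seconds / num_gpus
    read_time = dataset_gb * 8 / bandwidth_gbit_s  # GB -> Gbit
    return max(compute_time, read_time)


# Hypothetical workload: 8,000 GPU-seconds of compute per epoch,
# a 144 GB dataset, and a fixed 5 Gbit/s link to object storage.
for gpus in (8, 32, 64):
    print(gpus, "GPUs:", round(epoch_seconds(8000, gpus, 144, 5.0), 1), "s")
```

Under these assumptions, going from 32 to 64 GPUs barely helps: the epoch is then bounded by the roughly 230 seconds needed to pull the data, not by compute.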

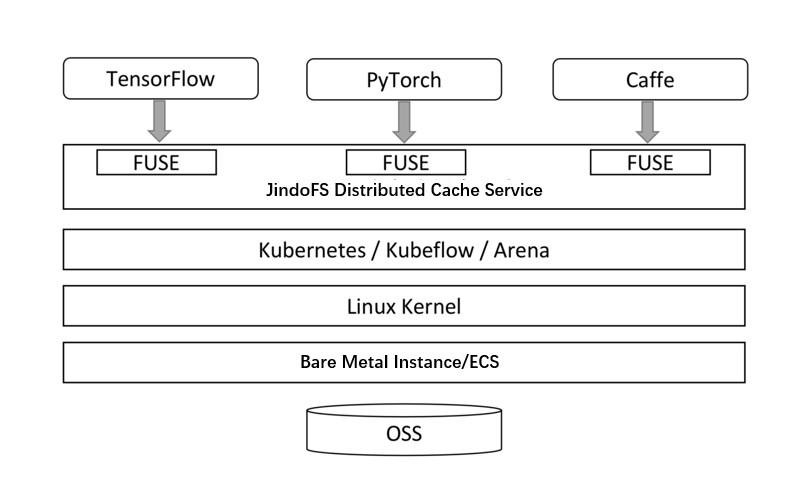

JindoFS provides a cache-based acceleration solution for this scenario to solve the problems discussed above.

JindoFS provides a distributed cache system on the computing side. It can effectively utilize the local storage resources (like disk or memory) in the computing cluster for caching hot data in OSS. Thus, it reduces repeated data pulling from OSS and network bandwidth consumption.
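The effect of such a cache on repeated reads can be illustrated with a toy read-through cache. This is only a sketch of the general idea on a single process, not JindoFS's implementation; the `ReadThroughCache` class, the LRU eviction policy, and the dictionary standing in for OSS are all assumptions made for the example.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Toy LRU read-through cache in front of a slow remote store."""

    def __init__(self, remote, capacity):
        self.remote = remote          # dict standing in for object storage
        self.capacity = capacity      # maximum number of cached objects
        self.cache = OrderedDict()
        self.remote_reads = 0

    def read(self, key):
        if key in self.cache:
            self.cache.move_to_end(key)     # mark as most recently used
            return self.cache[key]
        self.remote_reads += 1              # cache miss: pull from remote
        data = self.remote[key]
        self.cache[key] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used
        return data


remote = {f"shard-{i}": b"x" * 4 for i in range(3)}
cache = ReadThroughCache(remote, capacity=3)
for _epoch in range(5):
    for name in remote:
        cache.read(name)
print(cache.remote_reads)  # 3 remote pulls for 15 reads
```

When the cache can hold the whole working set, only the first pass touches the remote store; every later epoch is served locally.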

For deep learning, we recommend GPUs with stronger computing capabilities to achieve faster training. Keeping such GPUs fully utilized requires very high data throughput, so you can use JindoFS to build a memory-based distributed high-speed cache. As long as the cluster's total memory, excluding the memory required by the tasks themselves, can hold the entire dataset, the memory-based cache combined with the local high-speed network delivers the data throughput needed for faster computing.

In some machine learning scenarios, the training data is larger than the available memory. In others, the demands on CPU or GPU are relatively modest but the job still needs high-throughput data access, so computing is limited by network bandwidth. In these cases, you can use local SSDs as the cache medium for the JindoFS distributed cache service and improve training speed by caching hot data on local storage.
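The sizing rule from the two paragraphs above can be written down as a small helper. This is a sketch with hypothetical names and node sizes; `choose_cache_medium` is not a JindoFS API, and a real deployment would also leave headroom for the operating system and the cache service itself.

```python
def choose_cache_medium(dataset_gb, nodes, mem_gb_per_node,
                        task_mem_gb_per_node, ssd_gb_per_node):
    """Pick a cache medium from cluster capacity and dataset size."""
    # Memory left for caching after the training tasks take their share.
    usable_mem = nodes * (mem_gb_per_node - task_mem_gb_per_node)
    if usable_mem >= dataset_gb:
        return "memory"
    if nodes * ssd_gb_per_node >= dataset_gb:
        return "ssd"
    return "partial"  # only the hottest part of the data fits in the cache


# A 144 GB dataset on 4 hypothetical nodes.
print(choose_cache_medium(144, 4, 96, 32, 500))  # 4 * 64 GB of spare RAM
print(choose_cache_medium(144, 4, 48, 32, 500))  # 4 * 16 GB of spare RAM
```

The first call selects the memory cache because 256 GB of spare memory covers the dataset; the second falls back to SSD because only 64 GB of memory is free.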

JindoFS includes a FUSE client that provides a simple way to access data. The FUSE program maps a JindoFS cluster instance to the local file system, so reading accelerated data through JindoFS feels much like accessing files on a local disk.
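Because a FUSE mount exposes an ordinary file system, standard file APIs should work on it without an OSS SDK. The sketch below uses a temporary directory as a stand-in for a mount point such as /mnt/jfs/ (the path used later in this article); `summarize_dir` is a hypothetical helper, not part of JindoFS.

```python
import os
import tempfile


def summarize_dir(root):
    """Return (file_count, total_bytes) for regular files directly under root."""
    count, total = 0, 0
    with os.scandir(root) as entries:
        for entry in entries:
            if entry.is_file():
                count += 1
                total += entry.stat().st_size
    return count, total


# Stand-in for a FUSE mount point; on a cluster node this would be
# a directory under the JindoFS mount instead.
with tempfile.TemporaryDirectory() as root:
    for i in range(3):
        with open(os.path.join(root, f"part-{i}"), "wb") as f:
            f.write(b"\0" * 1024)
    print(summarize_dir(root))  # (3, 3072)
```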

Visit Alibaba Cloud -> Container Service -> Kubernetes to create a Kubernetes cluster.

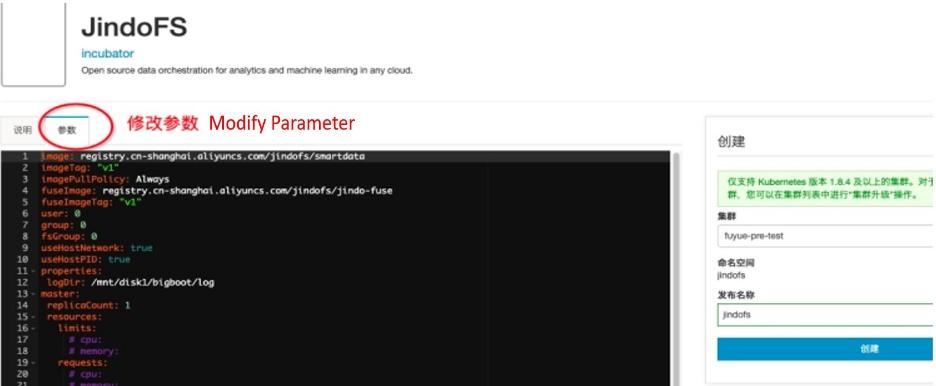

2.1) Click Container Service -> Kubernetes -> Application Directory and Open the "JindoFS" Installation and Configuration Page

2.2) Configure Parameters

For the complete configuration template, see Container Service for Kubernetes - Application Directory - JindoFS Installation Instructions

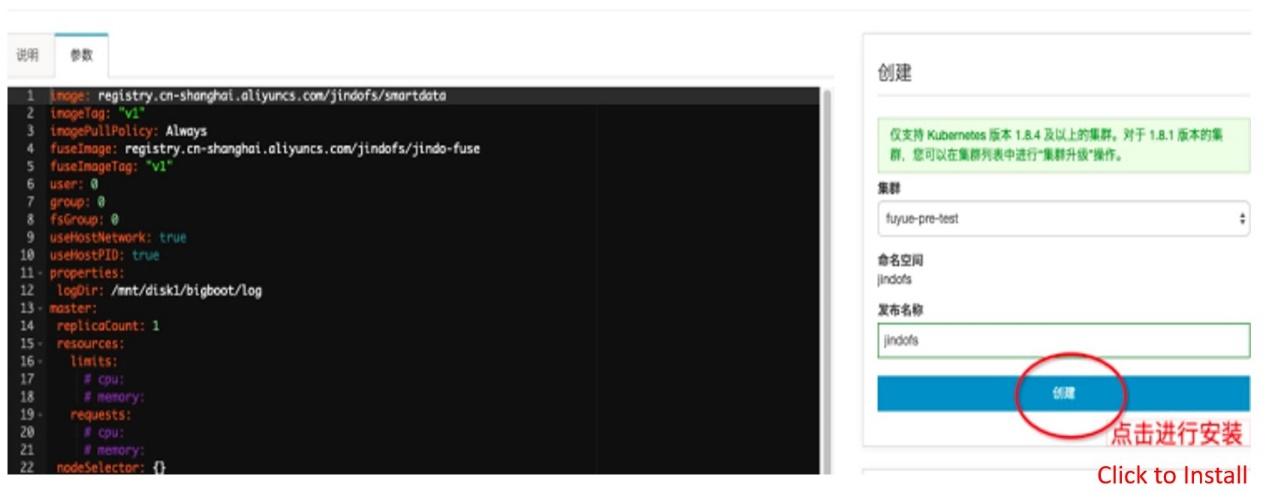

Configure the OSS bucket and AccessKey (AK). The example in the documentation uses a JFS scheme deployment, so you need to modify the following configuration items:

    jfs.namespaces: test
    jfs.namespaces.test.mode : cache
    jfs.namespaces.test.oss.uri : oss://xxx-sh-test.oss-cn-shanghai-internal.aliyuncs.com/xxx/k8s_c1
    jfs.namespaces.test.oss.access.key : xx
    jfs.namespaces.test.oss.access.secret : xx

These configuration items create a namespace named test that points to the xxx/k8s_c1 directory of the OSS bucket xxx-sh-test. Operating on the namespace through JindoFS is then equivalent to operating on that OSS directory.
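The relationship between a namespace and its OSS directory can be pictured as a simple path translation. The following is a conceptual sketch only: `to_oss_uri` is a hypothetical helper rather than a JindoFS function, and the /mnt/jfs mount point is the host path used later in this article.

```python
def to_oss_uri(jfs_path, namespaces, mount_point="/mnt/jfs"):
    """Translate a path under the FUSE mount into the OSS URI that backs it."""
    prefix = mount_point.rstrip("/") + "/"
    if not jfs_path.startswith(prefix):
        raise ValueError(f"{jfs_path} is not under {mount_point}")
    # The first path component after the mount point is the namespace.
    namespace, _, rest = jfs_path[len(prefix):].partition("/")
    base = namespaces[namespace].rstrip("/")
    return f"{base}/{rest}" if rest else base


# Mirrors the jfs.namespaces.test.oss.uri configuration item.
namespaces = {
    "test": "oss://xxx-sh-test.oss-cn-shanghai-internal.aliyuncs.com/xxx/k8s_c1",
}
print(to_oss_uri("/mnt/jfs/test/entrypoint.sh", namespaces))
```

With this mapping, a file seen at /mnt/jfs/test/entrypoint.sh corresponds to the object entrypoint.sh under xxx/k8s_c1 in the bucket.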

2.3) Install Services

2.3.1) Verify Installation

    # kubectl get pods
    NAME                   READY   STATUS    RESTARTS   AGE
    jindofs-fuse-267vq     1/1     Running   0          143m
    jindofs-fuse-8qwdv     1/1     Running   0          143m
    jindofs-fuse-v6q7r     1/1     Running   0          143m
    jindofs-master-0       1/1     Running   0          143m
    jindofs-worker-mncqd   1/1     Running   0          143m
    jindofs-worker-pk7j4   1/1     Running   0          143m
    jindofs-worker-r2k99   1/1     Running   0          143m

2.3.2) Visiting /mnt/jfs/ Directory in Host Machine Is Equivalent to Accessing Files in JindoFS

    ls /mnt/jfs/test/
    15885689452274647042-0 17820745254765068290-0 entrypoint.sh

2.3.3) Install Kubeflow (Arena)

Kubeflow is an open-source, Kubernetes-based, cloud-native AI platform for developing, orchestrating, deploying, and running scalable and portable machine learning workloads. Kubeflow supports two types of distributed training in TensorFlow: Parameter Server mode and AllReduce mode. With Arena, a tool developed by the Alibaba Cloud container service team, users can submit distributed training jobs of both types.

Refer to this guide to install Kubeflow.

2.3.4) Start TF Job

    arena submit mpi \
      --name job-jindofs \
      --gpus=8 \
      --workers=4 \
      --working-dir=/perseus-demo/tensorflow-demo/ \
      --data-dir /mnt/jfs/test:/data/imagenet \
      -e DATA_DIR=/data/imagenet -e num_batch=1000 \
      -e datasets_num_private_threads=8 \
      --image=registry.cn-hangzhou.aliyuncs.com/tensorflow-samples/perseus-benchmark-dawnbench-v2:centos7-cuda10.0-1.2.2-1.14-py36 \
      ./launch-example.sh 4 8

This article submits a ResNet-50 training job that uses a 144 GB ImageNet dataset. The data is stored as TFRecord files of about 130 MB each. Both the model job and the ImageNet dataset are available on the web. In the command above, /mnt/jfs/ is the directory mounted on the host machine through JindoFS FUSE, and test is the namespace that corresponds to the OSS bucket. The --data-dir option maps /mnt/jfs/test to the /data/imagenet directory in the container so that the job can read the data in OSS. Data that has been read is automatically cached in the JindoFS cluster.
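For a sense of scale, the figures above imply roughly 1,100 TFRecord files spread over 32 GPUs. The sketch below works through that arithmetic under several assumptions that the article does not state: decimal units (1 GB = 1,000 MB), --gpus counted per worker, one training process per GPU, and round-robin assignment of files to processes. The real job's input pipeline may distribute data differently.

```python
import math


def shards_per_rank(num_shards, workers, gpus_per_worker):
    """Round-robin shard counts for each training process (one per GPU)."""
    ranks = workers * gpus_per_worker
    counts = [0] * ranks
    for shard in range(num_shards):
        counts[shard % ranks] += 1
    return counts


# 144 GB at about 130 MB per file; decimal units assumed.
num_shards = math.ceil(144 * 1000 / 130)
counts = shards_per_rank(num_shards, workers=4, gpus_per_worker=8)
print(num_shards, len(counts), min(counts), max(counts))  # 1108 32 34 35
```

Each process would touch only 34 or 35 files per pass, which is why a shared cache across the cluster matters more than any single node's local copy.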

With the JindoFS cache-based acceleration service, most hot data is cached in local memory or on local disk after it is first read, which can significantly speed up deep learning training. In most training scenarios, you can also preload data into the cache to speed up the next training cycle.
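Since data is cached once it has been read, one simple way to warm the cache is to read every file through the FUSE mount before training starts. The following is a minimal sketch that relies only on that read-triggered caching; `warm_cache` is a hypothetical helper, and JindoFS may offer its own preload mechanism that is preferable in practice.

```python
import os
import tempfile


def warm_cache(root, chunk_size=4 * 1024 * 1024):
    """Read every file under root once and return the number of bytes read."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            with open(os.path.join(dirpath, name), "rb") as f:
                # The data is discarded; the read itself is the point.
                while chunk := f.read(chunk_size):
                    total += len(chunk)
    return total


# Demo on a temporary directory; on a cluster node, root would be the
# FUSE-mounted dataset directory instead.
with tempfile.TemporaryDirectory() as root:
    with open(os.path.join(root, "shard-0"), "wb") as f:
        f.write(b"\0" * 10_000)
    print(warm_cache(root, chunk_size=4096))  # 10000
```

Reading in fixed-size chunks keeps memory use flat regardless of file size, which matters for files of about 130 MB each.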
