By Yili

This article summarizes a presentation given at the Yunqi Community Director's Course and shares practical tips on, and development trends in, cloud-native technology.

The development of container technologies was the prelude to cloud-native computing:

First came the containerization of applications. Many PaaS platforms, such as Heroku and Cloud Foundry, are built on container technologies, which significantly simplifies the deployment and maintenance of web applications. Docker introduced a container packaging specification, the Docker image, and created Docker Hub for global application distribution and collaboration. This greatly helped popularize container technologies and brought containerized applications to more business scenarios. Community efforts such as the Open Container Initiative and containerd further advanced the standardization of container technologies.

Then came the orchestration and scheduling of containers. Developers want to use container technologies to combine and efficiently schedule underlying resources, improving resource utilization, application SLAs, and the level of automation. Kubernetes stood out among competing products thanks to its excellent openness, high scalability, and active community. Google's donation of Kubernetes to the CNCF accelerated its adoption.

With the establishment of container and container orchestration standards, Kubernetes and Docker hide the differences between underlying infrastructures and provide excellent portability across multiple clouds and hybrid clouds. The community has begun to build upper-layer business abstractions on top of these features. Let's look at the service governance layer first. The year 2018 was the beginning of Service Mesh. Istio is a service governance platform launched by Google, IBM, and Lyft. It acts like a network protocol stack between microservices in the cloud-native era: tasks such as dynamic routing of service invocations, rate limiting, service degradation, end-to-end tracing, and secure transmission can be handled by Istio instead of in the application layer. On this foundation, cloud-native frameworks targeting specific fields are also emerging quickly, such as Kubeflow for machine learning and Knative for serverless workloads. These layered architectures allow developers to focus on their own business logic rather than on the complexity of the underlying implementations.

We can see that the prototype of a cloud-native operating system is beginning to take shape. This is a good era for developers because cloud infrastructure and cloud-native computing technologies have significantly increased the speed of business innovation.

To solve the challenges in large-scale Internet applications, we build applications in a distributed manner. When building distributed systems, we often make assumptions that long experience of running applications has proven to be inaccurate or wrong. Peter Deutsch summarized these common misconceptions about distributed systems, often referred to as the fallacies of distributed computing. The main misconceptions are listed as follows:

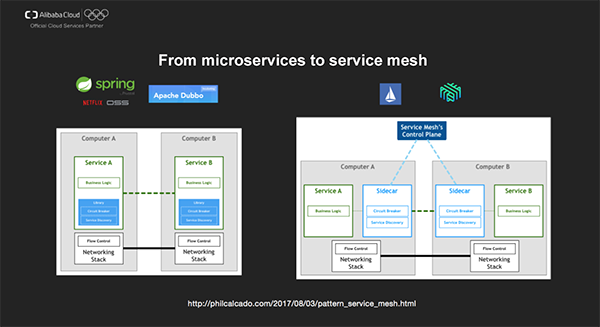

To solve the challenges in large-scale Internet applications, we have been discussing how to transform application architectures from monolithic architectures to distributed microservices architectures. Spring Cloud, which builds on the Netflix OSS projects, and Apache Dubbo, open-sourced by Alibaba Group, are two excellent microservices frameworks.

Current microservices architectures are usually implemented inside applications by using code libraries, which provide features such as service discovery, circuit breaking, and rate limiting. However, using code libraries may cause version conflicts. In addition, whenever these libraries change, the entire application must be rebuilt and redeployed, even if the application logic has not changed at all.
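To make the library-based approach concrete, the following is a minimal sketch of the kind of circuit-breaking logic that a service governance library embeds in every application. The class name, thresholds, and injectable clock are illustrative assumptions; this is not the API of Spring Cloud, Dubbo, or any other framework.

```python
import time


class CircuitBreaker:
    """Hypothetical in-process circuit breaker (illustration only)."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # seconds to wait before a trial call
        self.clock = clock                # injectable so tests can control time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call rejected")
            # Cool-down elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # A successful call closes the circuit and resets the counter.
        self.failures = 0
        self.opened_at = None
        return result
```

Because logic like this is compiled into the application, changing a threshold or fixing a bug in it means rebuilding and redeploying every service that uses the library.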

On the other hand, microservices in enterprises and complex systems are usually implemented in different programming languages and frameworks. Service governance libraries for such heterogeneous environments differ considerably, so they cannot offer a unified solution to shared problems.

To solve the preceding issues, the community has begun to adopt and popularize Service Mesh technology.

Istio and Linkerd are two representative implementations of Service Mesh technology. They intercept all network communication between microservices by using sidecar proxies and implement a variety of inter-service features in a unified manner, such as load balancing, access control, and rate limiting. Applications don't need to be aware of how the underlying services are accessed. Sidecars and applications can be updated separately, which decouples application logic from service governance.

We can understand the service connection infrastructure layer between microservices by analogy with the TCP/IP protocol stack. The TCP/IP protocol stack provides basic communication services for all the applications in an operating system, yet it is not tightly coupled with those applications. Applications only need to use the communication features that TCP/IP provides, without caring about how TCP/IP is implemented, for example how IP packets are routed or how TCP establishes connections and performs congestion control. The goal of Service Mesh is to provide a basic service communication protocol stack that is likewise independent of application implementations.

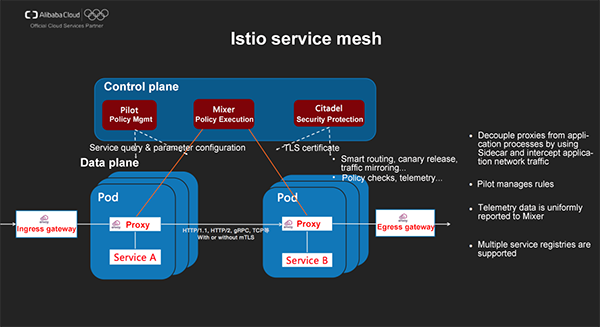

The preceding picture shows the architecture of Istio, which is logically divided into the data plane and the control plane.

In the data plane, smart proxies deployed as sidecars intercept and process inter-service communication.

The control plane includes the following components:

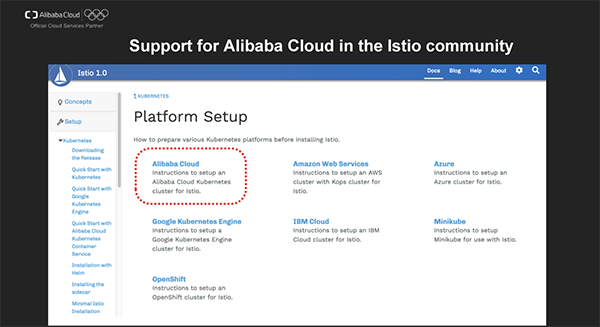

The year 2018 was the very beginning of Service Mesh, and Istio 1.0, the first version considered ready for production, was released in early August of that year. We have contributed a guide to the Istio community on how to use Istio on Alibaba Cloud to help you get started quickly.

In the Alibaba Cloud Kubernetes (ACK) console, one-click deployment and out-of-the-box features are available for ease of use. You can enable or disable features such as log collection and metrics display simply by selecting or clearing the corresponding options.

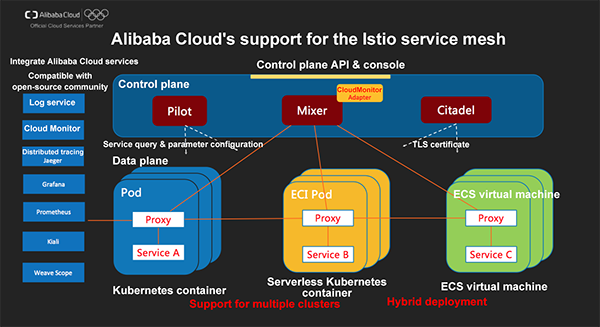

The support for Istio in ACK helps implement the deep integration of Istio with Alibaba Cloud Log Service and CloudMonitor, improving the availability of Istio in the production environment.

The Istio service mesh enables the management of access traffic among pods in Kubernetes clusters as well as the integration with ECI/Serverless Add-on, implementing the network communication between classic k8s nodes and Serverless K8s pods.

With the Mesh Expansion hybrid deployment feature, you can also uniformly manage traffic among application workloads on existing ECS instances and in containers. This is a very important feature for gradually migrating applications from existing virtual machines to container platforms.

Istio versions later than v1.0 provide an important feature that allows you to create a service mesh across k8s clusters. This means that a unified service governance layer can be created across different clusters and that requirements in multiple scenarios such as hybrid cloud and disaster recovery can be met.

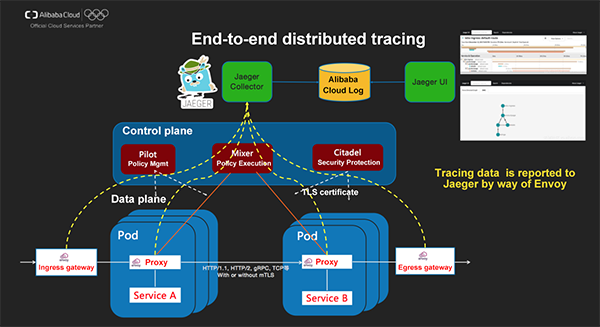

End-to-end observability is a very important feature of Istio. However, directly using the open-source Jaeger solution poses many challenges in a production environment. In this setup, Jaeger's underlying storage depends on Cassandra clusters, which require complicated maintenance, tuning, and capacity planning. Alibaba Cloud Container Service provides a solution that supports end-to-end tracing analysis with Jaeger based on Log Service (SLS), so no maintenance is required in the data persistence layer.

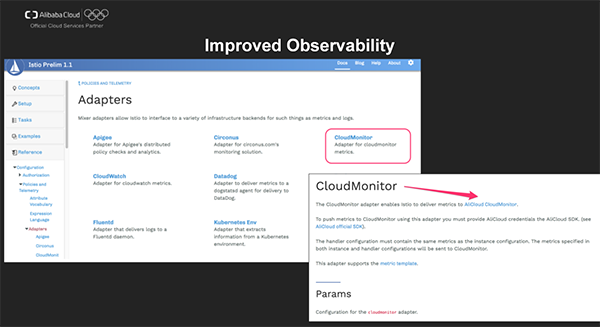

We also provide an adapter for Alibaba Cloud CMS among Istio's official Mixer Adapters. With some simple adapter configuration, you can easily send runtime metrics (for example, the number of requests) to the monitoring backend for unified monitoring and alerting.

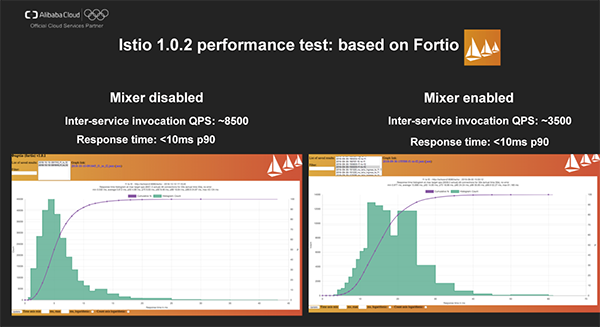

The modular architecture of Istio enables easy extension, but this architecture has some impact on the performance. We used the performance testing tool Fortio to conduct a performance test targeting Istio 1.0.2 in Alibaba Cloud Container Service.

We can see that when Mixer is not enabled, the inter-service invocation QPS of Istio is around 8500, and the response time is within 10 ms for 90% of requests. When Mixer is enabled, QPS drops to around 3500, while the response latency is unchanged.
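As a quick worked calculation, the relative throughput cost of enabling Mixer can be derived from the two approximate QPS figures quoted above. The helper name is an illustrative assumption, and the inputs are the rounded numbers from the text rather than raw benchmark data.

```python
def relative_drop(before, after):
    """Fractional reduction when going from `before` to `after`."""
    return (before - after) / before


qps_without_mixer = 8500  # approximate figure quoted in the text
qps_with_mixer = 3500     # approximate figure quoted in the text

drop = relative_drop(qps_without_mixer, qps_with_mixer)
print(f"Throughput reduction with Mixer enabled: {drop:.0%}")  # prints 59%
```

In other words, with these figures Mixer costs a little under 60% of the achievable throughput in this test.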

The Istio community is continuously optimizing Istio performance. For example, the execution of some governance policies has been moved from Mixer to Envoy in the data plane. If your applications have high performance requirements, we recommend that you use the traffic management feature of Pilot first and temporarily disable Mixer. Istio 1.1 is scheduled for release at the end of 2018, and we will perform a thorough performance test on it.

A major challenge in safe production is how to release applications without affecting online business. However thorough our tests are, we can't always find every potential problem. We must release versions in a safe and controllable way, limit the impact of failures to an acceptable range, and support quick rollback.

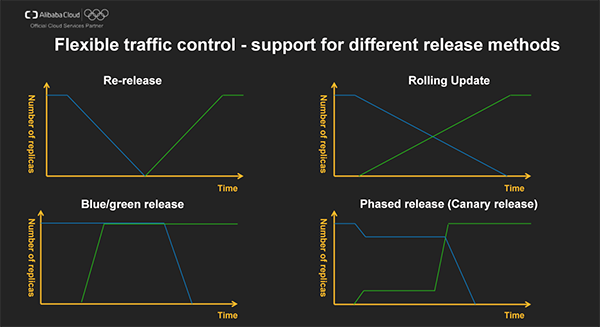

The simplest method to release a newer application is republishing, that is, stopping the older version first and then starting the newer one. However, this method causes business interruption, which is usually unacceptable in the production environment.

The built-in Deployment feature in Kubernetes supports rolling updates. This is a progressive update process: it gradually removes older instances from the load balancing service backend and stops them, while starting newer application instances and adding them to the backend. This method releases applications without business interruption. However, because the release process as a whole is hard to control, rolling back is very costly if problems caused by insufficient testing surface later.
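The progressive replacement described above can be sketched as a small simulation. This is a simplified model with hypothetical names, not how the Kubernetes Deployment controller is implemented; real Deployments are governed by settings such as `maxSurge` and `maxUnavailable`.

```python
def rolling_update(old_count, batch_size=1):
    """Yield (old, new) instance counts after each step of a rolling update.

    Simplified model: each step starts up to `batch_size` new instances and
    then retires the same number of old ones, so the number of serving
    instances never drops below the original count.
    """
    old, new = old_count, 0
    while old > 0:
        step = min(batch_size, old)
        new += step  # start new instances and add them to the backend
        old -= step  # remove old instances from the backend and stop them
        yield old, new


for old, new in rolling_update(old_count=4, batch_size=2):
    print(f"old={old} new={new}")
```

Note that once the loop has finished, no old instance is left to fall back to, which is why a late rollback means running a second full update in the opposite direction.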

Therefore, most Internet companies adopt the phased release method, which is more controllable.

Let's first look at the blue/green release. In a blue/green release, the newer and older versions of an application coexist, and the newer version is given the same resources as the older one. Traffic is switched to the newer version by routing. This release method provides good stability and only requires route switching for rollback. However, it requires twice the system resources and is therefore not suitable for releasing large-scale applications.

Another release method is the canary release, in which a newer version of an application is rolled out gradually while the older version keeps running. First, we route a small portion of traffic (for example, 10%) to the newer version. If the newer version functions correctly, we gradually shift more traffic to it and finally take the older version offline. If problems occur, we only need to roll back the part of the application that has been switched.
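The gradual traffic shift of a canary release can be sketched with weighted random selection. The version names `v1` and `v2`, the stage percentages, and the function names are illustrative assumptions; a real mesh applies such weights in the proxy rather than in application code.

```python
import random


def pick_version(weights, rng=random):
    """Pick a version name with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def canary_stages(steps=(10, 50, 100)):
    """Yield weight tables for a gradual shift of traffic from v1 to v2."""
    for new_share in steps:
        yield {"v1": 100 - new_share, "v2": new_share}


rng = random.Random(0)  # seeded so that demo runs are repeatable
for weights in canary_stages():
    sample = [pick_version(weights, rng) for _ in range(10_000)]
    print(weights, "observed v2 share:", sample.count("v2") / len(sample))
```

Rolling back at any stage only requires restoring the previous weight table, since the older version is still running.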

We can see that application releasing and routing management are two orthogonal tasks. We can implement controllable releases with k8s, and ACK supports both the blue/green release and the canary release. Istio provides a route control layer that is independent of the release method. We can flexibly switch application versions according to attributes such as the request type, the client device (mobile or PC), and the region. Combining Istio and ACK enables safe and controllable releases.
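Routing by request attributes, independent of how the versions were deployed, can be sketched as a first-match rule table. The attribute keys, region name, and version labels below are hypothetical and do not reflect Istio's configuration format.

```python
def route(request, rules, default="v1"):
    """Return the version of the first rule whose conditions all match."""
    for conditions, version in rules:
        if all(request.get(key) == value for key, value in conditions.items()):
            return version
    return default


# Hypothetical rules: mobile users in one region try the new version first.
rules = [
    ({"device": "mobile", "region": "cn-beijing"}, "v2"),
    ({"device": "pc"}, "v1"),
]

print(route({"device": "mobile", "region": "cn-beijing"}, rules))
print(route({"device": "mobile", "region": "cn-zhangjiakou"}, rules))
```

The rule table can change without touching the deployed instances, which is what makes routing and releasing orthogonal.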

A distributed system is very complex. In a distributed system, stability risks in any of the infrastructure, application logic, and maintenance aspects can lead to business system failures.

We cannot simply "trust in Buddha that there will be no downtime" when approaching stability challenges. Instead, we should use systematic methods to proactively prevent downtime.

Netflix put forward the Chaos Engineering concept and released a series of tools as early as 2012. Chaos Monkey randomly kills instances in production and testing environments to test system durability, exercise standby services, and ensure simpler, faster, and more automatic recovery. Latency Monkey deliberately increases the latency of requests or responses on a machine to test how the system reacts. Chaos Gorilla simulates the outage of a data center to test business disaster tolerance and resiliency. Chaos engineering lets you conduct experiments on distributed systems in production and testing environments to validate and improve system durability and to continuously optimize processes.

Two indispensable factors lie behind the success of Alibaba Group's Double 11 event each year: systematic stability experiments and end-to-end stress tests on the whole e-commerce platform. To support Double 11, the whole platform must be simulated comprehensively and thoroughly to ensure that performance, capacity, and stability meet the requirements in every respect. The following considerations are very important:

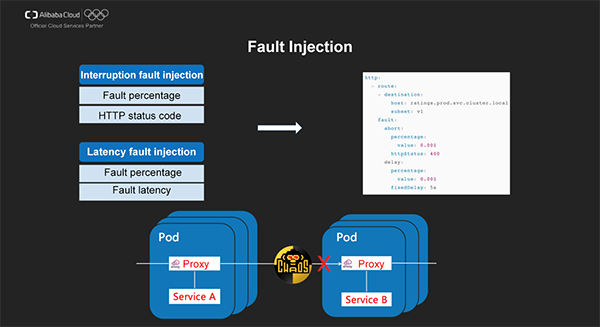

Fault injection is a common technique in chaos engineering. Istio supports two fault injection types. The first type is interruption (abort) fault injection, which terminates requests and returns an error code to downstream services to simulate errors in upstream services. Istio also allows you to specify the proportion of requests to interrupt. The second type is latency fault injection, which introduces a delay before a request is forwarded in order to simulate faults such as network problems and upstream service overload.

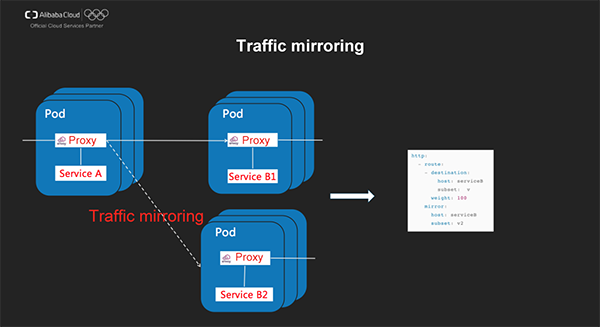
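The two fault types described above can be sketched as a wrapper around a request handler. This is a plain Python simulation with hypothetical parameter names, not Istio's configuration or implementation; it only illustrates the behavior that abort and delay faults produce.

```python
import random
import time


def inject_faults(handler, abort_percent=0.0, abort_status=503,
                  delay_percent=0.0, delay_seconds=0.0,
                  rng=random, sleep=time.sleep):
    """Wrap `handler` so that a share of calls is delayed or aborted."""
    def wrapped(request):
        # Delay fault: wait before forwarding, as if the network or the
        # upstream service were slow.
        if rng.random() * 100 < delay_percent:
            sleep(delay_seconds)
        # Abort fault: do not forward; answer with an error status instead.
        if rng.random() * 100 < abort_percent:
            return abort_status, "fault injected"
        return handler(request)
    return wrapped


def upstream(request):
    """Stand-in for a healthy upstream service."""
    return 200, f"hello {request}"


flaky = inject_faults(upstream, abort_percent=50, rng=random.Random(1))
statuses = [flaky("x")[0] for _ in range(1000)]
print("aborted share:", statuses.count(503) / len(statuses))
```

Callers of `flaky` see the same interface as before, so their timeout, retry, and fallback logic can be exercised without changing the upstream service.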

Traffic mirroring sends replicas of real-time traffic to a mirror service for validation and testing. Mirroring is performed outside the critical request path of the primary service. For example, when an HTTP request is forwarded to its intended target, the traffic is mirrored and also sent to other targets at the same time. This allows us to record real data and traffic models in a production system, which can then be used to generate simulated test data and test plans and to perform end-to-end stress testing more realistically.

Note: For performance reasons, the sidecar or gateway does not wait for responses from the mirroring targets before returning the response from the intended target.
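The fire-and-forget behavior in the note above can be sketched as follows. The function names are hypothetical and a background thread stands in for the proxy's asynchronous send; this is an illustration of the pattern, not of how Envoy implements mirroring.

```python
import threading


def mirrored(primary, mirror):
    """Return a handler that answers from `primary` and copies each request
    to `mirror` on a background thread (fire and forget)."""
    def handler(request):
        def send_copy():
            try:
                mirror(request)
            except Exception:
                pass  # a failing mirror must never affect the caller

        threading.Thread(target=send_copy, daemon=True).start()
        # Return the primary response without waiting for the mirror.
        return primary(request)
    return handler


handle = mirrored(primary=lambda req: f"200 OK for {req}",
                  mirror=lambda req: None)
print(handle("GET /points"))
```

Because the mirror's response and errors are discarded, a slow or broken mirror target cannot add latency or failures to the primary request path.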

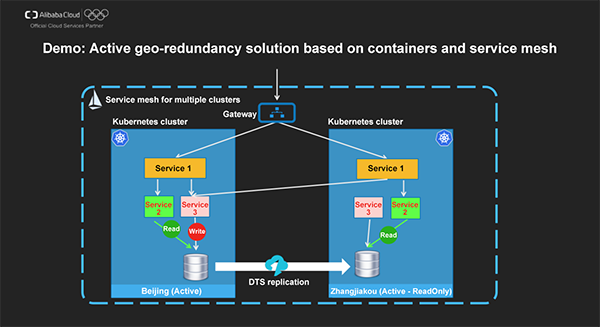

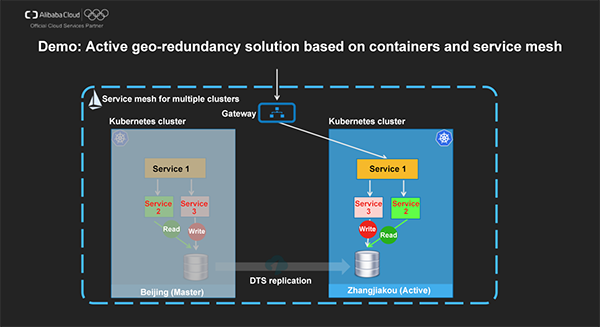

Now let's look at an active geo-redundancy example solution that is based on containers and service mesh:

Consider a microservices application for redeeming reward points, which is deployed in two k8s clusters in Beijing and Zhangjiakou respectively. This application implements network interconnection with Express Connect and database replication with DTS. The services in the whole system are managed by using a highly available Istio service mesh across clusters.

In this example, we can use Alibaba Cloud Container Service to easily and uniformly deploy and manage applications in k8s clusters in the two regions. Meanwhile, we can use the Istio service mesh to implement the service management plane. Initially, the clusters in both Beijing and Zhangjiakou provide public-facing services. When the cluster in Beijing cannot be accessed, we switch all service invocations to the cluster in Zhangjiakou to ensure business continuity.
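The failover decision in this example can be sketched as a simple selection over cluster health. The cluster keys and the health map are illustrative assumptions; in the solution described above, the switch is performed by the service mesh rather than by application code.

```python
def serving_clusters(health, clusters=("beijing", "zhangjiakou")):
    """Return the clusters that should receive traffic, in preference order."""
    healthy = [name for name in clusters if health.get(name, False)]
    if not healthy:
        raise RuntimeError("no healthy cluster available")
    return healthy


# Normal operation: both regions serve public traffic.
print(serving_clusters({"beijing": True, "zhangjiakou": True}))
# Beijing is unreachable: all invocations go to Zhangjiakou.
print(serving_clusters({"beijing": False, "zhangjiakou": True}))
```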

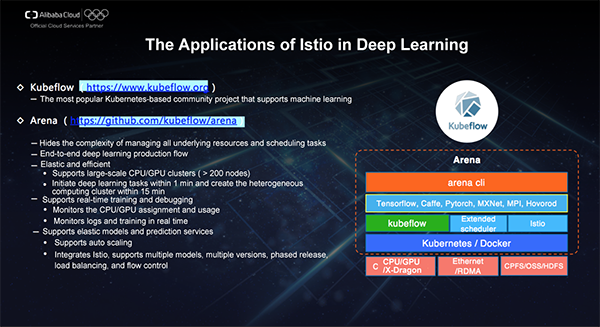

Kubeflow is a Kubernetes-based community project initially launched by Google to support machine learning. Kubeflow supports different machine learning frameworks in a cloud-native manner and manages the entire lifecycle, from data preparation and model training to model prediction.

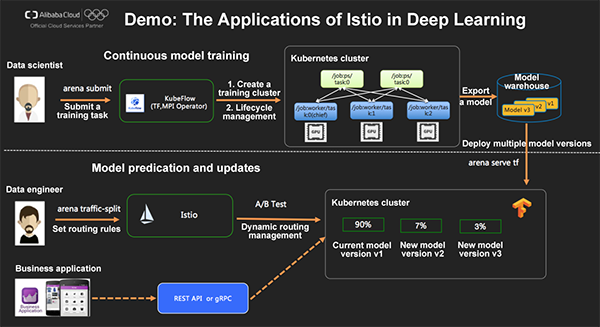

Arena is an open-source project contributed by the Alibaba Cloud Container Service team to the Kubeflow community. This tool hides the underlying details and chains deep learning pipelines together. Here we mainly explain how to use Service Mesh to strengthen online inference services and support multiple models and versions, phased release, fine-grained traffic control, and elasticity.

Continuously optimizing deep learning models is a great challenge in AI production applications. For example, an e-commerce platform needs to train models continuously based on user behavior records so that it can better predict user preferences and consumption hotspots. Meanwhile, before a new model goes online, a safe and controllable process is required to validate the improvements brought by that new model.

With the Arena commands, we can easily and continuously train improved models, release models, and switch traffic between models. Data scientists use "arena submit" to submit distributed model training jobs, which can run on GPUs or CPUs. When a new model is trained, the "arena serve" command deploys it to the production environment, where the old model is also retained. At this stage, the new model does not yet serve public traffic. Data engineers can then use the "traffic-split" command in Arena to implement traffic control, model version updates, and rollback.
