Spark is a high-performance, easy-to-use engine for large-scale data analytics. It supports a wide range of applications, including complex in-memory computing, which makes it well suited to building large-scale, low-latency data analysis applications. DataWorks provides Serverless Spark Batch nodes, which let you develop Spark tasks in DataWorks and schedule them to run periodically on EMR Serverless Spark clusters.

Applicability

Computing resource limitations: You can only attach EMR Serverless Spark computing resources. Ensure that network connectivity is available between the resource group and the computing resources.

Resource group: Only Serverless resource groups can be used to run this type of task.

(Optional) If you are a Resource Access Management (RAM) user, ensure that you have been added to the workspace for task development and have been assigned the Developer or Workspace Administrator role. The Workspace Administrator role has extensive permissions. Grant this role with caution. For more information about adding members, see Add members to a workspace.

If you use an Alibaba Cloud account, you can skip this step.

Create a node

For more information, see Create a node.

Develop a node

Before you develop a Serverless Spark Batch task, you must first develop the Spark task code in EMR and compile it into a Java Archive (JAR) package. For more information about Spark development, see Spark Tutorials.

Choose an option based on your scenario:

Option 1: Upload and reference an EMR JAR resource

In DataWorks, you can upload a resource from your local machine to DataStudio and then reference it in a node. After you compile the Spark task code, obtain the compiled JAR package and choose a storage method based on its size. If the JAR package is smaller than 500 MB, you can upload it from your local machine as a DataWorks EMR JAR resource.
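The size rule above can be expressed as a small helper. This is a minimal Python sketch, not a DataWorks API: the function names and returned labels are hypothetical, it assumes that 500 MB means 500 * 1024 * 1024 bytes, and it assumes that JAR packages at or above the limit are stored in OSS and referenced directly, as described in Option 2.

```python
from pathlib import Path

# Threshold from the text. Assumption: 1 MB = 1024 * 1024 bytes.
UPLOAD_LIMIT_BYTES = 500 * 1024 * 1024


def choose_storage(jar_size_bytes: int) -> str:
    """Suggest where to store a compiled JAR based on its size (hypothetical helper)."""
    if jar_size_bytes < 0:
        raise ValueError("JAR size must be non-negative")
    if jar_size_bytes < UPLOAD_LIMIT_BYTES:
        # Option 1: upload from the local machine as a DataWorks EMR JAR resource.
        return "emr_jar_resource"
    # Assumption: larger JARs are uploaded to OSS and referenced directly (Option 2).
    return "oss"


def choose_storage_for_file(jar_path: str) -> str:
    """Apply choose_storage to a JAR file on the local disk."""
    return choose_storage(Path(jar_path).stat().st_size)
```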

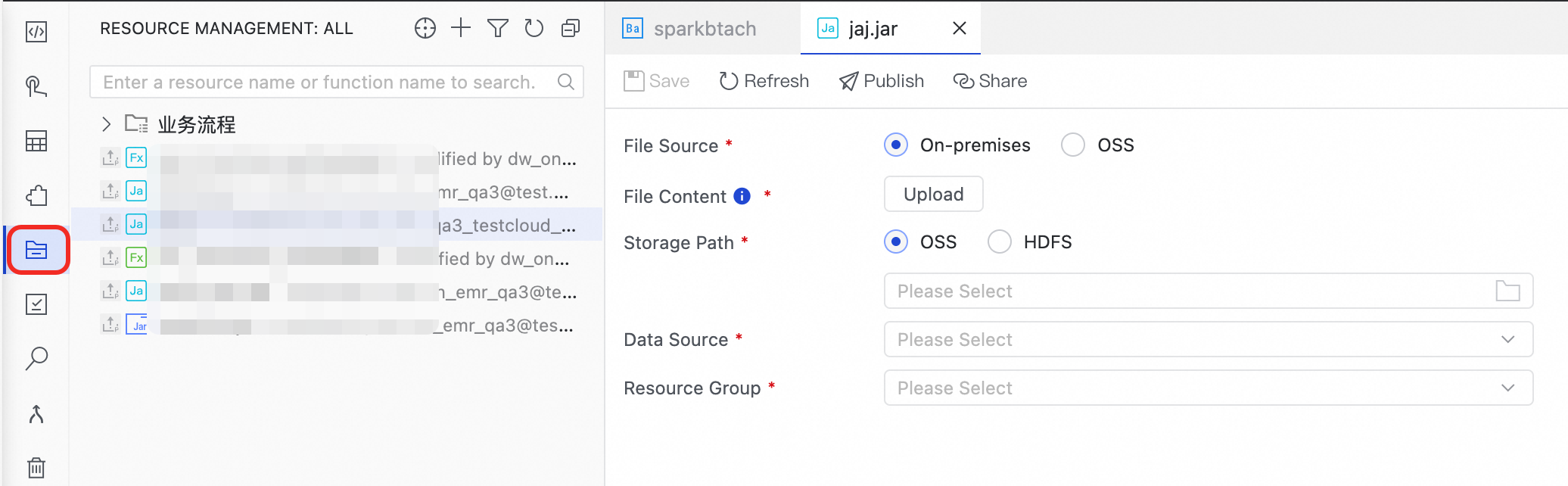

Create an EMR JAR resource.

In the navigation pane, click the Resource Management icon to open the Resource Management page.

On the Resource Management page, click the icon, select , and enter the name spark-examples_2.11-2.4.0.jar.

Click Upload to upload spark-examples_2.11-2.4.0.jar.

Select a Storage Path, Data Source, and Resource Group.

Important: For Data Source, select the bound Serverless Spark cluster.

Click the Save button.

Reference the EMR JAR resource.

Open the code editor for the created Serverless Spark Batch node.

In the navigation pane, expand Resource Management. Find the resource that you want to reference, right-click the resource, and then select Reference Resource.

After you select the resource, a success message is displayed in the code editor of the Serverless Spark Batch node. This indicates that the resource is referenced.

When the resource is referenced, the following reference statement is automatically added to the code editor:

##@resource_reference{"spark-examples_2.11-2.4.0.jar"}

In this statement, spark-examples_2.11-2.4.0.jar is the name of the EMR JAR resource that you uploaded.
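If you need to check which resources a node's code references, for example before you publish it, the reference statements can be extracted with a regular expression. The following Python sketch is a hypothetical helper, not part of DataWorks; its pattern is inferred from the single example in this topic and may not cover other forms of the syntax.

```python
import re

# Pattern inferred from the example in this topic:
#   ##@resource_reference{"spark-examples_2.11-2.4.0.jar"}
REFERENCE_PATTERN = re.compile(r'##@resource_reference\{"([^"]+)"\}')


def referenced_resources(node_code: str) -> list[str]:
    """Return the names of all resources referenced in the node code, in order."""
    return REFERENCE_PATTERN.findall(node_code)


example = (
    '##@resource_reference{"spark-examples_2.11-2.4.0.jar"}\n'
    "spark-submit --class org.apache.spark.examples.SparkPi "
    "spark-examples_2.11-2.4.0.jar 100"
)
print(referenced_resources(example))  # ['spark-examples_2.11-2.4.0.jar']
```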

Edit the code of the Serverless Spark Batch node to add a spark-submit command, as in the following example.

Important: The code editor for Serverless Spark Batch nodes does not support comment statements. Write the task code as shown in the following example and do not add comments; otherwise, an error occurs when you run the node.

For EMR Serverless Spark, you do not need to specify the deploy-mode parameter in the spark-submit command, because only cluster mode is supported.

##@resource_reference{"spark-examples_2.11-2.4.0.jar"}
spark-submit --class org.apache.spark.examples.SparkPi spark-examples_2.11-2.4.0.jar 100

Parameter | Description |

--class | The main class of the task in the compiled JAR package. In this example, the main class is org.apache.spark.examples.SparkPi. |

100 | The argument passed to the main class. For SparkPi, it is the number of partitions (slices) used to estimate the value of pi. |

Note: For more information about the parameters, see Submit a task using spark-submit.
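In this topic, node code consists of an optional resource reference statement followed by a spark-submit command. The following hypothetical Python helper composes that text. It writes no comments, because the node editor rejects them, and it omits --deploy-mode, because only cluster mode is supported. Quoting arguments with shlex.join assumes that the editor interprets arguments with POSIX shell rules, which this topic does not state.

```python
import shlex
from typing import Iterable


def build_node_code(
    jar: str,
    main_class: str,
    app_args: Iterable[object] = (),
    reference_resource: bool = True,
) -> str:
    """Compose Serverless Spark Batch node code (hypothetical helper).

    - No comment lines are emitted: the node editor rejects comment statements.
    - --deploy-mode is omitted: EMR Serverless Spark supports only cluster mode.
    """
    command = shlex.join(
        ["spark-submit", "--class", main_class, jar, *(str(a) for a in app_args)]
    )
    if not reference_resource:
        # The JAR is addressed directly, for example by an oss:// path.
        return command
    # Prepend the reference statement for an uploaded EMR JAR resource.
    return f'##@resource_reference{{"{jar}"}}\n{command}'


print(build_node_code("spark-examples_2.11-2.4.0.jar", "org.apache.spark.examples.SparkPi", [100]))
```

To reference a JAR stored in OSS instead, pass its oss:// path as jar and set reference_resource=False; no reference statement is added in that case.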

Option 2: Directly reference an OSS resource

You can directly reference an OSS resource in the node. When you run the EMR node, DataWorks automatically downloads the OSS resource locally. This method is often used when an EMR task has JAR dependencies or depends on scripts.

Develop a JAR resource: This topic uses SparkWorkOSS-1.0-SNAPSHOT-jar-with-dependencies.jar as an example.

Upload the JAR resource.

Log on to the OSS console. In the navigation pane, click Buckets.

Click the name of the destination bucket to open the file management page.

Click Create Directory to create a folder to store the JAR resource.

Go to the folder and upload the SparkWorkOSS-1.0-SNAPSHOT-jar-with-dependencies.jar file to it.

Reference the JAR resource.

On the editor page for the created Serverless Spark Batch node, edit the code to reference the JAR resource.

Important: In the following code, the OSS bucket name is mybucket and the folder is emr. Replace them with your actual bucket name and folder path.

spark-submit --class com.aliyun.emr.example.spark.SparkMaxComputeDemo oss://mybucket/emr/SparkWorkOSS-1.0-SNAPSHOT-jar-with-dependencies.jar

Parameter description:

Parameter | Description |

--class | The fully qualified name of the main class to run. |

OSS file path | The format is oss://{bucket}/{object}. In this format, bucket is a container in OSS for storing objects, and each bucket has a unique name; log on to the OSS console to view all buckets under the current account. object is a specific object, such as a file name or path, stored in the bucket. |

Note: For more information about the parameters, see Submit a task using spark-submit.
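The oss://{bucket}/{object} format can be checked before you paste a path into the node. This minimal Python sketch splits a path into its bucket and object parts; split_oss_path is a hypothetical helper that checks only the overall shape and does not validate OSS bucket naming rules.

```python
OSS_SCHEME = "oss://"


def split_oss_path(path: str) -> tuple[str, str]:
    """Split an oss://{bucket}/{object} path into (bucket, object)."""
    if not path.startswith(OSS_SCHEME):
        raise ValueError(f"not an OSS path: {path!r}")
    # Everything before the first "/" is the bucket; the rest is the object key.
    bucket, slash, obj = path[len(OSS_SCHEME):].partition("/")
    if not bucket or not slash or not obj:
        raise ValueError(f"expected oss://{{bucket}}/{{object}}, got {path!r}")
    return bucket, obj


print(split_oss_path("oss://mybucket/emr/SparkWorkOSS-1.0-SNAPSHOT-jar-with-dependencies.jar"))
# ('mybucket', 'emr/SparkWorkOSS-1.0-SNAPSHOT-jar-with-dependencies.jar')
```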

Debug a node

In the Run Configuration section, configure parameters, such as Computing Resource and Resource Group.

Configuration Item | Description |

Computing Resource | Select a bound EMR Serverless Spark computing resource. If no computing resources are available, select Create Computing Resource from the drop-down list. |

Resource Group | Select a resource group that is bound to the workspace. |

Script Parameters | When you configure the node content, you can define variables in the ${ParameterName} format. You must then specify the Parameter Name and Parameter Value in the Script Parameters section. These variables are dynamically replaced with their actual values at runtime. For more information, see Sources and expressions of scheduling parameters. |

ServerlessSpark Node Parameters | The runtime parameters for the Spark program. The following parameters are supported: DataWorks custom runtime parameters (see Appendix: DataWorks parameters) and Spark built-in property parameters (see Open-source Spark properties and Custom Spark Conf parameters). Configure each parameter in the following format: spark.eventLog.enabled : false. DataWorks automatically adds the parameters to the code that is submitted to Serverless Spark in the --conf key=value format. |

Note: DataWorks lets you configure global Spark parameters for each module at the workspace level and specify whether these global parameters take precedence over module-specific parameters. For more information, see Configure global Spark parameters.
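The two parameter mechanisms in the table above can be sketched in Python. The code is illustrative and does not reproduce DataWorks internals: substitute_script_parameters uses string.Template, whose ${name} syntax matches the ${ParameterName} format, and node_parameters_to_conf converts lines such as spark.eventLog.enabled : false into --conf key=value arguments. The script parameter name slices is hypothetical, and this topic does not describe where DataWorks places the --conf arguments in the submitted command.

```python
from string import Template


def substitute_script_parameters(code: str, params: dict[str, str]) -> str:
    """Replace ${ParameterName} placeholders with values from Script Parameters.

    Template.substitute raises KeyError when a placeholder has no value.
    """
    return Template(code).substitute(params)


def node_parameters_to_conf(lines: list[str]) -> list[str]:
    """Convert 'key : value' lines into spark-submit '--conf key=value' arguments."""
    args: list[str] = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        # Split at the first colon only, so values such as oss:// paths stay intact.
        key, sep, value = line.partition(":")
        if not sep or not key.strip():
            raise ValueError(f"expected 'key : value', got {line!r}")
        args += ["--conf", f"{key.strip()}={value.strip()}"]
    return args


# Hypothetical example: a script parameter named slices and one Spark property.
code = "spark-submit --class org.apache.spark.examples.SparkPi spark-examples_2.11-2.4.0.jar ${slices}"
print(substitute_script_parameters(code, {"slices": "100"}))
print(node_parameters_to_conf(["spark.eventLog.enabled : false"]))
# ['--conf', 'spark.eventLog.enabled=false']
```

Note that string.Template also treats a bare $name as a placeholder and $$ as an escaped $, which may differ from how DataWorks handles such text.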

On the toolbar of the node editor, click Run.

Important: Before you publish the node, you must synchronize the ServerlessSpark Node Parameters in the Run Configuration section to the ServerlessSpark Node Parameters in the Scheduling section.

Next steps

Schedule a node: If a node in the project folder needs to run periodically, you can set the Scheduling Policies and configure scheduling properties in the Scheduling section on the right side of the node page.

Publish a node: If the task needs to run in the production environment, click the icon to publish the task. A node in the project folder runs on a schedule only after it is published to the production environment.

Node O&M: After you publish the task, you can view the status of the auto triggered task in the Operation Center. For more information, see Get started with Operation Center.

References

Appendix: DataWorks parameters

Parameter | Description |

SERVERLESS_QUEUE_NAME | Specifies the resource queue to which the task is submitted. By default, tasks are submitted to the Default Resource Queue that is configured for the cluster in the Cluster Management section of the Management Center. If you require resource isolation and management, you can add queues. For more information, see Manage resource queues. Configuration methods:

|