In DataWorks, you can configure global Spark parameters at the workspace level for each module, such as Data Development, Data Analysis, and Operation Center. By default, each module uses these parameters to run tasks. You can also specify whether the global parameters take priority over the parameters configured within a specific module. For more information about the available Spark parameters, see the official Spark documentation. This topic describes how to configure global Spark parameters.

Background information

Apache Spark is an engine for large-scale data analytics. In DataWorks, you can use the following methods to configure the Spark parameters for running scheduling nodes:

Method 1: Configure global Spark parameters

At the workspace level, you can set the Spark parameters that a DataWorks module uses to run EMR tasks. You can also specify whether these parameters take priority over the parameters configured within a specific module. For more information, see Configure global Spark parameters.

Method 2: Configure Spark parameters within a product module

Data Development (Data Studio): For Hive and Spark nodes, you can set the Spark properties for a single node task in the Scheduling Configurations section on the right side of the node editing page.

Other product modules: You cannot set Spark properties separately within these modules.

Limits

Only the following identities can configure global Spark parameters:

An Alibaba Cloud account.

A Resource Access Management (RAM) user or RAM role that has the AliyunDataWorksFullAccess permission.

A RAM user with the Workspace Administrator role.

Spark parameters take effect only for EMR Spark nodes, EMR Spark SQL nodes, and EMR Spark Streaming nodes.

Note: If you want to enable Ranger access control for Spark in DataWorks, add the spark.hadoop.fs.oss.authorization.method=ranger configuration when you configure global Spark parameters to ensure that Ranger access control is enabled.

You can update Spark-related configurations in the Management Center of DataWorks and in the E-MapReduce console. If the configurations for the same Spark parameter conflict, the configuration in the DataWorks Management Center takes precedence for tasks that are submitted from DataWorks.

You can set global Spark parameters only for the Data Development (Data Studio), Data Quality, Data Analysis, and Operation Center modules.
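
The Ranger setting above is written as a single name=value string, while the configuration page described later in this topic takes a Spark Property Name and a Spark Property Value as separate fields. The following is a minimal Python sketch of that split. The split_property helper is hypothetical and exists only for illustration; it is not part of DataWorks or Spark.

```python
from typing import Tuple


def split_property(line: str) -> Tuple[str, str]:
    """Split a 'name=value' string into a (name, value) pair.

    Hypothetical helper for illustration only.
    """
    # Split at the first '=' only, because a property value may itself
    # contain '=' characters.
    name, sep, value = line.strip().partition("=")
    if not sep or not name.strip():
        raise ValueError(f"not a name=value pair: {line!r}")
    return name.strip(), value.strip()


name, value = split_property("spark.hadoop.fs.oss.authorization.method=ranger")
print(name)   # text for the Spark Property Name field
print(value)  # text for the Spark Property Value field
```

Splitting at the first equal sign keeps values that contain their own equal signs intact, for example JVM options passed through spark.executor.extraJavaOptions.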

Prerequisites

An EMR cluster is registered to DataWorks. For more information, see DataStudio (old version): Associate an EMR computing resource.

Configure global Spark parameters

Go to the page for configuring global Spark parameters.

Go to the SettingCenter page.

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to Management Center.

In the left-side navigation pane of the SettingCenter page, click Cluster Management. The Cluster Management page appears.

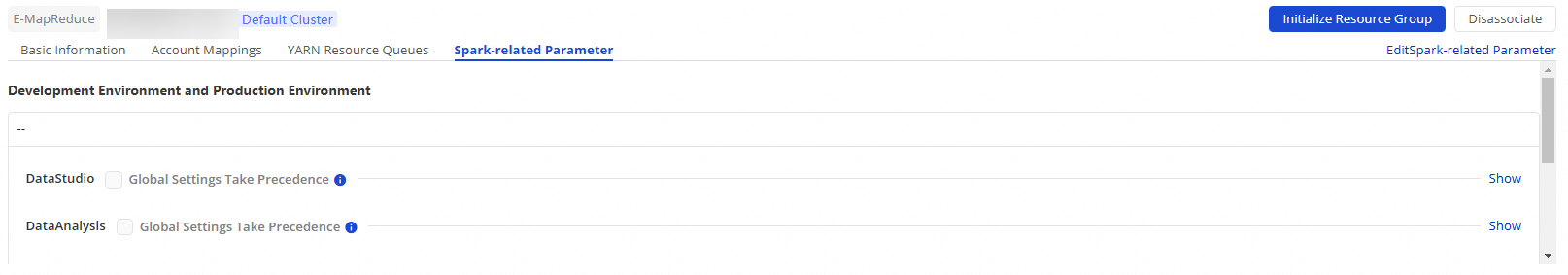

Find the desired EMR cluster and click SPARK Parameters to open the global Spark parameter configuration page.

Configure global Spark parameters.

Click Edit SPARK Parameters in the upper-right corner of the SPARK Parameters page to configure the global Spark parameters and their priority for each module.

Note: The configurations take effect globally in the workspace. Confirm that you are in the correct workspace before you configure the parameters.

Parameter

Description

Spark property

Configure the Spark properties (Spark Property Name and Spark Property Value) that each module uses when it runs EMR tasks. For more information about the available properties, see Spark Configurations and Spark Configurations on Kubernetes.

Global Configuration Has Priority

If you select this option, the global configuration takes precedence over the configuration within the product module. In this case, tasks are run based on the globally configured Spark properties.

Global configuration: The Spark properties that are configured for an EMR cluster on the SPARK Parameters page.

Note: You can set global Spark parameters only for the Data Development (Data Studio), Data Quality, Data Analysis, and Operation Center modules.

Configuration within the product module:

Data Development (Data Studio): For Hive and Spark nodes, you can set the Spark properties for a single node task in the Scheduling Configurations section on the right side of the node editing page.

Other product modules: You cannot set Spark properties separately within these modules.
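
The effect of the Global Configuration Has Priority option can be sketched in Python as follows. This is an illustration under stated assumptions, not the DataWorks implementation: the resolve_spark_properties function is hypothetical, the property values are made up, and the sketch assumes that the two levels are merged per property, so a property set at only one level is kept. This topic states only which level wins when the same property is set at both levels.

```python
from typing import Dict


def resolve_spark_properties(
    global_props: Dict[str, str],
    module_props: Dict[str, str],
    global_has_priority: bool,
) -> Dict[str, str]:
    """Return the properties a task would run with (hypothetical model).

    Assumption: properties are merged key by key, and the level that has
    priority wins only when both levels set the same property.
    """
    if global_has_priority:
        # Global values overwrite module-level values for the same key.
        return {**module_props, **global_props}
    # Module-level values overwrite global values for the same key.
    return {**global_props, **module_props}


# Example values are illustrative only.
global_props = {"spark.executor.memory": "4g", "spark.executor.cores": "2"}
module_props = {"spark.executor.memory": "8g", "spark.driver.memory": "2g"}

with_priority = resolve_spark_properties(global_props, module_props, True)
without_priority = resolve_spark_properties(global_props, module_props, False)
print(with_priority["spark.executor.memory"])     # global value is used
print(without_priority["spark.executor.memory"])  # module value is used
```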