In Operation Center, you can view tasks that are published to the production environment and perform related operations and maintenance (O&M), such as testing and data backfill. This topic uses an auto triggered task as an example to demonstrate the basic operations in Operation Center. You will learn how to confirm task configurations, backfill data for historical periods, and configure intelligent monitoring rules to ensure that your tasks are scheduled correctly.

Prerequisites

An auto triggered node named result_table is created and published. For more information, see Data development.

This topic uses the result_table node to demonstrate O&M operations. You can perform these operations on your own tasks as needed.

Background information

The DataWorks Operation Center supports operations management for various task types, such as auto triggered tasks, one-time tasks, and real-time sync tasks. It also provides multiple monitoring methods for objects such as the tasks and the resources they use. This helps you promptly identify and handle exceptions based on alerts to ensure efficient and stable data production.

This topic describes only the basic workflow for task execution in Operation Center. You can perform more advanced O&M operations as needed, such as the following:

Publish, unpublish, or freeze tasks. For more information, see Basic O&M operations for auto triggered tasks.

Manage and control O&M operations on tasks. For more information, see Manage and control O&M operations (Advanced).

For more information about Operation Center, see Overview of Operation Center.

Go to Operation Center

Log on to the Operation Center console. Switch to the destination region, select your workspace from the drop-down list, and then click Enter Operation Center.

Procedure

Phase 1: Test and verify the scheduling task

Step 1: View the configuration of the auto triggered task

To prevent an auto triggered task from being scheduled unexpectedly, you must check its configuration after you publish it. Make sure that parameters, such as scheduling parameters and the exclusive resource group for scheduling, are correct. If they are not, modify the configuration and publish the task again.

Step 2: Test the auto triggered task

Use the smoke testing feature to verify that the auto triggered task runs correctly in the production environment. If an error occurs, you must fix it promptly to ensure that the task can run as expected.

Step 3: Backfill historical data for the auto triggered task

Use the data backfill feature to recalculate data for a historical period.

Step 4: View recurring instances

After an auto triggered task is published, recurring instances are generated based on its schedule. If Instance Generation Mode is set to Generate On The Next Day (T+1) in DataStudio, the task starts scheduling on the next day. If it is set to Generate Immediately After Publishing, the task schedules on the same day. You can view the generation and running status of the instances to check whether the task is scheduled as expected.

Step 5: View the execution results

After you test a node or backfill data, you can check the status of the data write operation.

Phase 2: Monitor the auto triggered task

Step 6: Create a custom monitoring rule

You can configure intelligent monitoring rules for the auto triggered task as needed. This lets you monitor the scheduling and running status of the task to ensure that it is scheduled correctly in the future.

Step 7: Create an intelligent baseline (Advanced)

To ensure that high-priority tasks generate data by a specific time, you can set up intelligent baseline monitoring. If the system predicts that a task cannot be completed by the specified time, the baseline sends an alert about the task exception. This helps you quickly retrieve information about the exception and handle it.

Step 8: Create an automatic O&M rule for a resource group

You can create custom monitoring rules for an exclusive resource group. You can set monitoring alerts for the resource group's usage and the number of instances waiting on it, and then perform related O&M operations.

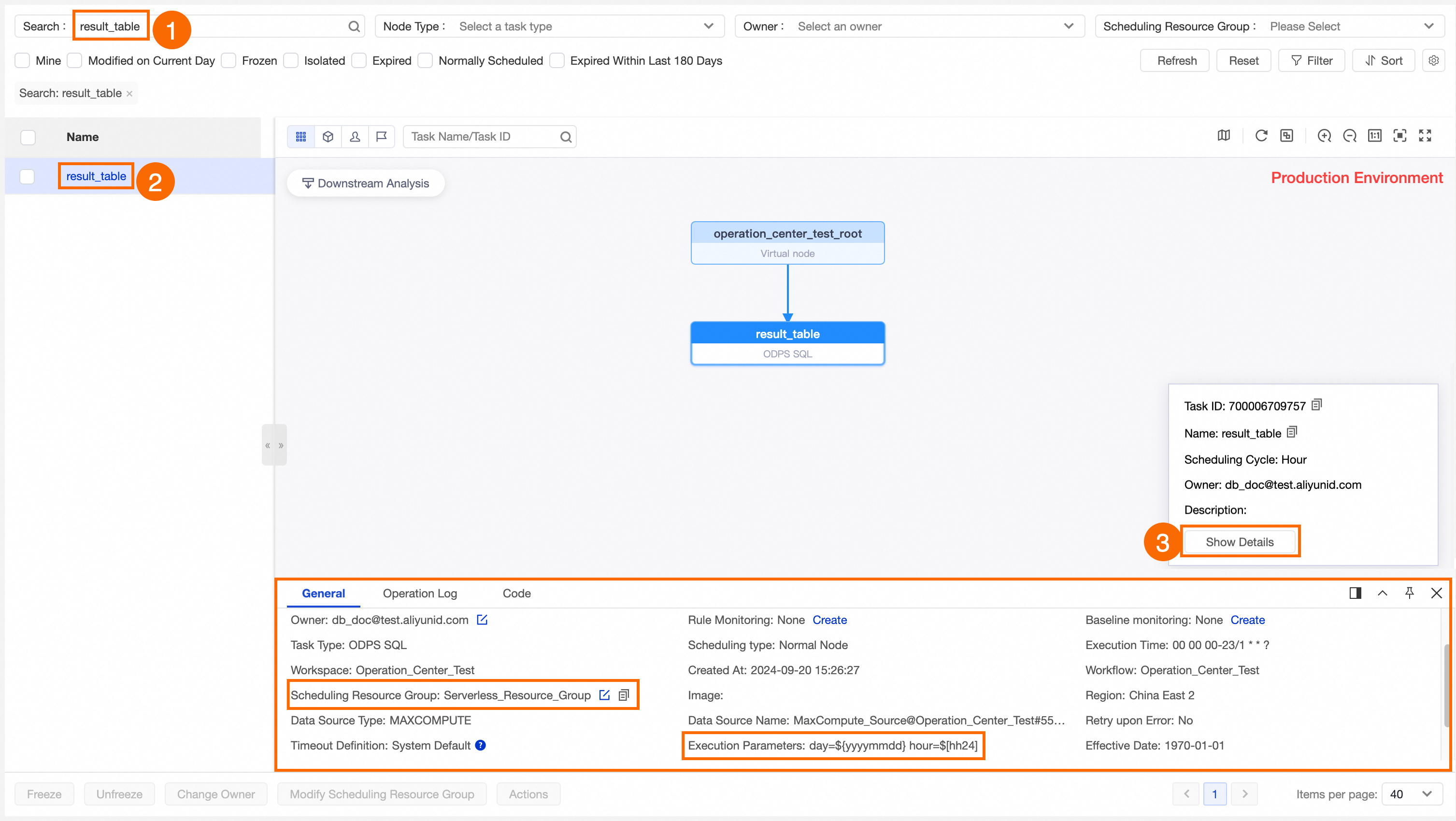

Step 1: View the configuration of the auto triggered task

To prevent an auto triggered task from being scheduled unexpectedly, you must check its configuration after you publish it. Make sure that parameters, such as scheduling parameters and node dependencies, are correct.

Find the destination node.

In the navigation pane on the left, choose .

On the Auto Triggered Task page, search for the destination node.

View the node details.

Click the destination node to go to its Directed Acyclic Graph (DAG).

Click Expand Details to view the detailed information about the node.

For more information about operations on auto triggered tasks, see Manage auto triggered tasks.

If the node configuration does not meet your expectations, go to Data Development (DataStudio) to find the node, modify its configuration on the node editing page, and publish it again. For more information, see Node-related operations.

This example shows how to find the published result_table node in the auto triggered task list and check whether its Scheduling Parameter and Schedule Resource Group are correctly configured.

Step 2: Test the auto triggered task

You can use the smoke testing feature for an auto triggered task to verify that its scheduling and execution in the production environment meet your expectations. This operation executes the actual code logic.

Go to the smoke testing page.

You can go to the smoke testing page using one of the following methods:

Method 1: In the auto triggered task list, find the destination task and click Test in the Operation column.

Method 2: In the DAG of the destination task, right-click the task and select Test.

Configure the data timestamp and runtime for the test, and then click OK.

When the task is tested, a test instance is generated. You can go to the page to view the instance details and check its running status.

Note: For more information about smoke testing, see Perform smoke testing.

To view test instances, see Run a test and view test instances.

This example shows how to test the result_table node and view the execution status of the generated test instance.

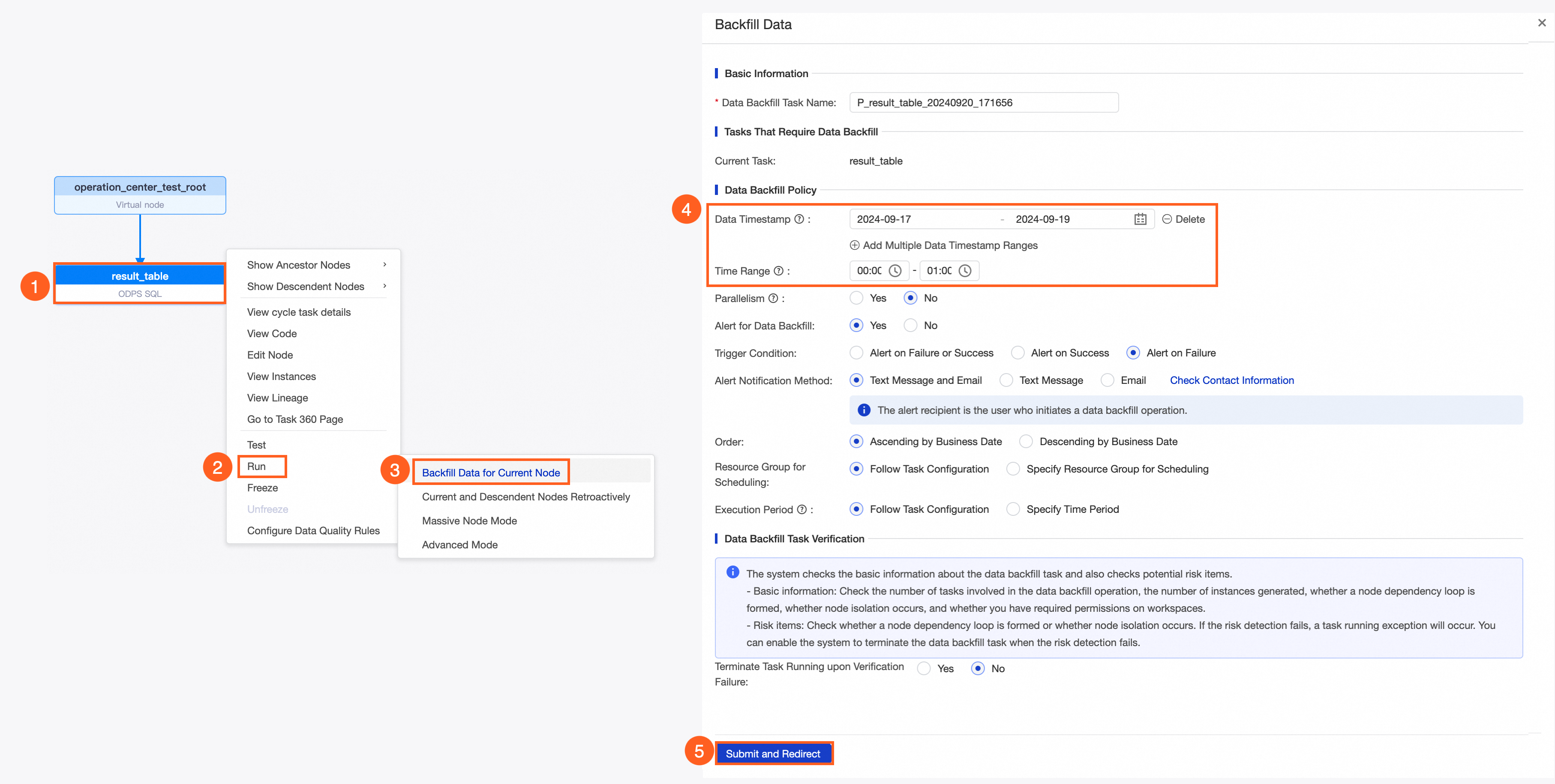

Step 3: Backfill historical data for the auto triggered task

After an auto triggered task is developed, submitted, and published, it runs at the scheduled time. If you want to recalculate data for a historical period, you can use the data backfill feature.

Go to the data backfill page.

You can go to the data backfill page using one of the following methods:

Method 1: In the auto triggered task list, find the destination task and click Backfill Data in the Operation column.

Method 2: In the DAG of the destination task, right-click the task and select Backfill Data.

Select a data backfill mode.

Select a data backfill mode as needed:

Data backfill mode

Description

Scenarios

You can select one or more tasks as root tasks and then manually select their descendant nodes as the scope for the data backfill. You can also specify a subset of descendant nodes.

Note: This mode is compatible with previous data backfill plans, such as Current Node, Current Node and Descendant Nodes, and Advanced Mode.

A maximum of 500 root tasks and 2,000 total tasks, including root tasks and their descendant nodes, are supported.

This mode is used for batch data backfill for the current node and its descendant nodes.

This mode is used to flexibly select a batch of nodes for data backfill. The nodes do not need to have dependencies.

You can select a start task as the root task and one or more end tasks. The platform automatically analyzes and includes all tasks between the start and end tasks, inclusive, in the data backfill scope.

This mode is used for end-to-end data backfill for tasks that have complex dependencies.

You can select a task as the root task and determine the data backfill scope based on the workspaces of its descendant nodes.

Note: This mode is compatible with the previous Massive Nodes Mode data backfill plan. A maximum of 20,000 tasks are supported. You cannot configure a task blacklist.

This mode is used if the descendant nodes of the current node are in multiple workspaces and you need to backfill data for nodes in those workspaces.

After you select a root task, the system automatically includes the task and all its descendant nodes in the data backfill scope.

Important: You can view which tasks are triggered only when the data backfill task is running. Use this mode with caution.

This mode is used for data backfill for a root task and all of its descendant nodes.
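The scope rules described above (a root task plus all of its descendant nodes, or every task between a start task and one or more end tasks, inclusive) can be pictured as graph traversals over the task dependencies. The following Python sketch is only an illustration of that idea, not how DataWorks implements it. The `dag` dictionary and the task names other than result_table are hypothetical.

```python
from collections import deque


def descendants(dag, root):
    """Return the root plus every task reachable from it (breadth-first)."""
    seen = {root}
    queue = deque([root])
    while queue:
        task = queue.popleft()
        for child in dag.get(task, ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def tasks_between(dag, start, ends):
    """Return the tasks on any path from start to one of the end tasks, inclusive."""
    # Reverse the edges so that the same traversal can walk upstream from the end tasks.
    reverse = {}
    for parent, children in dag.items():
        for child in children:
            reverse.setdefault(child, []).append(parent)
    upstream_of_ends = set()
    for end in ends:
        upstream_of_ends |= descendants(reverse, end)
    # A task is in scope if it is downstream of start and upstream of an end task.
    return descendants(dag, start) & upstream_of_ends


# Hypothetical dependency graph: task -> direct downstream tasks.
dag = {
    "ods": ["dwd", "audit"],
    "dwd": ["result_table"],
    "result_table": ["report"],
    "audit": [],
    "report": [],
}

all_downstream = descendants(dag, "dwd")
link_scope = tasks_between(dag, "ods", ["result_table"])
```

In this hypothetical graph, `all_downstream` contains dwd, result_table, and report, while `link_scope` contains ods, dwd, and result_table but excludes the audit branch.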

Configure data backfill parameters.

Configure the data timestamp, the nodes for which you want to backfill data, and other parameters as needed. The required parameters vary based on the selected mode. For more information, see Backfill data and view data backfill instances (New).

In this example, the data backfill mode Backfill Data For Current Node is selected. Data generated in the time period from 00:00 to 01:00 every day from 2024-09-17 to 2024-09-19 is backfilled for the result_table node. You can backfill data for the node by performing the operations shown in the following figure.

After you configure data backfill, the variables in the node code are replaced with specific values based on the scheduling parameters and data timestamp that you configured.

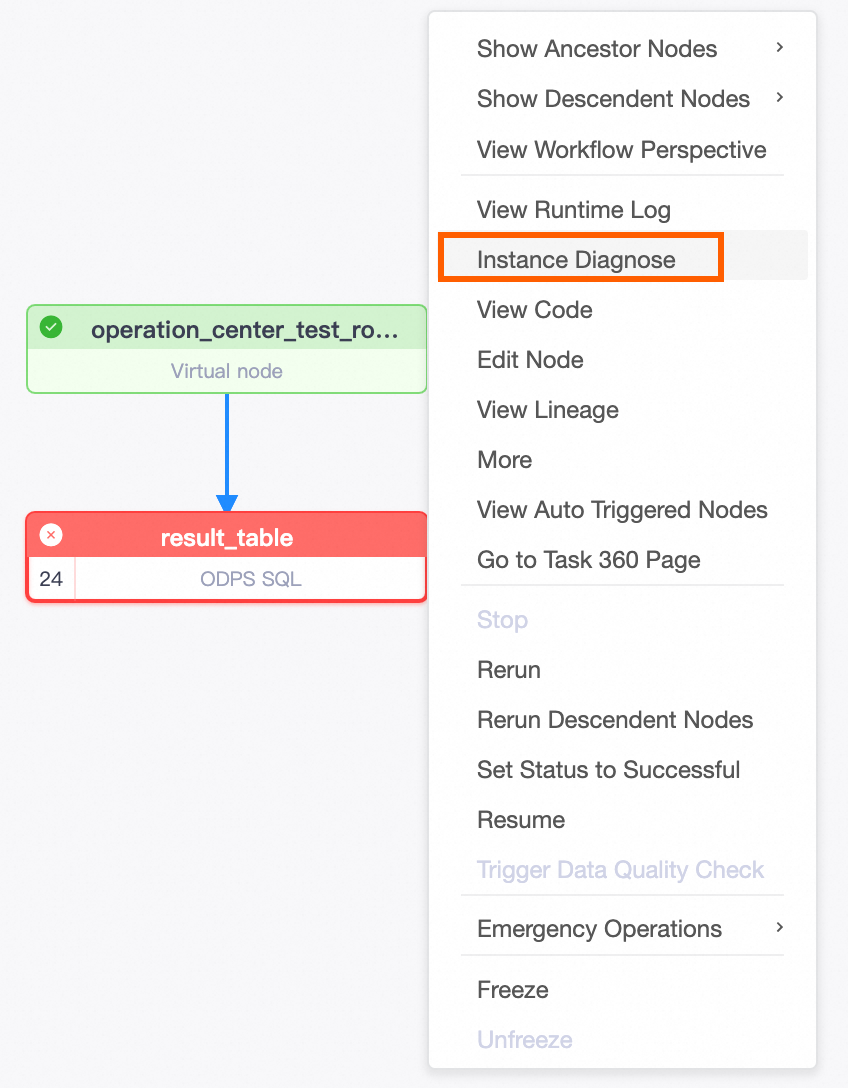

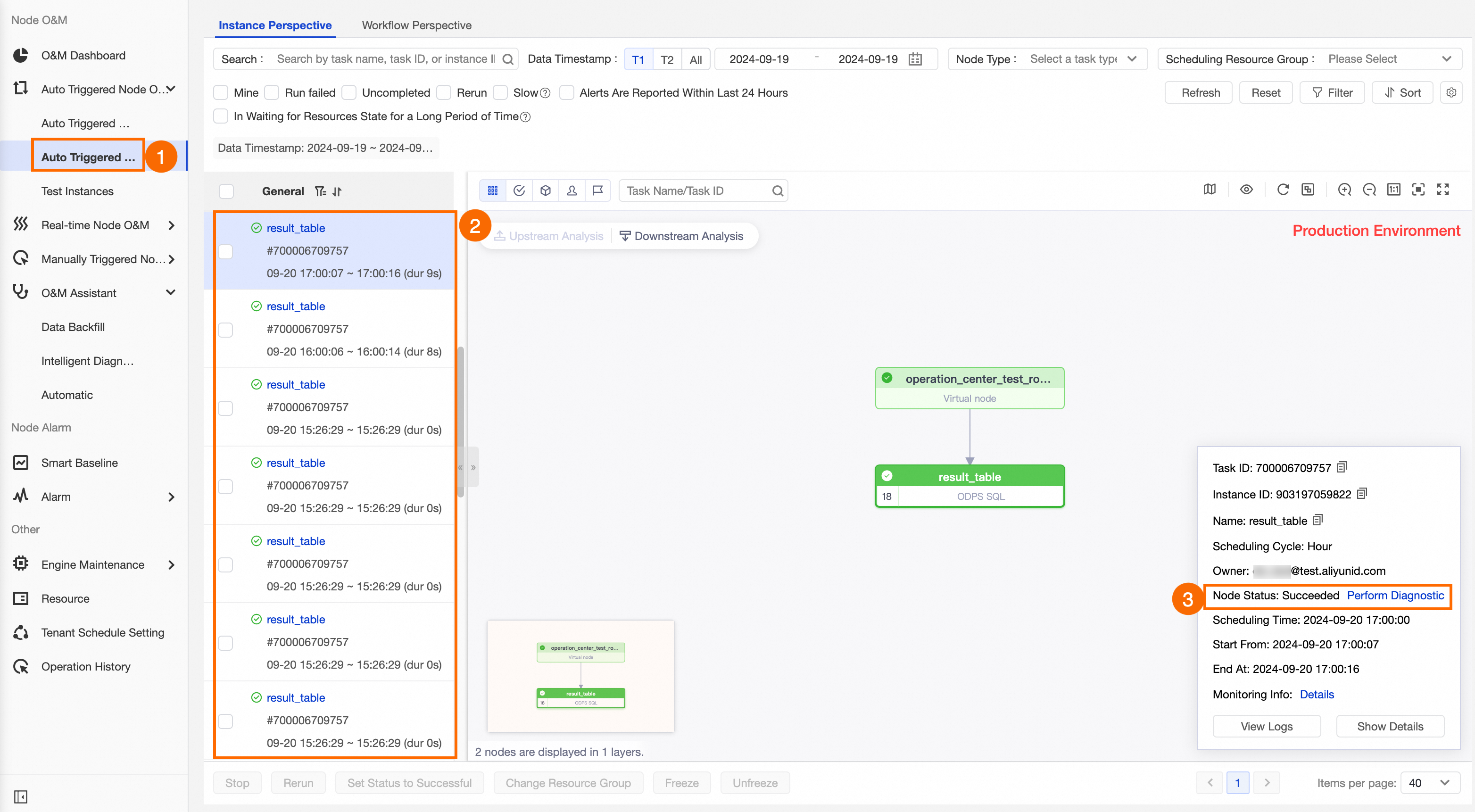
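To make the variable replacement concrete, the following Python sketch enumerates the data timestamps in the example range (2024-09-17 to 2024-09-19) and substitutes each one into a piece of node code. It is a simplified illustration: the `${dt}` placeholder, the `yyyymmdd` format, and the SQL text are assumptions made for this sketch and are not taken from the result_table node.

```python
from datetime import date, timedelta
from string import Template


def data_timestamps(start, end):
    """Yield one data timestamp per day in the inclusive range [start, end]."""
    day = start
    while day <= end:
        yield day
        day += timedelta(days=1)


def render(code, data_timestamp):
    """Replace the hypothetical ${dt} placeholder with the date as yyyymmdd."""
    return Template(code).substitute(dt=data_timestamp.strftime("%Y%m%d"))


# Hypothetical node code that reads one partition per data timestamp.
node_code = "SELECT * FROM result_table WHERE dt = '${dt}';"

rendered = [
    render(node_code, day)
    for day in data_timestamps(date(2024, 9, 17), date(2024, 9, 19))
]
```

Each entry in `rendered` corresponds to one backfilled data timestamp, so the three-day range produces three statements.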

Step 4: View recurring instances

After an auto triggered task is published, recurring instances are generated based on its schedule. If Instance Generation Mode is set to Generate On The Next Day (T+1) in DataStudio, the task starts scheduling on the next day. If it is set to Generate Immediately After Publishing, the task schedules on the same day. You can view the generated recurring instances to check whether the task is scheduled and running as expected.

Go to the recurring instances page.

In the navigation pane on the left of Operation Center, choose .

View recurring instances.

Check whether the corresponding recurring instances are generated and running as expected based on the schedule configuration of the task. For more information about recurring instances, see View recurring instances.

If an instance is not running, perform the following steps:

Use the Upstream Analysis feature in the DAG panel to quickly locate the key upstream tasks that are blocking the current task.

Use the Runtime Diagnosis feature to diagnose the reasons why the key upstream task is not running or to identify existing issues. If a task has deep dependency layers, you can use the runtime diagnosis feature to quickly locate issues and improve O&M efficiency.

This example shows the recurring instances of the hourly scheduled node result_table generated on 2024-09-19.
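The effect of the instance generation mode can be sketched in a few lines of Python. This is a simplified model of the behavior described above, not the scheduler's actual logic. The mode strings and the assumption that an hourly node runs on the hour from 00:00 to 23:00 are hypothetical.

```python
from datetime import date, datetime, timedelta


def first_scheduling_date(publish_date, mode):
    """Return the first day on which the task is scheduled after publishing."""
    if mode == "next_day":      # Generate On The Next Day (T+1)
        return publish_date + timedelta(days=1)
    if mode == "immediately":   # Generate Immediately After Publishing
        return publish_date
    raise ValueError(f"unknown instance generation mode: {mode}")


def hourly_instances(day):
    """Return the scheduled times of an hourly node for one day (assumed 00:00 to 23:00)."""
    midnight = datetime(day.year, day.month, day.day)
    return [midnight + timedelta(hours=hour) for hour in range(24)]


start_day = first_scheduling_date(date(2024, 9, 18), "next_day")
instances = hourly_instances(start_day)
```

Under these assumptions, a node published on 2024-09-18 in T+1 mode would have 24 recurring instances to check on 2024-09-19.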

Step 5: View the execution results

After you test an auto triggered task or backfill data, you can check the status of the data write operation in the following ways:

Go to Data Map to view the results.

Query the destination table in Data Map and view the table details to confirm that the data is written correctly. For more information about how to find and view tables, see Find a table and View table details.

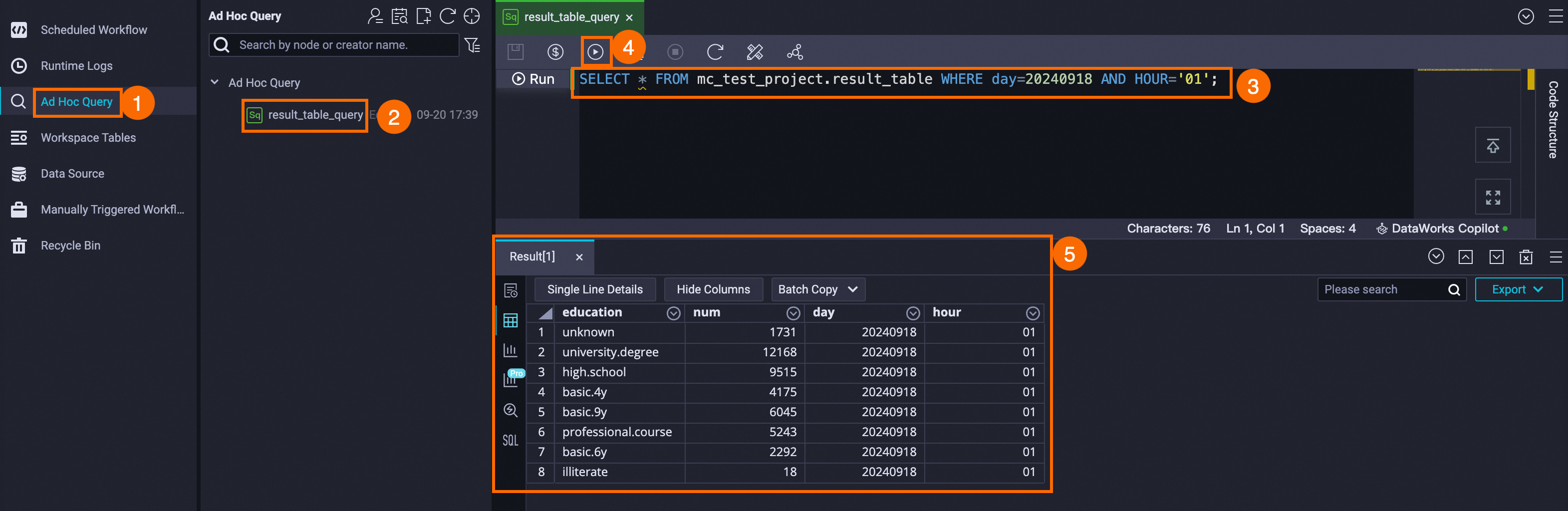

Use an Ad Hoc Query to view the results.

If you want to query data and run SQL code only in the developer environment (DataStudio), for example to check whether the runtime results match your expectations or to verify the code without publishing it to the production environment, you can create an ad hoc query file.

By default, a Resource Access Management (RAM) user does not have the permissions to query production tables in the MaxCompute DPI engine. You can go to Data Map and request permissions for the table on its product page. For more information, see Request table permissions.

When a node is executed in the developer environment, data is written to the developer DPI engine project. When the node is executed in the production environment, data is written to the production DPI engine project. Before you run a query, confirm the DPI engine project where the data is located. You can go to the Computing Resource page to view the DPI engine project information for the corresponding environment.

MaxCompute supports cross-project table access, such as accessing tables that are created in other data sources or accessing production project tables from a developer project. However, some DPI engines do not support this feature. Whether a DPI engine type supports cross-project table access depends on its actual features.

In this example, the DPI engine project for the result_table node in the production environment is mc_test_project. You can use an ad hoc query to create an ODPS SQL node and run an SQL statement to query the partition data of the mc_test_project.result_table table.

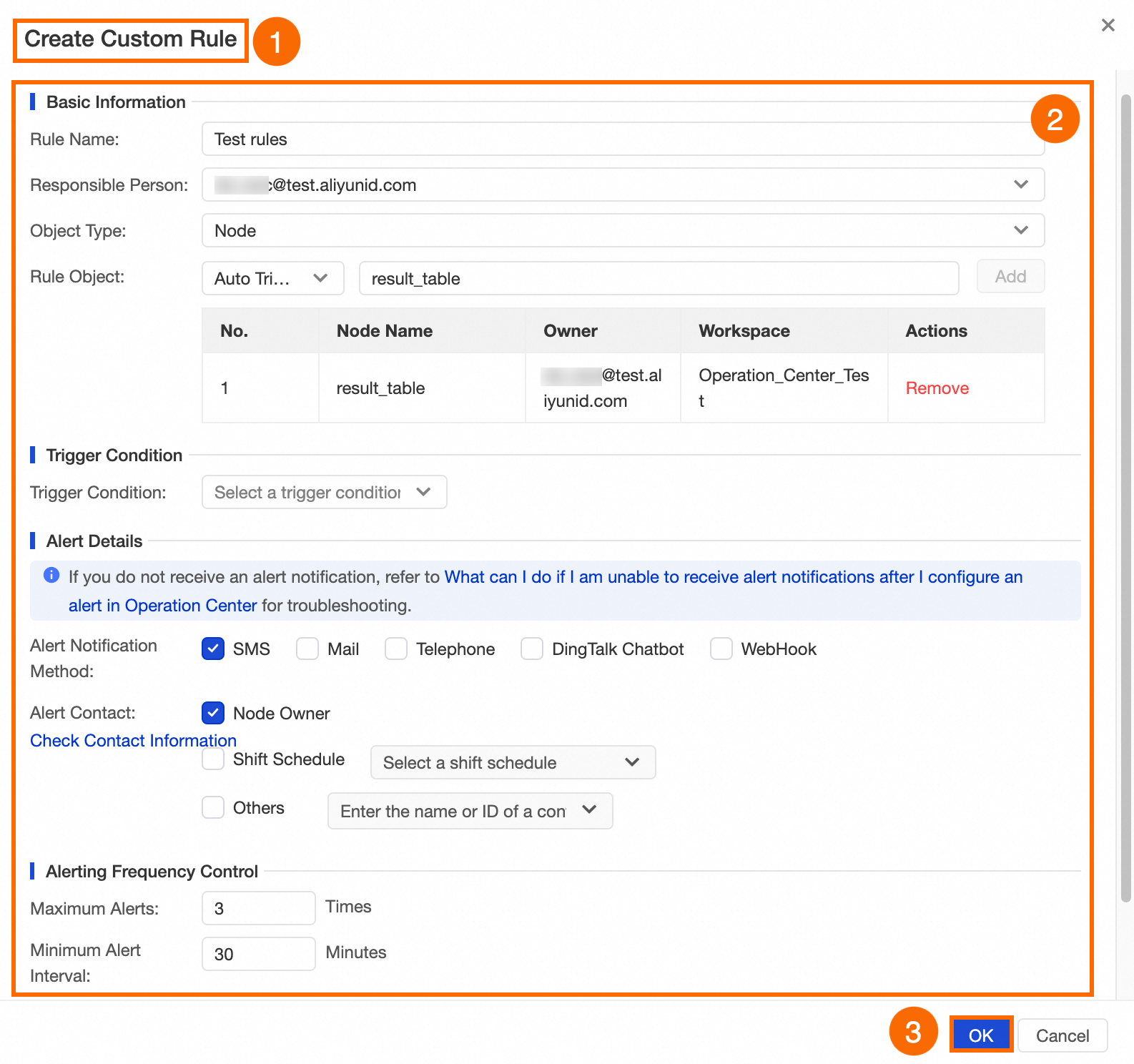
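As a rough illustration of the statement that such an ad hoc query might run, the following Python sketch assembles a partition query for mc_test_project.result_table. The partition column `dt`, the partition value, and the `LIMIT` clause are assumptions made for this sketch. Check the actual table schema in Data Map before you write the query.

```python
def build_partition_query(project, table, partition_column, partition_value, limit=10):
    """Build a SELECT statement for one partition of a project-qualified table."""
    # Accept only simple identifiers so that the generated SQL stays predictable.
    for name in (project, table, partition_column):
        if not name.replace("_", "").isalnum():
            raise ValueError(f"unexpected identifier: {name!r}")
    if not partition_value.isalnum():
        raise ValueError(f"unexpected partition value: {partition_value!r}")
    return (
        f"SELECT * FROM {project}.{table} "
        f"WHERE {partition_column} = '{partition_value}' LIMIT {int(limit)};"
    )


# 'dt' and '20240919' are hypothetical; replace them with the real partition spec.
query = build_partition_query("mc_test_project", "result_table", "dt", "20240919")
```

Qualifying the table name with the project makes it explicit that the query reads from the production project rather than the developer project.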

Step 6: Create a custom monitoring rule

After the node is tested and verified, you can create a custom monitoring rule for the node to monitor its running status. If the node fails to run, DataWorks sends an alert based on your configuration. This helps you promptly identify and handle exceptions and ensures that the node can be scheduled as expected in the future.

In the navigation pane on the left, choose .

Create a custom rule.

Click Create Custom Rule.

Configure the rule information.

You can customize the rule as needed. For more information about the configuration, see Custom monitoring rules.

This example shows how to configure a monitoring alert for the result_table node for when the node fails to run. The specific configuration is shown in the following figure. If the result_table node fails to run, the Test rules rule is triggered, and an alert is sent to the node owner by text message. The alert is sent a maximum of 3 times at an interval of 30 minutes.

Note: You must configure the alert contact information in advance. For more information, see View and set alert contacts.

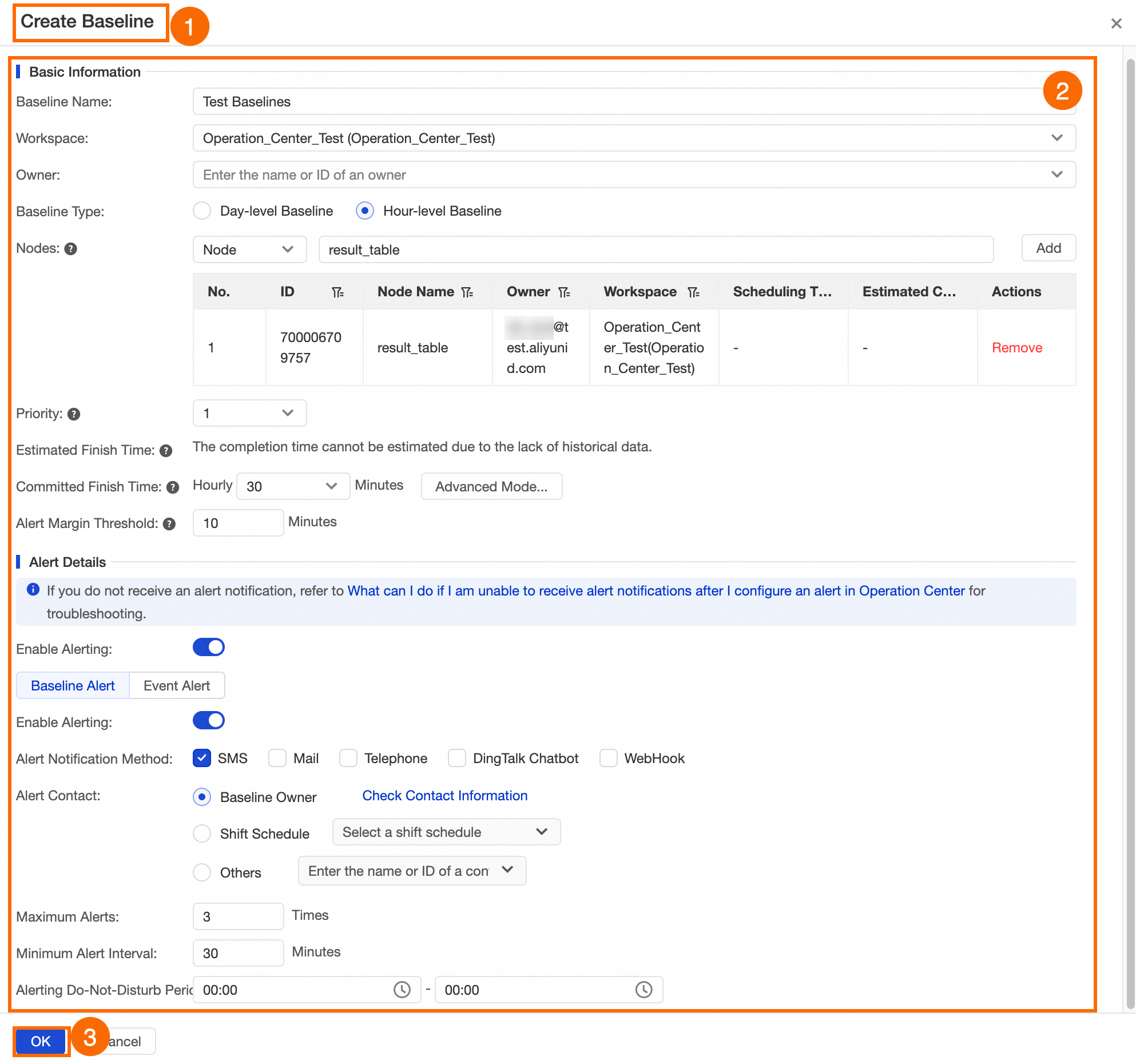
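The alert frequency in this example (a maximum of 3 alerts at an interval of 30 minutes) can be worked through with a short Python sketch. It assumes that the first alert is sent at the moment the failure is detected and that the node does not recover in between. Both are simplifying assumptions and not documented behavior.

```python
from datetime import datetime, timedelta


def alert_times(failure_time, max_alerts=3, interval_minutes=30):
    """Return the times at which alerts would be sent for one failure."""
    return [
        failure_time + timedelta(minutes=interval_minutes * index)
        for index in range(max_alerts)
    ]


# Hypothetical failure detected at 00:05 on 2024-09-19.
schedule = alert_times(datetime(2024, 9, 19, 0, 5))
```

Under these assumptions, the node owner would be notified at 00:05, 00:35, and 01:05, after which no further alerts are sent for this failure.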

Step 7: Create an intelligent baseline (Advanced)

To ensure that a task can generate data within a specified time, you can set up baseline monitoring for the task. You can add the task to a baseline and set its priority and committed time. DataWorks calculates the estimated completion time of the baseline task based on its running history and provides high-priority tasks with preferential access to scheduling resources. If DataWorks predicts that the baseline task may not be completed before the committed time, it sends an alert. You can handle the exception promptly based on the alert.

In the navigation pane on the left, click Intelligent Baseline.

Create an intelligent baseline.

On the Baseline Management tab, click Create Baseline.

Configure the baseline information.

You can configure the baseline information as needed. For more information about the configuration, see Create a baseline.

This example configures an hourly baseline for the result_table node to monitor its hourly data output. The specific configuration is shown in the following figure. The following list describes some of the parameters:

Priority: The larger the value, the higher the priority. High-priority tasks are provided with preferential access to scheduling resources when resources are scarce.

Estimated Finish Time: The estimated completion time is calculated based on the node's completion history over a period of time.

Committed Time: The latest time by which the node must generate data. You can set this based on your business needs and the node's actual completion history.

Alert Margin: You can set an alert margin based on the Committed Time to allow time for handling task exceptions. This helps ensure that the task can be completed by the committed time.

Note: The interval between the alert margin and the committed time must be at least 5 minutes.

If the hourly instance of the result_table node cannot be completed within 30 minutes of each hour, the Test Baselines baseline is triggered. An alert is sent to the node owner by text message. The alert is sent a maximum of 3 times at an interval of 30 minutes.

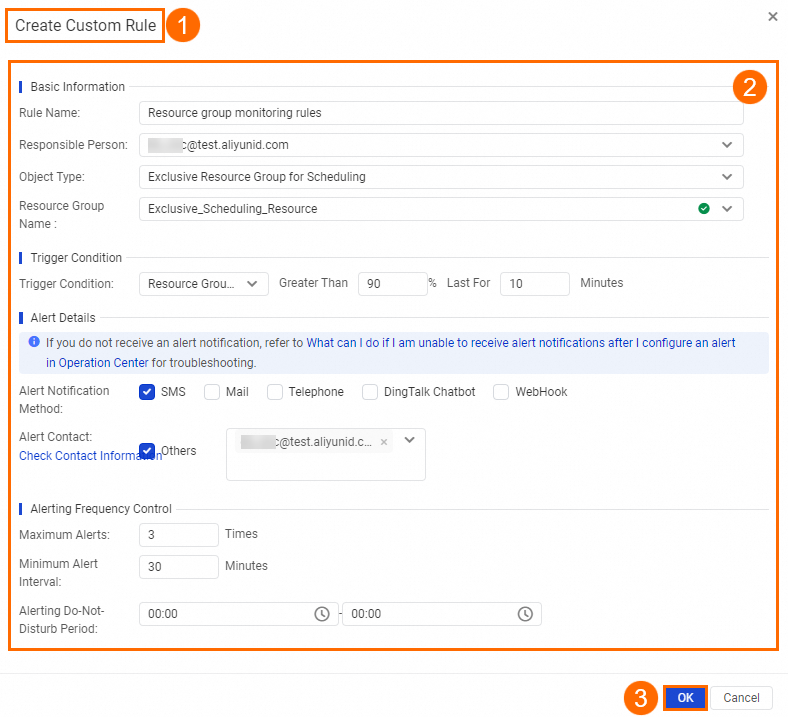
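The relationship between the estimated finish time, the alert margin, and the committed time can be sketched as a simple comparison. The following Python code is an illustrative model only. The status names, the sample times, and the rule of comparing the estimate against the alert margin are assumptions for this sketch, not the DataWorks prediction algorithm. Only the 5-minute minimum interval comes from the note above.

```python
from datetime import datetime, timedelta

MIN_MARGIN_GAP = timedelta(minutes=5)  # minimum interval stated in the note above


def baseline_status(estimated_finish, alert_time, committed_time):
    """Classify a baseline task by comparing its estimated finish time to both deadlines."""
    if committed_time - alert_time < MIN_MARGIN_GAP:
        raise ValueError("alert margin must be at least 5 minutes before the committed time")
    if estimated_finish <= alert_time:
        return "on_track"   # expected to finish before the alert margin
    if estimated_finish <= committed_time:
        return "at_risk"    # past the alert margin, but still before the committed time
    return "overdue"        # expected to miss the committed time


# Hypothetical hourly instance: alert margin 00:20, committed time 00:30.
alert_at = datetime(2024, 9, 19, 0, 20)
committed_at = datetime(2024, 9, 19, 0, 30)
status = baseline_status(datetime(2024, 9, 19, 0, 25), alert_at, committed_at)
```

The alert margin gives you a window (10 minutes in this hypothetical configuration) to handle the exception before the committed time is actually missed.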

Step 8: Create an automatic O&M rule for a resource group

If you use an exclusive resource group to run tasks, you can create automatic O&M rules for the exclusive resource group as needed. This lets you set monitoring alerts for the usage of the resource group and the number of instances waiting on it, and then perform related O&M operations.

The automatic O&M feature works by associating a monitoring rule with an exclusive resource group. You can customize monitoring metrics for instances that run on the destination resource group and define O&M rules based on your business logic. If an instance meets the filter conditions, the O&M action is automatically triggered and executed to achieve automatic O&M.

Currently, automatic O&M is supported only for exclusive resource groups for scheduling.

To prevent slow task execution that is caused by resource shortages, you can move the task to an exclusive resource group for scheduling. For more information about how to change the resource group that is used by a task, see General reference: Switch resource groups.

Create a monitoring rule for the resource group.

In the navigation pane on the left, choose .

Create and configure a monitoring rule for the resource group.

The configuration of a monitoring rule for a resource group is similar to the configuration of a monitoring rule for a node. You only need to set Object Type to Exclusive Resource Group For Scheduling. For more information about the configuration, see Custom monitoring rules.

This example monitors the resource group utilization of the Exclusive_Scheduling_Resource resource group. The configuration is shown in the following figure.

Note: This topic demonstrates only the configuration operation. When you configure the rule, make sure that you configure it for the resource group that you use.

If the resource group utilization of the Exclusive_Scheduling_Resource resource group is greater than 90% for 10 minutes, the Resource group monitoring rules rule is triggered. An alert is sent to the recipient by text message. This alert is sent a maximum of 3 times.

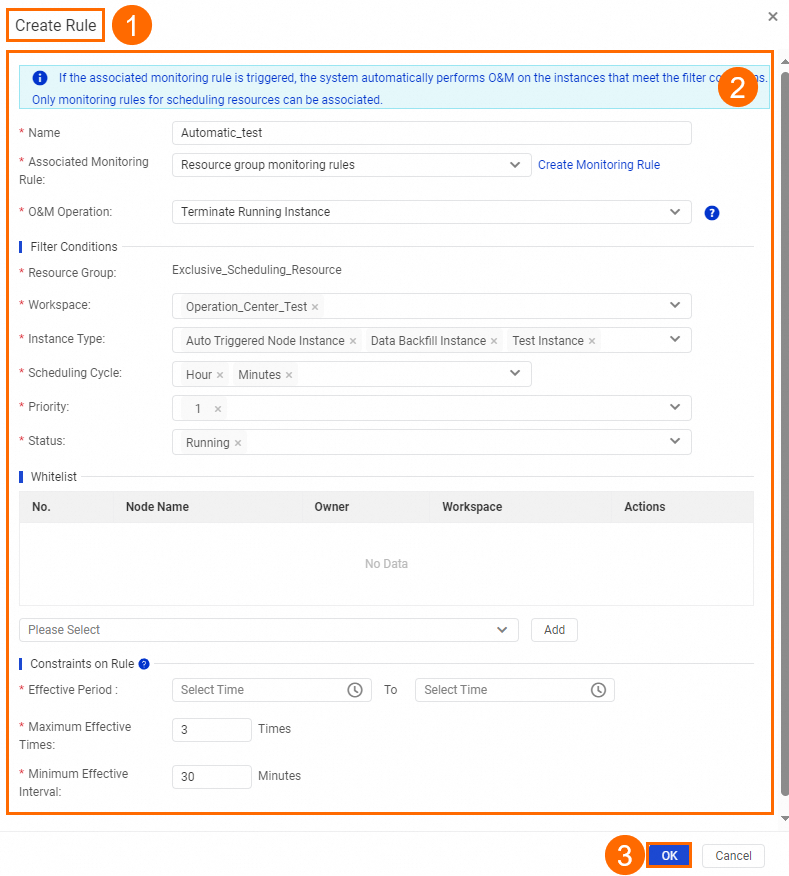
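The trigger condition in this example (utilization greater than 90% for 10 minutes) is a sustained-threshold check. The following Python sketch shows one way to evaluate such a condition over a series of utilization samples. The sampling format and the one-sample-per-minute data are assumptions for illustration and do not describe how DataWorks collects metrics.

```python
def sustained_breach(samples, threshold=0.90, duration_minutes=10):
    """Return True if utilization stayed above the threshold for the whole duration.

    samples is a list of (minute_offset, utilization) pairs sorted by time.
    """
    streak_start = None
    for minute, utilization in samples:
        if utilization > threshold:
            if streak_start is None:
                streak_start = minute          # a new run above the threshold begins
            if minute - streak_start >= duration_minutes:
                return True
        else:
            streak_start = None                # any dip resets the run
    return False


# Hypothetical samples, one per minute for minutes 0 through 10.
steady = [(minute, 0.95) for minute in range(11)]
dipped = [(minute, 0.50 if minute == 5 else 0.95) for minute in range(11)]

steady_triggers = sustained_breach(steady)
dipped_triggers = sustained_breach(dipped)
```

Here `steady_triggers` is True because utilization stays above 90% for the full 10 minutes, while `dipped_triggers` is False because the drop at minute 5 resets the run.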

Configure an automatic O&M rule based on the resource group monitoring rule.

In the navigation pane on the left, choose .

On the Rule Management tab, click Add Rule.

Configure the rule information.

You can configure the rule information as needed. For more information about the configuration, see Create an automatic O&M rule for a resource group.

This example creates a resource group named Automatic_test and associates it with Resource group monitoring rules, which is a monitoring rule that uses an exclusive resource group for scheduling. When the Resource group monitoring rules rule is triggered, DataWorks automatically performs O&M operations on instances in Automatic_test that meet the filter conditions. The following figure shows the configuration. The following section describes some of the parameters:

Associated Monitoring Rule: Currently, you can associate an automatic O&M rule only with a monitoring rule for an exclusive resource group for scheduling. You must create the required resource group monitoring rule in advance.

O&M Action: Currently, you can select only Stop Running Instance. This means that after the O&M rule is triggered, instances that meet the conditions are stopped.

If the utilization of the Exclusive_Scheduling_Resource exclusive resource group for scheduling exceeds 90% for 10 minutes, DataWorks stops the priority-1 hourly and minute tasks that belong to recurring instances, test instances, and data backfill instances running on the Exclusive_Scheduling_Resource resource group in the specified workspace.
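The filter conditions in this example can be read as a conjunction of checks on each running instance. The following Python sketch models that selection. The `Instance` fields, the value strings, the workspace name, and the instance names are hypothetical and chosen only to mirror the conditions described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    name: str
    priority: int
    cycle: str           # for example "minute", "hour", or "day"
    kind: str            # "recurring", "test", or "backfill"
    resource_group: str
    workspace: str


def instances_to_stop(instances, resource_group, workspace,
                      priorities=frozenset({1}),
                      cycles=frozenset({"hour", "minute"}),
                      kinds=frozenset({"recurring", "test", "backfill"})):
    """Return the running instances that match every filter condition."""
    return [
        instance for instance in instances
        if instance.resource_group == resource_group
        and instance.workspace == workspace
        and instance.priority in priorities
        and instance.cycle in cycles
        and instance.kind in kinds
    ]


# Hypothetical running instances in a workspace named "demo_workspace".
running = [
    Instance("result_table", 1, "hour", "recurring", "Exclusive_Scheduling_Resource", "demo_workspace"),
    Instance("daily_report", 1, "day", "recurring", "Exclusive_Scheduling_Resource", "demo_workspace"),
    Instance("critical_sync", 8, "minute", "recurring", "Exclusive_Scheduling_Resource", "demo_workspace"),
]

stopped = instances_to_stop(running, "Exclusive_Scheduling_Resource", "demo_workspace")
```

In this sketch only result_table is selected: daily_report is excluded by its scheduling cycle and critical_sync by its priority, which shows how narrow filter conditions keep the Stop Running Instance action away from higher-priority work.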

Manage and control O&M operations (Advanced)

Operation Center supports extension points, such as node freezing, node restoring, data backfill, and node unpublishing. You can use these extension points with extension programs to customize the logic processing and O&M operations for tasks. For more information, see Overview of extensions and Application example: Check for triggering events in Operation Center.

What to do next

You can configure Data Quality monitoring rules for the table data that is generated by the node to ensure that the data output meets your expectations. For more information, see Data Quality.