This article is based on an interview with Zhang Miao, Chief Technology Officer (CTO) of Wanwudezhi, and Zhu Shuohan, who leads big data at the company.

At the end of 2018, Wanwudezhi built a technical team from scratch. Since then, its technical architecture has changed rapidly, moving toward a service-oriented and platform-based design.

To support rapid business growth, Wanwudezhi rarely builds supporting services itself and instead relies heavily on the Software as a Service (SaaS) and Platform as a Service (PaaS) offerings of its cloud platform. For example, its big data system is built on Alibaba Cloud DataWorks and MaxCompute, which provide the core storage and computing components. For the upper-layer visualization and query-serving components, Wanwudezhi had many customization requirements, so it carried out secondary development on top of open-source solutions.

Zhang Miao believes efficiency is the top priority for a fast-iterating start-up. This directly influenced which cloud products the team chose for its research and development system. If you build the entire pipeline and infrastructure yourself, it is nearly impossible to develop quickly.

In the early days, Wanwudezhi had relatively little data, and all business data lived in a single large database instance. At that time, querying a read replica, or an analysis database built by subscribing to binlogs, was enough to produce the daily reports.

Running SQL was sufficient, which is a common solution when the data volume is small. At that time, there was no concept of big data. All Structured Query Language (SQL) scripts ran on MySQL, a relational database management system, to generate data reports, which were sent to the operations team on a regular schedule. This was the basic architecture of Wanwudezhi in the early days.
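As a minimal sketch of this early setup, the script below runs one aggregate query and returns the rows a daily report would contain. It uses Python's built-in `sqlite3` as a stand-in for MySQL so that it is self-contained. The `orders` table, its columns, and the sample rows are hypothetical and do not come from the interview.

```python
import sqlite3


def daily_order_report(conn):
    """Return (order_date, order_count, total_amount) rows, one per day."""
    cursor = conn.execute(
        "SELECT order_date, COUNT(*), SUM(amount) "
        "FROM orders GROUP BY order_date ORDER BY order_date"
    )
    return cursor.fetchall()


# In-memory database standing in for the single business database instance.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("2019-01-01", 10.0), ("2019-01-01", 5.0), ("2019-01-02", 7.5)],
)
report = daily_order_report(conn)
```

In a setup like the one described, a query of this kind would run against a read replica on a schedule, and the result would be exported for the operations team.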

About four or five months after the launch of the Wanwudezhi app, its e-commerce business grew rapidly, with exponential growth every month in 2019. This is when we found that MySQL could no longer handle our queries, and we began to explore new solutions for building a big data platform. Previously, we focused mostly on business data stored in the database, which was relatively simple. After we added event tracking, we also needed to analyze logs, and MySQL could not parse logs. We began to think about which big data platform architecture could meet our needs at that time.

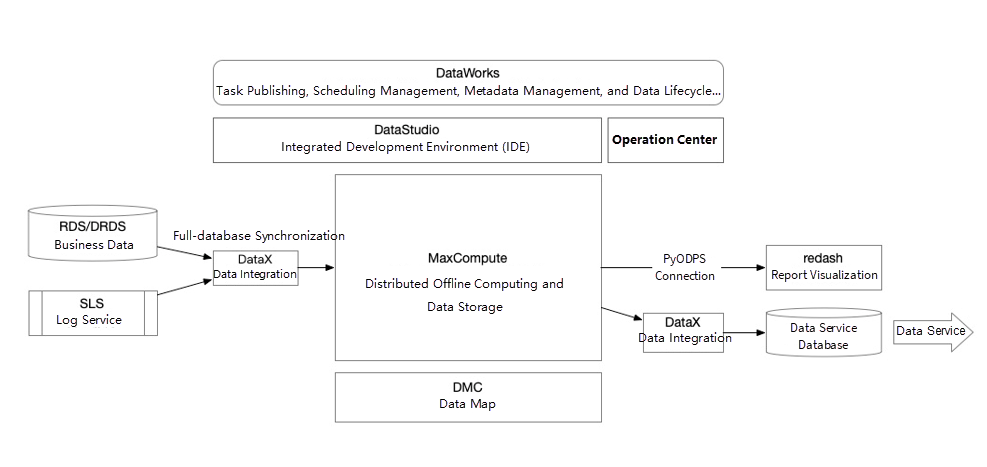

At that point, Wanwudezhi had limited staff, and the overall data scale was not large. MySQL could no longer handle the queries, but the data had not yet reached a truly large scale. We therefore leaned toward an end-to-end big data development platform. It was efficient, and we did not need to worry about much of the underlying layer. For a start-up, building the underlying data infrastructure in the early stage is a thankless task. Besides, such a platform could help us efficiently move the MySQL-based system to the cloud. We found that Alibaba Cloud's DataWorks and MaxCompute were in line with our expectations.

We started with the database. DataWorks supports one-click synchronization of an entire database to MaxCompute. The early construction was mostly configuration, and once it ran, the initial warehouse was ready. The volume was not large, so there was no need to consider things such as data splitting and layering. All of our business applications ran on Alibaba Cloud, and the business logs were also stored in Alibaba Cloud Log Service (SLS). SLS logs could be archived to MaxCompute through DataWorks, which shortened the data conversion pipeline and made it very convenient to combine our frontend logs with backend business data. Therefore, we built our earliest big data platform on DataWorks and MaxCompute.

Early Big Data Platform Architecture

At first, we sent reports by email, built with MySQL and Excel. After all, there were only a few people in the company at that time, and our business was focused. As the business grew, the number of business stakeholders grew, and the needs of each small department diverged. We then needed to build a visual data platform.

First, we used a pipeline of Redash, ApsaraDB RDS (RDS), and MaxCompute. MaxCompute processed the data and wrote the results back to RDS through the dataset layer, and the report visualization frontend then read from RDS for display. However, the process was long. You had to synchronize the business data to MaxCompute before a task could run, and after the task ran, its results were written to RDS for visualization. The entire process took a long time and had many intermediate steps. As data accumulated, RDS usage grew, and the storage cost became very high.

Then, we advanced to the second stage. We were using Redash and discovered that Alibaba Cloud MaxCompute provides PyODPS, a Python Software Development Kit (SDK). Through secondary development, we integrated PyODPS into our open-source tool so that it could query the data in MaxCompute directly without writing it back. This saved RDS storage space and shortened our data pipeline. Many tasks that previously had to write results back were gradually migrated in this direction. This transformation solved the problems of the long pipeline and insufficient storage space. However, a new problem occurred. MaxCompute stores data as files and is designed for offline processing, so queries could not return within seconds. We therefore looked deeper into MaxCompute and found that its Lightning feature met our expectations. Lightning is equivalent to a layer encapsulated on top of the offline system, similar to a database inside the data warehouse. All of our result tables were small and could be returned to the report system through Lightning. Our report system thus evolved into an architecture in which the business databases feed MaxCompute and results are returned to the report system through Lightning. We used this architecture for nearly a year to deliver data visualization and automated reports.
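The direct-read step, in which the report tool queries MaxCompute through PyODPS instead of reading a copy in RDS, might look roughly like the sketch below. It is not the team's actual Redash integration. The function names, the two-column result shape, and the credentials are hypothetical, while `ODPS(...)`, `execute_sql`, and `open_reader` are PyODPS calls.

```python
def fetch_report_rows(odps_client, sql):
    """Run `sql` on MaxCompute and return rows as (first, second) column tuples.

    Assumes the query selects exactly two columns (hypothetical report shape).
    """
    instance = odps_client.execute_sql(sql)  # waits for the SQL job to finish
    with instance.open_reader() as reader:
        return [(record[0], record[1]) for record in reader]


def build_client(access_id, access_key, project, endpoint):
    """Create a PyODPS entry object; all four arguments are placeholders."""
    # Imported lazily so fetch_report_rows can be tested without PyODPS installed.
    from odps import ODPS

    return ODPS(access_id, access_key, project, endpoint=endpoint)
```

A report query would then call `fetch_report_rows(build_client(...), "SELECT dt, order_cnt FROM some_result_table")`, where the table and column names are again placeholders.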

The problems we encountered in the initial stage were caused by the growth of the e-commerce business and the gradual introduction of other businesses, such as a content community and merchant services. In addition, the original single-database technical architecture hit an RDS bottleneck and could not scale indefinitely. As a result, we moved to a platform-based, service-oriented technical architecture. On the data side, the business development teams started to shard databases and tables across the entire platform. Each business application had only its own database instances, and each instance might hold multiple tables at the bottom layer. The same logical table of a business might be split into several physical tables. At this stage, there were many read replicas, and the business became more complex. Processing reports by accessing the source tables would be inefficient. We did two things to solve these two problems.

First, building on DataWorks and MaxCompute, we adjusted the original one-click, full-database archiving model. We split the synchronization into multiple serial baselines and assigned each baseline a start time based on the running time and resource usage of its nodes, which reduced concurrent requests to the read replica. Previously, the number of requests was too large. When they were sent concurrently, the Input/Output (I/O) of the read replica hit its limit and triggered a series of alarms. This way, the pressure on the read replica was reduced, and read replica costs were saved.
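The idea of spacing out start times can be sketched as a small scheduling function. This is an illustrative greedy scheduler, not DataWorks' own baseline mechanism. The job durations and the concurrency limit are hypothetical inputs.

```python
import heapq


def stagger_start_times(durations_min, max_concurrent, first_start_min=0):
    """Return a start offset in minutes for each sync job, in input order,
    so that at most `max_concurrent` jobs read the replica at the same time."""
    # Min-heap holding the time at which each concurrency slot becomes free.
    slots = [first_start_min] * max_concurrent
    heapq.heapify(slots)
    starts = []
    for duration in durations_min:
        start = heapq.heappop(slots)  # earliest moment a slot frees up
        starts.append(start)
        heapq.heappush(slots, start + duration)
    return starts


# Four sync jobs lasting 30, 10, 20, and 5 minutes, at most two at a time.
starts = stagger_start_times([30, 10, 20, 5], max_concurrent=2)
```

With `max_concurrent=1`, the jobs run strictly one after another, which corresponds to the fully serial case described above.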

Second, as the business diversified, we began data warehouse modeling. Database sharding, table sharding, and business changes brought in more types of databases. The earliest was RDS, a single MySQL instance. Later, a single MySQL instance could not support applications with large data volumes, so we introduced solutions such as PolarDB-X (DRDS) and ApsaraDB for HBase (HBase) for business data storage, computing, and processing. Business data was therefore stored in different media before reaching our data warehouse, and we needed to monitor the quality of data from different sources. For this, we used the features of DataWorks and MaxCompute, which monitor data quality on a schedule and, through the built-in alerting, notify us when the data inflow of a certain business is abnormal. This way, our data warehouse engineers could intervene and solve problems promptly.
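As an illustration of what an inflow check can look like, the sketch below compares today's row count for a source with the average of recent days and flags large deviations. It is a generic example and not the DataWorks data quality API. The function name, the 50% tolerance, and the sample counts are assumptions.

```python
from statistics import mean


def is_inflow_abnormal(recent_counts, today_count, tolerance=0.5):
    """Return True if today's row count deviates from the recent average
    by more than `tolerance` (0.5 means 50%)."""
    if not recent_counts:
        return False  # no history yet, so there is nothing to compare against
    baseline = mean(recent_counts)
    if baseline == 0:
        return today_count != 0
    return abs(today_count - baseline) / baseline > tolerance


# Hypothetical daily row counts for one business source.
history = [1000, 1100, 900]
```

A scheduled job could run such a check per source table after each synchronization and raise an alert when it returns `True`.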

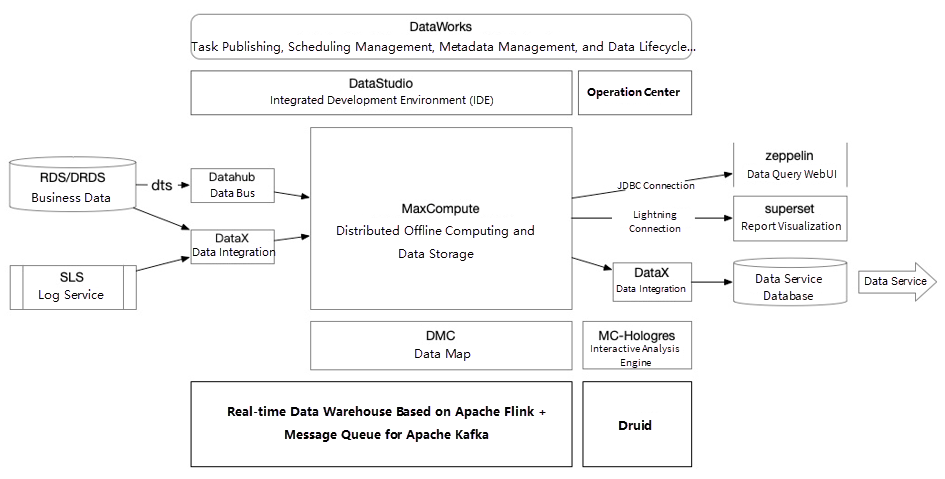

Current Big Data Architecture

The current situation differs from the mid-term stage because the platform's data volume has increased. The real problem is that a single table now holds a very large amount of data, regardless of the business type, and can no longer be imported in batches through the data integration feature of DataWorks. Since batch importing was not realistic, we investigated other ways to synchronize the business databases. We looked at Alibaba Cloud products, including Data Transmission Service (DTS), which supports data integration into a data warehouse. However, it was not a perfect fit. For example, DRDS data cannot be loaded directly into the data warehouse, yet with so many database shards and table shards, the DRDS data is exactly what we need to synchronize. We therefore iterated on data integration.

We introduced a new integration path, DTS plus DataHub, to synchronize data into the data warehouse. Since DataHub can archive data on a schedule that suits our needs, we archive data into the data warehouse every 15 minutes. The whole pipeline runs from the business database through DTS to DataHub, and DataWorks is then used to access MaxCompute, a cloud-native big data platform.
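To make the 15-minute cadence concrete, the sketch below maps an event timestamp to the archive window it would fall into. It only illustrates the windowing arithmetic and is not DataHub's archiving logic. The function name and the sample timestamp are hypothetical, and the function assumes the window length divides 60 evenly.

```python
from datetime import datetime, timedelta


def archive_window(ts, minutes=15):
    """Return the (start, end) of the fixed-size window containing `ts`.

    Assumes `minutes` divides 60, for example 5, 15, or 30.
    """
    floored_minute = ts.minute - ts.minute % minutes
    start = ts.replace(minute=floored_minute, second=0, microsecond=0)
    return start, start + timedelta(minutes=minutes)


# An event at 10:37:12 belongs to the 10:30 to 10:45 window.
start, end = archive_window(datetime(2021, 1, 1, 10, 37, 12))
```

Downstream quasi-real-time tasks can then process one window at a time once its end has passed.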

As quasi-real-time and real-time requirements increased, two problems needed to be solved urgently. First, all original data queries and quasi-real-time data queries depend on the computing capability of MaxCompute. To meet quasi-real-time demand, we run a large number of tasks every hour, half hour, or 15 minutes, but computing power is limited. When business intelligence (BI) staff want to query a table, the computing resources may be busy synchronizing other tables or running other tasks, so query efficiency is low. We then found Hologres, which can read the underlying file data of MaxCompute without occupying MaxCompute resources and runs as an independent computing cluster, which solves both query acceleration and resource isolation.

Second, we now need to provide many real-time data indicators to the business side, starting with the list category. In the second half of this year, we also launched an advertising platform, on which merchants can advertise. List, livestreaming, and other businesses rely on real-time data to generate business value, so we introduced a real-time data warehouse. It is built on Alibaba Cloud E-MapReduce (EMR), using Realtime Compute for Apache Flink and Message Queue for Apache Kafka.

There are several data sources, one of which is the path from DTS to DataHub. Besides being archived to MaxCompute, DataHub data can also be subscribed to by Flink in these scenarios. We run Flink in Flink on YARN mode, and YARN on EMR gave us the framework for the real-time data warehouse. Once the real-time warehouse was built, the next requirement was to deliver the real-time data. We need to report on and visualize the data and automatically push a series of data to the business teams.

At this point, we introduced Druid as the query engine and Superset for data visualization. Druid and Superset integrate naturally, and Druid can consume Message Queue for Apache Kafka directly, which closes the real-time data loop and completes our current big data platform. The offline framework consists of MaxCompute, DataWorks, and report visualization. The real-time framework consists of Flink, Message Queue for Apache Kafka, Druid, and Superset.

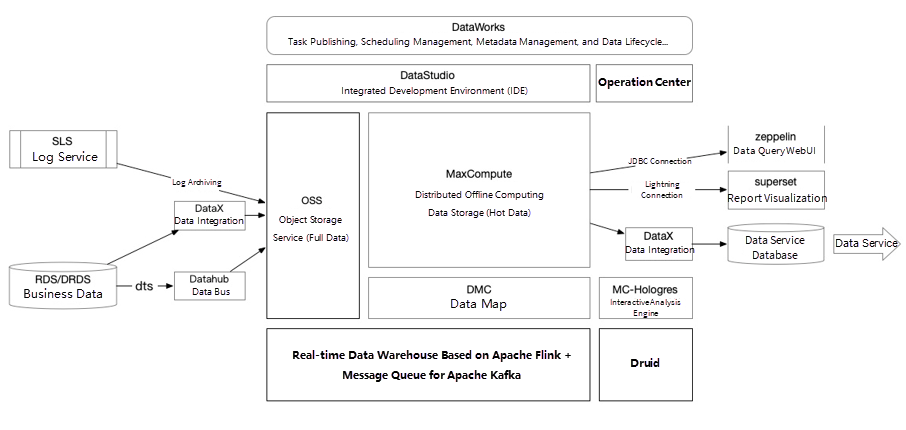

Future Plan

The eventual plan is to adopt Alibaba Cloud LakeHouse. We have considered this plan from two aspects.

On the one hand, with Alibaba Cloud LakeHouse, the offline and real-time systems can read the same data, so data no longer needs to be stored as multiple copies in multiple places. This ensures data consistency and saves storage cost. Unified storage also avoids data silos. All data on the platform, whether it is being stored, written, or read, can be analyzed in association with other data. Beyond structured data, we are also exploring unstructured and semi-structured data. On the other hand, hot and cold data must be separated, which also lets us optimize storage cost as part of the overall big data cost.

Cold data does not need to be stored in a medium designed for intensive access. Alibaba Cloud LakeHouse can help us meet this hot and cold data separation requirement by archiving cold data to Object Storage Service (OSS). Hot data that is accessed every day stays in MaxCompute, but we can also put it in OSS to keep a complete data backup. The EMR cluster can read the OSS data through JindoFS, which connects the offline and real-time storage of the whole cluster. Data exchange and information exchange can then be completed through the same medium. This is what we hope to accomplish in the future.
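A hot and cold split of this kind usually comes down to a rule on how recently data was accessed. The sketch below shows one such rule. The 30-day threshold, the function name, and the tier labels are assumptions for illustration, and the actual archiving to OSS would be handled by the platform rather than by this code.

```python
from datetime import date


def storage_tier(last_access, today, hot_days=30):
    """Classify a partition as "hot" or "cold" by days since last access."""
    age_days = (today - last_access).days
    return "hot" if age_days <= hot_days else "cold"


# A partition read last week stays hot; one untouched for months is cold.
recent = storage_tier(date(2021, 6, 20), today=date(2021, 6, 27))
stale = storage_tier(date(2021, 1, 5), today=date(2021, 6, 27))
```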
