By Meng Shuo (Qianshu)

In this article, Meng Shuo, MaxCompute product manager in the Alibaba Cloud business unit, discusses what defines a SaaS-based cloud data warehouse integrated with AI.

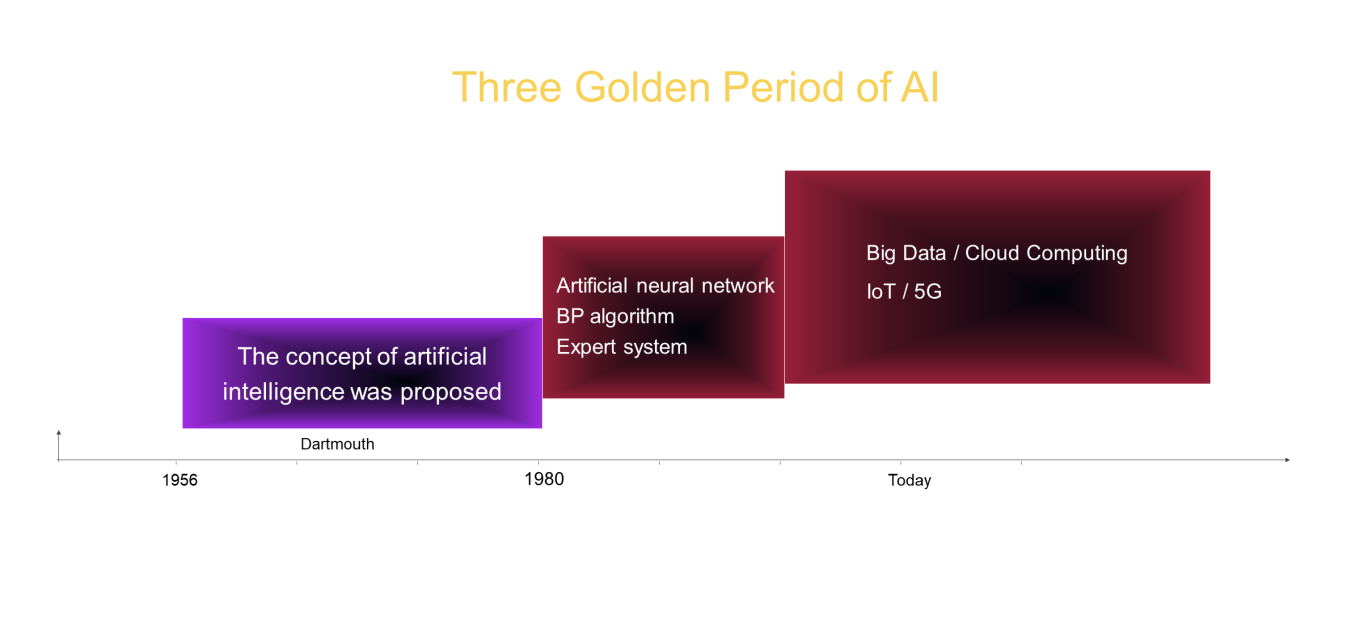

Artificial intelligence (AI) is a concept that emerged as early as the 1950s. For various reasons, it then lay dormant for decades, and only in the last few years has it become popular again. In fact, AI has enjoyed three "golden periods" of development in its history.

The first came when the concept of AI was put forward: scholars thought that AI technology could change the world, but it did not. The second came around the 1980s, when algorithms that simulate the thinking of the human brain, such as neural networks, were proposed, but they did not develop rapidly either. The third can be considered to have started around 2010. Unlike the previous two periods, this one has big data as the means of production, powerful computing power, and cloud computing as the infrastructure.

Moreover, IoT and 5G technologies and scenario-driven applications took off during this period. For example, online search is one of many scenarios where AI algorithms are applied. This period is therefore a golden age for AI development.

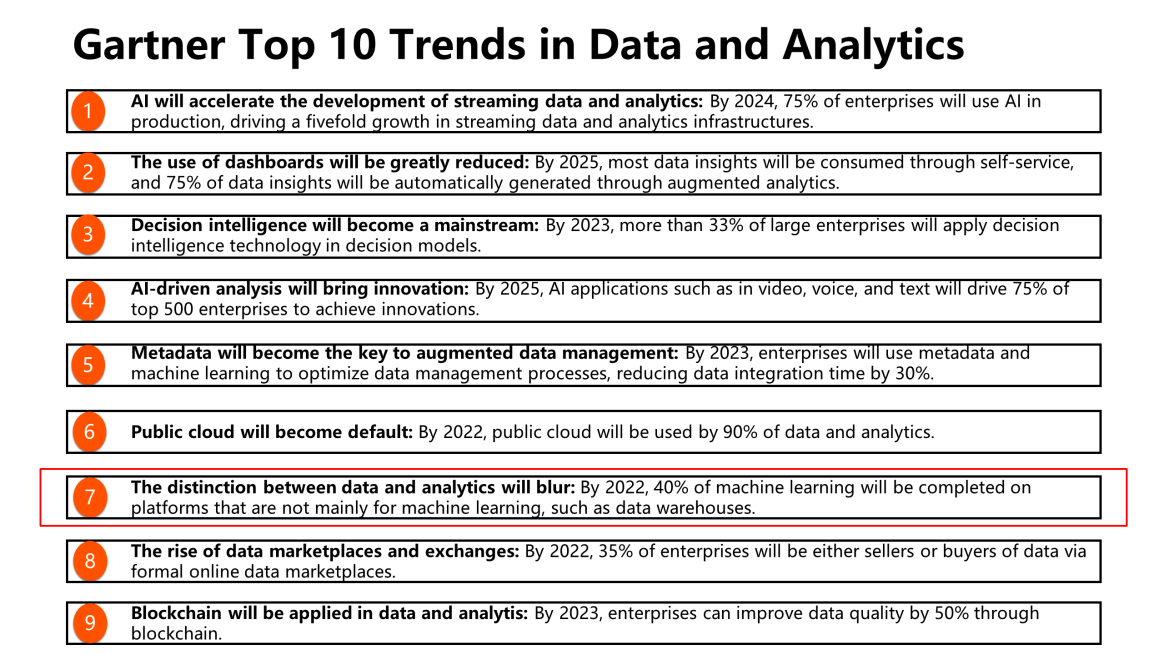

The figure above shows ten trends in data and analytics predicted by Gartner.

It indicates that the boundary between data and analytics will gradually blur. Gartner predicted that by 2022, 40% of machine learning would be completed on platforms that are not built mainly for machine learning, such as data warehouses. MaxCompute integrated with AI is well aligned with this trend.

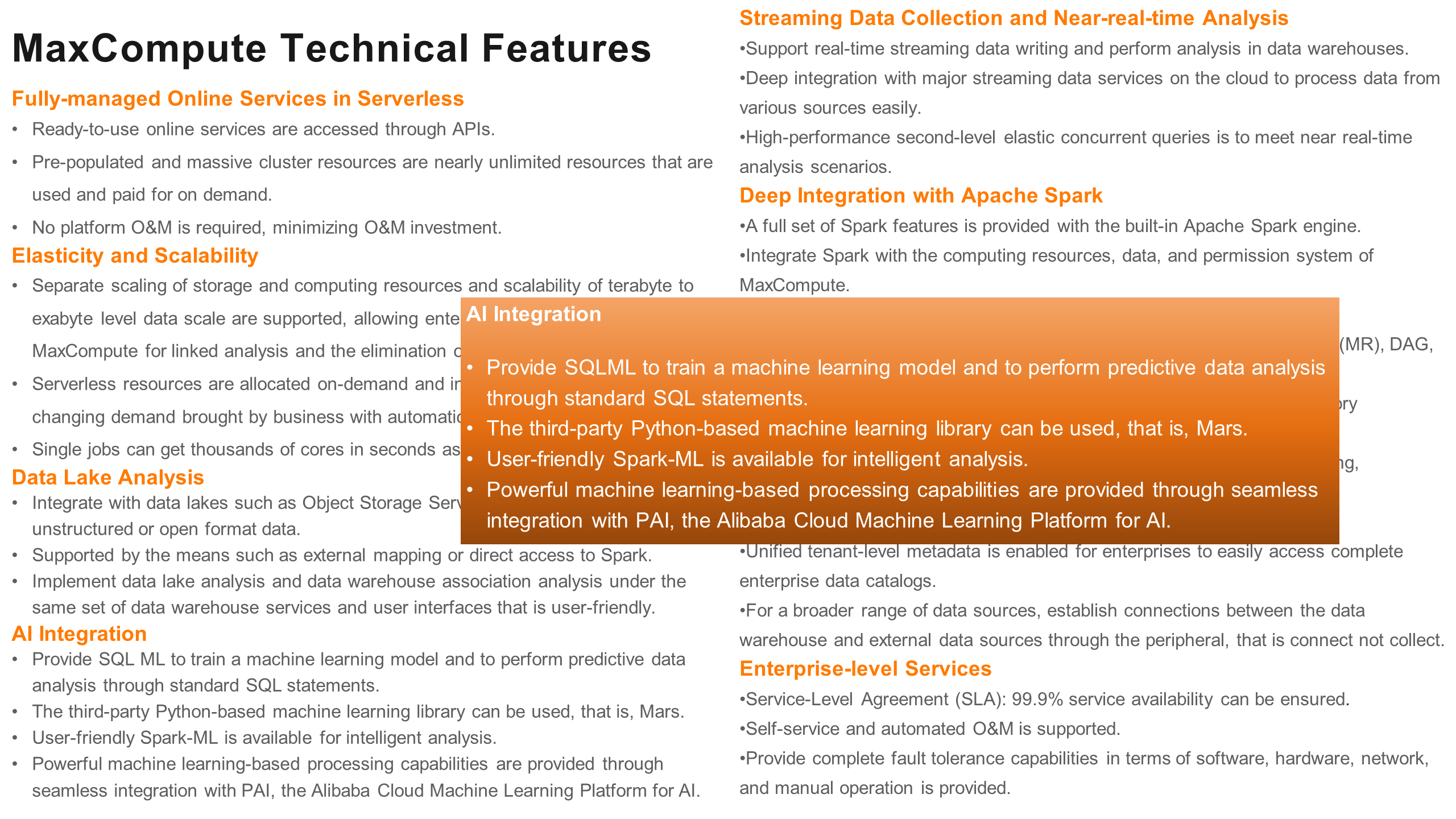

This is because the data warehouse, and MaxCompute in particular, carries the data assets of the entire enterprise. MaxCompute is a data platform with storage ranging from terabytes to exabytes, and its storage capacity scales elastically. Building machine learning into the data warehouse therefore has obvious advantages.

In addition, building machine learning into the data warehouse benefits every type of user. Business people can test new ideas quickly and improve their return on investment (ROI). Data scientists and data analysts can do most of their work in SQL and Python, and in this easy-to-use, efficient mode, model development integrates seamlessly with the production environment. Database administrators (DBAs) find it easier and more secure to manage data.

The product features of MaxCompute have been covered previously and are not described in detail here. This section introduces the main capabilities of MaxCompute integrated with AI.

SQLML and Mars are two of the native AI extension capabilities of MaxCompute. This article focuses on these two capabilities.

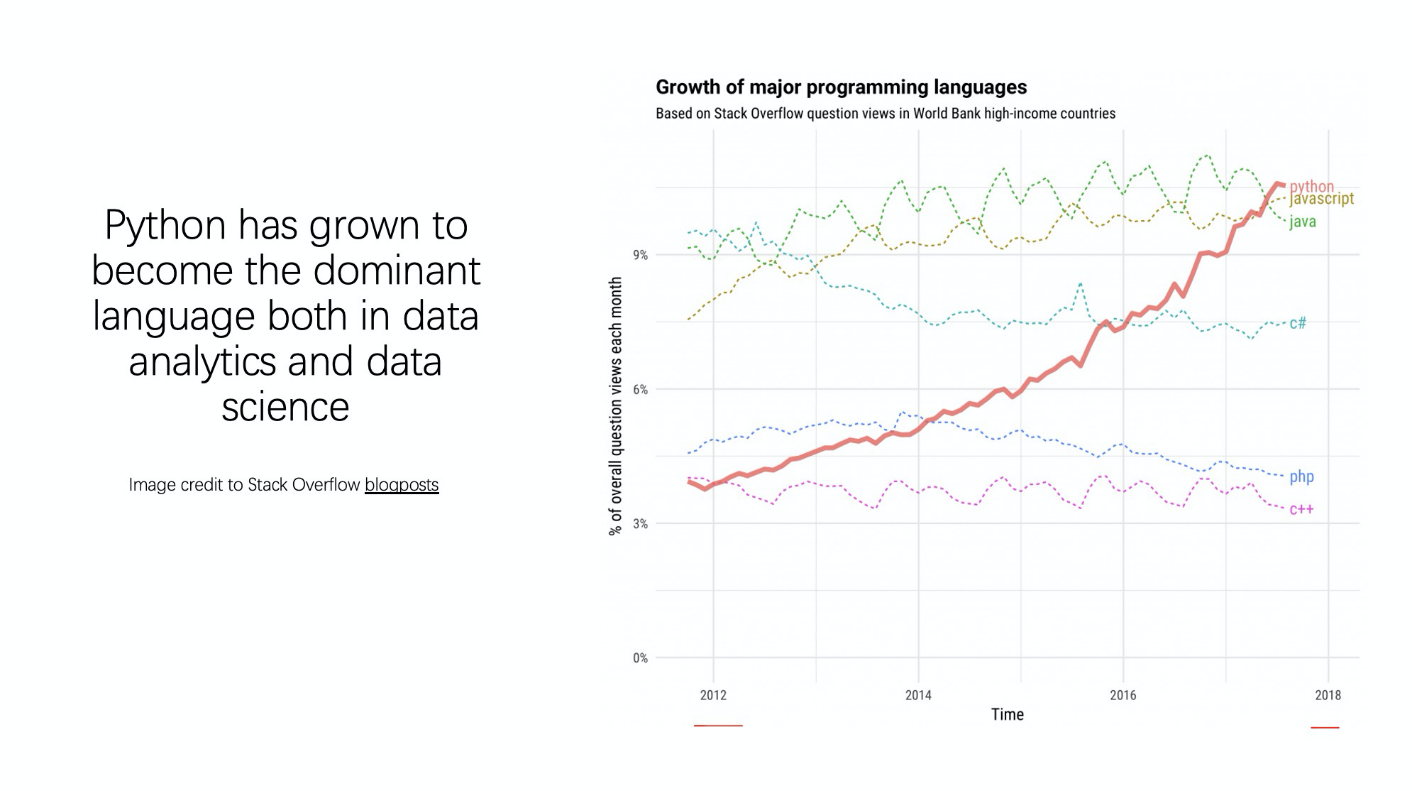

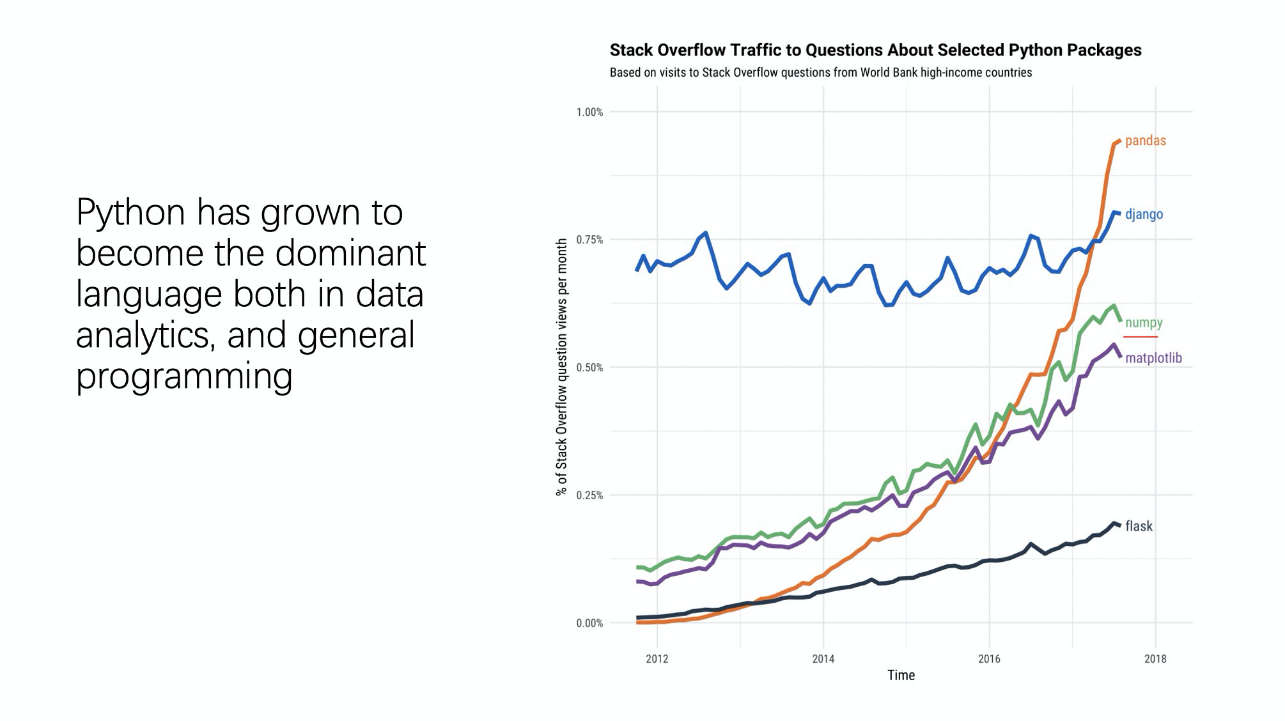

SQL and Python were chosen because they are two of the most popular languages in data processing and machine learning today. The following two figures show the development and status of SQL and the development of Python.

Among data processing languages, SQL-based relational databases and similar systems remain among the top data processing engines and have a stable ecosystem. Python, with its powerful machine learning ecosystem, is becoming the mainstream language in data analytics and data science. Building the MaxCompute integration on these two languages follows this fundamental trend and reduces learning and migration costs for users.

The project is named Mars. The name originally stood for matrix and array, but it is no longer limited to them. Since the data dimensions in the project can be very high, Alibaba Cloud has set a higher goal and constantly challenges itself.

The reasons for creating Mars are as follows:

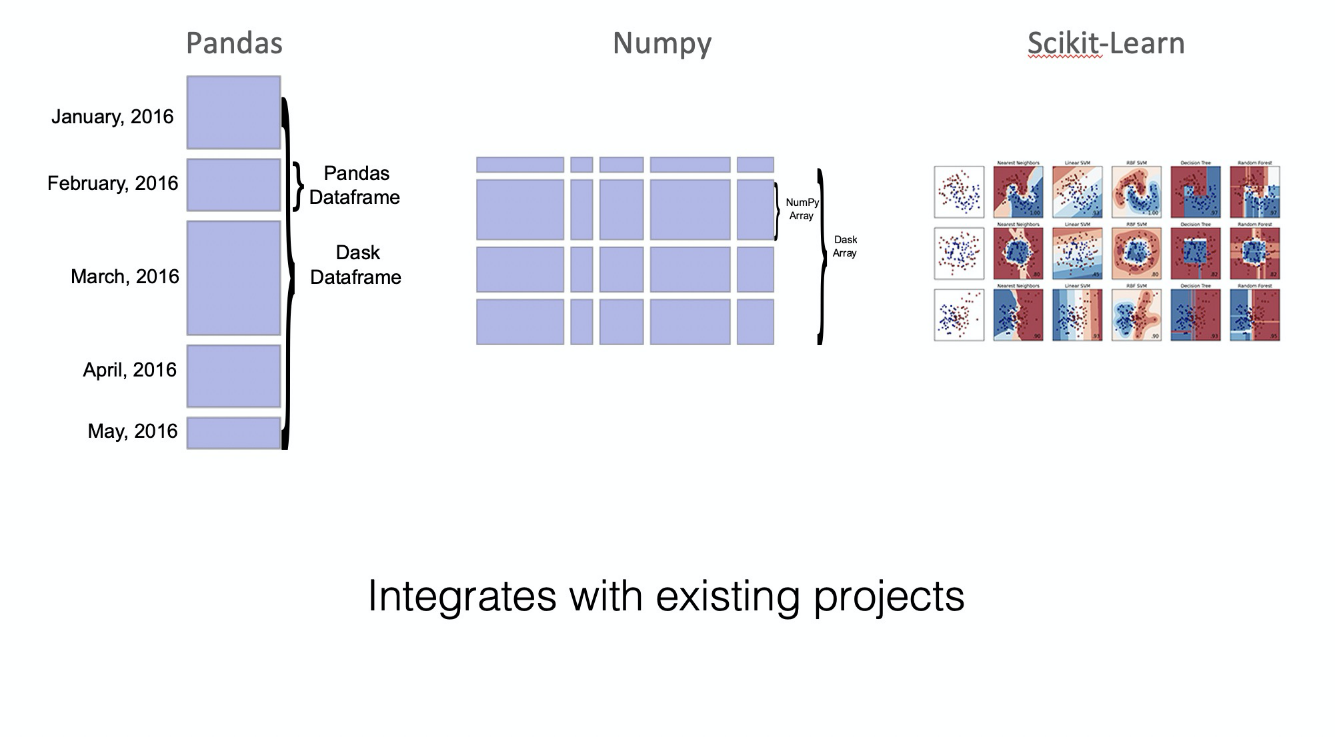

Mars is now the only large-scale scientific computing engine in commercial use. For more information about Mars, visit the Alibaba Cloud official website. The basic idea of Mars is shown in the following figure: it mainly provides distributed processing for the mainstream scientific computing and machine learning libraries in Python.
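One way to picture this idea is to split a large array into chunks, compute a partial result per chunk in parallel, and then combine the partial results. The sketch below illustrates that pattern with the Python standard library only. It is not the Mars API: the function names are hypothetical, and a thread pool stands in for the workers of a real cluster.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, chunk_size):
    """Tile a flat list into consecutive chunks of at most chunk_size items."""
    return [values[i:i + chunk_size] for i in range(0, len(values), chunk_size)]


def chunk_stats(chunk):
    """Partial result for one chunk: (sum of items, number of items)."""
    return sum(chunk), len(chunk)


def chunked_mean(values, chunk_size=4, workers=2):
    """Compute a mean by mapping over chunks and reducing the partial results."""
    if not values:
        raise ValueError("values must not be empty")
    chunks = split_into_chunks(values, chunk_size)
    # Threads stand in for distributed workers (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(chunk_stats, chunks))
    total = sum(part_sum for part_sum, _ in partials)
    count = sum(part_count for _, part_count in partials)
    return total / count


data = list(range(1, 11))  # the integers 1..10
mean = chunked_mean(data)
```

The caller sees one ordinary function call, while the work is tiled and scheduled behind it. That is the property a distributed counterpart of a single-machine library aims to preserve.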

The following is a simple demo of SQLML.

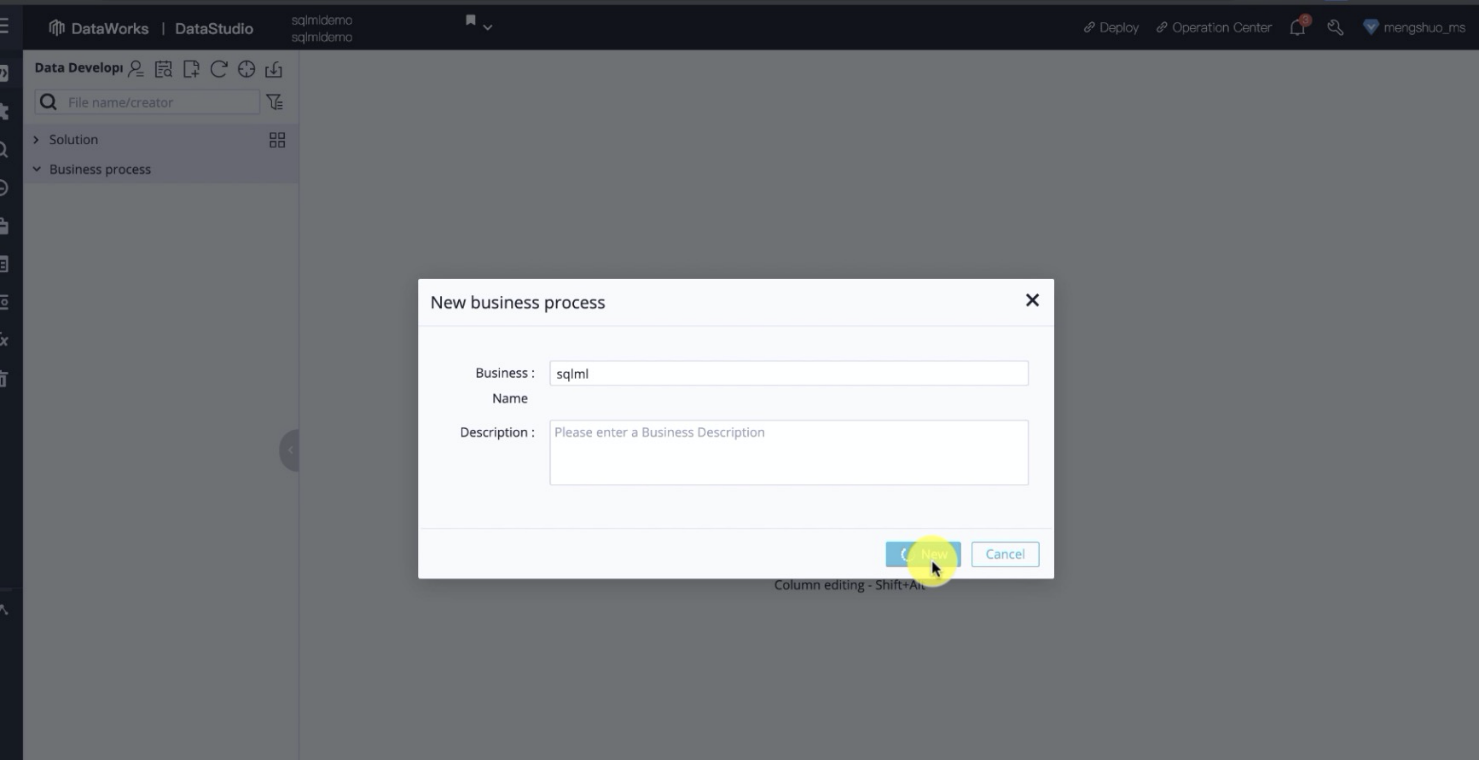

First, create a workflow in DataWorks. The workflow provides many components. Start by creating a temporary query, as shown in the following figure.

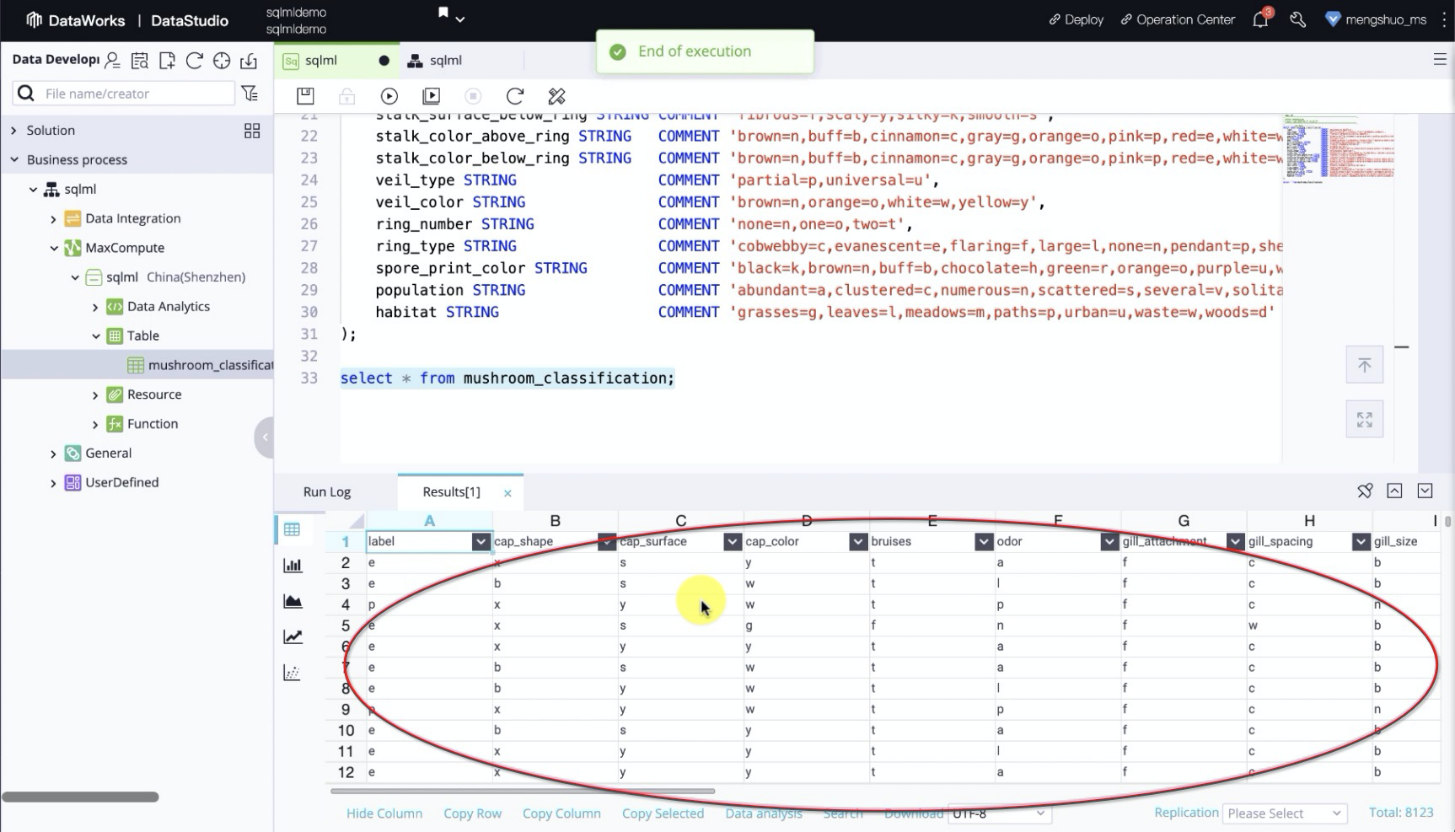

Then create a new table to store attributes of mushrooms. A classification can be made based on this attribute data.

After the table is created, import the data into it. Because the dataset is relatively small, the CSV file can be uploaded from the local machine, with its columns mapped to the fields in the table.

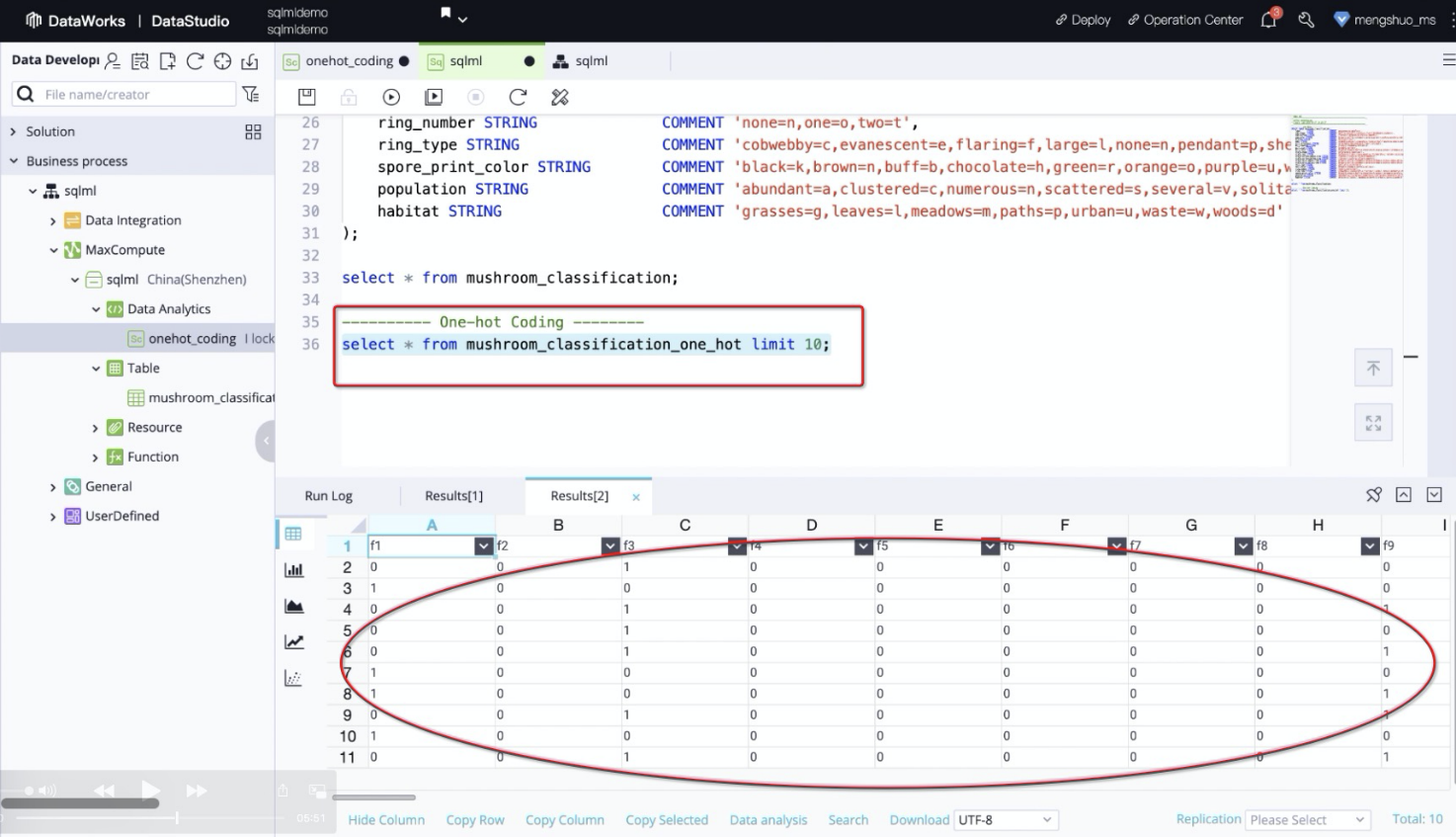
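The create-then-import step can be sketched outside DataWorks as follows. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for the data warehouse, and the table name, column names, and sample rows are hypothetical, since the article does not list the actual schema.

```python
import csv
import io
import sqlite3

# Hypothetical sample rows; the real dataset and its columns are not shown here.
CSV_TEXT = """label,cap_shape,odor
poisonous,convex,pungent
edible,convex,almond
edible,bell,anise
"""

# In-memory SQLite is a stand-in for the warehouse (an assumption of this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE mushrooms (label TEXT, cap_shape TEXT, odor TEXT)")

# Map each CSV column to the table field of the same name.
reader = csv.DictReader(io.StringIO(CSV_TEXT))
rows = [(r["label"], r["cap_shape"], r["odor"]) for r in reader]
conn.executemany("INSERT INTO mushrooms VALUES (?, ?, ?)", rows)
conn.commit()

row_count = conn.execute("SELECT COUNT(*) FROM mushrooms").fetchone()[0]
edible_count = conn.execute(
    "SELECT COUNT(*) FROM mushrooms WHERE label = 'edible'"
).fetchone()[0]
```

Counting the rows after the import is a quick check that every CSV line landed in the table.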

Next, one-hot encode the features, as shown in the following figure.

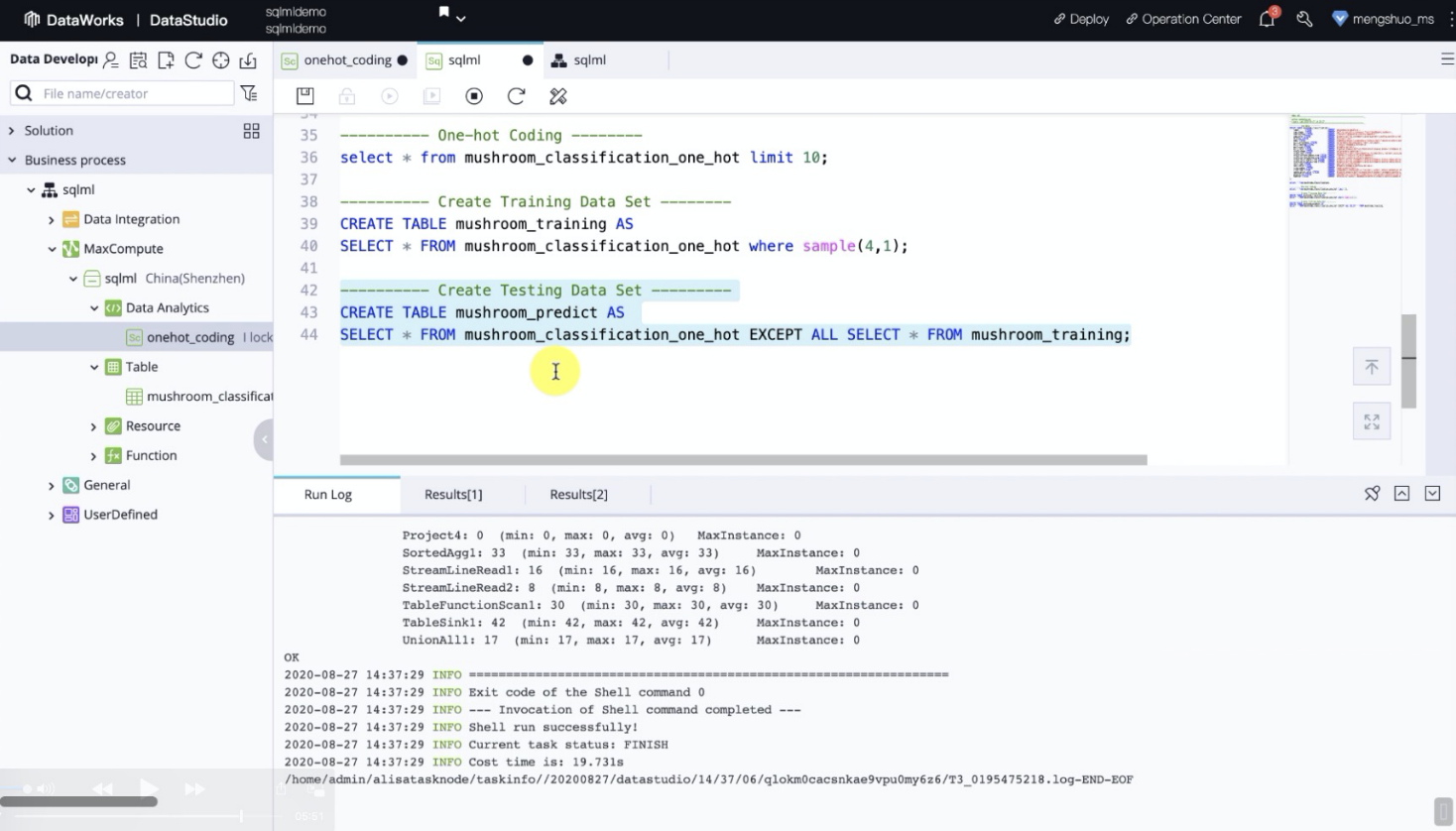

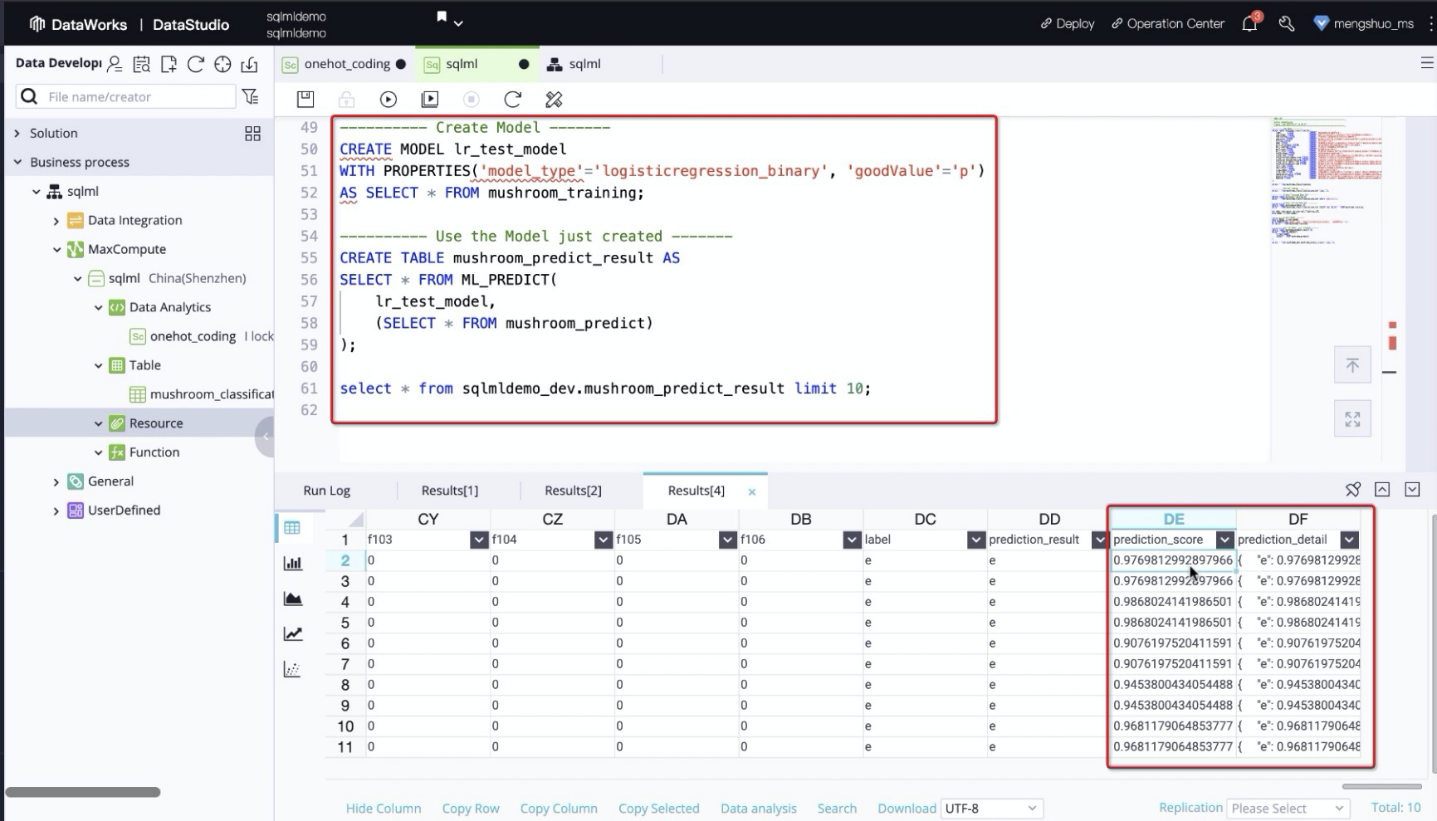
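For readers unfamiliar with one-hot encoding, the following minimal sketch shows what the step does to categorical attributes. It uses plain Python rather than the SQLML statement from the demo, and the column names and values are hypothetical.

```python
def fit_categories(rows, columns):
    """Collect the sorted distinct values seen in each categorical column."""
    return {col: sorted({row[col] for row in rows}) for col in columns}


def one_hot_encode(row, categories):
    """Turn one record into a flat 0/1 vector, one slot per (column, value)."""
    vector = []
    for col, values in categories.items():
        vector.extend(1 if row[col] == value else 0 for value in values)
    return vector


# Hypothetical mushroom attributes, used only for illustration.
rows = [
    {"cap_shape": "convex", "odor": "pungent"},
    {"cap_shape": "convex", "odor": "almond"},
    {"cap_shape": "bell", "odor": "anise"},
]
categories = fit_categories(rows, ["cap_shape", "odor"])
encoded = [one_hot_encode(row, categories) for row in rows]
```

Each categorical value gets its own 0/1 slot, so a model such as logistic regression can consume the attributes as numbers without implying any order among the categories.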

Then, split the data into a training set and a test set, and store each set in its own table. The model can now be created. Here, logistic regression, a common binary classification algorithm, is used as follows.

Running the model produces the training results.

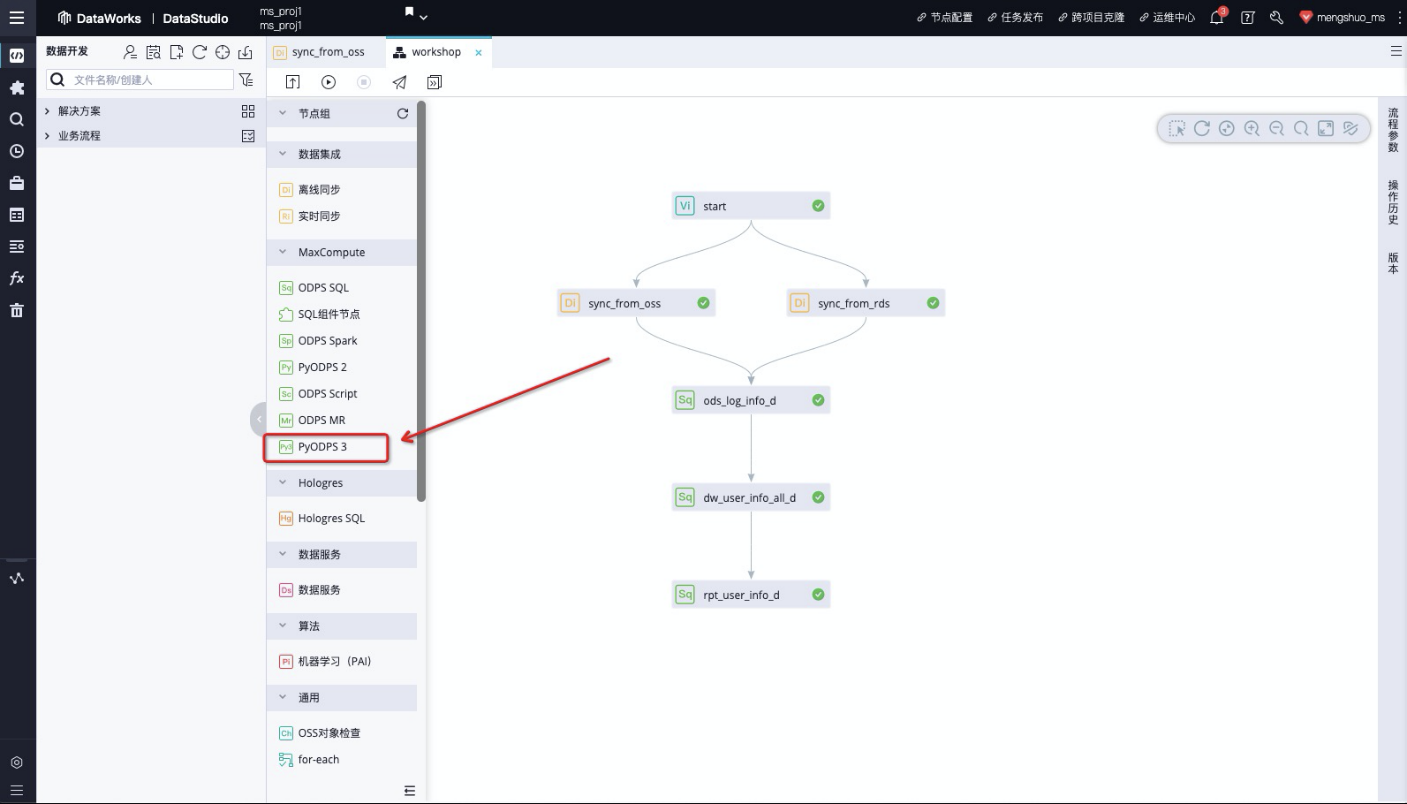
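The split-and-train step can also be sketched in plain Python to show what happens behind a logistic regression call. This is a minimal illustration, not the SQLML implementation: the helper names, the hyperparameters, and the tiny one-hot dataset are all assumptions made for the example.

```python
import math
import random


def train_test_split(samples, test_ratio=0.25, seed=0):
    """Shuffle a copy of the samples and split it into (train, test)."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(samples, lr=0.5, epochs=500):
    """Fit weights and bias with batch gradient descent on the log loss."""
    n_features = len(samples[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for features, label in samples:
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label
            for j, x in enumerate(features):
                grad_w[j] += error * x
            grad_b += error
        weights = [w - lr * g / len(samples) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(samples)
    return weights, bias


def predict(features, weights, bias):
    """Return class 1 when the predicted probability is at least 0.5."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if sigmoid(z) >= 0.5 else 0


# Hypothetical one-hot samples: [1, 0] is labeled 1 and [0, 1] is labeled 0.
samples = [([1, 0], 1), ([0, 1], 0)] * 4
train, test = train_test_split(samples)
weights, bias = train_logistic_regression(train)
accuracy = sum(predict(x, weights, bias) == y for x, y in test) / len(test)
```

Holding out the test set before training is what makes the accuracy figure meaningful, because the model is scored on samples it was not fitted to.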

The demo above completes a machine learning training process in SQLML with little effort. The process is similar to using UDFs in SQL: simple and efficient. Mars is also easy to use: simply drag and drop the PyODPS 3 component, as shown in the following figure.

Mars is currently available for trial, and SQLML will be available soon. You are welcome to try Mars.
