By Senze Zhang

With the official release of RocketMQ 5.1.0, tiered storage, a new independent module of RocketMQ, has reached a milestone as a Technical Preview. RocketMQ 5.1.0 allows users to offload messages from local disks to cheaper storage media, extending the message retention period at a lower cost. This article details the design and implementation of RocketMQ tiered storage.

RocketMQ tiered storage lets you offload data to other storage media without affecting the reading and writing of hot data. It is suitable for the following scenarios:

The biggest difference between RocketMQ's tiered storage and the implementations in Kafka and Pulsar is that RocketMQ uploads messages in quasi-real time instead of waiting for a CommitLog file to fill up before uploading. This choice is mainly based on the following considerations:

Tiered storage is designed to minimize the burden on users. Clients can switch between the hot and cold data read/write paths without any changes, and tiered storage is enabled simply by modifying the server configuration. The following two steps are required:

Optional: You can change the implementation of tieredMetadataServiceProvider to switch metadata storage to JSON-based file storage.

For more instructions and configuration items, see the tiered storage README[1] on GitHub.

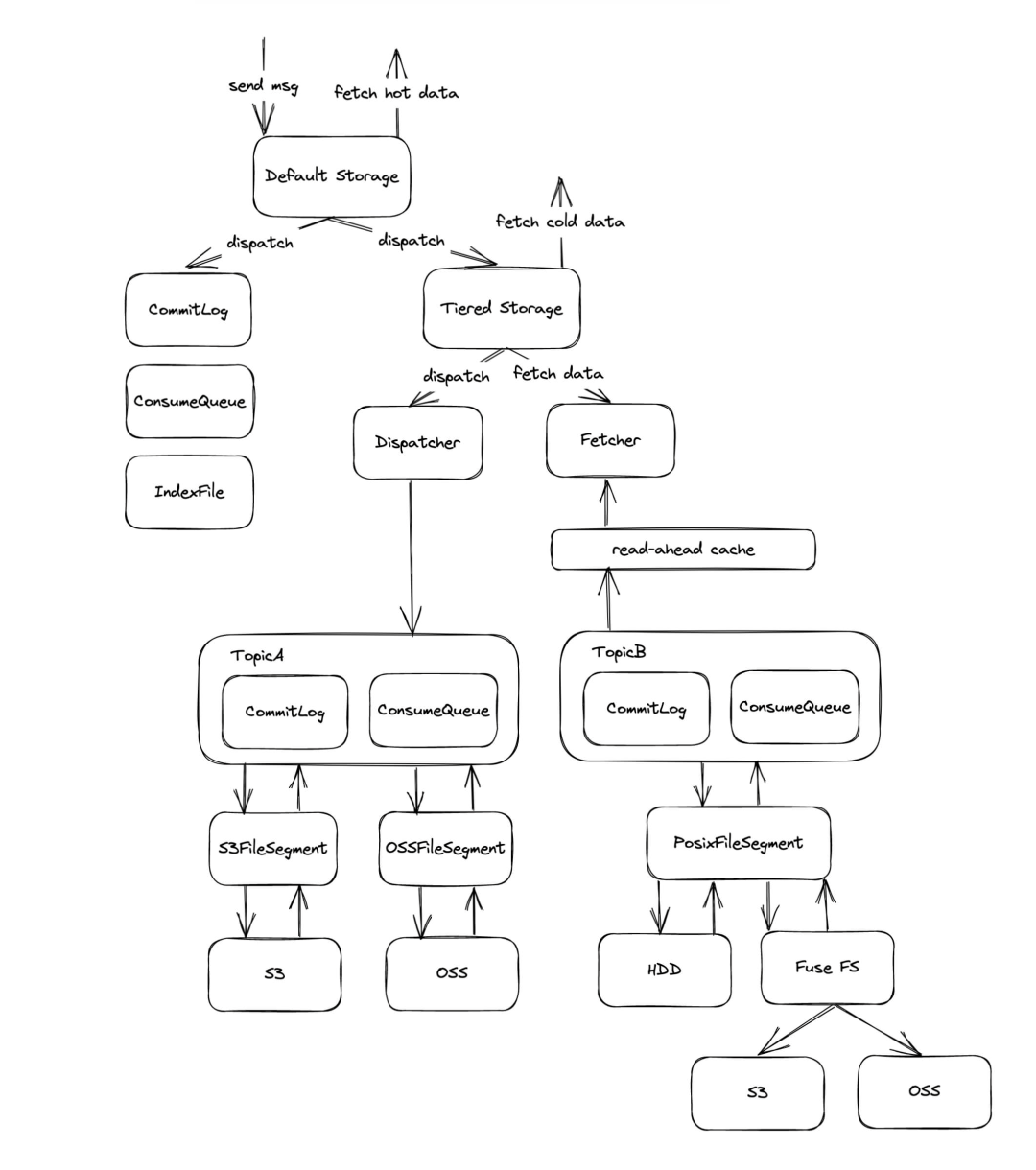

Architecture

Access Layer: TieredMessageStore/TieredDispatcher/TieredMessageFetcher

The access layer implements some of the read and write interfaces of MessageStore and adds asynchronous semantics to them. TieredDispatcher and TieredMessageFetcher implement the upload and download logic of tiered storage, respectively. Compared with the underlying interfaces, this layer adds further performance optimizations, such as using an independent thread pool so that slow I/O does not block access to hot data, and using a read-ahead cache.
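The thread-pool isolation mentioned above can be illustrated with a minimal sketch. The `TieredReadExecutor` class is hypothetical and not RocketMQ's actual code; it only assumes that tiered reads are wrapped in a `CompletableFuture` and submitted to a dedicated executor, so the caller gets a future back immediately instead of waiting on slow I/O.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Supplier;

// Hypothetical helper: runs slow tiered-storage reads on a dedicated thread pool so that
// threads serving hot (local) data are never parked on remote I/O.
final class TieredReadExecutor implements AutoCloseable {
    private final ExecutorService tieredPool;

    TieredReadExecutor(int threads) {
        this.tieredPool = Executors.newFixedThreadPool(threads, r -> {
            Thread t = new Thread(r, "tiered-read");
            t.setDaemon(true); // tiered reads alone should not keep the JVM alive
            return t;
        });
    }

    // Submits a (possibly slow) read and returns a future immediately.
    <T> CompletableFuture<T> readAsync(Supplier<T> slowRead) {
        return CompletableFuture.supplyAsync(slowRead, tieredPool);
    }

    @Override
    public void close() {
        tieredPool.shutdown();
    }
}
```

Passing an explicit executor to `supplyAsync` matters here: without it, the work would run on the shared common pool, where slow cold reads could starve unrelated tasks.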

Container Layer: TieredCommitLog/TieredConsumeQueue/TieredIndexFile/TieredFileQueue

The container layer implements a logical file abstraction similar to that of DefaultMessageStore. It divides files into CommitLog, ConsumeQueue, and IndexFile, and each logical file type holds references to the underlying physical files through FileQueue. The difference is that in tiered storage the CommitLog is organized per queue, instead of being shared by all queues as in local storage.
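To make the FileQueue idea concrete, here is a minimal sketch of how a per-queue file queue might map a logical offset to the physical file (segment) that holds it. The `SegmentQueue` and `Segment` classes are hypothetical, and fixed-capacity segments appended in offset order are an assumption; the real TieredFileQueue keeps richer metadata.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for one physical file (segment) in the backend file system.
final class Segment {
    final long baseOffset; // logical offset of the first byte stored in this segment
    final int capacity;    // maximum number of bytes this segment can hold
    int size;              // bytes appended so far

    Segment(long baseOffset, int capacity) {
        this.baseOffset = baseOffset;
        this.capacity = capacity;
    }
}

// Hypothetical per-queue file queue: keeps segments ordered by base offset.
final class SegmentQueue {
    private final TreeMap<Long, Segment> segments = new TreeMap<>();
    private final int segmentCapacity;
    private long nextOffset; // logical offset at which the next append lands

    SegmentQueue(long startOffset, int segmentCapacity) {
        this.nextOffset = startOffset;
        this.segmentCapacity = segmentCapacity;
    }

    // Appends `length` bytes, rolling to a new segment when the current one is full.
    // Returns the logical offset at which the data starts.
    long append(int length) {
        if (length > segmentCapacity) {
            throw new IllegalArgumentException("entry larger than a segment");
        }
        Map.Entry<Long, Segment> last = segments.lastEntry();
        if (last == null || last.getValue().size + length > segmentCapacity) {
            segments.put(nextOffset, new Segment(nextOffset, segmentCapacity));
        }
        Segment current = segments.lastEntry().getValue();
        long start = nextOffset;
        current.size += length;
        nextOffset += length;
        return start;
    }

    // Finds the segment containing the given logical offset, or null if none does.
    Segment find(long offset) {
        Map.Entry<Long, Segment> e = segments.floorEntry(offset);
        if (e == null || offset >= e.getKey() + e.getValue().size) {
            return null;
        }
        return e.getValue();
    }

    int segmentCount() {
        return segments.size();
    }
}
```

`TreeMap.floorEntry` gives the segment with the greatest base offset not above the requested offset, which is a logarithmic-time lookup without scanning every file.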

Driver Layer: TieredFileSegment

The driver layer maintains the mapping between logical files and physical files and connects TieredStoreProvider to the read/write interfaces of the underlying file systems (POSIX, S3, OSS, MinIO, and so on). Currently, a PosixFileSegment implementation is provided, which can write data to other disks or to OSS mounted through FUSE.
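As an illustration of what a POSIX-backed driver has to provide, the following sketch implements a method with the same shape as the `read0(long position, int length)` interface that appears later in this article, using Java NIO's `AsynchronousFileChannel`. The class name `PosixSegmentSketch` is hypothetical; this is not the actual PosixFileSegment code.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousFileChannel;
import java.nio.channels.CompletionHandler;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.concurrent.CompletableFuture;

// Hypothetical POSIX-backed segment: reads a byte range of a local (or FUSE-mounted) file.
final class PosixSegmentSketch implements AutoCloseable {
    private final AsynchronousFileChannel channel;

    PosixSegmentSketch(Path file) throws IOException {
        this.channel = AsynchronousFileChannel.open(file, StandardOpenOption.READ);
    }

    // Reads up to `length` bytes starting at `position`; fewer bytes are returned at end of file.
    CompletableFuture<ByteBuffer> read0(long position, int length) {
        CompletableFuture<ByteBuffer> future = new CompletableFuture<>();
        readFully(ByteBuffer.allocate(length), position, future);
        return future;
    }

    // A single asynchronous read may return fewer bytes than requested, so keep reading.
    private void readFully(ByteBuffer buffer, long position, CompletableFuture<ByteBuffer> future) {
        channel.read(buffer, position, null, new CompletionHandler<Integer, Void>() {
            @Override
            public void completed(Integer n, Void attachment) {
                if (n < 0 || !buffer.hasRemaining()) {
                    buffer.flip(); // expose only the bytes actually read
                    future.complete(buffer);
                } else {
                    readFully(buffer, position + n, future);
                }
            }

            @Override
            public void failed(Throwable exc, Void attachment) {
                future.completeExceptionally(exc);
            }
        });
    }

    @Override
    public void close() throws IOException {
        channel.close();
    }
}
```

Because the method returns a future rather than blocking, the same signature can be backed by an object-storage SDK with an asynchronous client.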

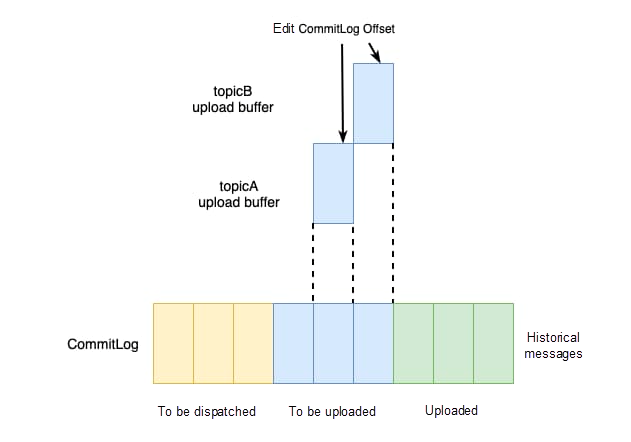

Message upload for tiered storage in RocketMQ is triggered by the dispatch mechanism. When tiered storage is initialized, TieredDispatcher is registered as a dispatcher of CommitLog. As a result, whenever a message is sent to the broker, the broker calls TieredDispatcher to dispatch it; TieredDispatcher writes the message to the upload buffer and returns success immediately. The dispatch process contains no blocking logic, so the construction of the local ConsumeQueue is not affected.
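The non-blocking dispatch step can be sketched as follows. `MessageRef`, `NonBlockingDispatcher`, and the in-memory per-queue buffer are hypothetical; RocketMQ's actual dispatcher interface and buffer types differ. The sketch assumes that only a reference (location and size in the local CommitLog) is buffered, so `dispatch` does no I/O and simply enqueues the reference, while a separate uploader drains the buffer later.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical reference to a message in the local CommitLog; the body is not copied.
final class MessageRef {
    final String topic;
    final int queueId;
    final long commitLogOffset; // position of the message in the local CommitLog
    final int size;

    MessageRef(String topic, int queueId, long commitLogOffset, int size) {
        this.topic = topic;
        this.queueId = queueId;
        this.commitLogOffset = commitLogOffset;
        this.size = size;
    }
}

// Hypothetical dispatcher on the broker's dispatch path: no I/O, no waiting on uploads.
final class NonBlockingDispatcher {
    private final Map<String, Queue<MessageRef>> uploadBuffers = new ConcurrentHashMap<>();

    // Records the reference in the per-queue upload buffer and returns immediately.
    void dispatch(MessageRef ref) {
        String key = ref.topic + "#" + ref.queueId;
        uploadBuffers.computeIfAbsent(key, k -> new ConcurrentLinkedQueue<>()).offer(ref);
    }

    // Called later by a background uploader: removes up to `max` buffered references in order.
    List<MessageRef> drain(String topic, int queueId, int max) {
        List<MessageRef> batch = new ArrayList<>();
        Queue<MessageRef> buffer = uploadBuffers.get(topic + "#" + queueId);
        MessageRef ref;
        while (buffer != null && batch.size() < max && (ref = buffer.poll()) != null) {
            batch.add(ref);
        }
        return batch;
    }
}
```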

TieredDispatcher

TieredDispatcher writes only a reference to the message into the upload buffer; the message body is not buffered in memory. Because tiered storage creates its CommitLog per queue, the commitLog offset field of each message needs to be regenerated.

Upload Buffer

When an upload from the buffer is triggered, the commitLog offset field of each message is read, and the regenerated commitLog offset is spliced into the original message in place of the old value.
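A minimal sketch of the offset regeneration and splicing follows. It assumes the standard RocketMQ stored-message layout, in which the 8-byte physical (commitLog) offset field starts at byte 28, after total size, magic code, body CRC, queue ID, flag, and queue offset. The `PerQueueCommitLog` class is hypothetical. Each queue keeps its own running offset, and each message is rebuilt by concatenating the bytes before the field, the new offset, and the bytes after the field.

```java
import java.nio.ByteBuffer;

// Hypothetical per-queue CommitLog that assigns its own commitLog offsets.
final class PerQueueCommitLog {
    // Assumed position of the physical offset field:
    // totalSize(4) + magic(4) + bodyCRC(4) + queueId(4) + flag(4) + queueOffset(8).
    static final int PHYSICAL_OFFSET_POSITION = 4 + 4 + 4 + 4 + 4 + 8;

    private long nextOffset; // offset the next message will occupy in this queue's CommitLog

    PerQueueCommitLog(long startOffset) {
        this.nextOffset = startOffset;
    }

    // Returns a copy of `original` whose commitLog offset field holds the regenerated offset.
    ByteBuffer rewrite(ByteBuffer original) {
        ByteBuffer src = original.duplicate(); // leave the caller's position and limit untouched
        int size = src.remaining();
        if (size < PHYSICAL_OFFSET_POSITION + 8) {
            throw new IllegalArgumentException("message too short");
        }
        ByteBuffer before = src.duplicate();
        before.limit(before.position() + PHYSICAL_OFFSET_POSITION);
        ByteBuffer after = src.duplicate();
        after.position(after.position() + PHYSICAL_OFFSET_POSITION + 8);

        ByteBuffer out = ByteBuffer.allocate(size);
        out.put(before).putLong(nextOffset).put(after); // prefix + new offset + suffix
        out.flip();
        nextOffset += size; // messages are laid out back to back in the per-queue CommitLog
        return out;
    }

    long nextOffset() {
        return nextOffset;
    }
}
```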

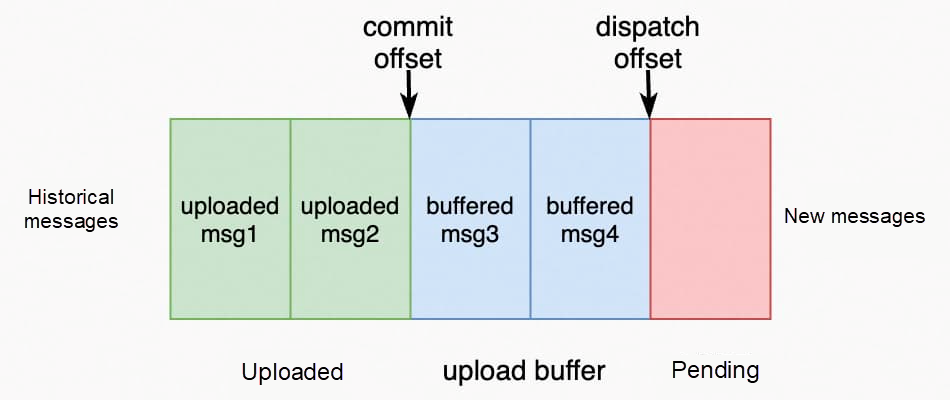

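Each queue has two key offsets that control the upload progress: the dispatch offset, up to which messages have been written to the upload buffer but not yet uploaded, and the commit offset, up to which messages have been uploaded.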

Upload Progress

Consider a consumer as an analogy: the dispatch offset corresponds to the offset up to which messages have been pulled, and the commit offset corresponds to the offset up to which consumption has been confirmed. The messages between the commit offset and the dispatch offset correspond to messages that have been pulled but not yet consumed.
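The relationship between the two offsets can be sketched with a hypothetical per-queue `UploadProgress` class, assuming the dispatch offset marks messages written to the upload buffer and the commit offset marks messages already uploaded. The real implementation persists these values as metadata rather than keeping them only in memory.

```java
// Hypothetical per-queue upload progress built on the dispatch and commit offsets.
final class UploadProgress {
    private long commitOffset;   // messages below this queue offset have been uploaded
    private long dispatchOffset; // messages below this queue offset are in the upload buffer

    UploadProgress(long startOffset) {
        this.commitOffset = startOffset;
        this.dispatchOffset = startOffset;
    }

    // The message at queue offset `offset` was written to the upload buffer.
    synchronized void onDispatch(long offset) {
        if (offset != dispatchOffset) {
            throw new IllegalStateException("expected offset " + dispatchOffset + " but got " + offset);
        }
        dispatchOffset++;
    }

    // The first `count` buffered messages were uploaded successfully.
    synchronized void onUploaded(int count) {
        if (commitOffset + count > dispatchOffset) {
            throw new IllegalStateException("cannot commit messages that were never dispatched");
        }
        commitOffset += count;
    }

    // Buffered but not yet uploaded (the "pulled but unconsumed" part of the analogy).
    synchronized long pending() {
        return dispatchOffset - commitOffset;
    }

    synchronized long commitOffset() {
        return commitOffset;
    }

    synchronized long dispatchOffset() {
        return dispatchOffset;
    }
}
```

The invariant `commitOffset <= dispatchOffset` always holds, which is what makes it safe to resume from the commit offset after a failure.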

TieredMessageStore implements the message-reading interfaces of MessageStore. It uses the queue offset in the request to decide whether to read messages from tiered storage. The following policies are available, depending on the configuration (tieredStorageLevel):

```java
/**
 * Asynchronous get message
 * @see #getMessage(String, String, int, long, int, MessageFilter) getMessage
 *
 * @param group Consumer group that launches this query.
 * @param topic Topic to query.
 * @param queueId Queue ID to query.
 * @param offset Logical offset to start from.
 * @param maxMsgNums Maximum count of messages to query.
 * @param messageFilter Message filter used to screen desired messages.
 * @return Matched messages.
 */
CompletableFuture<GetMessageResult> getMessageAsync(final String group, final String topic, final int queueId,
    final long offset, final int maxMsgNums, final MessageFilter messageFilter);
```

Messages that need to be read from tiered storage are processed by TieredMessageFetcher. TieredMessageFetcher first checks whether the parameters are valid and then initiates a pull request based on the queue offset. TieredConsumeQueue or TieredCommitLog converts the queue offset into the physical offset within the corresponding file, and the messages are then read from TieredFileSegment.
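The queue-offset translation can be sketched as follows, assuming that tiered ConsumeQueue entries use the same fixed 20-byte layout as local ConsumeQueue units (8-byte commitLog offset, 4-byte message size, 8-byte tag hash code) and that the first entry's queue offset is known. The `CqLookup` class is hypothetical. Because every entry has the same size, locating queue offset q is a single multiplication, and the entry then yields the byte range to read from the CommitLog.

```java
import java.nio.ByteBuffer;

// Hypothetical translation from a queue offset to a CommitLog byte range.
final class CqLookup {
    static final int CQ_UNIT_SIZE = 8 + 4 + 8; // commitLog offset + size + tag hash code

    private final ByteBuffer consumeQueue; // concatenated fixed-size entries
    private final long minQueueOffset;     // queue offset of the first entry in the buffer

    CqLookup(ByteBuffer consumeQueue, long minQueueOffset) {
        this.consumeQueue = consumeQueue;
        this.minQueueOffset = minQueueOffset;
    }

    // Byte position of the entry for `queueOffset` inside the ConsumeQueue data.
    long cqPosition(long queueOffset) {
        return (queueOffset - minQueueOffset) * CQ_UNIT_SIZE;
    }

    // Returns {commitLogOffset, size} for the message at `queueOffset`.
    long[] toCommitLogRange(long queueOffset) {
        long pos = cqPosition(queueOffset);
        if (queueOffset < minQueueOffset || pos + CQ_UNIT_SIZE > consumeQueue.limit()) {
            throw new IndexOutOfBoundsException("queue offset " + queueOffset + " not in this ConsumeQueue");
        }
        int p = (int) pos;
        return new long[] {consumeQueue.getLong(p), consumeQueue.getInt(p + 8)};
    }
}
```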

```java
// TieredMessageFetcher#getMessageAsync is similar to TieredMessageStore#getMessageAsync
public CompletableFuture<GetMessageResult> getMessageAsync(String group, String topic, int queueId,
    long queueOffset, int maxMsgNums, final MessageFilter messageFilter)
```

TieredFileSegment maintains the physical offset of each file stored in the file system and reads the required data through interfaces implemented for each storage medium.

```java
/**
 * Get data from backend file system
 *
 * @param position the index from where the file will be read
 * @param length the data size will be read
 * @return data to be read
 */
CompletableFuture<ByteBuffer> read0(long position, int length);
```

When TieredMessageFetcher reads messages, it also reads additional messages in advance for subsequent requests. These messages are temporarily stored in the read-ahead cache.

```java
protected final Cache<MessageCacheKey /* topic, queue id and queue offset */,
    SelectMappedBufferResultWrapper /* message data */> readAheadCache;
```

The design of the read-ahead cache draws on TCP Tahoe. As with a congestion window, the number of messages read ahead each time is controlled by an additive-increase/multiplicative-decrease mechanism:

When a large number of messages are read, the read-ahead cache supports splitting the read into shards and issuing concurrent requests to achieve higher bandwidth and lower latency.
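The additive-increase/multiplicative-decrease control and the sharded reads can be sketched together. The class name, the `min`/`max`/`step` parameters, halving as the decrease factor, and the choice of events (read-ahead messages that were used grow the window; messages that expired unused shrink it) are all assumptions for illustration, not RocketMQ's actual parameters.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical read-ahead window controlled by additive increase / multiplicative decrease.
final class ReadAheadWindow {
    private final int min;
    private final int max;
    private final int step;
    private int size; // number of messages to read ahead next time

    ReadAheadWindow(int min, int max, int step) {
        this.min = min;
        this.max = max;
        this.step = step;
        this.size = min;
    }

    // Read-ahead messages were used before expiring: grow linearly, up to `max`.
    void onCacheHit() {
        size = Math.min(max, size + step);
    }

    // Read-ahead messages expired unused: halve the window, but not below `min`.
    void onCacheExpired() {
        size = Math.max(min, size / 2);
    }

    int size() {
        return size;
    }

    // Splits a read of `count` messages starting at `offset` into shards of at most
    // `shardSize` messages; each {start, length} pair can be fetched concurrently.
    static List<long[]> shards(long offset, int count, int shardSize) {
        List<long[]> result = new ArrayList<>();
        for (int done = 0; done < count; done += shardSize) {
            result.add(new long[] {offset + done, Math.min(shardSize, count - done)});
        }
        return result;
    }
}
```

Growing slowly and shrinking quickly keeps memory bounded when consumers fall behind, while still ramping up for steady sequential readers.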

The read-ahead cache for a topic's messages is shared by all groups that consume the topic. The cache invalidation policy is as follows:

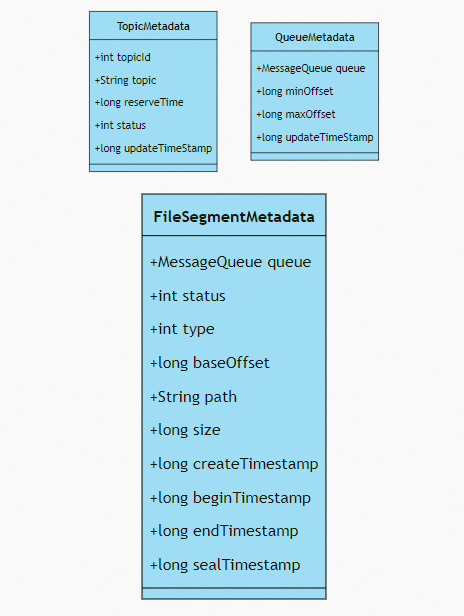

The upload progress is controlled by the commit offset and the dispatch offset. Tiered storage creates metadata for each topic, queue, and fileSegment and persists these two offsets. When the broker restarts, it recovers from the metadata and continues uploading messages from the commit offset; messages that were buffered but not yet uploaded are uploaded again, so they are not lost.
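Recovery after a restart can be sketched as follows. `UploadRecovery` is hypothetical, and its in-memory `Map` only stands in for the persisted topic/queue metadata; a real implementation writes the offsets to the configured metadata store. On startup, uploading resumes from the persisted commit offset, so every message that was only buffered before the restart is dispatched and uploaded again.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical recovery step: persisted commit offsets decide where uploading resumes.
final class UploadRecovery {
    // Stand-in for the persisted metadata: "topic#queueId" -> commit offset.
    private final Map<String, Long> persistedCommitOffsets = new HashMap<>();

    // Called after each successful upload so the commit offset survives a restart.
    void persistCommitOffset(String topic, int queueId, long commitOffset) {
        persistedCommitOffsets.put(topic + "#" + queueId, commitOffset);
    }

    // After a restart, uploading resumes from the persisted commit offset; with no
    // metadata for the queue, it starts from the oldest message still on local disk.
    long resumeOffset(String topic, int queueId, long localMinOffset) {
        Long committed = persistedCommitOffsets.get(topic + "#" + queueId);
        return committed != null ? committed : localMinOffset;
    }

    // Number of messages dispatched (and uploaded) again after recovery,
    // including any that were only buffered when the broker stopped.
    long messagesToReupload(String topic, int queueId, long localMinOffset, long localMaxOffset) {
        return Math.max(0, localMaxOffset - resumeOffset(topic, queueId, localMinOffset));
    }
}
```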

Cloud-native storage systems are expected to make the most of cloud storage, and OSS is one of the dividends of cloud computing. RocketMQ tiered storage aims to take advantage of the low cost of OSS to extend message retention and increase the value of data. It also aims to use the shared-storage nature of OSS to achieve both low cost and data reliability in a multi-replica architecture, and to evolve toward a serverless architecture in the future.

When tiered storage pulls messages, it does not check whether each message's tag matches the subscription; tag filtering is performed by the client instead. This incurs additional network overhead. We plan to add server-side tag filtering in the future.
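Server-side tag filtering, described above as future work, could look like the following sketch. It assumes that each ConsumeQueue entry carries a tag hash code, as local RocketMQ ConsumeQueue entries do (for an ordinary tag, its `String.hashCode()`), and uses the familiar `"TagA || TagB"` subscription syntax. The `CqEntry` and `TagFilter` classes are hypothetical. Non-matching entries are dropped before any CommitLog data is fetched, so their bodies never cross the network.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical ConsumeQueue entry: where the message is and the hash code of its tag.
final class CqEntry {
    final long commitLogOffset;
    final int size;
    final long tagsCode;

    CqEntry(long commitLogOffset, int size, long tagsCode) {
        this.commitLogOffset = commitLogOffset;
        this.size = size;
        this.tagsCode = tagsCode;
    }
}

// Hypothetical server-side filter applied before any CommitLog data is read.
final class TagFilter {
    private final Set<Long> subscribedCodes = new HashSet<>();
    private final boolean matchAll;

    // `subscription` uses the "TagA || TagB" expression syntax; "*" matches everything.
    TagFilter(String subscription) {
        this.matchAll = subscription.trim().equals("*");
        for (String tag : subscription.split("\\|\\|")) {
            subscribedCodes.add((long) tag.trim().hashCode());
        }
    }

    // Keeps only entries whose tag hash is subscribed. Hash collisions are possible,
    // so the client still needs to compare the actual tag strings.
    List<CqEntry> filter(List<CqEntry> entries) {
        if (matchAll) {
            return entries;
        }
        List<CqEntry> kept = new ArrayList<>();
        for (CqEntry e : entries) {
            if (subscribedCodes.contains(e.tagsCode)) {
                kept.add(e);
            }
        }
        return kept;
    }
}
```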

A read-ahead cache entry is invalidated only after all groups subscribed to the topic have accessed it. When groups consume at different paces, this condition is rarely met, so useless messages accumulate in the cache.
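The invalidation condition can be sketched with a hypothetical cache entry that records which consumer groups have read it, plus a time-to-live; the names are illustrative. The entry becomes removable only once every subscribed group has accessed it or its TTL has passed, which shows how a single slow group keeps entries alive.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical read-ahead cache entry shared by all groups consuming a topic.
final class SharedCacheEntry {
    private final Set<String> subscribedGroups;
    private final Set<String> accessedBy = new HashSet<>();
    private final long expireAtMillis;

    SharedCacheEntry(Set<String> subscribedGroups, long createdAtMillis, long ttlMillis) {
        this.subscribedGroups = new HashSet<>(subscribedGroups);
        this.expireAtMillis = createdAtMillis + ttlMillis;
    }

    void onAccess(String group) {
        accessedBy.add(group);
    }

    // Removable once every subscribed group has read it, or when the TTL has passed.
    boolean canInvalidate(long nowMillis) {
        return accessedBy.containsAll(subscribedGroups) || nowMillis >= expireAtMillis;
    }
}
```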

We need to calculate the consumption QPS of each group to estimate whether the group can use a cached message before the cache entry expires. If a cached message is not expected to be accessed before it expires, it should be evicted immediately. Similarly, for broadcast consumption, the expiration policy should be optimized so that a message expires only after all clients have read it.
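The proposed estimate might look like the following sketch, which is an assumption about how such a check could work rather than an existing RocketMQ feature. For each group, the time to reach the cached message is approximated as the backlog (the message's queue offset minus the group's current consume offset) divided by the group's consumption QPS. If no group is expected to reach the message within its remaining TTL, the entry can be evicted right away. `CacheUsefulness` is a hypothetical name.

```java
import java.util.Map;

// Hypothetical check for the proposed QPS-based early eviction.
final class CacheUsefulness {
    private CacheUsefulness() {}

    // groupOffsets: each group's current consume offset; groupQps: messages per second.
    // Returns true if at least one group is expected to reach `messageOffset`
    // within `remainingTtlMillis`.
    static boolean worthKeeping(long messageOffset, long remainingTtlMillis,
                                Map<String, Long> groupOffsets, Map<String, Double> groupQps) {
        for (Map.Entry<String, Long> e : groupOffsets.entrySet()) {
            long backlog = messageOffset - e.getValue();
            if (backlog < 0) {
                continue; // this group has already consumed past the message
            }
            double qps = groupQps.getOrDefault(e.getKey(), 0.0);
            if (qps <= 0) {
                continue; // an idle group is not expected to reach the message
            }
            double etaMillis = backlog / qps * 1000.0;
            if (etaMillis <= remainingTtlMillis) {
                return true;
            }
        }
        return false;
    }
}
```

For example, a group 500 messages behind and consuming 100 messages per second needs about 5 seconds, so the entry is worth keeping only if more than that remains of its TTL.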

Currently, we mainly face the following three problems:

[1] Tiered storage README: https://github.com/apache/rocketmq/blob/develop/tieredstore/README.md
