By Zibai

Large language models (LLMs) are massive deep learning models pre-trained on extensive datasets. The underlying Transformer consists of a set of neural networks with self-attention encoders and decoders. These components extract meaning from a sequence of text and capture the relationships between the words and phrases in it.

The key bottleneck of LLM inference is the limited amount of GPU memory. Therefore, most acceleration frameworks focus on reducing peak GPU memory usage and improving GPU utilization.

TensorRT-LLM[1] is an LLM inference optimization framework launched by NVIDIA. It provides a set of Python APIs for defining LLMs and uses the latest optimization techniques to convert LLMs into TensorRT engines. The optimized TensorRT engines are then used directly for inference.

TensorRT-LLM primarily uses the following four optimization techniques to improve LLM inference efficiency: quantization, in-flight batching, optimized attention mechanisms, and graph optimization at compile time.

Model quantization reduces GPU memory usage during inference by lowering the numerical precision of the model's weights and/or activations.

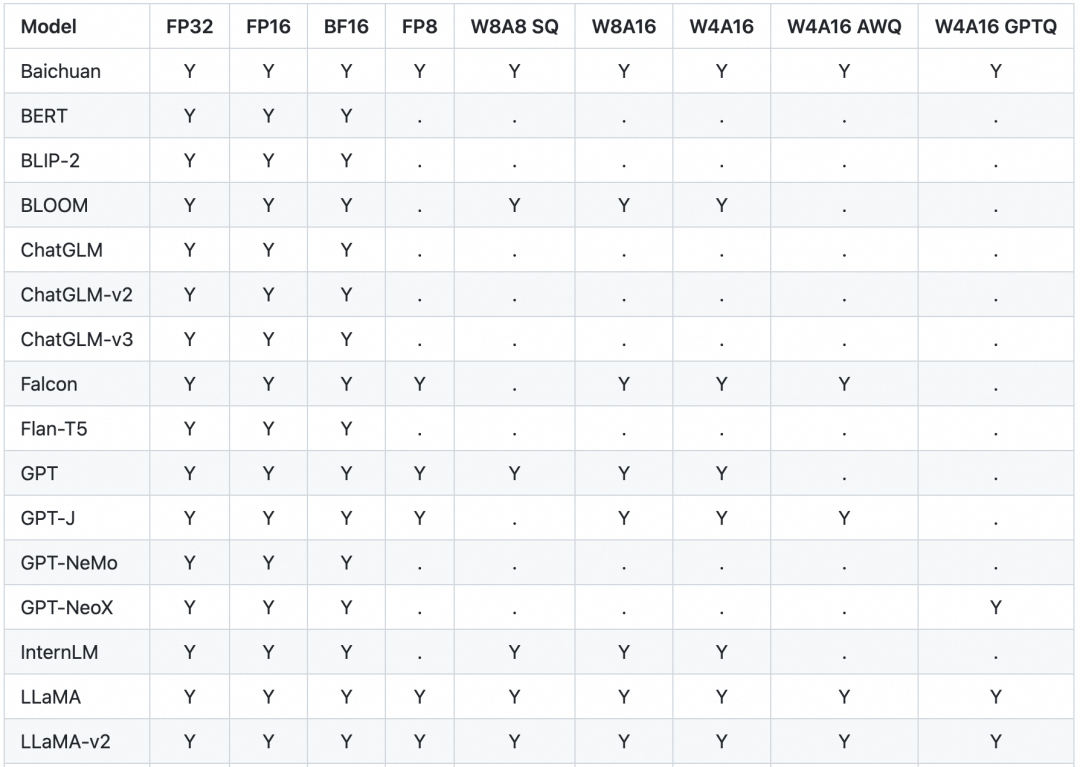
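As a minimal sketch of the idea (not TensorRT-LLM's quantization code), symmetric per-tensor INT8 quantization maps each value to an 8-bit integer using a single scale, `scale = max|x| / 127`. The function names and sample weights below are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(x / scale)."""
    max_abs = max(abs(v) for v in values)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate floating-point values from INT8 codes."""
    return [x * scale for x in q]


weights = [0.12, -0.53, 0.98, -1.27, 0.004]  # hypothetical FP16 weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("INT8 codes:", q)
print("scale:", scale, "max abs error:", max_error)
```

Each weight now takes 1 byte instead of the 2 bytes it needs in FP16, which halves weight memory; the price is a rounding error of at most half the scale per value.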

TensorRT-LLM supports multiple quantization precisions for various models. The supported precisions for some mainstream models are listed below.

W8A8 SQ uses the SmoothQuant technique[2], which quantizes both model weights and activations to INT8 with negligible loss of inference accuracy, significantly reducing GPU memory consumption.
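The core idea of SmoothQuant can be sketched in a few lines: for each input channel j, a smoothing factor s_j = max|X_j|^α / max|W_j|^(1-α) divides the activations and multiplies the matching weight row, so the product X·W is unchanged while activation outliers shrink. This is an illustrative pure-Python sketch assuming α = 0.5, with hypothetical helper names and toy matrices; it is not TensorRT-LLM's implementation.

```python
def matmul(a, b):
    """Plain matrix product of two lists of lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def smooth(x, w, alpha=0.5):
    """Migrate quantization difficulty from activations x (tokens x in) to weights w (in x out)."""
    in_features = len(w)
    scales = []
    for j in range(in_features):
        act_max = max(max(abs(row[j]) for row in x), 1e-8)  # avoid a zero scale
        w_max = max(max(abs(v) for v in w[j]), 1e-8)         # avoid dividing by zero
        scales.append(act_max ** alpha / w_max ** (1 - alpha))
    # X' = X diag(s)^-1 and W' = diag(s) W, so X' W' == X W.
    x_s = [[row[j] / scales[j] for j in range(in_features)] for row in x]
    w_s = [[v * scales[j] for v in w[j]] for j in range(in_features)]
    return x_s, w_s, scales


x = [[1.0, 40.0], [-2.0, 35.0]]  # channel 1 carries an activation outlier
w = [[0.5, -0.2], [0.1, 0.3]]
x_s, w_s, scales = smooth(x, w)
print("max |X| before/after:", max(abs(v) for r in x for v in r), max(abs(v) for r in x_s for v in r))
print("X W:  ", matmul(x, w))
print("X' W':", matmul(x_s, w_s))
```

After smoothing, the activation range drops from 40 to about 3.5 while the layer output stays the same, so both tensors become easier to quantize to INT8.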

W4A16 and W8A16 mean that the model weights are quantized to INT4 or INT8, respectively, while the activations remain in FP16.

W4A16 AWQ and W4A16 GPTQ implement the quantization methods described in AWQ[3] and GPTQ[4], respectively. In both, the model weights are quantized to INT4 and the activations remain in FP16.
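A back-of-the-envelope calculation shows why weight-only schemes such as W8A16 and W4A16 matter. The sketch below assumes a nominal 7-billion-parameter model and counts weight storage only; quantization scales, the KV cache, and the FP16 activations are ignored.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {
    "FP16": 2.0,
    "INT8 (W8A16)": 1.0,
    "INT4 (W4A16)": 0.5,
}


def weight_memory_gib(num_params, bytes_per_weight):
    """Weight storage in GiB (1 GiB = 1024**3 bytes)."""
    return num_params * bytes_per_weight / 1024**3


NUM_PARAMS = 7_000_000_000  # assumption: a nominal 7B model

for name, nbytes in BYTES_PER_WEIGHT.items():
    print(f"{name:>13}: {weight_memory_gib(NUM_PARAMS, nbytes):5.2f} GiB")
```

This prints roughly 13.04 GiB for FP16, 6.52 GiB for INT8, and 3.26 GiB for INT4 weights.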

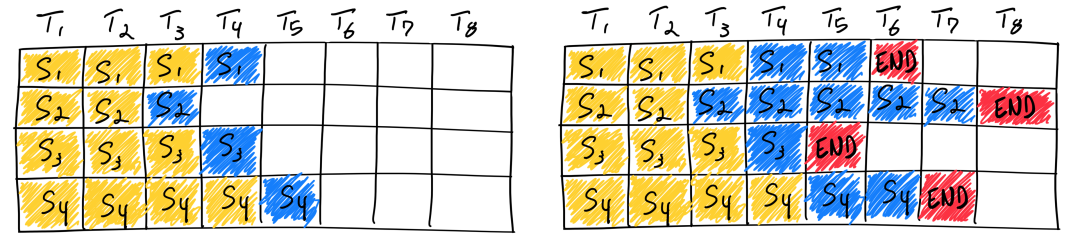

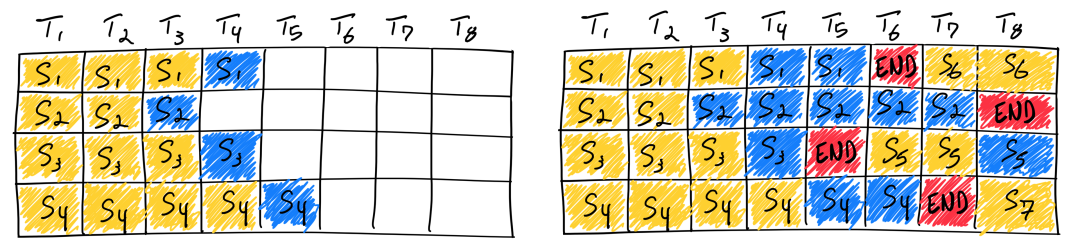

Traditional batching is static: a new batch can start only after every sequence in the current batch has finished inference. The following figure shows an inference process that uses static batching with a batch size of 4 and a maximum output length of 8 tokens. Sequence S3 finishes at T5, but its slot stays idle until S2 finishes at T8, which wastes a significant amount of resources.
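The cost of static batching can be quantified by counting slot-steps: every slot in the batch stays reserved until the longest sequence finishes. In this sketch, the lengths of S2 (8 tokens) and S3 (5 tokens) follow the text above, while the output lengths of S1 and S4 are hypothetical.

```python
def static_batching(output_lens):
    """Return (steps the batch occupies, idle slot-steps) under static batching."""
    batch_steps = max(output_lens)             # the batch runs until its longest sequence ends
    reserved = batch_steps * len(output_lens)  # slot-steps held by the batch
    useful = sum(output_lens)                  # slot-steps that actually produce a token
    return batch_steps, reserved - useful


lens = {"S1": 7, "S2": 8, "S3": 5, "S4": 6}  # S1 and S4 are hypothetical
steps, idle = static_batching(list(lens.values()))
print(f"batch occupies {steps} steps, {idle} slot-steps are wasted")
```

With these lengths, 6 of the 32 reserved slot-steps are idle, and S3 alone accounts for 3 of them (T6 to T8).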

In-flight batching, also known as continuous batching or iteration-level batching, improves inference throughput and reduces inference latency. The continuous batching process is as follows: as soon as S3 finishes, a new sequence S5 is inserted into the batch, which improves resource utilization. For more information, see Orca: A Distributed Serving System for Transformer-Based Generative Models[5].

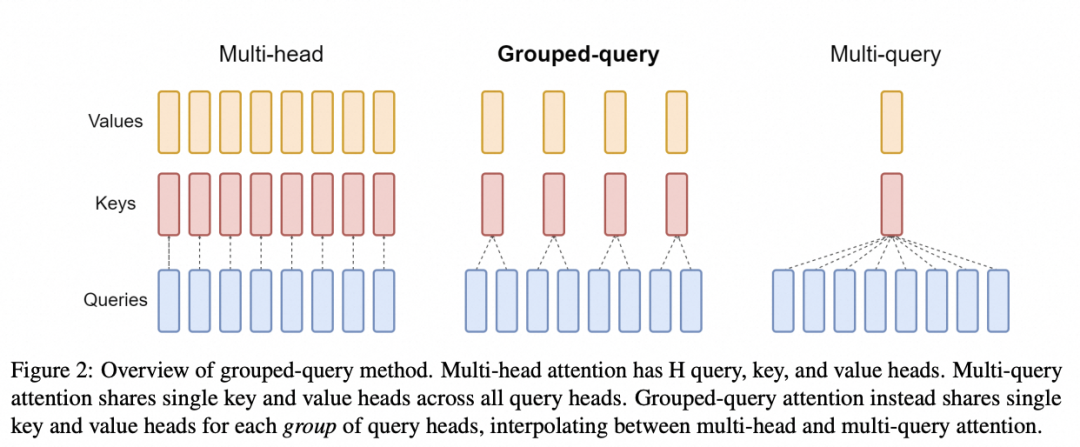
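The scheduling idea behind in-flight batching can be simulated with a small loop: before every decoding iteration, free slots are refilled from the waiting queue. This is a simplified sketch that ignores prefill cost and memory limits, and the output lengths (including that of the new sequence S5) are hypothetical.

```python
from collections import deque


def continuous_batching(output_lens, max_batch_size):
    """Simulate iteration-level scheduling; return (total steps, finish step per request).

    Assumes every request generates at least one token.
    """
    waiting = deque(enumerate(output_lens))
    active = {}       # request index -> tokens still to generate
    finished_at = {}
    step = 0
    while waiting or active:
        # Fill any free slots before the next iteration instead of waiting for the whole batch.
        while waiting and len(active) < max_batch_size:
            rid, remaining = waiting.popleft()
            active[rid] = remaining
        step += 1
        # One decoding iteration: every active sequence emits one token.
        for rid in list(active):
            active[rid] -= 1
            if active[rid] == 0:
                del active[rid]
                finished_at[rid] = step
    return step, finished_at


# S1..S5; S5 waits in the queue until a slot frees up.
total_steps, finished_at = continuous_batching([7, 8, 5, 6, 3], max_batch_size=4)
print(total_steps, finished_at)
```

Here S5 takes over S3's slot at step 6 and finishes at step 8, so all five sequences complete in 8 steps. Under static batching with the same lengths, S5 could start only after the first batch ends at step 8 and would finish at step 11.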

The attention mechanism extracts the most important information from a sequence and plays a vital role in tasks such as emotion recognition, translation, and question answering. In order of their evolution, attention mechanisms include MHA (Multi-head Attention), MQA (Multi-query Attention)[6], and GQA (Grouped-query Attention)[7]. Both MQA and GQA are variants of MHA.
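For reference, a single attention head computes softmax(QKᵀ/√d)·V: each query scores every key, and the normalized scores weight the values. A minimal pure-Python sketch follows (function names are illustrative); MHA, MQA, and GQA differ only in how many separate K/V heads feed this computation.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for one head; q, k, v are lists of vectors."""
    d = len(q[0])
    out = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        weights = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k])
        # Weighted sum of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
print(attention(q, k, v))  # the first value gets more weight because its key matches the query
```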

MHA is the standard multi-head mechanism: each query head has its own key/value (KV) head, so the KV cache requires a large amount of GPU memory. In MQA, all query heads share a single KV head, which saves memory but can lose some detail during inference. GQA divides the query heads into groups, and each group shares one KV head, which balances the memory cost of MHA against the quality loss of MQA.
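The memory trade-off among the three variants comes down to the number of KV heads. The sketch below maps each query head to the KV head it reads and estimates the FP16 KV-cache size. The shape (32 layers, 32 query heads, head dimension 4096 / 32 = 128) matches the Baichuan2-7B benchmark configuration used later in this article; the 8-KV-head GQA setting and the 4,096-token context are hypothetical.

```python
def kv_head_for_query_head(q_head, num_q_heads, num_kv_heads):
    """MHA: num_kv_heads == num_q_heads; MQA: num_kv_heads == 1; GQA: anything in between."""
    assert num_q_heads % num_kv_heads == 0, "query heads must split evenly into groups"
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size, bytes_per_elem=2):
    """Size of the K and V caches (factor 2) across all layers, FP16 by default."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


LAYERS, Q_HEADS, HEAD_DIM, SEQ_LEN = 32, 32, 128, 4096
for name, kv_heads in [("MHA", 32), ("GQA", 8), ("MQA", 1)]:
    size = kv_cache_bytes(LAYERS, kv_heads, HEAD_DIM, SEQ_LEN, batch_size=1)
    print(f"{name}: {kv_heads:2d} KV heads, query head 5 -> KV head "
          f"{kv_head_for_query_head(5, Q_HEADS, kv_heads)}, KV cache {size / 1024**2:.0f} MiB")
```

For a single 4,096-token sequence this gives 2048 MiB for MHA, 512 MiB for GQA, and 64 MiB for MQA.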

TensorRT-LLM supports MHA, MQA, and GQA. For the implementation, see `tensorrt_llm.functional.gpt_attention`.

When compiling LLMs into TensorRT engines, TensorRT-LLM also rewrites and optimizes the network graph to improve execution efficiency.
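One common kind of compile-time graph optimization is operator fusion: a chain of small operations is replaced by a single kernel, which reduces kernel launches and round trips to GPU memory. The toy pass below illustrates pattern-based rewriting on a linear list of op names. The op names are hypothetical, this is not TensorRT-LLM's graph-rewriting API, and real compilers operate on a full dataflow graph rather than a list.

```python
def fuse_pattern(ops, pattern, fused_name):
    """Replace each non-overlapping occurrence of `pattern` in a linear op list with one fused op."""
    if not pattern:
        return list(ops)
    result, i, n = [], 0, len(pattern)
    while i < len(ops):
        if ops[i:i + n] == pattern:
            result.append(fused_name)  # one kernel instead of len(pattern) kernels
            i += n
        else:
            result.append(ops[i])
            i += 1
    return result


block = ["layernorm", "matmul", "bias_add", "gelu", "matmul", "bias_add", "residual_add"]
fused = fuse_pattern(block, ["matmul", "bias_add", "gelu"], "fused_matmul_bias_gelu")
print(len(block), "ops ->", len(fused), "ops:", fused)
```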

The cloud-native AI suite is a solution provided by Alibaba Cloud Container Service for Kubernetes (ACK) that integrates cloud-native AI technologies and products. It helps enterprises efficiently implement cloud-native AI systems.

This article describes how to use TensorRT-LLM to optimize LLM inference with the ACK cloud-native AI suite of Alibaba Cloud.

1) Build the image required for the notebook.

```dockerfile
FROM docker.io/nvidia/cuda:12.2.2-cudnn8-runtime-ubuntu22.04

ENV DEBIAN_FRONTEND=noninteractive

RUN apt-get update && apt-get upgrade -y && \
    apt-get install -y --no-install-recommends \
    libgl1 libglib2.0-0 wget git curl vim \
    python3.10 python3-pip python3-dev build-essential \
    openmpi-bin libopenmpi-dev jupyter-notebook jupyter

# Pin the package to the same version as the cloned examples (v0.7.1).
RUN pip3 install tensorrt_llm==0.7.1 --extra-index-url https://pypi.nvidia.com

# Quote the version specifier so the shell does not treat ">" as an output redirection.
RUN pip3 install --upgrade jinja2==3.0.3 "pynvml>=11.5.0"

RUN rm -rf /var/cache/apt/ && apt-get clean && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/* && \
    rm -rf /root/.cache/pip/ && rm -rf /*.whl

WORKDIR /root

RUN git clone https://github.com/NVIDIA/TensorRT-LLM.git --branch v0.7.1

ENTRYPOINT ["sh","-c","jupyter notebook --allow-root --notebook-dir=/root --port=8888 --ip=0.0.0.0 --ServerApp.token=''"]
```

2) Download the model. In this article, Baichuan2-7B-Chat is used as an example.

a) Confirm that tensorrt_llm is installed.

```
! python3 -c "import tensorrt_llm; print(tensorrt_llm.__version__)"
# 0.7.1
```

b) Install the Baichuan dependencies.

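```
# Use the %cd magic: "! cd" runs in a subshell and does not change the notebook's working directory.
%cd /root/TensorRT-LLM/examples/baichuan
! pip3 install -r requirements.txt
```

c) Download the Baichuan2-7B-Chat model.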

```
# The image is based on Ubuntu, so install git-lfs with apt-get rather than yum.
! apt-get update && apt-get install -y git-lfs
! git lfs install
! GIT_LFS_SKIP_SMUDGE=1 git clone https://www.modelscope.cn/baichuan-inc/Baichuan2-7B-Chat.git
%cd Baichuan2-7B-Chat/
! git lfs pull
```

d) Compile the model into TensorRT engines and quantize the model weights to INT8. Model conversion takes about 5 minutes.

```
%cd /root/TensorRT-LLM/examples/baichuan
# Build the Baichuan V2 7B model using a single GPU and apply INT8 weight-only quantization.
! python3 build.py --model_version v2_7b \
    --model_dir ./Baichuan2-7B-Chat \
    --dtype float16 \
    --use_gemm_plugin float16 \
    --use_gpt_attention_plugin float16 \
    --use_weight_only \
    --output_dir ./tmp/baichuan_v2_7b/trt_engines/int8_weight_only/1-gpu/
```

e) Run inference with the TensorRT engines that you built.

```
# Inference with the INT8 weight-only quantized engine
! python3 ../run.py --input_text "What is the second-highest mountain in the world?" \
    --max_output_len=50 \
    --tokenizer_dir=./Baichuan2-7B-Chat \
    --engine_dir=./tmp/baichuan_v2_7b/trt_engines/int8_weight_only/1-gpu/
```

Expected output:

```
Input [Text 0]: "What is the second-highest mountain in the world?"
Output [Text 0 Beam 0]: "The second-highest mountain in the world is Chogori Peak (K2) of the Karakoram Mountains, with an altitude of 8,611 meters. "
```

1) Use the built-in benchmark of TensorRT-LLM.

Add the `baichuan2_7b_chat` configuration to the `_allowed_configs` dict. For the code, see Reference [11].

Note: The benchmark in v0.7.1 does not support the Baichuan2 model, so you need to modify the `allowed_configs.py` configuration.

```
%cd /root/TensorRT-LLM/benchmarks/python
! vim allowed_configs.py
```

```python
# Add this entry to the _allowed_configs dict in allowed_configs.py.
"baichuan2_7b_chat":
    ModelConfig(name="baichuan2_7b_chat",
                family="baichuan_7b",
                benchmark_type="gpt",
                build_config=BuildConfig(
                    num_layers=32,
                    num_heads=32,
                    hidden_size=4096,
                    vocab_size=125696,
                    hidden_act='silu',
                    n_positions=4096,
                    inter_size=11008,
                    max_batch_size=128,
                    max_input_len=512,
                    max_output_len=200,
                    builder_opt=None,
                )),
```

Run the benchmark:

```
! python3 benchmark.py \
    -m baichuan2_7b_chat \
    --mode plugin \
    --engine_dir /root/TensorRT-LLM/examples/baichuan/tmp/baichuan_v2_7b/trt_engines/int8_weight_only/1-gpu \
    --batch_size 1 \
    --input_output_len "32,50;128,50"
# batch_size refers to the concurrency.
# input_output_len specifies the input and output lengths; separate multiple test cases with semicolons.
```

Expected outputs:

```
[BENCHMARK] model_name baichuan2_7b_chat world_size 1 num_heads 32 num_kv_heads 32 num_layers 32 hidden_size 4096 vocab_size 125696 precision float16 batch_size 1 input_length 32 output_length 50 gpu_peak_mem(gb) 8.682 build_time(s) 0 tokens_per_sec 60.95 percentile95(ms) 821.977 percentile99(ms) 822.093 latency(ms) 820.348 compute_cap sm86 generation_time(ms) 798.45 total_generated_tokens 49.0 generation_tokens_per_second 61.369
[BENCHMARK] model_name baichuan2_7b_chat world_size 1 num_heads 32 num_kv_heads 32 num_layers 32 hidden_size 4096 vocab_size 125696 precision float16 batch_size 1 input_length 128 output_length 50 gpu_peak_mem(gb) 8.721 build_time(s) 0 tokens_per_sec 59.53 percentile95(ms) 841.708 percentile99(ms) 842.755 latency(ms) 839.852 compute_cap sm86 generation_time(ms) 806.571 total_generated_tokens 49.0 generation_tokens_per_second 60.751
```

2) Compare the performance of the INT8 quantization model with that of the original model.

The code for running the original model:

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer
from transformers.generation.utils import GenerationConfig

# Model path and prompt used throughout this article.
model_path = "/root/TensorRT-LLM/examples/baichuan/Baichuan2-7B-Chat"
prompt = "What is the second-highest mountain in the world?"


def normal_inference():
    tokenizer = AutoTokenizer.from_pretrained(model_path, use_fast=False, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_path, device_map="auto", torch_dtype=torch.bfloat16, trust_remote_code=True)
    model.generation_config = GenerationConfig.from_pretrained(model_path)
    # chat() is defined by Baichuan2's remote code, loaded via trust_remote_code=True.
    messages = [{"role": "user", "content": prompt}]
    response = model.chat(tokenizer, messages)
    print(response)
```

The code for running the INT8 quantization model:

```python
import subprocess


def tensorrt_llm_inference():
    # Run the TensorRT-LLM example runner against the INT8 weight-only engine.
    # `prompt` is the same test prompt used for the original model.
    # Passing an argument list (no shell) avoids quoting problems in the prompt.
    cmd = [
        "python3", "/root/TensorRT-LLM/examples/run.py",
        "--input_text", prompt,
        "--max_output_len=50",
        "--tokenizer_dir=/root/TensorRT-LLM/examples/baichuan/Baichuan2-7B-Chat",
        "--engine_dir=/root/TensorRT-LLM/examples/baichuan/tmp/baichuan_v2_7b/trt_engines/int8_weight_only/1-gpu/",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        print(f"tensorrt_llm_inference() error: {result.stderr}")
        return
    print(result.stdout)
```
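To reproduce a latency comparison of this kind on your own instance, a minimal timing harness such as the following could be used. It is a sketch with hypothetical helper names, not the method behind the figures reported below. Note that, as written, both inference functions load the model on every call (the TensorRT-LLM one in a fresh process), so the measured time includes model loading.

```python
import statistics
import time


def measure_latency_ms(fn, runs=3, warmup=1):
    """Median wall-clock latency of fn() in milliseconds, after warm-up calls."""
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


def percent_reduction(baseline, optimized):
    """Relative reduction of `optimized` versus `baseline`, in percent."""
    return (baseline - optimized) / baseline * 100


# Usage in the notebook, with the two inference functions defined earlier:
# base_ms = measure_latency_ms(normal_inference)
# trt_ms = measure_latency_ms(tensorrt_llm_inference)
# print(f"latency reduced by {percent_reduction(base_ms, trt_ms):.1f}%")
```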

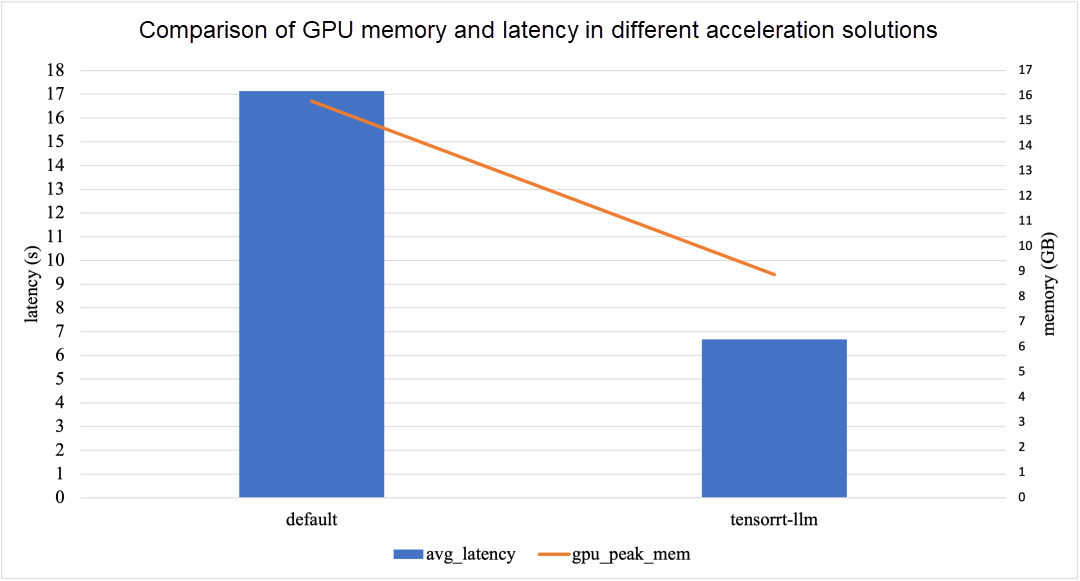

Compared with the default Baichuan2-7B-Chat model, the INT8 quantization model used in the TensorRT-LLM acceleration solution reduces peak memory usage by 43.8% and latency by 61.1%.

[1] Reference

[2] SmoothQuant technique

[3] AWQ

[4] GPTQ

[5] Orca: A Distributed Serving System for Transformer-Based Generative Models

[6] MQA (Multi-query Attention)

[7] GQA (Grouped-query Attention)

[8] Install the cloud-native AI suite

[9] ACK console

[10] ecs.gn7i-c16g1.4xlarge

[11] TensorRT-LLM

[12] Reference
