By Yuanyi

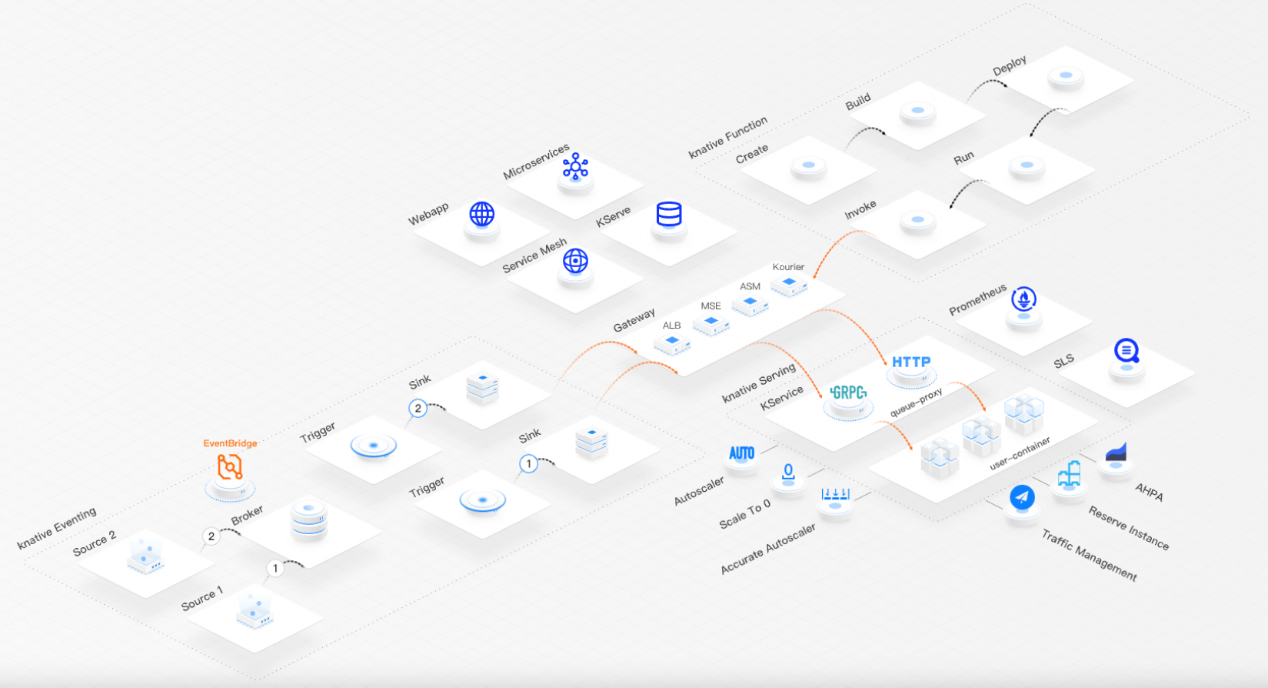

Knative is an open-source serverless application orchestration framework built on Kubernetes. Its goal is to establish cloud-native, cross-platform standards for orchestrating serverless applications. Its main features include request-based autoscaling, scale to zero, multi-version management, traffic-based canary release, function deployment, and event-driven architecture.

For web applications, traffic metrics such as concurrency, queries per second (QPS), and response time (RT) measure the current quality of a web service. Knative provides request-driven serverless capabilities, including multi-version traffic management, traffic access, traffic-based elasticity, and monitoring. This article examines Knative's capabilities from the perspective of traffic.

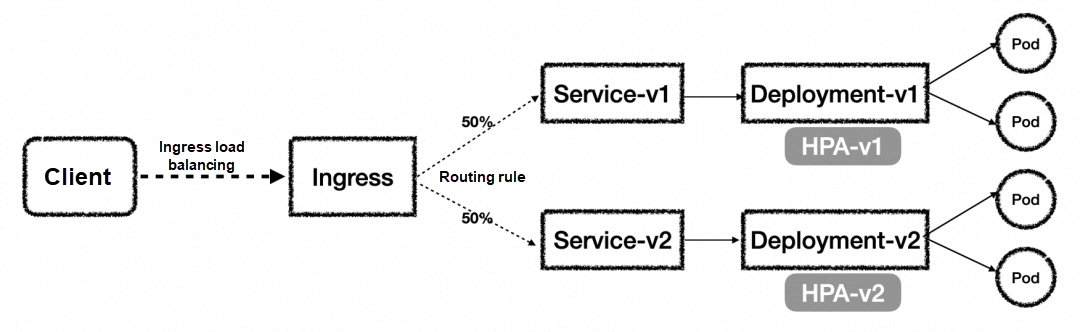

Let's take a look at how to implement a traffic-based blue-green deployment in Kubernetes.

First, you need to create a Kubernetes Service and Deployment. If you want autoscaling, you also need to configure a Horizontal Pod Autoscaler (HPA). Then, to perform a traffic-based canary release, you need to create a new version. In the example in the figure above, the original version is v1, so a new version, v2, must be created together with its own Service, Deployment, and HPA. After that, you can adjust the traffic ratio between the two versions through Ingress. Clearly, traffic-based blue-green deployment in Kubernetes requires managing many resources, and this management becomes more complex as versions iterate.

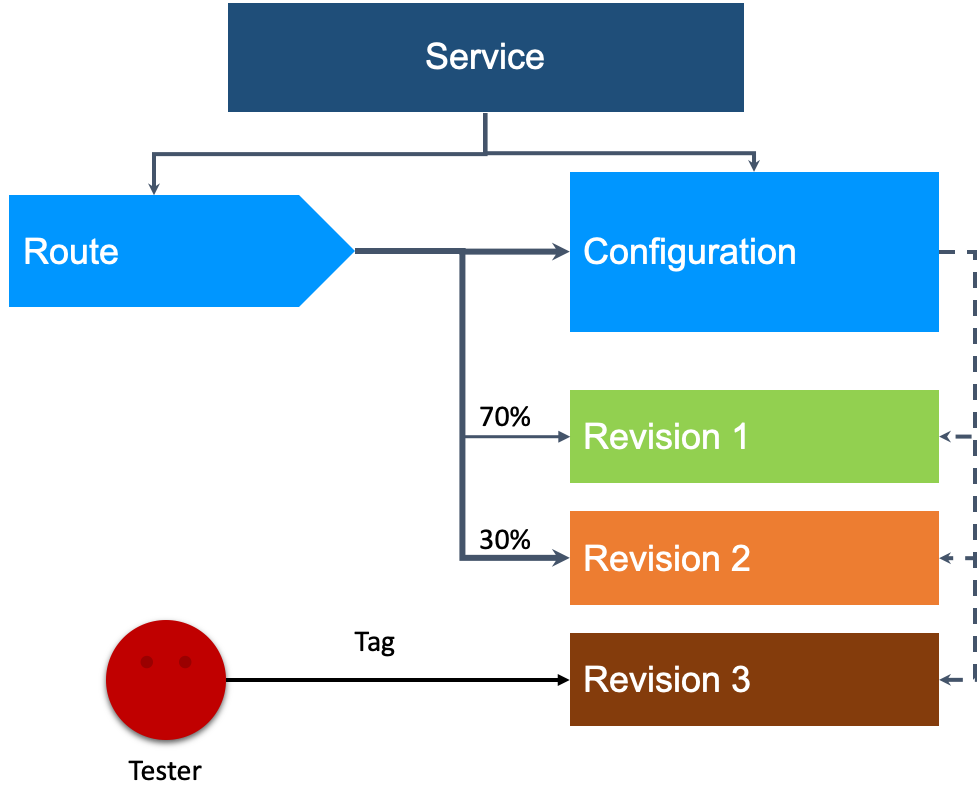

In contrast, a traffic-based canary release in Knative only requires modifying a single Knative Service object and adjusting its traffic ratios.

Knative offers an abstraction of the serverless application model called the Service. A Knative Service consists of two parts: Configuration and Route. Configuration defines the workload, and every update to it creates a new Revision. Route handles traffic management. Roughly speaking, a Knative Service ≈ Ingress + Service + Deployment + autoscaling (HPA). The following example shows the traffic configuration of a Knative Service:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: example-service
  namespace: default
spec:
  ...
  traffic:
  - percent: 0
    revisionName: example-service-1
    tag: staging
  - percent: 40
    revisionName: example-service-2
  - percent: 60
    revisionName: example-service-3
```

To achieve a traffic-based blue-green deployment, you only need to set the traffic percentage of each revision. Rolling back works the same way: set the new version's traffic back to 0 and the original version's traffic to 100.
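Knative only accepts a traffic block whose percentages add up to 100, so a blue-green switch or a rollback is just a rewrite of those numbers. The following Python sketch models the traffic block above as plain data to illustrate this. The TrafficTarget class and the validate_split and shift_all_traffic helpers are hypothetical names, not part of any Knative client library.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TrafficTarget:
    # Hypothetical model of one entry in spec.traffic.
    revision_name: str
    percent: int
    tag: str = ""


def validate_split(targets: List[TrafficTarget]) -> None:
    """Raise ValueError unless every percent is 0-100 and the total is exactly 100."""
    for t in targets:
        if not 0 <= t.percent <= 100:
            raise ValueError(f"{t.revision_name}: percent {t.percent} is out of range")
    total = sum(t.percent for t in targets)
    if total != 100:
        raise ValueError(f"traffic percents sum to {total}, want 100")


def shift_all_traffic(targets: List[TrafficTarget], revision_name: str) -> List[TrafficTarget]:
    """Route 100% to one revision and 0% to the others (a switch or a rollback).

    Zero-percent entries are kept rather than dropped, so their tags keep
    their dedicated access addresses.
    """
    if revision_name not in {t.revision_name for t in targets}:
        raise KeyError(revision_name)
    shifted = [
        TrafficTarget(t.revision_name, 100 if t.revision_name == revision_name else 0, t.tag)
        for t in targets
    ]
    validate_split(shifted)
    return shifted


# The split from the manifest above: 0 / 40 / 60.
current = [
    TrafficTarget("example-service-1", 0, tag="staging"),
    TrafficTarget("example-service-2", 40),
    TrafficTarget("example-service-3", 60),
]
validate_split(current)

# Roll back: send everything to example-service-2 and nothing to example-service-3.
rolled_back = shift_all_traffic(current, "example-service-2")
print([(t.revision_name, t.percent) for t in rolled_back])
```

Keeping the zero-percent entries in place, instead of deleting them, is what lets a tagged revision stay reachable for verification while it receives no production traffic.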

When releasing a new version, it is often necessary to verify if there are any issues before directing production traffic to the new version. However, with the traffic of the new version set to 0, how can it be verified? Knative provides a tagging mechanism to address this issue. It involves tagging a version and automatically generating an access address for the tag.

For instance, if the staging tag is set on the example-service-1 revision as shown earlier, Knative generates the access address staging-example-service.default.example.com. You can use this endpoint to verify example-service-1 without any production traffic reaching that revision.
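Tag addresses are produced from two templates in the config-network ConfigMap. Assuming the Knative defaults have not been changed (domain-template {{.Name}}.{{.Namespace}}.{{.Domain}} and tag-template {{.Tag}}-{{.Name}}) and that the domain is example.com, the following Python sketch reproduces how the staging address above is formed. Knative evaluates these as Go templates; the str.format placeholders here are only a stand-in, and route_host is a hypothetical helper.

```python
from typing import Optional

# Python stand-ins for Knative's default Go templates (assumed unchanged):
#   domain-template: {{.Name}}.{{.Namespace}}.{{.Domain}}
#   tag-template:    {{.Tag}}-{{.Name}}
DOMAIN_TEMPLATE = "{name}.{namespace}.{domain}"
TAG_TEMPLATE = "{tag}-{name}"


def route_host(service: str, namespace: str, domain: str = "example.com",
               tag: Optional[str] = None) -> str:
    """Return the host name Knative would generate for a service or one of its tags."""
    # For a tagged target, the tag template produces the "name" part first.
    name = TAG_TEMPLATE.format(tag=tag, name=service) if tag else service
    return DOMAIN_TEMPLATE.format(name=name, namespace=namespace, domain=domain)


print(route_host("example-service", "default"))
print(route_host("example-service", "default", tag="staging"))
```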

Every time a Knative Service is modified, a new revision is created. As iterations accelerate, historical revisions inevitably pile up. How can they be cleaned up? Knative provides automatic revision garbage collection (GC), whose policy is configured in the config-gc ConfigMap. The parameters are as follows:

• retain-since-create-time: How long a revision is retained after its creation time. The default value is 48 hours.

• retain-since-last-active-time: How long a revision is retained after it was last active. A revision counts as active as long as a route references it, even if its traffic percent is 0. The default value is 15 hours.

• min-non-active-revisions: The minimum number of non-active revisions to retain. The default value is 20.

• max-non-active-revisions: The maximum number of non-active revisions to retain. The default value is 1000.
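To see how these four parameters interact, here is a simplified Python model of the GC decision for one service's non-active revisions. It is an approximation written for illustration, not the Knative controller's code. It assumes that a revision becomes eligible only after every enabled time threshold has passed, that min-non-active-revisions protects revisions from time-based collection, that max-non-active-revisions is a hard cap applied afterward, and that the longest-inactive revisions are collected first. None stands in for "disabled", and Revision and revisions_to_collect are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

# None stands in for the "disabled" value accepted by config-gc.
Hours = Optional[float]


@dataclass
class Revision:
    name: str
    hours_since_created: float
    hours_since_active: float  # time since a route last referenced the revision


def is_stale(rev: Revision, retain_since_create: Hours, retain_since_active: Hours) -> bool:
    """A revision is stale only when every enabled time threshold has passed."""
    if retain_since_create is None and retain_since_active is None:
        return False  # both time checks disabled: nothing is stale by age
    if retain_since_create is not None and rev.hours_since_created < retain_since_create:
        return False  # created too recently
    if retain_since_active is not None and rev.hours_since_active < retain_since_active:
        return False  # referenced by a route too recently
    return True


def revisions_to_collect(non_active: List[Revision],
                         retain_since_create: Hours = 48,
                         retain_since_active: Hours = 15,
                         min_non_active: int = 20,
                         max_non_active: Optional[int] = 1000) -> List[str]:
    """Return names of non-active revisions to delete (simplified model)."""
    # Assumption: consider the longest-inactive revisions first.
    ordered = sorted(non_active, key=lambda r: r.hours_since_active, reverse=True)
    doomed: List[str] = []
    kept: List[Revision] = []
    remaining = len(ordered)
    for rev in ordered:
        # Stale revisions go, but never below the minimum to retain.
        if remaining > min_non_active and is_stale(rev, retain_since_create, retain_since_active):
            doomed.append(rev.name)
            remaining -= 1
        else:
            kept.append(rev)
    # The maximum is a hard cap: delete the longest-inactive survivors beyond it.
    if max_non_active is not None and len(kept) > max_non_active:
        doomed.extend(r.name for r in kept[: len(kept) - max_non_active])
    return doomed


revs = [Revision(f"rev-{i}", hours_since_created=i, hours_since_active=i) for i in range(12)]
# Time checks disabled, keep at most 10: the two longest-inactive revisions go.
print(revisions_to_collect(revs, None, None, min_non_active=0, max_non_active=10))
```

In this model, setting both counts to 0 and disabling both time checks returns every non-active revision, which is the immediate-reclaim behavior described in the configurations that follow.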

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-gc
  namespace: knative-serving
  labels:
    app.kubernetes.io/name: knative-serving
    app.kubernetes.io/component: controller
    app.kubernetes.io/version: "1.8.2"
data:
  _example: |
    # ---------------------------------------
    # Garbage Collector Settings
    # ---------------------------------------
    # Duration since creation before considering a revision for GC or "disabled".
    retain-since-create-time: "48h"
    # Duration since active before considering a revision for GC or "disabled".
    retain-since-last-active-time: "15h"
    # Minimum number of non-active revisions to retain.
    min-non-active-revisions: "20"
    # Maximum number of non-active revisions to retain
    # or "disabled" to disable any maximum limit.
    max-non-active-revisions: "1000"
```

The _example block only documents the defaults and is not read by Knative; to change a setting, add the key directly under data. To reclaim inactive revisions immediately, use the following settings:

```yaml
min-non-active-revisions: "0"
max-non-active-revisions: "0"
retain-since-create-time: "disabled"
retain-since-last-active-time: "disabled"
```

To retain only 10 inactive revisions, use the following settings:

retain-since-create-time: "disabled"

retain-since-last-active-time: "disabled"

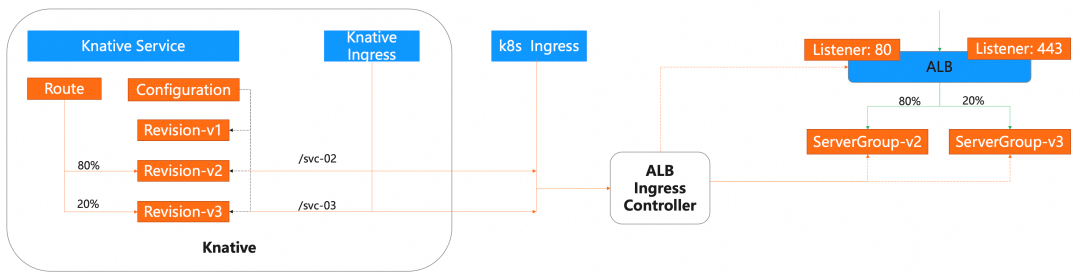

max-non-active-revisions: "10"To meet the demands of different scenarios, we provide diverse gateway capabilities, including ALB, MSE, ASM, and Kourier gateways.

The ALB gateway is based on Alibaba Cloud Application Load Balancer (ALB) and provides more powerful Ingress traffic management. It is fully managed, O&M-free, and scales automatically.

To use the ALB gateway, you only need to configure the following setting in the config-network ConfigMap:

apiVersion: v1

kind: ConfigMap

metadata:

name: config-network

namespace: knative-serving

labels:

app.kubernetes.io/name: knative-serving

app.kubernetes.io/component: networking

app.kubernetes.io/version: "1.8.2"

data:

ingress.class: "alb.ingress.networking.knative.dev"

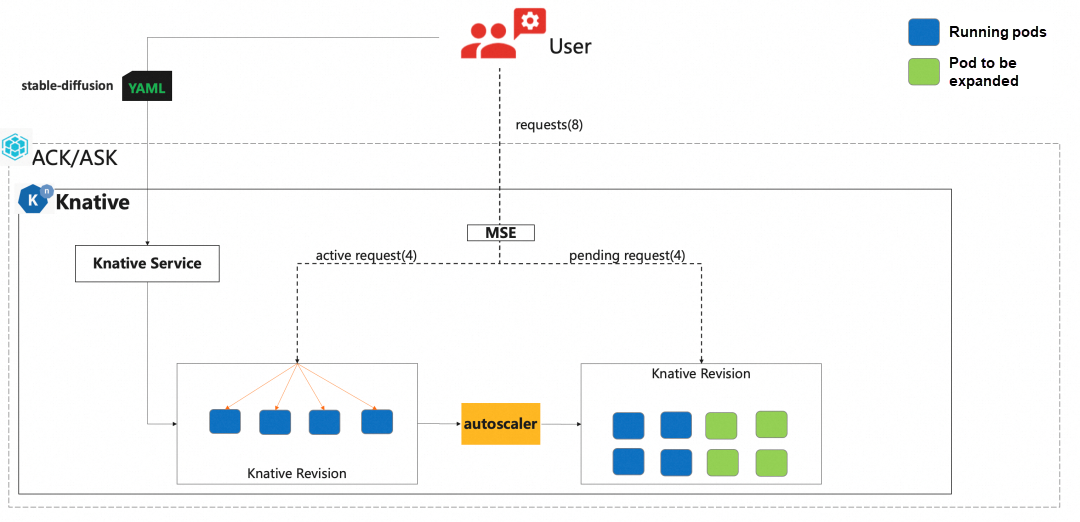

...Microservices Engine (MSE) cloud-native gateway is a next-generation gateway product that is compatible with the Kubernetes ingress standard. It combines traditional traffic gateways and microservice gateways. Knative and MSE gateways support accurate request-based automatic scalability (MPA). This allows you to precisely control the number of concurrent requests that are processed by a single pod.

To configure the MSE gateway, you only need to configure the following settings in the config-network:

apiVersion: v1

kind: ConfigMap

metadata:

name: config-network

namespace: knative-serving

labels:

app.kubernetes.io/name: knative-serving

app.kubernetes.io/component: networking

app.kubernetes.io/version: "1.8.2"

data:

ingress.class: "mse.ingress.networking.knative.dev"

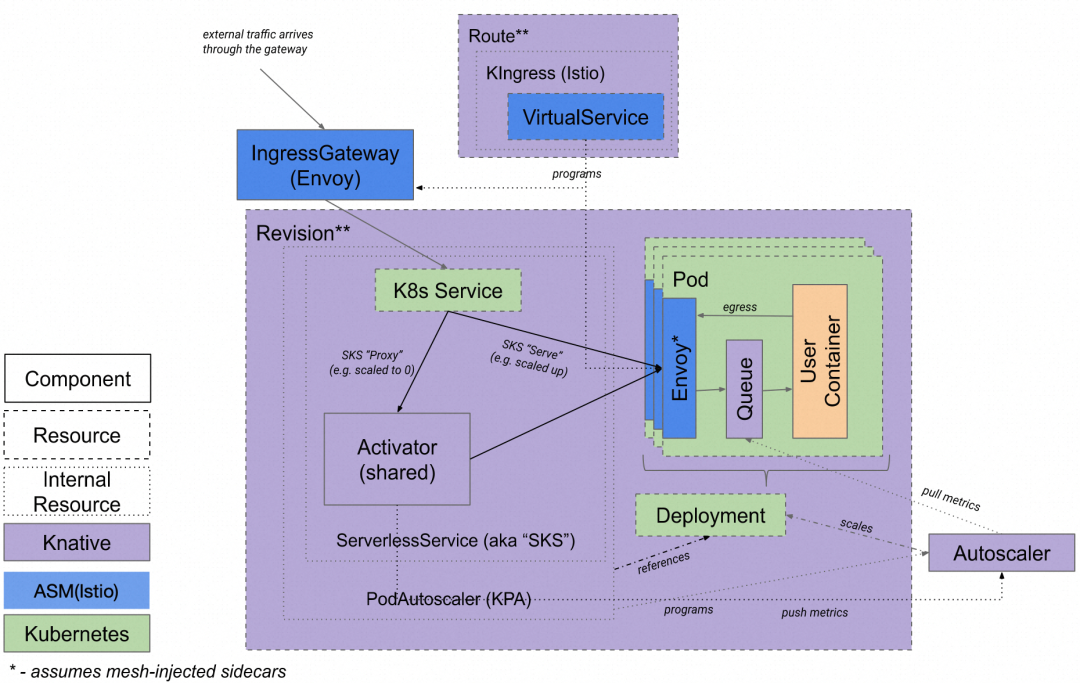

...Alibaba Cloud Service Mesh (ASM) is a fully managed platform that manages traffic for microservice applications and is compatible with Istio. With features such as traffic control, mesh monitoring, and inter-service communication security, ASM simplifies service governance in all aspects.

Knative naturally supports integration with Istio, so you only need to configure the istio.ingress.networking.knative.dev in the config-network:

apiVersion: v1

kind: ConfigMap

metadata:

name: config-network

namespace: knative-serving

labels:

app.kubernetes.io/name: knative-serving

app.kubernetes.io/component: networking

app.kubernetes.io/version: "1.8.2"

data:

ingress.class: "istio.ingress.networking.knative.dev"

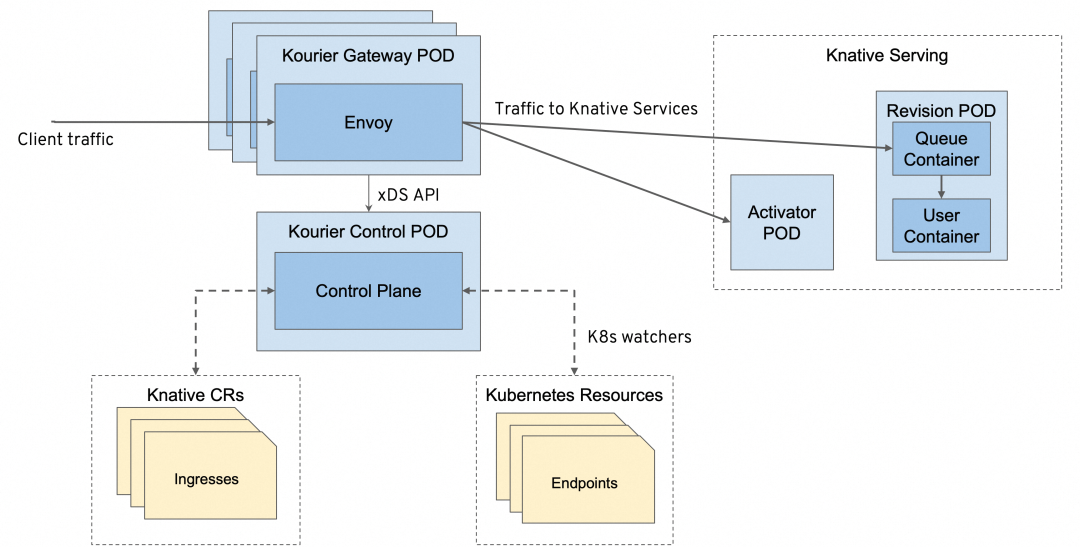

...Kourier is a lightweight gateway that is implemented based on the Envoy architecture. It provides traffic distribution for Knative revisions and supports gRPC services, timeout and retry, TLS certificates, and external authentication and authorization.

To use Kourier, you only need to set ingress.class to kourier.ingress.networking.knative.dev in the config-network ConfigMap:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-network
  namespace: knative-serving
  labels:
    app.kubernetes.io/name: knative-serving
    app.kubernetes.io/component: networking
    app.kubernetes.io/version: "1.8.2"
data:
  ingress.class: "kourier.ingress.networking.knative.dev"
  ...
```

Among these gateways, ALB focuses on application load balancing, the cloud-native MSE gateway targets microservice scenarios, and ASM provides service mesh (Istio) capabilities. Select a gateway according to your business scenario. If you only need basic gateway capabilities, you can choose Kourier.
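Because switching gateways only changes one key, the choice can be scripted. The following Python sketch maps a gateway name to the ingress.class values listed above and emits a JSON merge patch for config-network. The short names alb, mse, asm, and kourier and the config_network_patch helper are hypothetical.

```python
import json

# ingress.class values taken from the config-network examples above.
# The short dictionary keys are hypothetical labels for this sketch.
INGRESS_CLASSES = {
    "alb": "alb.ingress.networking.knative.dev",
    "mse": "mse.ingress.networking.knative.dev",
    "asm": "istio.ingress.networking.knative.dev",
    "kourier": "kourier.ingress.networking.knative.dev",
}


def config_network_patch(gateway: str) -> str:
    """Return a JSON merge patch that points Knative at the chosen gateway."""
    try:
        ingress_class = INGRESS_CLASSES[gateway.lower()]
    except KeyError:
        raise ValueError(
            f"unknown gateway {gateway!r}; expected one of {sorted(INGRESS_CLASSES)}"
        ) from None
    return json.dumps({"data": {"ingress.class": ingress_class}})


print(config_network_patch("kourier"))
```

The resulting string can be passed to kubectl patch configmap config-network -n knative-serving --type merge -p '<patch>', which updates only the ingress.class key and leaves the rest of the ConfigMap untouched.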

For more information about selecting a suitable Knative gateway, see https://www.alibabacloud.com/help/en/ack/serverless-kubernetes/user-guide/select-a-gateway-for-knative

Knative supports multiple access protocols, including HTTP, gRPC, and WebSocket. The following example shows the configuration of a Knative gRPC service:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld-grpc
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/class: kpa.autoscaling.knative.dev
    spec:
      containers:
      - image: docker.io/moul/grpcbin # Test image that serves a gRPC service to respond to requests.
        env:
        - name: TARGET
          value: "Knative"
        ports:
        - containerPort: 9000
          name: h2c
          protocol: TCP
```

For a gRPC service, the port name in the Knative Service must be set to h2c (HTTP/2 over cleartext).
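Knative Serving decides how to talk to the container from the port name: it accepts http1 (HTTP/1.1, which is also the behavior when the name is omitted) and h2c (HTTP/2 over cleartext, which gRPC needs), and rejects other names. The Python sketch below mirrors that rule as a pre-deployment check; protocol_for_port is a hypothetical helper, not a Knative API.

```python
from typing import Optional

# Port names accepted by Knative Serving and the protocol each one selects.
PORT_PROTOCOLS = {
    "": "HTTP/1.1",                  # name omitted: treated like http1
    "http1": "HTTP/1.1",
    "h2c": "HTTP/2 over cleartext",  # needed for gRPC
}


def protocol_for_port(name: Optional[str]) -> str:
    """Return the protocol for a container port name, or raise for an unsupported name."""
    key = name or ""
    if key not in PORT_PROTOCOLS:
        raise ValueError(f"port name {name!r} is not allowed; use 'http1', 'h2c', or omit it")
    return PORT_PROTOCOLS[key]


print(protocol_for_port("h2c"))  # the gRPC service above
```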

In addition, so that a single service can expose both HTTP and gRPC, we have extended Knative to support configuring both protocols on one service. The configuration example is as follows:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld-grpc
  annotations:
    knative.alibabacloud.com/grpc: grpcbin.GRPCBin
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/class: kpa.autoscaling.knative.dev
    spec:
      containers:
      - image: docker.io/moul/grpcbin
        args:
        - '--production=true'
        env:
        - name: TARGET
          value: "Knative"
        ports:
        - containerPort: 80
          name: http1
          protocol: TCP
        - containerPort: 9000
          name: h2c
          protocol: TCP
```

In Knative, you can customize the domain name of an individual Knative Service. You can also configure a global domain name suffix and a custom path.

To customize the domain name of a single service, use a DomainMapping. The DomainMapping template is as follows:

```yaml
apiVersion: serving.knative.dev/v1alpha1
kind: DomainMapping
metadata:
  name: <domain-name>
  namespace: <namespace>
spec:
  ref:
    name: <service-name>
    kind: Service
    apiVersion: serving.knative.dev/v1
  tls:
    secretName: <cert-secret>
```

• `<domain-name>`: The domain name of the service.

• `<namespace>`: The namespace of the DomainMapping, which must be the same as the namespace of the service.

• `<service-name>`: The name of the destination service.

• `<cert-secret>`: (Optional) The name of the Secret that holds the TLS certificate.
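If you create DomainMappings from a script, the template can be filled in programmatically. This Python sketch builds the same manifest as a dictionary; the domain_mapping helper and the sample values (cafe.mydomain.com, coffee) are hypothetical. Since kubectl apply -f accepts JSON manifests as well as YAML, the printed output can be saved to a file and applied directly.

```python
import json
from typing import Optional


def domain_mapping(domain: str, namespace: str, service: str,
                   cert_secret: Optional[str] = None) -> dict:
    """Build a DomainMapping manifest (as a dict) following the template above."""
    manifest = {
        "apiVersion": "serving.knative.dev/v1alpha1",
        "kind": "DomainMapping",
        # The DomainMapping name is the domain itself; it must live in the
        # same namespace as the target service.
        "metadata": {"name": domain, "namespace": namespace},
        "spec": {
            "ref": {"name": service, "kind": "Service", "apiVersion": "serving.knative.dev/v1"},
        },
    }
    if cert_secret:
        manifest["spec"]["tls"] = {"secretName": cert_secret}  # optional TLS section
    return manifest


print(json.dumps(domain_mapping("cafe.mydomain.com", "default", "coffee"), indent=2))
```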

To configure a global domain name suffix, modify the config-domain ConfigMap. For example, to replace the default example.com with mydomain.com, use the following configuration:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-domain
  namespace: knative-serving
data:
  mydomain.com: ""
```

You can use the knative.aliyun.com/serving-ingress annotation to configure a custom routing path. The following is an example:

apiVersion: serving.knative.dev/v1

kind: Service

metadata:

name: coffee-mydomain

annotations:

knative.aliyun.com/serving-ingress: cafe.mydomain.com/coffee

spec:

template:

metadata:

spec:

containers:

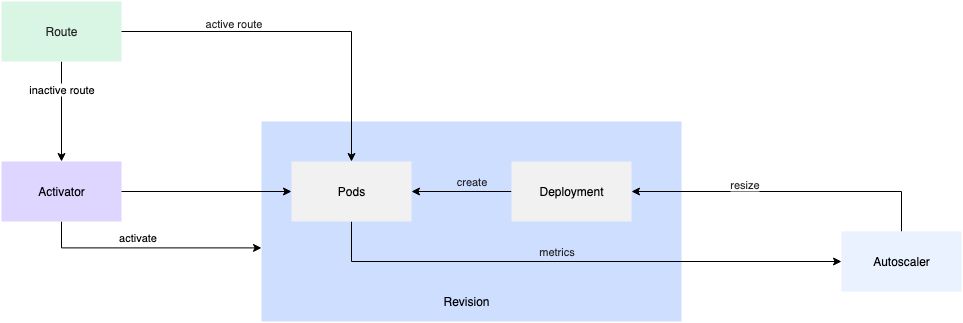

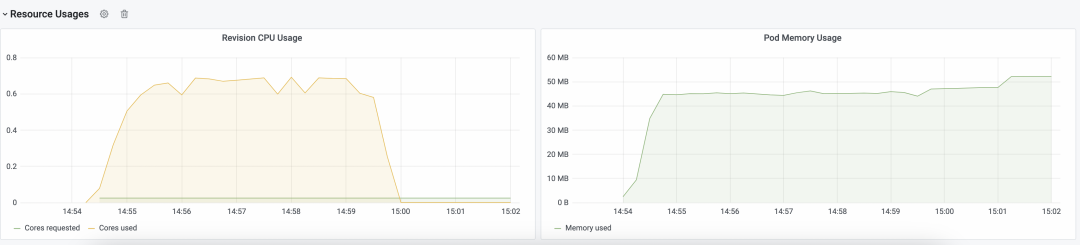

- image: registry.cn-hangzhou.aliyuncs.com/knative-sample/helloworld-go:160e4dc8Knative provides auto-scaling capability based on traffic requests (Knative Pod Autoscaler, KPA). The implementation principle is as follows: Knative Serving injects a queue-proxy container into each pod. This container reports the concurrency metrics of business containers to the autoscaler. After receiving these metrics, the autoscaler adjusts the number of pods in the deployment based on the number of concurrent requests and the corresponding algorithm.

KPA scales based on the average concurrency (or requests per second) per pod. By default, KPA uses concurrency-based scaling with a soft target of 100 concurrent requests per pod; you can also set a hard per-pod limit with containerConcurrency. In addition, Knative provides target-utilization-percentage (70% by default), which specifies the percentage of the per-pod concurrency that the autoscaler actually aims for.

The number of pods is calculated with the following formula: Number of pods = Total number of concurrent requests / (Per-pod concurrency × Target utilization percentage).

For example, if the per-pod concurrency of the service is set to 10, the target utilization percentage is set to 0.7, and 100 concurrent requests are received, the autoscaler creates 15 pods (100/(0.7 × 10) ≈ 14.3, rounded up to 15).

In addition, when there are no requests, the number of pods is automatically scaled in to 0.
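The formula and the scale-to-zero rule can be checked with a few lines of Python. This is a steady-state sketch of the formula only: the real KPA averages the metric over a stable window (60 seconds by default) and has a panic mode for sudden bursts, neither of which is modeled here. The min_scale and max_scale bounds mirror the autoscaling.knative.dev/min-scale and max-scale annotations; desired_pods itself is a hypothetical helper.

```python
import math
from typing import Optional


def desired_pods(total_concurrency: float,
                 target_per_pod: float = 100,
                 utilization_percent: float = 70,
                 min_scale: int = 0,
                 max_scale: Optional[int] = None) -> int:
    """Pods = ceil(total concurrency / (per-pod concurrency * utilization)), clamped to bounds."""
    if total_concurrency <= 0:
        pods = 0  # no traffic: scale to zero
    else:
        # Percent kept as an integer-style value (70) to avoid 0.7 rounding noise.
        effective_target = target_per_pod * utilization_percent / 100
        pods = math.ceil(total_concurrency / effective_target)
    pods = max(pods, min_scale)
    if max_scale is not None:
        pods = min(pods, max_scale)
    return pods


# The example from the text: 100 requests, 10 per pod, 70% utilization.
print(desired_pods(100, target_per_pod=10, utilization_percent=70))
```

Rounding up rather than to the nearest integer keeps each pod at or below its effective target of 7 concurrent requests.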

For more information about Knative elasticity, see Decipher the Elastic Technology of Knative's Open Source Serverless Framework.

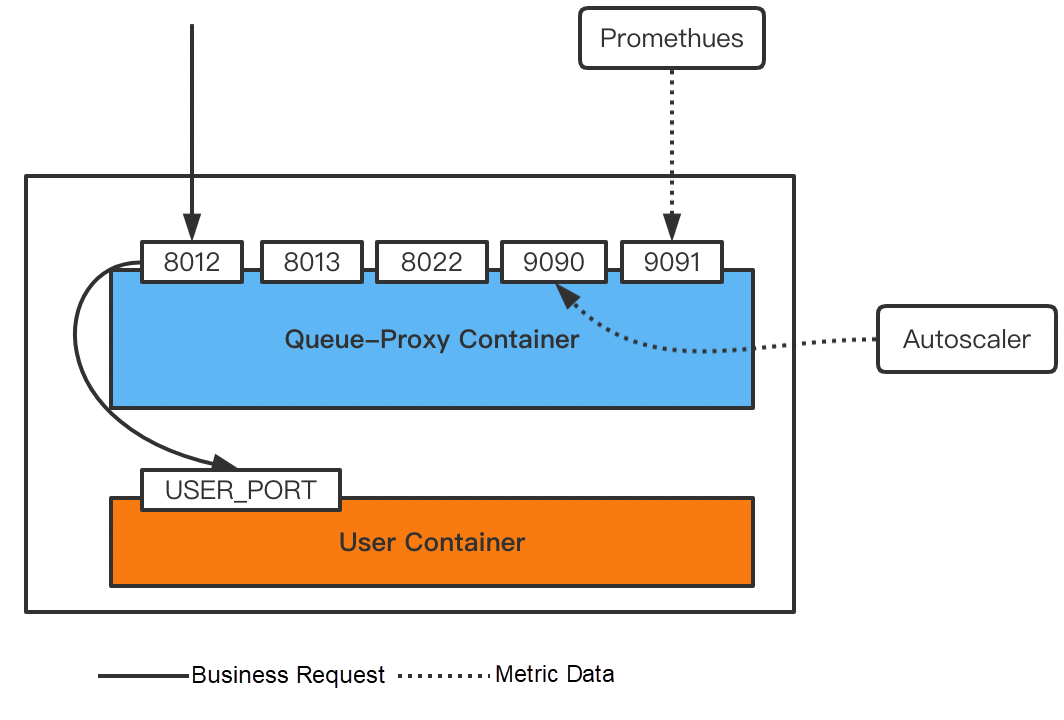

The key to how Knative collects traffic metrics is queue-proxy. Besides supporting traffic-based elasticity, queue-proxy exposes Prometheus metrics such as the number of requests, concurrency, and response time. Its ports are as follows:

• 8012: The HTTP port of queue-proxy, through which inbound traffic enters.

• 8013: The HTTP/2 port for forwarding gRPC traffic.

• 8022: The management port of queue-proxy, which supports features such as health checks.

• 9090: The monitoring port of queue-proxy, which exposes the metrics that the autoscaler collects for KPA scaling.

• 9091: The port that exposes Prometheus application metrics, such as the number of requests and response time.

• USER_PORT: The container port configured by the user, that is, the port on which the business service listens. It corresponds to the container port configured in the Knative Service (ksvc).

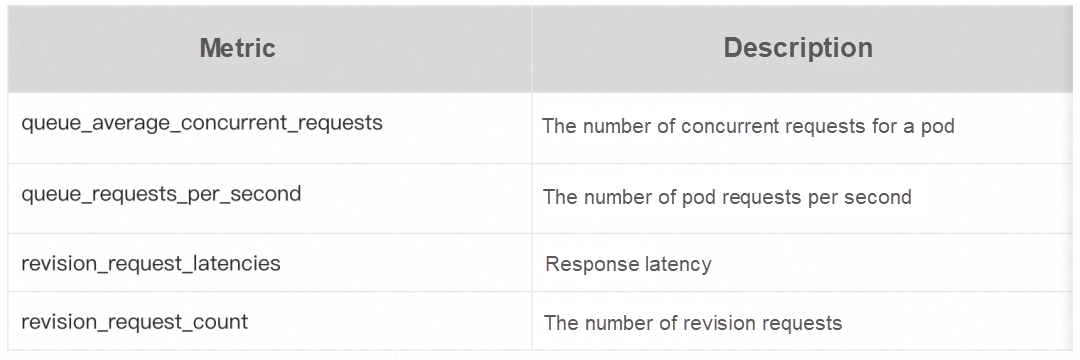

The following table describes the key metrics collected by Prometheus.

In addition, Knative provides the Grafana dashboard: https://github.com/knative-extensions/monitoring/tree/main/grafana

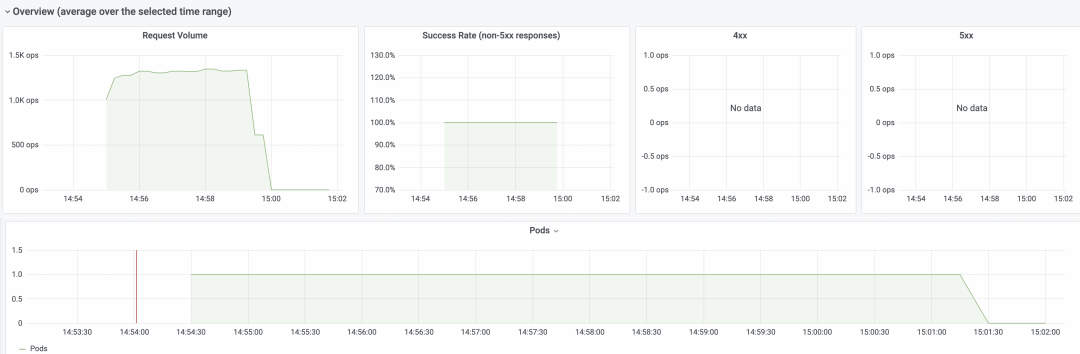

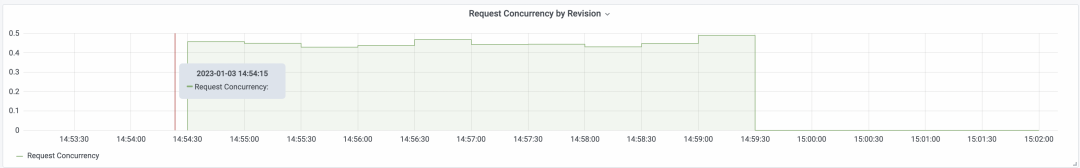

Knative on Alibaba Cloud Container Service integrates this Grafana dashboard to provide out-of-the-box observability. On the dashboard, you can view the number of requests, the request success rate, the pod scaling trend, and request response latency.

Overview: You can view the monitoring data of Knative about the number of requests, the success rate of requests, 4xx (client error), 5xx (server error), and the pod scaling trend.

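On the dashboard, the vertical-axis unit ops/sec indicates the number of requests processed per second.

Based on these metrics, you can estimate the resource usage of a single request, for example by dividing the CPU consumed by the service by the number of requests it processes per second.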
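As a worked example of that estimate, the Python sketch below divides total CPU usage by the request rate read from the dashboard. The input values (2 CPU cores in use across all pods, 400 ops/sec) are hypothetical, and the calculation assumes CPU usage grows linearly with the number of requests; cpu_ms_per_request is a hypothetical helper.

```python
def cpu_ms_per_request(cpu_cores_used: float, requests_per_second: float) -> float:
    """Average CPU time per request, in milliseconds.

    cpu_cores_used is core-seconds consumed per second, so dividing by requests
    per second gives core-seconds per request; multiply by 1000 for milliseconds.
    """
    if requests_per_second <= 0:
        raise ValueError("requests_per_second must be positive")
    return cpu_cores_used * 1000 / requests_per_second


# Hypothetical readings: 2 cores in use while serving 400 ops/sec.
print(cpu_ms_per_request(2.0, 400.0))
```

At 5 ms of CPU per request, one fully used core could serve roughly 200 such requests per second, which gives a starting point for sizing per-pod concurrency targets.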

This article described Knative traffic management, traffic access, traffic-based elasticity, and monitoring. Knative also offers a range of cloud gateway products for different business scenarios. With Knative, you can easily build serverless applications that use resources on demand and scale automatically with traffic.
