By Changsheng Su

Apache RocketMQ is widely used in various business scenarios. In production, users often locate specific messages or batches of messages by message ID or by business keys such as student IDs and order numbers. This helps them troubleshoot complex issues in distributed systems.

Traditionally, message indexes are stored in database systems or local file systems. However, disk capacity is limited, so these approaches struggle to handle large volumes of index data.

In cloud-native scenarios, object storage offers elasticity and pay-as-you-go billing, which effectively reduces storage costs. However, its support for random reads and writes is limited. In the queue model of Apache RocketMQ, written data is approximately ordered by time. This ordering has three consequences. Hot data can be written to the random index without interruption. Hot and cold data can be separated. Cold data can be moved asynchronously to a more cost-effective storage system.

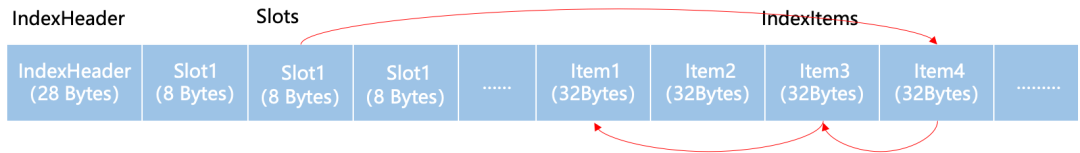

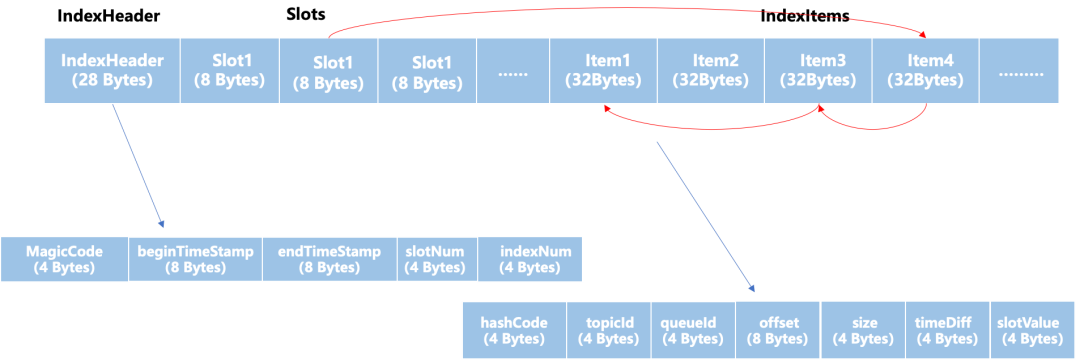

An index is a data structure that speeds up storage and lookup by trading space for time. Let's take a look at the structure of RocketMQ index files. A RocketMQ index file has a three-part structure built on a head-inserted hash table. This structure makes queries fast, keeps the footprint small, and is easy to maintain. However, the number of local index files grows with the data volume.

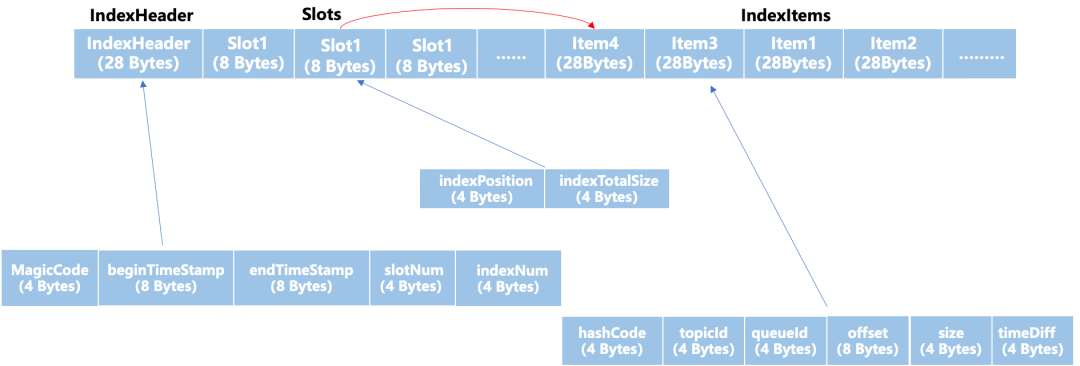

The three parts are the IndexHeader, the Slots, and the IndexItems.

Index file structure

Index entries whose keys hash to the same slot are linked together in a singly linked list. IndexItems are always appended to the end of the file, which keeps writes sequential and improves write performance.

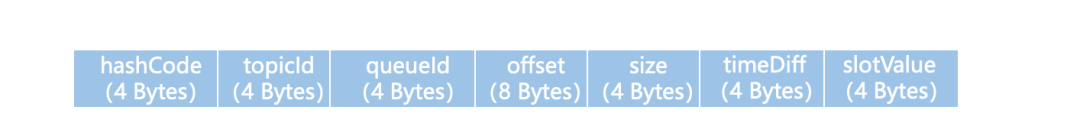

IndexItem
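The following minimal Java sketch makes the head-inserted structure concrete by holding one index file in a `ByteBuffer`. The layout is a simplifying assumption and does not match RocketMQ's actual format. The IndexHeader is omitted, and each slot is a 4-byte item number. Each item holds a key hash, an 8-byte value (for example, a message offset), and a 4-byte pointer to the previous item in the same chain. `HeadInsertedIndex` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

// Simplified head-inserted hash index held in one ByteBuffer (layout is an assumption):
//   [slots: slotCount x 4 bytes][items: maxItems x 16 bytes]
//   item = keyHash (int) | value (long) | prevItem (int)
// Item numbers start at 1, so a slot value of 0 means "empty chain".
public class HeadInsertedIndex {
    private static final int SLOT_SIZE = 4;
    private static final int ITEM_SIZE = 16;

    private final int slotCount;
    private final int maxItems;
    private final ByteBuffer buf;
    private int itemCount = 0;

    public HeadInsertedIndex(int slotCount, int maxItems) {
        this.slotCount = slotCount;
        this.maxItems = maxItems;
        this.buf = ByteBuffer.allocate(slotCount * SLOT_SIZE + maxItems * ITEM_SIZE);
    }

    private static int hash(String key) {
        return key.hashCode() & 0x7fffffff; // non-negative so it can select a slot
    }

    private int slotPos(int hash) {
        return (hash % slotCount) * SLOT_SIZE;
    }

    private int itemPos(int itemNo) {
        return slotCount * SLOT_SIZE + (itemNo - 1) * ITEM_SIZE;
    }

    /** Appends the item after the last one and makes it the new head of its slot's chain. */
    public boolean put(String key, long value) {
        if (itemCount == maxItems) {
            return false; // file is full: the caller would roll over to a new file
        }
        int h = hash(key);
        int itemNo = ++itemCount;
        int pos = itemPos(itemNo);
        buf.putInt(pos, h);
        buf.putLong(pos + 4, value);
        buf.putInt(pos + 12, buf.getInt(slotPos(h))); // link to the previous head
        buf.putInt(slotPos(h), itemNo);               // head insertion
        return true;
    }

    /** Follows prev pointers from the slot head; every hop is a separate read. */
    public List<Long> get(String key) {
        int h = hash(key);
        List<Long> result = new ArrayList<>();
        for (int itemNo = buf.getInt(slotPos(h)); itemNo != 0; ) {
            int pos = itemPos(itemNo);
            if (buf.getInt(pos) == h) {
                result.add(buf.getLong(pos + 4)); // a real index would also verify the key itself
            }
            itemNo = buf.getInt(pos + 12);
        }
        return result;
    }

    public static void main(String[] args) {
        HeadInsertedIndex idx = new HeadInsertedIndex(4, 8);
        idx.put("order-1", 100L);
        idx.put("order-2", 200L);
        idx.put("order-1", 300L);
        System.out.println(idx.get("order-1")); // [300, 100]: newest entry first
    }
}
```

A write only appends to the end of the item area and overwrites one slot, which is why writes stay sequential. A read pays one hop for each chained item.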

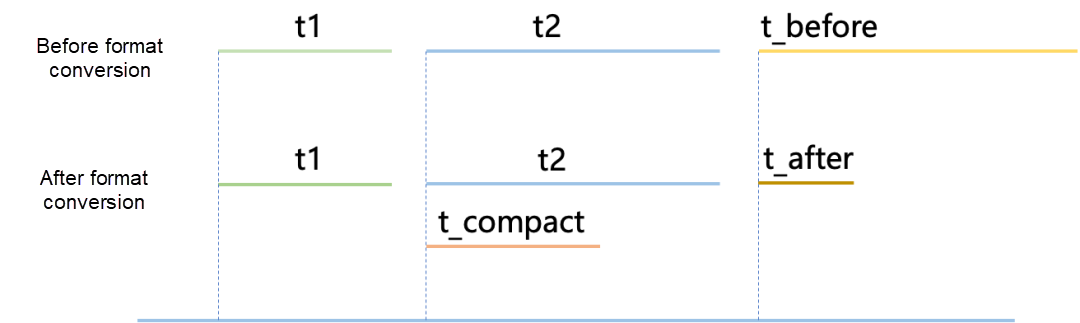

The index module in RocketMQ handles a large number of write requests, a small number of read requests, and no update requests. Therefore, some read amplification on a single read is acceptable if it lowers the average operation cost of the system. Assume that writing a message index takes t1. Assume also that, on average, a message index is first queried t2 after it is written, and that converting the format of an index file takes t_compact. Usually, t_compact is much shorter than t2, so the conversion can be completed asynchronously within t2. Let t_before be the average message index query time before format conversion, and t_after the average query time after format conversion. Because t_after < t_before, it follows that t1 + t2 + t_after < t1 + t2 + t_before.

Timeline
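The comparison can be written out explicitly under the same assumptions as the text. The conversion runs asynchronously and finishes within t2 (t_compact ≤ t2), so it adds nothing to the path of the first query. Conversion also lowers the query time (t_after < t_before). T_before and T_after are labels introduced here for the end-to-end cost of one index.

$$
\begin{aligned}
T_{\text{before}} &= t_1 + t_2 + t_{\text{before}} \\
T_{\text{after}}  &= t_1 + t_2 + t_{\text{after}} \qquad (t_{\text{compact}} \le t_2,\ \text{overlapped with } t_2) \\
T_{\text{after}} - T_{\text{before}} &= t_{\text{after}} - t_{\text{before}} < 0
\end{aligned}
$$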

The RocketMQ index file is based on a head-inserted hash table that uses separate chaining, so indexes can be written sequentially. However, collisions are chained in singly linked lists. A query therefore first hashes the key to its slot to obtain the head node of the list, and then traverses the list node by node from that head. Each hop is a random I/O. In this respect, OSS (Object Storage Service) behaves much like a mechanical hard disk: reading 20 bytes takes almost as long as reading several KB. Many random reads therefore add up to a large time overhead, and a large number of hash collisions can cause severe read amplification.
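Counting requests is a rough way to see this read amplification. The sketch below is illustrative only. It keeps just the slot heads and the prev pointers, and it assumes that every pointer hop is one ranged GET on OSS. The 10 ms per-request latency is a hypothetical number used only for the arithmetic. Because one key may have several entries, a lookup walks the whole chain.

```java
import java.util.HashMap;
import java.util.Map;

// Counts the random reads a chained lookup needs. Assumption: each hop is one ranged GET on OSS.
public class ChainReadCost {
    private final int[] slotHead;                               // slot -> newest item number, 0 = empty
    private final Map<Integer, Integer> prev = new HashMap<>(); // item -> previous item in its chain
    private int items = 0;

    public ChainReadCost(int slotCount) {
        slotHead = new int[slotCount];
    }

    /** Head insertion: the new item points to the old head and becomes the new head. */
    public void put(int keyHash) {
        int slot = Math.floorMod(keyHash, slotHead.length);
        int itemNo = ++items;
        prev.put(itemNo, slotHead[slot]);
        slotHead[slot] = itemNo;
    }

    /** One read for the slot plus one per chained item; the whole chain is walked. */
    public int readsForLookup(int keyHash) {
        int reads = 1;
        int itemNo = slotHead[Math.floorMod(keyHash, slotHead.length)];
        while (itemNo != 0) {
            reads++;
            itemNo = prev.get(itemNo);
        }
        return reads;
    }

    public static void main(String[] args) {
        ChainReadCost file = new ChainReadCost(4);
        for (int h = 0; h < 40; h++) {
            file.put(h); // 40 entries over 4 slots: every chain holds 10 items
        }
        int reads = file.readsForLookup(1);
        long assumedLatencyMs = 10; // hypothetical per-request OSS latency
        System.out.println(reads + " reads, ~" + reads * assumedLatencyMs + " ms"); // 11 reads, ~110 ms
    }
}
```

Each request depends on the pointer returned by the previous one, so the requests cannot be issued in parallel. More collisions mean longer chains and proportionally more round trips.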

To reduce random reads on OSS files, tiered storage converts the format of index files asynchronously. After conversion, a query can retrieve a large block of data in a single read, which greatly reduces the number of I/O requests on OSS files.

The following are the specific steps of asynchronous rearrangement of random indexes.

In this way, random reads on OSS files are greatly reduced, which improves query efficiency and lowers time overhead. The trade-off is that format conversion and grouping of local index files consume some computing and storage resources.

Before format conversion

After format conversion

After rearrangement, each linked list, whose nodes were scattered across non-contiguous physical addresses, is realigned into an array stored at contiguous physical addresses. Each SlotItem occupies 8 bytes. The first 4 bytes record the start address of the array, and the last 4 bytes record the length of the array. This format conversion has the following benefits.

• Subsequent index reads change from random I/O over linked lists to sequential I/O over arrays, which reduces random I/O overhead.

• The page cache hit rate increases because of better spatial locality.
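The rearrangement can be sketched as grouping the entries of each slot and writing them back to back. Each slot gets the 8-byte entry described above: a 4-byte start address and a 4-byte length. This minimal illustration assumes a 12-byte item (key hash plus value) and entries supplied in write order. `CompactedIndex` is a hypothetical name, not the tiered storage class.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

// Rearranged index (assumed layout): [slots: slotCount x 8 bytes][items grouped by slot]
//   slot = start address of the slot's array (int) | array length in items (int)
//   item = keyHash (int) | value (long) = 12 bytes
public class CompactedIndex {
    private static final int SLOT_SIZE = 8;
    private static final int ITEM_SIZE = 12;

    private final int slotCount;
    private final ByteBuffer buf;

    /** Builds the compacted file from entries given in write order. */
    public CompactedIndex(int slotCount, int[] hashes, long[] values) {
        this.slotCount = slotCount;
        List<List<Integer>> bySlot = new ArrayList<>();
        for (int s = 0; s < slotCount; s++) {
            bySlot.add(new ArrayList<>());
        }
        for (int i = 0; i < hashes.length; i++) {
            bySlot.get(Math.floorMod(hashes[i], slotCount)).add(i); // group entries by slot
        }
        buf = ByteBuffer.allocate(slotCount * SLOT_SIZE + hashes.length * ITEM_SIZE);
        int address = slotCount * SLOT_SIZE; // items start right after the slot table
        for (int s = 0; s < slotCount; s++) {
            List<Integer> group = bySlot.get(s);
            buf.putInt(s * SLOT_SIZE, address);          // first 4 bytes: start address
            buf.putInt(s * SLOT_SIZE + 4, group.size()); // last 4 bytes: array length
            for (int i : group) {
                buf.putInt(address, hashes[i]);
                buf.putLong(address + 4, values[i]);
                address += ITEM_SIZE;
            }
        }
    }

    /** One read for the slot entry, then one contiguous read of the slot's whole array. */
    public List<Long> get(int keyHash) {
        int slot = Math.floorMod(keyHash, slotCount) * SLOT_SIZE;
        int start = buf.getInt(slot);
        int length = buf.getInt(slot + 4);
        List<Long> result = new ArrayList<>();
        for (int k = 0; k < length; k++) {
            int pos = start + k * ITEM_SIZE;
            if (buf.getInt(pos) == keyHash) {
                result.add(buf.getLong(pos + 4));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        CompactedIndex index = new CompactedIndex(4, new int[] {1, 5, 2, 1}, new long[] {10L, 20L, 30L, 40L});
        System.out.println(index.get(1)); // [10, 40]
    }
}
```

A lookup now needs only the 8-byte slot entry plus a single contiguous range read, however many entries collided in that slot.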

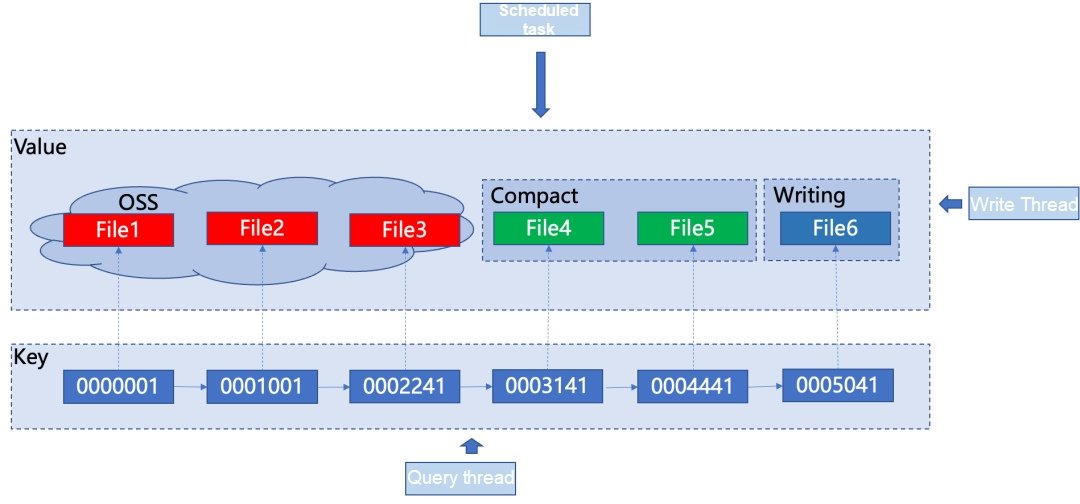

Lifecycle of a single index file

The capacity of a single index file is limited. When the number of indexes in a file reaches the upper limit, a new file must be created for subsequent writes. Each file therefore goes through several stages: it is created, compacted, and uploaded to become an OSS file, and it finally expires and is destroyed.

When an index file in the writing state becomes full, it is compacted and marked as "compacted". A compacted file no longer accepts writes, but it must be retained until it is uploaded to OSS. After the file is uploaded to OSS for storage, it is marked as "uploaded" and becomes an OSS file. These stages correspond to the three file states: unsealed, compacted, and uploaded.
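The three states form a strictly forward sequence. The following minimal sketch enforces that sequence. `IndexFileLifecycle` is a hypothetical name, and the sketch leaves out expiry and destruction.

```java
import java.util.EnumMap;
import java.util.Map;

// Per-file lifecycle from the article: unsealed -> compacted -> uploaded.
// Expiry and destruction are not modeled here.
public class IndexFileLifecycle {
    public enum State { UNSEALED, COMPACTED, UPLOADED }

    private static final Map<State, State> NEXT = new EnumMap<>(State.class);
    static {
        NEXT.put(State.UNSEALED, State.COMPACTED); // file is full and has been rearranged
        NEXT.put(State.COMPACTED, State.UPLOADED); // file has been stored in OSS
    }

    private State state = State.UNSEALED;

    public synchronized State state() {
        return state;
    }

    /** Allows only the single forward step defined above; anything else is rejected. */
    public synchronized void advanceTo(State target) {
        if (NEXT.get(state) != target) {
            throw new IllegalStateException(state + " -> " + target + " is not allowed");
        }
        state = target;
    }

    public static void main(String[] args) {
        IndexFileLifecycle file = new IndexFileLifecycle();
        file.advanceTo(State.COMPACTED);
        file.advanceTo(State.UPLOADED);
        System.out.println(file.state()); // UPLOADED
    }
}
```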

Three threads cooperate to implement non-stop writes and improve index write performance: the write thread, the index query thread, and the backend scheduled task thread. Each thread handles different tasks, and read-write locks ensure correctness under concurrency. A message queue stores data that is approximately ordered by time, and different index files hold the indexes of different periods. The files can therefore be managed in approximate time order. A skip list is used for this, which makes fast locating and range searches easy to support.
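The skip-list management of files described above maps naturally onto Java's `ConcurrentSkipListMap`. The sketch below keys each file by the timestamp of its first index. It assumes that files are sealed in time order, so their time ranges do not overlap. A time-range query then uses `floorKey` and `subMap` to visit only candidate files. The `IndexFile` type is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NavigableMap;
import java.util.concurrent.ConcurrentSkipListMap;

// Index files kept in a concurrent skip list keyed by the timestamp of their first index.
// Assumption: files are sealed in time order, so file time ranges do not overlap.
public class IndexFileRegistry {
    public static final class IndexFile {
        public final String name;
        public final long beginTimestamp;
        public final long endTimestamp;

        public IndexFile(String name, long beginTimestamp, long endTimestamp) {
            this.name = name;
            this.beginTimestamp = beginTimestamp;
            this.endTimestamp = endTimestamp;
        }
    }

    private final ConcurrentSkipListMap<Long, IndexFile> files = new ConcurrentSkipListMap<>();

    public void add(IndexFile file) {
        files.put(file.beginTimestamp, file);
    }

    /** Returns, oldest first, the files whose time range overlaps [from, to]. */
    public List<IndexFile> filesInRange(long from, long to) {
        List<IndexFile> result = new ArrayList<>();
        if (from > to) {
            return result;
        }
        // The last file that began at or before 'from' may still cover 'from'.
        Long first = files.floorKey(from);
        NavigableMap<Long, IndexFile> candidates =
                (first == null) ? files.headMap(to, true) : files.subMap(first, true, to, true);
        for (IndexFile f : candidates.values()) {
            if (f.endTimestamp >= from) {
                result.add(f);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        IndexFileRegistry registry = new IndexFileRegistry();
        registry.add(new IndexFile("index-0", 0, 99));
        registry.add(new IndexFile("index-1", 100, 199));
        registry.add(new IndexFile("index-2", 200, 299));
        for (IndexFile f : registry.filesInRange(150, 210)) {
            System.out.println(f.name); // index-1, then index-2
        }
    }
}
```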

1. The write thread is non-blocking. It writes indexes to the file at the tail of the queue, which is in the writing state. When that file is full, the thread automatically creates a new file at the tail of the queue and switches to it. To improve write efficiency, the thread first caches indexes in memory. When the cache reaches a certain size, the thread writes the cached indexes to the file in batches, which reduces disk I/O.

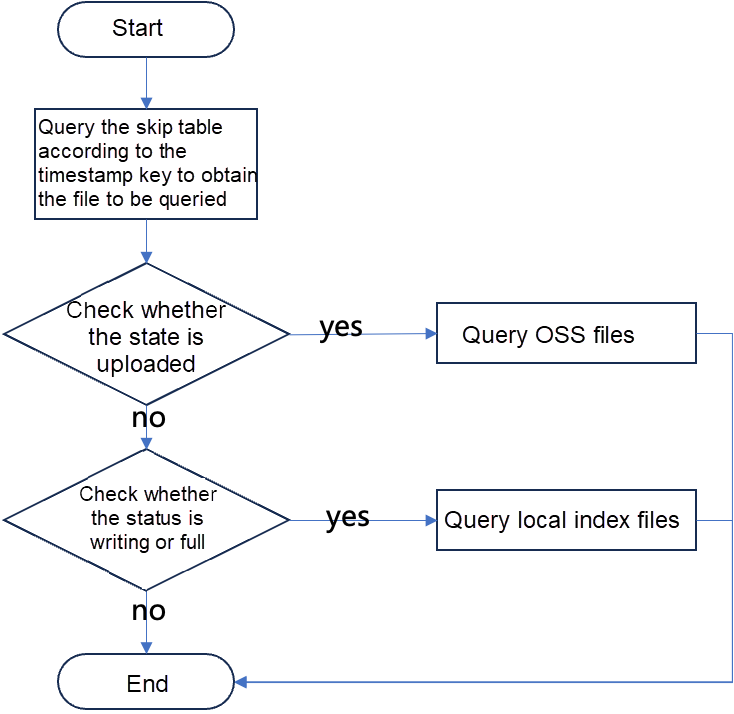

2. The index query thread supports querying index files in different states. The specific query policies are as follows:

3. The backend scheduled task thread is mainly responsible for compacting files that are full but still in the writing state. Before compacting a file, the thread acquires the file's read-write lock to prevent other threads from accessing the file concurrently. After compaction, the file is in the compacted state. The thread then uploads the compacted file to OSS, where it becomes an OSS file, and the file state switches to uploaded. The thread releases the file's read-write lock during the upload.

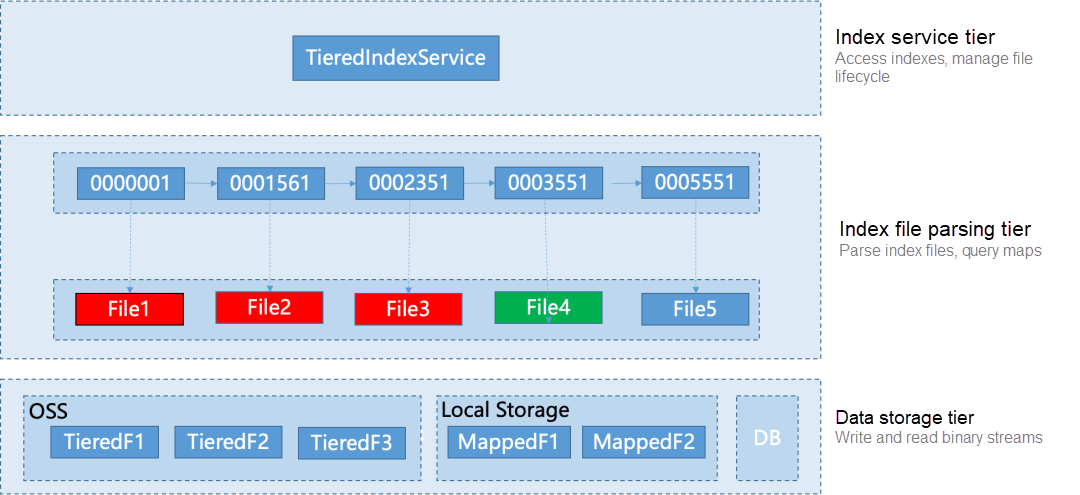
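The cooperation of the three threads can be sketched in one class. This is a simplified model under stated assumptions: strings stand in for serialized index items, a list stands in for OSS, and `entries.sort` stands in for the real format conversion. `TieredIndexSketch` is a hypothetical name. The write path caches entries and writes them in batches to the tail file, rolling over to a new file when the tail is full. Queries scan files under their read locks. The background task compacts full files under the write lock and uploads them after releasing it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedDeque;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical model: strings stand in for index items, the "uploaded" list for OSS.
public class TieredIndexSketch {
    public enum State { UNSEALED, COMPACTED, UPLOADED }

    public static final class IndexFile {
        public final List<String> entries = new ArrayList<>();
        public final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();
        public volatile State state = State.UNSEALED;
    }

    private final int fileCapacity;
    private final int batchSize;
    private final List<String> pending = new ArrayList<>(); // in-memory write cache
    public final ConcurrentLinkedDeque<IndexFile> files = new ConcurrentLinkedDeque<>();
    public final List<String> uploaded = new ArrayList<>();

    public TieredIndexSketch(int fileCapacity, int batchSize) {
        this.fileCapacity = fileCapacity;
        this.batchSize = batchSize;
        files.addLast(new IndexFile());
    }

    /** Write thread: cache entries in memory and write them in batches. */
    public synchronized void write(String entry) {
        pending.add(entry);
        if (pending.size() >= batchSize) {
            flush();
        }
    }

    /** Appends cached entries to the tail file, rolling over to a new file when it is full. */
    public synchronized void flush() {
        for (String entry : pending) {
            IndexFile tail = files.peekLast();
            if (tail.entries.size() >= fileCapacity) {
                tail = new IndexFile();
                files.addLast(tail);
            }
            tail.lock.writeLock().lock();
            try {
                tail.entries.add(entry);
            } finally {
                tail.lock.writeLock().unlock();
            }
        }
        pending.clear();
    }

    /** Query thread: scans each local file under its read lock (cached entries are not yet visible). */
    public int count(String entry) {
        int n = 0;
        for (IndexFile f : files) {
            f.lock.readLock().lock();
            try {
                for (String e : f.entries) {
                    if (e.equals(entry)) n++;
                }
            } finally {
                f.lock.readLock().unlock();
            }
        }
        return n;
    }

    /** Background task: compact full files under the lock, then upload with the lock released. */
    public void runBackgroundOnce() {
        for (IndexFile f : files) {
            if (f == files.peekLast() || f.state != State.UNSEALED) {
                continue; // the tail file is still being written
            }
            f.lock.writeLock().lock();
            try {
                f.entries.sort(null); // stand-in for rearranging chains into arrays
                f.state = State.COMPACTED;
            } finally {
                f.lock.writeLock().unlock();
            }
            synchronized (uploaded) { // the file no longer changes; the upload does not block queries
                uploaded.addAll(f.entries);
            }
            f.state = State.UPLOADED;
        }
    }

    public static void main(String[] args) {
        TieredIndexSketch index = new TieredIndexSketch(2, 2);
        for (int i = 0; i < 5; i++) index.write("key-" + i);
        index.flush();
        index.runBackgroundOnce();
        System.out.println(index.files.size() + " files, " + index.uploaded.size() + " uploaded"); // 3 files, 4 uploaded
    }
}
```

Releasing the lock before the upload matters because OSS I/O is slow. With this design, queries on a file are blocked only during the short compaction step, not for the whole transfer.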

To improve the scalability of the system and make unit testing easier, the index service uses a tiered design. It consists of three tiers from top to bottom: the index service tier, the index file parsing tier, and the data storage tier. Each tier has its own responsibilities. The tiers are decoupled, and each upper tier depends only on the services provided by the tier below it.

• Index service tier: This tier provides message index services for RocketMQ. It is responsible for storing and querying message indexes and managing the lifecycle of index files, including creating, compacting, uploading, and destroying files.

• Index file parsing tier: This tier parses the format of a single index file in each of its states and provides KV query and storage services for that file. Specifically, it reads the data in an index file and parses it into a form that the upper tier can call.

• Data storage tier: This tier writes and reads binary streams and supports different storage backends, including OSS, local disk, and databases. When writing, it stores data in a local disk file, an OSS object, or a database. When reading, it fetches data from the local disk or OSS and returns it to the caller as a binary stream.
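The tiers can be expressed as three small types, each depending only on the one below it. All names in this sketch are hypothetical. The binary encoding is an assumption chosen for brevity: an entry count, followed by key length, UTF-8 key, and 8-byte value for each entry.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

// Three decoupled tiers; every name here is hypothetical. Each tier depends only on the one below.
public class TieredIndexLayers {
    /** Data storage tier: binary streams only; could be backed by local disk, OSS, or a database. */
    public interface BlobStore {
        void write(String path, byte[] data);
        byte[] read(String path);
    }

    /** In-memory backend, handy for unit-testing the upper tiers without OSS. */
    public static final class InMemoryBlobStore implements BlobStore {
        private final Map<String, byte[]> blobs = new HashMap<>();

        public void write(String path, byte[] data) {
            blobs.put(path, data.clone());
        }

        public byte[] read(String path) {
            byte[] data = blobs.get(path);
            if (data == null) throw new IllegalArgumentException("no such blob: " + path);
            return data.clone();
        }
    }

    /** Index file parsing tier: KV view of a single file, encoded through the storage tier. */
    public static final class IndexFileCodec {
        private final BlobStore store;

        public IndexFileCodec(BlobStore store) { this.store = store; }

        public void save(String file, Map<String, Long> kv) {
            int size = 4;
            for (String key : kv.keySet()) size += 4 + key.getBytes(StandardCharsets.UTF_8).length + 8;
            ByteBuffer buf = ByteBuffer.allocate(size);
            buf.putInt(kv.size());
            for (Map.Entry<String, Long> e : kv.entrySet()) {
                byte[] key = e.getKey().getBytes(StandardCharsets.UTF_8);
                buf.putInt(key.length).put(key).putLong(e.getValue());
            }
            store.write(file, buf.array());
        }

        public Map<String, Long> load(String file) {
            ByteBuffer buf = ByteBuffer.wrap(store.read(file));
            Map<String, Long> kv = new HashMap<>();
            for (int n = buf.getInt(); n > 0; n--) {
                byte[] key = new byte[buf.getInt()];
                buf.get(key);
                kv.put(new String(key, StandardCharsets.UTF_8), buf.getLong());
            }
            return kv;
        }
    }

    /** Index service tier: message-level API that never sees the storage backend. */
    public static final class IndexService {
        private final IndexFileCodec codec;

        public IndexService(IndexFileCodec codec) { this.codec = codec; }

        public void putAll(String file, Map<String, Long> keyToOffset) { codec.save(file, keyToOffset); }

        public Long query(String file, String key) { return codec.load(file).get(key); }
    }

    public static void main(String[] args) {
        IndexService service = new IndexService(new IndexFileCodec(new InMemoryBlobStore()));
        service.putAll("index-0", Map.of("order-1", 42L, "order-2", 7L));
        System.out.println(service.query("index-0", "order-1")); // 42
    }
}
```

The service tier sees only the codec, and the codec sees only the `BlobStore` interface. A unit test can therefore swap in the in-memory backend instead of OSS.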

With this tiered design, the index service is easier to scale, upgrade, and maintain. The clear separation of responsibilities between the decoupled tiers also makes unit testing straightforward.

Index files in different states are managed and maintained through a skip list. When the system fails, the index files in each state must be recovered. To support this, index files are placed in different folders according to their state. The state of each file is then managed and recorded by the name of the folder that contains it.
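The following minimal sketch shows folder-based state tracking. It assumes one sub-folder per state, with hypothetical names (`unsealed`, `compacted`, `uploaded`). A state change is an atomic move between folders, and recovery lists each folder. If a crash ever left the same file name in two folders, this sketch keeps the later state. That tie-break is an assumption and does not come from the article.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Stream;

// One folder per state (folder names are hypothetical); a file's state is the folder it lives in.
public class IndexRecovery {
    public enum State { UNSEALED, COMPACTED, UPLOADED }

    static Path folder(Path baseDir, State state) {
        return baseDir.resolve(state.name().toLowerCase(Locale.ROOT));
    }

    /** Records a state change by atomically moving the file into the next state's folder. */
    public static void transition(Path baseDir, String fileName, State from, State to) throws IOException {
        Files.createDirectories(folder(baseDir, to));
        Files.move(folder(baseDir, from).resolve(fileName), folder(baseDir, to).resolve(fileName),
                StandardCopyOption.ATOMIC_MOVE);
    }

    /** Rebuilds file name -> state after a restart by listing each state's folder. */
    public static Map<String, State> recover(Path baseDir) throws IOException {
        Map<String, State> states = new TreeMap<>();
        for (State s : State.values()) { // later states overwrite earlier ones if a name appears twice
            Path dir = folder(baseDir, s);
            if (!Files.isDirectory(dir)) continue;
            try (Stream<Path> entries = Files.list(dir)) {
                entries.filter(Files::isRegularFile)
                       .forEach(p -> states.put(p.getFileName().toString(), s));
            }
        }
        return states;
    }

    public static void main(String[] args) throws IOException {
        Path base = Files.createTempDirectory("index-store");
        Files.createDirectories(folder(base, State.UNSEALED));
        Files.createFile(folder(base, State.UNSEALED).resolve("index-0"));
        Files.createFile(folder(base, State.UNSEALED).resolve("index-1"));
        transition(base, "index-0", State.UNSEALED, State.COMPACTED);
        System.out.println(recover(base)); // {index-0=COMPACTED, index-1=UNSEALED}
    }
}
```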

When recovering the system, we adopted the following process design:

RocksDB is a high-performance persistent KV storage engine derived from Google's LevelDB. It uses the Log-Structured Merge-tree (LSM-tree) as its basic data storage structure. When data is written to RocksDB, it is recorded in a Write-Ahead Log (WAL) file on disk and inserted into a MemTable in memory.

Whenever the MemTable reaches a preset size, it becomes immutable, and a new MemTable and WAL file are allocated for subsequent writes. The immutable MemTable is then flushed to disk as an SSTable, and multiple entries for the same key are merged during the flush. The LSM-tree has multiple levels, each consisting of multiple SSTables. Newly flushed SSTables are placed in level 0 (L0), and the SSTables in deeper levels are produced by asynchronous compaction.

The total size of SSTables in each level is controlled by configuration parameters. When the amount of data in level L exceeds its preset size, the SSTables in level L are merged with the overlapping SSTables in level L+1. Repeating this process keeps the data sorted and limits the number of files a read has to check, which improves read performance. However, compaction reads and rewrites existing data, which causes additional read and write amplification.
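The toy leveled LSM-tree below illustrates the flush-and-compact flow. It flushes a full MemTable into L0 and compacts L0 into L1 when L0 holds too many tables. It also pushes a level into the next one when the level exceeds its target size (here, 10 times the previous level). The sketch is deliberately simplified and does not reflect how RocksDB is implemented: there is no WAL, and a whole level is merged rather than only the overlapping SSTables.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.Iterator;
import java.util.List;
import java.util.SortedMap;
import java.util.TreeMap;

// Toy leveled LSM-tree for illustration only (not RocksDB's code): no WAL, no key-range
// partitioning, and a whole level is merged at once instead of only overlapping SSTables.
public class ToyLsm {
    private final int memtableLimit;     // entries before the MemTable is flushed
    private final int l0TableLimit;      // L0 tables before L0 is compacted into L1
    private final long levelBaseEntries; // L1 capacity; each deeper level is 10x larger
    private TreeMap<String, String> memtable = new TreeMap<>();
    private final Deque<SortedMap<String, String>> l0 = new ArrayDeque<>(); // newest first
    private final List<TreeMap<String, String>> levels = new ArrayList<>(); // levels.get(0) is L1

    public ToyLsm(int memtableLimit, int l0TableLimit, long levelBaseEntries) {
        this.memtableLimit = memtableLimit;
        this.l0TableLimit = l0TableLimit;
        this.levelBaseEntries = levelBaseEntries;
    }

    public void put(String key, String value) {
        memtable.put(key, value);
        if (memtable.size() < memtableLimit) return;
        l0.addFirst(Collections.unmodifiableSortedMap(memtable)); // MemTable becomes an immutable L0 table
        memtable = new TreeMap<>();
        if (l0.size() > l0TableLimit) {
            TreeMap<String, String> l1 = level(1);
            for (Iterator<SortedMap<String, String>> it = l0.descendingIterator(); it.hasNext(); ) {
                l1.putAll(it.next()); // oldest first, so newer values overwrite older ones
            }
            l0.clear();
            compact(1);
        }
    }

    private TreeMap<String, String> level(int n) {
        while (levels.size() < n) levels.add(new TreeMap<>());
        return levels.get(n - 1);
    }

    /** If level n exceeds its target size, merge it into level n+1 (level n holds newer data). */
    private void compact(int n) {
        long capacity = levelBaseEntries;
        for (int i = 1; i < n; i++) capacity *= 10;
        TreeMap<String, String> current = level(n);
        if (current.size() <= capacity) return;
        level(n + 1).putAll(current); // every moved entry is rewritten: write amplification
        current.clear();
        compact(n + 1);
    }

    /** Newest data wins: MemTable, then L0 newest first, then L1, L2, ... */
    public String get(String key) {
        if (memtable.containsKey(key)) return memtable.get(key);
        for (SortedMap<String, String> table : l0) {
            if (table.containsKey(key)) return table.get(key);
        }
        for (TreeMap<String, String> level : levels) {
            if (level.containsKey(key)) return level.get(key);
        }
        return null;
    }

    public static void main(String[] args) {
        ToyLsm db = new ToyLsm(2, 2, 4);
        for (int i = 0; i < 20; i++) db.put("k" + (i % 5), "v" + i);
        System.out.println(db.get("k4")); // v19
    }
}
```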

InnoDB is the transactional storage engine of MySQL, offering high performance, high reliability, and high concurrency. It is built on B+ trees, and its data files are themselves index files. To prevent data loss on a crash, InnoDB records writes synchronously in the redo log. Because the redo log is written sequentially, this is efficient. Changes are first applied to pages in the in-memory cache and recorded in the redo log, and the modified pages are later flushed to the B+ tree on disk asynchronously. As the number of records grows, the B+ tree becomes deeper, so there is a practical limit on the amount of data a single table can index efficiently. For example, performance can degrade significantly once a single table exceeds hundreds of millions of records. In addition, splitting and merging B+ tree leaf nodes adds read and write overhead.

RocketMQ involves a large number of write requests, a small number of read requests, and zero update requests. It is a storage system where data is approximately ordered by time. Therefore, RocketMQ can separate cold and hot storage in a simple and efficient manner based on time. Asynchronous file format conversion is also supported to reduce the overall system time overhead.

The current index design is simple and reliable, but it still has some shortcomings. For instance, a query for messages by key accepts a maxCount parameter that limits the number of results. Different index files are queried concurrently, so the current implementation may query all index files and only then aggregate the results to check whether maxCount has been reached.

When there are many index files, these extra queries add unnecessary time overhead. A reasonable solution is a global counter, shared by the query threads, that stops further queries on index files once maxCount is reached. Because multiple threads access this counter, it must be made thread-safe.
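One way to make such a counter thread-safe is an `AtomicInteger` that each task uses to claim a result slot before it adds a result. The sketch below is illustrative. Each inner list stands in for the offsets that one index file holds for the key, and `BoundedKeyQuery` is a hypothetical name. A task skips its file entirely once the limit is met, and `getAndIncrement` guarantees that no more than maxCount results are collected.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Concurrent per-file queries sharing one thread-safe counter so the search stops at maxCount.
// Each inner list stands in for the offsets that one index file holds for the queried key.
public class BoundedKeyQuery {
    public static List<Long> query(List<List<Long>> files, int maxCount, int threads)
            throws InterruptedException {
        AtomicInteger claimed = new AtomicInteger();
        Queue<Long> results = new ConcurrentLinkedQueue<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            for (List<Long> file : files) {
                pool.execute(() -> {
                    if (claimed.get() >= maxCount) {
                        return; // limit already reached: skip this file entirely
                    }
                    for (long offset : file) {
                        // Claim a result slot atomically so concurrent tasks never exceed maxCount.
                        if (claimed.getAndIncrement() >= maxCount) {
                            return;
                        }
                        results.add(offset);
                    }
                });
            }
        } finally {
            pool.shutdown();
        }
        pool.awaitTermination(1, TimeUnit.MINUTES);
        return new ArrayList<>(results);
    }

    public static void main(String[] args) throws InterruptedException {
        List<List<Long>> files = List.of(List.of(1L, 2L, 3L), List.of(4L, 5L), List.of(6L));
        System.out.println(query(files, 4, 3).size()); // 4
    }
}
```

Which four offsets are returned depends on thread scheduling, but the count never exceeds maxCount.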

The tiered storage index in RocketMQ provides generic KV query and storage services, so IndexItems can be redesigned and the index can be migrated to other systems. To provide index services for another system, you only need to add a new class that inherits from the index item class, override the relevant methods, and add custom fields.
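The extension pattern might look like the following sketch. `IndexItem` and `TenantIndexItem` are hypothetical classes, not the actual tiered storage types. The subclass adds a custom field and overrides the size and serialization methods, while the base fields keep their original layout.

```java
import java.nio.ByteBuffer;

// Extending a generic index item for another system. IndexItem and TenantIndexItem are
// hypothetical classes, not the actual RocketMQ types.
public class IndexItemExtension {
    /** Base item with the fields a generic KV index needs. */
    public static class IndexItem {
        private final int keyHash;
        private final long offset;

        public IndexItem(int keyHash, long offset) {
            this.keyHash = keyHash;
            this.offset = offset;
        }

        public int keyHash() { return keyHash; }

        public long offset() { return offset; }

        /** Serialized size in bytes; subclasses add the size of their own fields. */
        public int byteSize() { return Integer.BYTES + Long.BYTES; }

        public void writeTo(ByteBuffer buf) { buf.putInt(keyHash).putLong(offset); }
    }

    /** Item for a hypothetical multi-tenant system: one custom field plus overridden methods. */
    public static class TenantIndexItem extends IndexItem {
        private final int tenantId;

        public TenantIndexItem(int keyHash, long offset, int tenantId) {
            super(keyHash, offset);
            this.tenantId = tenantId;
        }

        public int tenantId() { return tenantId; }

        @Override
        public int byteSize() { return super.byteSize() + Integer.BYTES; }

        @Override
        public void writeTo(ByteBuffer buf) {
            super.writeTo(buf);   // base fields keep their original layout
            buf.putInt(tenantId); // custom field appended after them
        }

        public static TenantIndexItem readFrom(ByteBuffer buf) {
            int keyHash = buf.getInt();
            long offset = buf.getLong();
            return new TenantIndexItem(keyHash, offset, buf.getInt());
        }
    }

    public static void main(String[] args) {
        TenantIndexItem item = new TenantIndexItem(7, 1024L, 3);
        ByteBuffer buf = ByteBuffer.allocate(item.byteSize());
        item.writeTo(buf);
        buf.flip();
        TenantIndexItem copy = TenantIndexItem.readFrom(buf);
        System.out.println(copy.offset() + " " + copy.tenantId()); // 1024 3
    }
}
```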

