By Yuan Xiaodong, Cheng Junjie

With the popularization and development of mobile Internet and cloud computing technologies across various industries, big data computing frameworks such as Flink and Spark have become popular. These frameworks adopt a centralized Master-Slave architecture and come with heavy dependencies and complex deployments. Each task therefore carries a large overhead and cost.

RocketMQ Streams focuses on building a lightweight computing engine. Apart from the message queue, it has no other dependencies. It is heavily optimized for filtering scenarios, improving performance by 3 to 5 times and saving 50% to 80% of resources.

RocketMQ Streams is suitable for scenarios of large data volume → high filtering → light-window computing. It focuses on light resources and high performance, which gives it a strong advantage in resource-sensitive scenarios: it can be deployed with as little as 1 core and 1 GB of memory. Recommended application scenarios include security, risk control, edge computing, and message queue stream computing.

RocketMQ Streams is compatible with the SQL, UDF, UDTF, and UDAF of Blink (the internal version of Flink), so most Blink tasks can be migrated to RocketMQ Streams. A version converged with Flink is planned, in which RocketMQ Streams tasks can be released as Flink tasks. Users will then enjoy the high performance and light resource usage of RocketMQ Streams while operating and managing these tasks together with their existing Flink tasks.

This section provides an overall introduction to RocketMQ Streams from the following aspects: basic introduction, design ideas, and features.

RocketMQ Streams is a library (Lib package) that is integrated into the business application and runs as soon as it starts. It has SQL engine capabilities and is compatible with Blink SQL syntax and Blink UDF/UDTF/UDAF. RocketMQ Streams also includes an ETL engine that performs ETL, filtering, and transfer of data without coding. It is built on a data development SDK that provides a large number of ready-to-use components, such as source, sink, script, filter, lease, scheduler, and configurable.

Based on the preceding implementation ideas, RocketMQ Streams has the following features:

It has light dependencies and can be deployed with as little as 1 core and 1 GB of memory. In a test environment, you can run it with just the JAR package by writing a main method. In a production environment, it depends at most on a message queue and storage (storage is optional and is mainly used for fault tolerance during shard switching).

It implements a high-filtering optimizer, including pre-fingerprint filtering, automatic merging of same-origin rules, Hyperscan acceleration, and expression fingerprints. This improves performance by 3 to 5 times and saves more than 50% of resources compared with before.
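To make the idea of fingerprint filtering more concrete, here is a minimal Java sketch. It is not the RocketMQ Streams implementation, and the names `FingerprintFilter`, `test`, and `message` are hypothetical. The assumption is that a rule reads only a few fields of a message, so the values of those fields can be joined into a fingerprint; once a fingerprint has been evaluated, later messages with the same fingerprint reuse the cached result instead of running the expensive expression (for example, a regular expression) again.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;
import java.util.regex.Pattern;

// Hypothetical sketch of fingerprint-based result caching for filter rules.
// Not the RocketMQ Streams implementation; all names are illustrative only.
class FingerprintFilter {
    private final List<String> fields;                  // fields the rule actually reads
    private final Predicate<Map<String, String>> rule;  // the expensive rule, e.g. a regex
    private final Map<String, Boolean> cache = new HashMap<>(); // fingerprint -> rule result
    private int ruleEvaluations = 0;                    // how many times the rule really ran

    FingerprintFilter(List<String> fields, Predicate<Map<String, String>> rule) {
        this.fields = fields;
        this.rule = rule;
    }

    /** Evaluates the rule once per distinct fingerprint and reuses the cached result afterwards. */
    boolean test(Map<String, String> message) {
        StringBuilder fingerprint = new StringBuilder();
        for (String field : fields) {
            // A separator character keeps ("ab","c") and ("a","bc") from colliding.
            fingerprint.append(message.get(field)).append('\u0001');
        }
        String key = fingerprint.toString();
        Boolean cached = cache.get(key);
        if (cached != null) {
            return cached;
        }
        ruleEvaluations++;
        boolean result = rule.test(message);
        cache.put(key, result);
        return result;
    }

    int ruleEvaluations() {
        return ruleEvaluations;
    }

    /** Small helper that builds a message from key/value pairs. */
    static Map<String, String> message(String... keyValues) {
        Map<String, String> m = new HashMap<>();
        for (int i = 0; i + 1 < keyValues.length; i += 2) {
            m.put(keyValues[i], keyValues[i + 1]);
        }
        return m;
    }

    public static void main(String[] args) {
        Pattern suspicious = Pattern.compile(".*powershell.*-enc.*");
        FingerprintFilter filter = new FingerprintFilter(
            Arrays.asList("cmdline"),
            m -> suspicious.matcher(m.get("cmdline")).matches());
        boolean first = filter.test(message("cmdline", "powershell -enc abc", "host", "h1"));
        boolean second = filter.test(message("cmdline", "powershell -enc abc", "host", "h2"));
        System.out.println(first + " " + second + " " + filter.ruleEvaluations()); // true true 1
    }
}
```

The second message differs only in `host`, which the rule does not read, so it hits the cache. A production version would need a bounded cache (for example, LRU eviction) so that high-cardinality fields do not exhaust memory.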

Data is stored in highly compressed memory without the overhead of Java object headers and alignment, so the stored data stays close to its original size. Computation runs purely in memory to maximize performance. In addition, multi-threaded concurrent loading from MySQL speeds up the loading of dimension tables.
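The following minimal sketch illustrates why packing values into one byte array avoids per-object overhead. It is an illustration under assumptions, not the RocketMQ Streams storage format, and `CompactStringTable` is a hypothetical name: each string value is stored as UTF-8 bytes in a single growable `byte[]`, and an `int[]` records where each value starts, so a large table needs two arrays instead of one `String` object (with its header and padding) per value.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical compact store: all values live in one byte[]; no Java object per value.
class CompactStringTable {
    private byte[] data = new byte[64];  // concatenated UTF-8 bytes of all values
    private int[] offsets = new int[16]; // offsets[i] = start of value i; offsets[size] = end of last value
    private int size = 0;                // number of stored values
    private int used = 0;                // bytes used in data

    /** Appends a value and returns its row index. */
    int add(String value) {
        byte[] bytes = value.getBytes(StandardCharsets.UTF_8);
        while (used + bytes.length > data.length) {
            data = Arrays.copyOf(data, data.length * 2); // grow the payload array
        }
        System.arraycopy(bytes, 0, data, used, bytes.length);
        if (size + 1 >= offsets.length) {
            offsets = Arrays.copyOf(offsets, offsets.length * 2); // keep room for offsets[size + 1]
        }
        offsets[size] = used;
        used += bytes.length;
        offsets[size + 1] = used;
        return size++;
    }

    /** Decodes value i on demand; only a temporary String for the caller is allocated. */
    String get(int i) {
        if (i < 0 || i >= size) {
            throw new IndexOutOfBoundsException("row " + i);
        }
        return new String(data, offsets[i], offsets[i + 1] - offsets[i], StandardCharsets.UTF_8);
    }

    int size() {
        return size;
    }

    /** Bytes used by the payload, which stays close to the raw size of the data. */
    int payloadBytes() {
        return used;
    }

    public static void main(String[] args) {
        CompactStringTable table = new CompactStringTable();
        int row = table.add("10.0.0.1");
        System.out.println(table.get(row) + " " + table.payloadBytes()); // 10.0.0.1 8
    }
}
```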

1) Sources can be extended on demand; RocketMQ, File, and Kafka sources are already implemented;

2) Sinks can be extended on demand; RocketMQ, File, Kafka, MySQL, and ES sinks are already implemented;

3) UDF/UDTF/UDAF can be extended according to the Blink specification;

4) A lighter UDF/UDTF extension mechanism is provided, with which functions can be added without any dependencies.

It provides exactly-once flexible windows, dual-stream join, statistics, window functions, and various conversion and filtering operations to meet the needs of various big data development scenarios, and it supports elastic fault tolerance.

RocketMQ Streams provides two external SDKs, the DSL SDK and the SQL SDK, and you can choose either as needed. The DSL SDK supports DSL semantics for real-time scenarios. The SQL SDK is compatible with the syntax of Blink SQL (Blink is the internal version of Flink), so most Blink SQL can run on RocketMQ Streams.

Next, let's introduce these two SDKs in detail.

1) JDK 1.8 or later;

2) Maven 3.2 or later.

To develop real-time tasks with the DSL SDK, first add the following dependency:

```xml
<dependency>
    <groupId>org.apache.rocketmq</groupId>
    <artifactId>rocketmq-streams-clients</artifactId>
    <version>1.0.0-SNAPSHOT</version>
</dependency>
```

After the preparation is completed, you can develop your real-time program.

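```java
DataStreamSource source = StreamBuilder.dataStream("namespace", "pipeline");

// Use an absolute path in practice: Java does not expand "~" to the home directory.
source.fromFile("~/admin/data/text.txt", false)
    .map(message -> message + "--")
    .toPrint(1)
    .start();
```

Here: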

1) namespace isolates businesses. Tasks of the same business can use the same namespace; tasks in the same namespace can run in the same process during task scheduling and can share some configurations;

2) pipelineName (the second argument, "pipeline", above) is the job name and distinguishes one job from another;

3) DataStreamSource creates the source, and the program then runs. The result is that "--" is appended to each original message and the message is printed.

RocketMQ Streams provides a wide range of operators, including:

1) Source operators: fromFile, fromRocketMQ, fromKafka, and the from operator for custom sources;

2) Sink operators: toFile, toRocketMQ, toKafka, toDB, toPrint, toES, and the to operator for custom sinks;

3) Action operators: Filter, Expression, Script, selectFields, Union, forEach, Split, Select, Join, Window, and more.

After completing development with the DSL SDK, run the following commands to build the JAR package and run it, or run the task's main method directly:

```shell
mvn -Prelease-all -DskipTests clean install -U
java -jar jarName mainClass &
```

To develop real-time tasks with the SQL SDK, add the following dependency:

```xml
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>rsqldb-clients</artifactId>
    <version>1.0.0-SNAPSHOT</version>
</dependency>
```

First, develop the business logic code, which can be saved as a file or passed in as text:

```sql
CREATE FUNCTION json_concat as 'xxx.xxx.JsonConcat';

CREATE TABLE `table_name` (
    `scan_time` VARCHAR,
    `file_name` VARCHAR,
    `cmdline` VARCHAR
) WITH (
    type='file',
    filePath='/tmp/file.txt',
    isJsonData='true',
    msgIsJsonArray='false'
);

-- data normalization
create view data_filter as
select
    *
from (
    select
        scan_time as logtime
        , lower(cmdline) as lower_cmdline
        , file_name as proc_name
    from
        table_name
) x
where
    (
        lower(proc_name) like '%.xxxxxx'
        or lower_cmdline like 'xxxxx%'
        or lower_cmdline like 'xxxxxxx%'
        or lower_cmdline like 'xxxx'
        or lower_cmdline like 'xxxxxx'
    )
;

CREATE TABLE `output` (
    `logtime` VARCHAR
    , `lower_cmdline` VARCHAR
    , `proc_name` VARCHAR
) WITH (
    type = 'print'
);

-- read from the data_filter view defined above
insert into output
select
    *
from
    data_filter
;
```

Here:

1) CREATE FUNCTION: introduces external functions to support business logic, including Flink functions and system functions;

2) CREATE TABLE: creates a source or sink;

3) CREATE VIEW: performs field conversion, splitting, and filtering;

4) INSERT INTO: writes data to the sink;

5) Functions: built-in functions and UDFs.

RocketMQ Streams supports three ways to extend SQL capabilities:

1) Use Blink UDF/UDTF/UDAF;

2) Use the RocketMQ Streams lightweight extension: implement a Java bean with a method named eval;

3) Use existing Java code directly: the function name in CREATE FUNCTION is the method name of the Java class.
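As a rough illustration of the second option, a lightweight function can be a plain Java class with no framework dependency. The sketch assumes, based only on the description above, that RocketMQ Streams invokes a public method named `eval`. The class name `ProcNameNormalizer`, the function name `normalize_proc`, and the registration statement in the comment are hypothetical, and the exact loading rules should be checked against the RocketMQ Streams documentation. In a real project the class would be `public` and live in its own file.

```java
import java.util.Locale;

// Hypothetical lightweight UDF: a plain class with no framework dependency.
// Assumed registration, based only on the description above (not verified):
//   CREATE FUNCTION normalize_proc AS '<package>.ProcNameNormalizer';
class ProcNameNormalizer {
    /** Lower-cases a process path and keeps only the file name, e.g. "/usr/bin/Python3" -> "python3". */
    public String eval(String path) {
        if (path == null) {
            return null; // SQL NULL in, NULL out
        }
        String lower = path.toLowerCase(Locale.ROOT);
        // Handle both Unix and Windows separators.
        int sep = Math.max(lower.lastIndexOf('/'), lower.lastIndexOf('\\'));
        return lower.substring(sep + 1);
    }

    public static void main(String[] args) {
        System.out.println(new ProcNameNormalizer().eval("/usr/bin/Python3")); // python3
    }
}
```

In the SQL from the previous example, such a function could then be called as `normalize_proc(file_name)` in the view, again assuming the registration works as sketched.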

You can download the latest RocketMQ Streams code and build it.

```shell
cd rsqldb/
mvn -Prelease-all -DskipTests clean install -U
cp rsqldb-runner/target/rocketmq-streams-sql-{version number}-distribution.tar.gz {deployment directory}
```

Decompress the tar.gz package and enter the directory:

```shell
tar -xvf rocketmq-streams-{version number}-distribution.tar.gz
cd rocketmq-streams-{version number}
```

The following is the directory structure:

1) bin: the command directory (start and stop scripts);

2) conf: the configuration directory (log configuration and application configuration files);

3) jobs: stores SQL files (up to two levels of subdirectories);

4) ext: stores extended UDF/UDTF/UDAF/Source/Sink;

5) lib: dependency packages;

6) log: log files.

```shell
# Specify the path of the SQL file to start a real-time task.
bin/start-sql.sh sql_file_path
```

If you want to run SQL in batches, put the SQL files into the jobs directory, which supports up to two levels of subdirectories, and then pass a subdirectory or a SQL file to the start script to run the tasks.

```shell
# Without arguments, all currently running tasks are stopped.
bin/stop.sh

# With a task name, all currently running tasks with that name are stopped.
bin/stop.sh sqlname
```

Currently, all run logs are stored in log/catalina.out.

Now that we have a basic understanding of RocketMQ Streams, let's look at its design ideas from two aspects: design objectives and design strategies. The design objectives are:

1) Few dependencies and simple deployment: a single instance with 1 core and 1 GB can be deployed, and it can be scaled out at will;

2) Build advantages in specific scenarios by focusing on large data volume → high filtering → light window computing, with full functional coverage of the required big data features: exactly-once semantics and flexible windows (tumbling, sliding, and session windows);

3) With low resource usage, achieve a breakthrough in high-filtering performance to create a performance advantage;

4) Be compatible with Blink SQL and UDF/UDTF/UDAF, making it easier for non-technical personnel to get started.

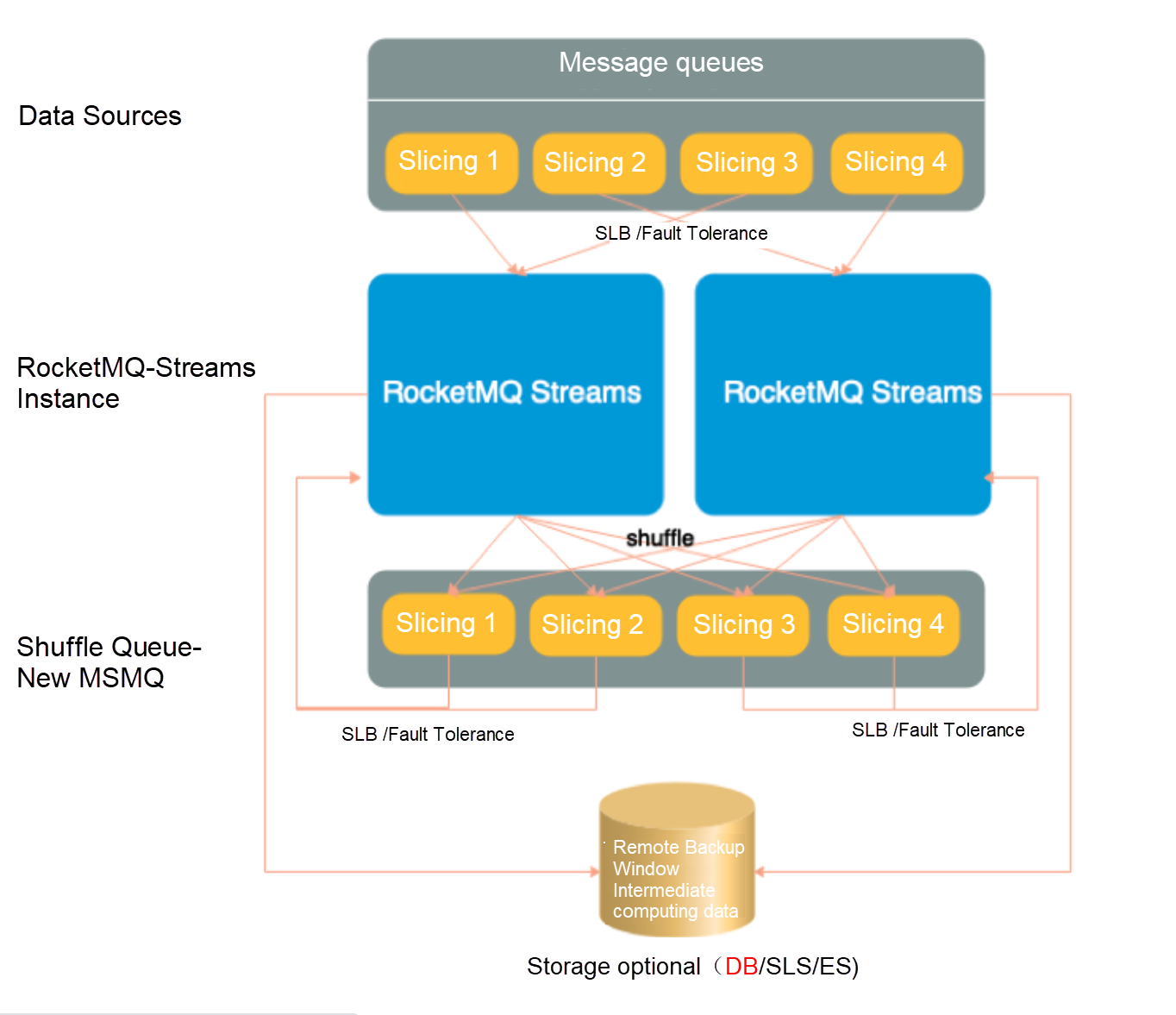

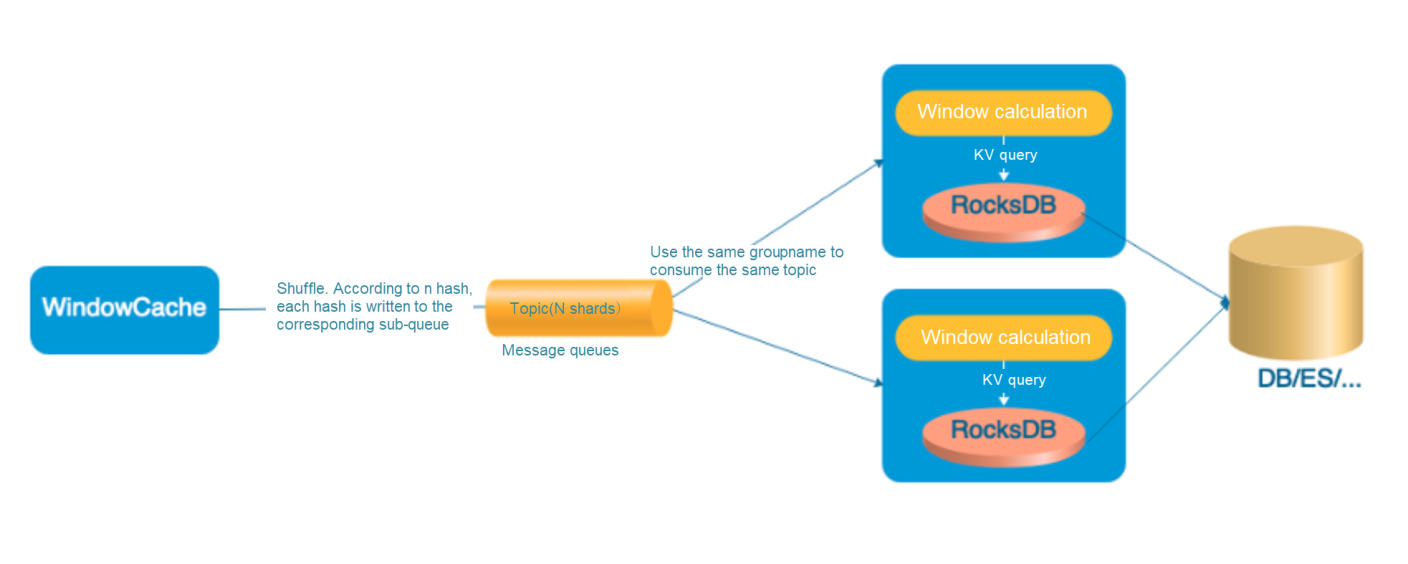

1) Adopt a shared-nothing distributed architecture that relies on the message queue for load balancing and fault tolerance. A single instance can run a task, and adding instances increases processing capacity. Concurrency depends on the number of shards;

2) Use message queue shards for shuffling, and use the message queue's load balancing for fault tolerance;

3) Use storage to back up state and implement exactly-once semantics. Use structured remote storage for fast startup, without waiting for local storage to recover;

4) Focus on building a filter optimizer: pre-fingerprint filtering, automatic merging of same-origin rules, Hyperscan acceleration, and expression fingerprints improve filtering performance.
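Using message queue shards for shuffling means that records with the same key must land in the same queue, so that one instance sees all of them. A minimal sketch of that routing rule follows. It assumes a simple hash-modulo partitioner, which is a common choice but is not confirmed as the one RocketMQ Streams uses, and `ShardRouter` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical key-based router: same key -> same queue, so a queue can act as a shuffle partition.
class ShardRouter {
    private final int queueCount;

    ShardRouter(int queueCount) {
        if (queueCount <= 0) {
            throw new IllegalArgumentException("queueCount must be positive");
        }
        this.queueCount = queueCount;
    }

    /** Picks the target queue for a group-by key; floorMod keeps negative hash codes in range. */
    int queueFor(String key) {
        return Math.floorMod(key.hashCode(), queueCount);
    }

    /** Splits a batch of keys into per-queue lists, as a shuffle stage would before writing to the MQ. */
    List<List<String>> partition(List<String> keys) {
        List<List<String>> queues = new ArrayList<>();
        for (int i = 0; i < queueCount; i++) {
            queues.add(new ArrayList<>());
        }
        for (String key : keys) {
            queues.get(queueFor(key)).add(key);
        }
        return queues;
    }

    public static void main(String[] args) {
        ShardRouter router = new ShardRouter(4);
        // Both "host-a" records end up in the same inner list.
        System.out.println(router.partition(Arrays.asList("host-a", "host-b", "host-a", "host-c")));
    }
}
```

Because each queue is consumed by only one instance at a time, the number of queues caps parallelism, which is why concurrency depends on the number of shards.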

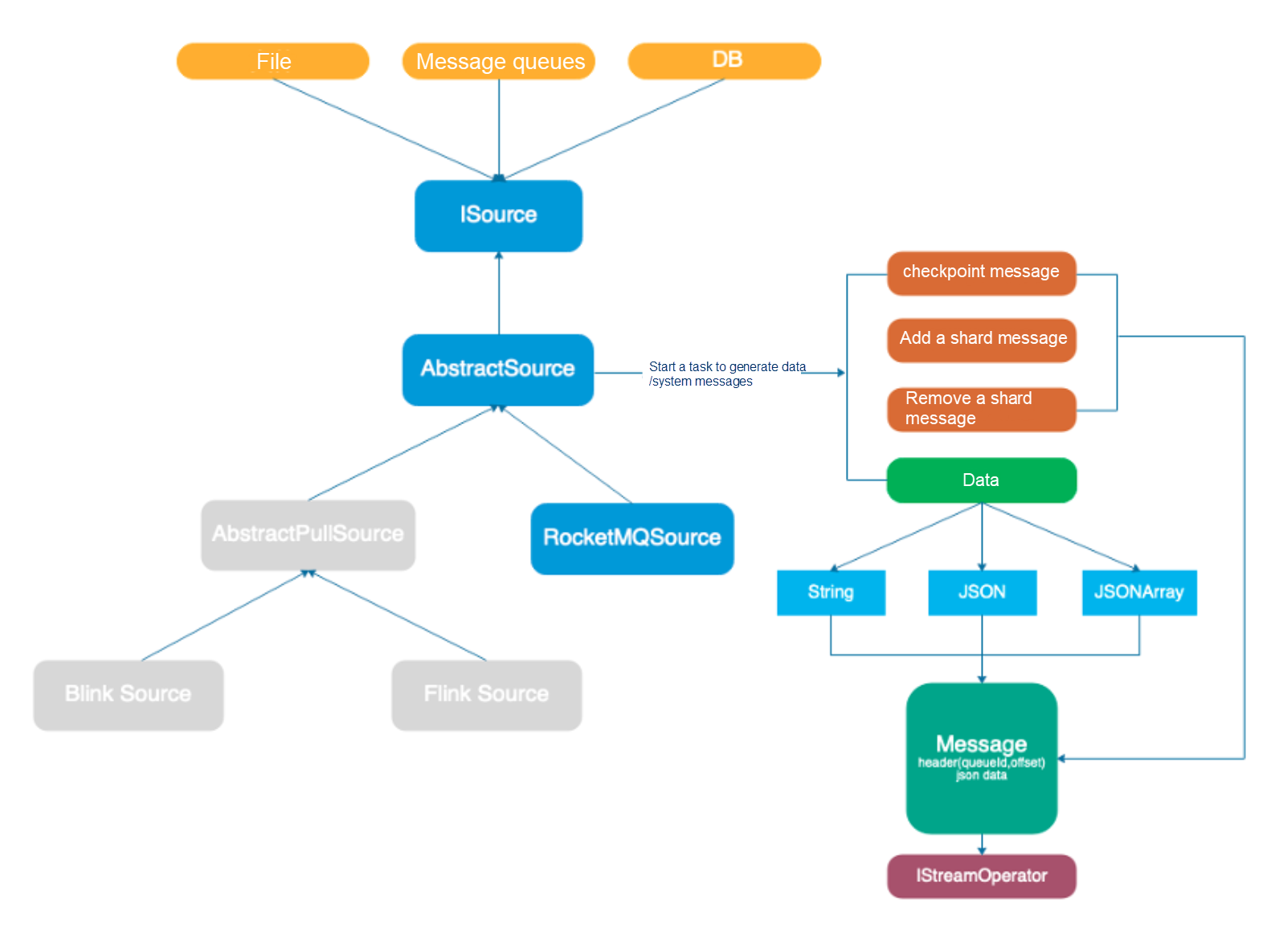

1) The source must provide at-least-once consumption semantics. The system achieves this with a checkpoint system message: before an offset is committed, a checkpoint message is sent to notify all operators to flush their memory;

2) Sources support automatic load balancing and fault tolerance across shards;

3) The data source uses the start method to start a consumer that fetches messages;

4) Each original message is encoded, packaged with an additional header as a Message, and delivered to downstream operators.
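The source behavior described above can be modeled in a few lines. This is a simplified sketch under stated assumptions, not the RocketMQ Streams source code: each consumed record is wrapped with a header carrying its queue ID and offset, and before an offset is committed, a checkpoint message is delivered to the same operators so that stateful ones flush first. `SketchSource`, `Message`, and `Operator` are hypothetical names, and the "commit" is only recorded in an event list.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical model of a source that wraps records and sends a checkpoint before committing offsets.
class SketchSource {
    /** A record plus the header fields described in the text: queue ID and offset. */
    static final class Message {
        final String queueId;
        final long offset;
        final String body;        // null for the checkpoint message
        final boolean checkpoint; // true for the system checkpoint message

        Message(String queueId, long offset, String body, boolean checkpoint) {
            this.queueId = queueId;
            this.offset = offset;
            this.body = body;
            this.checkpoint = checkpoint;
        }
    }

    /** Downstream operator; stateful ones are expected to flush when they see a checkpoint. */
    interface Operator {
        void process(Message m);
    }

    private final List<Operator> operators;
    private final List<String> events; // shared event log, used here only to show ordering

    SketchSource(List<Operator> operators, List<String> events) {
        this.operators = operators;
        this.events = events;
    }

    /** Wraps a raw record with its queue ID and offset and delivers it downstream. */
    void onRecord(String queueId, long offset, String raw) {
        deliver(new Message(queueId, offset, raw, false));
    }

    /** Delivers the checkpoint message first; only then is the offset "committed". */
    void commit(String queueId, long offset) {
        deliver(new Message(queueId, offset, null, true));
        events.add("commit " + queueId + "@" + offset); // stand-in for the real offset commit
    }

    private void deliver(Message m) {
        for (Operator op : operators) {
            op.process(m);
        }
    }

    public static void main(String[] args) {
        List<String> events = new ArrayList<>();
        List<String> buffer = new ArrayList<>();
        // A stateful operator that buffers message bodies and flushes them on a checkpoint.
        Operator buffering = m -> {
            if (m.checkpoint) {
                events.add("flush " + buffer);
                buffer.clear();
            } else {
                buffer.add(m.body);
            }
        };
        SketchSource source = new SketchSource(Collections.singletonList(buffering), events);
        source.onRecord("q0", 1, "a");
        source.onRecord("q0", 2, "b");
        source.commit("q0", 2);
        System.out.println(events); // [flush [a, b], commit q0@2]
    }
}
```

If the process crashes after the flush but before the commit, the records are consumed again, which is exactly the at-least-once guarantee; duplicates are then handled by the offset-based deduplication described in the exactly-once section.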

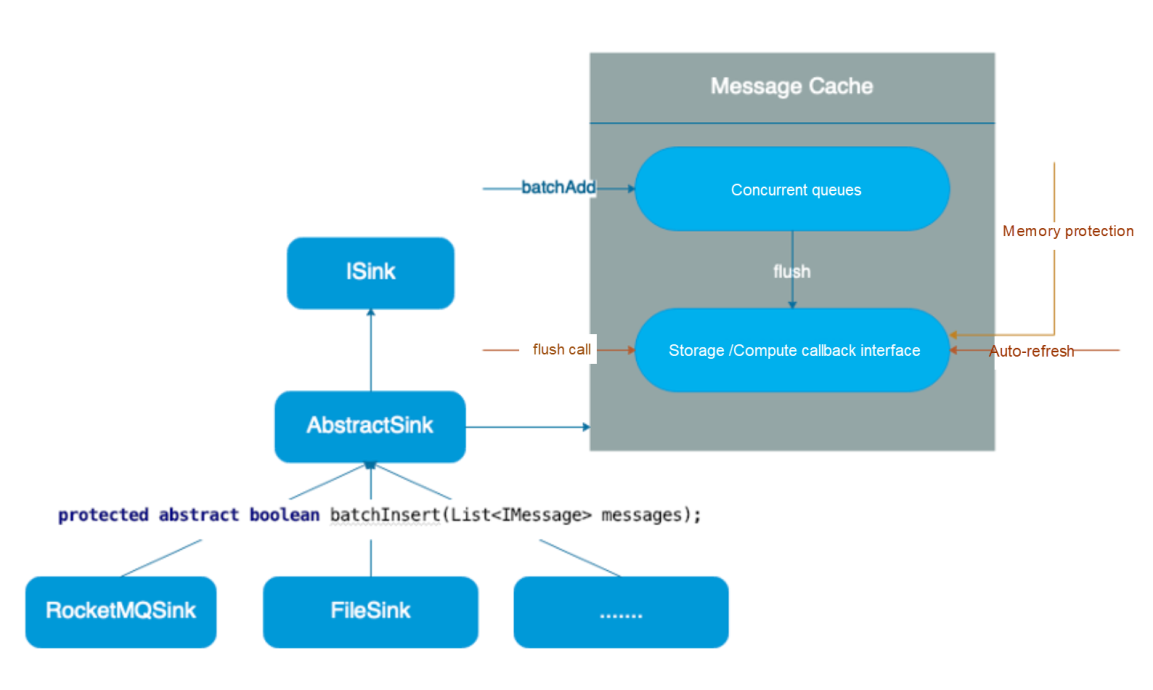

1) Sinks balance real-time performance and throughput;

2) To implement a sink, you only need to inherit the AbstractSink class and implement the batchInsert method. batchInsert writes a batch of data to storage; subclasses implement it by calling the storage interface, preferably the storage's batch interface, to improve throughput;

3) The conventional flow is message → cache → flush → storage. The system ensures that each batch written to storage does not exceed batchSize; a larger amount is split into multiple batches;

4) Each sink has a cache (one cache per shard). By default, data is written to the cache and then stored in batches to improve throughput;

5) Automatic flushing can be enabled: each shard then has a thread that periodically flushes cached data to storage to improve real-time performance. Implementation class: DataSourceAutoFlushTask;

6) You can also call flush to write cached data to storage;

7) The sink cache has memory protection: when the number of cached messages exceeds the batch size, a flush is forced to release memory.
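The sink flow in the list above can be sketched as follows. Only the names `AbstractSink` and `batchInsert` come from the text; this standalone `SketchBatchSink` class is a hypothetical simplification, does not extend the real `AbstractSink`, and omits per-shard caches and the auto-flush thread. It mirrors the described behavior: messages go into a cache, a cache holding more than `batchSize` messages is force-flushed, and `flush` writes batches of at most `batchSize`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical simplification of the described sink flow; not the RocketMQ Streams AbstractSink.
abstract class SketchBatchSink {
    private final int batchSize;
    private final List<String> cache = new ArrayList<>();

    SketchBatchSink(int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
    }

    /** Subclasses write one batch to storage, ideally through the storage's own batch API. */
    protected abstract void batchInsert(List<String> batch);

    /** message -> cache; more than batchSize cached messages forces a flush (memory protection). */
    synchronized void write(String message) {
        cache.add(message);
        if (cache.size() > batchSize) {
            flush();
        }
    }

    /** cache -> storage, split into batches of at most batchSize messages. */
    synchronized void flush() {
        for (int from = 0; from < cache.size(); from += batchSize) {
            int to = Math.min(from + batchSize, cache.size());
            batchInsert(new ArrayList<>(cache.subList(from, to)));
        }
        cache.clear();
    }

    public static void main(String[] args) {
        SketchBatchSink sink = new SketchBatchSink(2) {
            @Override
            protected void batchInsert(List<String> batch) {
                System.out.println("insert " + batch);
            }
        };
        for (String s : new String[] {"a", "b", "c"}) {
            sink.write(s); // the third write prints "insert [a, b]" and then "insert [c]"
        }
        sink.flush(); // cache is already empty, so nothing is printed
    }
}
```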

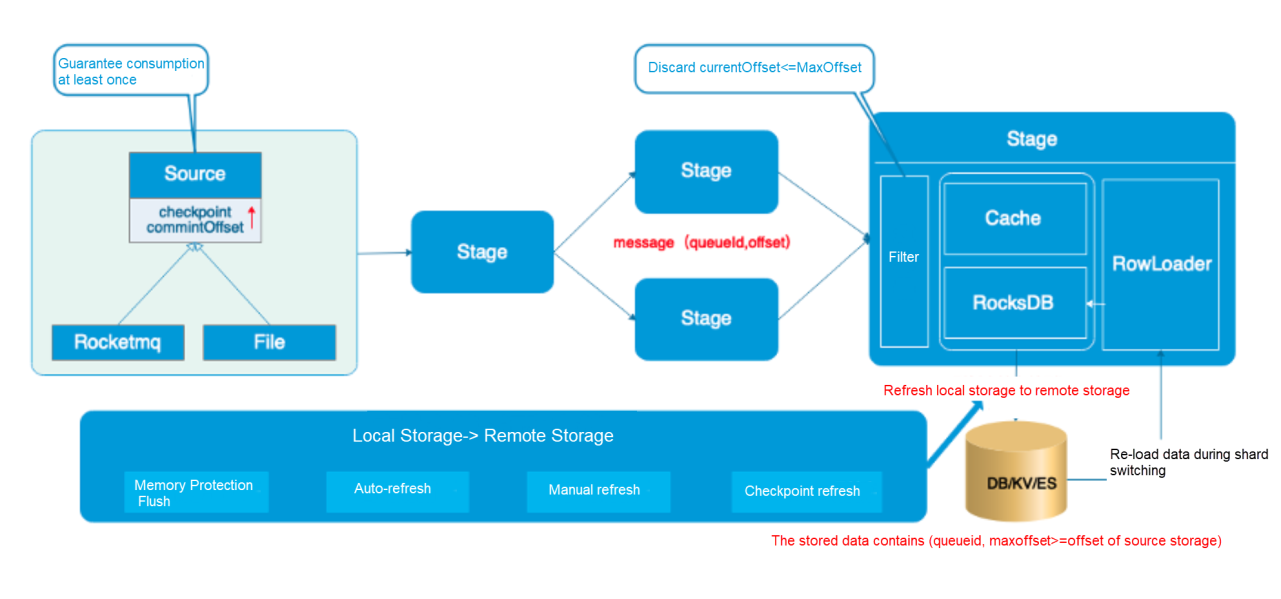

1) The source sends the checkpoint system message when it commits an offset. Components that receive the message save their data, which guarantees that each message is consumed at least once;

2) Each message has a message header that encapsulates queueId and offset;

3) When a component stores data, it also stores queueId and the maximum offset. Duplicate messages are deduplicated based on this maximum offset;

4) Memory protection: a checkpoint cycle may contain multiple flushes (triggered by message count) to keep memory usage under control.
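The deduplication rule in item 3 can be sketched as follows: for each queue, the component remembers the largest offset it has applied and skips any message whose offset is not larger. This is a minimal in-memory sketch under assumptions. In the text, the (queueId, maximum offset) pairs are stored together with the component's data; here a plain `HashMap` plus a `snapshot` copy stands in for that storage, and `OffsetDeduplicator` is a hypothetical name. It also assumes messages within one queue are processed in increasing offset order.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of max-offset deduplication; a HashMap stands in for the persisted state.
class OffsetDeduplicator {
    private final Map<String, Long> maxOffsets = new HashMap<>(); // queueId -> largest applied offset

    OffsetDeduplicator() {
    }

    /** Restores state saved at the last checkpoint, e.g. after a shard switch. */
    OffsetDeduplicator(Map<String, Long> restored) {
        maxOffsets.putAll(restored);
    }

    /** Returns true and records the offset if the message is new; false if it was already applied. */
    boolean accept(String queueId, long offset) {
        Long max = maxOffsets.get(queueId);
        if (max != null && offset <= max) {
            return false; // redelivered message, e.g. replayed after failover
        }
        maxOffsets.put(queueId, offset);
        return true;
    }

    /** Copy of the state to persist together with the component's data at checkpoint time. */
    Map<String, Long> snapshot() {
        return new HashMap<>(maxOffsets);
    }

    public static void main(String[] args) {
        OffsetDeduplicator before = new OffsetDeduplicator();
        before.accept("q0", 10);
        before.accept("q0", 11);
        // After a shard switch, the new owner restores the snapshot and the queue is replayed from offset 10.
        OffsetDeduplicator after = new OffsetDeduplicator(before.snapshot());
        System.out.println(after.accept("q0", 10) + " " + after.accept("q0", 11) + " " + after.accept("q0", 12));
        // prints: false false true
    }
}
```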

1) Tumbling, sliding, and session windows are supported, in both event time and natural time (the time when the message enters the operator);

2) Emit syntax is supported, so results can be updated every n intervals before or after the window fires. For example, for a one-hour window, the latest results can be seen every minute before the window fires; after it fires, the final results can still be updated every 10 minutes;

3) A high-performance mode and a high-reliability mode are supported. The high-performance mode does not rely on remote storage, but window data may be lost during shard switching;

4) Fast startup: there is no need to wait for local storage to recover. When an error or shard switch occurs, data is recovered asynchronously from remote storage, and computation accesses the remote storage directly in the meantime;

5) Scaling out and in is achieved through the message queue's load balancing. Each queue is a group, and a group is consumed by only one machine at any given moment;

6) Normal computation relies on local storage and delivers computing performance similar to Flink.
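To make the window types concrete, the sketch below computes which windows an event falls into for tumbling and sliding windows, using the common definition in which windows are aligned to multiples of the slide starting at time 0. This is a generic illustration, not RocketMQ Streams code; session windows and emit logic are omitted, and `WindowAssigner` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Generic window assignment for event times in milliseconds; hypothetical helper, not RocketMQ Streams code.
final class WindowAssigner {
    private WindowAssigner() {
    }

    /** Start times of all sliding windows [start, start + size) that contain eventTime, latest first. */
    static List<Long> slidingWindowStarts(long eventTime, long size, long slide) {
        if (size <= 0 || slide <= 0) {
            throw new IllegalArgumentException("size and slide must be positive");
        }
        List<Long> starts = new ArrayList<>();
        long lastStart = eventTime - Math.floorMod(eventTime, slide); // latest aligned start <= eventTime
        for (long start = lastStart; start > eventTime - size; start -= slide) {
            starts.add(start);
        }
        return starts;
    }

    /** A tumbling window is a sliding window whose slide equals its size, so exactly one window matches. */
    static long tumblingWindowStart(long eventTime, long size) {
        return slidingWindowStarts(eventTime, size, size).get(0);
    }

    public static void main(String[] args) {
        long t = 65_000L; // an event at 65 s
        System.out.println(tumblingWindowStart(t, 60_000L) + " " + slidingWindowStarts(t, 60_000L, 20_000L));
        // prints: 60000 [60000, 40000, 20000]
    }
}
```

With a 60 s window sliding every 20 s, each event belongs to size / slide = 3 windows, which is why sliding windows cost more state than tumbling windows of the same size.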

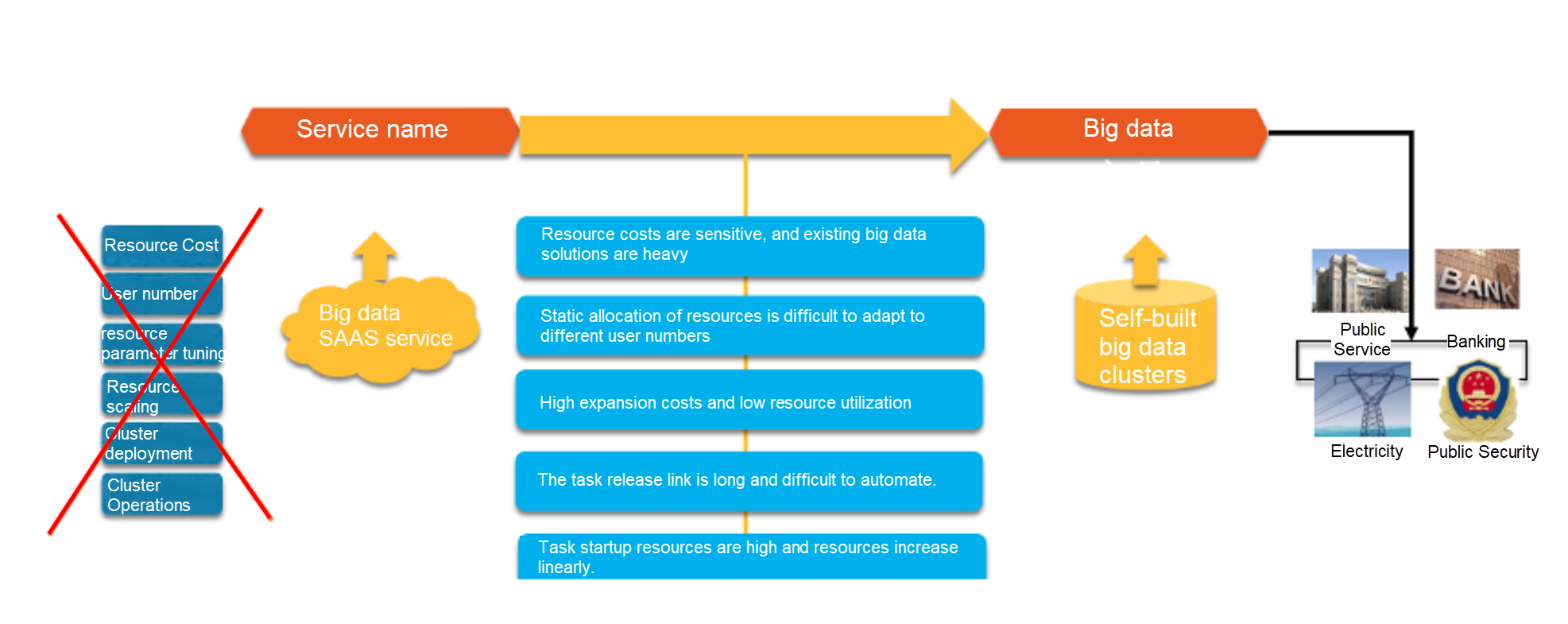

Moving from the public cloud to Apsara Stack raised new problems. Big data SaaS services are not an essential part of Apsara Stack, and their minimum deployment scale is relatively large, which greatly increases user costs and makes them hard to deliver. As a result, security capabilities could not be quickly synchronized to Apsara Stack.

1) Build a lightweight computing engine for security scenarios. Because security scenarios involve heavy filtering, optimize for high filtering first and perform the heavier statistics, window, and join operations afterwards. Since the filtering rate is high, lighter solutions are sufficient for statistics and joins;

2) Both SQL and the engine can be hot upgraded.

1) Rule coverage: the self-built engine covers 100% of rules (regular expressions, joins, and statistics);

2) Light resources: memory usage is 1/24 and CPU usage is 1/6 of the public cloud engine. Thanks to the filter optimizer, resource usage does not grow linearly with the number of rules, so new rules add no resource pressure, and the high-compression table supports tens of millions of intelligence entries;

3) SQL is released in a client/server deployment mode. The SQL engine supports hot release, so rules can be launched quickly, especially in network protection scenarios;

4) Performance optimization: dedicated optimization of core components keeps performance high, with each instance (2 GB, 4 cores, 41 rules) above 5,000 QPS.

1) Integrate with RocketMQ, remove DB dependencies, and integrate RocketMQ KV;

2) Co-deploy with RocketMQ to support local computing and use data locality to achieve high performance;

3) Create the best practices for edge computing

1) Support pull-mode consumption, with asynchronous checkpoint refreshing;

2) Be compatible with Blink/Flink connectors.

1) Add data access for files and syslog;

2) Support Grok parsing to handle common log formats;

3) Create best practices for log ETL.

1) Window multi-scene test to improve stability and optimize performance;

2) Supplement test cases, documents, and application scenarios.

This is the overall introduction to RocketMQ Streams. I hope it will be helpful and enlightening to you.
