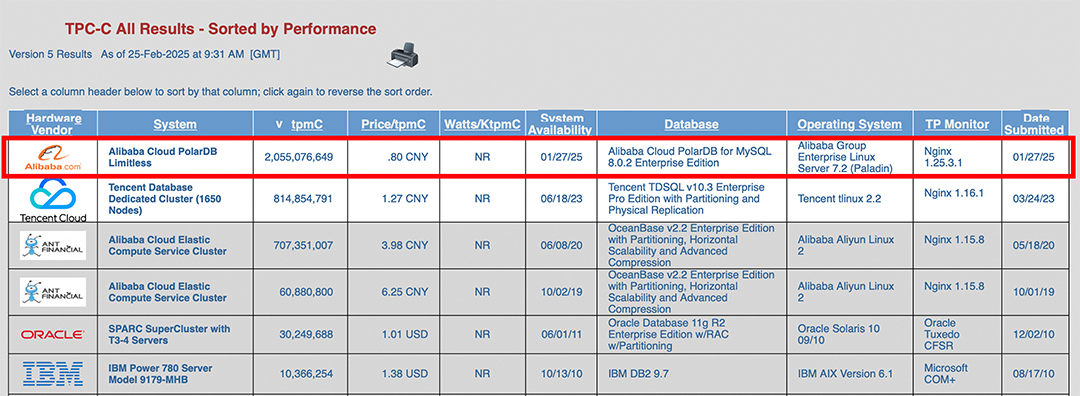

Recently, PolarDB topped the TPC-C benchmark list, exceeding the previous record by 2.5 times and setting a new TPC-C world record for both performance and cost-effectiveness: 2.055 billion transactions per minute (tpmC) at a unit cost of CNY 0.8 per tpmC.

Behind each seemingly simple number lies the relentless pursuit of database performance, cost-effectiveness, and stability by countless engineers. The pace of innovation in PolarDB has never stopped. A series of articles on "PolarDB's Technical Secrets of Topping TPC-C" is being released to tell the story behind the "Double First Place". Please stay tuned!

This is the fifth article in the series - Technical Secrets of PolarDB - Elastic Parallel Query.


TPC-C is a benchmark model issued by the Transaction Processing Performance Council (TPC) and designed specifically to evaluate OLTP (Online Transaction Processing) systems. It covers typical database processing paths such as inserts, deletes, updates, and queries to test the OLTP performance of a database. The final performance metric is tpmC (transactions per minute), which gives an intuitive measure of database performance.

We used PolarDB for MySQL 8.0.2 for this TPC-C benchmark test. PolarDB uses a cloud-native architecture that efficiently combines software and hardware to improve performance, scalability, and cost-effectiveness. This architecture breaks through the scalability bottleneck of a single cluster and supports scale-out to thousands of nodes. A single cluster can manage 100 petabytes of data. In addition to breakthroughs in write performance, query performance also scales linearly. Queries fall into two scenarios:

• The queried data resides on a single node, such as a transaction query in TPC-C. This type of query is routed directly to the corresponding node for calculation.

• The queried data spans multiple or all nodes, such as some of the queries in the TPC-C audit. Such queries are processed as distributed queries by the ePQ parallel computing engine.
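As a rough illustration of the two scenarios above, the routing decision can be sketched in Python. The placement rule `node_for_warehouse`, the cluster size, and the returned strings are hypothetical and do not represent PolarDB's actual routing interface.

```python
from typing import Iterable, Optional

NUM_NODES = 4  # hypothetical cluster size


def node_for_warehouse(w_id: int) -> int:
    """Hypothetical placement rule: warehouses are spread round-robin."""
    return w_id % NUM_NODES


def route(warehouse_ids: Optional[Iterable[int]]) -> str:
    """Decide where a query runs.

    warehouse_ids lists the warehouses the query filters on, or is None
    when the query has no partition-key filter and touches all data.
    """
    if warehouse_ids is None:
        return "ePQ: all nodes"
    nodes = sorted({node_for_warehouse(w) for w in warehouse_ids})
    if len(nodes) == 1:
        # All data lives on one node: send the query straight there.
        return f"direct: node {nodes[0]}"
    # Data spans several nodes: hand the query to the parallel engine.
    return f"ePQ: nodes {nodes}"


print(route([5]))     # single warehouse, single node
print(route([1, 2]))  # two warehouses on different nodes
print(route(None))    # audit-style query over everything
```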

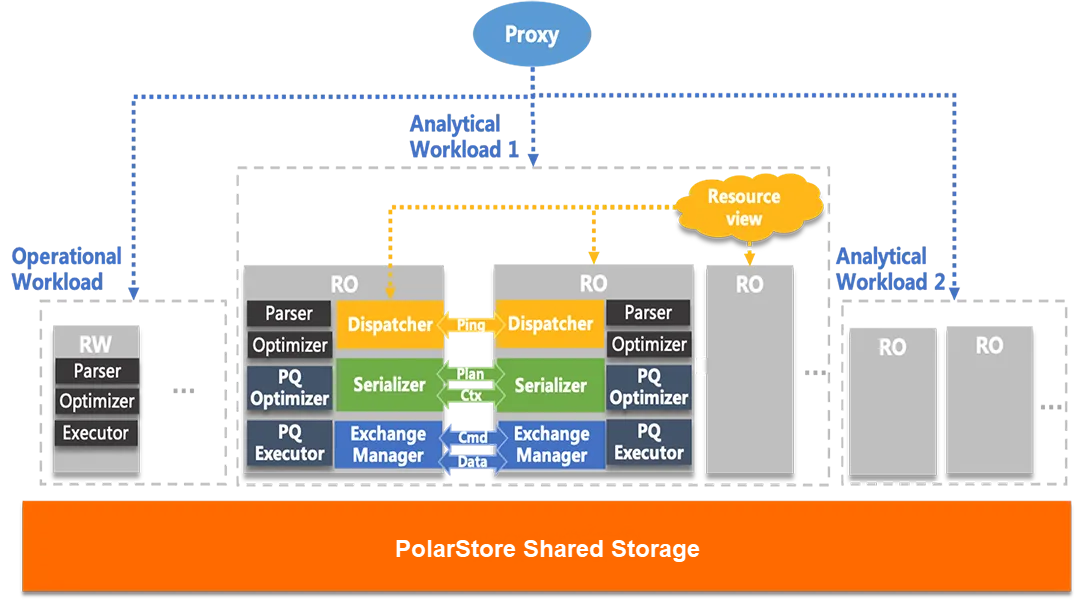

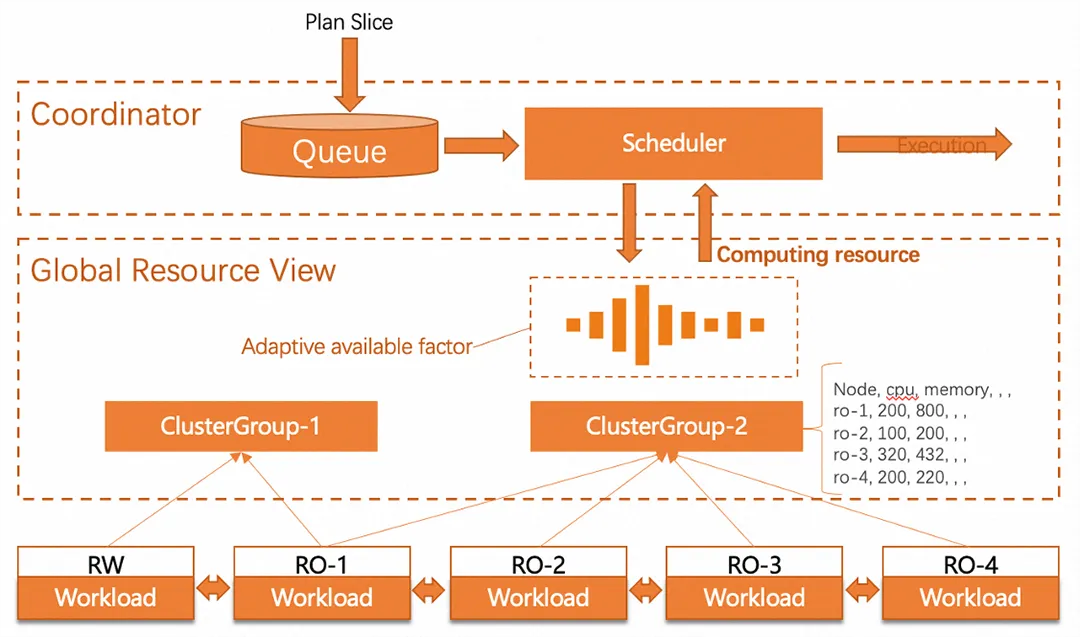

PolarDB for MySQL provides an enterprise-level query acceleration feature, Elastic Parallel Query (ePQ). This feature supports both single-node and multi-node parallel modes. Single-node parallel accelerates queries by using the computing resources within the node. Multi-node parallel accelerates queries by using the idle resources of any node in a cluster. This feature provides distributed execution. From the perspective of users, PolarDB ePQ provides external services by using cluster endpoints. Different node groups constitute cluster groups. You can configure different groups for businesses in different scenarios and configure appropriate parallel policies for each group to implement business isolation. The following figure shows the deployment architecture of ePQ:

The deployment architecture of PolarDB ePQ

The ePQ parallel computing engine provides a distributed execution framework with extreme scalability for PolarDB. In the mode of one primary node and multiple read-only nodes, all data can be accessed by any node due to the shared storage, and the sub-tasks of ePQ parallel computing can be scheduled to run on any node. The scheduler mainly considers resource load. However, in the multi-master sharded table mode of PolarDB, each physical partition is accessed by the corresponding node. The ePQ parallel computing engine can also sense the distribution attributes of data and generate the optimal distributed execution plan based on the cost.

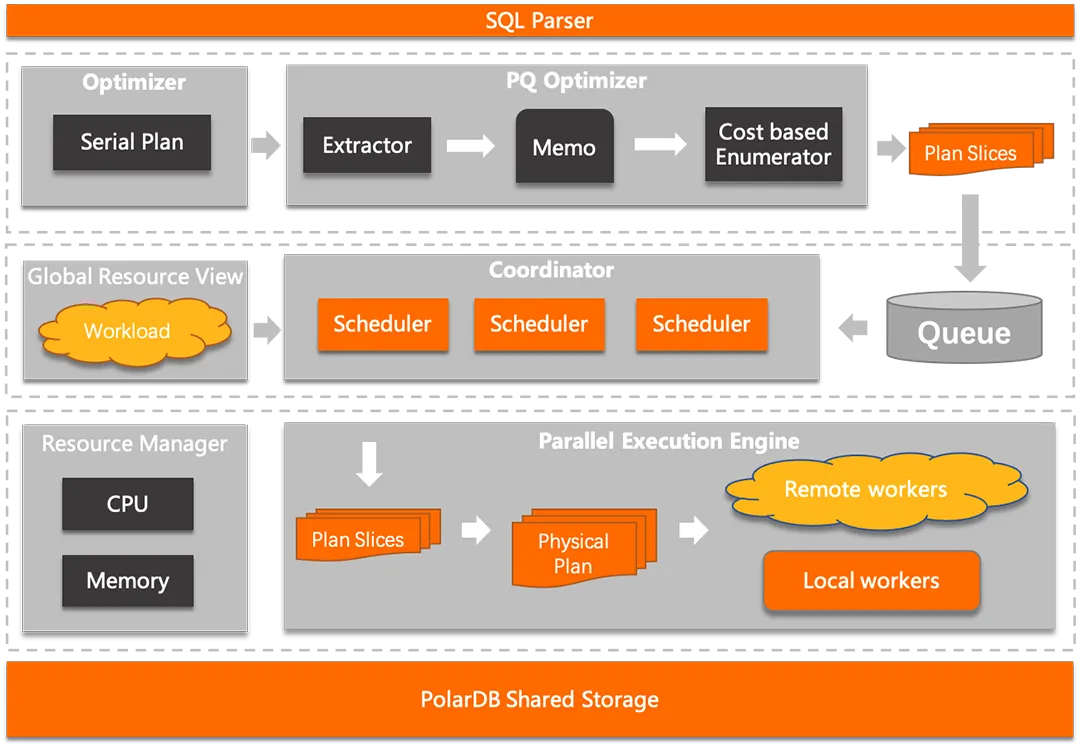

PolarDB ePQ splits a complex query into multiple compute tasks and then allocates the tasks to multiple cluster computing layers for parallel execution. This way, the compute resources of all nodes in a cluster can be fully utilized. The PolarDB ePQ feature is a kernel feature. All nodes can provide complete services. The following figure shows the architecture of elastic parallel query from the kernel perspective.

PolarDB ePQ architecture

• PQ optimizer obtains the serial plan and generates the optimal plan slices by using cost-based enumeration.

• Global resource view maintains real-time load information of all nodes in the cluster, making it easy for the coordinator to quickly find nodes with idle computing resources.

• The task coordinator schedules and executes tasks from queues based on real-time resource loads.

• The parallel execution engine generates physical plans based on scheduled plan slices and submits the physical plans to the executor. To execute physical plans in remote nodes, physical plans must be converted based on a binary protocol and then transmitted to the remote nodes over an internal network.

• Resource manager allows you to limit the resources used by parallel queries. For example, when the CPU utilization exceeds 70%, parallel execution is not selected.

• The cross-node parallel architecture is implemented based on the shared storage architecture, which ensures real-time query results. The cross-node consistent views ensure that the correct data can be read regardless of which nodes execute the subqueries.


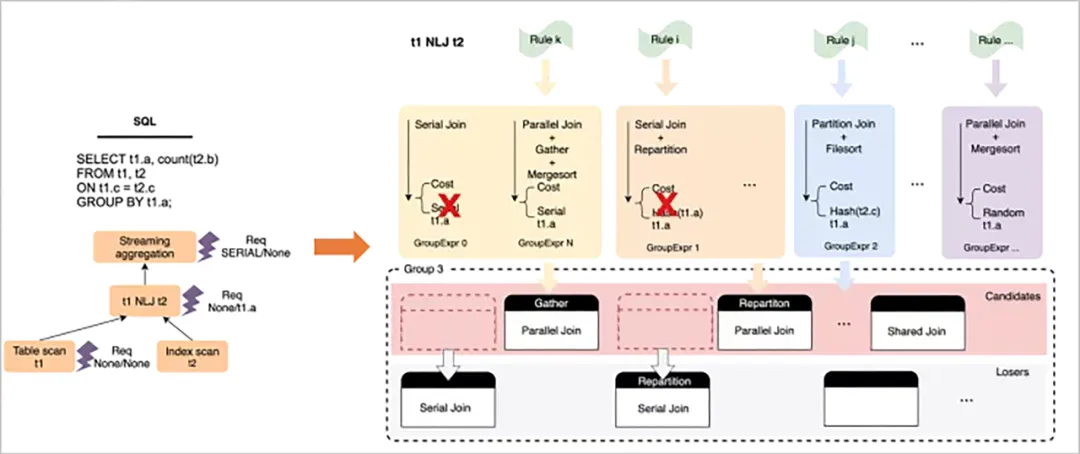

Parallel optimization is a bottom-up, exhaustive enumeration process based on dynamic programming. In the process, possible parallel execution and data distribution methods are enumerated for each operator, and physical equivalence classes are constructed based on the physical property (distribution + order) of the output data. Local pruning is then performed to obtain the optimal solution of the local sub-problem, pass it to the upper layer, and finally obtain the global optimal solution to the root operator.

The following is a brief example of the enumeration process for the t1 NLJ t2 operator:

After the overall enumeration is completed, the plan space contains a series of physical operator trees with Exchange Enforcers for data distribution, and the optimal tree is selected based on cost. The selected tree is then split into sub-plans at each Enforcer, producing a series of abstract execution plan descriptions that are passed to the plan generator.
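The enumeration described above can be sketched for a single t1 NLJ t2 join. This is a toy model under stated assumptions, not the PQ optimizer itself: only the distribution half of the physical property is tracked (ordering is ignored), and the cost formulas, the `WORKERS` constant, and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

WORKERS = 4  # hypothetical degree of parallelism


@dataclass(frozen=True)
class Plan:
    desc: str    # operator tree, printed as text
    dist: str    # distribution: "hash(k)", "random", "broadcast", "single"
    rows: int    # estimated output rows
    cost: float  # accumulated cost in arbitrary units


def prune(plans: List[Plan]) -> Dict[str, Plan]:
    """Local pruning: keep the cheapest plan in each equivalence class."""
    best: Dict[str, Plan] = {}
    for p in plans:
        if p.dist not in best or p.cost < best[p.dist].cost:
            best[p.dist] = p
    return best


def scan(table: str, rows: int, dist: str) -> Dict[str, Plan]:
    return prune([Plan(f"ParallelScan({table})", dist, rows, rows / WORKERS)])


def enforce(p: Plan, target: str) -> Plan:
    """Wrap p in an Exchange enforcer if it lacks the target distribution."""
    if p.dist == target:
        return p
    moved = p.rows * WORKERS if target == "broadcast" else p.rows
    return Plan(f"Exchange[{target}]({p.desc})", target, p.rows, p.cost + moved)


def nlj(outer: Plan, inner: Plan, dist: str, rows: int) -> Plan:
    cpu = outer.rows / WORKERS  # toy cost: one inner lookup per outer row
    return Plan(f"NLJ({outer.desc}, {inner.desc})", dist, rows,
                outer.cost + inner.cost + cpu)


def enumerate_join(outer: Dict[str, Plan], inner: Dict[str, Plan],
                   rows: int) -> Dict[str, Plan]:
    candidates = []
    for o in outer.values():
        for i in inner.values():
            # Option 1: co-locate both sides on the join key k.
            candidates.append(nlj(enforce(o, "hash(k)"),
                                  enforce(i, "hash(k)"), "hash(k)", rows))
            # Option 2: leave the outer side in place, broadcast the inner.
            candidates.append(nlj(o, enforce(i, "broadcast"), o.dist, rows))
    return prune(candidates)


t1 = scan("t1", 1_000, "random")
t2 = scan("t2", 100_000, "hash(k)")
classes = enumerate_join(t1, t2, rows=1_000)
# The root returns rows to one session, so a gather enforcer is added.
best = min((enforce(p, "single") for p in classes.values()),
           key=lambda p: p.cost)
print(best.desc, best.cost)
```

In this made-up case, repartitioning the small table t1 onto the join key is far cheaper than broadcasting the large table t2, so the hash(k) class wins at the root.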

The execution engine is primarily responsible for task dispatching, execution, and status management. The thread that receives a customer query is called the leader thread, and it dispatches parallel query tasks to each node. Each remote node also has a migrant leader thread. The worker tasks on a remote node are managed by that node's migrant leader, while the leader manages the migrant leaders and its local workers. This two-level management mode minimizes the content and frequency of transmissions on the remote signaling control channel.

There are two types of communication channels in the engine. One is the signaling control channel, a bidirectional asynchronous message channel responsible for task dispatching and worker status collection. The other is the data channel, a unidirectional channel that only transmits the result data of workers. Although it is unidirectional, it must support multiple data shuffle methods, such as repartition and broadcast, in both local and remote cross-node modes.

The engine's data-driven model uses Pull mode, which embeds naturally into MySQL's Volcano-style (iterator) execution engine. Pull mode also delivers rows to the consumer as soon as they are produced, which enables fully pipelined processing.
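A minimal sketch of pull-based execution, using Python generators as stand-ins for operators. The operator names and the sample rows are illustrative and are not taken from the MySQL or PolarDB source code. Each operator asks its child for the next row only when its own consumer asks for one, so rows flow through the pipeline one at a time and the scan stops as soon as the limit is satisfied.

```python
from typing import Callable, Iterable, Iterator, List, Tuple

Row = Tuple[int, int]


def scan(rows: Iterable[Row], scanned: List[Row]) -> Iterator[Row]:
    """Leaf operator: yields rows one by one and records what it read."""
    for row in rows:
        scanned.append(row)
        yield row


def select(child: Iterator[Row], pred: Callable[[Row], bool]) -> Iterator[Row]:
    """Filter operator: pulls from its child until a row matches."""
    for row in child:
        if pred(row):
            yield row


def limit(child: Iterator[Row], n: int) -> Iterator[Row]:
    """Stops pulling from its child once n rows have been produced."""
    if n <= 0:
        return
    for count, row in enumerate(child, start=1):
        yield row
        if count >= n:
            return


table = [(1, 10), (2, 20), (3, 30), (4, 40)]
scanned: List[Row] = []
plan = limit(select(scan(table, scanned), lambda r: r[1] >= 20), 2)
result = list(plan)
print(result)   # rows that passed the filter, capped by the limit
print(scanned)  # the last table row was never read
```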

Thanks to the shared storage architecture, parallel tasks can in theory be scheduled to any node for execution. However, the goal of parallel query is to use idle computing resources for acceleration without harming customers' online business.

The global resource view module is mainly responsible for maintaining real-time node load information. Each node collects its own workload information, such as CPU, I/O, and memory usage, and broadcasts it to all other nodes over UDP. As a result, every node maintains a resource view that lists the load of every node.
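The bookkeeping on the receiving side might look like the following sketch. The JSON payload, the `seq` field, and the class and method names are assumptions made for illustration; the source does not describe the actual wire format. No real sockets are used here: `on_datagram` is simply fed the bytes a UDP receive would return.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List


@dataclass
class LoadReport:
    node: str
    seq: int    # hypothetical per-sender counter, grows with each report
    cpu: float  # utilization in the range 0..1
    io: float
    mem: float


def encode(report: LoadReport) -> bytes:
    return json.dumps(asdict(report)).encode()


def decode(payload: bytes) -> LoadReport:
    return LoadReport(**json.loads(payload.decode()))


class ResourceView:
    """Per-node table holding the newest load report from every node."""

    def __init__(self) -> None:
        self.latest: Dict[str, LoadReport] = {}

    def on_datagram(self, payload: bytes) -> None:
        report = decode(payload)
        current = self.latest.get(report.node)
        # UDP can drop or reorder datagrams, so stale reports are ignored.
        if current is None or report.seq > current.seq:
            self.latest[report.node] = report

    def idle_nodes(self, max_cpu: float) -> List[str]:
        """Nodes at or below the CPU limit, least loaded first."""
        names = [n for n, r in self.latest.items() if r.cpu <= max_cpu]
        return sorted(names, key=lambda n: self.latest[n].cpu)


view = ResourceView()
view.on_datagram(encode(LoadReport("ro1", 2, 0.2, 0.1, 0.3)))
view.on_datagram(encode(LoadReport("ro1", 1, 0.9, 0.1, 0.3)))  # arrives late
view.on_datagram(encode(LoadReport("ro2", 1, 0.8, 0.5, 0.6)))
view.on_datagram(encode(LoadReport("rw", 1, 0.5, 0.2, 0.4)))
print(view.idle_nodes(max_cpu=0.7))
```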

An adaptive available-factor mechanism avoids resource contention. Each node is assigned an available-ratio factor. Suppose a node has 100 units of idle resources and the initial factor is a conservative 20%: at most 20 units are then used. If resource utilization stays below the threshold for n consecutive seconds, the factor is gradually increased. As soon as resource consumption exceeds expectations, the factor is quickly lowered. This slow-start, fast-back-off adjustment keeps the system as close to a dynamic balance as possible.
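The slow-start and fast-reduction behavior can be sketched as a small controller. Only the 20% starting factor comes from the text above; the step size, the halving on overload, the floor, the 70% threshold, and the three-second calm window are assumed values chosen for illustration.

```python
class AvailableFactor:
    """Toy controller: raise the factor slowly, cut it quickly."""

    def __init__(self, initial: float = 0.2, step: float = 0.1,
                 calm_seconds: int = 3, threshold: float = 0.7,
                 floor: float = 0.05) -> None:
        self.factor = initial
        self.step = step                  # additive increase (assumed)
        self.calm_seconds = calm_seconds  # the "n consecutive seconds"
        self.threshold = threshold        # utilization limit (assumed)
        self.floor = floor                # never drop to zero (assumed)
        self.calm = 0

    def observe(self, utilization: float) -> float:
        """Feed one per-second utilization sample; returns the new factor."""
        if utilization > self.threshold:
            # Overload: halve the factor at once and restart the count.
            self.factor = max(self.floor, self.factor / 2)
            self.calm = 0
        else:
            self.calm += 1
            if self.calm >= self.calm_seconds:
                # Calm for long enough: take one small step up.
                self.factor = min(1.0, self.factor + self.step)
                self.calm = 0
        return self.factor

    def budget(self, idle_resources: float) -> float:
        """Share of the idle resources that parallel queries may use."""
        return idle_resources * self.factor


ctl = AvailableFactor()
start_budget = ctl.budget(100)         # 20% of 100 idle units
for sample in (0.3, 0.4, 0.35):        # three calm seconds
    ctl.observe(sample)
after_calm = ctl.factor
after_spike = ctl.observe(0.95)        # one overloaded second
print(start_budget, after_calm, after_spike)
```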

Workload-based adaptive scheduling

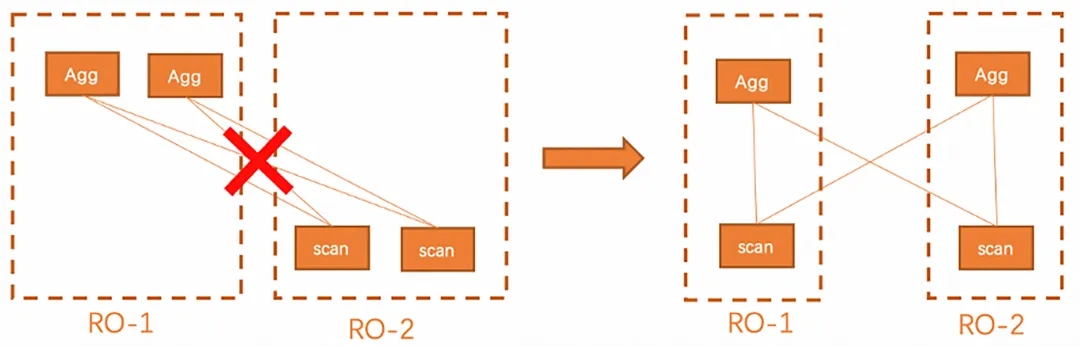

The worker allocation principle determines how to allocate multiple groups of workers in parallel queries within the known resource list:

1. The principle of minimum cross-node transmission: Since workers need to exchange data with each other, the system should minimize data transmission across nodes as much as possible.

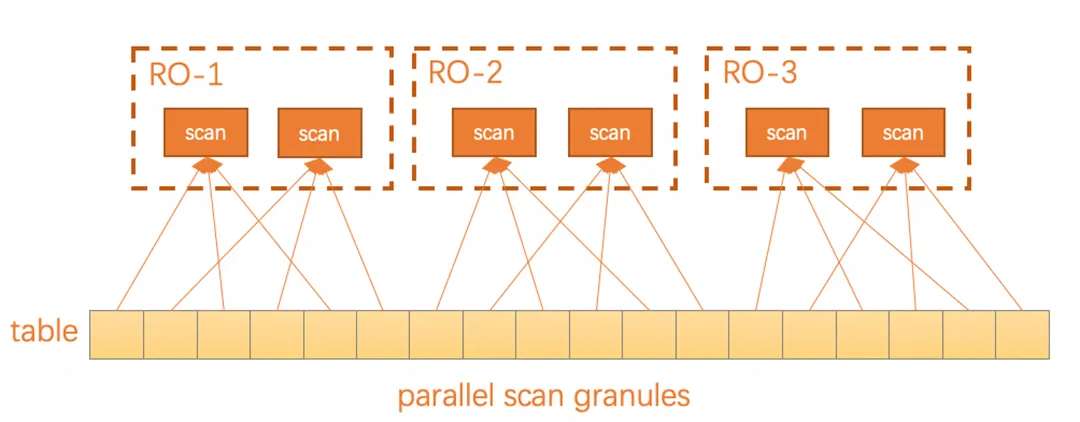

2. The principle of cache affinity: Workers on the same node should access contiguous data shards where possible, because this yields better cache affinity and performance.

Built on these components, the ePQ execution engine supports a variety of parallel JOIN methods:
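One simple way to honor both principles is sketched below. The greedy packing rule, which fills the nodes with the most free slots first so that workers end up on as few nodes as possible, and the function names are assumptions for illustration. The source does not specify the actual allocation algorithm.

```python
from typing import Dict, List, Tuple


def pick_nodes(free_slots: Dict[str, int], workers: int) -> Dict[str, int]:
    """Pack workers onto as few nodes as possible (largest free first)."""
    alloc: Dict[str, int] = {}
    remaining = workers
    ordered = sorted(free_slots.items(), key=lambda kv: (-kv[1], kv[0]))
    for node, slots in ordered:
        if remaining == 0:
            break
        take = min(slots, remaining)
        if take > 0:
            alloc[node] = take
            remaining -= take
    return alloc


def assign_shards(alloc: Dict[str, int],
                  num_shards: int) -> Dict[str, List[Tuple[int, int]]]:
    """Give each worker a contiguous [start, end) shard range.

    Workers on the same node receive adjacent ranges, so each node
    reads one contiguous run of shards.
    """
    total = sum(alloc.values())
    if total == 0:
        return {}
    base, extra = divmod(num_shards, total)
    plan: Dict[str, List[Tuple[int, int]]] = {}
    start = 0
    index = 0
    for node, count in alloc.items():
        plan[node] = []
        for _ in range(count):
            size = base + (1 if index < extra else 0)
            plan[node].append((start, start + size))
            start += size
            index += 1
    return plan


alloc = pick_nodes({"n1": 2, "n2": 4, "n3": 1}, workers=5)
shards = assign_shards(alloc, num_shards=10)
print(alloc)
print(shards)
```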

• Parallel NestLoop JOIN

• Parallel Hash JOIN

• Parallel Partition-wise JOIN

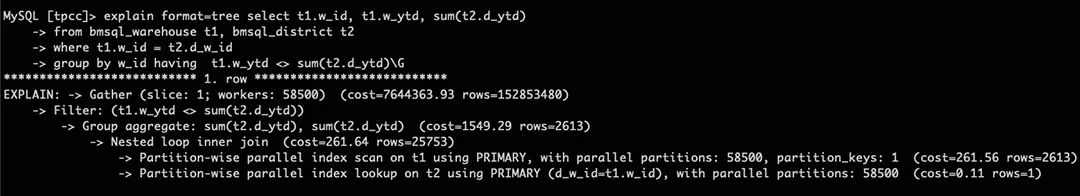

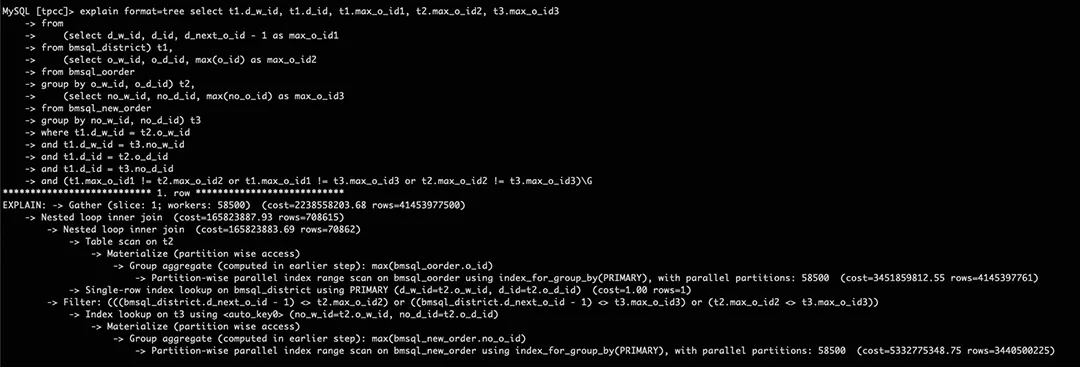

Parallel Partition-wise JOIN plays an important role in the SQL statements of the TPC-C Consistency audit because the test uses the multi-master sharded table mode. When the join column is the partition key, no data shuffle is required, and the JOIN operation is completed on the local node. ePQ supports both Partition-wise Hash Join and Partition-wise Nested Loop Join. For example:

SQL statements in TPC-C Consistency 1
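The idea behind a partition-wise join can be sketched as follows. The sample rows are made up, the modulo sharding rule is a hypothetical stand-in for the real partitioning scheme, and the code is not the Consistency 1 statement itself. Because both tables are sharded on the join key, each node joins only its own partitions, and the concatenated result matches a join over the full tables.

```python
from typing import List, Tuple

Warehouse = Tuple[int, float]      # (w_id, w_ytd)
District = Tuple[int, int, float]  # (d_w_id, d_id, d_ytd)


def shard(rows: list, nodes: int) -> List[list]:
    """Hypothetical placement: the first column is the partition key."""
    parts: List[list] = [[] for _ in range(nodes)]
    for row in rows:
        parts[row[0] % nodes].append(row)
    return parts


def hash_join(warehouses: List[Warehouse],
              districts: List[District]) -> List[tuple]:
    """Plain hash join on warehouse id."""
    build = {w_id: w_ytd for w_id, w_ytd in warehouses}
    return [(w, d, build[w], ytd) for w, d, ytd in districts if w in build]


def partition_wise_join(w_parts: List[list], d_parts: List[list]) -> List[tuple]:
    """Join partition i of one table with partition i of the other.

    No row crosses a node boundary, so no shuffle is needed.
    """
    out: List[tuple] = []
    for w_local, d_local in zip(w_parts, d_parts):
        out.extend(hash_join(w_local, d_local))
    return out


warehouses = [(1, 300.0), (2, 150.0), (3, 80.0)]
districts = [(1, 1, 100.0), (1, 2, 200.0), (2, 1, 150.0), (3, 1, 80.0)]

local = partition_wise_join(shard(warehouses, 2), shard(districts, 2))
full = hash_join(warehouses, districts)
print(sorted(local) == sorted(full))
```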

When a query statement contains derived tables and requires parameter JOIN operations, the general method is to materialize the derived tables in parallel and then broadcast the materialized tables to the other nodes to participate in the JOIN. When the result set of a materialized table is relatively large and must be broadcast to thousands of nodes, the performance loss is significant. In addition, the shuffle process has single-point bottlenecks. Several such scenarios exist in the TPC-C Consistency audit, as exemplified by the query in Consistency 2:

    select t1.d_w_id, t1.d_id, t1.max_o_id1, t2.max_o_id2, t3.max_o_id3
    from
      (select d_w_id, d_id, d_next_o_id - 1 as max_o_id1
       from bmsql_district) t1,
      (select o_w_id, o_d_id, max(o_id) as max_o_id2
       from bmsql_oorder
       group by o_w_id, o_d_id) t2,
      (select no_w_id, no_d_id, max(no_o_id) as max_o_id3
       from bmsql_new_order
       group by no_w_id, no_d_id) t3
    where t1.d_w_id = t2.o_w_id
      and t1.d_w_id = t3.no_w_id
      and t1.d_id = t2.o_d_id
      and t1.d_id = t3.no_d_id
      and (t1.max_o_id1 != t2.max_o_id2 or t1.max_o_id1 != t3.max_o_id3 or t2.max_o_id2 != t3.max_o_id3);

The two derived tables t2 and t3 contain GROUP BY operators, so they cannot be merged into the outer query and must be materialized before the JOIN. To optimize such queries, ePQ supports Partition-wise Materialize, which pushes materialization down so that each node materializes its own data separately. This approach eliminates the overhead of shuffling data across nodes and the single-point bottleneck of gathering results on the leader node and then broadcasting them, thus achieving more complete parallelism.
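Why per-node materialization gives the same answer can be sketched in a few lines. The sketch assumes that all three tables are sharded by warehouse ID, so every (warehouse, district) group lives entirely on one node. The modulo placement rule, the sample rows, and the function names are made up for illustration.

```python
from typing import Dict, List, Tuple

Key = Tuple[int, int]


def materialize_max(rows: List[Tuple[int, int, int]]) -> Dict[Key, int]:
    """GROUP BY (w_id, d_id) with MAX(o_id) over (w_id, d_id, o_id) rows."""
    out: Dict[Key, int] = {}
    for w, d, o in rows:
        out[(w, d)] = max(out.get((w, d), o), o)
    return out


def check(district, oorder, new_order) -> List[tuple]:
    """Consistency-2 style check over whatever rows it is handed."""
    t2 = materialize_max(oorder)
    t3 = materialize_max(new_order)
    bad = []
    for w, d, next_o_id in district:
        key = (w, d)
        if key in t2 and key in t3:
            m1 = next_o_id - 1
            if m1 != t2[key] or m1 != t3[key] or t2[key] != t3[key]:
                bad.append((w, d, m1, t2[key], t3[key]))
    return bad


def by_warehouse(rows: list, nodes: int) -> List[list]:
    """Hypothetical sharding rule: warehouse id modulo node count."""
    parts: List[list] = [[] for _ in range(nodes)]
    for row in rows:
        parts[row[0] % nodes].append(row)
    return parts


def partition_wise_check(district, oorder, new_order, nodes: int) -> List[tuple]:
    """Each node materializes and joins only its local rows."""
    out: List[tuple] = []
    for d, o, n in zip(by_warehouse(district, nodes),
                       by_warehouse(oorder, nodes),
                       by_warehouse(new_order, nodes)):
        out.extend(check(d, o, n))
    return sorted(out)


district = [(1, 1, 11), (1, 2, 6), (2, 1, 4), (3, 1, 8)]
oorder = [(1, 1, 10), (1, 1, 9), (1, 2, 5), (2, 1, 3), (3, 1, 6)]
new_order = [(1, 1, 10), (1, 2, 5), (2, 1, 2), (3, 1, 7)]

global_result = sorted(check(district, oorder, new_order))
local_result = partition_wise_check(district, oorder, new_order, nodes=2)
print(global_result == local_result)
```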

Partition-wise Materialize in TPC-C Consistency 2 queries

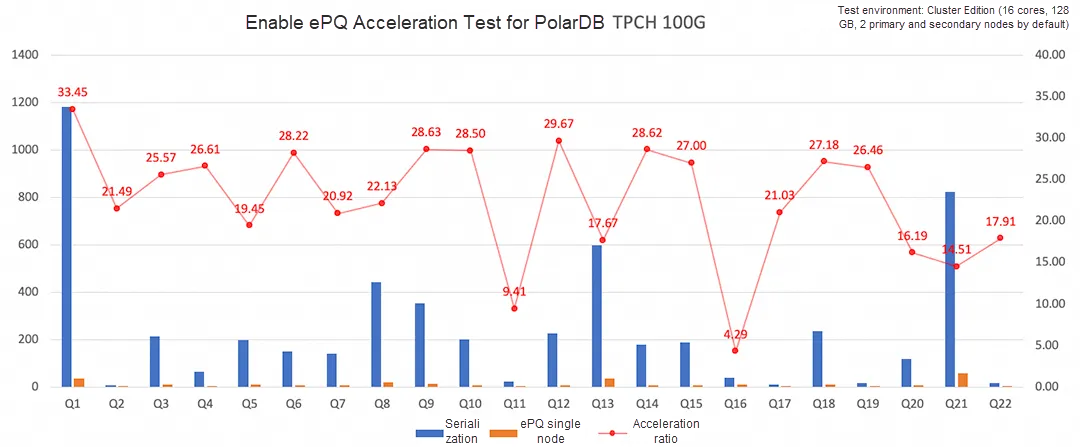

The TPC-C test examines transactional business scenarios. As a parallel execution framework, ePQ is mainly used to accelerate light analytical queries in online businesses. The query acceleration ratio is an important metric for evaluating parallel query performance: it compares the execution time of the original serial query with that of the parallel query on the same computing resources. The following is an acceleration ratio test conducted on a 100 GB TPC-H dataset.

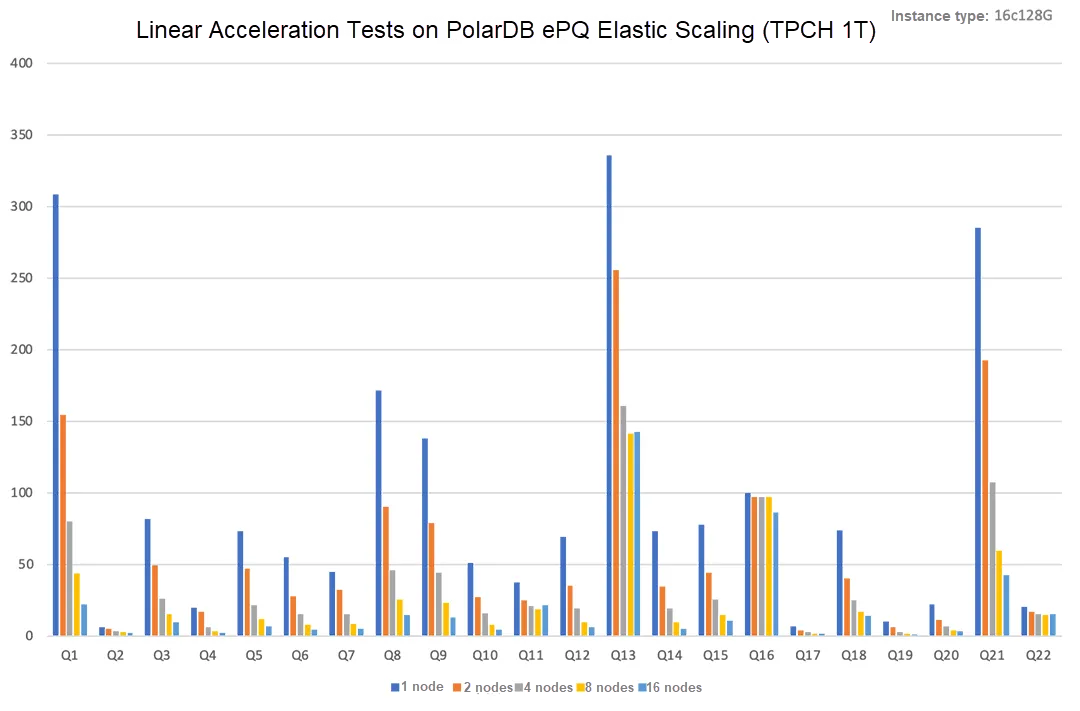

The test instance specifications are 16 cores, 128 GB, and 2 primary and secondary nodes, and ePQ is enabled. In TPC-H, 22 SQL statements can be accelerated in parallel, and the average acceleration ratio is 22.5 times. Because ePQ supports distributed multi-node parallelism, adding nodes continues to accelerate queries linearly. The following tests evaluate this linear acceleration capability on a larger dataset (1 TB TPC-H) in the scale-out scenario.

All 22 SQL statements support multi-node parallelism. 17 SQL statements can be linearly accelerated.
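For reference, the acceleration ratio and the notion of linear acceleration used in these tests can be written as two small helpers. The function names are hypothetical and the timings in the usage lines are invented for illustration. They are not measurements from the tests above.

```python
def acceleration_ratio(serial_seconds: float, parallel_seconds: float) -> float:
    """How many times faster the parallel run is than the serial run."""
    return serial_seconds / parallel_seconds


def scaling_efficiency(ratio_before: float, ratio_after: float,
                       node_multiplier: float) -> float:
    """1.0 means perfectly linear: k times the nodes, k times the ratio."""
    return (ratio_after / ratio_before) / node_multiplier


# Invented example: a query that takes 120 s serially and 8 s in parallel.
ratio = acceleration_ratio(120.0, 8.0)
# Invented example: doubling the nodes raises the ratio from 15 to 27.
efficiency = scaling_efficiency(15.0, 27.0, node_multiplier=2)
print(ratio, efficiency)
```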

From the perspective of users, the core value of a cloud-native database is to provide scalable database services with low O&M costs, so that users can focus on their own business. The critical capability is keeping query performance predictable as data volumes grow, which ensures sustained access to key data insights and maximizes business value. If report and analysis queries slow down as user data grows and the slowdown cannot be remedied by adding resources, that is an unacceptable limitation. Support for parallel queries with linearly scalable acceleration is therefore an essential requirement for cloud-native databases.
