Disclaimer: This is a translated work of Qinxia's 漫谈分布式系统. All rights are reserved to the original author.

As mentioned in the previous two articles on distributed storage, we need to store data, and we need to do so cost-effectively.

Massive amounts of data mean massive costs. Likewise, it is not enough for computation to be fast. We also need to squeeze as much performance as possible out of the computing resources to keep costs down.

Unlike distributed storage engines, which focus on how data is stored, distributed computing frameworks put their optimization effort into resource scheduling. There is limited room to optimize the computing logic itself, and the application layer has to be taken into account as well. This work gave rise to a series of resource managers, such as Google's Borg, Kubernetes (K8s), Apache YARN, and Apache Mesos.

In the past, each company ran dedicated servers and clusters for each of its businesses. This is easy to understand: every business wants to run independently, free from interference by other programs and from security risks.

However, business load changes dynamically. There are regular peaks and troughs, businesses also grow and decline irregularly, and clusters are frequently scaled out and in as a result. To reduce the impact of these load changes, and of the scaling itself, on the business, clusters are usually provisioned with a margin of spare resources.

This margin, put another way, is waste.

Therefore, the main problems that resource managers set out to solve are how to put these wasted resources to use flexibly and promptly, and how to reduce the maintenance cost of scaling along the way.

The most intuitive fix is to mix workloads. Machines are no longer dedicated to one business but shared by everyone. When Jack's business is not using its resources, Harry can use them, and vice versa. This way, the overall utilization rate goes up.

This is called multi-tenancy.
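To make the gain concrete, here is a small worked calculation in Python. The hourly loads for Jack's and Harry's businesses are made up, and the model is a simplifying assumption: each dedicated cluster must be sized for its own peak, while a shared cluster only has to cover the peak of the combined load.

```python
# Hypothetical hourly load (machines in use) for two businesses whose
# peaks fall at different times of day. All numbers are made up.
jack = [80, 90, 100, 60, 30, 20, 20, 30]
harry = [20, 20, 30, 50, 90, 100, 80, 40]


def utilization(loads, capacity):
    """Average fraction of the provisioned capacity that is actually busy."""
    return sum(loads) / (len(loads) * capacity)


combined = [j + h for j, h in zip(jack, harry)]

# Dedicated clusters: each business provisions for its own peak.
dedicated_capacity = max(jack) + max(harry)
dedicated_util = utilization(combined, dedicated_capacity)

# Shared (multi-tenant) cluster: only the peak of the combined load matters.
shared_capacity = max(combined)
shared_util = utilization(combined, shared_capacity)

print(f"dedicated: {dedicated_capacity} machines, {dedicated_util:.0%} busy")
print(f"shared:    {shared_capacity} machines, {shared_util:.0%} busy")
```

With these numbers, the same work fits on 130 shared machines instead of 200 dedicated ones, because the two peaks never coincide. If the peaks did overlap, the saving would shrink accordingly.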

How do multiple tenants share resources? If everyone submits tasks to the same cluster, how should resources be scheduled?

The simplest approach is a first-in-first-out (FIFO) queue: resources are allocated to the next task only after the tasks ahead of it have finished and released their resources.
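A strict FIFO scheduler can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not any real scheduler: the `Job` type, integer resource units, and fixed durations are all hypothetical.

```python
import heapq
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    demand: int    # resource units held for the job's whole run
    duration: int  # time units the job runs once started


def fifo_schedule(jobs, capacity):
    """Strict FIFO: a job may start only after every job ahead of it has
    started and enough resources have been released. Returns start times."""
    pending = deque(jobs)
    running = []  # min-heap of (finish_time, demand)
    free, now, starts = capacity, 0, {}
    while pending:
        job = pending[0]
        if job.demand > capacity:
            raise ValueError(f"{job.name} can never fit in the cluster")
        if job.demand <= free:
            # The head of the queue fits: start it now.
            pending.popleft()
            starts[job.name] = now
            heapq.heappush(running, (now + job.duration, job.demand))
            free -= job.demand
        else:
            # The head does not fit: wait for the earliest running job to
            # finish. Jobs behind the head wait too, even if they would fit.
            now, released = heapq.heappop(running)
            free += released
    return starts


jobs = [Job("A", 6, 5), Job("B", 6, 1), Job("C", 2, 1)]
print(fifo_schedule(jobs, capacity=10))
```

Here job C needs only 2 units, and 4 units are idle at time 0, yet it cannot start until B does at time 5. That head-of-line blocking is the price of FIFO's simplicity.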

The benefit of multi-tenancy is higher overall resource utilization, which benefits everyone. The drawback is that it sacrifices individuals: under FIFO, for example, one large job can hold up every job queued behind it. And since every tenant is an individual, all of them are eventually affected.

How can we guarantee the resource needs of each individual?

There is only one way to do that: isolation.

Therefore, resource scheduling systems introduced concepts such as pools and queues to partition resources logically. You can set a computing-resource quota for each pool and allow only a specific business to use it. Nested, multi-level pools also make it easy to isolate teams within a business.
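As an illustration, nested pools can be modeled as a tree in which each pool receives a percentage of its parent's quota. The pool names, the vcore unit, and the rule that sibling shares must add up to 100% are assumptions made for this sketch, not the configuration format of any particular scheduler.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    name: str
    share: float  # percentage of the parent pool's quota
    children: list = field(default_factory=list)


def absolute_quotas(pool, parent_quota, prefix=""):
    """Resolve nested percentage shares into absolute quotas.

    Assumption for this sketch: the children of a pool must split 100% of
    the parent's quota, so the tree partitions the cluster exactly.
    """
    path = f"{prefix}.{pool.name}" if prefix else pool.name
    quota = parent_quota * pool.share / 100
    quotas = {path: quota}
    if pool.children:
        total = sum(child.share for child in pool.children)
        if abs(total - 100) > 1e-9:
            raise ValueError(f"children of {path} add up to {total}%, not 100%")
        for child in pool.children:
            quotas.update(absolute_quotas(child, quota, path))
    return quotas


# Hypothetical layout: two businesses, one of which isolates two teams internally.
root = Pool("root", 100, [
    Pool("ads", 60, [Pool("etl", 50), Pool("reports", 50)]),
    Pool("search", 40),
])

for path, quota in absolute_quotas(root, 1000).items():
    print(f"{path:<18} {quota:>6.0f} vcores")
```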

After isolation, resources can no longer be shared, and the overall utilization rate drops.

Therefore, isolation cannot be hard, only soft. Each pool's quota is balanced dynamically: a pool can lend its idle quota to other pools and get it back later.

What if borrowed resources are not returned for a long time? If they are held by a job that runs for three days, should the lender really wait three days?

This is where preemption comes into play: “Excuse me, I can't wait that long, so please return the part that exceeds your quota immediately.” To avoid killing large numbers of tasks, preemption is only triggered once a certain percentage threshold, a certain amount of time, or both have been exceeded.
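The trigger condition can be sketched as follows. This is a minimal Python illustration under assumed rules, not how any particular scheduler decides: `share_threshold`, `timeout`, `PoolState`, and the pool names are hypothetical, and the sketch requires both conditions to hold, whereas a real scheduler may use either one or both.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PoolState:
    name: str
    quota: float
    used: float
    demand: float                          # what running + pending tasks want
    starved_since: Optional[float] = None  # when it began waiting below quota


def preemption_amount(victim, starved, now, share_threshold=0.5, timeout=300):
    """Resources to reclaim from `victim` on behalf of `starved`.

    Hypothetical trigger: preempt only if `starved` holds less than
    `share_threshold` of what it is entitled to AND has been waiting for at
    least `timeout` seconds. Only the victim's borrowed excess is reclaimed.
    """
    entitled = min(starved.quota, starved.demand)
    if entitled <= 0 or starved.starved_since is None:
        return 0.0
    below_threshold = starved.used < share_threshold * entitled
    waited_too_long = now - starved.starved_since >= timeout
    if not (below_threshold and waited_too_long):
        return 0.0
    excess = max(0.0, victim.used - victim.quota)
    shortfall = entitled - starved.used
    return min(excess, shortfall)


borrower = PoolState("batch", quota=100, used=180, demand=180)
owner = PoolState("online", quota=100, used=20, demand=100, starved_since=0)
print(preemption_amount(borrower, owner, now=60))   # still within the grace period
print(preemption_amount(borrower, owner, now=400))  # timeout passed: reclaim
```

Capping the reclaimed amount at the victim's excess means a pool never loses resources inside its own quota, which is what keeps soft isolation from turning into a free-for-all.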

A pool can also be given a resource limit, that is, a hard cap: it can never use more than the configured amount, even when other resources sit idle.
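Borrowing and the hard cap can be combined in one allocation pass. The sketch below is a hypothetical rule written for illustration, not the algorithm of any real scheduler: each pool first gets its demand up to its quota, then idle capacity is lent to whichever eligible pool currently has the lowest allocation relative to its quota, never beyond its cap.

```python
def allocate(capacity, pools):
    """Soft isolation with borrowing and a hard cap (illustrative sketch).

    pools maps name -> (quota, hard_cap, demand), all in integer resource
    units; quotas must be positive and must not exceed capacity in total.
    1. Each pool first gets its demand, up to its quota (guaranteed share).
    2. Idle capacity is then lent one unit at a time to the pool with the
       lowest allocation/quota ratio that still has unmet demand and is
       below its hard cap.
    """
    if sum(quota for quota, _, _ in pools.values()) > capacity:
        raise ValueError("quotas exceed cluster capacity")
    alloc = {name: min(quota, demand) for name, (quota, _, demand) in pools.items()}
    free = capacity - sum(alloc.values())
    while free > 0:
        eligible = [name for name, (_, cap, demand) in pools.items()
                    if alloc[name] < min(cap, demand)]
        if not eligible:
            break  # nobody can use more: the rest stays idle
        name = min(eligible, key=lambda n: alloc[n] / pools[n][0])
        alloc[name] += 1
        free -= 1
    return alloc


# Hypothetical pools: (quota, hard cap, current demand)
print(allocate(100, {"a": (50, 60, 100), "b": (30, 60, 10), "c": (20, 40, 40)}))
# When b's demand grows, recomputing gives b its guaranteed share back.
print(allocate(100, {"a": (50, 60, 100), "b": (30, 60, 30), "c": (20, 40, 40)}))
```

In the first call, pools a and c borrow the 20 units that b leaves idle, but a stops at its cap of 60 even though it wants 100. The sketch simply recomputes from scratch when demand changes; in a real cluster, getting borrowed resources back means waiting for tasks to finish or preempting them.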

There are various schedulers, such as YARN's Capacity Scheduler and Fair Scheduler. As they learned from each other and improved, they gradually converged.

All of them make trade-offs between improving overall utilization and guaranteeing individual quotas.

The solutions above are presented as ideas, deliberately leaving aside the architecture and internals of any specific framework. Those are implementation details and will be covered later where necessary. What matters more than any implementation is the thought process of designing solutions to problems.

Everything so far has been purely technical. But how should all these mechanisms and parameters actually be set?

Let's take quotas as an example.

This is a resource allocation problem. As with resource allocation in real life, you need convincing rules. Then either all businesses discuss it and reach a consensus (which is usually hard), or the boss decides.

If you are on an infrastructure team (such as a data platform team), remember that you are only the administrator of the resources: you hold management responsibility, not the right to decide how they are distributed.

Your job is to explain the rules, use technical means to keep overall utilization high, and provide indicators that support decision-making. Don't overstep, or you will put yourself in the line of fire.

Below is a brief list of indicators worth watching and referring to. (The list is incomplete and for reference only.)

Here are two past examples from our operations platform that illustrate how indicators can guide resource allocation.

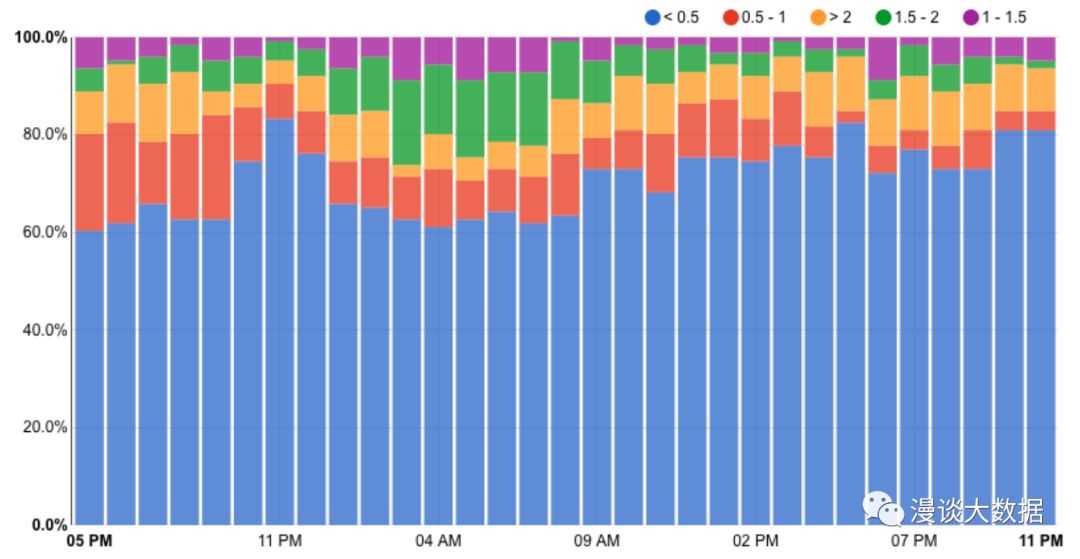

The following figure intercepts the distribution of the overall utilization rate of each queue in a certain time interval.

The figure above shows that most queues were below 50% utilization most of the time. This indicates that the quota settings at the time were inappropriate and that idle resources should have been reallocated to busier queues.
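The same check can be run directly on monitoring data. The Python sketch below assumes you already have per-interval utilization samples (usage divided by quota) for each queue; the queue names, sample values, and the "below 50% for more than half the intervals" rule are hypothetical.

```python
def idle_ratio(samples, threshold=0.5):
    """Fraction of sampled intervals in which utilization was below `threshold`."""
    return sum(1 for u in samples if u < threshold) / len(samples)


# Hypothetical utilization samples (used / quota), one per time interval.
samples = {
    "queue_a": [0.2, 0.3, 0.4, 0.9, 0.3],
    "queue_b": [0.8, 1.2, 1.5, 0.9, 1.1],
    "queue_c": [0.1, 0.2, 0.6, 0.3, 0.4],
}

# Queues that sat below 50% utilization for most of the window are
# candidates for giving part of their quota to busier queues.
underused = [q for q, s in samples.items() if idle_ratio(s) > 0.5]
print(underused)
```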

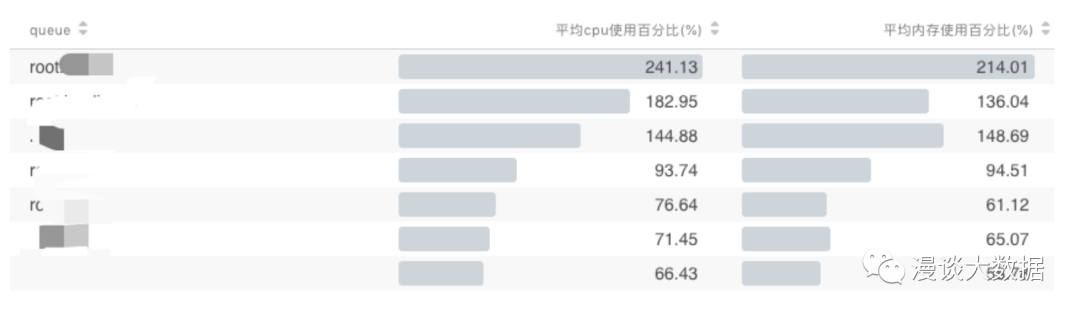

The following figure shows the details of the calculation resource usage on some queues. The calculation formula is actual resource usage/resource quota.

The figure shows that the first queue is constantly short of resources, so it uses twice its quota through preemption, while the last queue uses only a little over half its quota on average. It is precisely because queues like the last one leave part of their quota unused that the first queue is able to preempt resources at all.
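The formula behind the second figure is simple to reproduce. In this sketch the usage samples and the 100-vcore quotas are hypothetical numbers chosen to resemble the pattern described above; an average ratio above 1 means a queue is running on borrowed resources, and one below 1 means it is leaving part of its quota idle.

```python
from statistics import mean


def usage_ratio(used, quota):
    """Per-interval ratio of actual resource usage to the queue's quota."""
    return [u / quota for u in used]


# Hypothetical samples (vcores) for two queues that both have a 100-vcore quota.
first = usage_ratio([190, 210, 200, 200], quota=100)
last = usage_ratio([50, 60, 55, 55], quota=100)

for name, ratios in (("first", first), ("last", last)):
    avg = mean(ratios)
    status = "borrowing beyond quota" if avg > 1 else "leaving quota idle"
    print(f"{name}: {avg:.2f}x quota on average ({status})")
```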

Preemption, however, lags behind demand and is still bounded by the hard cap, so overall utilization stays low. The better solution is therefore to reduce the quota of the last queue and increase the quota of the first queue.
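One way to turn this into a concrete proposal is sketched below. The rule is entirely hypothetical: under-used queues shrink to their average usage plus some headroom, and the freed quota goes to over-used queues in proportion to how far they exceed their quota, keeping the total unchanged. In line with the advice above, the output is input for the discussion with the businesses, not a decision.

```python
def rebalance(quotas, avg_usage, headroom=0.2):
    """Hypothetical rule: shrink under-used queues to their average usage plus
    `headroom`, then hand the freed quota to over-used queues in proportion to
    how far their usage exceeds their quota. The total quota stays the same."""
    overage = {q: avg_usage[q] - quotas[q] for q in quotas if avg_usage[q] > quotas[q]}
    if not overage:
        return dict(quotas)  # nobody is short of resources: leave quotas alone
    new = dict(quotas)
    freed = 0.0
    for q, quota in quotas.items():
        target = avg_usage[q] * (1 + headroom)
        if target < quota:
            new[q] = target
            freed += quota - target
    total_overage = sum(overage.values())
    for q, extra in overage.items():
        new[q] += freed * extra / total_overage
    return new


# Hypothetical average usage (vcores) against 100-vcore quotas.
proposal = rebalance({"first": 100, "last": 100}, {"first": 200, "last": 55})
for queue, quota in proposal.items():
    print(f"{queue}: {quota:.0f} vcores")
```

Keeping some headroom on the shrunken queue is a deliberate choice: cutting it exactly to its average usage would leave it no room for its own peaks.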

To sum up, the first figure tells us that resources were allocated unreasonably: in general, some queues did not have enough while others had more than enough. The second figure tells us which queues are short and which have surplus, so we know how to adjust the quotas.

What remains is to figure out how to apply this to our own business scenarios.

After reading the recent articles, you should now have a basic understanding of distributed storage engines and distributed computing frameworks.

You should understand why distributed systems exist, how they are designed, and how to use them to save costs.

However, there is no such thing as a free lunch: benefits always come with problems. Distributed systems are far from something you can run hands-free just by calling an API.

In the following articles, we will take a look at a series of problems introduced by the distributed system and how to solve them. Stay tuned.

This is a carefully conceived series of 20-30 articles. I hope to give everyone a core grasp of the distributed system in a story-telling way. Stay tuned for the next one!

