By Jingxiao

Timing tasks execute predefined logic at agreed times and are used in many enterprise development scenarios, such as scheduled background data synchronization for report generation, periodic cleanup of log files on disk, and scheduled scans for timed-out orders that need compensation or callbacks.

Developers can choose from many frameworks and solutions for timing tasks to implement business functions and launch products quickly. This article introduces and analyzes the current mainstream timing task solutions and is intended as a reference for enterprise technology selection and project architecture refactoring.

Crontab is a built-in Linux command. It executes specified system instructions or shell scripts at the times defined by cron expressions.

Crontab command syntax:

    crontab [-u username] [-l | -e | -r]

Parameters:

-u : Specifies the user whose crontab is edited. Only the root user can use this option.

-e : Edits the content of the crontab.

-l : Lists the content of the crontab.

-r : Removes the entire crontab.

Example of the configuration file:

    # Every minute
    * * * * * touch ~/crontab_test
    # Every minute from 03:00 to 03:59
    * 3 * * * ~/backup
    # At minute 0 of every second hour
    0 */2 * * * /sbin/service httpd restart

The crond daemon is started by the init process when Linux boots. Every minute, crond checks the /etc/crontab configuration file and the per-user crontab files for tasks that are due, and it records the execution status of timing tasks in the /var/log/cron file. Users manage their own crontab files with the crontab command, while the system-wide /etc/crontab file is edited directly.
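To make the five-field schedule format concrete, here is a minimal sketch of how a cron expression could be matched against a point in time. It is not how crond is implemented: the names `CronSketch`, `fieldMatches`, and `matches` are illustrative, and only `*`, `*/n`, and plain numbers are handled, which is enough for the three example entries. Real cron also supports ranges, lists, names, and a special rule for combining day-of-month with day-of-week.

```java
import java.time.LocalDateTime;

class CronSketch {

    // Lowest legal value of each field: minute, hour, day of month, month, day of week.
    private static final int[] FIELD_MIN = {0, 0, 1, 1, 0};

    // Matches one field that is "*", "*/n", or a plain number.
    static boolean fieldMatches(String field, int value, int min) {
        if (field.equals("*")) {
            return true;
        }
        if (field.startsWith("*/")) {
            int step = Integer.parseInt(field.substring(2));
            return (value - min) % step == 0;
        }
        return Integer.parseInt(field) == value;
    }

    // Returns true if every one of the five fields matches the given time.
    static boolean matches(String expression, LocalDateTime time) {
        String[] fields = expression.trim().split("\\s+");
        if (fields.length != 5) {
            throw new IllegalArgumentException("expected 5 fields: " + expression);
        }
        int[] values = {
            time.getMinute(),
            time.getHour(),
            time.getDayOfMonth(),
            time.getMonthValue(),
            time.getDayOfWeek().getValue() % 7 // java.time: Monday=1..Sunday=7, cron: Sunday=0
        };
        for (int i = 0; i < fields.length; i++) {
            if (!fieldMatches(fields[i], values[i], FIELD_MIN[i])) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        LocalDateTime quarterPastThree = LocalDateTime.of(2022, 11, 11, 3, 15);
        LocalDateTime fourOClock = LocalDateTime.of(2022, 11, 11, 4, 0);
        System.out.println(matches("* * * * *", quarterPastThree));   // true
        System.out.println(matches("* 3 * * *", quarterPastThree));   // true
        System.out.println(matches("0 */2 * * *", quarterPastThree)); // false
        System.out.println(matches("0 */2 * * *", fourOClock));       // true
    }
}
```

A scheduler built on this idea would wake up once per minute and run every entry whose expression matches the current time.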

With the help of Crontab, users can easily and quickly implement the simple timing task function, but it has the following disadvantages:

The Spring framework provides out-of-the-box timing scheduling. Users can specify the execution cycle of a method through XML configuration or the @Scheduled annotation.

Spring Task supports several task execution modes, including cron expressions with a configurable time zone, fixed delay, and fixed rate.

A code example is shown below:

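    // App.java
    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;
    import org.springframework.scheduling.annotation.EnableScheduling;

    @EnableScheduling // turns on processing of @Scheduled methods
    @SpringBootApplication
    public class App {
        public static void main(String[] args) {
            SpringApplication.run(App.class, args);
        }
    }

    // MyTask.java
    import org.springframework.scheduling.annotation.Scheduled;
    import org.springframework.stereotype.Component;

    @Component
    public class MyTask {
        // Spring cron expressions have six fields, seconds first: runs daily at 01:00:00
        @Scheduled(cron = "0 0 1 * * *")
        public void test() {
            System.out.println("test");
        }
    }

Spring Task works by using ScheduledAnnotationBeanPostProcessor to intercept methods annotated with @Scheduled during bean initialization. For each method and its annotation configuration, it builds a corresponding Task instance and registers it in the ScheduledTaskRegistrar.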

After all singleton beans are initialized, the afterSingletonsInstantiated callback configures the TaskScheduler in the ScheduledTaskRegistrar. The underlying implementation of the TaskScheduler relies on ScheduledThreadPoolExecutor from the JDK concurrency package. When the afterPropertiesSet method runs, all registered tasks are added to the TaskScheduler and scheduled for execution.
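Because the TaskScheduler ultimately relies on ScheduledThreadPoolExecutor, the fixed-rate and fixed-delay modes can be illustrated with the JDK class alone. The following is a minimal sketch, not Spring's own code: the class `SchedulerSketch`, the method `runTimes`, and the 20 ms period are assumptions chosen for the demonstration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class SchedulerSketch {

    // Schedules a counting task and waits (up to 5 s) until it has run `times` times.
    // Returns the number of runs counted, which may exceed `times` by a late extra run.
    static int runTimes(int times, boolean fixedRate) {
        ScheduledThreadPoolExecutor executor = new ScheduledThreadPoolExecutor(1);
        AtomicInteger counter = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(times);
        Runnable task = () -> {
            counter.incrementAndGet();
            done.countDown();
        };
        // Fixed rate: period measured between start times.
        // Fixed delay: period measured from the end of one run to the start of the next.
        ScheduledFuture<?> future = fixedRate
                ? executor.scheduleAtFixedRate(task, 0, 20, TimeUnit.MILLISECONDS)
                : executor.scheduleWithFixedDelay(task, 0, 20, TimeUnit.MILLISECONDS);
        try {
            done.await(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            future.cancel(false);
            executor.shutdownNow();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println("fixed rate runs:  " + runTimes(3, true));
        System.out.println("fixed delay runs: " + runTimes(3, false));
    }
}
```

The difference between the two modes only becomes visible when a task takes a noticeable share of the period: a fixed-rate task tries to keep its start times on a regular grid, while a fixed-delay task always waits the full delay after finishing.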

With the help of Spring Task, users can quickly implement periodic execution of specified methods through annotations, and Spring Task supports multiple periodic policies. However, similar to Crontab, it has the following disadvantages:

ElasticJob is an open-source distributed task framework from Dangdang that provides flexible scheduling, resource management, job governance, and many other features. It is now a subproject of Apache ShardingSphere.

ElasticJob currently consists of two independent subprojects: ElasticJob-Lite and ElasticJob-Cloud. ElasticJob-Lite is a lightweight, decentralized solution that provides coordination services for distributed tasks as a JAR. ElasticJob-Cloud uses Mesos to provide additional services (such as resource management, application distribution, and process isolation). In most cases, ElasticJob-Lite is enough to meet the demand.

Users need to configure the address of the ZooKeeper registry and the task configuration in YAML:

    elasticjob:
      regCenter:
        serverLists: localhost:6181
        namespace: elasticjob-lite-springboot
      jobs:
        simpleJob:
          elasticJobClass: org.apache.shardingsphere.elasticjob.lite.example.job.SpringBootSimpleJob
          cron: 0/5 * * * * ?
          timeZone: GMT+08:00
          shardingTotalCount: 3
          shardingItemParameters: 0=Beijing,1=Shanghai,2=Guangzhou

Implement the corresponding job interface to define the task, and configure a listener to run callbacks before and after task execution:

    public class MyElasticJob implements SimpleJob {

        @Override
        public void execute(ShardingContext context) {
            // Each server runs this method once per shard item assigned to it.
            switch (context.getShardingItem()) {
                case 0:
                    // do something by sharding item 0
                    break;
                case 1:
                    // do something by sharding item 1
                    break;
                case 2:
                    // do something by sharding item 2
                    break;
                // case n: ...
            }
        }
    }

    public class MyJobListener implements ElasticJobListener {

        @Override
        public void beforeJobExecuted(ShardingContexts shardingContexts) {
            // do something ...
        }

        @Override
        public void afterJobExecuted(ShardingContexts shardingContexts) {
            // do something ...
        }

        @Override
        public String getType() {
            return "simpleJobListener";
        }
    }

The underlying time scheduling of ElasticJob is based on Quartz. Quartz itself needs to persist business beans to underlying database tables, which is highly intrusive to the system. Quartz also competes for tasks through database locks and does not support parallel scheduling or horizontal scaling.

ElasticJob achieves horizontal scaling through data sharding and custom sharding parameters: one task can be split into N independent task items, and the distributed servers execute the shard items assigned to them in parallel. For example, suppose a database contains 100 million records that need to be read and processed. The data can be divided into ten shards, so each shard reads 10 million records, performs the calculation, and writes the results back to the database.
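The arithmetic behind this example can be sketched as follows. This is an illustration under the assumption that records are addressed by a contiguous offset and split into equal ranges; the names `ShardRangeSketch` and `rangeFor` are hypothetical and not part of ElasticJob.

```java
class ShardRangeSketch {

    // Returns {startInclusive, endExclusive}: the record offsets handled by one shard item.
    static long[] rangeFor(int shardingItem, int shardingTotalCount, long totalRecords) {
        long perShard = totalRecords / shardingTotalCount;
        long start = shardingItem * perShard;
        // The last shard also takes any remainder left over by the integer division.
        long end = (shardingItem == shardingTotalCount - 1) ? totalRecords : start + perShard;
        return new long[] {start, end};
    }

    public static void main(String[] args) {
        long totalRecords = 100_000_000L;
        for (int item = 0; item < 10; item++) {
            long[] range = rangeFor(item, 10, totalRecords);
            System.out.println("shard " + item + " -> [" + range[0] + ", " + range[1] + ")");
        }
    }
}
```

Inside a job, the value returned by `context.getShardingItem()` would play the role of `shardingItem`, so each execution only touches its own slice of the data.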

If three machines execute the task, machine A is allocated shards (0,1,2,9), machine B shards (3,4,5), and machine C shards (6,7,8). This is also ElasticJob's most significant advantage over Quartz.

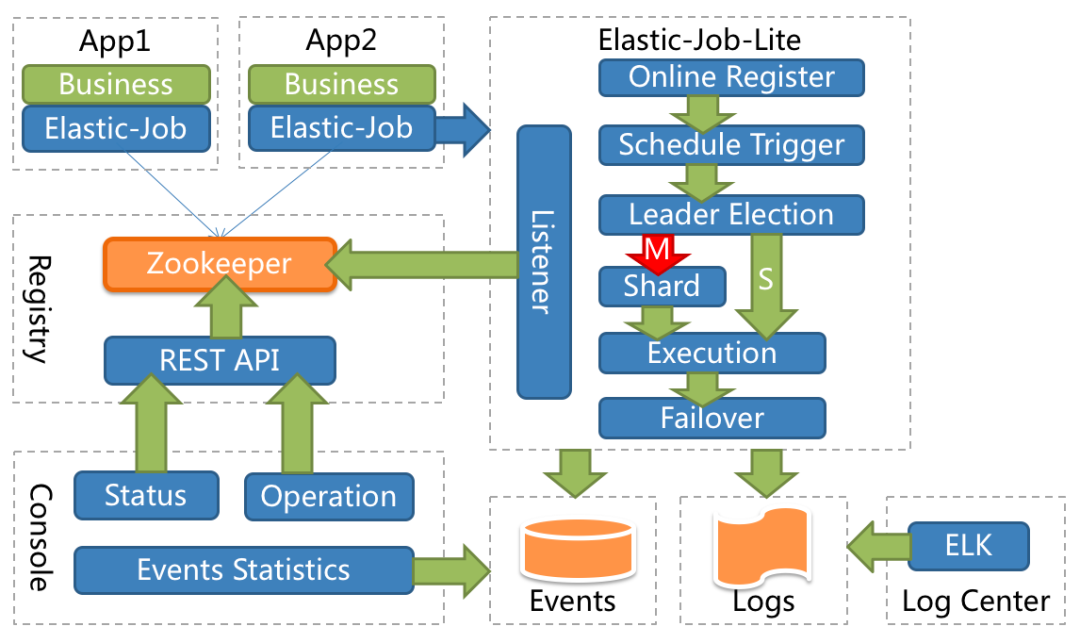
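The three-machine allocation above is what an even split produces when leftover shard items are appended, one each, to the first servers. The following sketch reproduces those numbers under that assumption. It is an illustration rather than ElasticJob's source code, and the names `AverageAllocationSketch` and `allocate` are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class AverageAllocationSketch {

    // Splits shard items 0..shardingTotalCount-1 across the servers as evenly as possible.
    static Map<String, List<Integer>> allocate(List<String> servers, int shardingTotalCount) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int perServer = shardingTotalCount / servers.size();
        // First pass: every server gets a contiguous block of equal size.
        for (int i = 0; i < servers.size(); i++) {
            List<Integer> items = new ArrayList<>();
            for (int item = i * perServer; item < (i + 1) * perServer; item++) {
                items.add(item);
            }
            result.put(servers.get(i), items);
        }
        // Second pass: leftover items go, one each, to the first servers in order.
        int next = perServer * servers.size();
        for (String server : servers) {
            if (next >= shardingTotalCount) {
                break;
            }
            result.get(server).add(next++);
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {A=[0, 1, 2, 9], B=[3, 4, 5], C=[6, 7, 8]}
        System.out.println(allocate(Arrays.asList("A", "B", "C"), 10));
    }
}
```

If machine C went offline, calling `allocate` again with only A and B would hand each of them five items, which is the effect resharding has after a change in the server list.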

In the implementation, ElasticJob uses ZooKeeper as the registry for distributed scheduling coordination and leader election, and it detects servers joining or leaving through changes to ephemeral nodes in the registry. Resharding is triggered whenever a leader node is elected, a server goes online or offline, or the total number of shards changes. The leader node performs the actual shard allocation the next time the scheduled task is triggered. Each node then obtains its shard information from the registry and uses it as the runtime parameter of the task.

As a distributed task framework, ElasticJob solves the problem described above, namely that single-node task execution cannot guarantee high availability or good performance under highly concurrent task execution. It also supports advanced mechanisms such as failover and misfire handling. However, it has the following disadvantages:

XXLJob is an open-source distributed task framework created by Dianping employees. Its core design goals are rapid development, ease of learning, light weight, and easy extension.

XXLJob provides various features, such as sharding broadcast tasks, timeout control, failure retries, and blocking policies. Tasks are managed and maintained through a user-friendly automated O&M console.

XXLJob consists of two parts, a central scheduling center and distributed executors, which must be started separately. Before the scheduling center starts, the database it depends on must be configured.

The executor needs to configure the address of the scheduling center:

    xxl.job.admin.addresses=http://127.0.0.1:8080/xxl-job-admin
    xxl.job.accessToken=
    xxl.job.executor.appname=xxl-job-executor-sample
    xxl.job.executor.address=
    xxl.job.executor.ip=
    xxl.job.executor.port=9999
    xxl.job.executor.logpath=/data/applogs/xxl-job/jobhandler
    xxl.job.executor.logretentiondays=30

In bean mode, tasks and the callbacks that run before and after them can be created as follows:

@XxlJob(value = "demoJobHandler2", init = "init", destroy = "destroy")

public void demoJobHandler() throws Exception {

int shardIndex = XxlJobHelper.getShardIndex();

int shardTotal = XxlJobHelper.getShardTotal();

XxlJobHelper.log("Shard parameter: sequence number of current shard = {}, total number of shards= {}", shardIndex, shardTotal);

}

public void init(){

logger.info("init");

}

public void destroy(){

logger.info("destory");

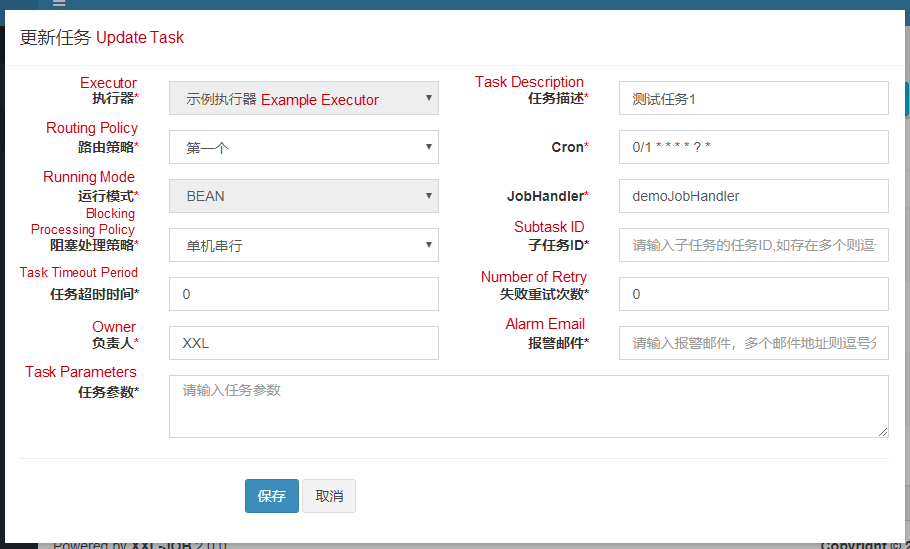

}After the task is created, we need to configure the task execution policy on the console:

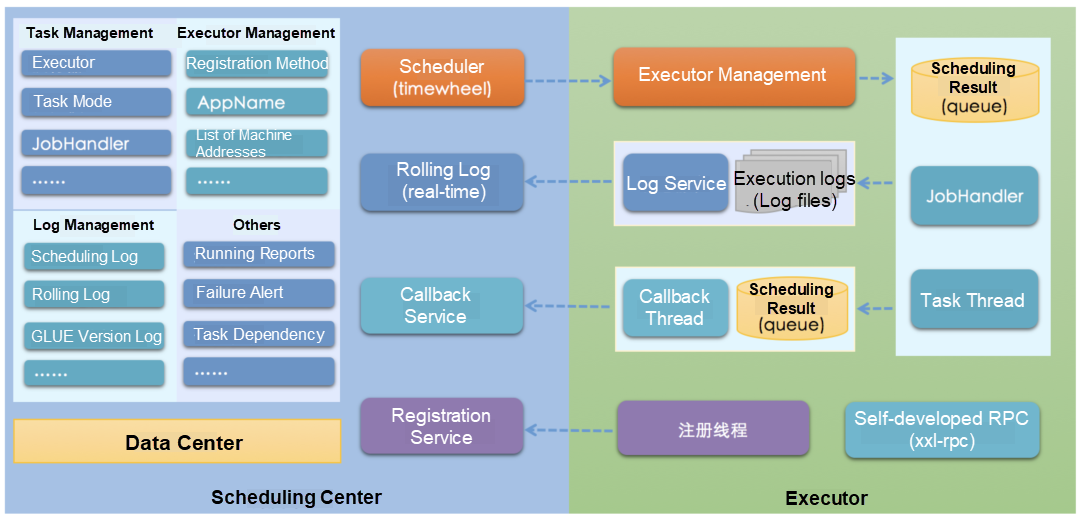

The XXLJob implementation decouples the scheduling system from the tasks. XXLJob's self-developed scheduler manages scheduling information and issues scheduling requests according to the scheduling configuration. It supports visual, simple, and dynamic management of scheduling information, automatic discovery and routing, scheduling expiration policies, retry policies, and executor failover.

The executor receives scheduling requests, executes task logic, and handles task termination requests and log requests. It is also responsible for task timeout and blocking policies. The scheduler and executors communicate through RESTful APIs. The scheduler supports stateless cluster deployment, which improves the disaster recovery and availability of the scheduling system, and it maintains lock information and persistent data in MySQL. Executors also support stateless cluster deployment, which improves the availability of the scheduling system and its task processing capacity.

This is a complete task scheduling communication process of XXLJob:

First, the scheduling center sends an HTTP scheduling request to the executor's embedded server, and the executor then runs the corresponding task logic. When the task completes or times out, the executor sends an HTTP callback that returns the scheduling result to the scheduling center.
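The request and callback round trip described above can be imitated with the HTTP server and client that ship with the JDK. This is only a sketch of the communication pattern, not XXLJob's actual protocol: the `/run` and `/callback` paths, the plain-text payloads, and the names `TriggerCallbackSketch` and `runOnce` are all assumptions made for the illustration.

```java
import com.sun.net.httpserver.HttpServer;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

class TriggerCallbackSketch {

    // Triggers one job over HTTP and returns the result reported by the callback.
    static String runOnce() {
        try {
            return doRunOnce();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        }
    }

    private static String doRunOnce() throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        CompletableFuture<String> callbackResult = new CompletableFuture<>();

        // Scheduling center side: an endpoint that receives the executor's callback.
        HttpServer center = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        center.createContext("/callback", exchange -> {
            String body = new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, -1);
            exchange.close();
            callbackResult.complete(body);
        });
        center.start();
        String callbackUrl = "http://127.0.0.1:" + center.getAddress().getPort() + "/callback";

        // Executor side: an embedded server that accepts the scheduling request.
        HttpServer executor = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        executor.createContext("/run", exchange -> {
            String jobName = new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, -1); // acknowledge the trigger immediately
            exchange.close();
            // Run the task asynchronously, then report the result to the scheduling center.
            CompletableFuture.runAsync(() -> {
                String result = jobName + ":SUCCESS"; // placeholder for real task logic
                HttpRequest callback = HttpRequest.newBuilder(URI.create(callbackUrl))
                        .POST(HttpRequest.BodyPublishers.ofString(result))
                        .build();
                client.sendAsync(callback, HttpResponse.BodyHandlers.discarding());
            });
        });
        executor.start();

        try {
            String runUrl = "http://127.0.0.1:" + executor.getAddress().getPort() + "/run";
            HttpRequest trigger = HttpRequest.newBuilder(URI.create(runUrl))
                    .POST(HttpRequest.BodyPublishers.ofString("demoJobHandler"))
                    .build();
            client.send(trigger, HttpResponse.BodyHandlers.discarding());
            return callbackResult.get(5, TimeUnit.SECONDS);
        } finally {
            executor.stop(0);
            center.stop(0);
        }
    }

    public static void main(String[] args) {
        System.out.println(runOnce()); // prints demoJobHandler:SUCCESS
    }
}
```

Acknowledging the trigger before the task runs keeps the scheduling request short, and the separate callback lets a long-running task report its outcome whenever it finishes.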

XXLJob is widely popular in the open-source community. Thanks to its simple operation and rich features, it has been adopted by many companies and meets production-level needs well. However, it has the following disadvantages:

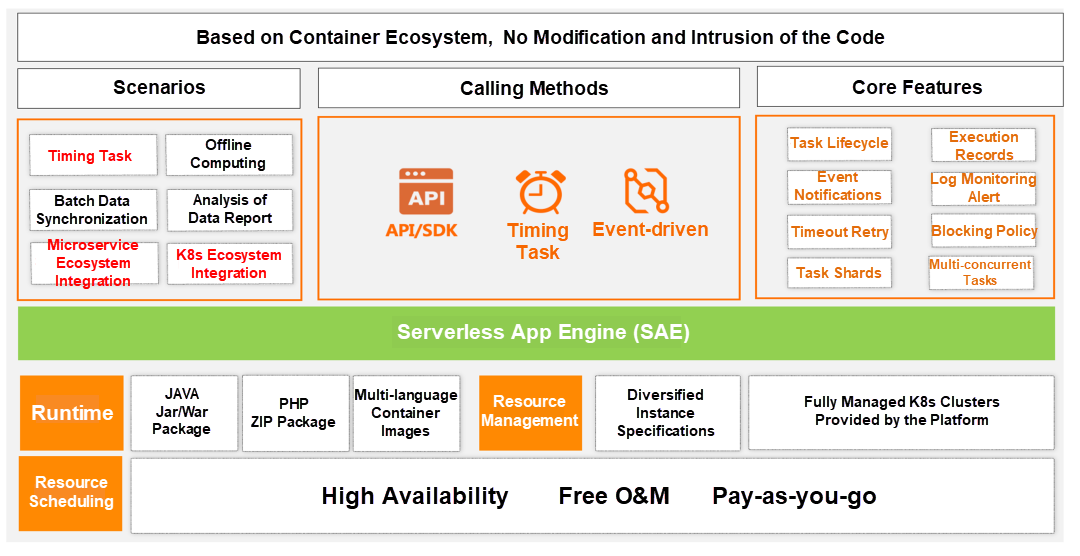

Serverless is the best practice and future evolution trend of cloud computing. Its usage experience (fully managed and O&M-free) and cost advantage (pay-as-you-go) make it highly regarded in the cloud-native era.

As the first task-oriented Serverless PaaS, Serverless App Engine (SAE) Job keeps the user experience of traditional task frameworks.

It currently focuses on tasks in the standalone, broadcast, and parallel sharding models. It also supports features such as event-driven triggering, concurrency policies, and timeout retries, and it provides low-cost, multi-specification, highly elastic resource instances for running short-lived tasks.

For existing applications that use all of the preceding solutions, SAE Job supports zero code modifications and imperceptible migration while being compatible with the feature experience.

If users use Crontab, Spring Task, ElasticJob, or XXLJob, they can directly deploy code packages or images to SAE Job and upgrade them to the Serverless architecture. This immediately provides the technical advantages of Serverless and saves resource costs and O&M costs.

Programs written in Java, Shell, Python, Go, or PHP that exit as soon as they finish running can be deployed directly to SAE Job. They then benefit from automated O&M control, a fully managed and O&M-free experience, and out-of-the-box observability.

The underlying layer of Serverless App Engine (SAE) Job is multi-tenant Kubernetes, which provides cluster computing resources in two ways: ECS bare metal instances and VK connected to ECI.

Tasks that users run in SAE Job are mapped to corresponding resources in Kubernetes. The multi-tenancy capability isolates tenants by system isolation, data isolation, service isolation, and network isolation.

SAE Job uses EventBridge as its event-driven source, which supports various invocation methods and avoids the problems of Kubernetes built-in timers, such as triggers that do not fire on time and imprecise time zone handling.

At the same time, the enterprise-level features of the Job controller are continuously being improved, with added mechanisms such as custom sharding, configuration injection, differentiated GC, active sidecar exit, real-time log persistence, and event notification. Using Java bytecode enhancement, SAE Job takes over task scheduling and lets common Java task frameworks run in Serverless mode with zero code modifications.

With the KubeVela software delivery platform as the carrier for task release and management, the entire cloud-native delivery is completed declaratively, following a task-centric and open-source-oriented approach. SAE Job continuously optimizes the efficiency of etcd and the scheduler for short-term, highly concurrent task creation, as well as the elasticity of instance startup. Combined with elastic resource pools, this ensures low latency and high availability of task scheduling.

SAE Job solves various problems of the preceding timing task solutions. Users do not need to manage task scheduling or cluster resources, deploy additional O&M components, or build a complete monitoring and alerting system. More importantly, they do not need cloud hosts running 24/7 that continuously consume idle resources at low utilization.

Compared with traditional timing task solutions, SAE Job provides three core values:

This article expounds on the target positioning, usage, and principle of several mainstream timing task solutions (Crontab, Spring Task, ElasticJob, XXLJob, and Serverless Job) and makes a horizontal evaluation of the functional experience and performance cost issues enterprises pay close attention to.

Finally, I hope you can choose Serverless Job and experience the new changes it has brought to traditional tasks.
