By Wuhuan

Message queues, with RocketMQ as a representative, originated as a means of asynchronous, decoupled communication between application services. Together with RPC communication, represented by Dubbo, they carry the communication between distributed systems (services). Message distribution between services is therefore the basic requirement of a messaging system. However, there is a trend in the messaging field to extend message-based data to other scenarios, such as stream and batch computing and event-driven processing, as in RocketMQ-streams, Kafka-Streams, and Rabbit-Streams.

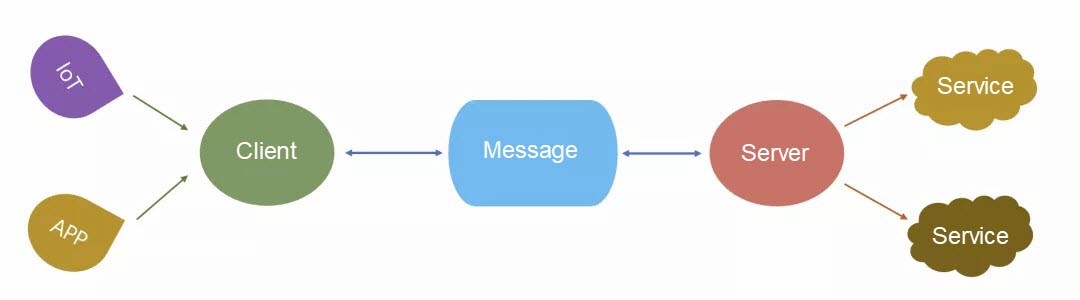

A traditional message queue (MQ) is mainly used for message communication between services (server side), such as transaction, payment, and logistics messages in e-commerce. However, there is another important and common category of messages: client messages. The essence of messaging is sending and receiving, so there is no essential difference between a client message and a server message.

A unified messaging system (product) that provides multi-scenario computing (such as stream and event processing) and multi-scenario access (such as IoT and apps) would be valuable, because messages are also important data. If the data exists in only one system, storage cost is minimized and the consistency problems caused by synchronizing data between systems are avoided.

This article will describe the integration design and practice of client messages and server messages, so first we will do a basic analysis of this category of client-oriented messages.

In recent years, IoT-oriented messages have grown explosively with the rise of smart homes and the industrial Internet, while app-side messages on the mobile Internet, which has been developing for more than ten years, remain huge in volume. The volume of client-oriented messages is much larger than that of traditional server-side messages and is still growing rapidly.

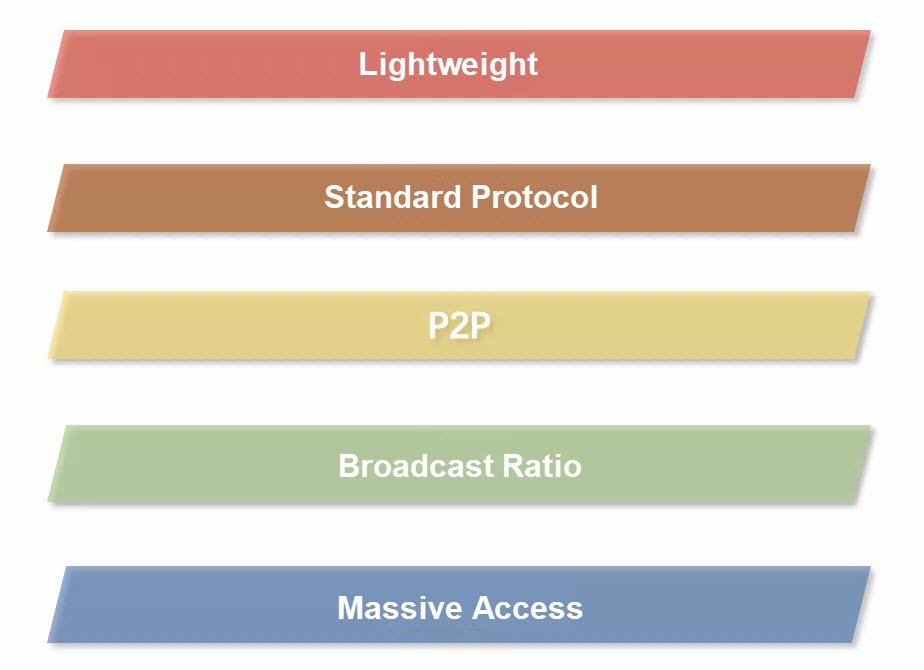

Although the essence of both client and server messages is the sending and receiving of messages, client scenarios still have different characteristics from the server. The following is a brief analysis:

(1) Lightweight clients. The server generally uses a heavy client SDK to encapsulate many functions and features. However, the client must use a lightweight and concise client SDK because of its limited operating environment and complexity.

(2) Standard protocol. Because the server side has a heavyweight client SDK that encapsulates all functions, including protocol communication, the protocol can fade into the background and users do not need to be aware of it. Client scenarios, by contrast, must have a standard protocol definition to support access from a wide variety of devices and scenarios.

(3) P2P. On the server side, if one server fails to process a message, another server can process it successfully. In client scenarios, a message must be delivered to a specific client, and if that client fails to process it, the message must be sent to the same client again until it succeeds.

(4) Broadcast ratio. On the server side, an order message sent by a trading system may be of interest to a handful of systems such as marketing, inventory, and logistics. In client scenarios such as group chat and live streaming, a single message may be received by thousands of terminals or users.

(5) Massive access. Client scenarios connect end devices, while server scenarios connect servers. The number of the former is far larger than that of the latter.

Before realizing integration, let's analyze the problem and feasibility theoretically. Whether it is a client message or a server message, it is a communication method. From the communication level, the basic problems to be solved are summarized as: protocol, match, and reach.

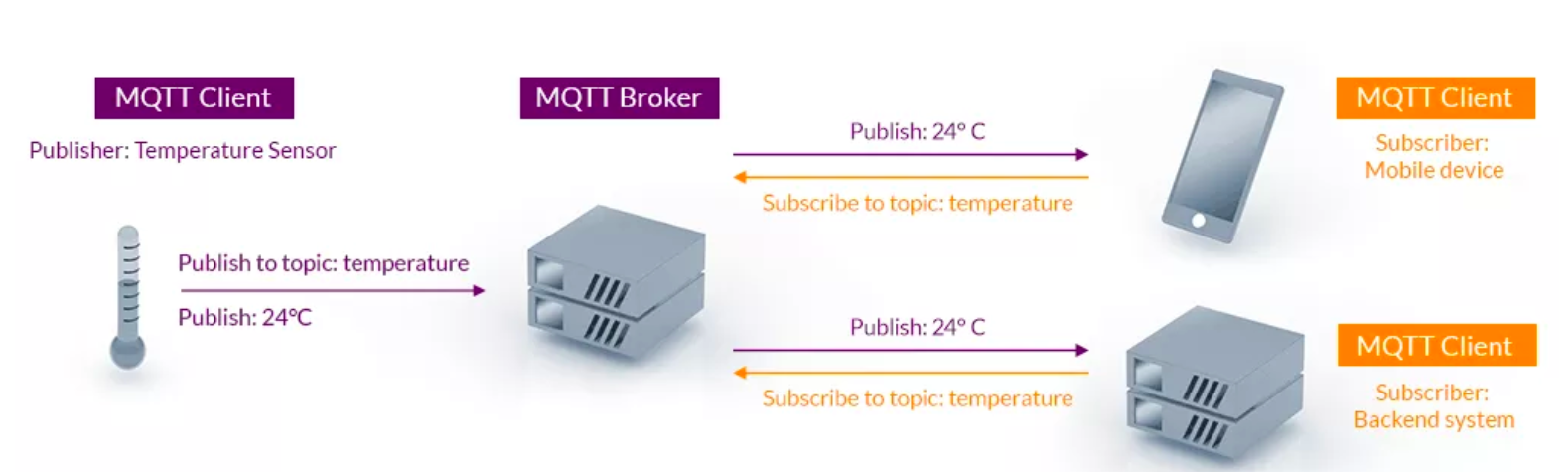

The protocol defines a common communication language so that both parties can understand the semantics of the content. In client scenarios, the industry generally uses the MQTT protocol, which originated in IoT scenarios and is an open standard defined by OASIS.

The MQTT protocol defines a Pub/Sub communication model similar to that of RocketMQ, but with more flexible subscriptions. It supports multi-level topic subscriptions (such as "/t/t1/t2") and wildcard subscriptions (such as "/t/t1/+").
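
As a rough illustration of multi-level and wildcard subscriptions, the sketch below matches a topic name against a subscription filter using the two MQTT wildcards: `+` for exactly one level and `#` for all remaining levels. The function name `topic_matches` is hypothetical, and the sketch leaves out details of the MQTT specification such as the special handling of topics that begin with `$`.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter.

    Simplified sketch: supports '+' (one level) and '#' (remaining levels).
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # The multi-level wildcard is only valid as the last level and
            # matches everything from here on, including the parent level.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            # The filter has more levels than the topic.
            return False
        if level != "+" and level != topic_levels[i]:
            # A literal level must match exactly; '+' matches any one level.
            return False
    # Without '#', both sides must have the same number of levels.
    return len(filter_levels) == len(topic_levels)
```

With this sketch, the filter "/t/t1/+" matches "/t/t1/t2" but not "/t/t1/t2/t3", while "/t/#" matches both.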

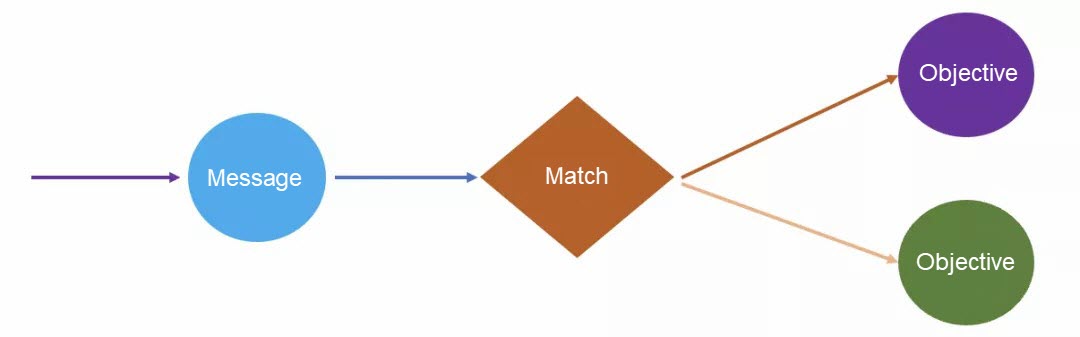

Matching means finding all recipients of a message after it is sent. This lookup step is indispensable.

In RocketMQ, there is a similar matching process. It allocates a queue to a machine in a consumer group through rebalance. The message corresponds to the consumer machine through the queue and then matches the consumer through subscription filtering (Tag or SQL). The reason why the queue can be used to match the consumer machine is that the server scenario message does not need to specify a consumer machine. A message can be placed in any queue, and any consumer machine can correspond to this queue. The message does not need to be explicitly matched with the consumer machine.
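
To make the queue-based matching concrete, here is a minimal sketch of how queues might be divided among the machines of a consumer group during rebalance. It assumes an even, contiguous split computed independently by each consumer from the same sorted lists. The function name `allocate_queues` and this particular strategy are illustrative assumptions, not a description of RocketMQ's actual code.

```python
def allocate_queues(queue_ids, consumer_ids, me):
    """Return the queues assigned to consumer `me` under an even split.

    Every consumer sorts the same inputs, so all of them reach the same
    overall assignment without coordinating with each other.
    """
    queues = sorted(queue_ids)
    consumers = sorted(consumer_ids)
    index = consumers.index(me)
    base, extra = divmod(len(queues), len(consumers))
    # The first `extra` consumers each take one additional queue.
    start = index * base + min(index, extra)
    size = base + (1 if index < extra else 0)
    return queues[start:start + size]


assignment = {
    consumer: allocate_queues(range(8), ["c1", "c2", "c3"], consumer)
    for consumer in ["c1", "c2", "c3"]
}
```

Any message placed in a queue is then handled by whichever machine currently owns that queue, which is why no explicit message-to-machine matching is needed on the server side.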

In the client scenario, a message must specify a recipient (device), and all recipients must be found. Moreover, a client generally holds a single connection to one backend service node, which is usually not the node where the message was produced. A more complicated matching process is therefore needed to find the target, along with more flexible matching features such as MQTT wildcards.

Reach means that, once matching has found all recipients, the message must be delivered to them in a reliable way. There are two common trigger methods: Push and Pull. With Push, the server actively pushes the message to the client, so the initiative lies with the server. The client uses an ACK to report whether the message was received or processed, and the server decides whether to resend based on whether the ACK arrives. With Pull, the client actively fetches its messages from the server, so the initiative lies with the client. Messages are generally fetched in sequence by Offset. RocketMQ uses this method.

Comparing the two, the Pull method requires the client to manage the message acquisition logic. This logic is fairly complex (see RocketMQ's client management logic), and the operating environment and conditions of a client are themselves complex, so a client is poorly suited to implementing Pull logic and better suited to the passive Push method.

In addition, an important difference of client messages is that the ACK that guarantees reliability must be specific to one client, whereas a server message is considered reliably delivered as long as any one consumer machine processes it, regardless of which machine that is. The reliability ACK of server messages can therefore be tracked at the consumer group dimension, while the reliability ACK of client messages must be tracked at the client dimension. Simply put, one needs a Retry queue per client device and the other a Retry queue per consumer group.
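
The per-client ACK requirement can be sketched as follows. The class `PushSession` and its methods are hypothetical names used only for illustration: unacknowledged pushes are tracked separately for every client, so a retry resends a message only to the clients that have not yet acknowledged it.

```python
from collections import defaultdict


class PushSession:
    """Tracks unacknowledged pushes per client (client-dimension ACK)."""

    def __init__(self, send):
        self.send = send                    # callable(client_id, msg_id, payload)
        self.inflight = defaultdict(dict)   # client_id -> {msg_id: payload}

    def push(self, client_id, msg_id, payload):
        # Remember the message until this specific client acknowledges it.
        self.inflight[client_id][msg_id] = payload
        self.send(client_id, msg_id, payload)

    def ack(self, client_id, msg_id):
        # An ACK from one client says nothing about any other client.
        self.inflight[client_id].pop(msg_id, None)

    def retry(self):
        # Resend everything that is still unacknowledged, client by client.
        for client_id, pending in self.inflight.items():
            for msg_id, payload in list(pending.items()):
                self.send(client_id, msg_id, payload)


sent = []
session = PushSession(lambda client_id, msg_id, payload: sent.append((client_id, msg_id)))
session.push("client-1", "m1", "hello")
session.push("client-2", "m1", "hello")
session.ack("client-1", "m1")
session.retry()  # only client-2 receives m1 again
```

A consumer-group ACK, by contrast, would need only one pending entry per message for the whole group.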

Based on the previous general analysis of the message base, we design the message model mainly to solve the two core problems of matching search and reliable reach.

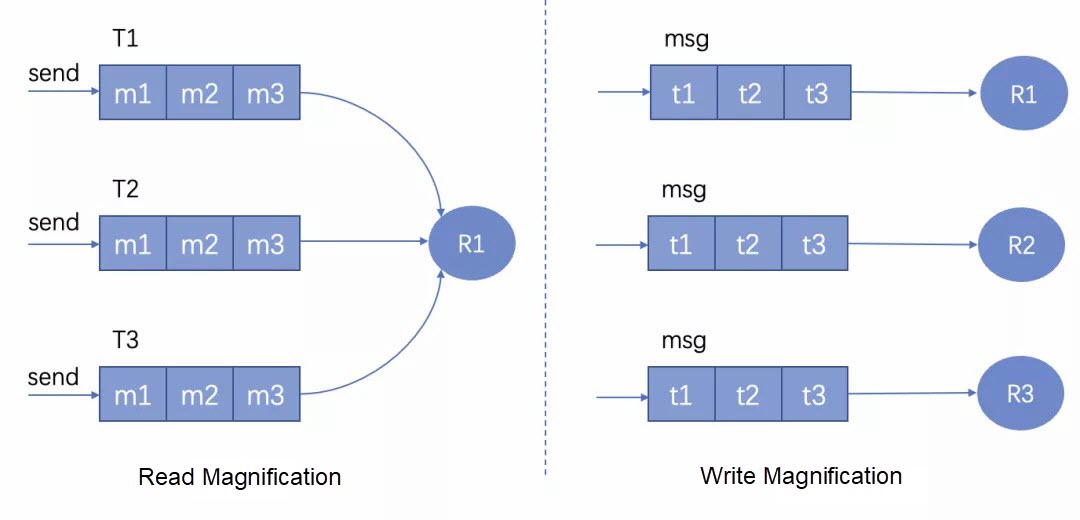

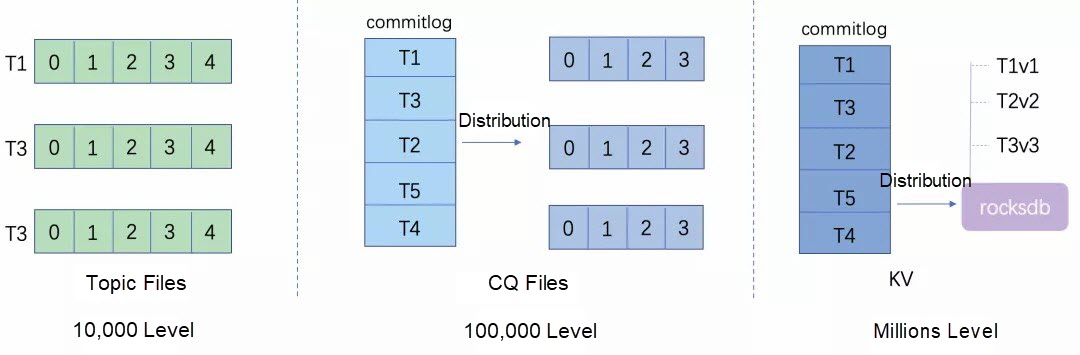

The premise of reliable message reach is to store it reliably. The purpose of message storage is to enable the recipient to obtain the message. The recipient generally has two message retrieval dimensions: 1) to find the message according to the subscribed topic; 2) to find the message according to the subscriber ID. This is what the industry often calls the amplification model: read amplification and write amplification.

Read Amplification: The message is stored by topic. The recipient reads the message from the corresponding topic queue based on the subscribed topic list. Write Amplification: The message is written to all subscribed recipient queues separately, and each recipient reads its client queue.

In the read amplification scenario, a message is written only once, to the queue of the topic dimension, but the recipient must perform multiple reads, one for each topic in its subscription list. In the write amplification scenario, the message must be written to the client queues of all recipients, so the storage cost is relatively high, but each recipient needs to read only its own client queue.

We adopt a strategy of read amplification as the primary mode and write amplification as a supplement, because storage cost and efficiency are what users notice most. Writing multiple copies not only increases storage cost but also challenges performance, data accuracy, and consistency. There is one place where we do use write amplification: wildcard matching. The wildcard a recipient subscribes to is not the same as the concrete topic of a message, and the recipient cannot derive the message topics from the wildcard when reading. Therefore, when a message is sent, an additional wildcard queue is written for each matching wildcard subscription, so that the recipient can read messages directly from the wildcard queue it subscribes to.
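
The combined strategy can be sketched with a small in-memory model. Everything here (`QueueStore`, `matches`, the use of plain lists as queues) is a hypothetical simplification: each message is appended once to its topic queue, and is additionally appended to the queue of every wildcard subscription that matches its topic.

```python
from collections import defaultdict


def matches(topic_filter, topic):
    """Minimal MQTT-style match supporting '+' and a trailing '#'."""
    f, t = topic_filter.split("/"), topic.split("/")
    if f[-1] == "#":
        return len(t) >= len(f) - 1 and all(a in ("+", b) for a, b in zip(f[:-1], t))
    return len(f) == len(t) and all(a in ("+", b) for a, b in zip(f, t))


class QueueStore:
    def __init__(self):
        self.queues = defaultdict(list)   # queue name -> messages
        self.wildcard_filters = set()     # wildcard subscriptions seen so far

    def subscribe(self, topic_filter):
        if "+" in topic_filter or "#" in topic_filter:
            self.wildcard_filters.add(topic_filter)

    def publish(self, topic, message):
        # Read amplification: one write to the topic queue.
        self.queues[topic].append(message)
        # Write amplification, only for wildcards: one extra write per
        # matching wildcard queue.
        for topic_filter in self.wildcard_filters:
            if matches(topic_filter, topic):
                self.queues[topic_filter].append(message)

    def read(self, subscription, offset=0):
        # A recipient reads the queue named after its own subscription.
        return self.queues.get(subscription, [])[offset:]


store = QueueStore()
store.subscribe("/t/t1/+")
store.publish("/t/t1/t2", "m1")
store.publish("/t/t1/t3", "m2")
store.publish("/x/y", "m3")
```

A subscriber to "/t/t1/+" reads both m1 and m2 from a single wildcard queue, while subscribers to concrete topics read the per-topic queues.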

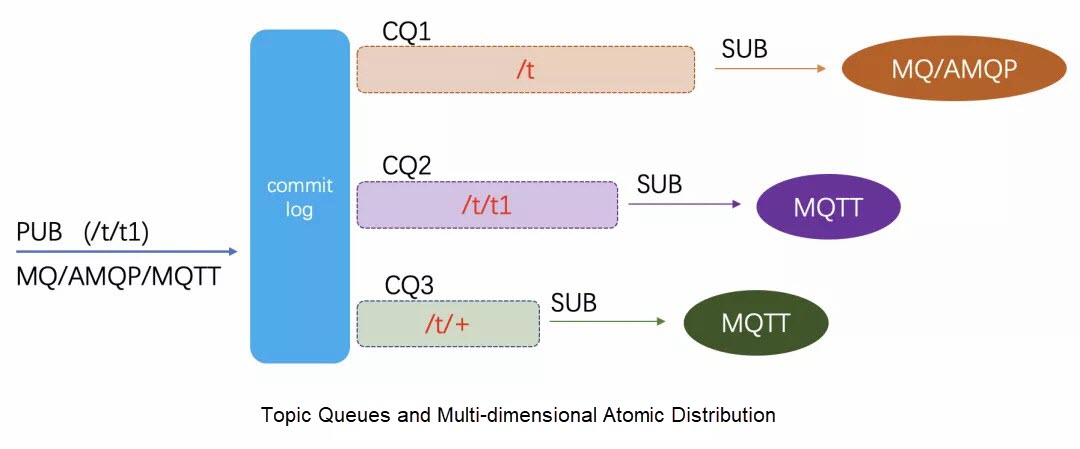

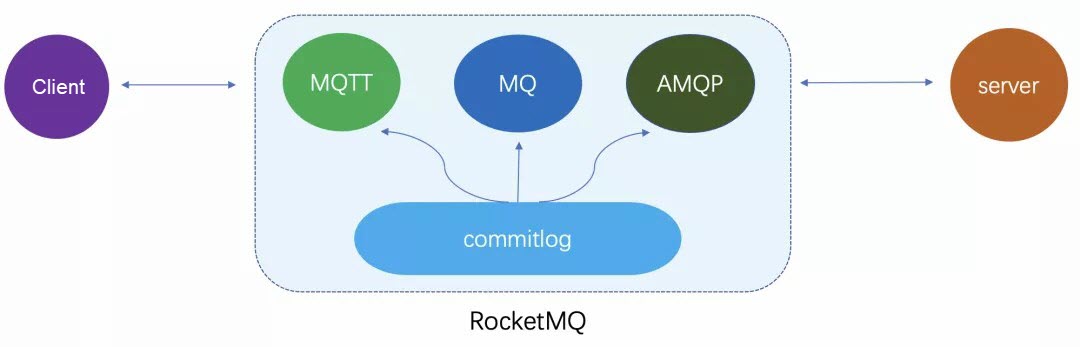

The figure above shows our queue storage model. Messages can come from various access scenarios (such as MQ/AMQP on the server side and MQTT on the client side), but only one copy is written to the commitlog. Queue indexes (ConsumeQueue) are then dispatched for the different scenarios. For example, in the server scenario (MQ/AMQP), traditional server-side consumers consume from the first-level Topic queue, while clients consume messages based on MQTT multi-level topics and wildcard subscriptions.

Such a queue model can support both server and client scenarios for access and message sending and receiving, achieving the goal of integration.

After introducing the underlying queue storage model, let's describe in detail how matching lookup and reliable reach are done.

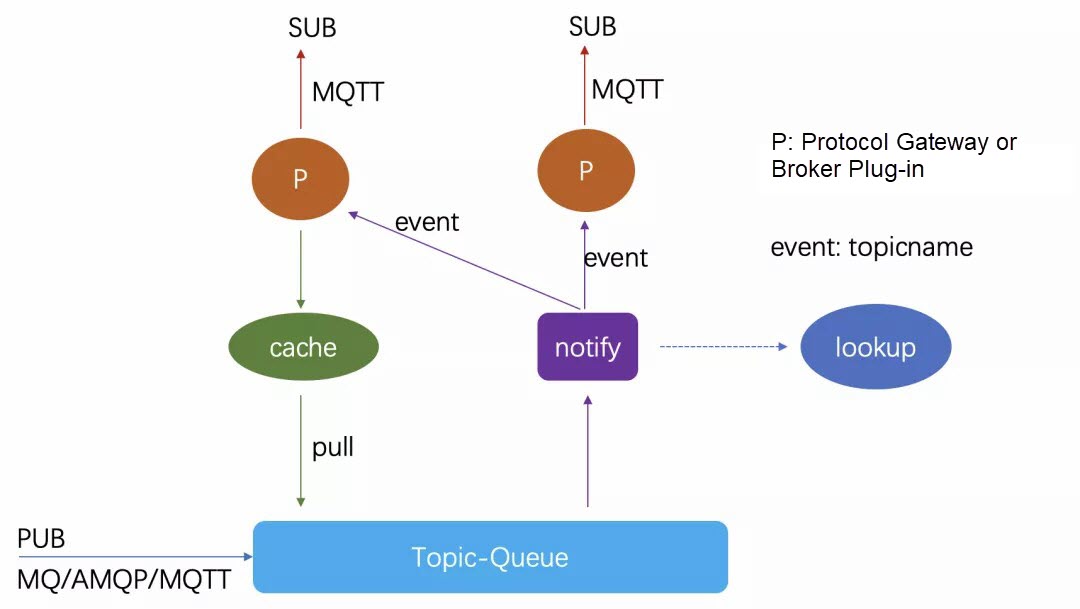

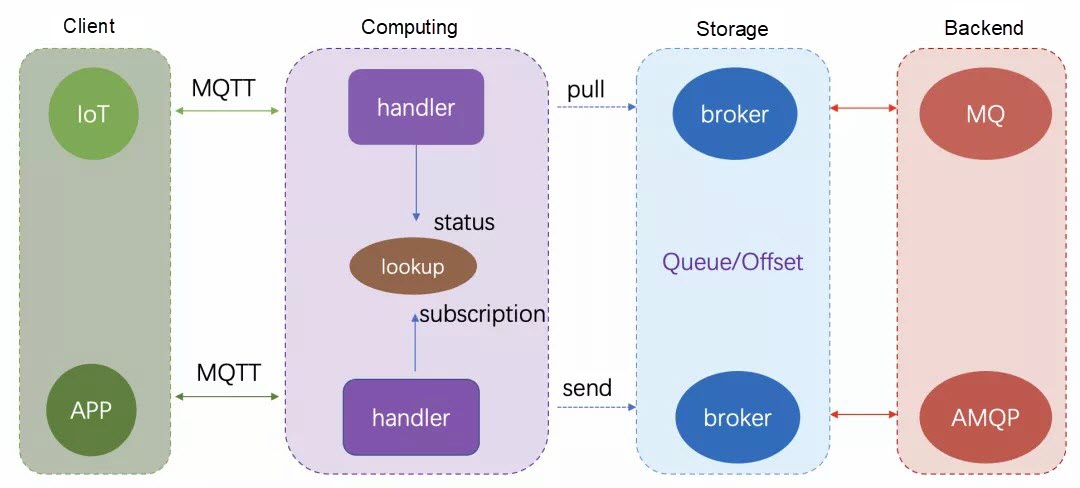

The preceding figure shows a push-pull model. The P node in the figure is a protocol gateway or broker plug-in, and clients connect to this gateway node through the MQTT protocol. Messages can be sent from various scenarios (MQ/AMQP/MQTT). After a message is stored in the topic queue, a notify module senses the arrival of the new message in real time, generates a message event (the topic name of the message), and pushes the event to the gateway nodes. Each gateway node matches the event against the subscriptions of the clients connected to it to find the matching clients. It then issues a pull request to read the message from the storage layer and pushes the message to those clients.

The question is how the notify module knows which gateway nodes hold clients interested in a message. This is the key matching problem. Generally, there are two approaches: 1) simple event broadcast; 2) centralized storage of online subscription relationships (the lookup module in the figure), followed by a matching search and targeted push. The event broadcast mechanism appears to have a scalability problem, but its performance is not bad in practice: the data pushed is only the small Topic name, and events for the same Topic can be merged into a single event, so this is the approach we use by default in production. Centralized storage of online subscription relationships, for example in RDS or Redis, is also common practice. However, it is difficult to keep that data consistent in real time, and the matching search adds to the response time (RT) of the whole real-time message path.

In the push-pull process, reliability and real-time performance are achieved as follows. The gateway node is first notified in real time through the event notification mechanism. It then fetches messages through the Pull mechanism and pushes them to the client. The Pull + Offset mechanism, which is the traditional RocketMQ model, ensures message reliability. The client passively accepts pushes from the gateway node, which keeps the client lightweight. Real-time performance is guaranteed by the new-message event notification mechanism.
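
The flow described above (event arrives, gateway matches, gateway pulls by offset, gateway pushes) can be sketched as follows. The `Gateway` class, the dictionary standing in for broker topic queues, and exact-topic matching are all simplifying assumptions. For brevity the sketch advances the offset right after pushing; it does not model waiting for the client's ACK.

```python
class Gateway:
    """Hypothetical gateway node: matches events locally, pulls, then pushes."""

    def __init__(self, topic_queues):
        self.topic_queues = topic_queues   # topic -> list of messages (stand-in for the broker)
        self.subscriptions = {}            # client_id -> set of topics
        self.offsets = {}                  # (client_id, topic) -> next offset to pull
        self.pushed = []                   # (client_id, topic, message) records

    def subscribe(self, client_id, topic):
        self.subscriptions.setdefault(client_id, set()).add(topic)
        # Start at the current end of the queue so only new messages are pushed.
        self.offsets[(client_id, topic)] = len(self.topic_queues.get(topic, []))

    def on_event(self, topic):
        """Handle a new-message event that carries only the topic name."""
        for client_id, topics in self.subscriptions.items():
            if topic not in topics:
                continue  # local matching against connected clients
            offset = self.offsets[(client_id, topic)]
            batch = self.topic_queues.get(topic, [])[offset:]  # pull by offset
            for message in batch:
                self.pushed.append((client_id, topic, message))  # push to client
            self.offsets[(client_id, topic)] = offset + len(batch)


queues = {"/t/t1": []}
gateway = Gateway(queues)
gateway.subscribe("client-1", "/t/t1")
gateway.subscribe("client-2", "/other")
queues["/t/t1"].append("m1")
gateway.on_event("/t/t1")
gateway.on_event("/t/t1")  # a duplicate or merged event pulls nothing new
```

Because the pull is driven by the stored offset, a repeated event for the same topic is harmless, which is also what allows events for one topic to be merged.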

The figure above also contains a Cache module that caches message queues. In scenarios with a large broadcast ratio, issuing a queue pull request for every client would put heavy read pressure on the broker. Since every request reads the same topic queue, a local queue cache can be reused instead.
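
A minimal sketch of the cache idea, assuming a hypothetical `pull_from_broker(topic, offset, count)` function: the first read of a given queue range goes to the broker, and all later reads of the same range are served from gateway memory. Eviction and invalidation are deliberately left out.

```python
class CachedQueueReader:
    """Serves repeated reads of the same queue range from a local cache."""

    def __init__(self, pull_from_broker):
        self.pull_from_broker = pull_from_broker  # callable(topic, offset, count)
        self.cache = {}                           # (topic, offset, count) -> messages
        self.broker_reads = 0

    def read(self, topic, offset, count):
        key = (topic, offset, count)
        if key not in self.cache:
            # Only the first reader of this range reaches the broker.
            self.cache[key] = self.pull_from_broker(topic, offset, count)
            self.broker_reads += 1
        return self.cache[key]


broker_queue = ["m0", "m1", "m2", "m3"]
reader = CachedQueueReader(lambda topic, offset, count: broker_queue[offset:offset + count])

# 1,000 clients subscribed to the same topic all need the same two messages.
results = [reader.read("/live/room-1", 1, 2) for _ in range(1000)]
```

In this example the broker serves one read instead of a thousand.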

The push-pull model above uses a new-message event notification mechanism to solve the real-time reach problem. When events are pushed to the gateways, a matching search is required. Although a simple event broadcast mechanism meets certain performance requirements, it is still a broadcast model and hits performance bottlenecks when a large number of gateway nodes are connected. In addition, client scenarios have many state query requirements, such as looking up online status and kicking connections, which need a KV lookup component. We call it "lookup".

We could use external KV storage such as Redis, but we cannot assume that a reliable external storage service is available in the user's delivery environment, especially in the special environment of Apsara Stack.

The lookup component is a KV query service. It can be understood as a distributed in-memory KV store, but it is at least one level easier to implement than a general distributed KV store. Let's recall the basic elements of a distributed KV store:

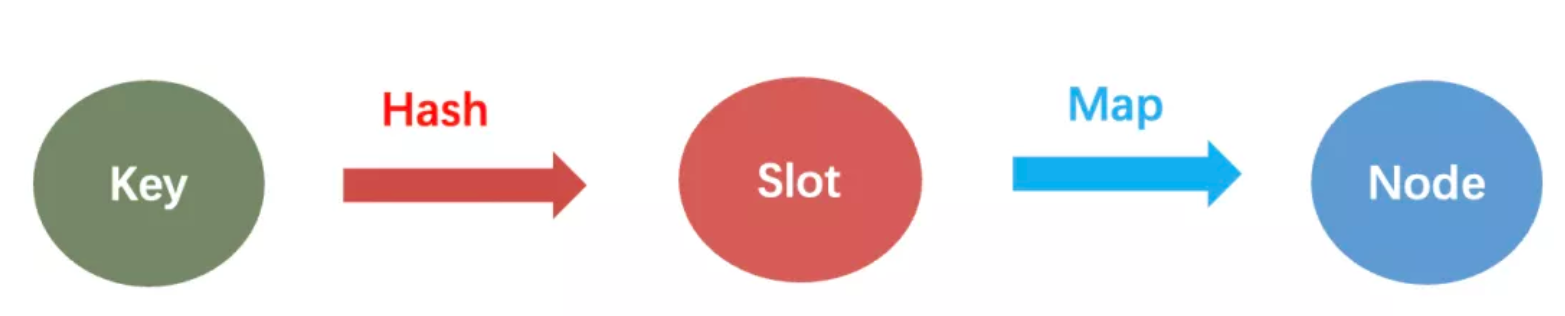

As shown in the preceding figure, in a distributed KV read or write, the key is hashed to a logical slot, and the slot is resolved to a specific node through a mapping table. The hash step is generally a fixed modulo, and the mapping table is either configured centrally or maintained with a consistency protocol. Nodes are scaled in or out by adjusting the mapping table.
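
The key-to-slot-to-node path can be sketched in a few lines. The slot count, the choice of CRC32 as the hash, and the node names are all assumptions made for illustration.

```python
import zlib

SLOT_COUNT = 16  # fixed number of logical slots (illustrative value)


def slot_of(key: str) -> int:
    """Hash a key to a logical slot with a fixed modulo."""
    return zlib.crc32(key.encode("utf-8")) % SLOT_COUNT


def node_of(key: str, slot_table: dict) -> str:
    """Resolve a key to a node through the slot mapping table."""
    return slot_table[slot_of(key)]


# Mapping table: every slot is assigned to one of two nodes.
slot_table = {slot: f"node-{slot % 2}" for slot in range(SLOT_COUNT)}
before = node_of("client-42", slot_table)

# Scaling out: only the mapping table changes, the hash function stays fixed.
slot_table[slot_of("client-42")] = "node-2"
after = node_of("client-42", slot_table)
```

Because readers and writers both go through `slot_table`, the data stays consistent only if they all see the same version of that table.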

A distributed KV implementation has three basic key points:

1) Mapping Table Consistency

Both reads and writes locate nodes through the mapping table in the preceding figure. If the rules are inconsistent, the data becomes corrupted. The mapping configuration itself can be kept strongly consistent through centralized storage or through ZooKeeper (zk) or Raft. However, there is no guarantee that all nodes switch from the old configuration to the new one at the same moment, so an inconsistency window remains.

2) Multiple Copies

Data is synchronously replicated to multiple backup nodes through a consistency protocol, for disaster recovery or to serve reads from multiple replicas.

3) Load Distribution

Mapping slots to nodes is an allocation problem, and the allocation must keep the load balanced across nodes. For example, slot data may need to be migrated when scaling.

We mainly store and query online state data. If a storage node goes down and loses its data, the data can be rebuilt in real time, because it all describes clients that are currently online. We therefore do not need to consider multiple copies or slot data migration during scaling. We can simply discard the data and rebuild it, and only need to ensure one key point: the consistency of the mapping table. We also have a fallback mechanism: broadcast. When part of the data is unreliable or unavailable, the system degrades to the broadcast mechanism.
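
The fallback to broadcast can be sketched as below. The function `gateways_to_notify`, the exception `LookupUnavailable`, and the shape of the lookup call are hypothetical: if the lookup component cannot give a trustworthy answer, the event is sent to every gateway.

```python
class LookupUnavailable(Exception):
    """Raised when the lookup data for a topic is missing or cannot be trusted."""


def gateways_to_notify(topic, lookup, all_gateways):
    """Return the gateways that should receive the event for `topic`."""
    try:
        return set(lookup(topic))   # targeted push based on lookup data
    except LookupUnavailable:
        return set(all_gateways)    # degrade to broadcast


ALL_GATEWAYS = ["gw-1", "gw-2", "gw-3"]


def healthy_lookup(topic):
    return ["gw-2"]


def broken_lookup(topic):
    raise LookupUnavailable(topic)


targeted = gateways_to_notify("/t/t1", healthy_lookup, ALL_GATEWAYS)
fallback = gateways_to_notify("/t/t1", broken_lookup, ALL_GATEWAYS)
```

Broadcast costs more but never misses a subscriber, which is why lost lookup data can simply be discarded and rebuilt.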

Based on the preceding theoretical and model analysis, we now consider which architecture best supports the goal of integration. We describe it in terms of layering, extension, and delivery.

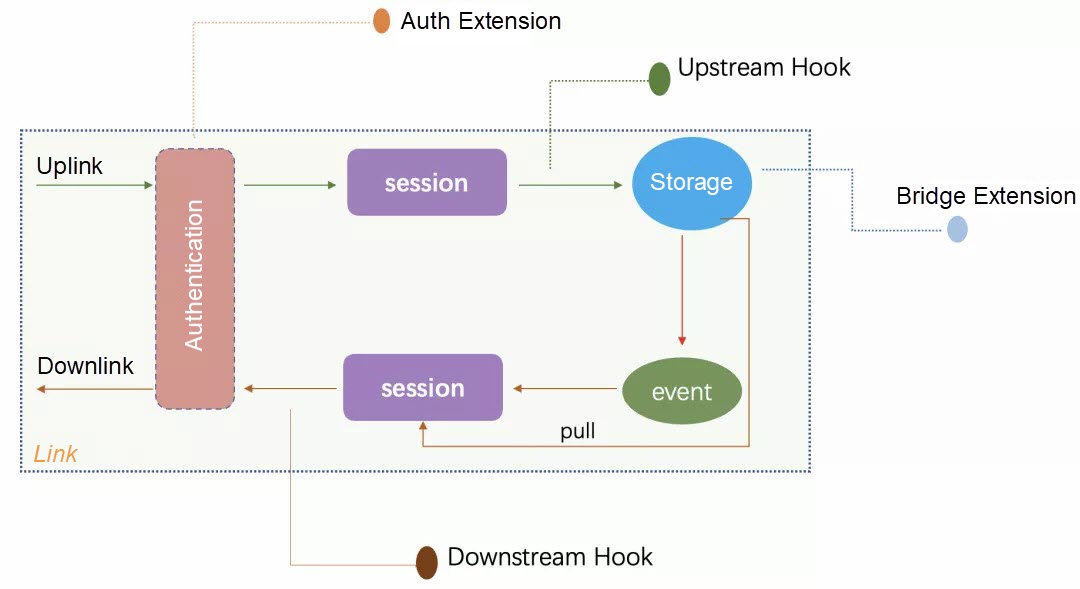

Our goal is a self-contained, integrated system based on RocketMQ, but we do not want scenario-specific logic to intrude into the broker. We therefore abstracted a protocol computing layer, which can be a gateway or a broker plug-in. The broker focuses on solving queue problems and on adapting or transforming queue storage to meet the computing requirements above. The protocol computing layer is responsible for protocol access and must support pluggable deployment.

Messaging products are PaaS products that are closely tied to the SaaS business built on top of them. To meet diverse business needs, we identified the key core links and added extension points along them. One example is authentication logic, which is the most business-specific logic and varies widely between businesses. Another is the Bridge extension, which can connect client status and message data to external ecosystems (products).

A good architecture design must consider the final question of delivery. Today the product must be delivered into a variety of environments, such as public cloud, private cloud, and even open source, which is challenging. One of the biggest challenges is external dependencies. If the product relies heavily on an external system or product, the entire delivery becomes uncertain.

To cope with these complicated delivery scenarios, on the one hand, we design extension interfaces that can be adapted to the conditions of the delivery environment. On the other hand, we provide default internal implementations for as many modules as possible, such as the lookup component. Reinventing the wheel is a last resort. This may be the biggest difference between building products and building platforms.

The entire protocol model and architecture were described earlier, and further transformation and adaptation are needed in the Broker storage layer. We hope that a unified storage kernel based on RocketMQ can support the sending and receiving of messages between clients and servers to achieve the goal of integration.

As mentioned earlier, a major difference between client and server message scenarios is that the client scenario needs a queue per client to ensure reliable reach, while the server scenario can use a centralized queue because a message can be consumed by any machine. Client messages, however, must be explicitly and reliably pushed to specific clients. Queues at the client dimension mean that the number of queues is orders of magnitude larger than the number of topic queues in a traditional RocketMQ server scenario.

In addition, in the queue model described earlier, messages are also stored in topic queues. MQTT topics are flexible multi-level topics that clients can create at will, unlike server-side topics, which are heavyweight, managed metadata. This also means that the number of topic queues is very large.

In a message queue such as Kafka, each topic is stored in separate files. As the number of topics and message files grows, sequential writes degenerate into random writes and performance drops. RocketMQ improves on this design: it uses a Commitlog file to store all message content and CQ index files to represent the message queue of each topic. Because CQ index data is small, a growing number of files has much less impact on IO, and the number of queues can reach 100,000. In this client queue scenario, however, 100,000 queues is still too few. We want to raise the number of queues by another order of magnitude, to the millions, so we introduced the RocksDB engine to store the dispatched CQ indexes.

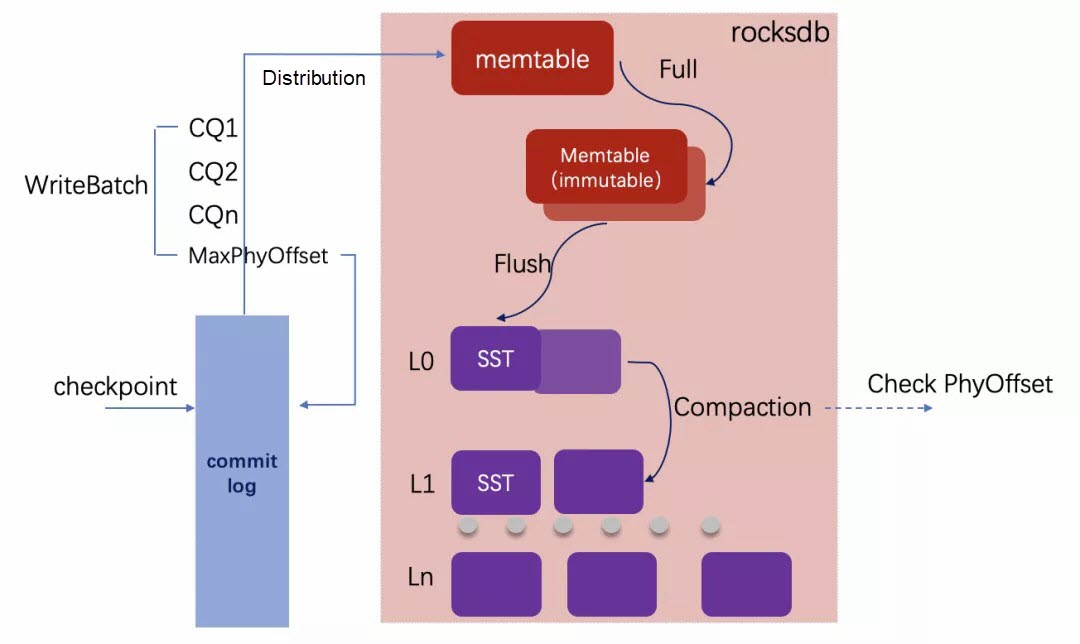

RocksDB is a widely used single-node KV storage engine with high-performance sequential writes. Since the commitlog already provides sequential, durable storage of the message stream, we can disable the WAL of the RocksDB engine and store only CQ indexes in RocksDB. When dispatching, we use RocksDB's atomic WriteBatch and include the current MaxPhyOffset in the batch. Because RocksDB guarantees that the batch is stored atomically, this MaxPhyOffset can serve as the checkpoint for recovery. We also provide a custom Compaction implementation that checks PhyOffset to clean up deleted dirty data.
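
The checkpoint idea can be sketched without RocksDB itself. In the model below, a plain dictionary stands in for the KV engine and a single `dict.update` call stands in for one atomic write batch. The class `CqIndexStore`, the key layout, and the checkpoint key name are hypothetical and do not reflect the real on-disk format.

```python
class CqIndexStore:
    """Toy model: CQ index entries and the checkpoint are written together."""

    CHECKPOINT_KEY = "__max_phy_offset__"

    def __init__(self):
        self.kv = {}  # stand-in for the KV engine

    def dispatch(self, entries, max_phy_offset):
        """Write index entries plus the checkpoint as one batch.

        entries: iterable of (queue_name, queue_offset, phy_offset).
        """
        batch = {(queue, q_off): phy_off for queue, q_off, phy_off in entries}
        # The checkpoint travels in the same batch as the entries it covers,
        # so it can never be ahead of or behind the stored indexes.
        batch[self.CHECKPOINT_KEY] = max_phy_offset
        self.kv.update(batch)  # stand-in for one atomic write batch

    def lookup(self, queue, queue_offset):
        """Return the commitlog position of a queue entry, or None."""
        return self.kv.get((queue, queue_offset))

    def recover_from(self):
        """Commitlog position from which dispatch must be replayed."""
        return self.kv.get(self.CHECKPOINT_KEY, 0)


index = CqIndexStore()
# One message at commitlog position 100 is indexed in its topic queue and in
# a matching wildcard queue; the commitlog has been dispatched up to 164.
index.dispatch([("/t/t1/t2", 0, 100), ("/t/t1/+", 0, 100)], max_phy_offset=164)
```

After a crash, anything in the commitlog beyond `recover_from()` is dispatched again, which is why the engine's own write-ahead log is not needed in this design.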

Topics in RocketMQ are important metadata that must be created in advance. They are registered on namesrv and discovered through Topicroute. As mentioned earlier, topics in client scenarios are flexible and can be created at will, which makes them difficult to manage with the existing RocketMQ topic logic. We therefore defined a lightweight topic specifically for the client scenario. It does not need to be registered on namesrv; the upper protocol logic layer manages it, and the broker is only responsible for storage.

This article first introduced the background of integrating client (end) and cloud message scenarios, then analyzed the characteristics of client message scenarios and the model that supports them, and finally described the architecture and the storage kernel. We expect a unified RocketMQ-based kernel to support message access for both clients and servers and to bring the value of integration to customers, such as lower storage costs and freedom from the consistency challenges of synchronizing data between systems.
