With the diversification and rapid iteration of application scenarios, business systems often utilize semi-structured data types for storage and analysis. PolarDB, developed by Alibaba Cloud as a new generation cloud-native HTAP database, introduces In Memory Column Index (IMCI) which provides complete virtual column and columnar JSON functions. It enables fast processing of big data, supports multiple data types (including structured and semi-structured data), and offers efficient data analysis, querying, and stream computing capabilities. It is suitable for application scenarios such as data analysis, data warehousing, and extended stream computing.

To compute and analyze large volumes of semi-structured data, this article takes JSON as an example and describes traditional database and data warehouse solutions. It then delves into the technical aspects of PolarDB's IMCI columnar JSON, including virtual columns, second-level column addition and deletion, and table column expansion. Finally, it provides real-time analysis and extended stream computing solutions for PolarDB's IMCI columnar JSON.

The use of JSON type in business systems is primarily due to the flexibility of semi-structured data, often requiring efficient analysis of such data. In this regard, flexibility and high performance become key indicators for evaluating solutions for massive semi-structured data analysis.

Traditional relational databases like MySQL, PostgreSQL, and ClickHouse often encode raw JSON-formatted data into binary format and store it in JSON columns of tables when processing JSON data. Real-time parsing and calculations of entire JSON column data are done using JSON functions during queries.

JSON is a semi-structured data type that allows for the addition, deletion, and modification of its attributes as needed. When business requirements change, the system can dynamically add, delete, and modify relevant attributes for new JSON column data without modifying the table structure, effectively reducing maintenance and management costs associated with table structures. However, during business queries, complete JSON column data needs to be read and parsed in real-time, resulting in significant IO resource consumption and potential issues with repetitive parsing and calculations. Additionally, it is not possible to create or use secondary indexes for specific fields within JSON column data.

select product.item->"$.name"

from product, purchase

where product.id = purchase.item->"$.id"

group by product.item->"$.name";When executing the aforementioned SQL statements in a traditional database, if the NestedLoopJoin operator is used, each row of each product table needs to repeatedly read the entire item column data of the purchase table, parse the JSON column data repeatedly, and extract the specified fields. As a result, the query efficiency is low.

The processing flow of a data warehouse typically includes the following steps:

The production data from the business system is usually processed by intermediate ETL tasks before being imported into the data warehouse.

When processing JSON-formatted data, data warehouses often pre-parse the data in ETL jobs to improve query performance. They calculate the required values and insert them as separate columns in the table, forming a large wide table after processing certain attributes of the JSON-formatted data as needed. This eliminates the need to read and parse the entire JSON column data. Instead, the corresponding regular columns can be directly read, saving a significant amount of I/O resources. Additionally, indexes can be created and used for these columns to effectively improve query performance.

However, when the business requirements change and attributes of the JSON-formatted data are added or deleted as needed, the data warehouse needs to modify the intermediate ETL jobs and table structure to adapt to the upstream production data. This may involve republishing ETL jobs, adding or deleting DDL columns, and maintaining the logic of ETL jobs and business table structure simultaneously. Frequent publishing of ETL jobs can impact upstream data consumption and downstream result warehousing, and modifying the structure of large tables without INSTANT DDL support can be costly and potentially disrupt normal query operations.

Overall, although traditional data warehouse solutions can provide efficient queries, they lack flexibility and have high maintenance costs.

When dealing with analysis scenarios of massive semi-structured data, traditional databases and data warehouses cannot meet both the requirements of high query performance and flexible architecture. Therefore, the industry urgently needs a new solution: PolarDB IMCI.

To address this, PolarDB IMCI introduces a series of related features such as columnar JSON, virtual columns, second-level column addition and deletion, and table column number extension. The following section describes the technical details of each feature:

Semi-structured data is a form of data that lies between structured and unstructured data. It has partially structured features but lacks a clear data structure like structured data. Semi-structured data can be described and organized using tags, marks, metadata, and other methods, and its structure and organization methods can be dynamically adjusted as the content of the data changes. Semi-structured data often exists in scenarios such as web pages, XML, JSON, and NoSQL databases, and its flexibility and ease of extensibility make it an essential part of the big data era.

PolarDB for MySQL is a relational database management system designed for storing structured data. However, it also supports storing and querying semi-structured data, such as XML and JSON-formatted data. PolarDB IMCI fully supports JSON-formatted data types and introduces columnar functions for JSON. It uses the binary JSON format to store semi-structured data. You can use columnar JSON functions to parse, query, modify, and delete JSON documents. Based on the description above, we can conclude that PolarDB IMCI is fully compatible with MySQL syntax.

PolarDB IMCI uses a simplified binary method to store JSON-formatted columnar storage data and utilizes the RapidJSON library to parse the JSON-formatted data. During processing, PolarDB IMCI reads data as needed and applies columnar storage compression technology to effectively reduce I/O. Additionally, PolarDB IMCI leverages SIMD, vectorization, and parallelization techniques to accelerate operations.

Actual test data is used as an example to demonstrate the usage of JSON in columnar storage and the performance comparison between row-oriented and column-oriented storage.

1. Create a table and add JSON columns and their column indexes.

create table produce (

id bigint(20) NOT NULL,

attributes json DEFAULT NULL

) comment='columnar=1';2. Use columnar JSON functions to query.

select count(*)

from produce

where attributes->"$.delivery.width" > 10 and attributes->"$.delivery.height" > 10 and attributes->"$.delivery.weight" > 10;Column-oriented storage execution plan:

Project | Exprs: temp_table1.COUNT(0)

HashGroupby | OutputTable(1): temp_table1 | Grouping: None | Output Grouping: None | Aggrs: COUNT(0)

CTableScan | InputTable(0): produce | Pred: ((JSON_EXTRACT(produce.attributes, "$.delivery.width") > "10(json)") AND (JSON_EXTRACT(produce.attributes, "$.delivery.height") > "10(json)") AND (JSON_EXTRACT(produce.attributes, "$.delivery.weight") > "10(json)"))The following table lists the column-oriented and row-oriented storage time of PolarDB when there are tens of millions of produce tables:

| Row-oriented storage | IMCI (32 Cores) | |

| SQL duration | 9.29sec | 0.14sec |

Test experiments show that PolarDB IMCI can efficiently analyze JSON-formatted data, which is two orders of magnitude faster than IMCI. Data sets and query modes are different. Therefore, the performance benefits of online services may vary based on the actual situation.

A virtual column is a special type of column whose values are not stored by insertion or update, but are dynamically calculated, merged, or filtered based on the values of other columns in the table. Virtual columns can be used for queries and indexes, but cannot be directly modified or deleted. Virtual columns provide a way to quickly access and process data without recalculating it for each query, so they are often used to optimize queries and simplify operations.

PolarDB IMCI can implement complete virtual column functions and supports two types of generated columns: virtual generated column (default) and stored generated column. The virtual generated column persistently stores the calculated values of the generated column in the column-oriented storage but not in the row-oriented storage. The feature performs real-time computing each time the row-oriented storage is read. The stored generated column persistently stores the calculated values of the generated column in the row-oriented storage and column-oriented storage, but consumes more disk space. In the PolarDB IMCI ecosystem, we recommend that you use the default virtual generated column to save disk space and provide high column-oriented storage performance.

Virtual column syntax:

col_name data_type [GENERATED ALWAYS] AS (expr)

[VIRTUAL | STORED] [NOT NULL | NULL]

[UNIQUE [KEY]] [[PRIMARY] KEY]

[COMMENT 'string']The actual test data is used as an example to show the usage of virtual columns in column-oriented storage and the comparison between the row-oriented and column-oriented storage performance:

1. Create a table and add virtual columns and their column indexes.

create table produce (

id bigint(20) NOT NULL,

attributes json DEFAULT NULL,

`delivery_volume` double GENERATED ALWAYS AS (((json_extract(`attributes`,'$.delivery.width') * json_extract(`attributes`,'$.delivery.height')) * json_extract(`attributes`,'$.delivery.weight'))) VIRTUAL

) comment='columnar=1';2. Use regular columns and virtual columns to query.

(1) Regular column query

select count(*)

from produce

where (attributes->"$.delivery.width" * attributes->"$.delivery.height" * attributes->"$.delivery.weight") > 1000;Column-oriented storage execution plan:

Project | Exprs: temp_table1.COUNT(0)

HashGroupby | OutputTable(1): temp_table1 | Grouping: None | Output Grouping: None | Aggrs: COUNT(0)

CTableScan | InputTable(0): produce | Pred: ((CAST JSON_EXTRACT(produce.attributes, "$.delivery.width")/JSON as DOUBLE(38, 31)) * (CAST JSON_EXTRACT(produce.attributes, "$.delivery.height")/JSON as DOUBLE(38, 31)) * (CAST JSON_EXTRACT(produce.attributes, "$.delivery.weight")/JSON as DOUBLE(38, 31)) > 1000.000000)The following table lists the column-oriented and row-oriented storage time of regular columns when there are tens of millions of produce tables:

| Row-oriented storage | IMCI (1 Core) | IMCI (32 Cores) | |

| SQL duration | 13.43sec | 5.72sec | 0.24sec |

(2) Virtual column query

select count(*)

from produce

where delivery_volume > 1000;Column-oriented storage execution plan:

Project | Exprs: temp_table1.COUNT(0)

HashGroupby | OutputTable(1): temp_table1 | Grouping: None | Output Grouping: None | Aggrs: COUNT(0)

CTableScan | InputTable(0): produce | Pred: (produce.delivery_volume > 1000.000000)The following table lists the column-oriented and row-oriented storage time of virtual columns when there are tens of millions of produce tables:

| Row-oriented storage | IMCI (1 Core) | IMCI (32 Cores) | |

| SQL duration | 14.30sec | 0.03sec | 0.01sec |

Test experiments show that the virtual column function of PolarDB IMCI can effectively improve the query performance. Data sets and query modes are different. Therefore, the performance benefits of online services may vary based on the actual situation.

In summary, the virtual column feature of PolarDB for MySQL is flexible and powerful. When you process semi-structured data, such as JSON data, you can use virtual columns to store irregular data as structured data. This eliminates the need for additional ETL processing logic and allows you to use traditional SQL query languages for query and analysis. Virtual columns help simplify complex calculations and queries and improve the flexibility of the architecture. It not only avoids redundancy of row-oriented storage data, but also creates IMCI on virtual columns and makes full use of the column-oriented storage pruner mechanism for filtering, which effectively improves query performance.

The previous section shows that PolarDB IMCI provides an efficient and flexible virtual column feature when processing semi-structured data. However, a virtual column is just a single column of a table. When you add or delete JSON columns to semi-structured data based on your business requirements, the business table needs to use DDL statements to add or delete columns to change the table structure. In this case, efficient column addition or deletion is an essential feature. Of course, you do not have to change the table structure every time the structure of semi-structured data changes. You can directly use JSON column-based functions to compute data in real time when the query frequency is not high. In most cases, real-time computing can meet the query performance, especially when the PolarDB IMCI JSON column-oriented storage version is implemented.

PolarDB IMCI provides the INSTANT DDL statement that allows you to add or delete virtual columns in column-oriented tables within seconds. This allows you to add or delete virtual columns instantly without blocking read and write operations and affecting normal query operations.

Second-level virtual column addition

alter table produce add column delivery_volume DOUBLE AS (attributes->"$.delivery.width" * attributes->"$.delivery.height" * attributes->"$.delivery.weight");Second-level virtual columns deletion:

alter table produce drop column delivery_volume;When semi-structured data-related attributes are converted to columns of a large wide table through virtual columns, the number of columns of a large wide table increases as the semi-structured data attributes increase. However, native MySQL has a limit on the maximum number of columns, which generally depends on the limit of the storage engine of the table. For example, the InnoDB storage engine supports a maximum number of 1017 columns (about 1KB columns).

For row-oriented storage, the maximum number of columns in a table meets most business requirements. When designing the table structure of relational databases, try to avoid using a large wide table as much as possible, because too many columns often increase the I/O and memory burden and thus affect the performance. For example, even if only a small number of column data is needed, the entire rows must be read, resulting in a large number of invalid I/O. Generally, methods such as splitting tables and using associated tables are considered to optimize the table structure. However, for column-oriented storage, large wide tables become a powerful query tool to avoid table association. Because column-oriented storage is stored by column, it has a better compression effect, and only the required column needs to be read without reading the entire columns, effectively reducing the amount of I/O.

When processing semi-structured data, PolarDB IMCI often converts some attributes in this data into separate virtual columns in the table as needed. If the number of attributes is too large, the number of columns of the table may exceed the current maximum limit. Therefore, PolarDB IMCI expands the maximum number of columns in InnoDB and column-oriented storage tables based on the native MySQL database. Currently, PolarDB IMCI supports a maximum number of 4089 columns (about 4KB columns).

For semi-structured data analysis, PolarDB IMCI implements technologies such as columnar JSON and column-oriented storage virtual columns. This section uses real-time event data from GitHub (July 2023) to verify the real-time data analysis capability of PolarDB IMCI JSON.

The JSON-formatted data of GitHub real-time events can be obtained from GH Archive. For example, use wget to download the data for each hour in July 2023. After downloading, parse and insert it into the github_events table.

Define the github_events table according to GitHub event types:

create table github_events (id bigint, type varchar(16), public bit, payload json, repo json, actor json, org json, created_at datetime);Select and rewrite two test SQL statements from the Everything You Always Wanted To Know About GitHub:

Query the most popular programming languages in a week:

SELECT

repo_language AS language,

count(*) AS total

FROM

github_events

WHERE

created_at >= "2023-07-25 00:00:00"

AND created_at <= "2023-07-31 23:59:59"

AND repo_language IS NOT NULL

GROUP BY

repo_language

ORDER BY

total DESC

LIMIT 10;Rank all followers of Linux warehouse by star number:

SELECT repo_name, count(*) AS stars

FROM github_events

WHERE (type = 'WatchEvent') AND (actor_login IN

(

SELECT actor_login

FROM github_events

WHERE (type = 'WatchEvent') AND (repo_name IN ('torvalds/linux')) AND created_at >= "2023-07-31 00:00:00" AND created_at <= "2023-07-31 23:59:59"

)) AND (repo_name NOT IN ('torvalds/linux')) AND created_at >= "2023-07-31 00:00:00" AND created_at <= "2023-07-31 23:59:59"

GROUP BY repo_name

ORDER BY stars DESC

LIMIT 10;Create a column index for the github_events table and add virtual columns such as actor_login, repo_name, and repo_language to the index based on the preceding SQL statement.

alter table github_events add column actor_login varchar(256) generated always as (json_unquote(json_extract(`actor`,'$.login'))) virtual, add column repo_name varchar(256) generated always as (json_unquote(json_extract(`repo`,'$.name'))) virtual, add column repo_language varchar(32) generated always as (json_unquote(json_extract(`payload`,'$.pull_request.base.repo.language'))) virtual, comment 'columnar=1';Configure the row-oriented storage cache to 500 GB and the column-oriented storage cache to 128 GB. The test results are as follows in the case of hot data:

| Query | Row-oriented storage | Column-oriented storage (1 Core) | Column-oriented storage (32 Cores) |

| SQL1 | 45.01sec | 0.79sec | 0.04sec |

| SQL2 | 8.57sec | 0.54sec | 0.07sec |

As shown in the preceding table, the real-time analysis performance of PolarDB IMCI columnar JSON is much higher than that of row-oriented storage. This allows you to analyze massive semi-structured data.

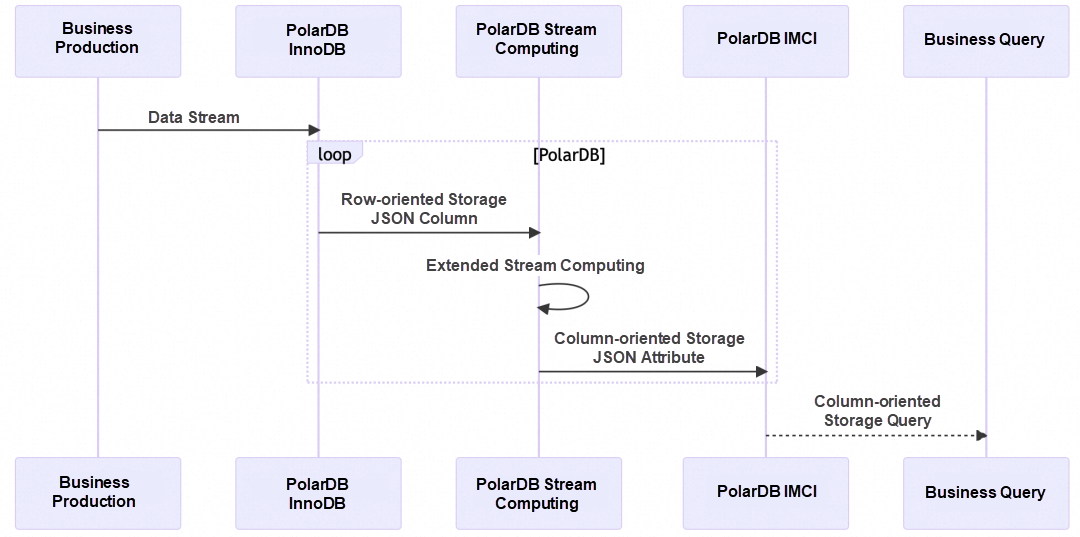

Based on the preceding features, such as columnar JSON, virtual columns, second-level column addition and deletion, and column number extension, PolarDB IMCI provides an automated solution for analyzing massive semi-structured data: extended stream computing.

Stream computing is a real-time data processing technique that focuses on continuous data streams for real-time computing and analysis. Extended stream computing, on the other hand, can be seen as a lightweight version of stream computing that emphasizes fast data stream processing and real-time computing results while minimizing resource usage and system complexity. Unlike traditional stream computing systems, extended stream computing places more emphasis on being lightweight, responsive, and highly automated.

PolarDB IMCI's extended stream computing feature allows you to define data stream processing logic using SQL statements and record it as virtual columns within the table structure. The extended stream computing framework then automatically calculates real-time results based on the business data streams and persists them in column-oriented storage tables. During queries, the IMCI efficiently retrieves and reads the resulting values. The entire process of extended stream computing is seamlessly integrated into PolarDB IMCI. You only need to use SQL DDL statements to define different data stream processing logic (virtual columns) and modify them when your business requirements change.

To analyze large volumes of semi-structured data, you can utilize JSON functions and the attributes of JSON-formatted columns to define virtual columns (i.e., data stream processing logic) based on your specific business needs. You can also add column indexes to these virtual columns. PolarDB IMCI will then automatically calculate and store the data streams in real-time, continuously updating the wide tables. When querying data, you can directly use the specified virtual columns, avoiding the need to re-read and parse complete JSON-formatted column data. This significantly improves query efficiency. Even when using JSON functions directly in queries, the PolarDB optimizer checks for matching virtual columns based on the JSON function and JSON columns. If a matching virtual column exists, the optimizer prioritizes its selection, enhancing query performance.

When business requirements change, such as adding, deleting, or modifying attributes of JSON-formatted column data, you can easily add or delete columns in seconds using INSTANT DDL statements. There is no need to maintain ETL job logic like traditional data warehouses. Additionally, the table structure can be instantly modified by adding or deleting columns without any impact on ongoing business operations. This feature allows for flexible adaptation to changing business requirements without incurring additional maintenance costs. If the query frequency for new requirements is not high, the table structure can remain unchanged. During querying, you can utilize the JSON function provided by the column-oriented storage version for real-time parsing, which meets most business needs.

In summary, PolarDB IMCI's extended stream computing feature combines the flexibility of traditional databases with the high performance of data warehouses when analyzing large volumes of semi-structured data.

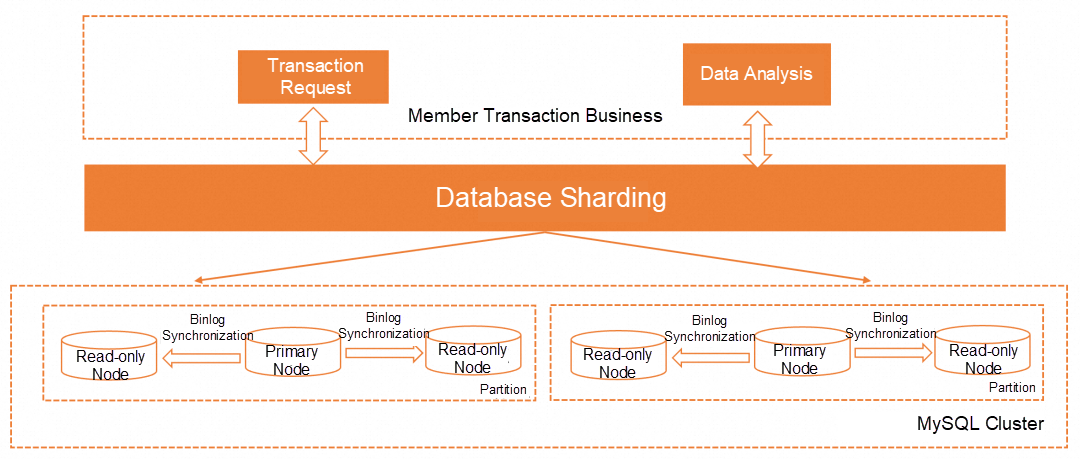

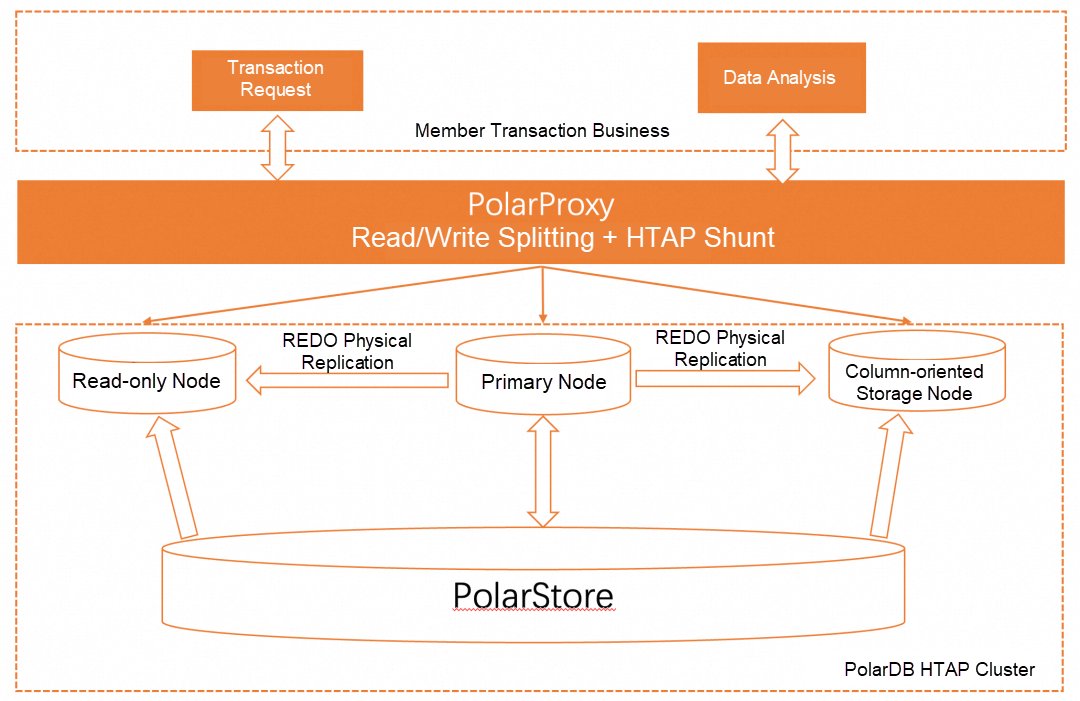

The membership-based short/long video platform relies on a large user base and generates revenue through member payments, advertising, and other sources. Members pay for access to high-definition content, ad-free viewing, and other exclusive benefits. In this business model, transaction data from members plays a crucial role and requires high real-time performance. It is used for business compensation and verification, real-time monitoring of order reversals, automatic replenishment and allocation of benefits, and handling delayed benefit arrivals. As the number of members increases, the daily transaction data also grows, leading to similar business challenges in various scenarios.

If the member transaction database system adopts a MySQL sharding scheme, with each database table based on a MySQL cluster for one-write multi-read deployment, the database cluster can be expanded to meet the needs of business growth by increasing the number of partitions and read-only nodes, and utilizing binary log synchronization between nodes. To facilitate faster iteration, the transaction business often utilizes the semi-structured JSON type. However, as the business continues to develop rapidly, the existing database architecture struggles to handle large tables and high-concurrency JSON queries. The only solution seems to be continuously increasing the number of databases and partitions. This results in complex operations and maintenance and compromised performance, leading to higher costs.

How can we simplify operations and maintenance, reduce costs, and improve efficiency? These problems pose significant challenges for the system to adapt to the fast-paced business growth.

To address these challenges, Alibaba Cloud offers the PolarDB HTAP integrated solution, which leverages real-time analysis on column-oriented storage technology. This solution enhances the analysis performance of large volumes of JSON-formatted data and effectively simplifies operations and maintenance.

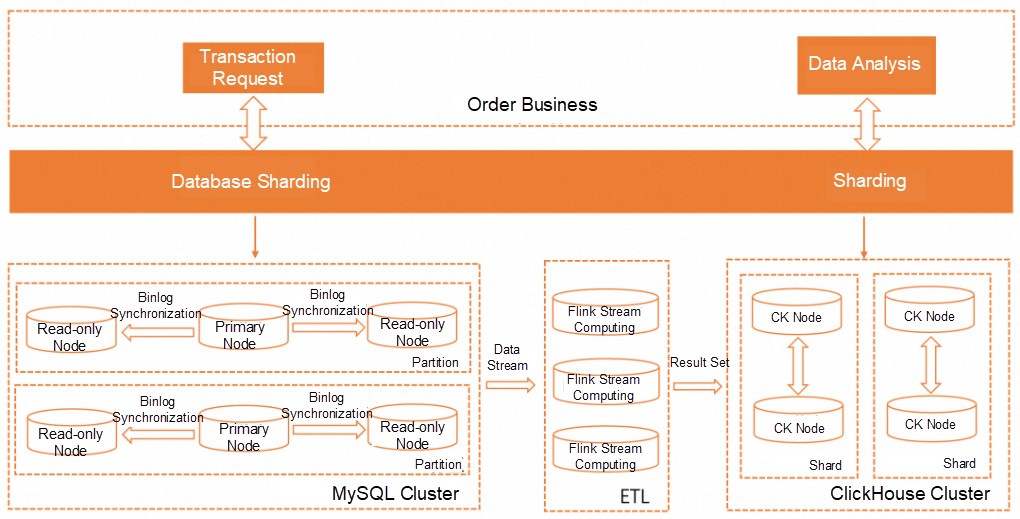

Fintech and e-commerce platform businesses share similarities in their database system requirements, particularly in their order systems which can be categorized into two main types: transaction processing and data analysis. In the transaction system, JSON is used to store various business attributes, while the analysis system requires the calculation and analysis of a large number of business attributes. The order business database system utilizes a MySQL cluster to handle transactional data, while the business analysis system relies on a ClickHouse cluster for the overall system architecture. By subscribing to a MySQL data stream and converting JSON-formatted data into structured data using Apache Flink, a wide table is constructed and then inserted into the ClickHouse cluster in real time. However, this complex database architecture may result in challenges such as data loss, limited real-time analysis, poor query performance, and complex operations and maintenance. For instance, modifying JSON attributes due to business requirement changes necessitates adjustments to the processing logic of Flink and the table structure of ClickHouse. Unfortunately, ClickHouse does not support instantaneous column addition or deletion, making modifications to a large table time-consuming and affecting query performance.

Businesses face essential challenges in terms of system stability, complexity of operations and maintenance, analysis performance, and cost as they experience rapid growth.

To address these challenges, Alibaba Cloud offers the PolarDB HTAP integrated solution, which enables real-time transaction processing and data analysis. This solution simplifies the business architecture, reduces operations and maintenance difficulties, and improves system stability. By utilizing PolarDB IMCI, semi-structured data is automatically transformed into structured data, extending stream computing and replacing Flink. The solution also supports second-level column addition and deletion, enhancing system agility, efficiency in operations and maintenance, and analysis performance.

ApsaraDB - August 4, 2023

ApsaraDB - January 4, 2024

ApsaraDB - March 26, 2024

ApsaraDB - August 7, 2023

ApsaraDB - August 8, 2023

ApsaraDB - November 18, 2025

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB