In the Generative AI (GenAI) era, Large Language Models (LLMs) are no longer confined to text. Multimodal models such as Qwen2.5-Omni bridge text, images, audio, and video, enabling AI to think, see, hear, and speak, much as humans do.

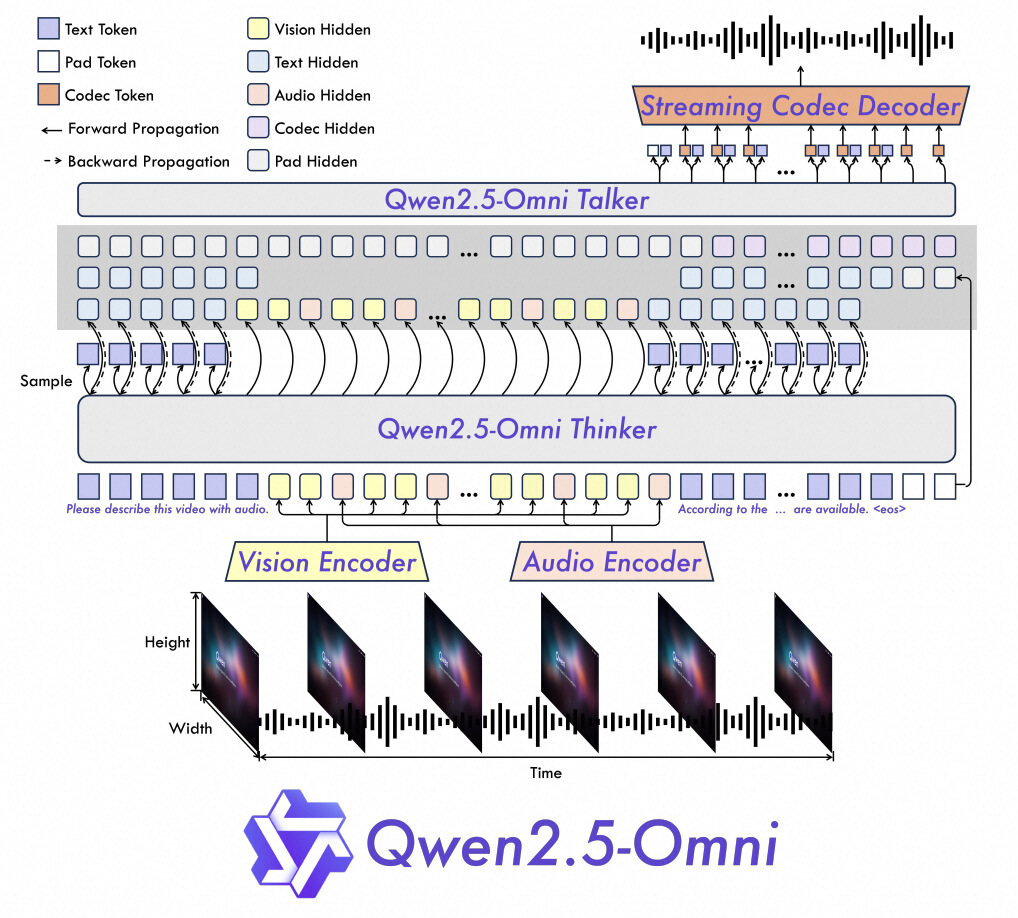

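1. TMRoPE Positional Encoding: Time-aligned Multimodal RoPE (TMRoPE) synchronizes the timestamps of video frames with the accompanying audio, so the model can relate what it sees to what it hears at the same moment.

2. Thinker-Talker Architecture: the Thinker is a language model that understands the inputs and generates text, while the Talker turns the Thinker's hidden representations into speech tokens, so text and speech are produced together.

3. Streaming Efficiency: audio and visual inputs are encoded in blocks and speech is decoded incrementally, reducing the delay before the first response arrives.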
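To make the time-alignment idea behind TMRoPE more concrete, here is a toy Python sketch. It assumes, based on our reading of the Qwen2.5-Omni technical report, that one temporal position ID corresponds to 40 ms of real time and that video and audio are interleaved in 2-second chunks with video first. The `Token` class, the function names, and the modality labels are hypothetical. This is not the model's implementation, which also assigns height and width IDs to image patches.

```python
from dataclasses import dataclass

# Assumption: one temporal position ID per 40 ms of real time
MS_PER_TEMPORAL_ID = 40
# Assumption: interleave in 2-second windows (video tokens, then audio tokens)
CHUNK_MS = 2000


@dataclass
class Token:
    modality: str       # "video" or "audio" (hypothetical labels)
    timestamp_ms: int   # when this frame occurs in the source media
    temporal_id: int = 0


def assign_temporal_ids(tokens: list[Token]) -> list[Token]:
    """Derive temporal IDs from real time, so audio and video frames that
    occur at the same moment get the same ID regardless of frame rate."""
    for tok in tokens:
        tok.temporal_id = tok.timestamp_ms // MS_PER_TEMPORAL_ID
    return tokens


def interleave_by_chunk(video: list[Token], audio: list[Token]) -> list[Token]:
    """Order tokens window by window: a window's video tokens, then its audio tokens."""
    all_tokens = assign_temporal_ids(video + audio)
    last_ms = max((t.timestamp_ms for t in all_tokens), default=0)
    ordered: list[Token] = []
    for start in range(0, last_ms + 1, CHUNK_MS):
        end = start + CHUNK_MS
        for modality in ("video", "audio"):
            ordered.extend(
                t for t in all_tokens
                if t.modality == modality and start <= t.timestamp_ms < end
            )
    return ordered


# 3 seconds of media: video sampled every 500 ms, audio frames every 40 ms
video = [Token("video", ms) for ms in range(0, 3000, 500)]
audio = [Token("audio", ms) for ms in range(0, 3000, 40)]
sequence = interleave_by_chunk(video, audio)
print([(t.modality, t.temporal_id) for t in sequence[:5]])
```

Because IDs come from timestamps rather than frame counts, the video frame at 1000 ms and the audio frame at 1000 ms share temporal ID 25 even though the two streams have very different frame rates.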

| Task | Benchmark | Qwen2.5-Omni | Qwen2.5-VL | GPT-4o-Mini | State-of-the-Art |
|---|---|---|---|---|---|
| Image→Text | MMMU (val) | 59.2 | 58.6 | 60.0 | 53.9 (Other) |
| Video→Text | Video-MME | 72.4 | 65.1 | 64.8 | 63.9 (Other) |
| Multimodal Reasoning | MMBench | 81.8 | N/A | 76.0 | 80.5 (Other) |
| Speech Generation | WER (lower is better) | 1.42% (Chinese) | N/A | N/A | 2.33% (English) |
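The speech-generation row in the table reports word error rate (WER): the minimum number of substitutions, deletions, and insertions needed to turn the recognized transcript into the reference, divided by the number of reference words. A minimal Python sketch of that computation follows. For Chinese, the same calculation is commonly applied to characters rather than whitespace-separated words, which is why the function takes token lists instead of strings.

```python
def word_error_rate(reference: list[str], hypothesis: list[str]) -> float:
    """Return (substitutions + deletions + insertions) / len(reference),
    using a Levenshtein edit-distance dynamic program over tokens."""
    if not reference:
        raise ValueError("reference must contain at least one token")
    # Row for an empty reference: reaching hypothesis[:j] takes j insertions
    prev = list(range(len(hypothesis) + 1))
    for i, ref_tok in enumerate(reference, start=1):
        # Column 0: turning reference[:i] into an empty hypothesis takes i deletions
        curr = [i] + [0] * len(hypothesis)
        for j, hyp_tok in enumerate(hypothesis, start=1):
            substitution = prev[j - 1] + (ref_tok != hyp_tok)  # adds 0 if tokens match
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(reference)


ref = "the cat sat on the mat".split()
hyp = "the cat sit on mat".split()
print(f"{word_error_rate(ref, hyp):.2%}")  # → 33.33% (1 substitution + 1 deletion over 6 words)
```

For a Chinese sentence, pass `list(text)` for both arguments to score at the character level.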

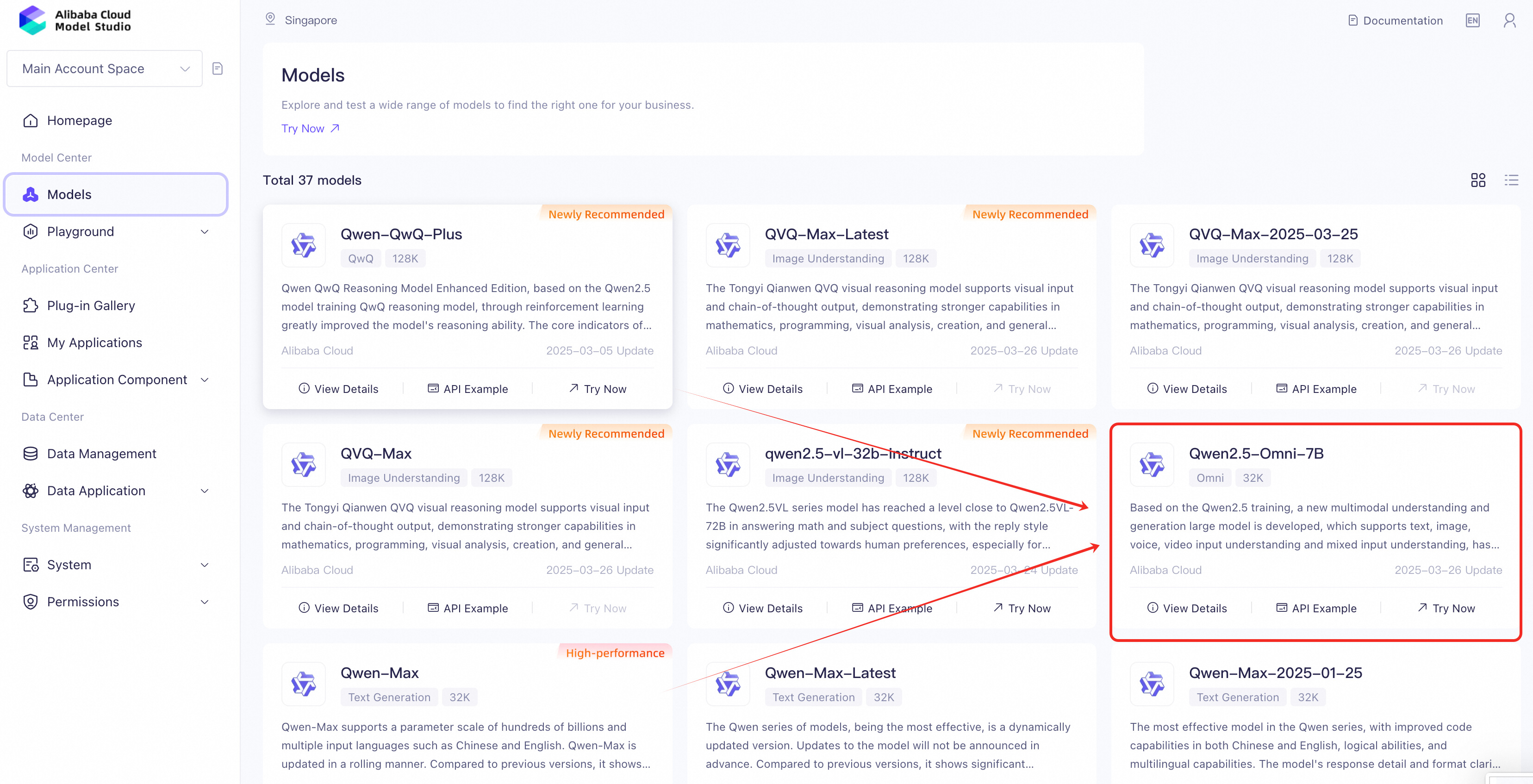

1. Go to Alibaba Cloud Model Studio (or the Model Studio introduction page).

2. Search for “Qwen2.5-Omni” and navigate to its page.

3. Authorize access to the model (free for basic usage).

Security-first setup:

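1. Create a virtual environment (recommended):

```bash
python -m venv qwen-env
source qwen-env/bin/activate  # Linux/macOS; on Windows: qwen-env\Scripts\activate
```

2. Install dependencies (`python-dotenv` reads the `.env` file created in step 3):

```bash
pip install openai python-dotenv
```

3. Store your API key securely. Create a `.env` file in your project directory, and keep it out of version control (for example, by listing it in `.gitignore`):

```text
DASHSCOPE_API_KEY=your_api_key_here
```

Use the OpenAI library to interact with Qwen2.5-Omni: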

```python
import os

from dotenv import load_dotenv  # pip install python-dotenv
from openai import OpenAI

# Load DASHSCOPE_API_KEY from the .env file into the process environment
load_dotenv()

client = OpenAI(
    api_key=os.getenv("DASHSCOPE_API_KEY"),
    base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
)

# Example: text + audio output
completion = client.chat.completions.create(
    model="qwen2.5-omni-7b",
    messages=[{"role": "user", "content": "Who are you?"}],
    modalities=["text", "audio"],  # Output formats to return (text and/or audio)
    audio={"voice": "Chelsie", "format": "wav"},  # Voice and format of the speech output
    stream=True,  # Enable real-time streaming
    stream_options={"include_usage": True},  # Append a final chunk with token usage
)

# Process streaming responses
for chunk in completion:
    if chunk.choices:
        # Each delta carries a fragment of the text and/or audio output
        print("Partial response:", chunk.choices[0].delta)
    else:
        # The final chunk has no choices; it only reports token usage
        print("Usage stats:", chunk.usage)
```

| Feature | Details |
|---|---|
| Input Type | Text, images, audio, video (via URLs/Base64) |
| Output Modality | Set the `modalities` parameter (e.g., `["text", "audio"]` for both outputs) |
| Streaming Support | Real-time results via `stream=True` |
| Security | API keys kept in environment variables (`.env` file) |
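The streaming loop in the example only prints each delta. To save the spoken reply, the audio fragments can be collected and written to a WAV file. This sketch assumes, following Model Studio's Qwen-Omni examples as we understand them, that each delta exposes an `audio` dict whose `"data"` field holds a slice of one Base64 string of 16-bit mono PCM at 24 kHz. Verify the encoding and sample rate against the current Model Studio documentation before relying on them. `collect_audio_b64` and `pcm_chunks_to_wav` are hypothetical helper names.

```python
import base64
import io
import wave

SAMPLE_RATE_HZ = 24_000  # assumption: check the model's documented output rate
SAMPLE_WIDTH_BYTES = 2   # assumption: 16-bit PCM samples
CHANNELS = 1             # assumption: mono


def collect_audio_b64(delta) -> str:
    """Return the Base64 audio fragment in a streamed delta, or "" if there is none.
    Assumes the fragment sits in delta.audio["data"] (see lead-in)."""
    audio = getattr(delta, "audio", None)
    if audio is None:
        return ""
    if isinstance(audio, dict):
        return audio.get("data") or ""
    return getattr(audio, "data", None) or ""


def pcm_chunks_to_wav(b64_chunks: list[str]) -> bytes:
    """Join Base64 fragments, decode them to raw PCM, and wrap it in a WAV container."""
    pcm = base64.b64decode("".join(b64_chunks))
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(CHANNELS)
        wav_file.setsampwidth(SAMPLE_WIDTH_BYTES)
        wav_file.setframerate(SAMPLE_RATE_HZ)
        wav_file.writeframes(pcm)
    return buffer.getvalue()


# Usage inside the streaming loop (sketch):
# fragments = []
# for chunk in completion:
#     if chunk.choices:
#         fragments.append(collect_audio_b64(chunk.choices[0].delta))
# with open("reply.wav", "wb") as f:
#     f.write(pcm_chunks_to_wav(fragments))
```

Decoding only once at the end keeps the sketch simple. A player that speaks while the reply is still streaming would instead feed each decoded fragment to an audio output device as it arrives.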

Use Case: Live event captioning with emotion detection.
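A minimal sketch of one captioning request sends a short audio clip and asks for a caption plus the speaker's apparent emotion. It assumes Model Studio's OpenAI-compatible mode accepts audio as an `input_audio` content part whose `data` field is a URL (or Base64 data) alongside a `format` field, and it requests text only via `modalities=["text"]`. The clip URL, prompt wording, and the `build_caption_request` and `caption_clip` helpers are hypothetical. A live pipeline would send successive short clips as they are recorded.

```python
import os

CAPTION_PROMPT = (
    "Transcribe this audio clip as a caption, then label the speaker's "
    "emotion in one word, formatted as: <caption> [emotion]"
)


def build_caption_request(audio_url: str, audio_format: str = "wav") -> dict:
    """Build chat.completions.create() keyword arguments for one audio clip."""
    return {
        "model": "qwen2.5-omni-7b",
        "messages": [
            {
                "role": "user",
                "content": [
                    # Assumed content-part shape for audio input (see lead-in)
                    {
                        "type": "input_audio",
                        "input_audio": {"data": audio_url, "format": audio_format},
                    },
                    {"type": "text", "text": CAPTION_PROMPT},
                ],
            }
        ],
        "modalities": ["text"],  # captions only, no speech output
        "stream": True,
        "stream_options": {"include_usage": True},
    }


def caption_clip(audio_url: str) -> str:
    """Send one clip and return the streamed caption text (needs DASHSCOPE_API_KEY)."""
    from openai import OpenAI  # imported here so the builder works without the SDK

    client = OpenAI(
        api_key=os.environ["DASHSCOPE_API_KEY"],
        base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
    )
    parts = []
    for chunk in client.chat.completions.create(**build_caption_request(audio_url)):
        if chunk.choices and chunk.choices[0].delta.content:
            parts.append(chunk.choices[0].delta.content)
    return "".join(parts)


if __name__ == "__main__" and os.getenv("DASHSCOPE_API_KEY"):
    print(caption_clip("https://example.com/clip.wav"))  # hypothetical clip URL
```

Separating the request builder from the network call keeps the payload easy to inspect and test offline.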

Use Case: Generate product descriptions from images and user reviews.
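A request for this use case can combine an image with text (such as user reviews) and ask for both text and speech. This sketch assumes the OpenAI-style `image_url` content part, which accepts either an HTTPS URL or a Base64 `data:` URL, and reuses the output settings from the earlier streaming example. The `build_product_request` and `image_to_data_url` helpers, the image URL, and the review text are hypothetical.

```python
import base64
import os


def image_to_data_url(path: str, mime_type: str = "image/jpeg") -> str:
    """Encode a local image as a Base64 data URL (an alternative to a public URL)."""
    with open(path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_product_request(image_url: str, reviews: list[str], language: str = "Spanish") -> dict:
    """Build chat.completions.create() kwargs: image + reviews in, text + audio out."""
    review_text = "\n".join(f"- {r}" for r in reviews)
    prompt = (
        f"Using this product image and these customer reviews, write a short "
        f"product description in {language}.\nReviews:\n{review_text}"
    )
    return {
        "model": "qwen2.5-omni-7b",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": prompt},
                ],
            }
        ],
        "modalities": ["text", "audio"],  # text description plus spoken version
        "audio": {"voice": "Chelsie", "format": "wav"},
        "stream": True,
        "stream_options": {"include_usage": True},
    }


if __name__ == "__main__" and os.getenv("DASHSCOPE_API_KEY"):
    from openai import OpenAI

    client = OpenAI(
        api_key=os.environ["DASHSCOPE_API_KEY"],
        base_url="https://dashscope-intl.aliyuncs.com/compatible-mode/v1",
    )
    request = build_product_request(
        "https://example.com/product.jpg",  # hypothetical image URL
        ["Great battery life", "Sturdy but a bit heavy"],  # hypothetical reviews
    )
    for chunk in client.chat.completions.create(**request):
        if chunk.choices:
            print(chunk.choices[0].delta)
```

For a local file, pass `image_to_data_url("product.jpg")` as the first argument instead of a public URL.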

Example: given a product image and the prompt "Write a 5-star review in Spanish", the model returns a text review plus an audio version of it in Spanish.

1. File Size Limits:

2. Optimize for Streaming: set `stream=True` for real-time outputs.
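With `stream=True`, text arrives as many small deltas. A minimal sketch of consuming such a stream prints each fragment as soon as it arrives, keeps the full text for later use, records how long the first text took to appear, and picks up the usage stats from the final chunk (present when `stream_options={"include_usage": True}` is set). `consume_stream` and `fake_chunk` are hypothetical helpers; the sketch relies only on the `choices`, `delta.content`, and `usage` attributes used in the earlier example, and the offline demo uses stand-in objects rather than real SDK chunks.

```python
import sys
import time
from types import SimpleNamespace


def consume_stream(chunks, out=sys.stdout):
    """Print text deltas as they arrive; return (full_text, usage, seconds_to_first_text)."""
    start = time.perf_counter()
    first_text_at = None
    parts = []
    usage = None
    for chunk in chunks:
        if chunk.choices:
            # Audio-only deltas carry no text, so content may be None
            text = getattr(chunk.choices[0].delta, "content", None)
            if text:
                if first_text_at is None:
                    first_text_at = time.perf_counter() - start
                parts.append(text)
                out.write(text)
                out.flush()
        else:
            # With include_usage, the last chunk has empty choices and carries usage
            usage = chunk.usage
    return "".join(parts), usage, first_text_at


def fake_chunk(text=None, usage=None):
    """Offline stand-in shaped like a streamed chunk (for the demo only)."""
    if usage is not None:
        return SimpleNamespace(choices=[], usage=usage)
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)], usage=None)


demo = [fake_chunk("Hello"), fake_chunk(", world"), fake_chunk(usage={"total_tokens": 12})]
full_text, usage, ttft = consume_stream(demo)
print()
print(full_text, usage)
```

With a real client, pass the object returned by `client.chat.completions.create(..., stream=True)` as `chunks`.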

As GenAI evolves, multimodal capabilities are set to reshape industries from healthcare to entertainment. By mastering Qwen2.5-Omni, you are preparing for the next era of human-AI collaboration.

Start experimenting today and join the revolution!

More Posts by Farruh