By Farruh Kushnazarov | December 2025

The world has watched in awe as artificial intelligence evolved from a niche academic pursuit into a transformative force reshaping industries. At the heart of this revolution are foundation models—colossal neural networks that have demonstrated breathtaking capabilities in language, reasoning, and creativity. However, the story of their success is inextricably linked to the story of the infrastructure that powers them. This is a tale of exponential growth, of confronting physical limits, and of the relentless innovation required to build the computational bedrock for the next generation of intelligence.

This article explores the dramatic history of AI training, dissects the critical bottlenecks that threaten to stall progress, and peers into the future of the infrastructure being engineered to overcome these monumental challenges.

The journey to today’s trillion-parameter models was not a straight line but a series of punctuated equilibria, where conceptual breakthroughs were unlocked by new levels of computational power. For decades, the promise of neural networks was constrained by limited data and processing capabilities. That all changed in 2012.

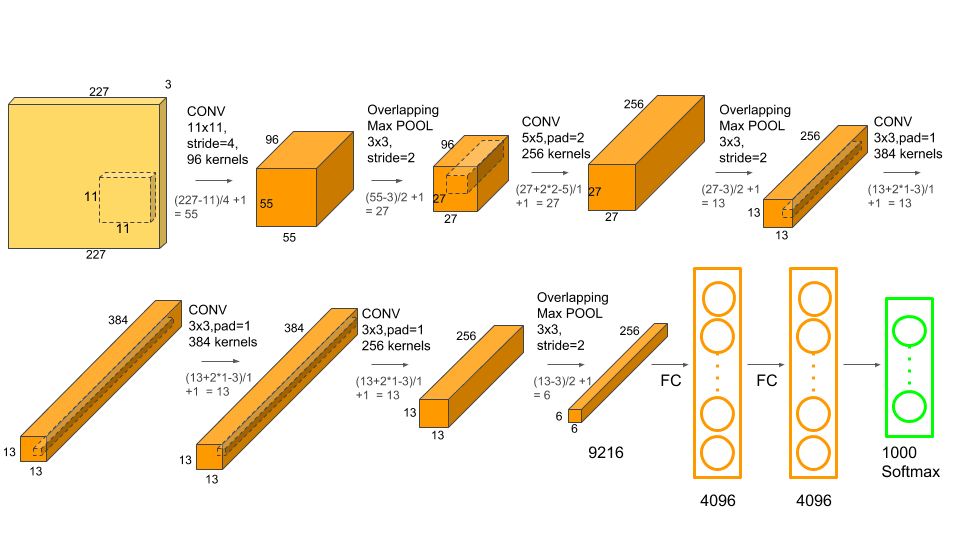

The 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC) is widely considered the “Big Bang” of the modern AI era. A deep convolutional neural network (CNN) named AlexNet, developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, achieved a stunning victory [1]. It didn’t just win; it shattered records with a top-5 error rate of 15.3%, a massive leap from the 26.2% of the next best entry.

AlexNet’s success was not just due to its novel architecture. Critically, it was one of the first models to be trained on Graphics Processing Units (GPUs). By leveraging the parallel processing power of two NVIDIA GTX 580 GPUs, the researchers were able to train a model with 60 million parameters on the 1.2-million-image dataset—a scale previously unimaginable. This moment proved that with enough data and compute, deep learning was not just viable but vastly superior to traditional methods, igniting a Cambrian explosion in AI research.

The AlexNet architecture, which combined deep convolutional layers with GPU processing to achieve its breakthrough performance. Source: Krizhevsky et al., 2012.

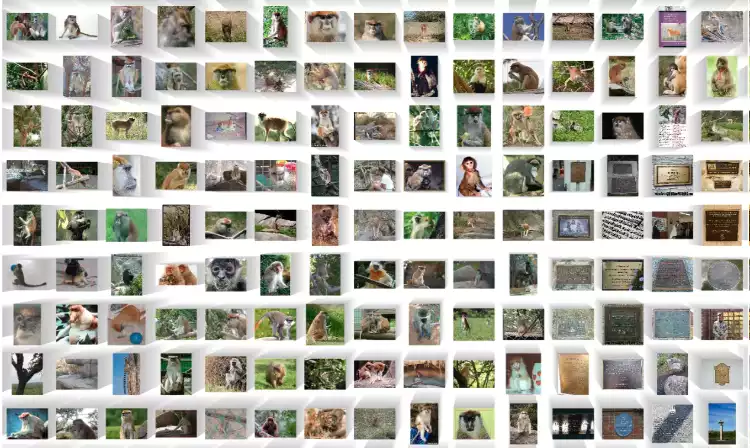

A small sample of the millions of labeled images in the ImageNet dataset that fueled the deep learning revolution. Source: ImageNet

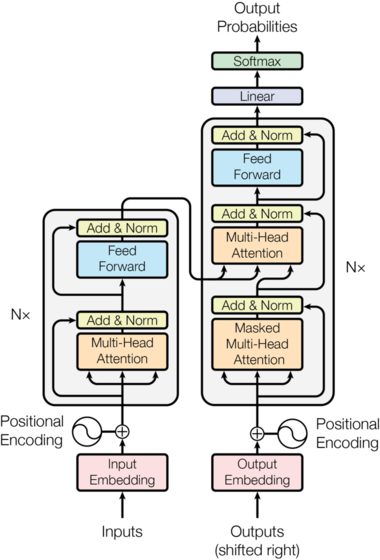

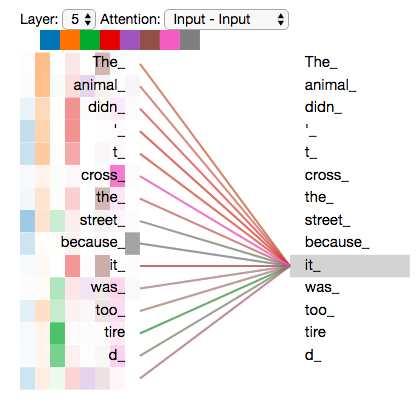

Five years later, another seismic shift occurred. In 2017, a paper from Google titled “Attention Is All You Need” introduced the Transformer architecture [2]. This new design dispensed entirely with the recurrent and convolutional structures that had dominated the field. Instead, it relied on a mechanism called “self-attention,” which allowed the model to weigh the importance of different words in an input sequence simultaneously.

The key innovation was parallelization. Unlike Recurrent Neural Networks (RNNs) that process data sequentially, Transformers could process all parts of the input at once. This architectural change was a perfect match for the massively parallel nature of GPUs, unlocking the ability to train vastly larger and more complex models at an unprecedented speed. The Transformer became the foundational blueprint for nearly all subsequent large language models (LLMs), including the GPT series.
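To make the parallelism concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not the paper's full implementation: the toy embeddings are made up, and the input is used directly as queries, keys, and values, whereas a real Transformer first applies learned projection matrices and uses multiple heads.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this query against every key in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is a weighted average of all value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Toy sequence of three tokens with 2-dimensional embeddings (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(x, x, x)
for row in attn:
    print([round(w, 3) for w in row])
```

No iteration of the loop over `queries` depends on any other iteration, so on a GPU every row can be computed at once as a single matrix multiplication. An RNN, by contrast, needs the hidden state from step t-1 before it can compute step t.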

The original Transformer architecture as introduced in the "Attention Is All You Need" paper. Source: Vaswani et al., 2017.

A visualization of the self-attention mechanism, which allows the model to weigh the importance of different words in a sentence. Source: Jay Alammar

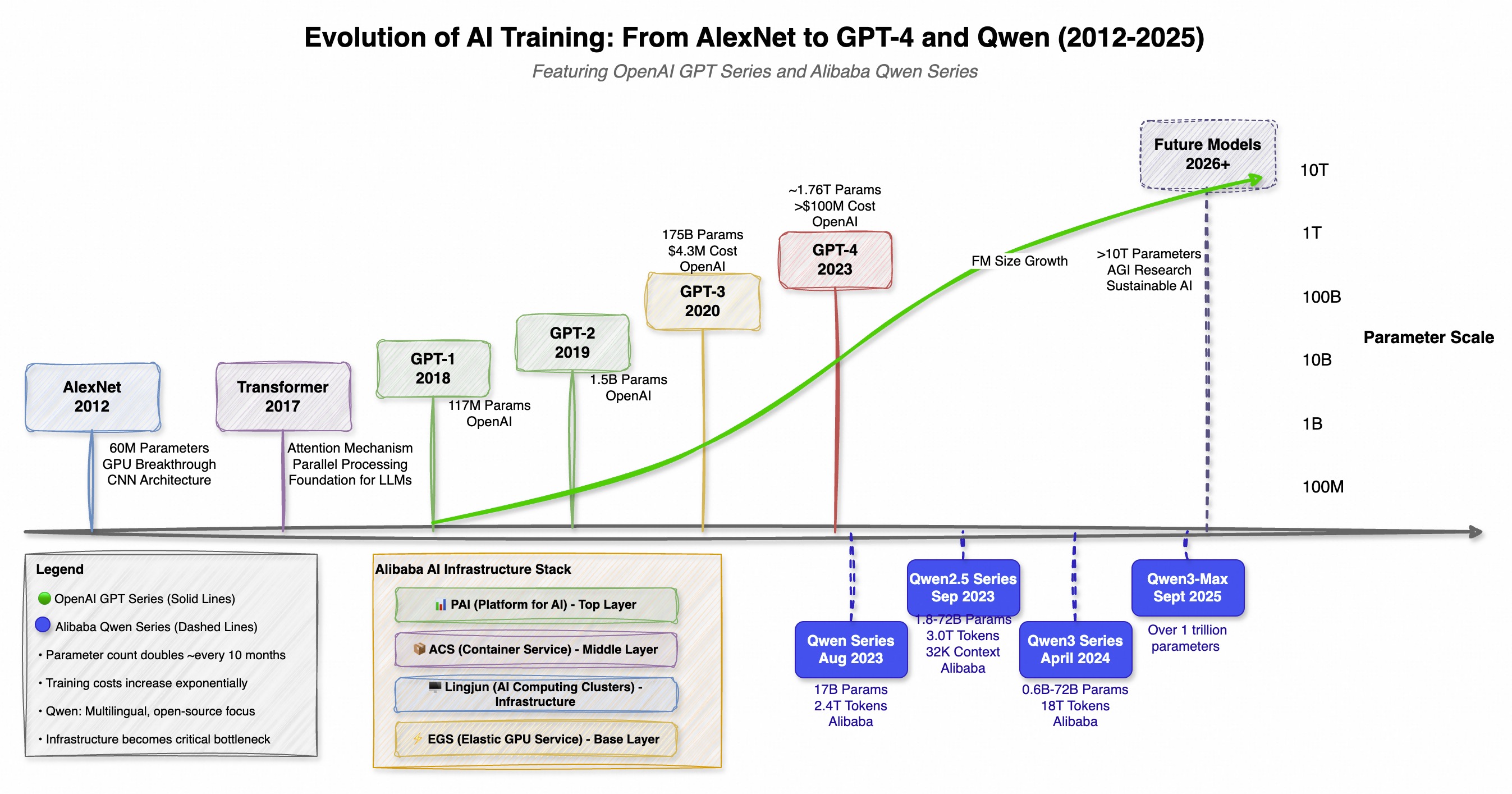

The introduction of the Transformer kicked off a global computational arms race. The size, cost, and complexity of AI models began to grow at an exponential rate. This trend is exemplified not just by one company, but by parallel developments across the globe, most notably with OpenAI’s GPT series in the West and Alibaba’s Qwen (通义千问) series in the East. Both have pushed the boundaries of scale, but with different philosophies—GPT focusing on groundbreaking capabilities and Qwen emphasizing a strong open-source and multilingual approach.

This dual-track evolution highlights a worldwide sprint toward more powerful and efficient AI, with each new model release setting a higher bar for the next.

| Model | Release Date | Parameters | Key Innovation / Focus |
|---|---|---|---|
| GPT-1 | 2018 | 117 Million | Unsupervised Pre-training (OpenAI) |
| GPT-2 | 2019 | 1.5 Billion | Zero-shot Task Performance (OpenAI) |
| GPT-3 | 2020 | 175 Billion | Few-shot, In-context Learning (OpenAI) |
| GPT-4 | Mar 2023 | ~1.76 Trillion (MoE, unofficial estimate) | Advanced Reasoning, Multimodality (OpenAI) |
| Qwen-7B | Aug 2023 | 7 Billion | Strong open-source multilingual base (Alibaba) |
| Qwen-72B | Nov 2023 | 72 Billion | 32K context length, open source (Alibaba) |
| Qwen2.5 | Sep 2024 | Up to 72 Billion | Trained on 18T tokens, enhanced coding/math (Alibaba) |
| Qwen3-Max | Sep 2025 | ~1 Trillion | Qwen's largest and most capable model to date (Alibaba) |

This explosive growth, where compute demand doubles every five to six months, has pushed the development of frontier AI into a domain accessible only to a handful of hyperscale corporations with the capital to build and operate planet-scale supercomputers [5].
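A quick calculation shows what a five-to-six-month doubling time implies when compounded. The sketch below assumes clean exponential growth, which is a simplification of the trend cited above; the function name is illustrative.

```python
def growth_factor(months, doubling_months):
    """Multiplicative growth over `months` given a fixed doubling time."""
    return 2 ** (months / doubling_months)

for doubling in (5, 6):
    per_year = growth_factor(12, doubling)
    per_five_years = growth_factor(60, doubling)
    print(f"doubling every {doubling} months: "
          f"x{per_year:.1f} per year, x{per_five_years:,.0f} over five years")
```

Even at the slower end of the range, a six-month doubling time means compute demand quadruples each year and grows roughly a thousandfold in five years.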

A timeline showing the parallel evolution of OpenAI's GPT series and Alibaba's Qwen series, highlighting the global race in foundation model development.

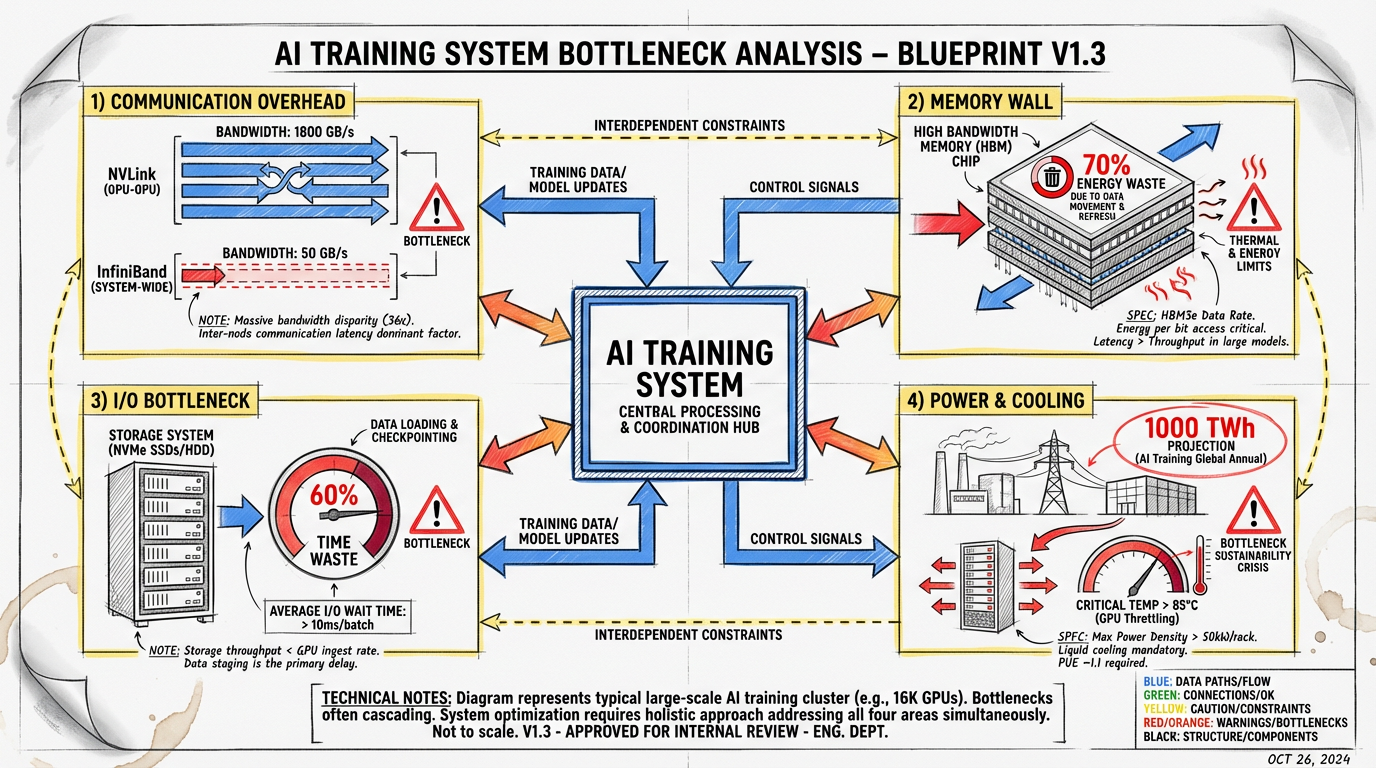

This relentless pursuit of scale has pushed the underlying infrastructure to its breaking point. Training a trillion-parameter model is not simply a matter of adding more GPUs; it is a complex engineering challenge fraught with physical and systemic bottlenecks. The primary goal is to keep every expensive processor fully utilized, but a host of issues stand in the way.

Modern AI factories are vast data centers containing thousands of interconnected GPUs. Source: NVIDIA

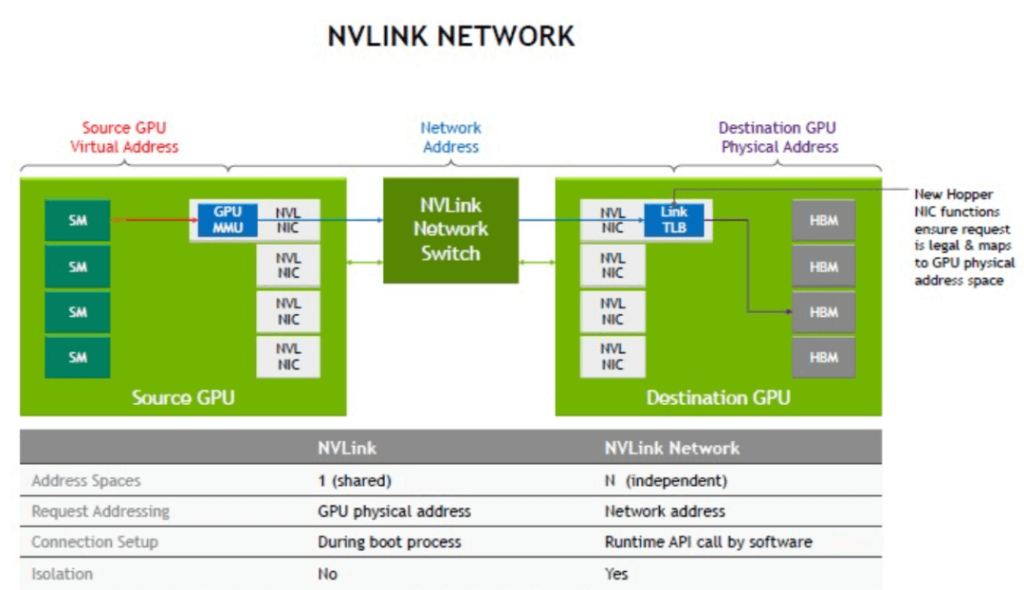

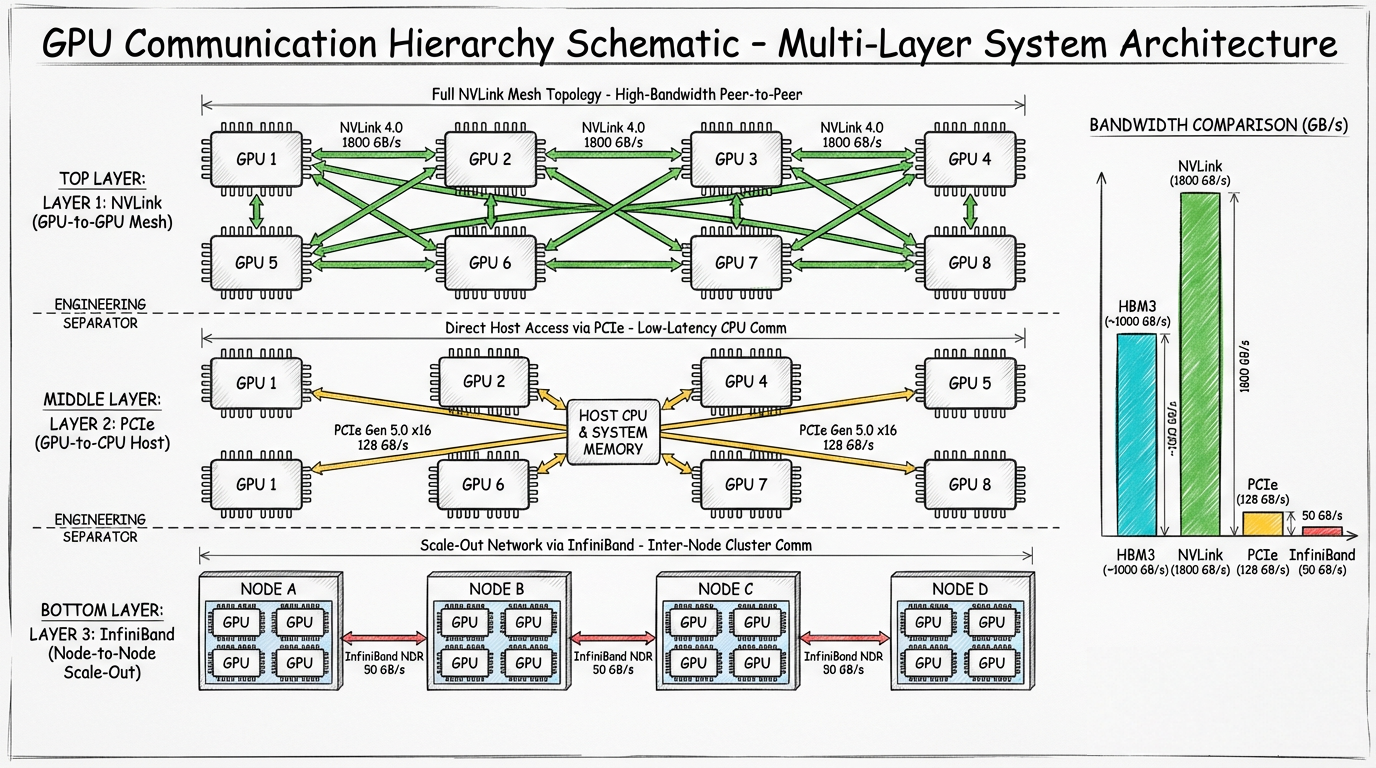

In distributed training, a model is spread across thousands of GPUs, each working on a piece of the puzzle. The most critical and time-consuming step is gradient synchronization, where all GPUs must communicate their results to agree on the next update to the model’s weights. This creates a massive communication bottleneck.
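One common way to perform this synchronization is ring all-reduce. The article does not say which algorithm any particular system uses, so treat the following as an illustrative assumption. The sketch simulates the two phases (reduce-scatter, then all-gather) in plain Python, with each "GPU" holding a gradient vector split into one chunk per worker.

```python
def ring_all_reduce(grads):
    """Simulate ring all-reduce (sum) over n workers.

    grads: list of n gradient vectors, each of length n (one chunk per worker).
    Returns (per-worker results, number of chunks each worker sent).
    """
    n = len(grads)
    data = [list(g) for g in grads]
    assert all(len(g) == n for g in data), "sketch assumes one chunk per worker"
    sends_per_worker = 0

    # Phase 1, reduce-scatter: after n-1 steps, worker i holds the full sum
    # of chunk (i + 1) % n.
    for step in range(n - 1):
        # Collect all messages first so the sends happen "simultaneously".
        msgs = [((i - step) % n, data[i][(i - step) % n]) for i in range(n)]
        for i, (chunk, value) in enumerate(msgs):
            data[(i + 1) % n][chunk] += value
        sends_per_worker += 1

    # Phase 2, all-gather: circulate the fully reduced chunks around the ring.
    for step in range(n - 1):
        msgs = [((i + 1 - step) % n, data[i][(i + 1 - step) % n]) for i in range(n)]
        for i, (chunk, value) in enumerate(msgs):
            data[(i + 1) % n][chunk] = value
        sends_per_worker += 1

    return data, sends_per_worker

# Three hypothetical workers, each with its own local gradients.
local_grads = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
summed, sends = ring_all_reduce(local_grads)
averaged = [[v / len(local_grads) for v in row] for row in summed]
print(averaged[0], "chunks sent per worker:", sends)
```

Each worker sends 2(n-1) chunks, and each chunk is 1/n of the gradient, so every GPU transmits roughly twice the gradient size per step regardless of cluster size. For a model with hundreds of billions of parameters, that is hundreds of gigabytes of traffic per GPU per update, which is why interconnect bandwidth dominates.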

Diagram of NVIDIA's NVLink technology, which provides high-speed, direct communication between GPUs within a server. Source: NVIDIA

A schematic showing the communication bottleneck in a distributed training setup, where GPUs communicate with each other and with the network fabric.

Modern AI models are voracious consumers of memory. The parameters, optimizer states, and intermediate activations for a trillion-parameter model can require terabytes of storage, far exceeding the memory available on a single GPU (80 GB on many widely deployed data center GPUs, and up to roughly 192 GB on the newest parts). This leads to the “memory wall” problem.
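A back-of-the-envelope estimate makes the gap concrete. The sketch below assumes mixed-precision training with the Adam optimizer, which needs about 16 bytes per parameter (2 for fp16 weights, 2 for fp16 gradients, and 12 for fp32 master weights, momentum, and variance). That accounting is an assumption borrowed from the ZeRO line of work, and it ignores activations entirely.

```python
def training_state_bytes(num_params, bytes_per_param=16):
    """Bytes for weights, gradients, and optimizer state (activations excluded)."""
    return num_params * bytes_per_param

def min_gpus_to_hold(state_bytes, gpu_bytes):
    """Smallest number of GPUs whose combined memory fits the state (ceiling division)."""
    return -(-state_bytes // gpu_bytes)

params = 10**12                      # a trillion-parameter model
state = training_state_bytes(params)
gpus = min_gpus_to_hold(state, 80 * 10**9)   # assuming 80 GB per GPU

print(f"model state: {state / 10**12:.0f} TB")
print(f"80 GB GPUs needed just to hold it: {gpus}")
```

Under these assumptions the model state alone is about 16 TB, so some 200 GPUs are needed before a single activation is stored or a single batch is processed.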

Data must be constantly shuffled between the GPU’s high-bandwidth memory (HBM) and the slower system RAM or even network storage. This data movement is not only slow but also incredibly energy-intensive. According to NVIDIA, up to 70% of the energy in a typical GPU workload is consumed just moving data around [8].

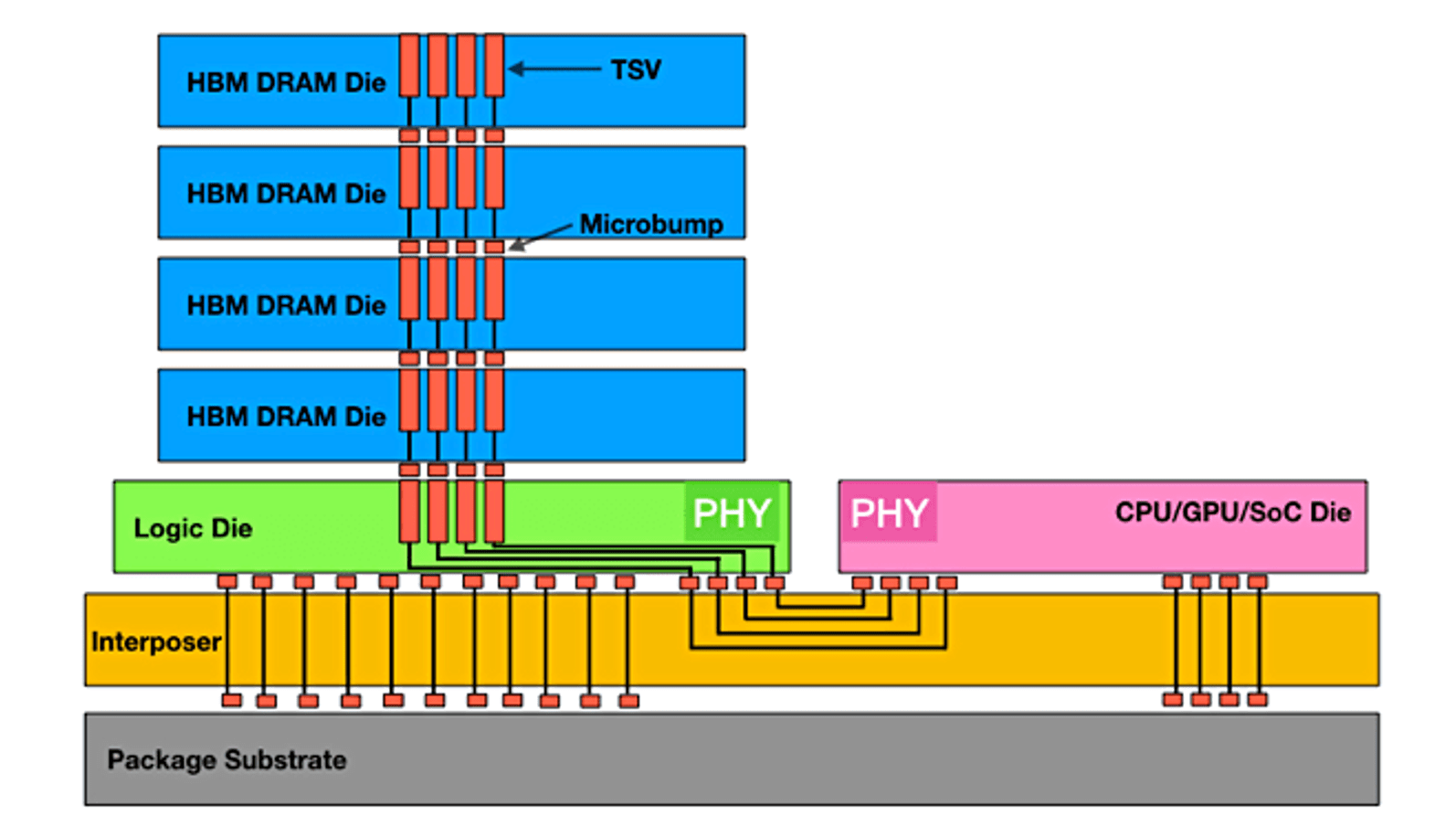

High-Bandwidth Memory (HBM) stacks memory dies vertically to provide the massive bandwidth required by modern GPUs, but it is still a finite and expensive resource. Source: Semiconductor Engineering

Before a GPU can even begin its calculations, it needs data. In many large-scale training scenarios, the process of loading training data from storage to the GPUs can become a major bottleneck. High-performance parallel file systems are required to deliver data at terabytes per second to keep tens of thousands of GPUs fed. If the data pipeline is not perfectly optimized, GPUs can sit idle, wasting millions of dollars in computational potential. In some cases, data loading can consume over 60% of the total training time, leaving the GPUs doing useful work for less than half of the run and more than halving the efficiency of the entire cluster [9].
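The effect of a slow data pipeline can be approximated with a simple step-time model. This is a sketch with hypothetical timings: it assumes each training step consists of one load phase and one compute phase, and that prefetching (loading the next batch while the current one is being processed) overlaps the two perfectly.

```python
def gpu_utilization(load_s, compute_s, overlapped):
    """Fraction of each step the GPU spends computing.

    Without overlap the GPU waits for loading, so step time is load + compute.
    With prefetching the phases run concurrently, so step time is the slower one.
    """
    step_s = max(load_s, compute_s) if overlapped else load_s + compute_s
    return compute_s / step_s

# Hypothetical step where loading takes 60% of the serial step time.
print(gpu_utilization(0.6, 0.4, overlapped=False))  # serial pipeline
print(gpu_utilization(0.6, 0.4, overlapped=True))   # prefetching, still load-bound
print(gpu_utilization(0.3, 0.4, overlapped=True))   # faster storage, compute-bound
```

In this model prefetching alone raises utilization from 40% to about 67%, but the GPU only becomes fully busy once loading is faster than compute, which is where high-throughput storage comes in.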

The sheer energy required to power these AI factories is staggering. A single large-scale training run can consume as much electricity as a small city. The International Energy Agency estimates that data center electricity use could double to 1,000 terawatt-hours by 2026, largely driven by AI [10]. This immense power consumption generates an enormous amount of heat, creating a parallel challenge in cooling. Traditional air cooling is becoming insufficient, pushing the industry toward more exotic solutions like liquid and immersion cooling to manage the extreme thermal densities of modern AI hardware.

Dense racks of GPU servers generate immense heat, making cooling a critical infrastructure challenge. Source: AMAX

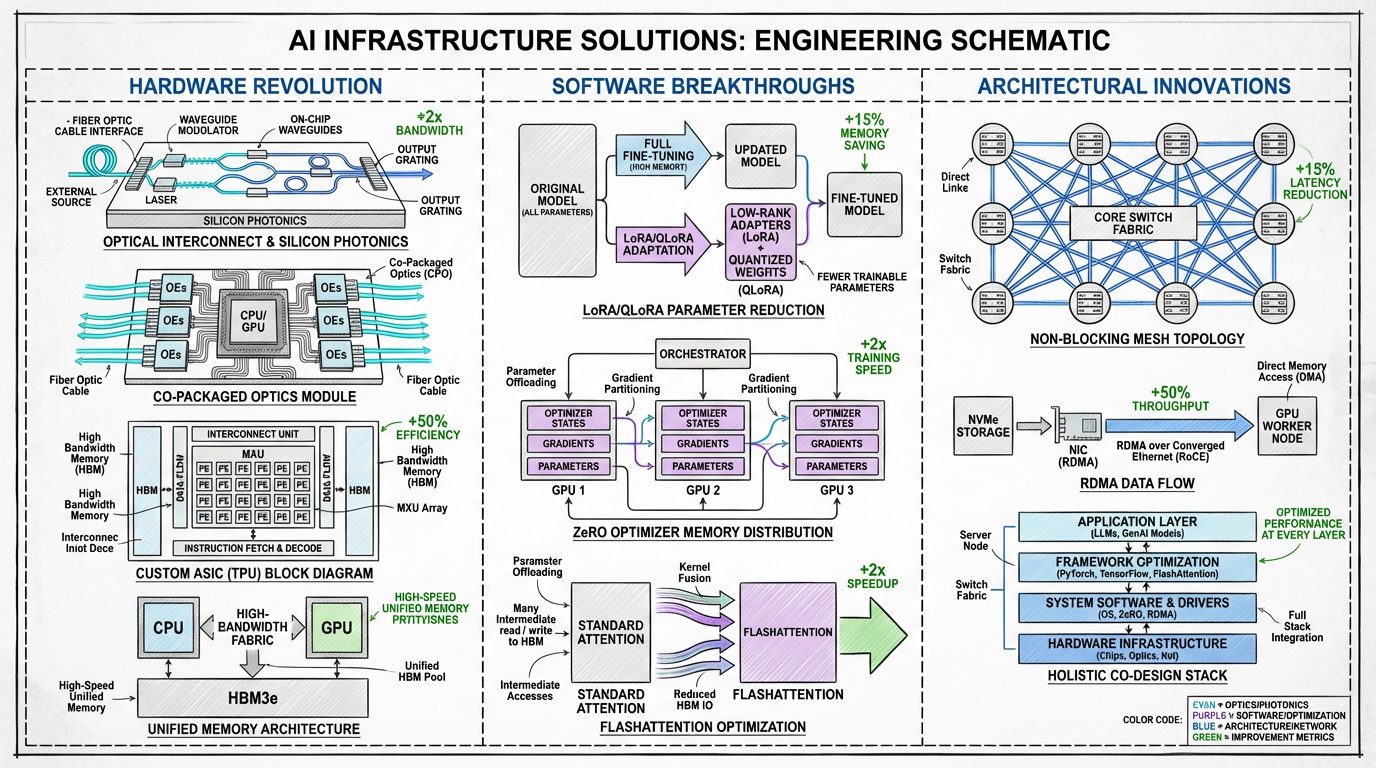

The industry is tackling these bottlenecks with a multi-pronged approach, innovating across hardware, software, and system architecture. The future of AI infrastructure is not just about more powerful chips, but about a holistic, co-designed system where every component is optimized for efficiency and scale.

A schematic of the proposed solutions to overcome the infrastructure bottlenecks.

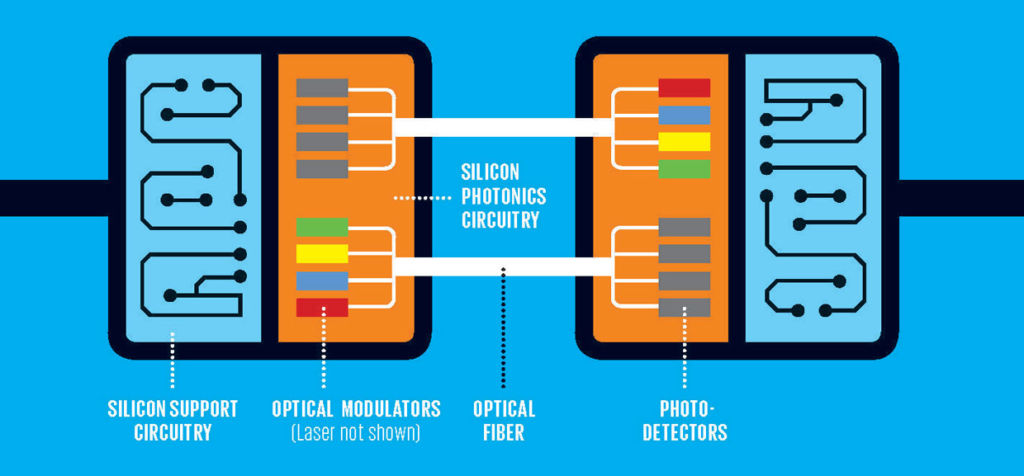

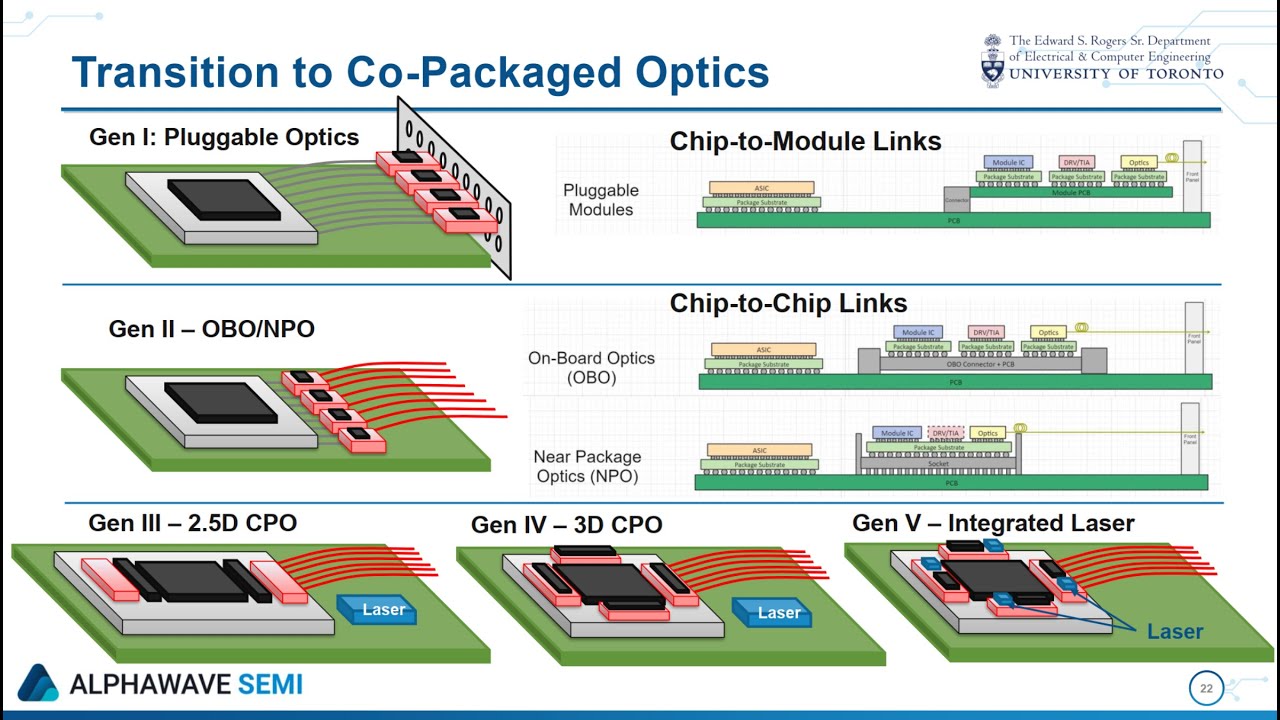

Optical Interconnects: The most profound shift on the horizon is the move from electrical (copper) to optical (light-based) interconnects. Silicon photonics promises to transmit data at terabits per second with significantly lower latency and power consumption. Co-packaged optics, which integrate optical I/O directly onto the processor package, will eliminate the need for slow, power-hungry electrical connections, directly addressing the communication and energy bottlenecks [11].

Silicon photonics uses light to transmit data, offering a path to dramatically higher bandwidth and lower power consumption compared to traditional copper wiring. Source: FindLight

Co-packaged optics (CPO) integrates optical transceivers directly with the processor chip, minimizing data travel distance and maximizing efficiency. Source: Anritsu

Next-Generation Accelerators: While GPUs remain dominant, the landscape is diversifying. Custom ASICs (Application-Specific Integrated Circuits) like Google’s TPUs and Meta’s MTIA are designed for specific AI workloads, offering superior performance and efficiency for their target tasks. This trend toward domain-specific accelerators will allow for more optimized infrastructure beyond the one-size-fits-all GPU.

Unified Memory Architectures: To break down the memory wall, companies are developing tightly integrated chipsets. NVIDIA’s Grace Hopper Superchip, for example, combines a CPU and GPU on a single module with a high-speed, coherent interconnect. This allows both processors to share a single pool of memory, drastically reducing the costly data movement between CPU and GPU memory domains.

Parameter-Efficient Fine-Tuning (PEFT): Not every task requires retraining a multi-billion-parameter model from scratch. Techniques like LoRA (Low-Rank Adaptation) and QLoRA allow for efficient fine-tuning by only updating a tiny fraction of the model’s parameters [12]. This dramatically reduces the computational and memory requirements, making model adaptation accessible to a much broader range of users and organizations.
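The savings follow from simple arithmetic. LoRA freezes a weight matrix W of shape d_out × d_in and learns a low-rank update B·A, where B is d_out × r and A is r × d_in. The sketch below counts trainable parameters for a single layer; the layer size and rank are illustrative choices, not figures from the article.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (LoRA trainable params, full fine-tuning params, ratio) for one layer."""
    full = d_out * d_in                 # every entry of W is trainable
    lora = rank * (d_out + d_in)        # entries of B (d_out x r) plus A (r x d_in)
    return lora, full, lora / full

lora, full, ratio = lora_param_counts(d_out=4096, d_in=4096, rank=8)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank 8):    {lora:,} trainable parameters ({ratio:.2%} of full)")
```

For this hypothetical 4096 × 4096 layer, rank 8 leaves 65,536 trainable parameters instead of about 16.8 million, under 0.4% of the original. Gradients and optimizer state shrink proportionally, since they are only kept for the trainable parameters.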

Advanced Optimizers and Algorithms: Software innovations are playing a crucial role in improving efficiency. Microsoft’s ZeRO (Zero Redundancy Optimizer) partitions the model’s state across the available GPUs, allowing for the training of massive models with significantly less memory per device [13]. Algorithms like FlashAttention re-engineer the attention mechanism to reduce memory reads/writes, leading to significant speedups and reduced memory footprint.
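The memory effect of ZeRO's partitioning can be sketched with the same 16-bytes-per-parameter accounting used for mixed-precision Adam (2 bytes of fp16 weights, 2 of fp16 gradients, 12 of fp32 optimizer state). The stage numbering below follows the ZeRO paper; the function itself is an illustrative estimate that ignores activations and communication buffers.

```python
def zero_bytes_per_gpu(num_params, num_gpus, stage):
    """Approximate model-state bytes per GPU under ZeRO stages 0 to 3.

    Stage 0: plain data parallelism, everything replicated.
    Stage 1: optimizer state partitioned across GPUs.
    Stage 2: gradients partitioned as well.
    Stage 3: weights partitioned as well.
    """
    weights = 2.0 * num_params
    grads = 2.0 * num_params
    optimizer = 12.0 * num_params
    if stage >= 1:
        optimizer /= num_gpus
    if stage >= 2:
        grads /= num_gpus
    if stage >= 3:
        weights /= num_gpus
    return weights + grads + optimizer

# Example configuration: a 7.5B-parameter model on 64 GPUs.
for stage in range(4):
    gb = zero_bytes_per_gpu(7.5e9, 64, stage) / 1e9
    print(f"stage {stage}: {gb:.1f} GB per GPU")
```

With everything replicated, this example needs 120 GB per GPU, more than an 80 GB device can hold. Partitioning all three components brings it under 2 GB per GPU, at the cost of extra communication to fetch weights on demand.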

Purpose-built AI supercomputers are integrating these hardware and software solutions into a cohesive whole. A key trend in this area is the development of supernode architectures, which aim to create larger, more powerful, and more efficient units of computation. These architectures rethink the traditional server rack to optimize for AI-specific workloads.

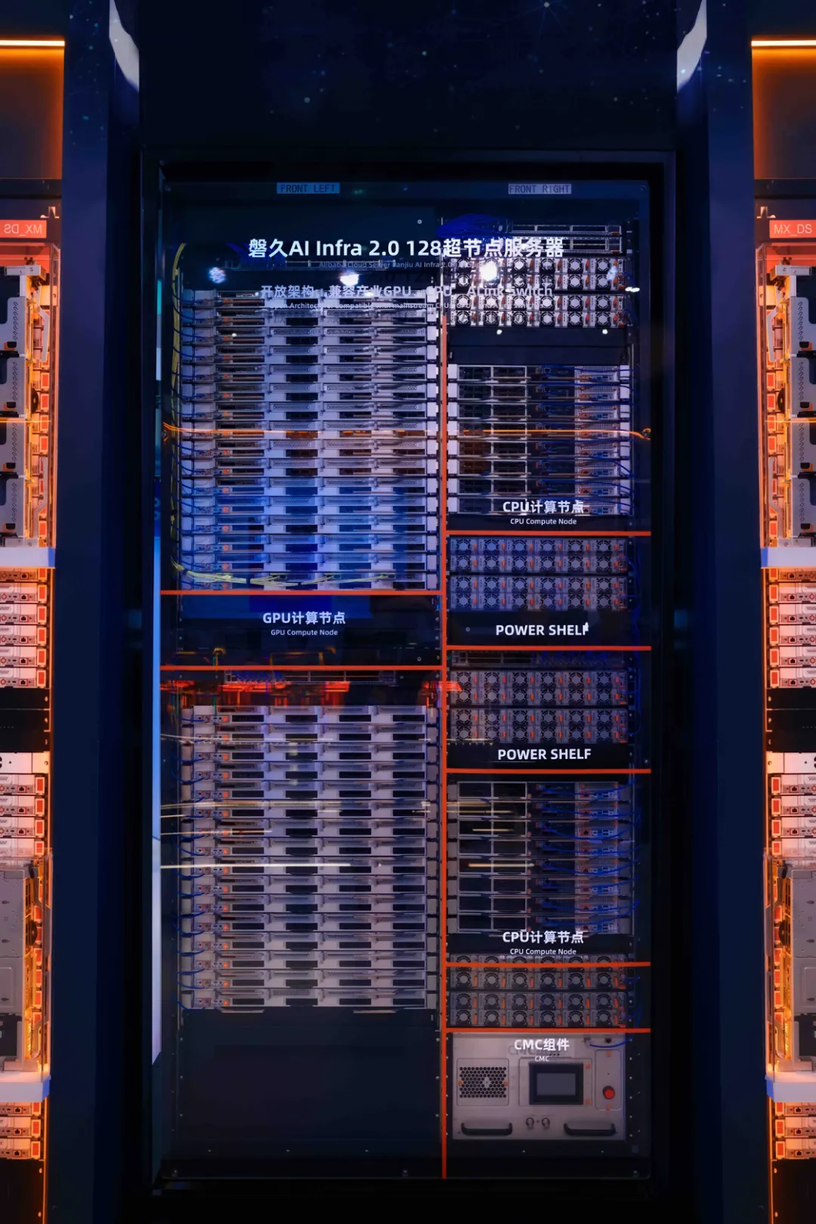

One prominent example is Alibaba Cloud’s Lingjun platform, which utilizes the Panjiu AL128 supernode design. This architecture represents a shift toward a more modular and decoupled system. Its key features include an orthogonal interconnect design and decoupled modules for CPU, GPU, and power.

The Panjiu AL128 supernode architecture, featuring an orthogonal interconnect design and decoupled modules for CPU, GPU, and power. Source: Alibaba Cloud

While such tightly integrated, high-density systems offer significant performance gains (Alibaba claims a 50% improvement in inference performance for the same computing power), they also present challenges. The pros include higher efficiency, lower communication latency, and greater scalability within the supernode. However, the cons involve increased complexity, reliance on custom hardware and interconnects (like UALink), and the need for sophisticated liquid cooling and power infrastructure, which can increase capital and operational costs.

These architectural innovations, combined with other techniques, are creating a new blueprint for AI factories.

For a deeper technical dive into the Panjiu AL128 architecture, see the detailed analysis [14] and video overview [15] from Alibaba Cloud.

The first era of the AI revolution was defined by brute-force scaling—bigger models, more data, and more compute. While scale will always be important, the next era will be defined by efficiency and finesse. The future of AI is not just about building larger models, but about building smarter, more sustainable, and more accessible infrastructure to train and run them.

The journey from AlexNet’s first use of GPUs to the optical, co-designed AI factories of tomorrow is a testament to the relentless pace of innovation. Overcoming the bottlenecks of communication, memory, and power is the grand challenge of our time, and the solutions being developed today will lay the foundation for the next wave of artificial intelligence.

[1] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems 25.

[2] Vaswani, A., et al. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems 30.

[3] Visual Capitalist. (2023). Charted: The Skyrocketing Cost of Training AI Models Over Time.

[4] Forbes. (2024). The Extreme Cost of Training AI Models.

[5] R&D World. (2024). AI’s great compression: 20 charts show vanishing gaps but still soaring costs.

[6] NVIDIA. (2024). NVIDIA GB200 NVL72.

[7] Zhai, E., et al. (2024). HPN: A Non-blocking, Dual-plane, Application-layer-agnostic High-performance Network for Large-scale AI Training. SIGCOMM 2024.

[8] Szasz, D. (2024). Understanding Bottlenecks in Multi-GPU AI Training. Medium.

[9] Alibaba Cloud. (2024). PAI-Lingjun Intelligent Computing Service Features.

[10] Forbes. (2025). Why Optical Infrastructure Is Becoming Core To The Future Of AI.

[11] Yole Group. (2023). Co-Packaged Optics for Datacenters 2023.

[12] Dettmers, T., et al. (2023). QLoRA: Efficient Finetuning of Quantized LLMs. Advances in Neural Information Processing Systems 36.

[13] Microsoft Research. (2020). ZeRO & DeepSpeed: New system optimizations enable training models with over 100 billion parameters.

[14] Alibaba Cloud. (2025). In-depth Analysis of Alibaba Cloud Panjiu AL128 Supernode AI Servers and Their Interconnect Architecture. Alibaba Cloud Blog.

[15] Alibaba Cloud. (2025). Panjiu AL128 Supernode AI Server Video Overview. YouTube.
