Datasets are the lifeblood of artificial intelligence, especially in training large language models (LLMs) that power everything from chatbots to content generators. These datasets form the foundation upon which AI models learn and develop their capabilities. However, as the demand for more advanced AI systems grows, so does the need for high-quality, diverse, and extensive datasets. This article delves into the history of dataset usage, the types of data required at various stages of LLM training, and the challenges faced in sourcing and utilizing these datasets.

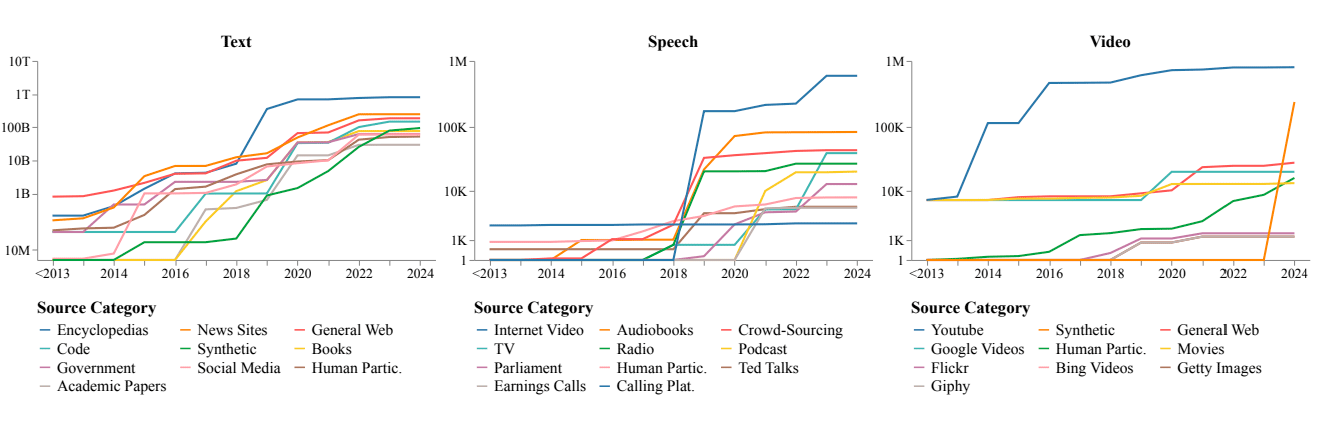

In the early days of AI research, datasets were meticulously curated from various sources, such as encyclopedias, parliamentary transcripts, phone call recordings, and weather forecasts. Each dataset was tailored to address specific tasks, ensuring relevance and quality. However, with the advent of transformers in 2017—a neural network architecture pivotal to modern language models—the focus shifted toward sheer volume, marking a significant change in the AI research approach. Researchers realized that the performance of LLMs improved significantly with larger models and datasets, leading to indiscriminate data scraping from the internet.

By 2018, the internet had become the dominant source for all data types, including audio, images, and video. This trend has continued, resulting in a significant gap between internet-sourced data and manually curated datasets. The demand for scale also led to the widespread use of synthetic data—data generated by algorithms rather than collected from real-world interactions.

Pre-training is the initial phase, where the model is exposed to vast amounts of text data to learn general language patterns and structures. During this stage, the model requires:

However, ethical considerations arise when using copyrighted materials without permission.

Continuous pre-training involves updating the model with new data to keep it current and improve its knowledge base. This phase requires:

Fine-tuning adapts the pre-trained model to specific tasks or domains. It typically uses smaller, more targeted, carefully labeled, and curated datasets. For example:

Below are examples of datasets and methods used in this process.

By combining curated datasets with advanced methods like parameter-efficient fine-tuning (PEFT), researchers and developers can optimize LLMs for niche applications while addressing resource constraints and scalability challenges.
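The article names PEFT without showing a specific method, so the following is only an illustrative sketch of one widely used PEFT technique, LoRA (low-rank adaptation). It assumes a single frozen weight matrix `W` and two small trainable adapter matrices `A` and `B`. All function names are hypothetical, and plain Python lists stand in for tensors.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def init_adapter(d_out, d_in, rank, seed=0):
    """Create hypothetical LoRA adapter matrices.

    A (rank x d_in) gets small random values; B (d_out x rank) starts at
    zero, so the adapted layer initially behaves exactly like the frozen one.
    """
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
    B = [[0.0] * rank for _ in range(d_out)]
    return A, B


def lora_forward(W, A, B, x, alpha=1.0):
    """Compute y = W x + (alpha / rank) * B (A x).

    W stays frozen during fine-tuning; only A and B would be trained.
    """
    rank = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def param_counts(d_out, d_in, rank):
    """Return (full fine-tuning parameters, adapter parameters) for one layer."""
    return d_out * d_in, rank * (d_in + d_out)
```

Under these assumptions, a 4096 x 4096 layer with rank 8 has 16,777,216 frozen weights but only 65,536 trainable adapter weights (about 0.4%), which is why this family of methods suits small, targeted datasets and limited hardware.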

Reinforcement learning from human feedback (RLHF) involves training the model to align better with human preferences. This stage needs:

Below are examples of datasets and methods central to RLHF:

Preference Datasets: RLHF begins with collecting human-labeled preference data, where humans rank or rate model outputs. For instance, OpenAI's early RLHF experiments used datasets where annotators compared multiple model-generated responses to the same prompt, labeling which ones were more helpful, truthful, or aligned with ethical guidelines. These datasets often include nuanced examples, such as distinguishing between factual and biased answers in sensitive topics like politics or healthcare.

By combining preference datasets, reward modeling, and iterative human feedback, RLHF ensures LLMs evolve from generic text generators to systems prioritizing safety, relevance, and human alignment.
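To make the link between preference datasets and reward modeling concrete, here is a minimal sketch of a pairwise (Bradley-Terry style) loss, a common formulation for training reward models. It is not necessarily the exact objective used in the experiments mentioned above. The `PreferencePair` record and the function names are hypothetical, and the reward scores are assumed to come from some reward model that is not shown.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """One human-labeled comparison: two responses to the same prompt."""
    prompt: str
    chosen: str    # response the annotator preferred
    rejected: str  # response the annotator ranked lower


def pairwise_loss(reward_chosen, reward_rejected):
    """Return -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the preferred response
    higher, and large when it ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable evaluation of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def mean_pairwise_loss(scored_pairs):
    """Average the loss over (reward_chosen, reward_rejected) tuples."""
    losses = [pairwise_loss(c, r) for c, r in scored_pairs]
    return sum(losses) / len(losses)
```

Minimizing this loss over many labeled pairs pushes the reward model to agree with annotators. The trained reward model then supplies the training signal for the reinforcement learning step.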

One of the most pressing issues today is the exhaustion of readily available text data. Major tech players have reportedly indexed almost all accessible text from the open and dark web, including pirated books, movie subtitles, personal messages, and social media posts. With fewer new sources to tap into, the industry faces a bottleneck in further advancements.

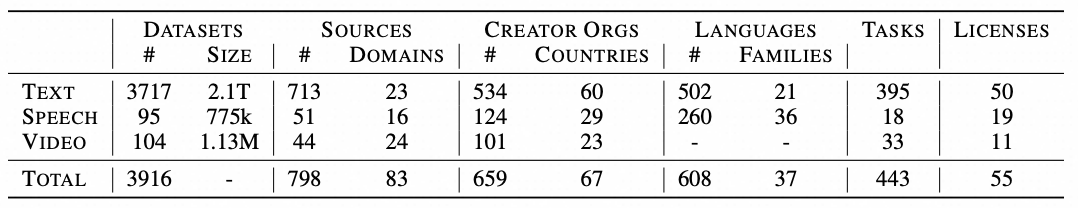

Cumulative amount of data (in logarithmic scale for text, in hours for speech/video) from each source category, across all modalities. Source categories in the legend are ordered in descending order of quantity.

Most datasets originate from Europe and North America, reflecting a Western-centric worldview. Less than 4% of analyzed datasets come from Africa, highlighting a significant cultural imbalance. This bias can lead to skewed perceptions and reinforce stereotypes, particularly in multimodal models that generate images and videos.

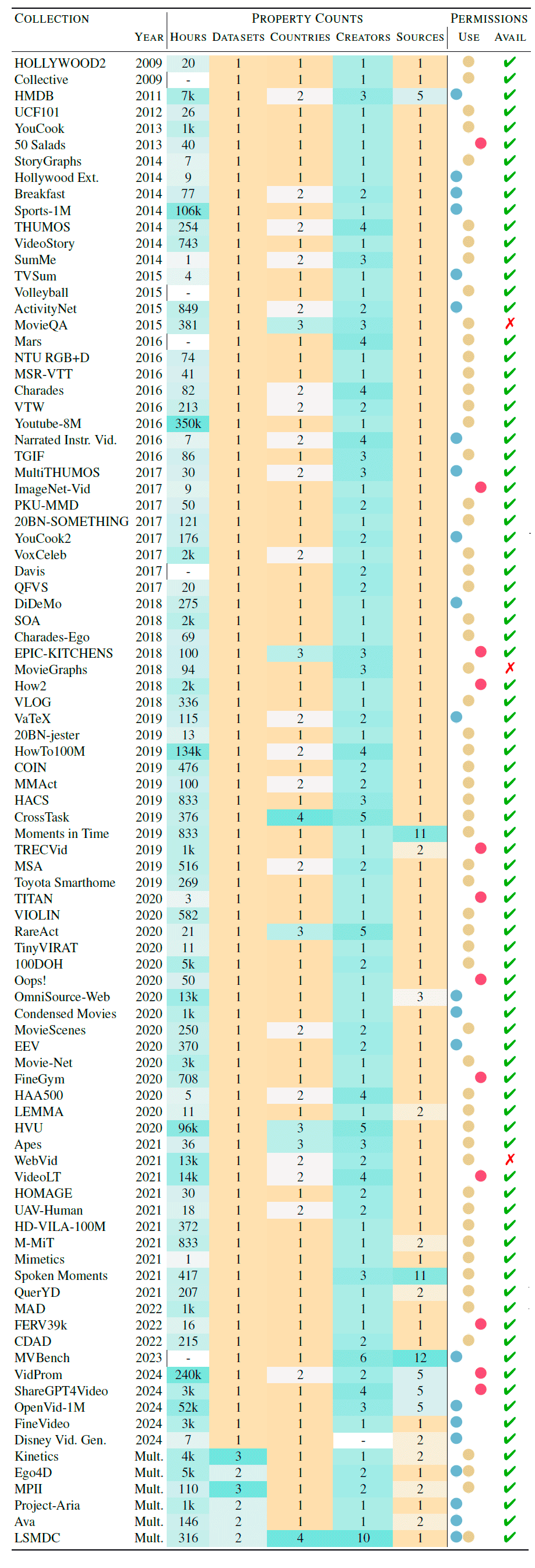

Large corporations dominate the acquisition and control of influential datasets. Platforms like YouTube provide over 70% of video data used in AI training, concentrating immense power in the hands of a few entities. This centralization hinders innovation and creates barriers for smaller players who lack access to these resources.

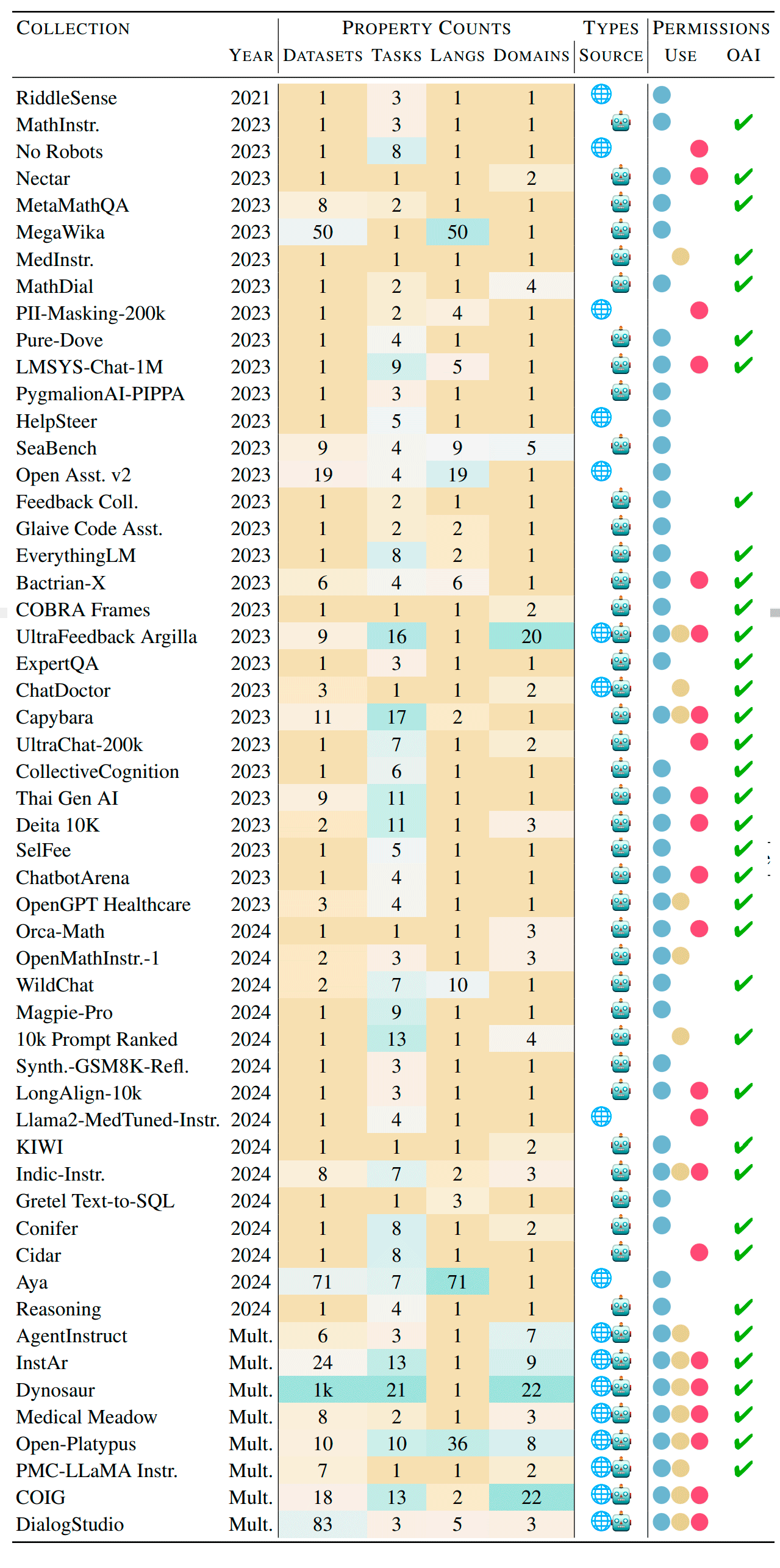

The following table lists the text collections and their properties, including the number of datasets, tasks, languages, and text domains. The Source column indicates the content of each collection: human-generated text from the web, language model output, or both. The final column indicates the collection's licensing status: blue for commercial use, red for non-commercial and academic research, and yellow for unclear licensing. In addition, the OAI column marks collections that include generations from OpenAI models. The datasets are sorted chronologically to emphasise trends over time.

Collection of the text data:

Collection of the video data:

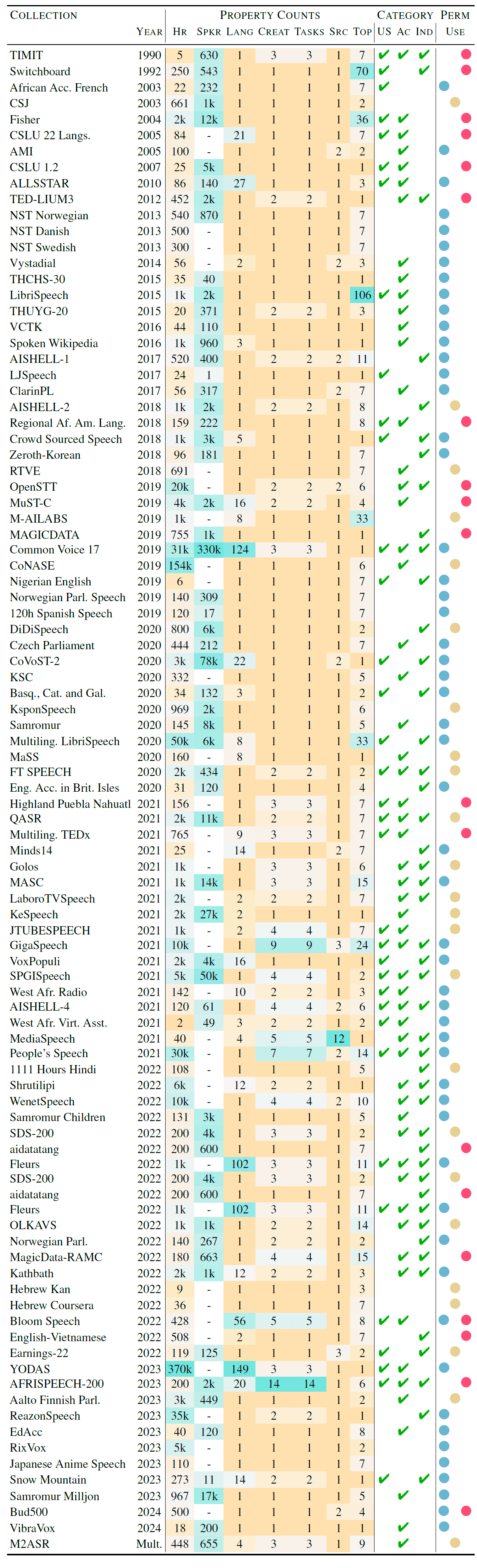

Collection of the audio data:

Despite the apparent depletion of easily accessible data, numerous untapped sources remain:

Advanced LLMs can help structure and utilize these latent datasets for future training.

Federated learning allows models to be trained on sensitive data without transferring it outside secure environments. This method is ideal for industries dealing with confidential information, such as healthcare, finance, and telecommunications. By keeping data localized, federated learning ensures privacy while enabling collaborative model improvement.
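The idea of keeping data localized can be sketched with federated averaging (FedAvg), one common federated learning scheme. The example below is a toy under stated assumptions: a linear model, squared-error loss, and made-up client datasets. All function names are hypothetical. Only model weights leave each client, never the raw records.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's private data.

    `data` is a list of (features, target) pairs that never leaves the client.
    """
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * error * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def federated_average(client_weights, client_sizes):
    """Average client weights, weighting each client by its sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def train_federated(clients, dim, rounds=20):
    """Alternate local training and server-side averaging for several rounds."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        updates = [local_update(global_w, data) for data in clients]
        sizes = [len(data) for data in clients]
        global_w = federated_average(updates, sizes)
    return global_w


# Two hypothetical clients whose private data both follow y = 2 * x.
clients = [
    [([1.0], 2.0), ([2.0], 4.0)],
    [([0.5], 1.0), ([1.5], 3.0), ([1.0], 2.0)],
]
learned = train_federated(clients, dim=1)
```

In a real deployment the updates would typically also be protected, for example with secure aggregation or differential privacy, since model weights can leak information about the underlying data.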

Synthetic data generation and data augmentation present promising avenues for expanding training datasets:
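As one concrete illustration of data augmentation, the sketch below creates noisy variants of a sentence through random word deletion and adjacent-word swaps. This is a deliberately simple technique chosen for illustration, and the function name and default parameters are assumptions, not a recommendation from the article.

```python
import random


def augment(sentence, rng, p_delete=0.1, n_swaps=1):
    """Return a perturbed copy of `sentence`.

    Each word is dropped with probability `p_delete`, then `n_swaps`
    randomly chosen adjacent word pairs are swapped.
    """
    words = sentence.split()
    if not words:
        return sentence
    kept = [w for w in words if rng.random() >= p_delete]
    if not kept:
        # Never return an empty example: keep one random word.
        kept = [rng.choice(words)]
    for _ in range(n_swaps):
        if len(kept) < 2:
            break
        i = rng.randrange(len(kept) - 1)
        kept[i], kept[i + 1] = kept[i + 1], kept[i]
    return " ".join(kept)


# A seeded generator makes the augmented dataset reproducible.
rng = random.Random(42)
variants = [augment("federated learning keeps sensitive data local", rng)
            for _ in range(3)]
```

Perturbations like these are cheap but can change meaning, so augmented examples are usually mixed with, rather than substituted for, the original data.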

As the field of AI continues to evolve, the role of datasets remains paramount. While the exhaustion of readily available data poses a challenge, it's crucial that we, as AI researchers and enthusiasts, are aware of and take responsibility for addressing issues of cultural asymmetry and centralization. Innovative solutions like leveraging untapped sources, federated learning, and synthetic data generation offer pathways forward. By combining these strategies, we can ensure equitable and diverse AI development, paving the way for more sophisticated and inclusive artificial intelligence systems.
