By Hongwei

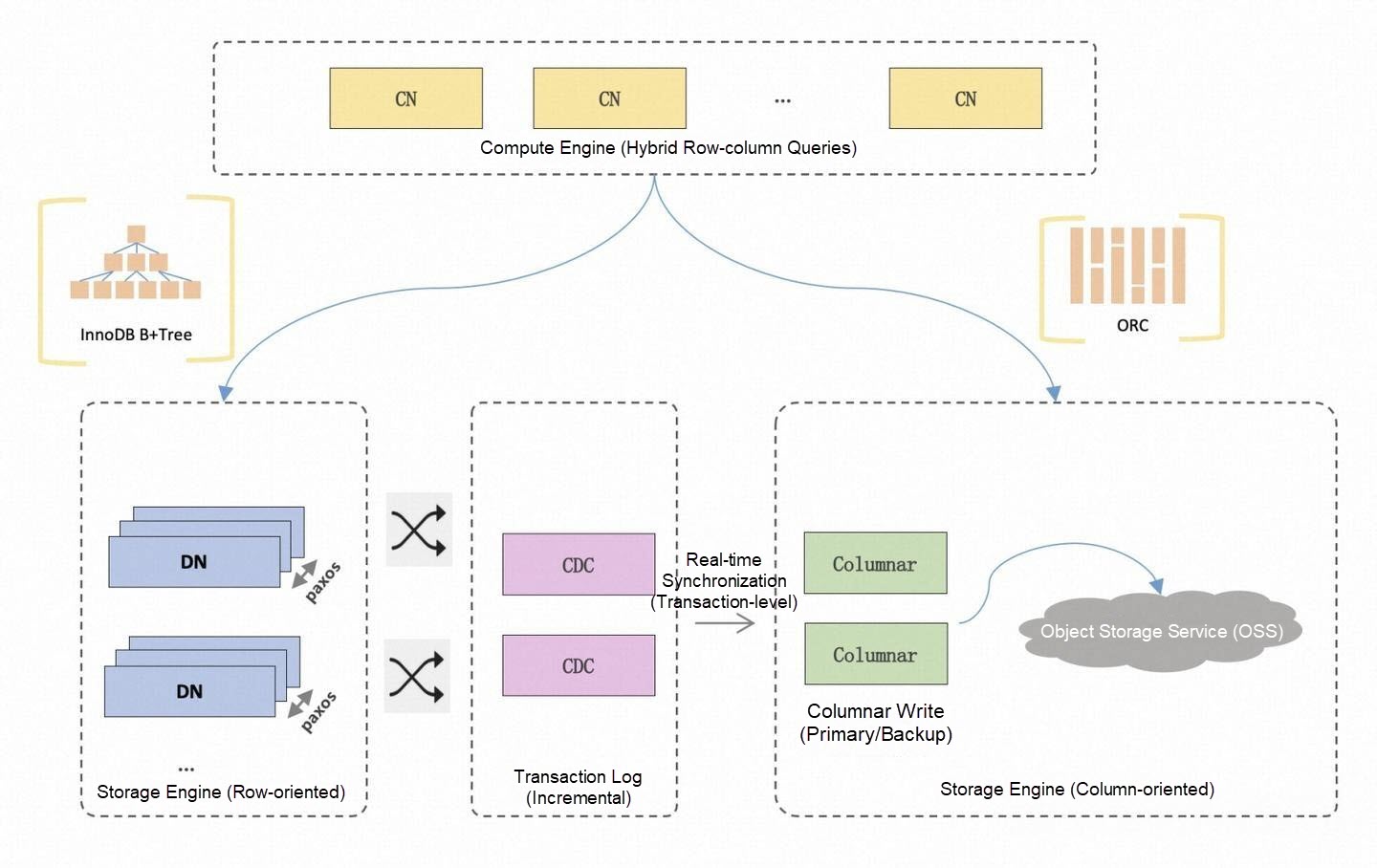

PolarDB for Xscale (PolarDB-X), as a cloud-native distributed database, provides Hybrid Transactional and Analytical Processing (HTAP), compute-storage separation, global secondary indexes, and other key features. For HTAP workloads, PolarDB-X implements a vectorized execution engine and supports query and caching for column-oriented storage. Previous TPC-H reports have already demonstrated that its query performance ranks among the industry's best. (Reference: PolarDB-X TPC-H 100G White Paper).

ClickBench, developed by ClickHouse, Inc., is a test dataset designed to evaluate Online Analytical Processing (OLAP) systems' performance in large wide table scenarios. The dataset consists of a single table of 70 GB with 105 columns and approximately 99,997,497 (approximately 100 million) rows, and includes 43 SQL queries. Since ClickBench queries cover a wide variety of large wide table scenarios, many OLAP vendors around the globe have competed on this benchmark in recent years.

This article presents PolarDB-X's exploration and optimization practices on the ClickBench dataset. At the optimizer level, new rules were added to rewrite SQL queries for more efficient execution plans. At the executor level, the DISTINCT operator was optimized using HashSet, and an adaptive two-phase aggregation was implemented to automatically determine whether pre-aggregation is beneficial based on the workload. Because ClickBench places high demands on low-level implementation details, additional optimizations were applied to the NoGroupBy aggregate functions and the Group By Long, Long scenarios.

After these optimizations, PolarDB-X achieved top-tier performance on large wide table workloads. Specific performance results can be found in the ClickBench performance white paper, showing that queries on a 4×8-core, 32 GB memory setup completed in just 23 seconds.

In the final section, we analyze the bottlenecks in the ClickBench dataset and discuss two potential optimization directions: Swiss Hash Table and HyperScan. We believe these improvements will further enhance PolarDB-X's performance in the future.

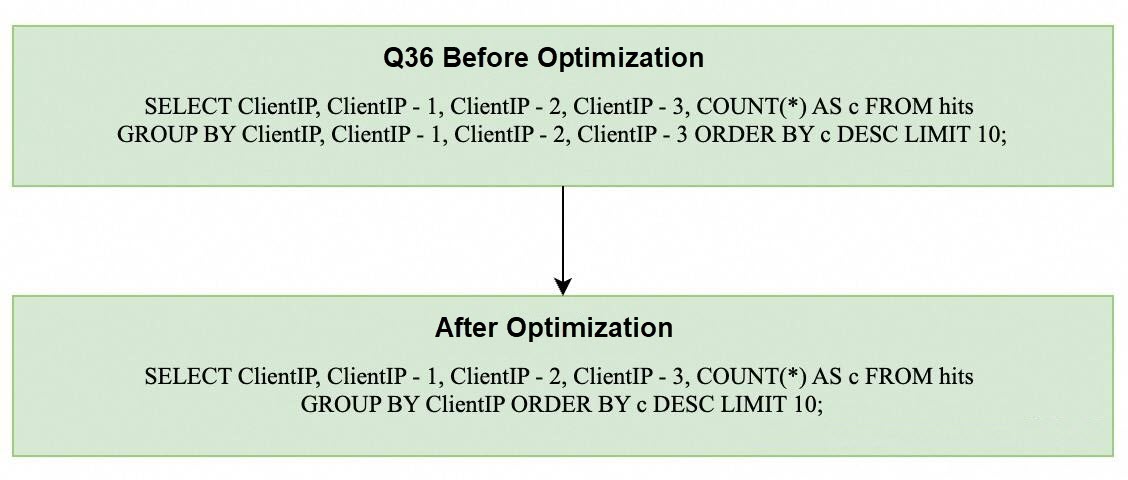

For Q36, although the GROUP BY clause contains four columns, all of them are expressed as ClientIP minus a constant. Once ClientIP is determined, the values of ClientIP, ClientIP - 1, ClientIP - 2, and ClientIP - 3 are uniquely determined as well. Therefore, the groupId in the GROUP BY operation will be the same, allowing us to safely reduce the aggregate columns to just GROUP BY ClientIP and eliminate unnecessary computation.

The same logic applies to Q35. In the GROUP BY 1, URL clause, the first column is a constant (1), so rewriting it as GROUP BY URL does not affect the final result.

SELECT 1, URL, COUNT(*) AS c FROM hits GROUP BY 1, URL ORDER BY c DESC LIMIT 10;

PolarDB-X's optimizer is implemented based on the Cascade model. Thanks to its highly extensible rule framework, adding a new Rule-Based Optimization (RBO) rule is straightforward. For Q36, the simplified logic is shown below.

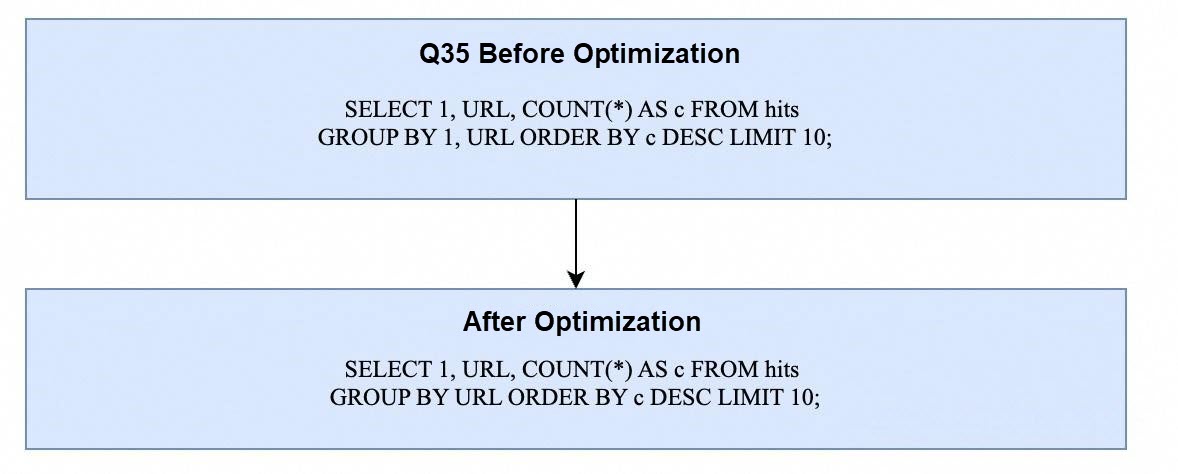

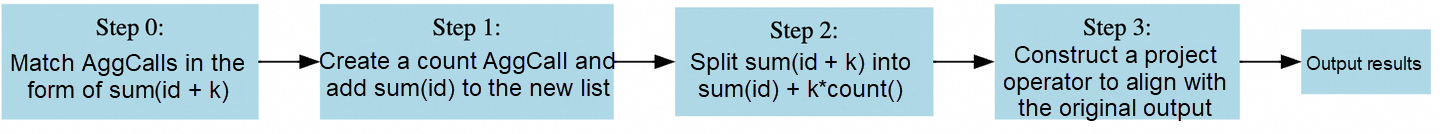

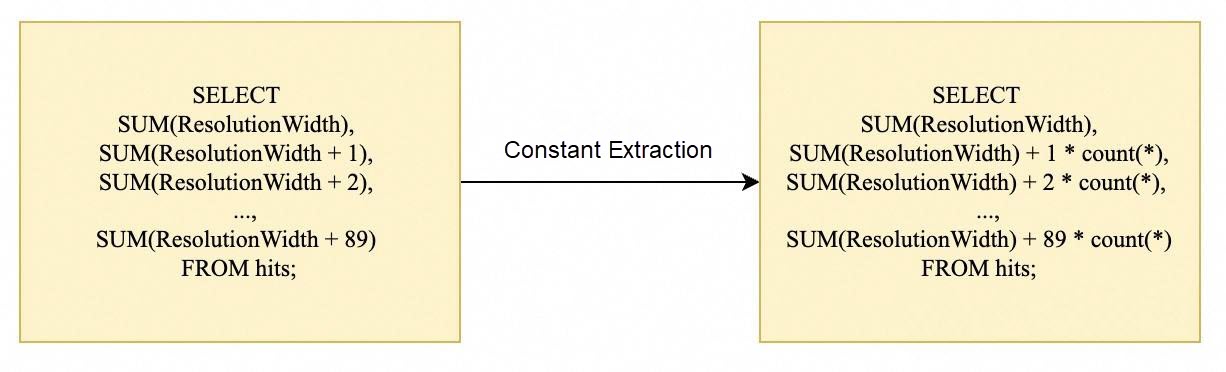

In ClickBench, some SQL statements can be rewritten using the optimizer's RBO rules without altering their original semantics. For example, Q30 requires computing the sum of ResolutionWidth plus a constant 90 times. In the executor, this would normally require maintaining 90 separate columns. However, by applying the distributive law, the SQL statement can be rewritten in the following way:

# Q30 before optimization

SELECT SUM(ResolutionWidth), SUM(ResolutionWidth + 1), SUM(ResolutionWidth + 2) \

, SUM(ResolutionWidth + 3), SUM(ResolutionWidth + 4), ..., SUM(ResolutionWidth + 89) FROM hits;

# Q30 after optimization

SELECT SUM(ResolutionWidth), SUM(ResolutionWidth) + 1 * count(*)\

, SUM(ResolutionWidth) + 2 * count(*) \

, SUM(ResolutionWidth) + 3 * count(*) \

, SUM(ResolutionWidth) + 4 * count(*) \

, ..., SUM(ResolutionWidth) + 89 * count(*) FROM hits;This allows the executor to compute only the SUM(ResolutionWidth) column and the COUNT(*) column, significantly reducing the computational overhead.

In ClickBench, many queries use COUNT(DISTINCT()), such as Q5.

SELECT COUNT(DISTINCT UserID) FROM hits;In PolarDB-X, DISTINCT is implemented by inserting the groupID together with the DISTINCT column into a hash table to remove duplicates.

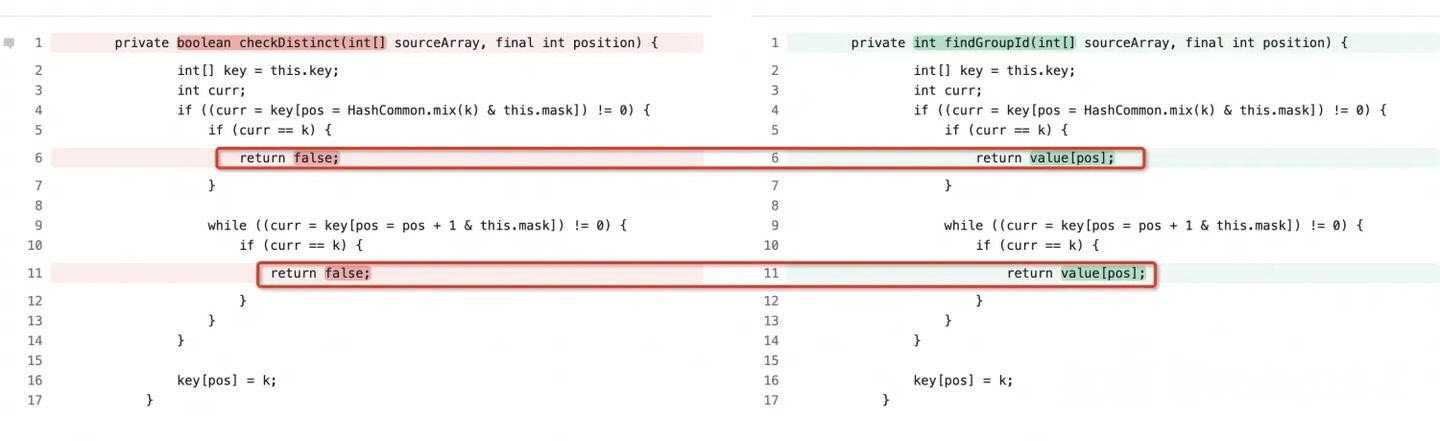

In the original implementation of PolarDB-X, DISTINCT and GROUP BY shared the same aggOpenHashMap.

However, since DISTINCT needs to only check for duplicates and does not require tracking groupID, we designed a dedicated HashSet for DISTINCT. In a HashMap, both a key array and a value array are needed: The key array maps hash to groupByKey, and the value array maps hash to groupID. In contrast, a HashSet requires only the key array.

Because the HashSet only checks whether each row is distinct, it does not need to compute any groupID-related information, reducing computational overhead. (In the diagram below, the left side shows the HashSet code example, whereas the right side shows the HashTable code example.)

For example, in ClickBench Q5 (SELECT COUNT(DISTINCT UserID) FROM hits;), using a HashSet instead of a HashMap achieves a 20% performance improvement.

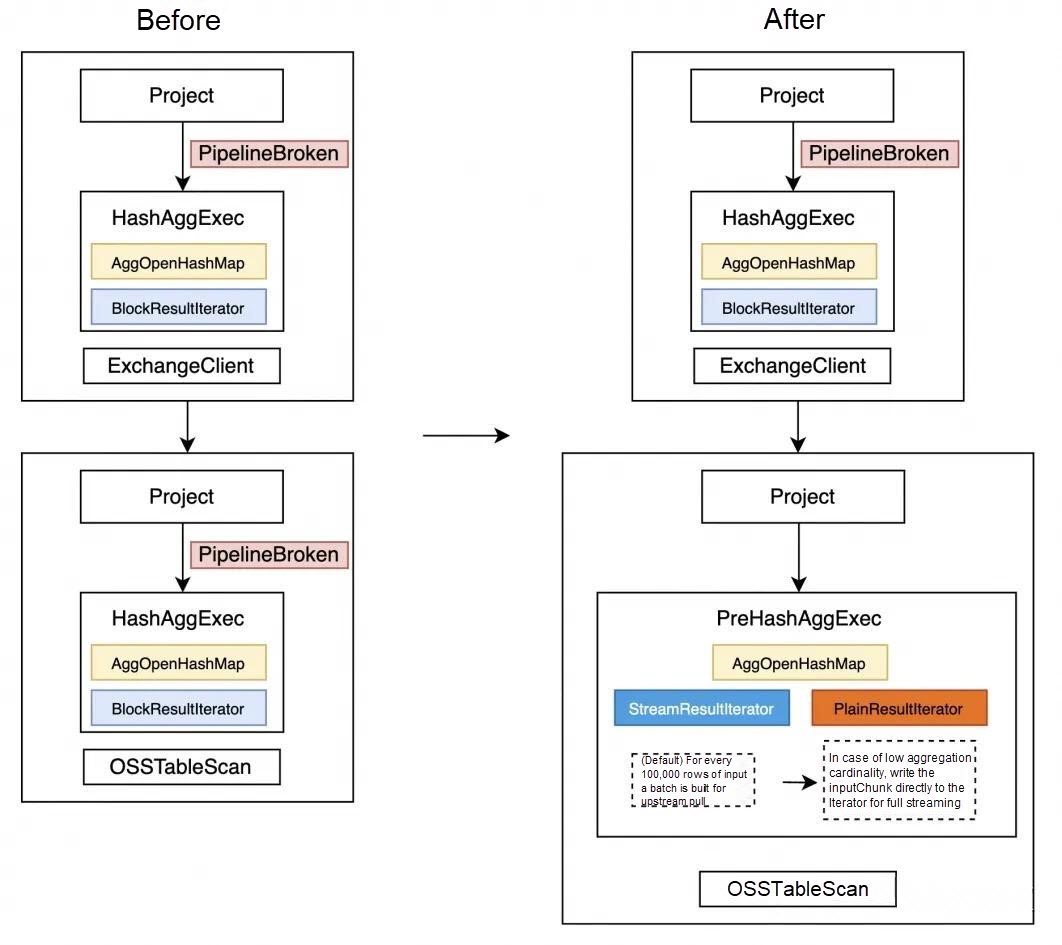

Two-phase aggregation accelerates the majority of SQL queries in ClickBench. For example, in Q10 with GROUP BY RegionID, the cardinality of RegionID is only 150,000, so pre-aggregation can significantly reduce the amount of shuffled data.

However, for Q32 and Q33, which perform GROUP BY on WatchID (Long) and ClientIP (Int), the combined cardinality is close to the table size. In these cases, performing pre-aggregation does not reduce the amount of data shuffled. It only incurs hash table overhead. In our tests, two-phase aggregation for Q33 was twice as slow as single-phase aggregation.

In the original PolarDB-X implementation, enabling ENABLE_PREFER_AGG lets the optimizer decide whether to use two-phase aggregation based on table cardinality statistics. However, these estimates are often inaccurate, especially for multi-column cardinalities, which can deviate from reality by one to two orders of magnitude.

Therefore, we implemented adaptive two-phase aggregation at the executor level with the following steps:

In our tests, the adaptive two-phase aggregation correctly determines whether to enable two-phase aggregation for each SQL query. While ensuring that the performance of other queries remains unaffected, it reduced the execution time of Q33 from 5.5 seconds to 3.4 seconds.

For Q34, the SQL statement is:

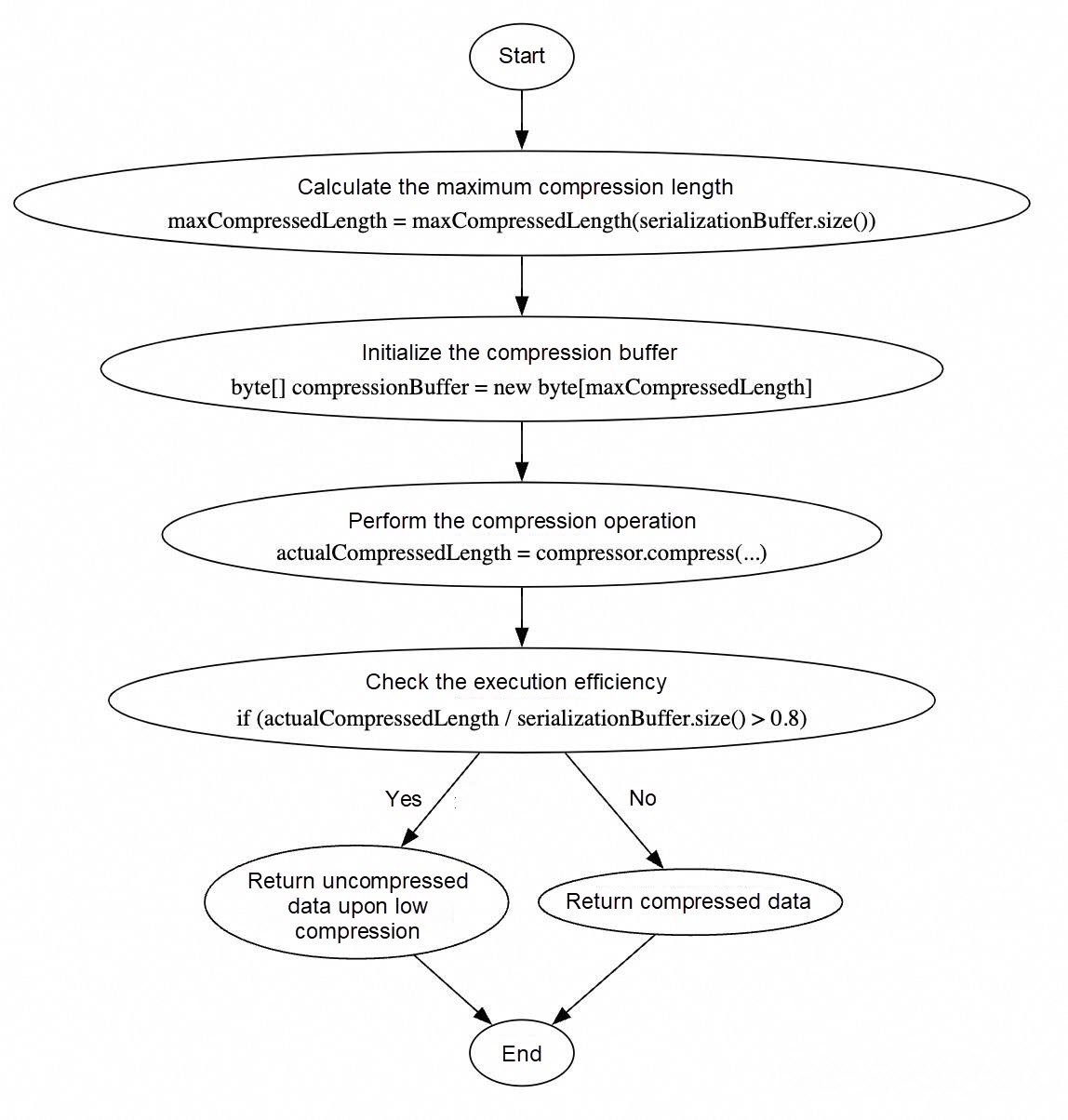

SELECT URL, COUNT(*) AS c FROM hits GROUP BY URL ORDER BY c DESC LIMIT 10The URL column has a relatively large size (averaging 100 bytes), and we observed that network I/O during node shuffles became a bottleneck. On a 2×16-core and 64 GB memory setup, the measured shuffle data reached 2.4 GB. To address this, we applied LZ4 compression to chunks during network transmission.

However, when compression ratios are low, the shuffle data volume may not decrease, and decompression overhead is introduced. Therefore, we dynamically evaluate the compression ratio for each chunk and only return the compressed chunk upstream if the ratio exceeds a specific threshold.

The main workflow is illustrated in the diagram below.

With compression enabled, the network transmission data for Q34 was reduced from 2.4 GB to 300 MB, achieving a 2-second improvement in execution time.

private SerializedChunk serializeForce(Chunk page) {

int maxCompressedLength = maxCompressedLength(serializationBuffer.size());

byte[] compressionBuffer = new byte[maxCompressedLength];

int actualCompressedLength = compressor.get()

.compress(serializationBuffer.slice().getBytes(), 0, serializationBuffer.size(), compressionBuffer, 0,

maxCompressedLength);

//MINIMUM_COMPRESSION_RATIO is 0.8 by default

if (((1.0 * actualCompressedLength) / serializationBuffer.size()) > MINIMUM_COMPRESSION_RATIO) {

return new SerializedChunk(serializationBuffer.slice(), ChunkCompression.UNCOMPRESSED,

page.getPositionCount(), serializationBuffer.size());

}

return new SerializedChunk(

Slices.copyOf(Slices.wrappedBuffer(compressionBuffer, 0, actualCompressedLength)),

ChunkCompression.COMPRESSED,

page.getPositionCount(),

serializationBuffer.size());

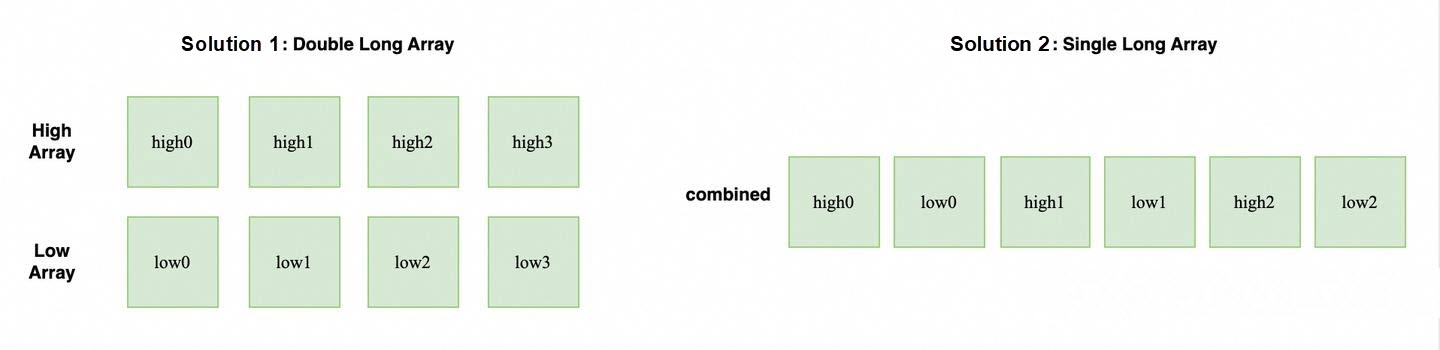

}Applying type-specific optimizations to inputChunk during GroupBy can significantly boost aggregation performance. Since Java does not natively support Int128, it must be simulated using long arrays.

There are two possible implementations:

Testing results show that the two-array approach slightly outperforms the single-array approach.

In the implementation, the serializedInputBlock needs to only maintain references to the two long arrays in the chunk without additional memory allocation. (However, a new key array must still be created since the hash table must remain in memory.)

// only maintain reference

serializedBlock = new Int128Array(longBlock1, longBlock2);Take ClickBench Q9 SELECT RegionID, COUNT(DISTINCT UserID) AS u FROM hits GROUP BY RegionID ORDER BY u DESC LIMIT 10; as an example. Compared to the default GroupBy implementation, Int128GroupBy achieves a 3× performance improvement.

ClickBench Q3: SELECT MIN(EventDate), MAX(EventDate) FROM hits;

For aggregate functions without a GROUP BY clause, there is no need to compute groupId values or perform accumulation for each group. Therefore, a dedicated aggregation semantics can be designed for such cases.

Specifically for Q3, we can implement a specialized DateMaxMinNoGroupByAccumulator, which simply iterates over each input row to track the minimum and maximum values.

@Override

public void accumulate(Chunk chunk, Chunk inputChunk) {

Block block = chunk.getBlock(0);

if (block instanceof DateBlock) {

int position = block.getPositionCount();

if (!isVisited) {

beforeValue = ((DateBlock) block).getPackedLong(0);

isVisited = true;

}

for (int i = 0; i < position; i++) {

long newValue = ((DateBlock) block).getPackedLong(i);

boolean cmp = newValue > beforeValue;

if ((isMin && !cmp) || (!isMin && cmp)) {

beforeValue = newValue;

}

}

}

}ClickBench Q4 involves computing the AVG function:

SELECT AVG(UserID) FROM hits;In PolarDB-X, the AVG function is calculated as

Since the UserID column is of the Long type, SUM(UserID) may exceed LONG_MAX, so it must be stored using the Decimal type.

In a naive decimal implementation, a byte array is maintained in the underlying layer to represent each digit, and addition or subtraction requires handling carry and borrow operations.

This is relatively time-consuming. To optimize this, PolarDB-X introduces Decimal64 and Decimal128 types. Decimal64 is implemented with a single long variable, whereas Decimal128 uses two long variables to represent the low and high digits, respectively.

When computing SUM, Decimal64 is used by default. If it is about to overflow, the system switches to Decimal128. If Decimal128 approaches overflow, it falls back to the naive decimal implementation.

@Override

public void accumulate(int groupId, Chunk inputChunk, int[] groupIdSelection, int selSize) {

Block inputBlock = inputChunk.getBlock(0);

DecimalBlock decimalBlock = inputBlock.cast(DecimalBlock.class);

// Prepare result array and execute summary in vectorization mode.

results[0] = results[1] = results[2] = 0;

decimalBlock.cast(Block.class).sum(groupIdSelection, selSize, results);

// Check sum result state and try to directly append sum result.

if (results[2] == E_DEC_DEC64) {

rescale(decimalBlock.getScale());

long sumResult = results[0];

accumulateDecimal64(groupId, sumResult);

} else if (results[2] == E_DEC_DEC128) {

rescale(decimalBlock.getScale());

long decimal128Low = results[0];

long decimal128High = results[1];

accumulateDecimal128(groupId, decimal128Low, decimal128High);

} else {

// Fall back to row-by-row mode

for (int i = 0; i < selSize; i++) {

int position = groupIdSelection[i];

accumulate(groupId, inputBlock, position);

}

}

}After continuous optimization, PolarDB-X now delivers excellent performance on the ClickBench dataset. The current performance bottlenecks are mainly in Q29's REGEXP_REPLACE operations and the GROUP BY STRING operations in Q33 and Q34.

For Q29, Q33, and Q34, our team already has internal solutions, which are currently under testing and development.

For Q33, the performance bottleneck lies in the GROUP BY STRING operator, whose underlying implementation is an open-addressing hash table for String types. The industry also offers specialized algorithmic optimizations for this scenario. For instance, ClickHouse's paper "SAHA: A String Adaptive Hash Table for Analytical Databases" proposes a dispatch mechanism that handles strings of different lengths separately.

SELECT URL, COUNT(*) AS c FROM hits GROUP BY URL ORDER BY c DESC LIMIT 10;As a distributed HTAP database targeting general-purpose workloads, PolarDB-X recognizes the value of type-specific optimizations, but we also aim to focus on higher-level, more broadly applicable improvements.

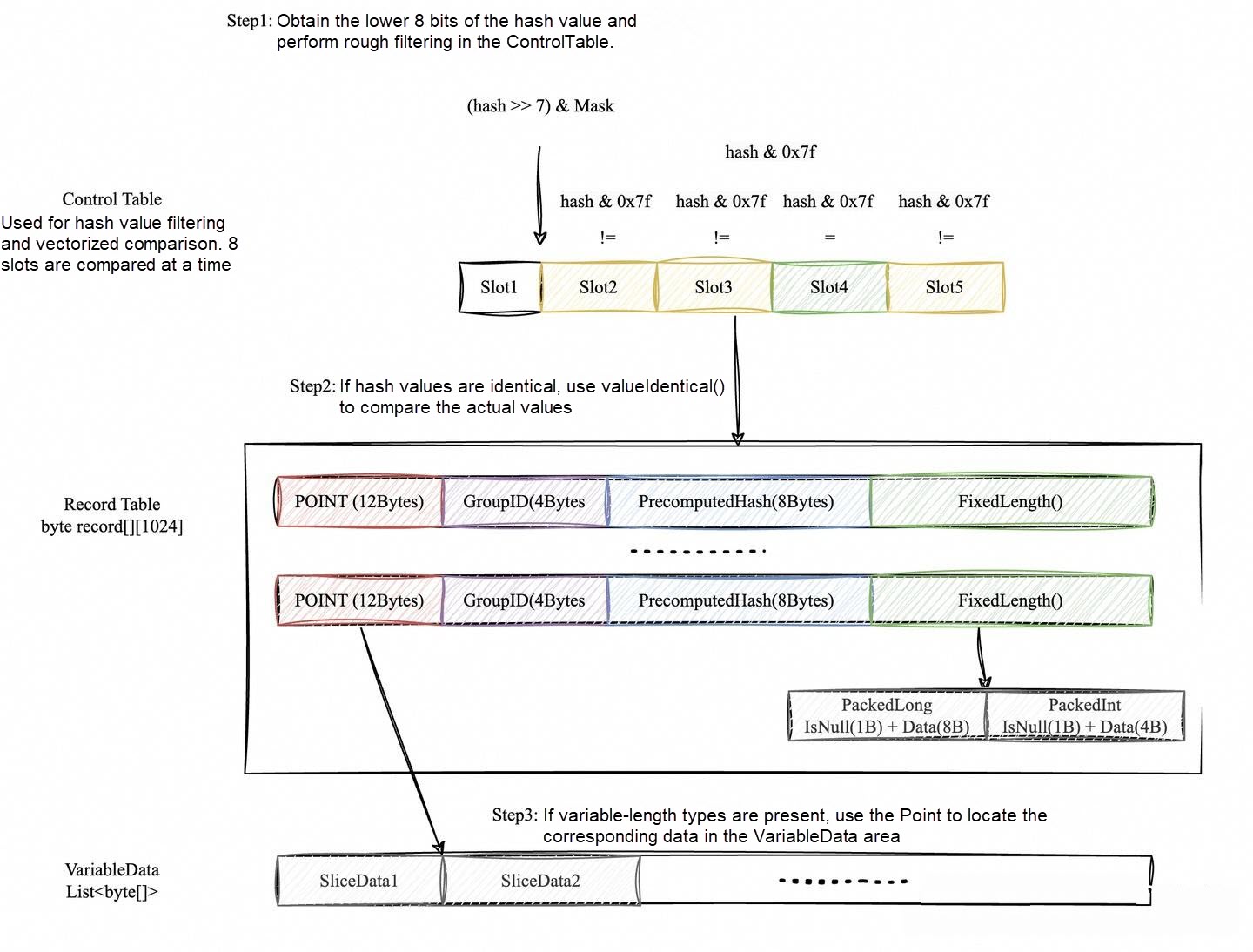

During internal research, we found the Swiss Hash Table, introduced at CppCon 2017, particularly inspiring. This hash table not only optimizes across all data types but also fully leverages CPU-level SIMD acceleration. Combined with PolarDB-X's prior explorations of SIMD instructions, we believe this algorithm can deliver the greatest performance benefits in general-purpose scenarios.

Specifically, the Swiss Hash Table offers the following advantages:

Due to space constraints, we will not provide a detailed introduction of the Swiss Hash Table here. Instead, we present a practical design approach for implementing it in PolarDB-X.

The design of the Swiss Hash Table is divided into three layers:

1. ControlTable: used for rough filtering during probing based on the hash values of elements to be matched.

2. RecordTable: stores fixed-length data.

Each entity is structured in the following way:

3. VariableData: stores variable-length data.

The main probing process works in the following way:

This method filters out a large number of invalid entries in the first step, significantly accelerating the probing process.

For Q29, the performance bottleneck lies in the implementation of REGEXP_REPLACE. Regular expressions can generally be implemented using two approaches: Nondeterministic Finite Automata (NFA) and Deterministic Finite Automata (DFA). The key difference is whether multiple paths are allowed from one state to reach the next.

In the Java ecosystem, three main REGEXP_REPLACE implementations are commonly used:

1. JONI: Originally developed in the JRuby project and evolved from Ruby's Oniguruma library, JONI uses an NFA-based matching strategy. It generally performs well in most cases. However, because NFA matching is driven by the regex pattern, backtracking occurs on the input string when a match fails, which can lead to exponential time complexity in extreme cases.

2. Re2J: Evolved from Google's Re2 project, Re2J uses a DFA-based matching approach. It is driven by the input string, filtering out invalid regex fragments during traversal. This ensures time complexity linear to the input string. However, Re2J is not fully mature, lacks some matching features, and in most scenarios performs worse than JONI.

3. HyperScan-Java: Based on Intel's HyperScan project, first presented in the NSDI 2019 paper "HyperScan: A Fast Multi-pattern Regex Matcher for Modern CPUs," HyperScan has two major advantages over JONI and Re2J:

Mainstream OLAP engines, such as ClickHouse, use HyperScan or its variants (such as VectorScan).

However, HyperScan-Java is currently inefficient due to the high CPU cost of Java-to-C++ object conversions. Moving forward, we plan to integrate PolarDB-X's vectorized expression capabilities and modify HyperScan-Java to perform batch regex replacements, further accelerating performance.

Through the optimizations described above, PolarDB-X has achieved significant performance improvements on the ClickBench large wide table. Enhancements to the optimizer rules have substantially reduced unnecessary computation, whereas executor-level optimizations, such as the independent HashSet, adaptive two-phase aggregation, and efficient compression mechanisms, have greatly improved the efficiency of DISTINCT operations, adaptive aggregation, and network data transmission. Although bottlenecks remain in regex processing and string-based GROUP BY operations, the PolarDB-X team has developed solutions, including the Swiss Hash Table and HyperScan optimizations, which are expected to further enhance system performance. Overall, these continuous optimizations and innovations ensure that PolarDB-X remains competitive in the distributed HTAP database space, providing users with high-performance, reliable analytical processing capabilities.

Address Data Security and Latency to Accelerate Cloud Adoption: PolarDB on ENS

ApsaraDB - March 19, 2025

ApsaraDB - March 26, 2024

ApsaraDB - November 24, 2025

ApsaraDB - September 29, 2021

ApsaraDB - March 26, 2025

ApsaraDB - December 12, 2025

PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Database for FinTech Solution

Database for FinTech Solution

Leverage cloud-native database solutions dedicated for FinTech.

Learn MoreMore Posts by ApsaraDB