By Chenyu

PolarDB-X V2.4 supports columnar query acceleration. It builds a mature columnar storage SQL engine on a low-cost OSS storage base to accelerate query analysis with better performance and higher cost-effectiveness.

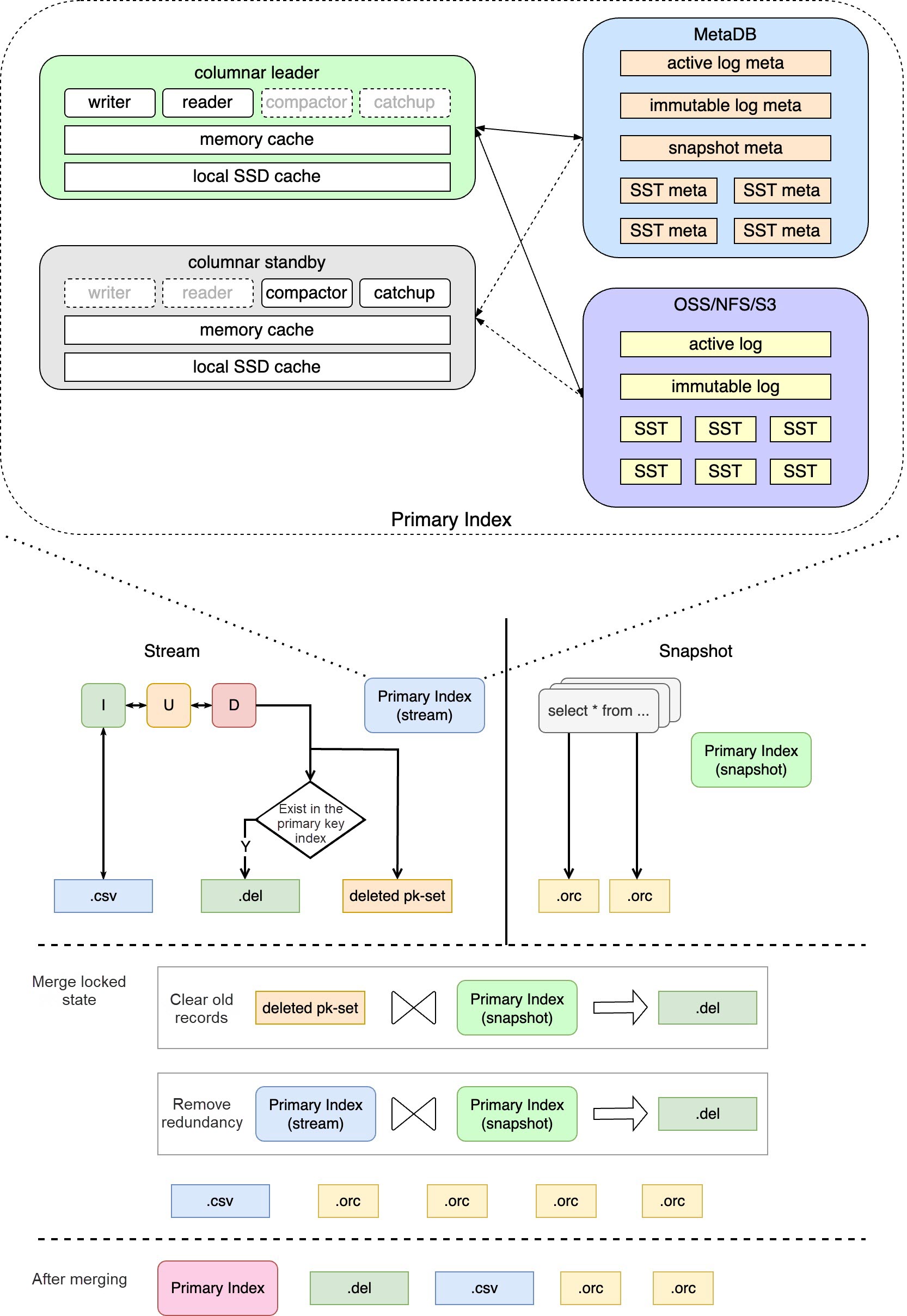

Columnar query acceleration is based on an architecture that separates storage and computing: A columnar engine builds clustered columnar indexes, consumes binlogs of distributed transactions, synchronizes them to clustered columnar indexes, and stores them in OSS. Compute Engine (CN) supports columnar query and transaction management. The storage of columnar indexes adopts an organizational structure of Delta + Main + Delete-and-Insert, and the constructed data will be stored on OSS in the format of CSV + ORC + Delete bitmap. This mode implements low-latency synchronization from row storage to column storage compared with Copy-on-Write and Merge-on-Read. It does not impose excessive overhead on the query side. For more information, please refer to PolarDB-X Clustered Columnar Index | The Birth of the Columnar Engine.

To achieve efficient updates for the Delete-and-Insert scheme in column storage, it is necessary to quickly locate the positions of historically valid data for marked deletion. This introduces the only stateful object required in this column storage: the Primary Index (PkIndex). At the same time, the PkIndex's high performance and persistency have also become essential for achieving large-scale column storage on a single node, presenting significant technical challenges.

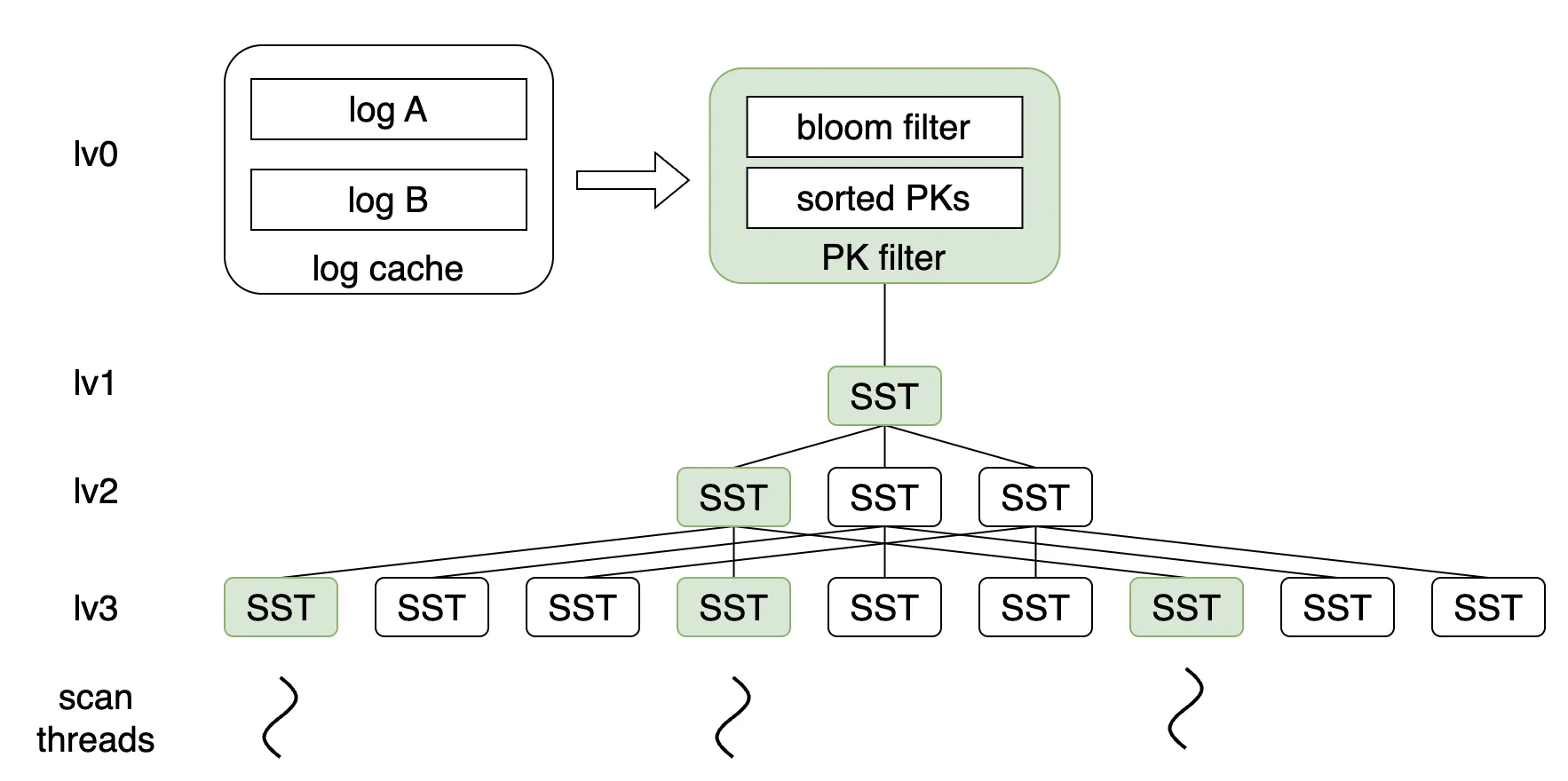

Since it is located on the main path of column storage maintenance, the read and write performance of the PkIndex directly determines the upper limit of the SLA for low-latency column storage. At the same time, it plays a crucial role in ensuring the correctness of column-oriented data during full build and incremental maintenance. The following figure shows the PkIndex's overall module and its role in building a clustered columnar index.

To meet the requirements of low storage costs and stateless migration of columnar engine nodes, PolarDB-X stores the PkIndex data on OSS, while metadata is stored in MetaDB. After considering the features of object storage and the implementations of storage engines in the industry, we have developed our own PkIndex storage engine based on OSS. We have opted for the LSM-tree data structure and carried out a series of optimizations tailored to OSS features, meeting the columnar engine's needs for high-throughput writes and low-latency queries.

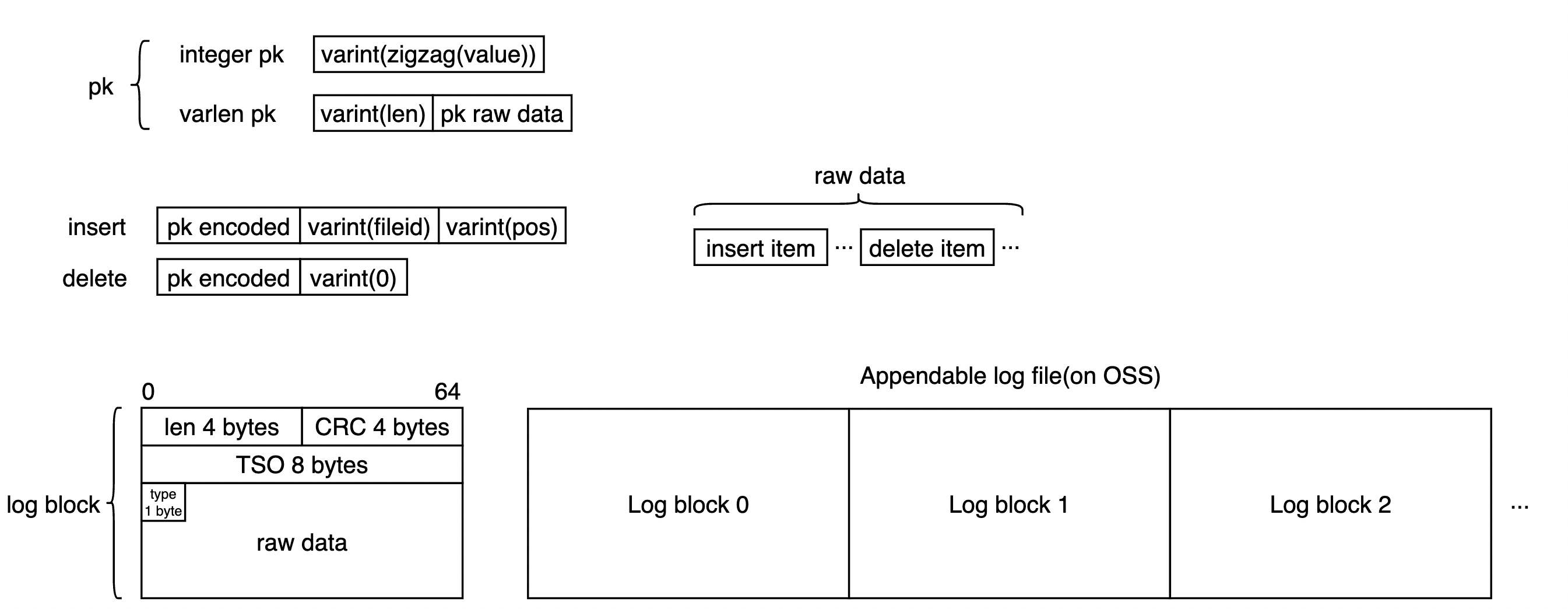

The PkIndex is compressed and encoded based on the characteristics of the data to maximize storage space compression and increase the density of valid data.

In the PkIndex, the Key is the primary key of the data record, and it primarily supports two types:

• Integer primary keys are compressed and stored as variable-length integers after undergoing zigzag encoding.

• Other types of primary keys have their length recorded as variable-length integers, followed by the binary-encoded primary key, supporting any custom-defined types.

Value is the location of the column-oriented data, and there are two types:

• The corresponding column-oriented data is valid, and it consists of the file (fileId) and offset (pos) where the corresponding row data is stored in column storage. Both are compressed and stored using variable-length integers.

• The corresponding column-oriented data is deleted and marked with a single byte 0.

Writes are submitted in batches. Batch data includes inserting entries and deleting entries. Operations with the same key will be compressed into a single record, encoded as described above, and densely arranged in the order of operations within a log block. Each log block header will store the block length, a checksum, the submission timestamp, and the primary key type. The overall functions are shown in the following figure:

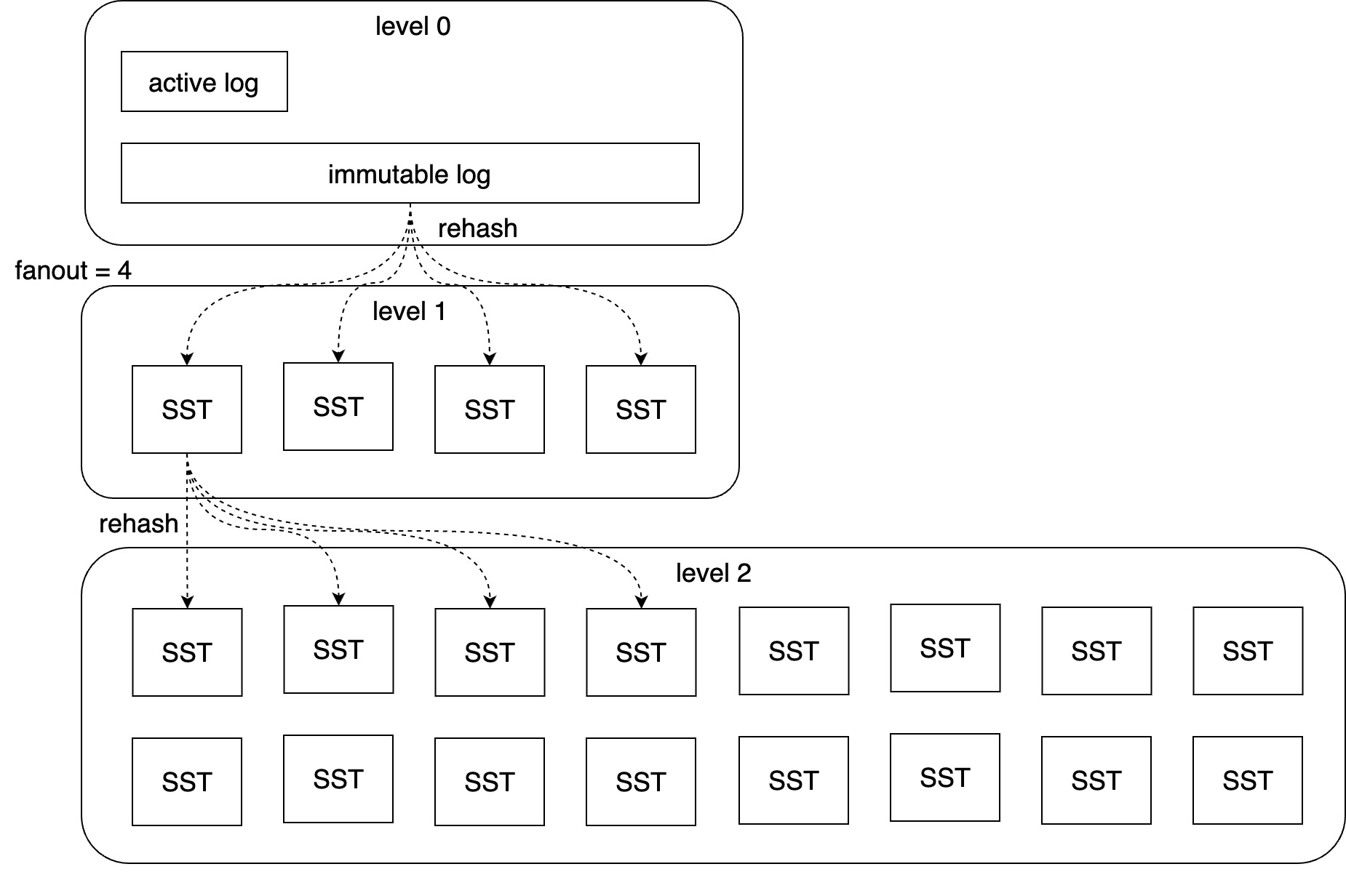

As more data is written, the log becomes longer, reducing the reading and recovery time. The compaction module merges and splits the log module into the lower-level SST. The entire LSM tree is shown in the following figure:

It is based on the LSM tree concept.

Level 0 serves as the log layer, where data is interspersed, and unordered. Data is written in sequence and written in batches during commit times.

Levels 1 - n are the SST layers, where data does not overlap, and the data within each file is sorted by key.

Data storage sharding uses a consistent hashing scheme, ensuring that one SST at layer n strictly corresponds to k files at layer n+1, where k is the parameter fanout.

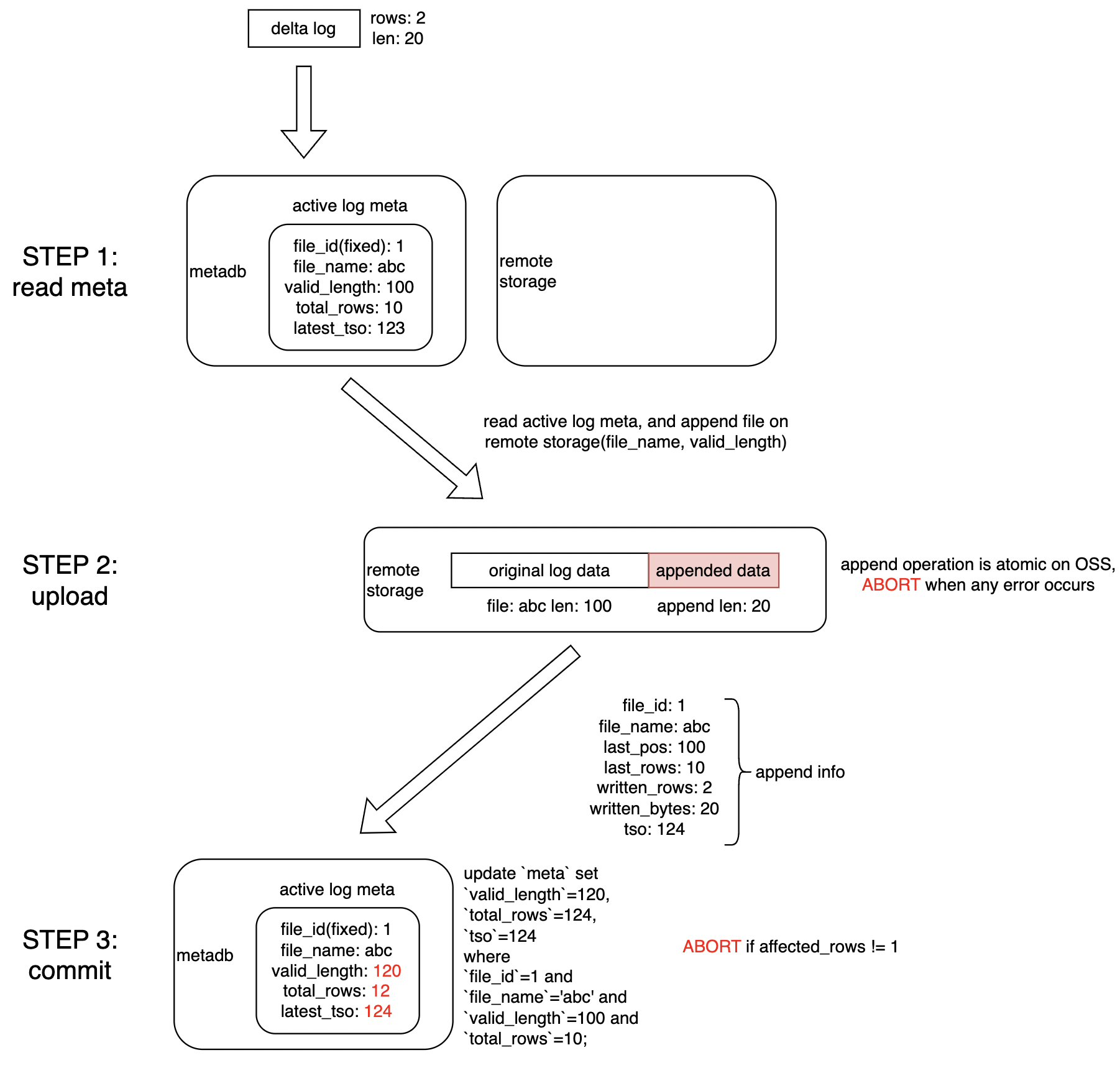

The columnar engine has multiple nodes, such as primary and hot standby nodes. Metadata of the data is stored across OSS and MetaDB. In addition, the PkIndex must be capable of rapid migration to pull up writes. Therefore, ensuring its correctness in multi-node active scenarios is particularly important. Here, we disassemble the writing process into three steps to implement atomic writes in a Compare-And-Set-like manner, ensuring no partial or blind updates.

The specific process is as follows:

• First, use the snapshot read to obtain the current active log checkpoint.

• With OSS's append capability, log data is atomically appended to a specific checkpoint. If a write conflict occurs, an error will be reported at this step.

• An update statement atomically updates the active log checkpoint in the metadatabase, and the number of updated rows determines whether there is a write conflict. Finally, the entire log is submitted.

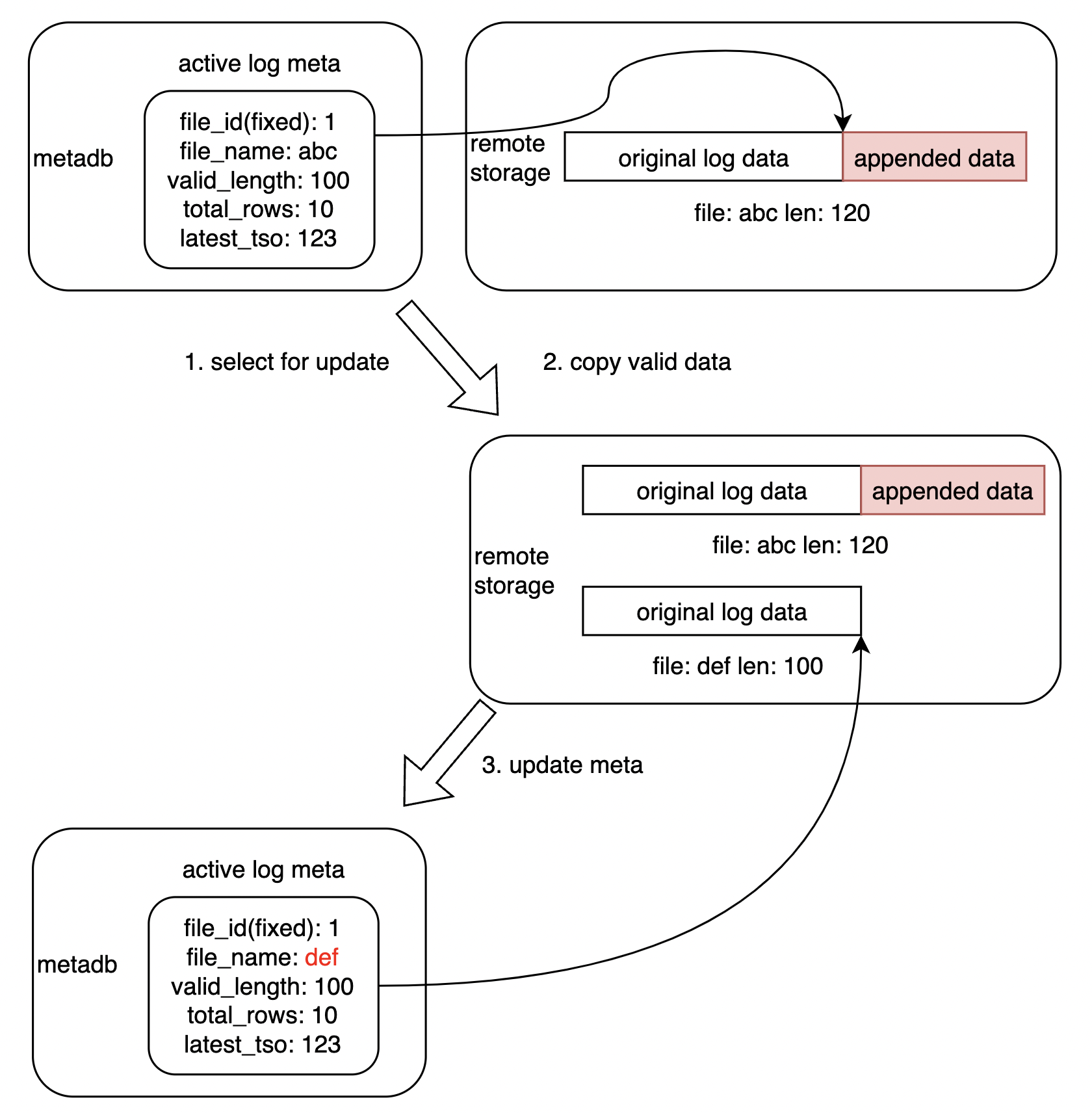

Due to the decoupling of data updates and metadata updates, conflicts or unexpected crashes in certain scenarios may result in residual intermediate states. The following fix process can be used to restore a consistent state.

The specific process is as follows:

• By using select for the update to lock the corresponding log metadata, concurrent operations are avoided.

• Retain the original log file and copy its valid parts to the new file.

• Update the metadata to point to the new file to ensure consistency between the files and the metadatabase on OSS.

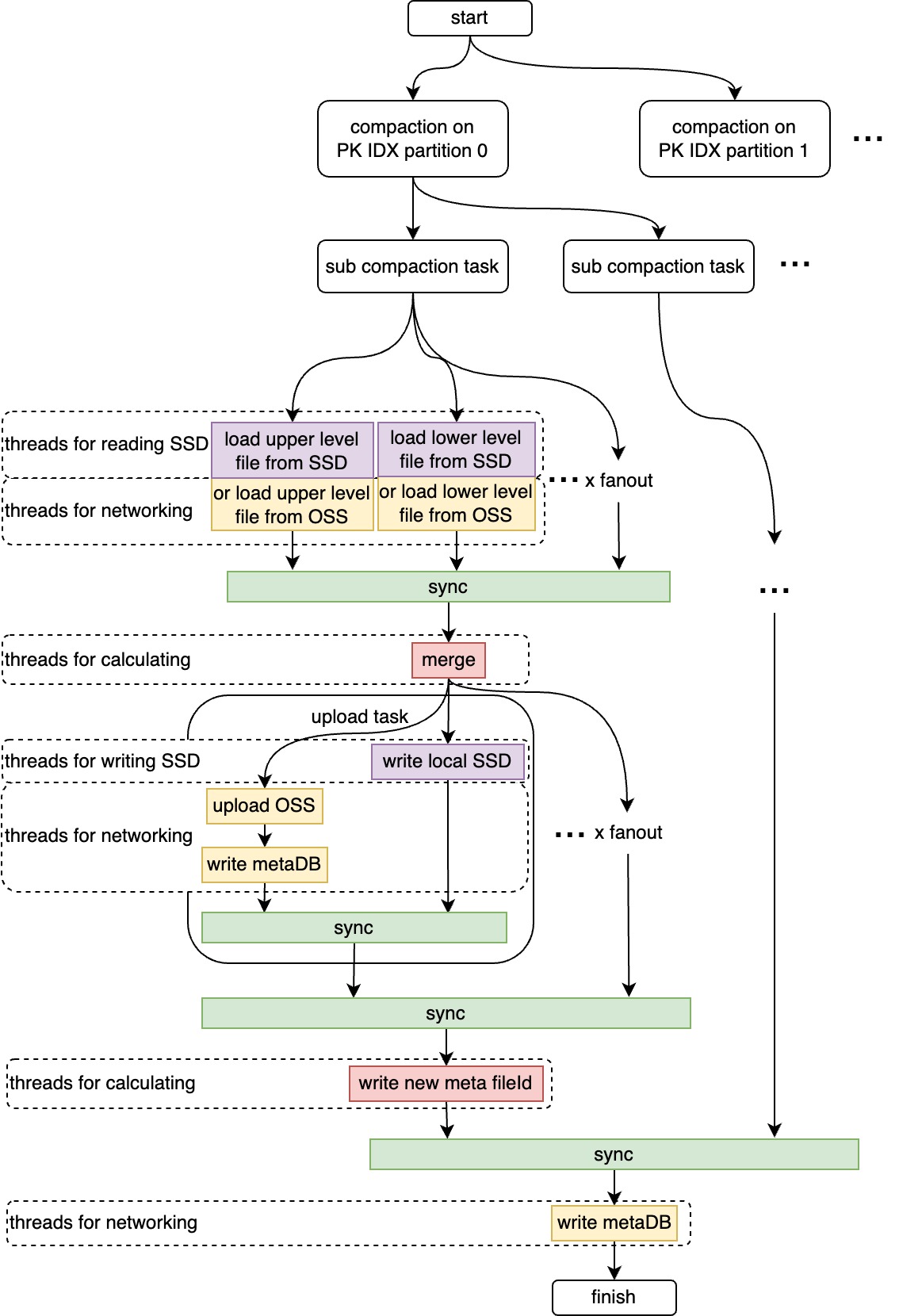

Compaction is an important part of the LSM tree, so the PkIndex storage engine makes optimizations for OSS's high latency and high throughput.

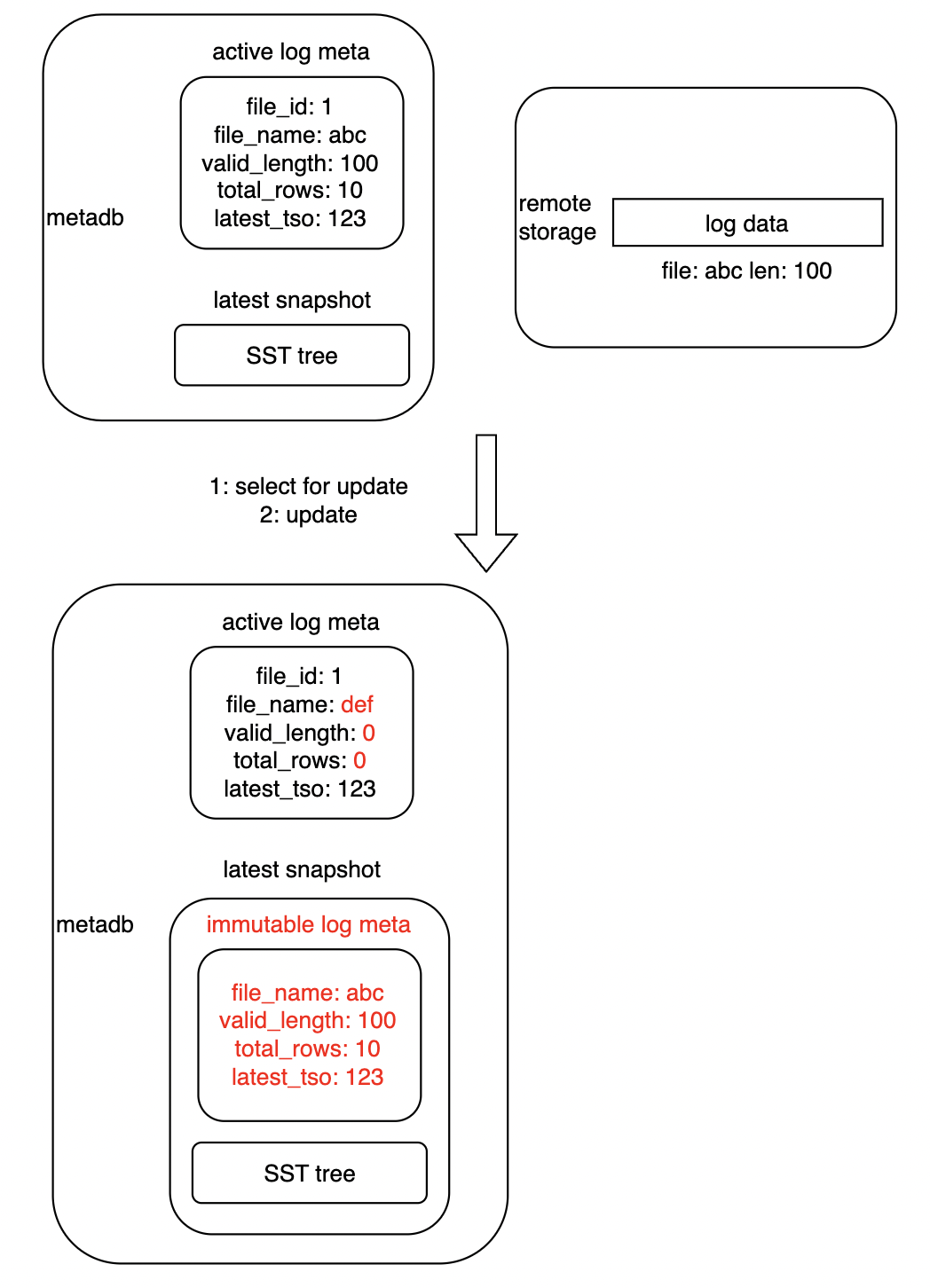

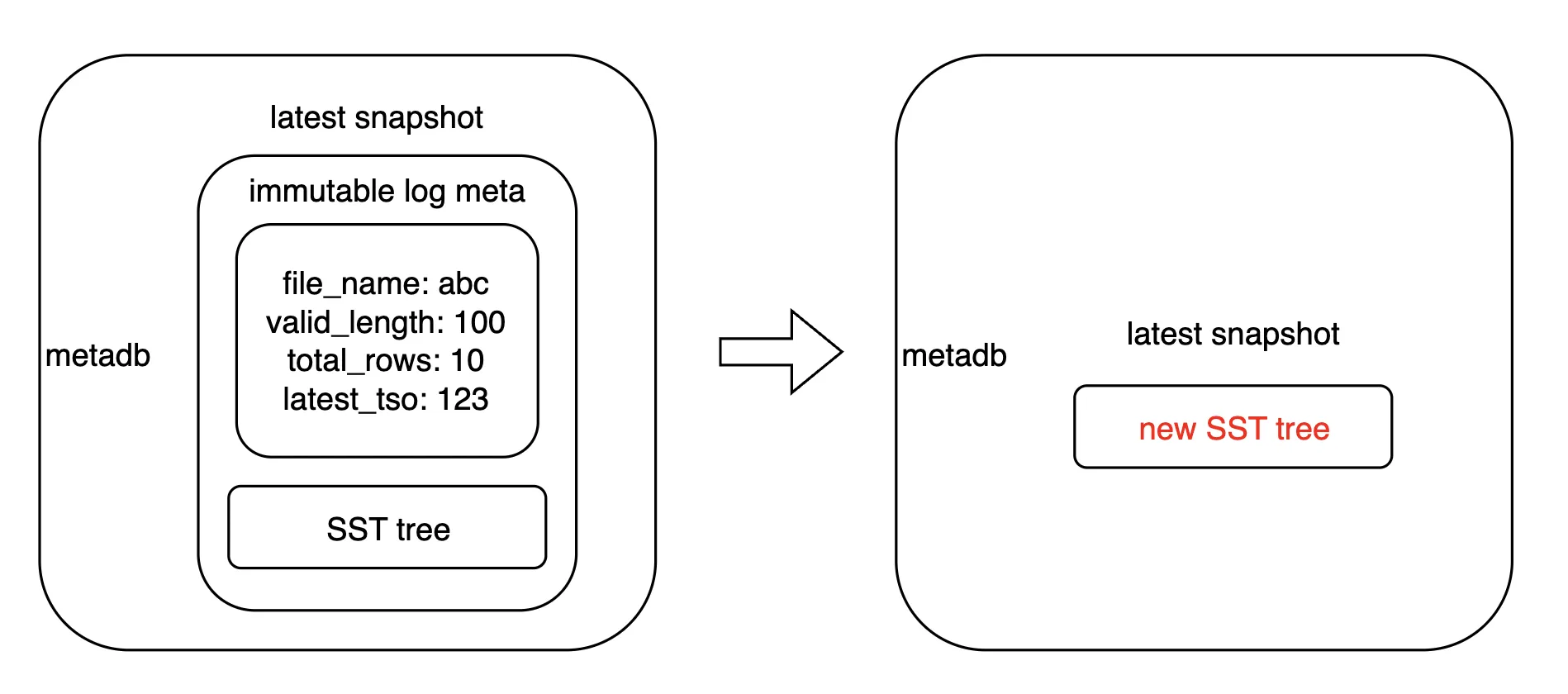

First, to adapt to the decoupling of storage and metadata scenarios, the PkIndex splits the compaction into two steps to improve process reliability and operation idempotence:

• Generate a snapshot to be compacted with an immutable log.

• Perform equivalent transformations on the preceding snapshot to eliminate the immutable log and generate a new snapshot.

Secondly, the data tiering and distribution rules described above divide the LSM tree into several independent compaction subtasks in fanout units. The scheduling task is fine-grained to adapt to low-specification configurations. For high-specification configurations, you can use task types to divide thread pools and parallel scheduling to maximize the high throughput of OSS storage. You can also use high-concurrency, fine-grained, and multi-pipeline task orchestration to accelerate the compaction process.

For the PkIndex, there are only two access modes:

• Point queries are used to find the location of column-oriented data that needs to be deleted and to mark it with a deleted flag.

• Full traversal is used to deduplicate data while creating clustered columnar indexes and merging incremental and full data.

The special access mode is also an important reason for the hierarchical distribution rule of consistent hashing selected by the PkIndex. As point queries are high-frequency requests on the main path of column-oriented data update, they are also the weakness of the LSM tree. To solve this problem, the PkIndex is optimized as follows:

• Based on the assumption of access frequencies for hot and cold data, the LSM tree is inherently capable of separating hot and cold data.

• The hierarchical distribution algorithm of consistent hashing requires only one point query in each layer, and the key hash can be used to quickly and uniquely determine the SST file.

• The metadata stores the Bloom filter for each SST, further reducing unnecessary SST reads.

• The SST file size threshold adapts to the optimal size of OSS to improve OSS access efficiency.

• Multi-layer cache is employed, with all level 0 logs residing in memory and being accelerated by hash tables. For level 1 - n SSTs, a three-layer cache structure of memory, SSD, and OSS is used to minimize the cost of cache misses as much as possible.

For full traversal requests, a bottom-up traversal approach filters out data found in the corresponding upper layers to achieve valid data traversal. At the same time, paralleling the arrangement of scanning threads according to the distribution of consistent hashing can maximize the cache hit rate.

As the only mutable, unordered, and possibly duplicative container within the data structure, the data in the log layer requires auxiliary data structures to speed up read performance. We use the hash table in the PolarDB-X PkIndex to achieve this function. Unlike the traditional LSM tree, which first writes to MemTable and then generates logs, we first create delta logs, and after the atomic submission, we then re-release them into the hash table. The hash table exists only as a log cache. Based on this feature, the compaction process also promotes data iteration in the log cache. The log cache is divided into multiple hash tables. After log switching and log pushing into SST, the hash table is rolled out to release memory.

The SST cache is relatively simple, as SST is an immutable data structure with internal order and a sparse index. The cache only stores binary SST data. Whether it is a point query or traversal, you only need to maintain a cursor, use sparse indexes for binary search positioning, and then spit out data while decoding.

In addition, since the PolarDB-X columnar engine is developed using Java, there is a thorny problem in developing storage engines using Java, which is memory management. For hash tables with non-basic data structures, cache data can easily fall into the old generation in scenarios with many small objects, long lifecycles, and large piles of memory. Billions of small objects consume a significant amount of CPU, increasing scanning time in the FGC process, causing serious performance problems and prolonged Stop-the-World pauses. To solve this problem, we have developed a memory management system based on Arena, hash tables, and sorting algorithms, which will be described in subsequent articles.

The PolarDB-X columnar engine uses the mode of one master mode and one hot standby node, with master election based on lease contention. The PkIndex is used as a stateful data structure and occupies nearly half of the node memory as a buffer pool. When the master node is switched for high availability, the hot standby node can quickly take over from it and provide column store building services. Therefore, the hot standby node needs to be able to promptly follow up on the replay log of the master node. After completing the commit, the master node proactively broadcasts a sync command, and the hot standby node completes rapid replay based on the log checkpoint in the metadatabase.

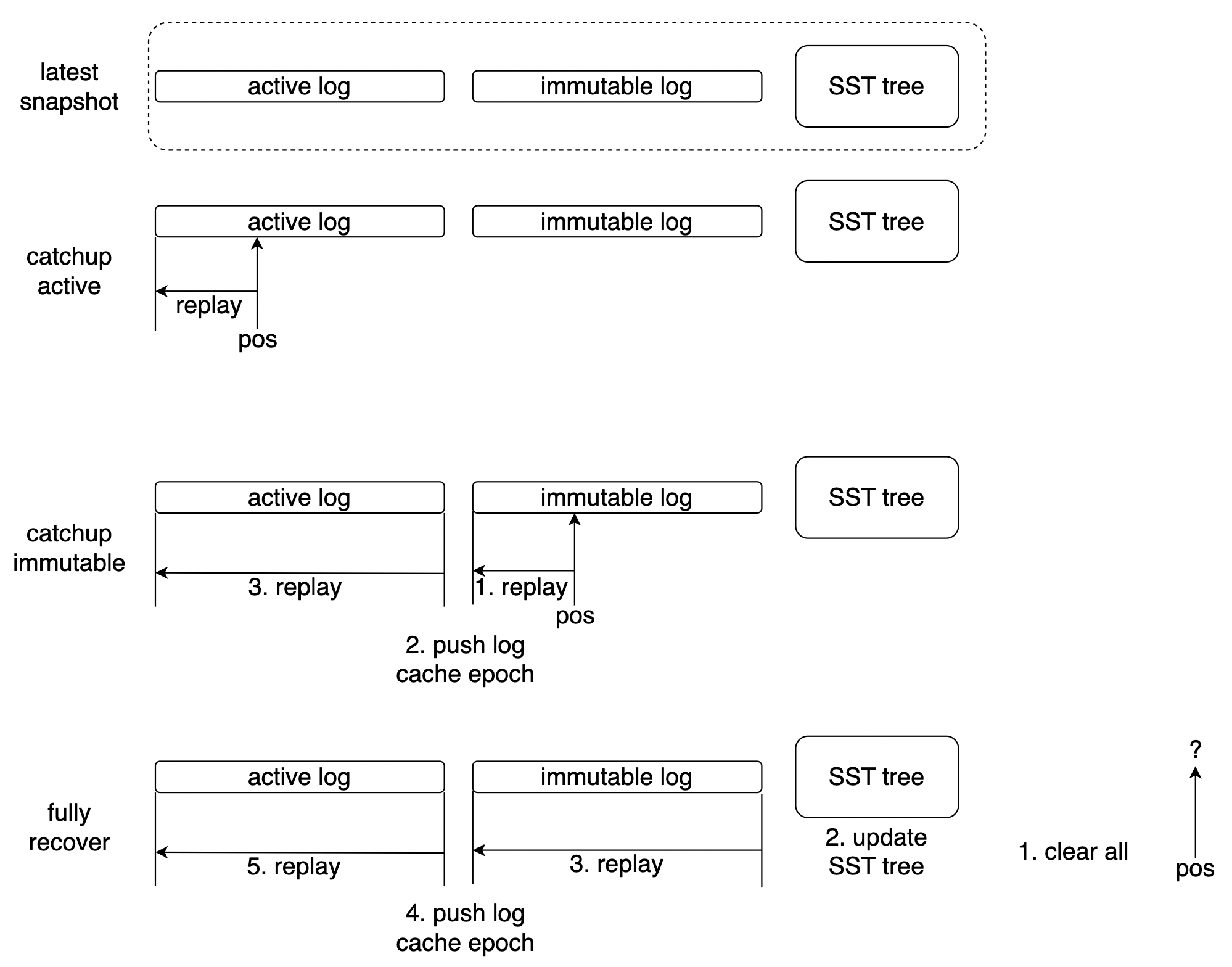

The catchup algorithm classifies the following three scenarios based on the current state and target checkpoint to complete the replay at the minimum cost:

• Catchup active: If the hot standby's replay checkpoint is located on the active log of the latest snapshot, it only needs to replay the lagging part of the active log.

• Catchup immutable: If the hot standby's replay checkpoint is located on the immutable log of the latest snapshot, it needs to replay the lagging part of the immutable log and the entire active log.

• Fully recover: When the hot standby's replay checkpoint cannot be found on the latest snapshot, it indicates a significant lag. At this time, all state data will be cleared, SST metadata will be reset, and all log parts will be replayed to complete a full state recovery.

In addition, the hot standby node is also responsible for the compaction operation on the PkIndex, reducing the CPU and I/O pressure on the master node and fully using all nodes' resources.

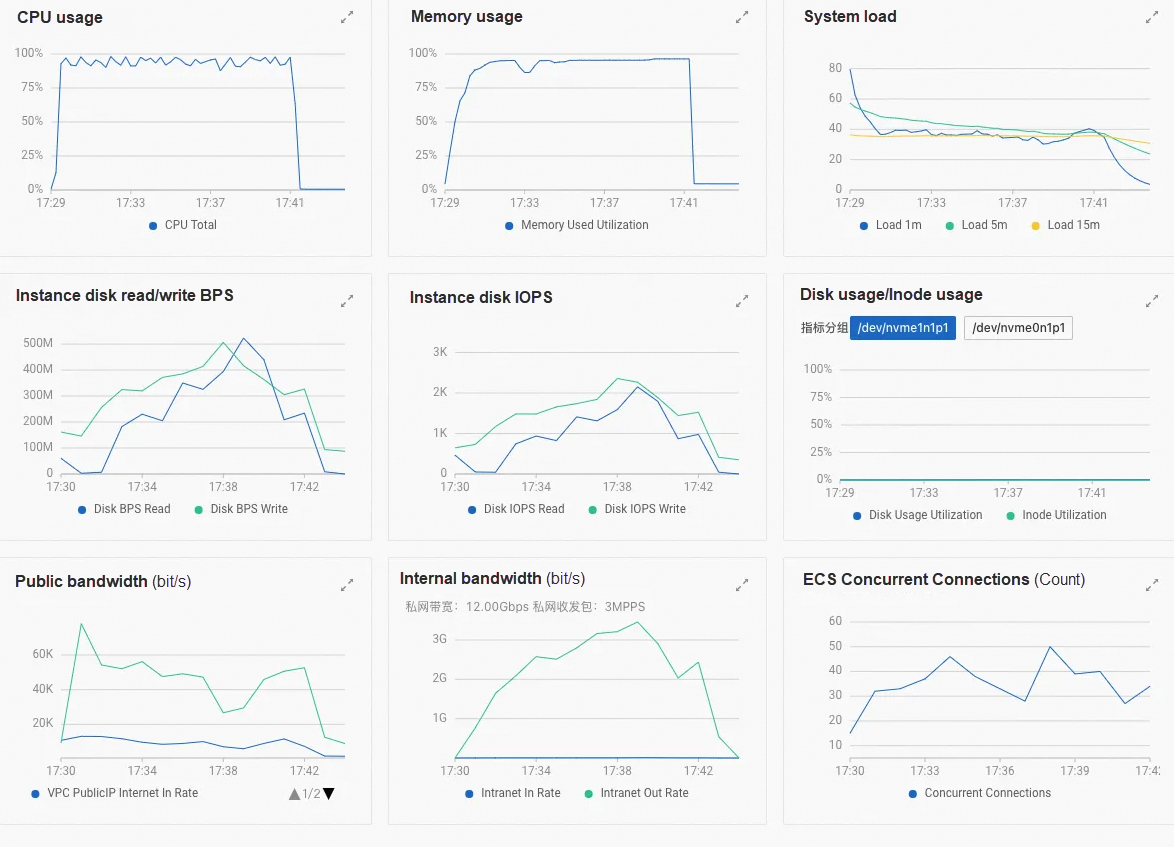

For the group commit mode of PolarDB-X's columnar engine, we have designed two sets of write performance stress tests for the 16c64g nodes:

• Case 1: Random writes within a 160 million primary key range, with a batch size of 3 million (300,000 QPS, group commit in 10 seconds), 100 batch inserts totaling 300 million writes.

• Case 2: Random writes within a 10 billion primary key range, with a batch size of 3 million (300,000 QPS, group commit in 10 seconds), 1,000 batch inserts totaling 3 billion writes.

| case | Time | Write Throughput | Log Write Rate | SST Quantity | Total Number of OSS Writes | OSS Write Throughput |

|---|---|---|---|---|---|---|

| 1 | 35.6 s | 8415214.0 rps | 73.2 MB/s | 136 | 3682.5 MB | 103.2 MB/s |

| 2 | 701.3 s | 4277475.5 rps | 40.6 MB/s | 32609 | 209902.9 MB | 299.2 MB/s |

Based on the test data from the write scenarios, the PkIndex can support the capability of millions of TPS in the future. In addition, from the monitoring perspective, it can occupy the CPU resources of large-specification nodes without throttling, fully leveraging the internal bandwidth and achieving a significant throughput.

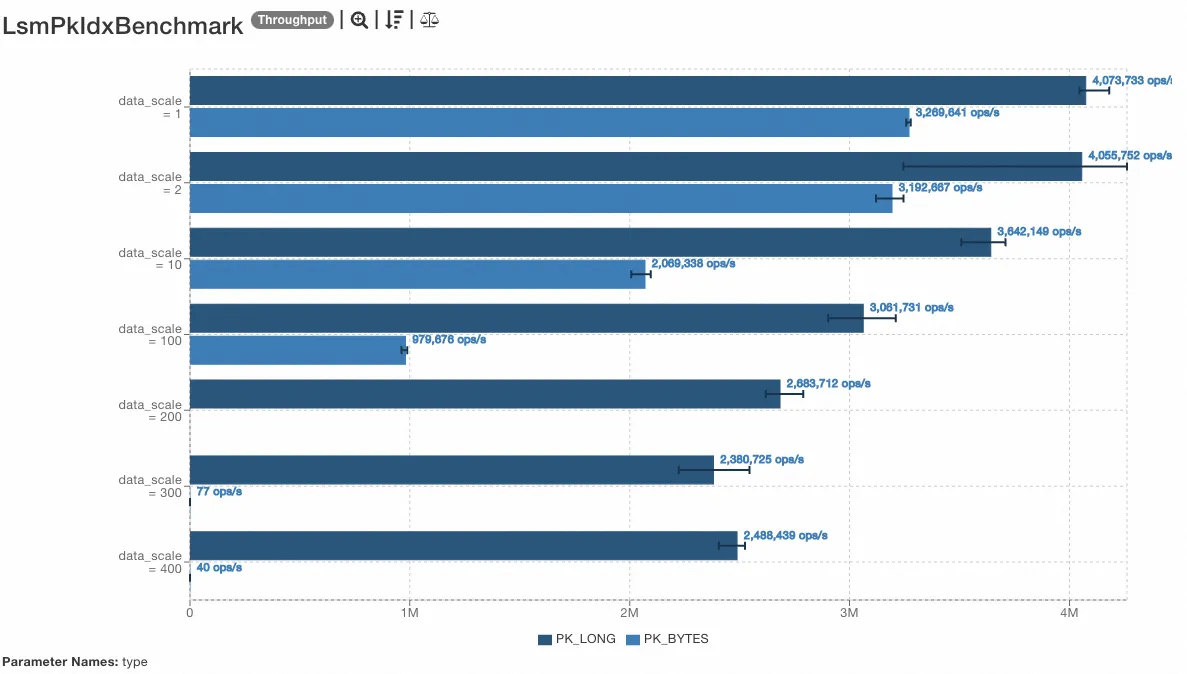

For the read performance, we also tested the point query performance of long-type primary keys and bytes-type primary keys (random 8 - 24 bytes). Under tests with restricted buffer pool size, it is observed that when the buffer is not exhausted, the throughput is substantial, reaching over 2 million QPS. When the buffer pool is exhausted, the performance drops to the QPS level of OSS reads, which is also as expected.

PolarDB-X clustered columnar index uses the Delete-and-Insert solution. The key to the solution's performance is the PkIndex (which can quickly locate row records). By integrating with OSS object storage for high performance and high availability, PolarDB-X offers second-level crash recovery and supports the throughput capability of millions of DML operations.

Related articles in the series of column storage:

PolarDB-X Clustered Columnar Index | Snapshot Reading for Transaction Consistency

[Infographic] Highlights | Database New Features in Feburary 2025

ApsaraDB - June 19, 2024

ApsaraDB - April 10, 2024

ApsaraDB - March 26, 2025

ApsaraDB - October 16, 2024

ApsaraDB - March 26, 2025

ApsaraDB - November 12, 2024

PolarDB for Xscale

PolarDB for Xscale

Alibaba Cloud PolarDB for Xscale (PolarDB-X) is a cloud-native high-performance distributed database service independently developed by Alibaba Cloud.

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn More PolarDB for MySQL

PolarDB for MySQL

Alibaba Cloud PolarDB for MySQL is a cloud-native relational database service 100% compatible with MySQL.

Learn More Elastic High Performance Computing Solution

Elastic High Performance Computing Solution

High Performance Computing (HPC) and AI technology helps scientific research institutions to perform viral gene sequencing, conduct new drug research and development, and shorten the research and development cycle.

Learn MoreMore Posts by ApsaraDB