By Kun Wu

Batch jobs are widely used in fields such as data processing, simulation computing, scientific computing, and artificial intelligence, primarily for executing one-time data processing or model training tasks. As these tasks often consume substantial computing resources, it is essential to queue them based on task priorities and the submitter's available resources to maximize cluster resource utilization.

Despite the comprehensive pod scheduling functions offered by the current Kubernetes scheduler, it exhibits limitations in scenarios involving a large number of queued tasks:

By default, batch jobs create their pods directly. When the cluster's available resources are insufficient, these pending pods can significantly slow down the Kubernetes scheduler, which in turn affects the scaling and scheduling of online services. An automated queuing mechanism is urgently needed to start and stop jobs based on cluster resources.

To enhance task queuing efficiency, various queuing strategies such as blocking queues, priority queues, and backfill scheduling should be adopted according to cluster resources and task scale. However, the default scheduling policy of the Kubernetes scheduler relies primarily on pod priority and creation order, which makes it hard to accommodate diverse task queuing requirements.

Without multi-queue management, a single user who submits a large number of tasks can occupy the available resources and leave other users "starved." A task queuing system is therefore required to isolate the tasks of different users or tenants. The Kubernetes scheduler currently lacks this capability because it supports only a single queue.

Different application scenarios involve the submission of varied task types, each with distinct resource and priority calculation methods. Integrating these diverse computational logics into the scheduler would increase operational complexity and costs.

Hence, cloud service providers tend to avoid choosing the native Kubernetes scheduler as the primary solution for complex task-scheduling scenarios.

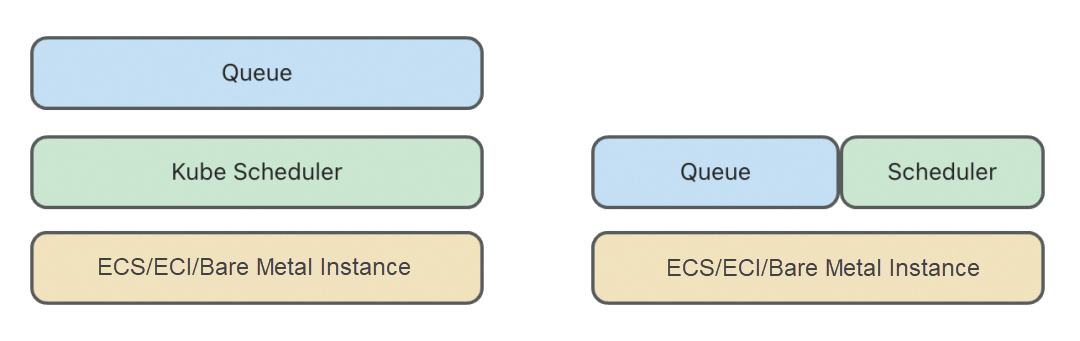

In a Kubernetes cluster, queues collaborate with the scheduler to ensure efficient task scheduling. To avoid typical distributed system problems like "split brain," their responsibilities must be clearly defined. Currently, Kubernetes queues can be categorized into two types:

The first type of queue operates in a different layer than the Kubernetes scheduler. It is responsible for sorting and dequeuing tasks in sequence, focusing on task lifecycle management and implementing a fairer dequeuing policy among users. On the other hand, the scheduler is responsible for the rational orchestration of task pods, seeking the optimal placement policy, and emphasizing node affinity and topology awareness. This type of queue does not directly perceive the underlying physical information. When a task is dequeued, it may fail to be scheduled due to factors such as node affinity and resource fragmentation, leading to head-of-line (HOL) blocking. Thus, this queue type typically requires the introduction of a "task re-enqueuing when unscheduled" mechanism. Both Kube Queue and Kueue, discussed in this article, represent this type of queue.

The second type of queue is closely coupled with the scheduler, ensuring that a task is dequeued only when it can be scheduled. This design alleviates the HOL blocking issue found in the first type of queue but still faces challenges in fully integrating with all the scheduler's scheduling syntax. Additionally, using this second type of queue entails replacing the default scheduler, representing a significant change for the cluster. Notable examples of such queues include Volcano and YuniKorn.

Kube Queue is a key component of the ACK cloud-native AI suite. It is designed to solve the above problems of the Kubernetes scheduler in task scheduling scenarios. Integrated with the Arena component of the cloud-native AI suite and the elastic Quota feature of ACK clusters, Kube Queue efficiently supports automatic queuing of various AI tasks and multi-tenant Quota management.

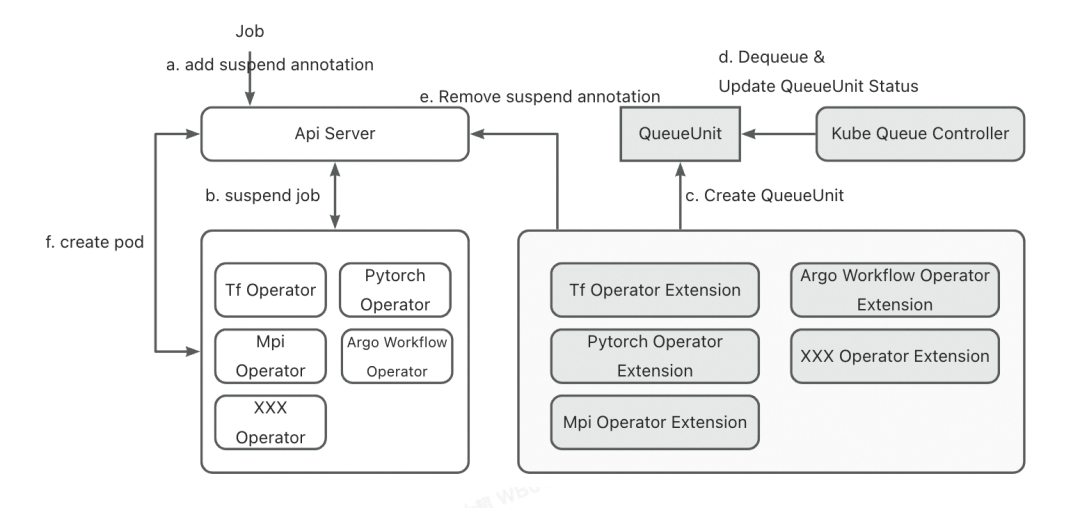

The following figure shows the architecture of Kube Queue, which consists of the following main parts:

1) Kube Queue Controller: The core controller responsible for task queuing, as shown on the left side of the following figure.

2) Operator Extension: An extension component that provides customized support for different task types, as shown on the right side of the following figure.

The task queuing system is based on two core abstractions: Queue and QueueUnit.

Queue: It represents a queue entity and serves as the container for queuing. It includes information such as the policy, quota, and queuing parameters of the queue. Currently, Kube Queue can automatically detect different types of resource quotas, such as Resource Quota, Elastic Quota, and ElasticQuotaTree. Kube Queue Controller automatically creates queue objects based on the configurations of these quotas. Users only need to configure upper-layer quotas, without the need to configure underlying queues.

QueueUnit: It represents a task entity. It omits the parameters of the original task that are unrelated to the queuing policy, which reduces the types of objects that Kube Queue Controller needs to listen to and the number of connections established with the API server. QueueUnit also masks the job type. Therefore, any task type that needs to connect to the queuing system only needs to implement the queuing interface of Operator Extension, which keeps the set of supported task types extensible.

The workflow is as follows:

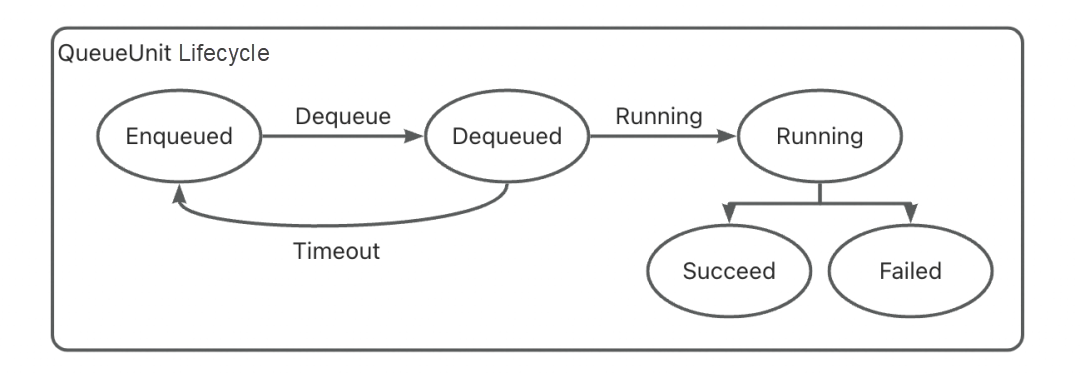

Task submission and enqueuing: When a task that can be identified by Kube Queue is submitted to an API server, the corresponding job operator and operator extension receive a task creation event. They decide whether to take over the task queue based on whether the task is pending when submitted. If the task is pending, Operator Extension will evaluate the resources required by the task and determine key queuing parameters such as the priority. Then, it will create a QueueUnit, a unified object that is queued in Kube Queue with the initial status being Enqueued.
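The enqueuing step above can be sketched roughly as follows. This is only an illustration under stated assumptions: `build_queue_unit` and the dictionary it returns are hypothetical and do not reflect the real QueueUnit schema, a suspended Kubernetes Job stands in for a "pending" task, CPU requests are assumed to be plain numbers, and the resource estimate (per-pod requests multiplied by parallelism) is a guess at what an extension might compute.

```python
def build_queue_unit(job):
    """Build a simplified, hypothetical queue unit from a Job manifest dict."""
    spec = job["spec"]
    if not spec.get("suspend", False):
        # The job is not held back, so there is nothing to queue.
        return None

    pod_spec = spec["template"]["spec"]
    # Assumption: CPU requests are plain numbers (no "500m" style quantities).
    cpu_per_pod = sum(
        float(c.get("resources", {}).get("requests", {}).get("cpu", 0))
        for c in pod_spec["containers"]
    )
    return {
        "job": job["metadata"]["name"],
        "cpu": cpu_per_pod * spec.get("parallelism", 1),
        "priority": pod_spec.get("priority", 0),
        "phase": "Enqueued",  # Initial status described in the text.
    }


job = {
    "metadata": {"name": "pi-abc12"},  # Hypothetical generated name.
    "spec": {
        "suspend": True,
        "parallelism": 2,
        "template": {"spec": {"containers": [
            {"name": "pi", "resources": {"requests": {"cpu": 1}}},
        ]}},
    },
}
unit = build_queue_unit(job)
print(unit)
```

Keeping only the name, resource demand, priority, and phase mirrors the idea that a queue unit carries just the information the queuing policy needs.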

Queuing and waiting for scheduling: After Kube Queue Controller receives a QueueUnit, it determines the queue to which the QueueUnit belongs based on the internal queue ownership policy and sorts the queue based on the priority and creation time. Kube Queue Controller processes all queues through a scheduling loop. In each round of the loop, the first task is extracted from each queue to try scheduling, ensuring that the number and priority of tasks in different queues do not interfere with each other.
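As a minimal sketch of the ordering and the per-queue loop described above, the following Python snippet sorts each queue by priority and creation time and then picks one candidate per queue in a round. The `QueueUnit` class, the queue names, and `scheduling_round` are hypothetical simplifications, not the controller's actual types.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class QueueUnit:
    """Hypothetical, simplified stand-in for a Kube Queue QueueUnit."""
    name: str
    priority: int
    created: datetime


def sort_queue(units):
    # Higher priority first; ties are broken by earlier creation time.
    return sorted(units, key=lambda u: (-u.priority, u.created))


def scheduling_round(queues):
    """Return the head of every non-empty queue, one candidate per queue."""
    heads = {}
    for queue_name, units in queues.items():
        ordered = sort_queue(units)
        if ordered:
            heads[queue_name] = ordered[0]
    return heads


queues = {
    "team-a": [
        QueueUnit("a-low", 1, datetime(2024, 1, 1, 9, 0)),
        QueueUnit("a-high", 5, datetime(2024, 1, 1, 9, 5)),
    ],
    "team-b": [
        QueueUnit("b-first", 1, datetime(2024, 1, 1, 9, 1)),
        QueueUnit("b-second", 1, datetime(2024, 1, 1, 9, 2)),
    ],
}
heads = scheduling_round(queues)
print({q: u.name for q, u in heads.items()})
# {'team-a': 'a-high', 'team-b': 'b-first'}
```

Because each queue contributes exactly one candidate per round, a long or high-priority backlog in `team-a` does not delay the head of `team-b`.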

Dequeuing and scheduling: After a task is dequeued, the status of the QueueUnit is updated to Dequeued. After Operator Extension receives this status, it removes the pending status of the task. After detecting that the task is released, the operator starts to create a task pod. Then, the task enters the normal scheduling process. If a task does not complete scheduling within a configurable timeout period, Operator Extension resets the corresponding QueueUnit to the Enqueued status. This means that the task startup times out and needs to be re-queued, preventing head-of-line blocking. If the task is executed, the status of the QueueUnit is updated to Running.
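The status transitions above can be read as a small state machine. The sketch below is one possible reading, not the controller's code: the `Phase` enum and `next_phase` function are hypothetical, and the timeout is passed in explicitly because the text only says it is configurable.

```python
from enum import Enum


class Phase(Enum):
    ENQUEUED = "Enqueued"
    DEQUEUED = "Dequeued"
    RUNNING = "Running"


def next_phase(phase, *, timeout_seconds, admitted=False,
               pods_running=False, seconds_since_dequeue=0.0):
    """Return the next phase of a queue unit under the assumed rules."""
    if phase is Phase.ENQUEUED:
        # The controller dequeues the unit once it is admitted.
        return Phase.DEQUEUED if admitted else Phase.ENQUEUED
    if phase is Phase.DEQUEUED:
        if pods_running:
            return Phase.RUNNING
        if seconds_since_dequeue > timeout_seconds:
            # Startup timed out: re-queue so the task does not block others.
            return Phase.ENQUEUED
        return Phase.DEQUEUED
    return phase


print(next_phase(Phase.ENQUEUED, timeout_seconds=60, admitted=True))
print(next_phase(Phase.DEQUEUED, timeout_seconds=60, seconds_since_dequeue=90))
print(next_phase(Phase.DEQUEUED, timeout_seconds=60, pods_running=True))
```

The second call shows the re-queuing path: a dequeued task whose pods do not start within the timeout goes back to Enqueued instead of holding its place indefinitely.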

The queuing policy is an essential part of the queuing system. Currently, Kube Queue provides three flexible queuing policies to meet the diverse needs of enterprise customers, and each queue can be configured with an independent queuing policy.

By default, ack-kube-queue uses the same task rotation mechanism as kube-scheduler to process tasks, meaning all tasks in the queue request resources in sequence. If a task's request fails, it enters the unschedulable queue and waits for the next scheduling. This policy ensures that each task has an opportunity to be scheduled and maximizes the quota's efficiency. However, it cannot guarantee the priority of dequeued tasks. This policy is recommended in situations where you want to guarantee cluster resource utilization.

When a cluster contains a large number of tasks with small resource demands, the small tasks take up most of the queue rotation time. As a result, tasks with large resource demands cannot obtain resources and may remain pending for a long time. To avoid this situation, ack-kube-queue provides the blocking queue feature. When the feature is enabled, only the task at the front of the queue is scheduled, which gives large tasks the opportunity to execute. This policy is recommended in situations where you want to guarantee task priority.
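To make the difference between the rotation policy and the blocking policy concrete, here is a small sketch of a single dequeue round over an already sorted queue. The function `dequeue_round`, the task names, and the CPU-only accounting are assumptions made for illustration; the real controller considers more than one resource and also tracks backoff.

```python
def dequeue_round(queue, free_cpu, blocking):
    """Try to admit tasks from an already sorted queue.

    queue: list of (name, cpu_request) tuples, head of the queue first.
    Returns the names admitted in this round.
    """
    admitted = []
    for name, cpu in queue:
        if cpu <= free_cpu:
            free_cpu -= cpu
            admitted.append(name)
        elif blocking:
            # Blocking queue: nothing behind the head may overtake it.
            break
        # Rotation: skip the task that does not fit and try the next one.
    return admitted


queue = [("large", 8), ("small-1", 1), ("small-2", 1)]
print(dequeue_round(queue, free_cpu=4, blocking=False))  # ['small-1', 'small-2']
print(dequeue_round(queue, free_cpu=4, blocking=True))   # []
```

With rotation, the small tasks use the free CPUs immediately and the large task keeps waiting. With blocking, the free CPUs stay reserved until enough resources accumulate for the large task at the head.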

High-priority tasks may still be in the backoff phase when the cluster obtains idle resources. To ensure that such tasks are tried first, ack-kube-queue provides a strict priority scheduling feature. When this feature is enabled, the queue tries to execute the earliest-submitted high-priority task each time a running task ends. In this way, these tasks obtain idle cluster resources first, which prevents the resources from being occupied by low-priority tasks. This policy is a compromise between the rotation policy and the blocking policy.

This section demonstrates how to use Kube Queue to perform a basic task queuing operation.

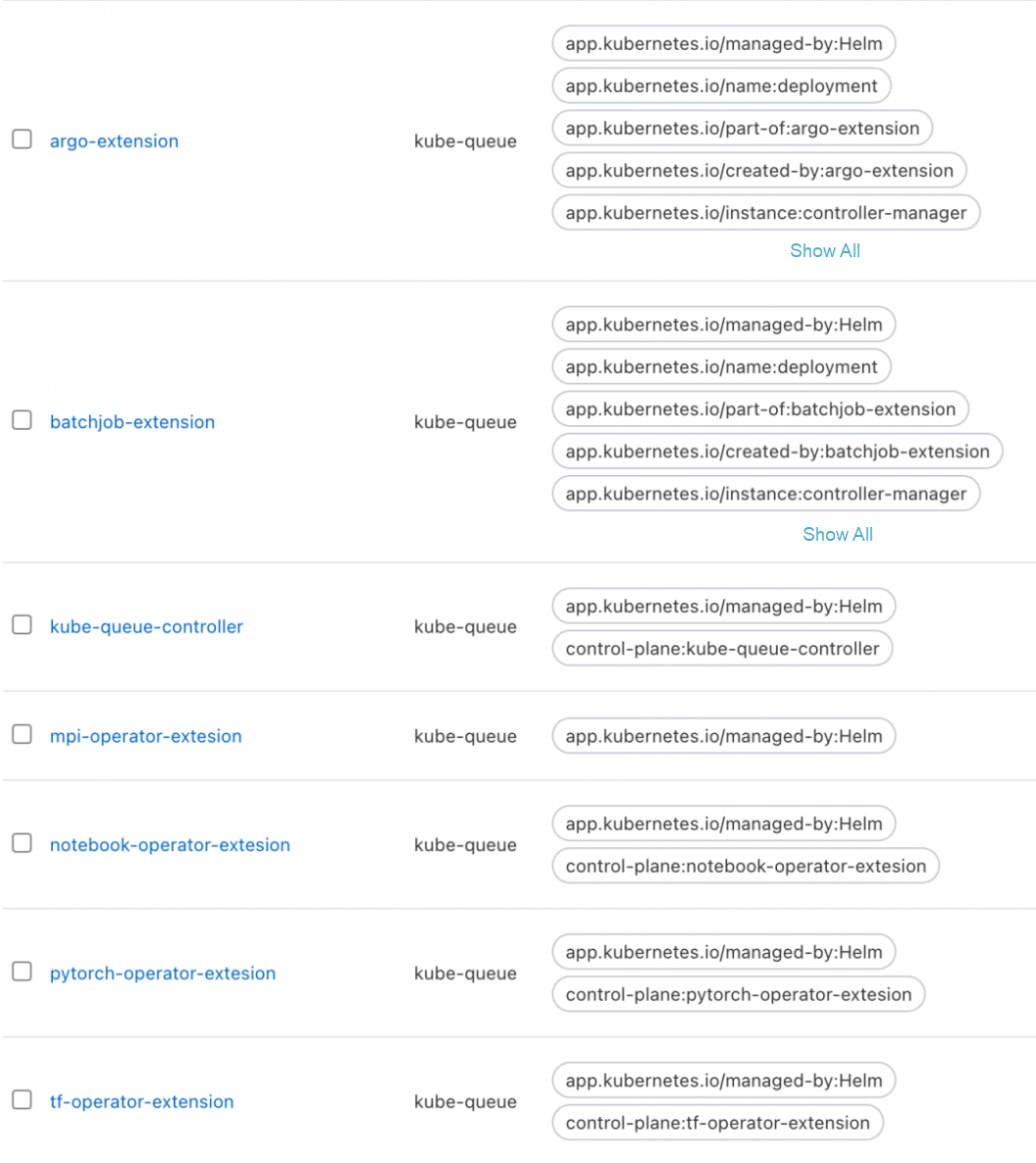

First, you need to install the kube-queue component, which can be installed with one click on the ACK cloud-native AI suite page. For details, see "Use ack-kube-queue to manage job queues."

After Kube Queue is installed, Kube Queue Controller and multiple controllers that provide extended support for different job types exist in the kube-queue namespace of the cluster. Each controller is responsible for the connection between Kube Queue and one type of task.

By default, the oversell rate is set to 2 by using the oversell rate parameter in kube-queue-controller. In this case, the queue can dequeue tasks with twice the amount of resources configured. You can set the oversell rate to 1 on the configuration page of the Deployment.
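The effect of the oversell rate can be illustrated with a little arithmetic. The sketch below assumes that the queue keeps dequeuing tasks while their summed requests stay within the quota multiplied by the oversell rate. `count_dequeued` is a hypothetical helper and only CPU is considered.

```python
def count_dequeued(task_cpu_requests, quota_cpu, oversell_rate):
    """Count how many tasks, in order, fit within quota_cpu * oversell_rate."""
    capacity = quota_cpu * oversell_rate
    used = 0.0
    dequeued = 0
    for request in task_cpu_requests:
        if used + request > capacity:
            break
        used += request
        dequeued += 1
    return dequeued


# Two tasks that each request 1 CPU core, against a quota of 1 CPU core.
print(count_dequeued([1, 1], quota_cpu=1, oversell_rate=2))  # 2
print(count_dequeued([1, 1], quota_cpu=1, oversell_rate=1))  # 1
```

Under this assumption, the default rate of 2 would let both tasks leave the queue at once, while a rate of 1 holds the second task back until the first one finishes.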

To implement queuing between two jobs, you first need to submit an ElasticQuotaTree that declares the queue. This ElasticQuotaTree declares a queue that can use up to 1 CPU core and 1Gi memory resources and mounts the default namespace to this queue.

    apiVersion: scheduling.sigs.k8s.io/v1beta1
    kind: ElasticQuotaTree
    metadata:
      name: elasticquotatree
      namespace: kube-system # The ElasticQuotaTree takes effect only if it is created in the kube-system namespace.
    spec:
      root:
        name: root # The maximum amount of resources for the root equals the minimum amount of resources for the root.
        max:
          cpu: 1
          memory: 1Gi
        min:
          cpu: 1
          memory: 1Gi
        children:
        - name: child-1
          max:
            cpu: 1
            memory: 1Gi
          namespaces: # Configure the corresponding namespace.
          - default

After that, you need to submit two Kubernetes jobs. When submitting the jobs, set the jobs to the Suspended status, that is, set the .spec.suspend field of the jobs to true.

apiVersion: batch/v1

kind: Job

metadata:

generateName: pi-

spec:

suspend: true

completions: 1

parallelism: 1

template:

spec:

containers:

- name: pi

image: perl:5.34.0

command: ["sleep", "1m"]

resources:

requests:

cpu: 1

limits:

cpu: 1

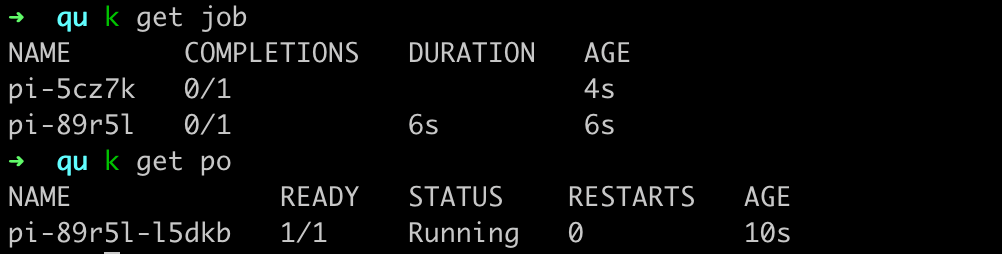

restartPolicy: NeverAfter the two tasks are submitted, view the task status. You can see that only one task starts to execute, and the other task enters the waiting status.

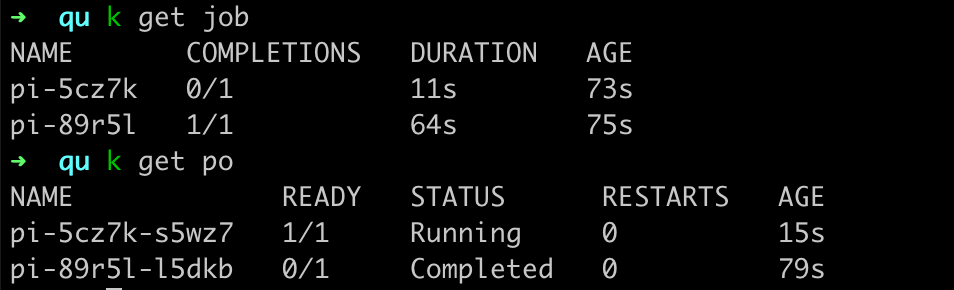

After the previous task is completed, the subsequent tasks automatically start to execute, completing the automatic control of task execution.

This article discusses the importance and necessity of the task queue system in the cloud-native era, and details how Kube Queue of ACK defines its role and contribution in the current Kubernetes ecosystem.

In the follow-up articles, we will further discuss how to use Kube Queue and the efficient scheduling mechanism of ACK to quickly build a task management system based on ElasticQuotaTree that can meet the needs of enterprises.
