By Hexi

As the adoption of cloud migration becomes more widespread, it is evident that not only internet-based customers but also traditional enterprises, including manufacturing and industrial clients, are embracing cloud-native approaches to transform their IT architectures. Increasing the utilization of cluster resources has become a common goal for organizations migrating to the cloud. During this migration process, the question arises as to whether there is a cloud-native method to better achieve the core objective of improving cluster resource utilization.

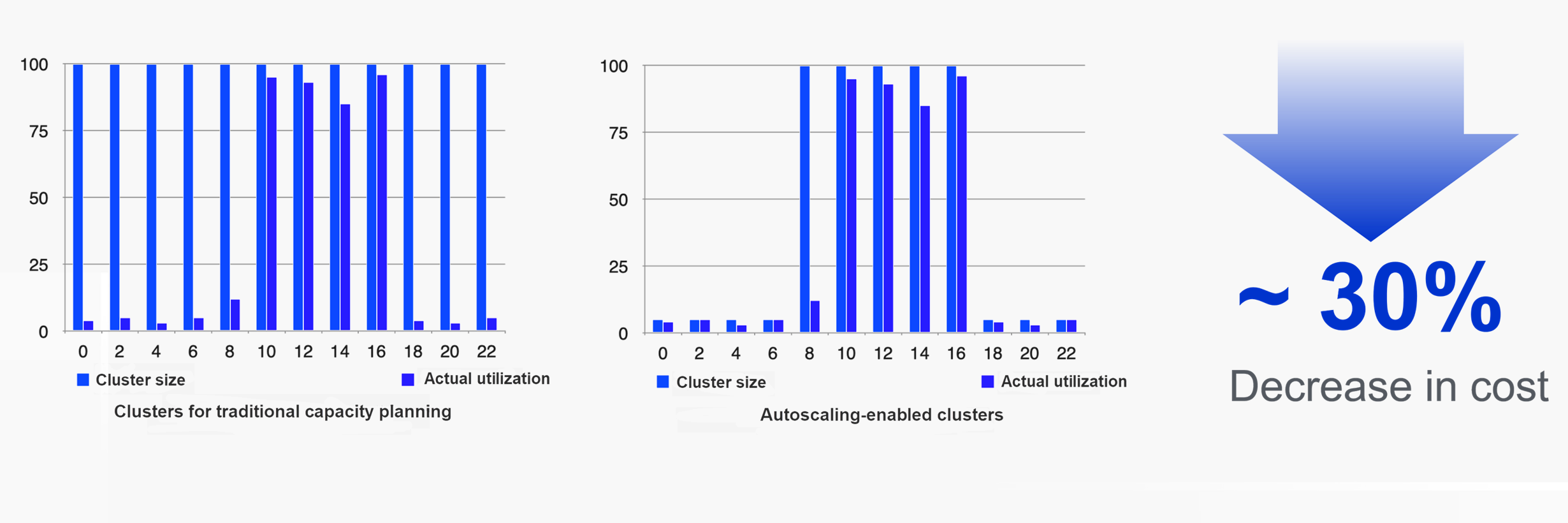

The key to improving cluster resource utilization lies in addressing the disparity between planned capacity and actual demand. Elasticity serves as a crucial solution in this regard, enabling cost optimization. In traditional capacity planning, resources are provisioned based on peak business usage to ensure stability. However, this approach often results in low resource utilization and increased costs, as depicted in the left graph. On the other hand, by enabling autoscaling, resource capacities align better with the actual resource demands, significantly improving resource utilization and reducing overall costs.

After establishing the goal of elasticity, let's explore the elasticity solutions provided by Alibaba Cloud Container Service for Kubernetes (ACK) across various dimensions.

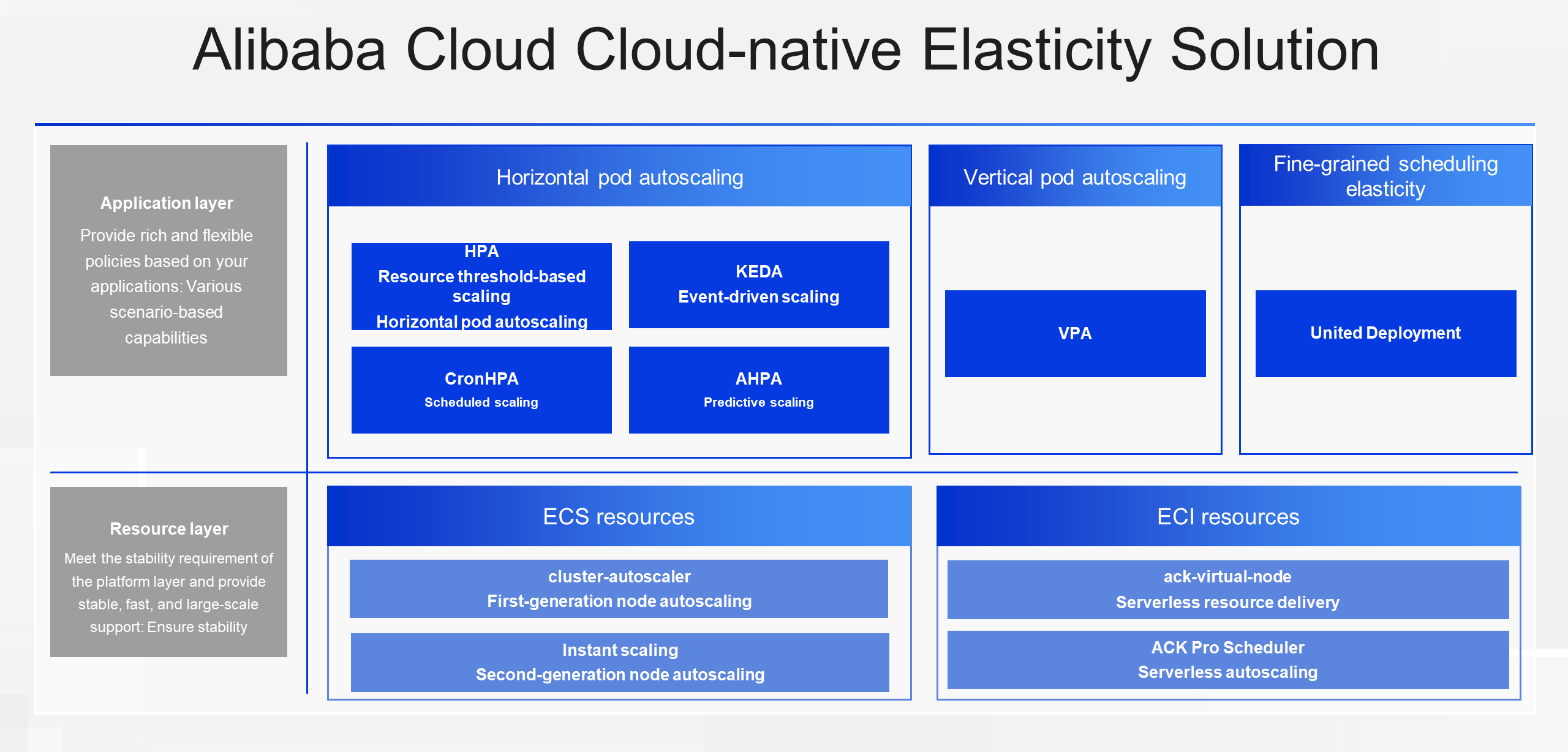

Elasticity spans several dimensions. If we split the IT architecture into two layers, the top layer is the application layer. Elasticity at the application layer covers horizontal scaling, vertical scaling, and fine-grained scheduling, which addresses policy issues at the application layer and in the scheduler. Horizontal pod autoscaling is the most commonly used form of elasticity. Alibaba Cloud offers a range of elasticity solutions, allowing users to select the components best suited to scaling their pods based on their business characteristics.

The second layer is the resource layer, where cloud vendors maintain and provide resources to customers, including Kubernetes platforms and underlying virtual machines, networks, storage, and other resources. The resource layer focuses on ensuring application platform stability and bridging the gap between planned capacity and actual demand. This underscores the core capability required for elasticity at the resource layer.

In the following sections, we will provide a detailed analysis of these capabilities, highlighting how Alibaba Cloud invests in elasticity at various levels and the capabilities it has built.

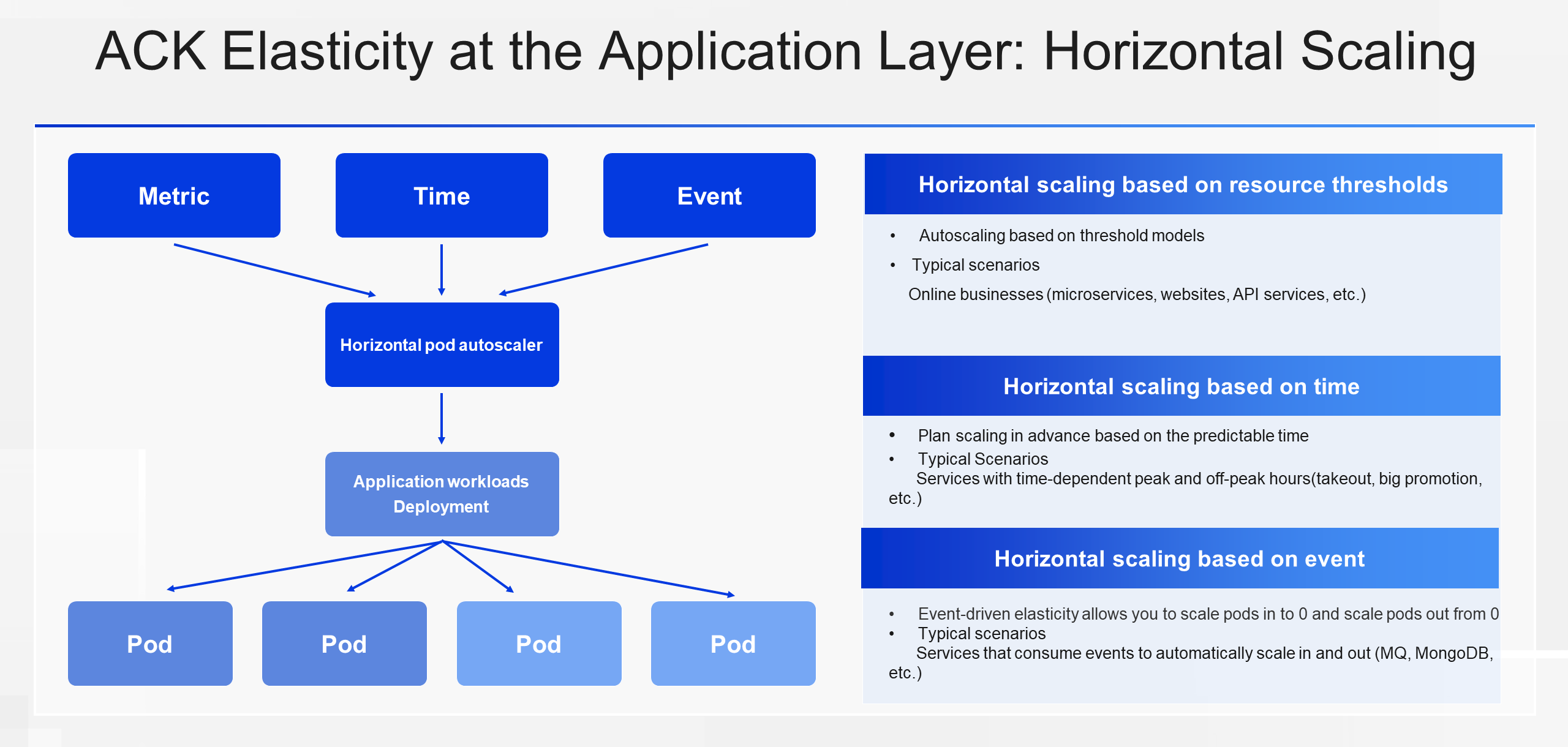

First, let's take a look at horizontal scaling at the application layer. Trigger sources can be divided into three categories: metrics, resources, and events. Horizontal scaling driven by metric or resource thresholds is handled by the Horizontal Pod Autoscaler (HPA), which is the most commonly used type. HPA suits businesses whose peaks and off-peaks can be described by metrics. For example, the peaks and off-peaks of some microservices are reflected in resource metrics such as CPU and memory, and the scaling of websites or APIs can be driven by page views.
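The threshold model can be made concrete with the replica formula documented for the upstream Kubernetes HPA: the desired replica count is the current count scaled by the ratio of the observed metric to the target, rounded up. The sketch below is a simplified illustration of that rule, not ACK's implementation; the function name and the sample values are assumptions made for this example.

```python
import math


def desired_replicas(current_replicas: int,
                     current_value: float,
                     target_value: float,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA rule: ceil(currentReplicas * currentMetric / targetMetric).

    `tolerance` mirrors the upstream default of 0.1: when the ratio is
    within 10% of 1.0, the controller leaves the replica count unchanged.
    """
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    # Multiply before dividing to keep the arithmetic exact for whole numbers.
    return max(1, math.ceil(current_replicas * current_value / target_value))


# Illustrative values: 4 pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

The real controller also handles missing metrics, pods that are not ready, and stabilization windows, all of which this sketch leaves out.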

Some services are strongly tied to time. For example, in the food delivery business, lunchtime and dinnertime are peak hours, and other times are off-peak. Similarly, in promotional scenarios, peaks are expected within the planned promotion period. CronHPA suits this kind of service, as it scales pods on a schedule. A third category is not well covered by either of the above two types: event-driven services. For example, the scaling of services that consume messages needs to be determined by the number of messages in a message queue (MQ). This type of service can be addressed by KEDA (Kubernetes Event-driven Autoscaling).
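To contrast the two trigger types, here is a minimal sketch of the decision each one makes. It is not the CronHPA or KEDA API: the schedule windows, replica counts, and per-replica message target are hypothetical values chosen for illustration.

```python
import math
from datetime import time

# Hypothetical peak windows for a food-delivery service: (start, end, replicas).
PEAK_WINDOWS = [
    (time(11, 0), time(13, 30), 40),  # lunch peak
    (time(17, 30), time(20, 0), 40),  # dinner peak
]
OFF_PEAK_REPLICAS = 10


def replicas_for_time(now: time) -> int:
    """Time-based scaling: the replica count depends only on the clock."""
    for start, end, replicas in PEAK_WINDOWS:
        if start <= now < end:
            return replicas
    return OFF_PEAK_REPLICAS


def replicas_for_queue(queue_length: int,
                       target_per_replica: int,
                       min_replicas: int = 0,
                       max_replicas: int = 50) -> int:
    """Event-based scaling: the replica count follows the message backlog."""
    wanted = math.ceil(queue_length / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


print(replicas_for_time(time(12, 0)))  # inside the lunch window
print(replicas_for_queue(250, 100))    # 250 queued messages, 100 per replica
```

A minimum of zero is used for the queue consumer here because an empty queue needs no consumers. Whether scale-to-zero is appropriate depends on the workload.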

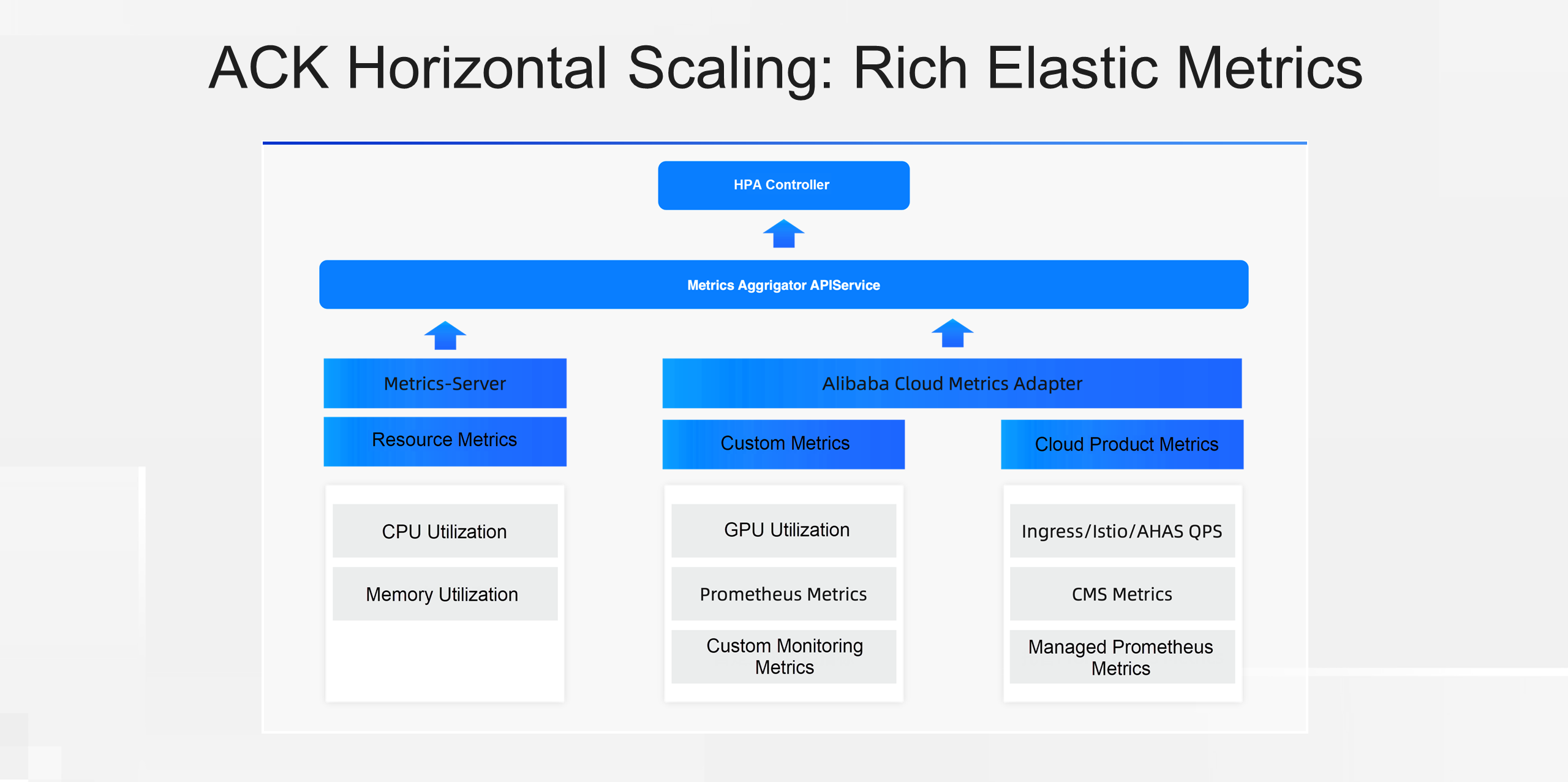

Next, let's take a look at the capabilities that Alibaba Cloud has extended to the most widely applied HPA. First, autoscaling metrics. This is a concern for many, and is also the first key direction of ACK. For traditional HPA, you may equate metrics to CPU and memory. These are the only two types of scaling metrics defined in the community version of resource metrics.

For different business scenarios and business forms, CPU and memory alone cannot meet business demands. In the dimension of HPA autoscaling metrics, Alibaba Cloud works to find scaling metrics that suit different business scenarios and to make the system easier to use.

To this end, ACK provides the Metrics Adapter component to convert metrics. Metrics Adapter supports custom metrics. For example, you can use it to enable HPA to monitor GPU utilization and Prometheus metrics. In addition, common metrics of Alibaba Cloud products such as Ingress, Istio, and AHAS QPS are also supported by default. If you want to use these metrics, you can directly configure them in HPA.

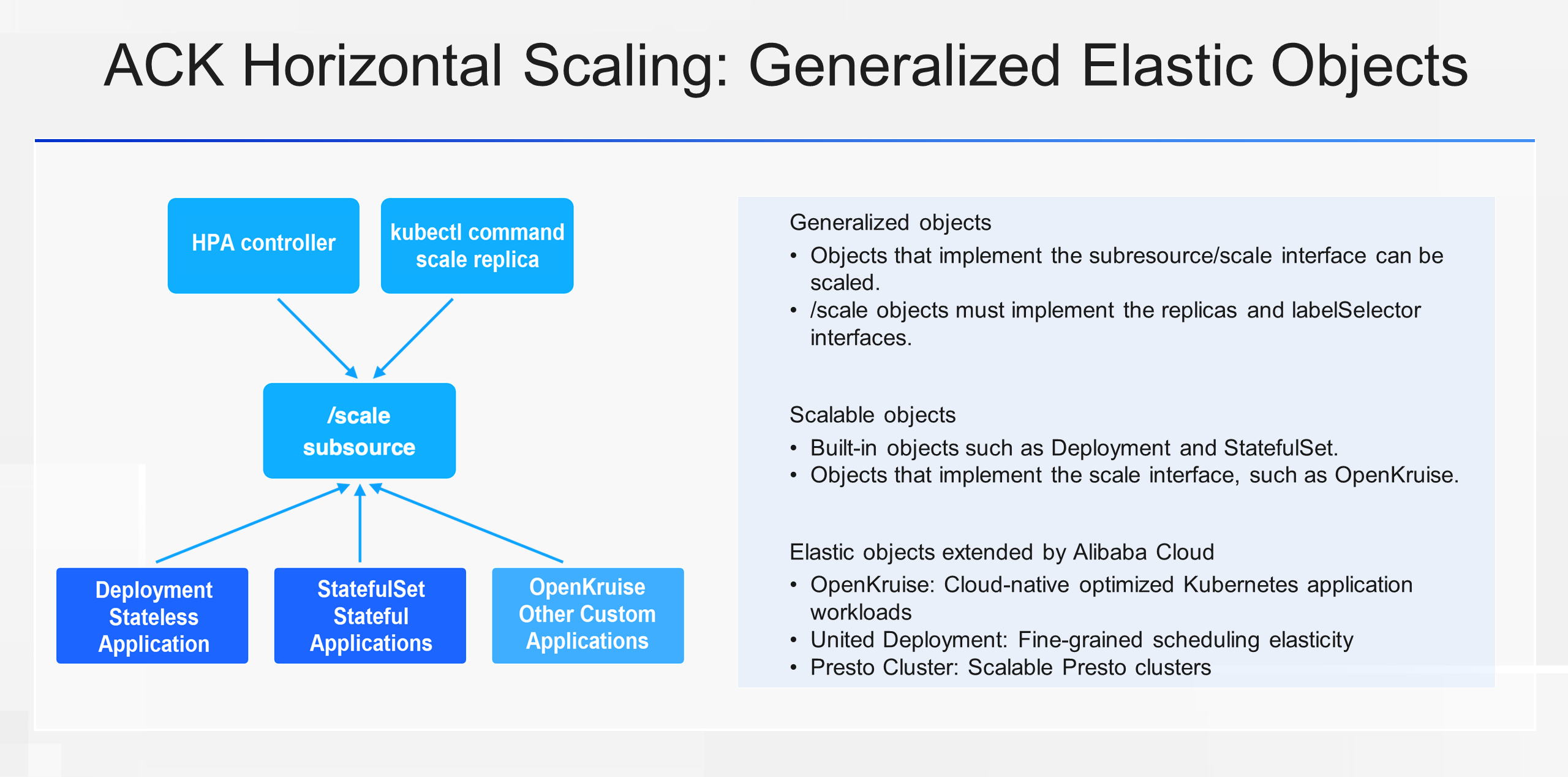

Secondly, let's talk about scaling objects. What scaling objects does HPA support? Deployment? StatefulSet? In fact, HPA does not differentiate between specific workload types such as Deployment or StatefulSet. It operates on the generic scale subresource, so as long as a resource implements that subresource, HPA can manage and scale it. Therefore, when it comes to scaling objects, the work for ACK is to identify the scenarios that need to be exposed as scaling objects and then provide support for them. For example, ACK has created CRDs for Spark and Presto clusters, and these CRDs can also be managed and scaled by HPA, meeting the requirement for horizontal autoscaling in these scenarios.

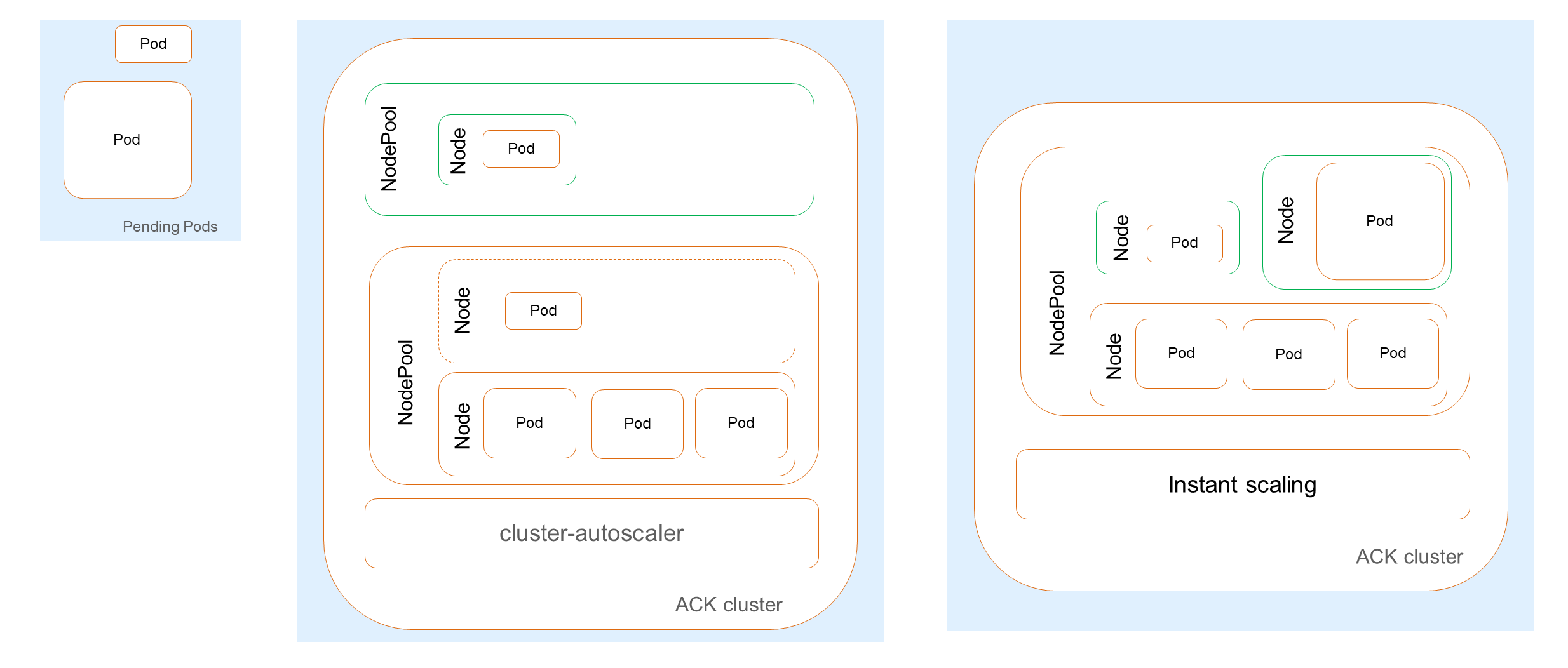

Autoscaling at the application layer controls the autoscaling of pods. Imagine a scenario where a service needs 100 pods to handle traffic spikes, but the cluster only has resources to run 50 pods, leaving the remaining 50 pods without resources for scheduling. This is the issue that resource layer elasticity aims to solve. It ensures that the cluster has enough resources to schedule pods and automatically releases them when they are not needed, preventing excessive waste.

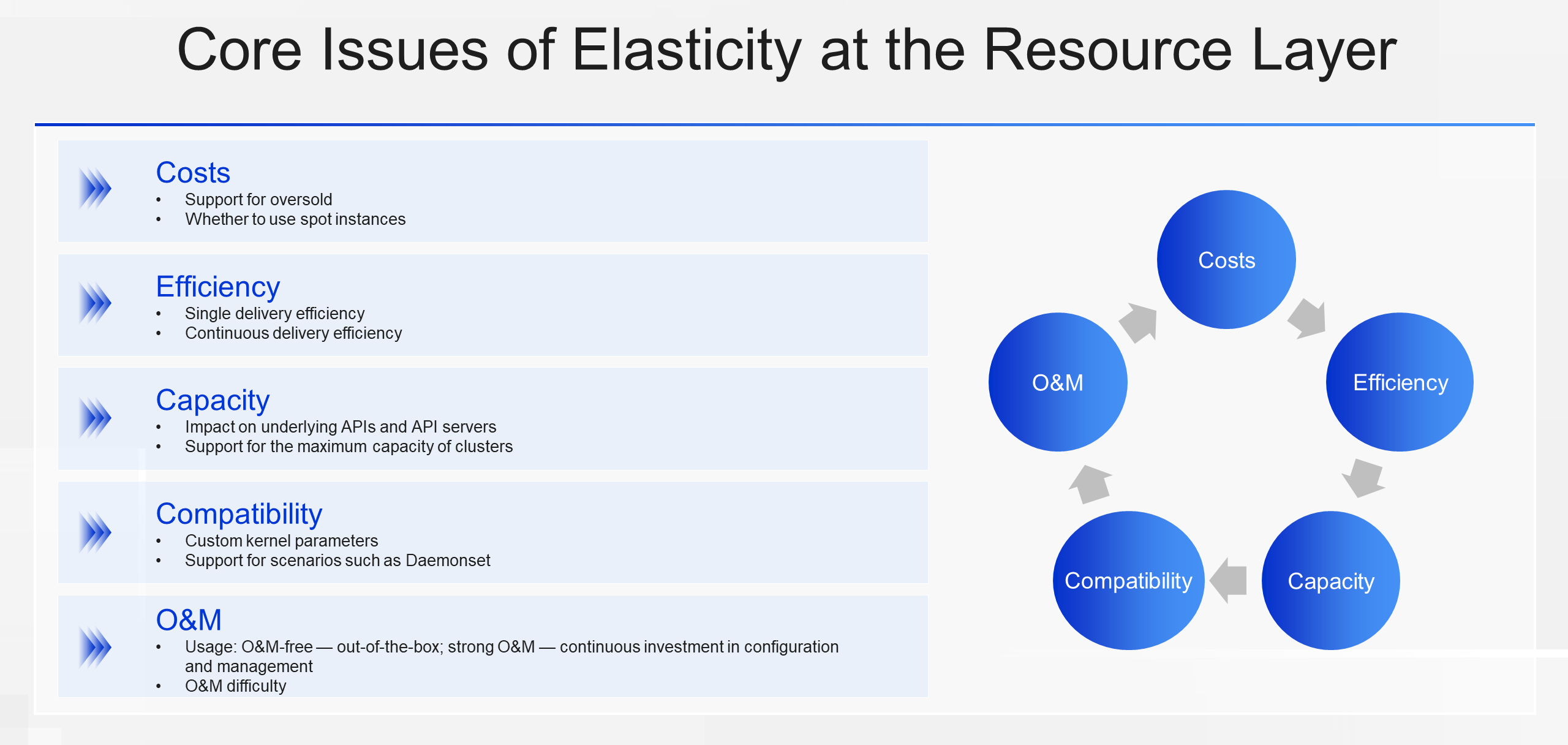

Breaking it down further, resource layer elasticity focuses on five core dimensions, which also directly affect how we select an elasticity plan at the resource layer. As mentioned earlier, resource layer elasticity can be divided by resource type into ECS (Elastic Compute Service) and ECI (Elastic Container Instance). Let's take their respective typical components, Cluster Autoscaler and VK (Virtual Kubelet), as examples to describe the five dimensions.

First, cost. The main difference between VK and Cluster Autoscaler is the oversold (overcommit) ratio. ECI does not support overselling, whereas the oversold ratio for offline jobs on regular nodes averages between 1:2 and 1:4. Second, efficiency. Cluster Autoscaler delivers nodes in minutes, but delivery times can become unstable as the number of node pools varies and under continuous elastic load. ECI supports fast delivery within 1 minute. Third, the supported cluster size. VK scaling is limited by the One Pod One Node model, which has a direct impact on the underlying APIs and API servers. In contrast, Cluster Autoscaler adopts a cluster/node two-level model, resulting in a higher capacity limit. Fourth, compatibility. Cluster Autoscaler is fully compatible, while ECI behaves differently in scenarios that require kernel parameters or DaemonSets. Finally, ease of operations and maintenance. VK requires no maintenance, while Cluster Autoscaler requires substantial maintenance.

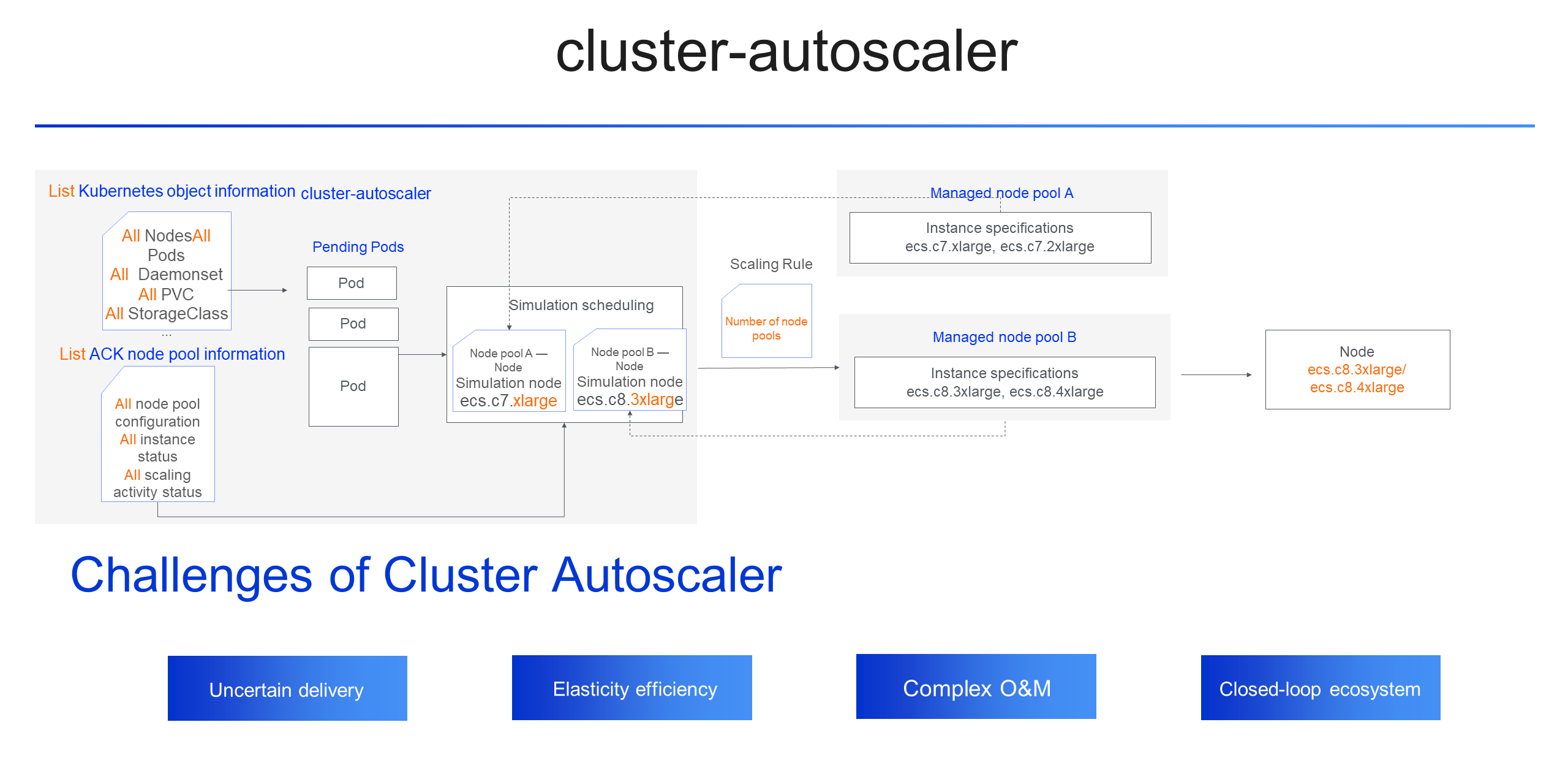

Let's take a closer look at Cluster Autoscaler, which is commonly used in the industry, and the challenges it faces. Cluster Autoscaler works in a polling loop, and the diagram illustrates the basic logic of one iteration. In each loop, Cluster Autoscaler maintains the state of the whole cluster. It finds the pods that cannot be scheduled, and then abstracts each node pool with elasticity enabled into a virtual node (hereinafter referred to as One Node Pool One Virtual Node). After determining whether the pending pods can be placed on the resources provided by a node pool, Cluster Autoscaler increases the number of nodes in the corresponding node pool, realizing scale-out. This is the basic logic that most elasticity customers rely on when using Cluster Autoscaler.
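One iteration of this loop can be sketched as follows. This is a heavily simplified model under stated assumptions, not Cluster Autoscaler's actual code: each pool is reduced to a single template (its virtual node), pods are packed first-fit, and the first pool that can host a pod wins. The real component chooses among candidate pools with configurable expander strategies and evaluates full scheduling predicates. All names below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class NodePool:
    name: str
    cpu: float     # allocatable CPU cores of the pool's virtual node
    memory: float  # allocatable memory (GiB) of the pool's virtual node


@dataclass
class Pod:
    name: str
    cpu: float
    memory: float


def plan_scale_out(pending, pools):
    """Return {pool name: nodes to add} for one loop iteration."""
    plan = {}
    planned_nodes = {}  # pool name -> [[free_cpu, free_memory], ...]
    for pod in pending:
        for pool in pools:
            if pod.cpu > pool.cpu or pod.memory > pool.memory:
                continue  # this pool's virtual node can never host the pod
            nodes = planned_nodes.setdefault(pool.name, [])
            for free in nodes:
                if pod.cpu <= free[0] and pod.memory <= free[1]:
                    free[0] -= pod.cpu
                    free[1] -= pod.memory
                    break
            else:
                # No node planned so far has room: plan one more node.
                nodes.append([pool.cpu - pod.cpu, pool.memory - pod.memory])
                plan[pool.name] = plan.get(pool.name, 0) + 1
            break  # pod placed in the first pool that fits
    return plan


pools = [NodePool("general", cpu=4, memory=16)]
pending = [Pod("a", 2, 4), Pod("b", 2, 4), Pod("c", 1, 2)]
print(plan_scale_out(pending, pools))
```

Note that the plan only says how many nodes to add to each pool. The node size is fixed by the pool's template, which is the limitation discussed in the paragraphs that follow.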

This mechanism works well for many applications and workloads. However, as more users migrate to the cloud, ACK is applied more widely, and users bring more types of workloads to it. This puts pressure on a mechanism that relies on maintaining whole-cluster state and on One Node Pool One Virtual Node. The challenges center on uncertain resource delivery, elasticity efficiency that varies with cluster size and business type, complex O&M, and a closed ecosystem.

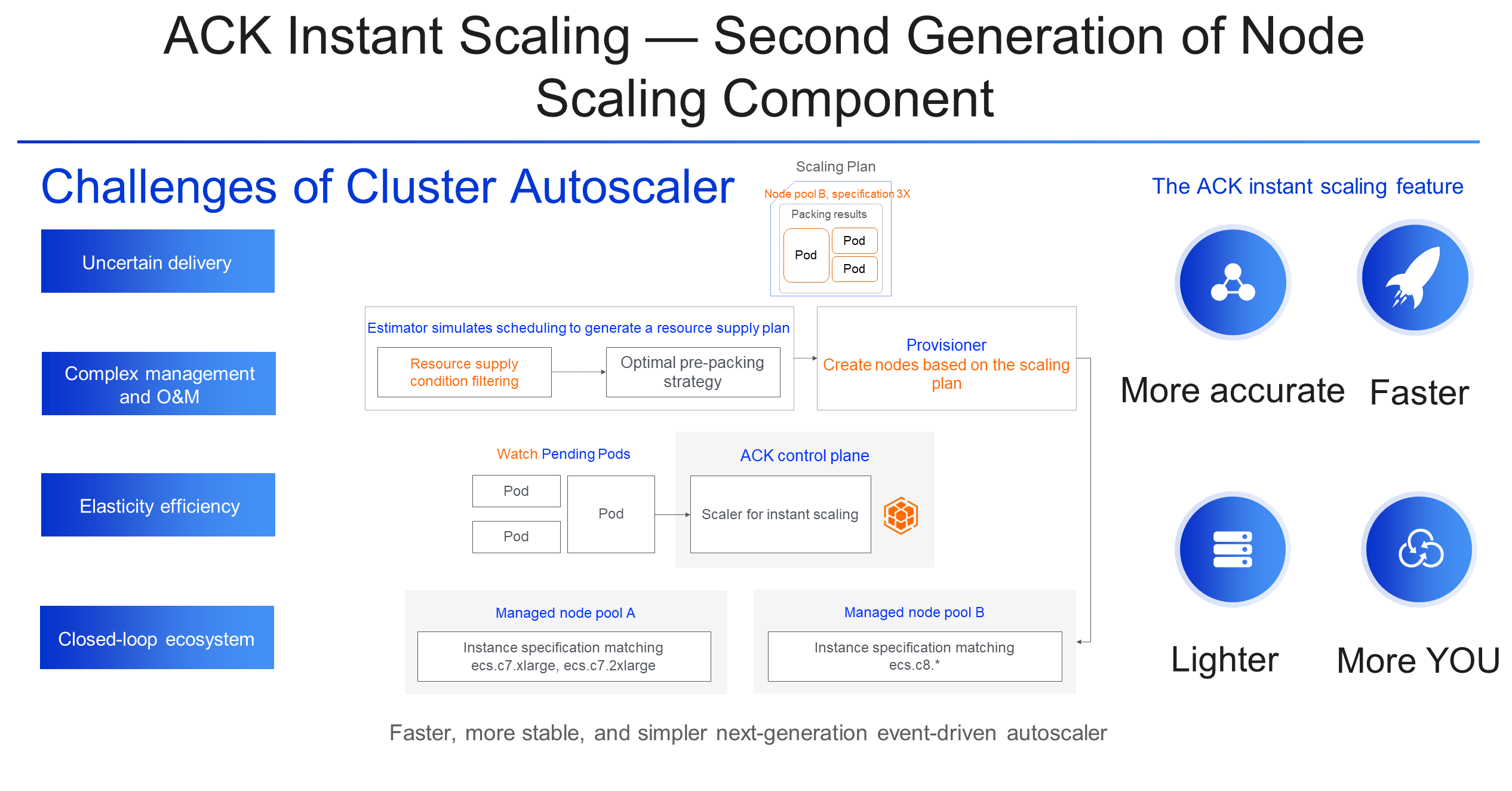

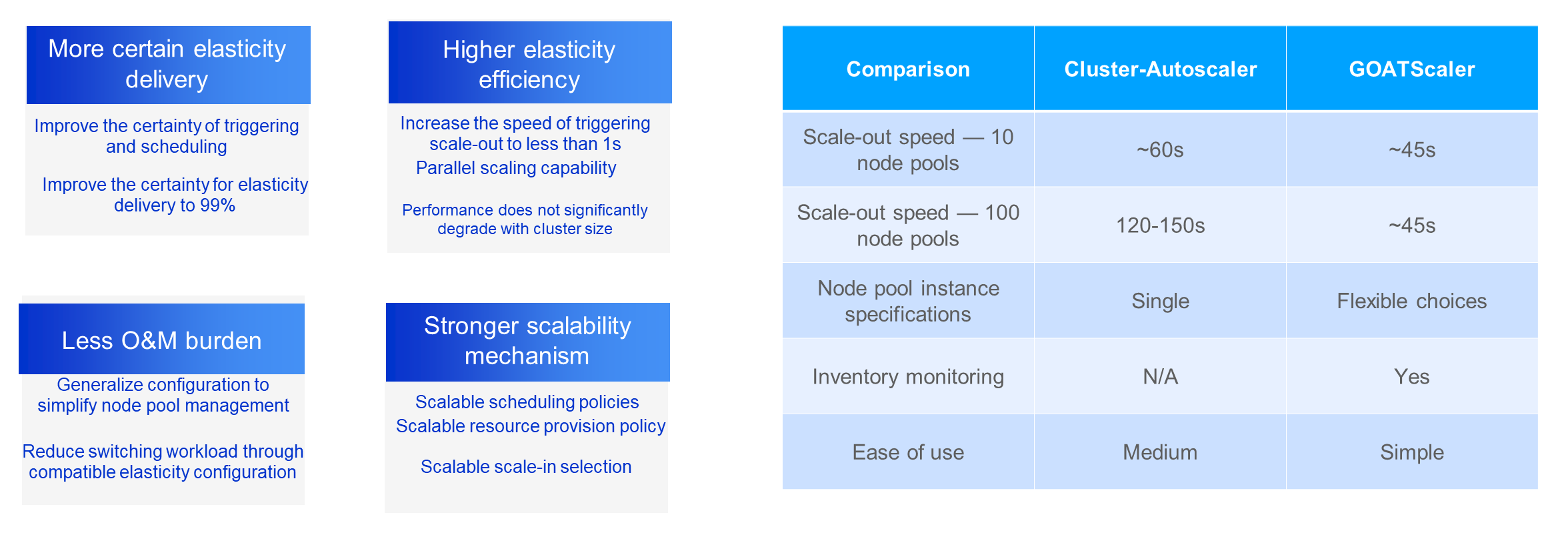

Uncertain delivery, complex O&M, insufficient elasticity speed, and a closed ecosystem are the four biggest concerns that many developers have about enabling Cluster Autoscaler elasticity in the production environment. To address these concerns, we have developed a second-generation node scaling product: Instant Scaling.

Instant Scaling is an event-driven node scaling controller. It is compatible with the semantics and behaviors of existing elastic node pools and can be enabled and used by all types of applications. Instant Scaling has the following features:

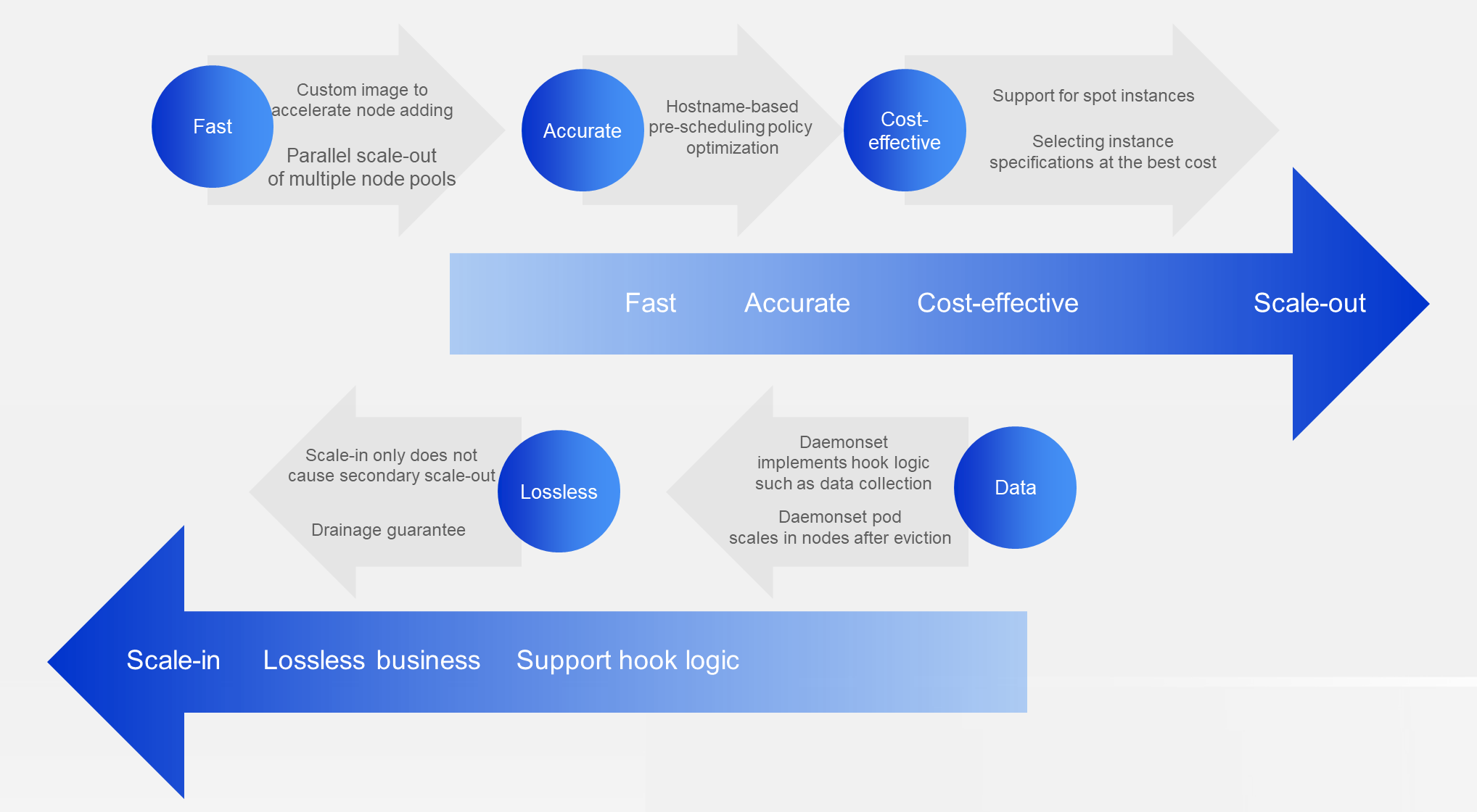

• More accurate: Instant Scaling abandons the One Node Pool One Virtual Node abstraction and the pre-scheduling packing mode of the first-generation elastic component. During scale-out, the scaling decision is expanded from a simple rule that only contains the number of nodes to a scaling plan that names specific instance types, making scale-out more accurate.

• Faster: Event-driven scaling and parallel scaling make Instant Scaling more sensitive and faster.

• Lighter: Instant Scaling can automatically select instance types and requires fewer node pools. This makes the management and O&M easier.

• More extensible: Instant Scaling provides extension mechanisms in both the scale-out and scale-in phases, allowing user logic to participate in the lifecycle of elastic nodes.

Now let me give you some specific business cases to compare the detailed differences between the two generations of node scaling components from these four aspects.

In a typical scale-out scenario, the resources of a node in the cluster are fully occupied by three pods. A pod requesting fewer resources then appears. Assume that this pod can be scheduled to a new node in the existing node pool. If you use Cluster Autoscaler, it adds a node of the same size from the existing node pool. The small pod can be scheduled, but the resource utilization of the new node is low.

If you want to improve node resource utilization, you have to create a new node pool and configure a more appropriate instance specification for small requests. However, this increases the burden of subsequent O&M. If you use Instant Scaling, you only need to configure multiple instance specifications in the same node pool, and Instant Scaling selects the appropriate one based on the requested resources. The delivery certainty of Instant Scaling therefore benefits both resource utilization and O&M.
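The selection step can be pictured as choosing, among the instance specifications configured on one node pool, the cheapest one that still fits the pending request. The sketch below illustrates that idea only. The instance names and prices are made up, and the actual selection logic of Instant Scaling is not described in this article.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str            # hypothetical name, not a real specification
    cpu: int             # vCPUs
    memory_gib: int
    hourly_price: float  # made-up price for illustration


def pick_instance_type(cpu_request: float,
                       memory_request_gib: float,
                       candidates: Sequence[InstanceType]) -> Optional[InstanceType]:
    """Cheapest candidate that can hold the request, or None if nothing fits."""
    fitting = [t for t in candidates
               if t.cpu >= cpu_request and t.memory_gib >= memory_request_gib]
    if not fitting:
        return None
    # Ties on price are broken in favor of the smaller instance.
    return min(fitting, key=lambda t: (t.hourly_price, t.cpu, t.memory_gib))


POOL_TYPES = [
    InstanceType("small-2c4g", 2, 4, 0.05),
    InstanceType("medium-4c8g", 4, 8, 0.10),
    InstanceType("large-8c16g", 8, 16, 0.20),
]

choice = pick_instance_type(1, 2, POOL_TYPES)
print(choice.name if choice else "no fit")
```

With a single-specification pool, the small pod in the scenario above would always land on the pool's one node size. With several specifications in one pool, the request itself drives the choice.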

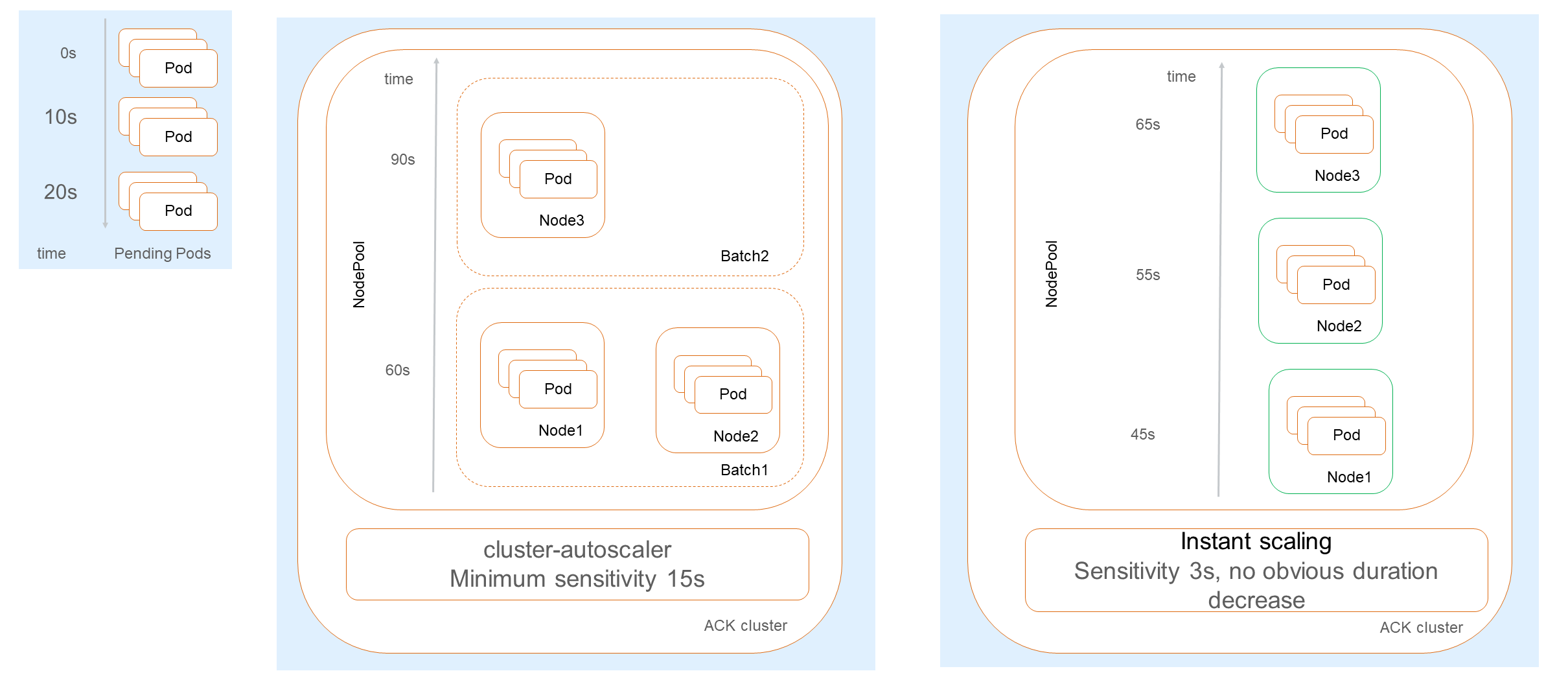

Next, let's look at elasticity efficiency. As mentioned earlier, Cluster Autoscaler runs in a periodic loop, with a minimum interval of 15s. It scales in batches, which is better suited to single-delivery models. However, in scenarios where pods arrive in multiple batches or unschedulable pods keep appearing over a period, such as churn calculation and workflow, Cluster Autoscaler may have to process pods across batches. Batches may also affect each other, resulting in unstable elasticity efficiency.

In contrast, Instant Scaling is event-based. It starts processing as soon as a pod reports an unschedulable event, and supports parallel scale-out within the same node pool, which greatly reduces the time required to trigger scale-out. In this example, there are 3 batches of pods arriving at an interval of 10s. In Cluster Autoscaler, because the last batch of pods is processed across batches, it takes 90s for all pods to be scheduled. In Instant Scaling, pods are not delayed by batching, so each batch takes about 45s.
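The trigger delay alone can be illustrated with a small timing model. It assumes scans at fixed 15s ticks and a constant 45s for a node to become ready (the per-batch figure quoted above). It deliberately does not model cross-batch interference, so it does not reproduce the 90s figure. It only shows how polling adds waiting time that event-driven triggering avoids.

```python
import math

PROVISION_SECONDS = 45.0  # assumed constant node delivery time (illustrative)


def polling_trigger(arrival: float, interval: float = 15.0) -> float:
    """First periodic scan at or after `arrival` (scans at 0, 15, 30, ...)."""
    return math.ceil(arrival / interval) * interval


def ready_times(arrivals, event_driven: bool, interval: float = 15.0):
    """Time at which capacity for each batch is ready under each trigger model."""
    ready = []
    for t in arrivals:
        trigger = t if event_driven else polling_trigger(t, interval)
        ready.append(trigger + PROVISION_SECONDS)
    return ready


batches = [0, 10, 20]  # three batches, 10s apart, as in the example above
print("polling:     ", ready_times(batches, event_driven=False))
print("event-driven:", ready_times(batches, event_driven=True))
```

In this model, every event-driven batch is ready exactly 45s after it arrives, while the polled batches wait up to one extra scan interval before scale-out even starts.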

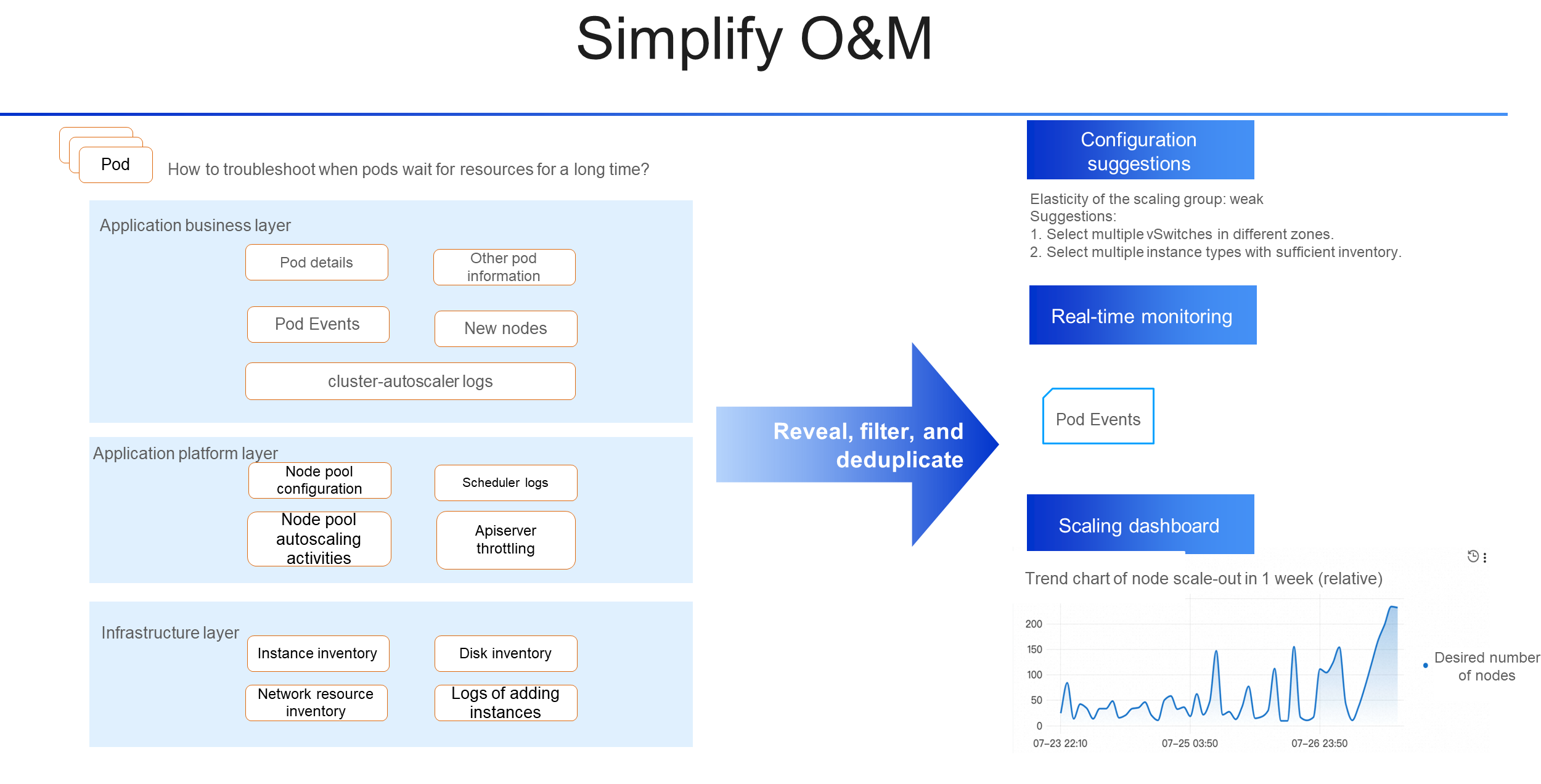

Now let's look at O&M. O&M cost is often ignored in the early stage and becomes a persistent problem later on. Nearly half of elasticity users complain about the complexity of O&M. First, the growing number of node pools caused by the uncertain delivery of Cluster Autoscaler introduces complexity. Second, troubleshooting Cluster Autoscaler is also complex. A question users frequently ask is why pods that are expected to be deployed through elastic scaling stay unscheduled for a long time. To troubleshoot this problem in Cluster Autoscaler, you may need to go through logs at every level from the business layer to the infrastructure layer. As the cluster size increases, the complexity increases exponentially.

What is the ideal way for users to troubleshoot? Let's look at the whole process of the product. First, during configuration, prompts help you avoid some errors. Second, when pods are pending, all elasticity-related issues that require your attention are revealed through pod events. You only need to run `kubectl describe pod` to see them. Third, if pods have been deleted or you want to check some statistics, there is a dashboard for tracing. These capabilities that simplify O&M will also be introduced to Instant Scaling.

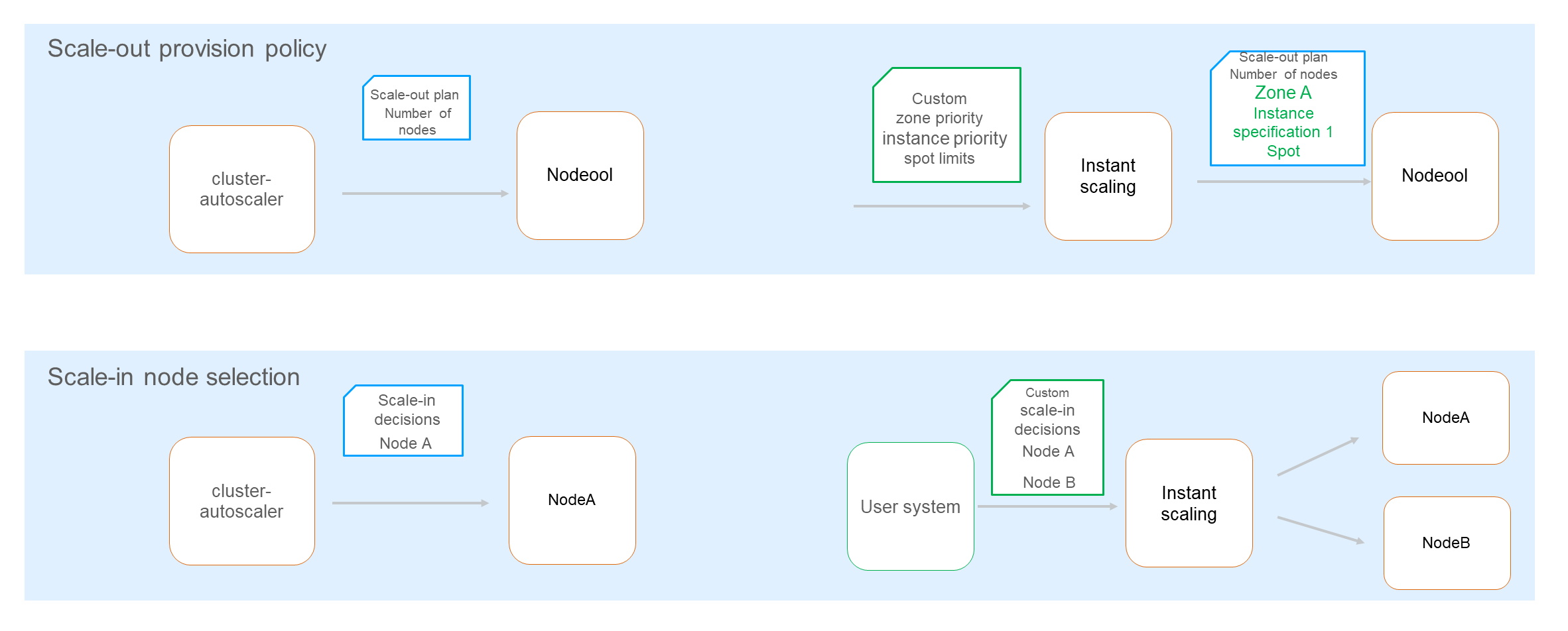

Finally, let's look at extensibility. Cluster Autoscaler maintains the global state, so its ecosystem is relatively closed and you cannot participate in the node lifecycle. Instant Scaling allows you to specify more detailed requirements during scale-out, such as the priority of zones and instance types, and limits on spot instances. The final scale-out plan follows these user-defined policies. Instant Scaling is equally open for scale-in: by following the Instant Scaling protocol, you can specify which nodes to scale in according to your own logic.

In summary, compared with the traditional Cluster Autoscaler, Instant Scaling has a faster elasticity speed and more stable elasticity efficiency. Especially when the cluster size or the frequency of autoscaling increases, it improves efficiency by over 50%. In addition, Instant Scaling simplifies the use of node pools. Choosing appropriate specifications for different applications is troublesome for O&M personnel. Instant Scaling automatically simulates the packing policy and, within the scope of a small number of filtering rules configured by developers, selects the instance types that suit the services for elastic provisioning. This not only reduces the usage cost but also improves the success rate of node pool elasticity.

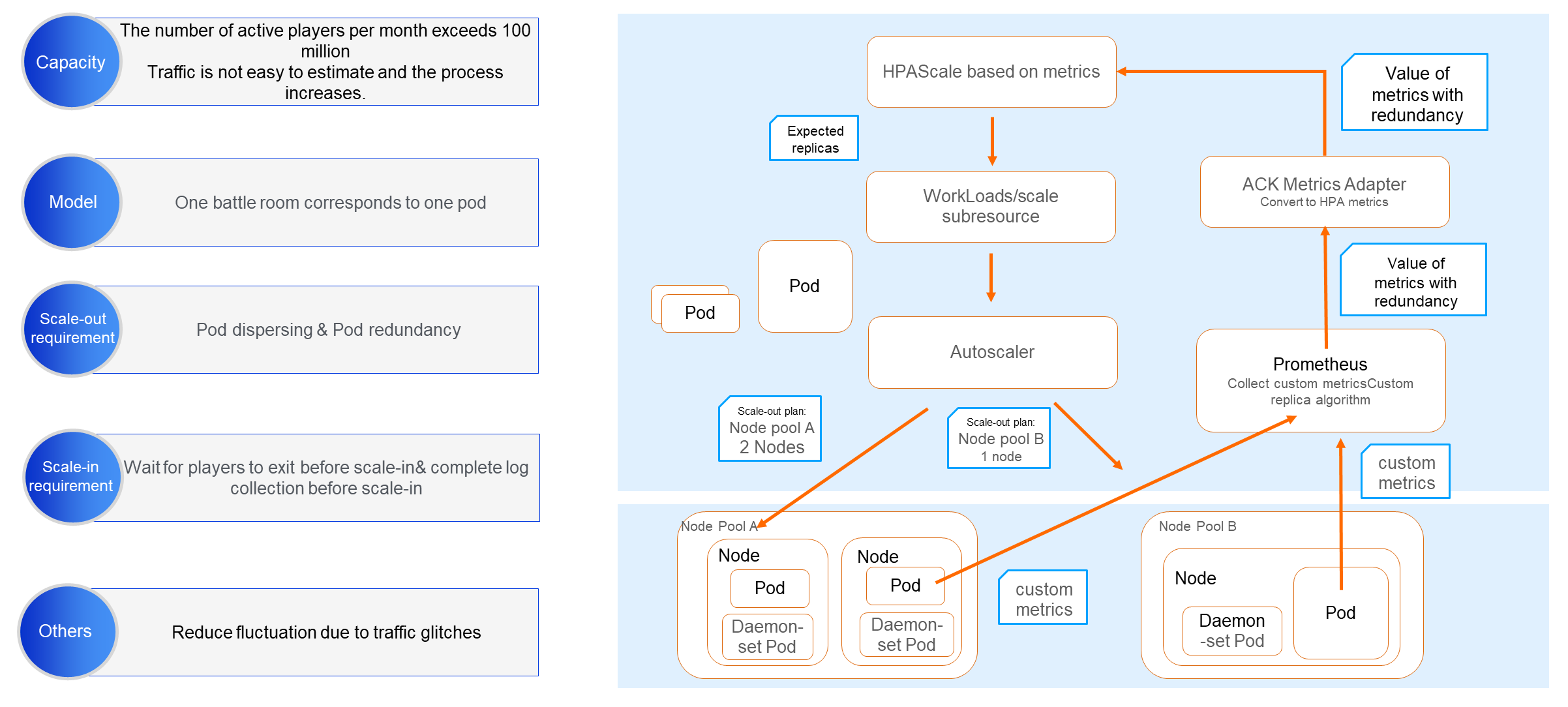

We use a real case in the production environment to illustrate how the preceding elasticity solutions at various levels are selected, operate, and take effect under specific circumstances. Let's start by looking at the basic information and characteristics of the user in this case.

• Capacity: The user expects to apply elasticity to the AI scenarios of a game with more than 100 million monthly active players. The player base is very large, but the traffic is unpredictable and prone to sudden spikes.

• Model abstraction: The user maps a game battle room to a pod of business loads, and the number of players in each room has a fixed upper limit.

• Scale-out requirements: In addition to the basic scale-out requirements, the user wants to spread pods across nodes to ensure stability. On the one hand, the user hopes that the pods are automatically scaled during peak and off-peak hours. On the other hand, to better cope with sudden spikes, the user also requires a fixed number of redundant pods.

• Scale-in requirements: To ensure player experience, the user expects pods and their nodes to be scaled in only after the players on them exit. A node must also be scaled in only after its data logs are collected. Otherwise, data logs may be missing.

Based on the analysis of the user's business, the service load is positively correlated with the number of players, and no other dimension shows a clearer pattern. Therefore, we recommend that the user collect the number of players by using Prometheus, and then install Metrics Adapter to convert the number of players into a custom metric for HPA. Because intrusively modifying the internal algorithm of HPA is a solution with high maintenance costs, we recommend that the user add the corresponding redundancy on the metric side, so that the replica count output by HPA already includes the redundancy.
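One way to add redundancy on the metric side is to pad the reported player count by the capacity of the desired spare pods before HPA sees it. The sketch below assumes an average-value target equal to the per-room player limit and ignores the HPA tolerance band. The function names and numbers are hypothetical and are not taken from the user's actual setup.

```python
import math


def padded_player_metric(active_players: int,
                         players_per_pod: int,
                         redundant_pods: int) -> int:
    """Metric exported to HPA: real players plus capacity for the spare pods."""
    return active_players + redundant_pods * players_per_pod


def hpa_replicas(total_metric: float, target_average: float) -> int:
    """Replica count for an average-value target: ceil(total / target)."""
    return math.ceil(total_metric / target_average)


players, room_limit, spare = 950, 10, 5
plain = hpa_replicas(players, room_limit)
padded = hpa_replicas(padded_player_metric(players, room_limit, spare), room_limit)
print(plain, padded)
```

Because the padding is an exact multiple of the room limit, the padded result is always the unpadded replica count plus the spare pods, and HPA itself stays unmodified.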

The elasticity solution at the resource layer is discussed in two aspects: scale-out and scale-in.

In terms of scale-out, although redundant pods are implemented at the service load layer, resources still need to be provisioned quickly in the case of sudden spikes. Alibaba Cloud provides a custom image solution and tools to pre-load node component images and user images. This saves image pulling time during node scaling and business startup. It also provides parallel scale-out of multiple node pools, so that resources for businesses belonging to different node pools can be supplied at the same time without affecting each other. In addition to speed, the user also requires pods to be spread by node HostName. We optimize the HostName-based pre-scheduling policies in the elasticity component to make elasticity decisions more accurate.

Provided that stability is guaranteed, resource cost is always one of users' top concerns. The market price of Alibaba Cloud preemptible instances (spot instances) fluctuates based on supply and demand. Compared with pay-as-you-go instances, preemptible instances can save up to 90% of instance costs. Using preemptible instances in clusters can significantly reduce costs, but they are reclaimed at irregular times as inventory and bids change.

To minimize the impact of interruptions in reclaiming preemptible instances, Alibaba Cloud's elasticity provides multiple capabilities, such as actively draining preemptible instances before reclaiming them and scaling out new nodes in advance for compensation. In this way, the user can choose preemptible instances as the elastic resource supply type for services that are tolerant to interruptions in reclaiming preemptible instances, such as offline data services. For services that require high stability, we also provide an optimal cost policy to ensure that the instance type with the lowest cost can be scaled out each time among multiple types of pay-as-you-go instances.

In terms of scale-in, to meet the requirement that players exit before scale-in, Alibaba Cloud's elasticity provides a custom drain wait time, so that node resources are not deleted while draining is still in progress within the specified time. The user can also use the graceful termination of Kubernetes to ensure that players exit before the pods are deleted. This double guarantee allows the final scale-in effect to meet the requirement that players exit before the nodes are scaled in.
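On the pod side, graceful termination usually means reacting to the SIGTERM that the kubelet sends before the grace period (`terminationGracePeriodSeconds`) expires. The sketch below shows one possible shape for a game-room process: stop admitting players on SIGTERM, then wait for the room to empty. The `BattleRoom` class and its methods are hypothetical and are not part of any ACK or Kubernetes API.

```python
import signal
import threading
import time


class BattleRoom:
    """Hypothetical game-room process that drains players before exiting."""

    def __init__(self):
        self.players = set()
        self.draining = threading.Event()
        self._lock = threading.Lock()

    def join(self, player: str) -> bool:
        """Admit a player unless the room is already draining."""
        with self._lock:
            if self.draining.is_set():
                return False
            self.players.add(player)
            return True

    def leave(self, player: str) -> None:
        with self._lock:
            self.players.discard(player)

    def handle_sigterm(self, signum=None, frame=None) -> None:
        """Signal handler: stop admitting new players."""
        self.draining.set()

    def wait_until_empty(self, timeout: float, poll: float = 0.05) -> bool:
        """Block until all players have left or `timeout` seconds pass."""
        deadline = time.monotonic() + timeout
        while time.monotonic() < deadline:
            with self._lock:
                if not self.players:
                    return True
            time.sleep(poll)
        with self._lock:
            return not self.players


def install(room: BattleRoom) -> None:
    """Wire the room to SIGTERM; call once from the main thread at startup."""
    signal.signal(signal.SIGTERM, room.handle_sigterm)
```

In a real process, the main thread would call `install(room)`, block on `room.draining.wait()`, and then call `room.wait_until_empty(...)` with a timeout shorter than the pod's grace period. Once the grace period expires, the container is killed regardless of whether players remain.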

The requirement that data logs be collected before scale-in is handled similarly. The difference is that services required on every node are generally deployed as DaemonSets, and DaemonSet pods are skipped by default when a node is drained for scale-in. In the user's scenario, only one or a few types of DaemonSet pods need to be drained and waited for. Therefore, we add support for specifying which DaemonSet pods are drained during elastic scale-in. Users can also mark the DaemonSet pods that scale-in must wait for, which meets the requirement that nodes are scaled in only after data logs are collected.
