By Zhixin Huo

Computing power has become an essential factor in the evolution of the AI industry. As data volumes and model sizes keep growing, demand for distributed computing power and more efficient model training has become a primary focus. The challenge is to use computing power more efficiently and cost-effectively so that AI models can be trained and iterated continuously. The evolution of distributed training closely mirrors the development of AI models themselves.

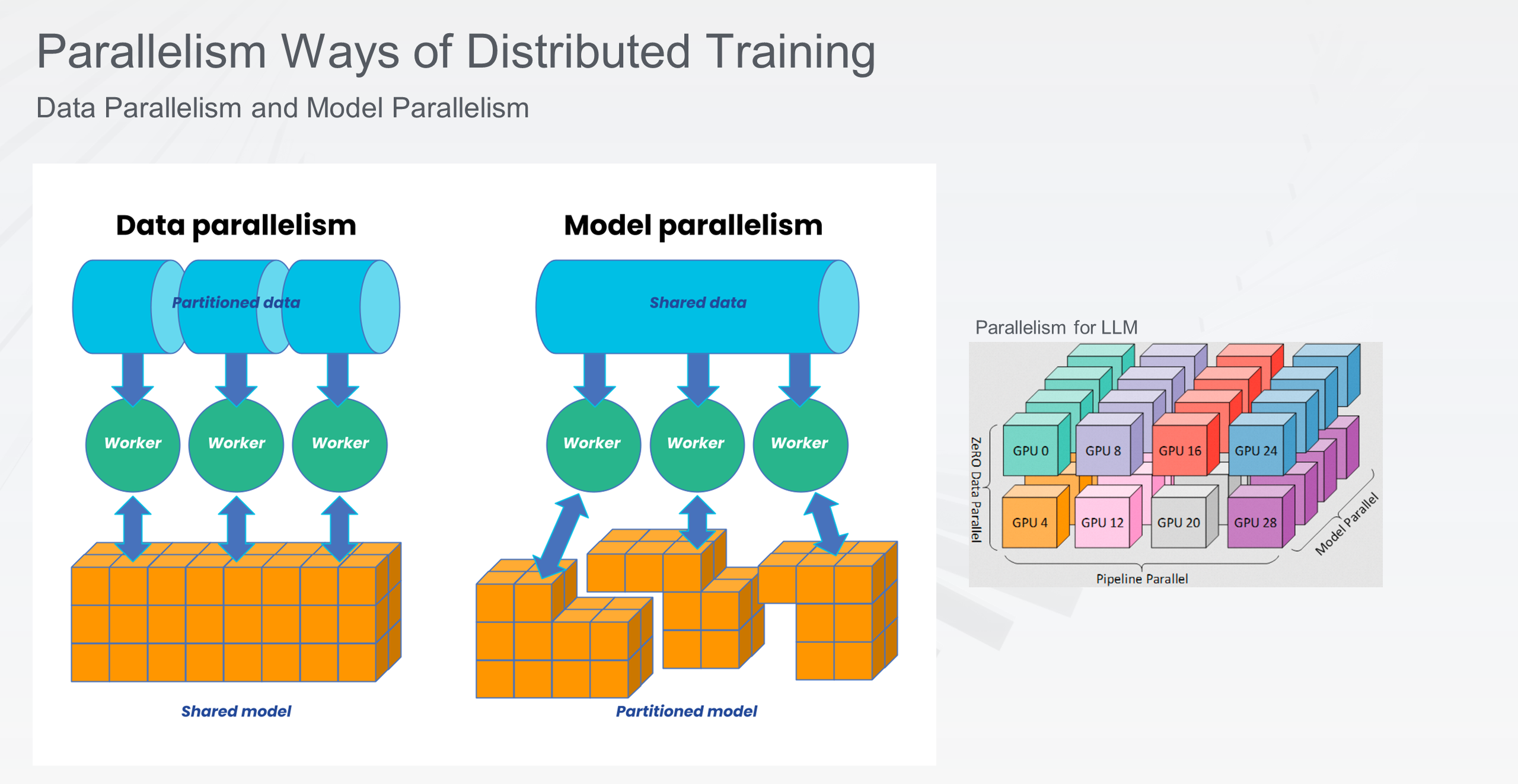

Distributed training is generally categorized into two types: data parallelism and model parallelism. In data parallelism, each training worker stores a full copy of the model, and the large-scale training data is partitioned across the workers. Each worker computes on its own partition, and a collective communication step then consolidates the results. For a long time, data parallelism has been the mainstream approach in distributed training, because it uses cluster computing resources to process extensive datasets in parallel and thus accelerates training.
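As a minimal sketch of the data-parallel idea, the toy example below fits a one-parameter model `y ≈ w * x` with two simulated workers. Each worker computes a gradient on its own shard, and the gradients are then averaged, which stands in for the collective communication step. The model, the data, and the function names are illustrative assumptions, not part of any framework mentioned in this article.

```python
def local_gradient(w, shard):
    # Gradient of mean squared error for the toy model y ≈ w * x on one shard.
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w, shards, lr):
    # Every worker holds the same w and computes a gradient on its own shard.
    local_grads = [local_gradient(w, shard) for shard in shards]
    # Stand-in for collective communication: average the workers' gradients.
    avg_grad = sum(local_grads) / len(local_grads)
    return w - lr * avg_grad


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
shards = [data[:2], data[2:]]  # two equally sized shards, one per worker

w = 0.0
for _ in range(200):
    w = data_parallel_step(w, shards, lr=0.01)
print(w)  # approaches 2.0, the slope that generated the data
```

With equally sized shards, the averaged gradient equals the gradient over the full batch, which is why every replica stays in step with a single-machine run.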

In model parallelism, a model that is too large for a single worker is divided and allocated to different training workers, and the training data is routed to those workers according to the model's structure, so that distributed computing power can be used to train large models. Large language models are currently trained with model parallelism because of their size.

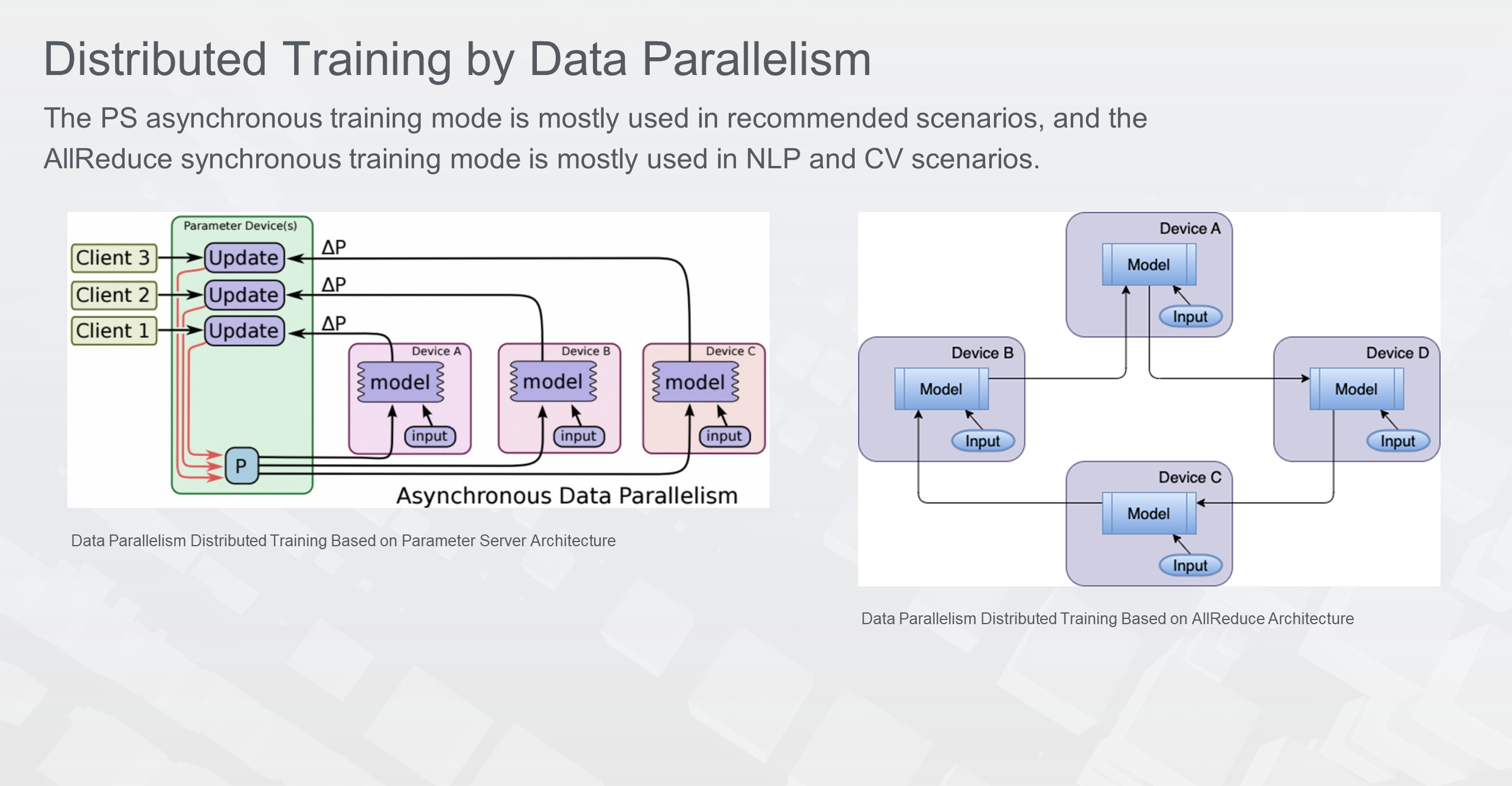

Distributed training based on data parallelism comes in two architectures: parameter server (PS) mode and AllReduce mode.

In parameter server (PS) mode, a centralized parameter server stores the model parameters for distributed training. Before each training step, every worker pulls the latest parameters from the parameter server; after the step completes, it pushes the resulting gradients back. In TensorFlow, PS-mode training is generally asynchronous: a worker does not wait for the other workers in the task to synchronize gradients, and simply proceeds through its own training steps. This mode is mostly used in TensorFlow-based search and promotion scenarios.
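The pull and push cycle can be sketched in a few lines. The example below is a single-process simulation under simplifying assumptions: the server holds one shared weight for a toy model `y ≈ w * x`, and the `ParameterServer` class and its `pull` and `push` methods are hypothetical names for illustration, not TensorFlow's API.

```python
class ParameterServer:
    """Holds the shared model weight; workers pull it and push gradients."""

    def __init__(self, w, lr):
        self.w = w
        self.lr = lr

    def pull(self):
        return self.w

    def push(self, grad):
        # Apply each gradient as soon as it arrives; no barrier across workers.
        self.w -= self.lr * grad


def local_gradient(w, shard):
    # Gradient of mean squared error for the toy model y ≈ w * x on one shard.
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def run(rounds):
    ps = ParameterServer(w=0.0, lr=0.01)
    shards = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0), (4.0, 8.0)]]
    for _ in range(rounds):
        # Both workers pull before either pushes, so the second push is
        # computed from weights that are already one update stale.
        pulled = [ps.pull() for _ in shards]
        for w_local, shard in zip(pulled, shards):
            ps.push(local_gradient(w_local, shard))
    return ps.w


print(run(100))  # approaches 2.0 despite the stale gradient
```

The stale second push is the price of not waiting for other workers; in this toy setting training still converges, which is the property asynchronous PS training relies on.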

AllReduce mode is a decentralized, synchronous distributed training mode, and Ring-AllReduce is the variant generally adopted. Each training worker communicates only with its left and right neighbors by rank, so the workers form a communication ring. Passing data around this ring synchronizes every worker's gradients with all the others and completes the reduction. This mode is mostly used in CV and NLP scenarios.
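To make the ring communication concrete, here is a small single-process simulation of Ring-AllReduce in its common two-phase form (scatter-reduce, then all-gather). It is a sketch under simplifying assumptions: each worker's gradient vector has exactly one element per worker, and messages are modeled as list updates rather than real network sends.

```python
def ring_allreduce(worker_data):
    """Sum equal-length vectors across workers using only ring neighbours.

    worker_data[r] is the vector held by rank r. For simplicity the vector
    length must equal the number of workers (one chunk per rank).
    """
    n = len(worker_data)
    data = [list(v) for v in worker_data]

    # Phase 1, scatter-reduce: in step s, rank r sends chunk (r - s) % n to
    # its right neighbour, which adds it to its own copy of that chunk.
    for s in range(n - 1):
        sends = [(r, (r - s) % n, data[r][(r - s) % n]) for r in range(n)]
        for r, chunk, value in sends:
            data[(r + 1) % n][chunk] += value

    # Now rank r holds the complete sum for chunk (r + 1) % n.
    # Phase 2, all-gather: in step s, rank r forwards chunk (r + 1 - s) % n
    # to its right neighbour, which overwrites its copy.
    for s in range(n - 1):
        sends = [(r, (r + 1 - s) % n, data[r][(r + 1 - s) % n]) for r in range(n)]
        for r, chunk, value in sends:
            data[(r + 1) % n][chunk] = value

    return data


grads = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
print(ring_allreduce(grads))  # every rank ends with [111, 222, 333]
```

Each worker sends and receives only one chunk per step, so the per-worker traffic stays roughly constant as the ring grows, which is the main appeal of the ring layout.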

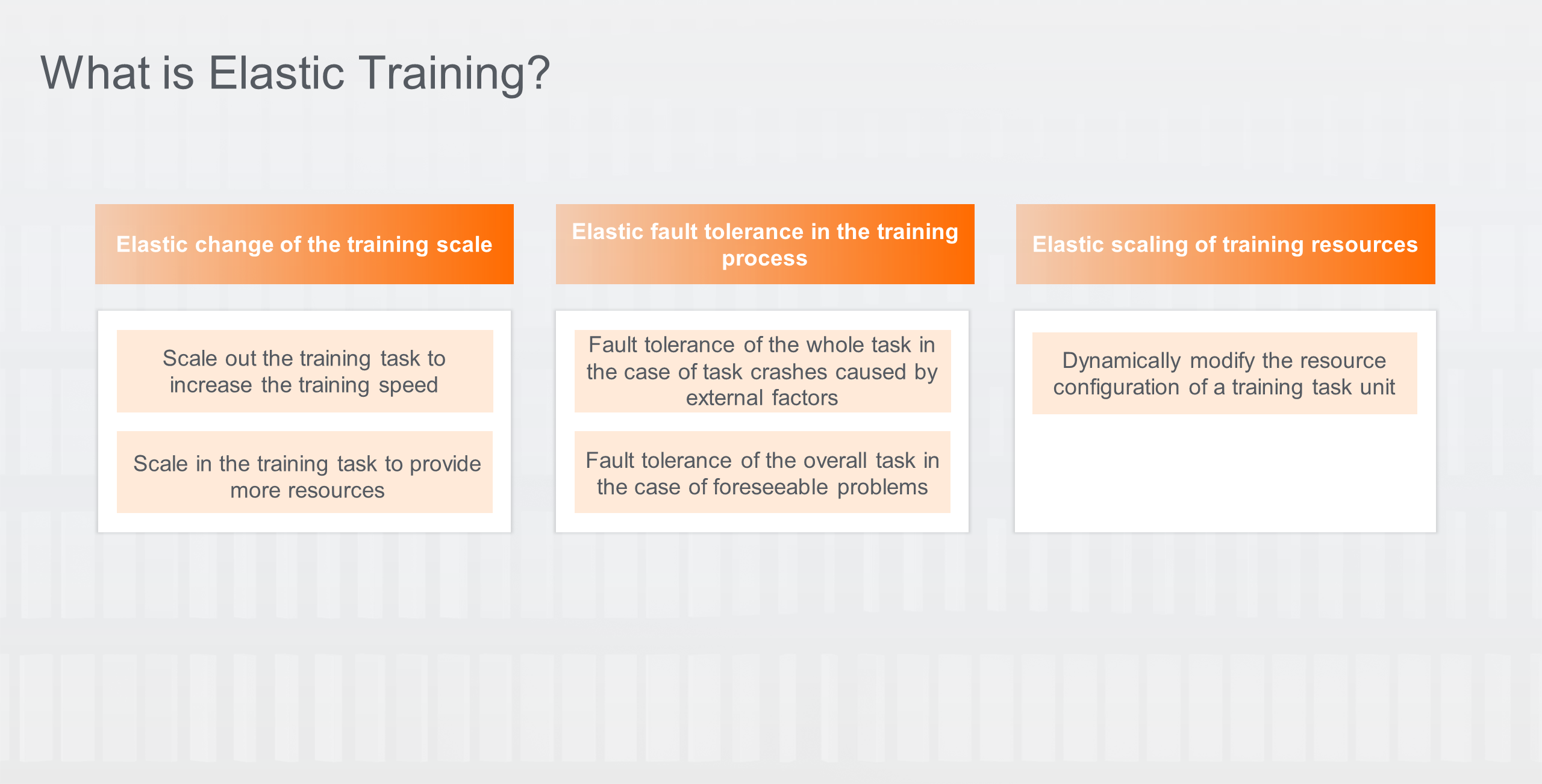

After the introduction of distributed training, let's take a look at elastic distributed training. What is elastic training? Specifically, it has three major capabilities:

The significance of elastic training can be summarized in three points:

1) Fault tolerance for large-scale distributed training effectively improves the success rate of training tasks.

2) Cluster computing power is better utilized, because elasticity makes it possible to coordinate resource allocation for offline tasks.

3) Training costs are reduced, because preemptible instances, or instances that are less stable but more cost-effective, can be used for training.

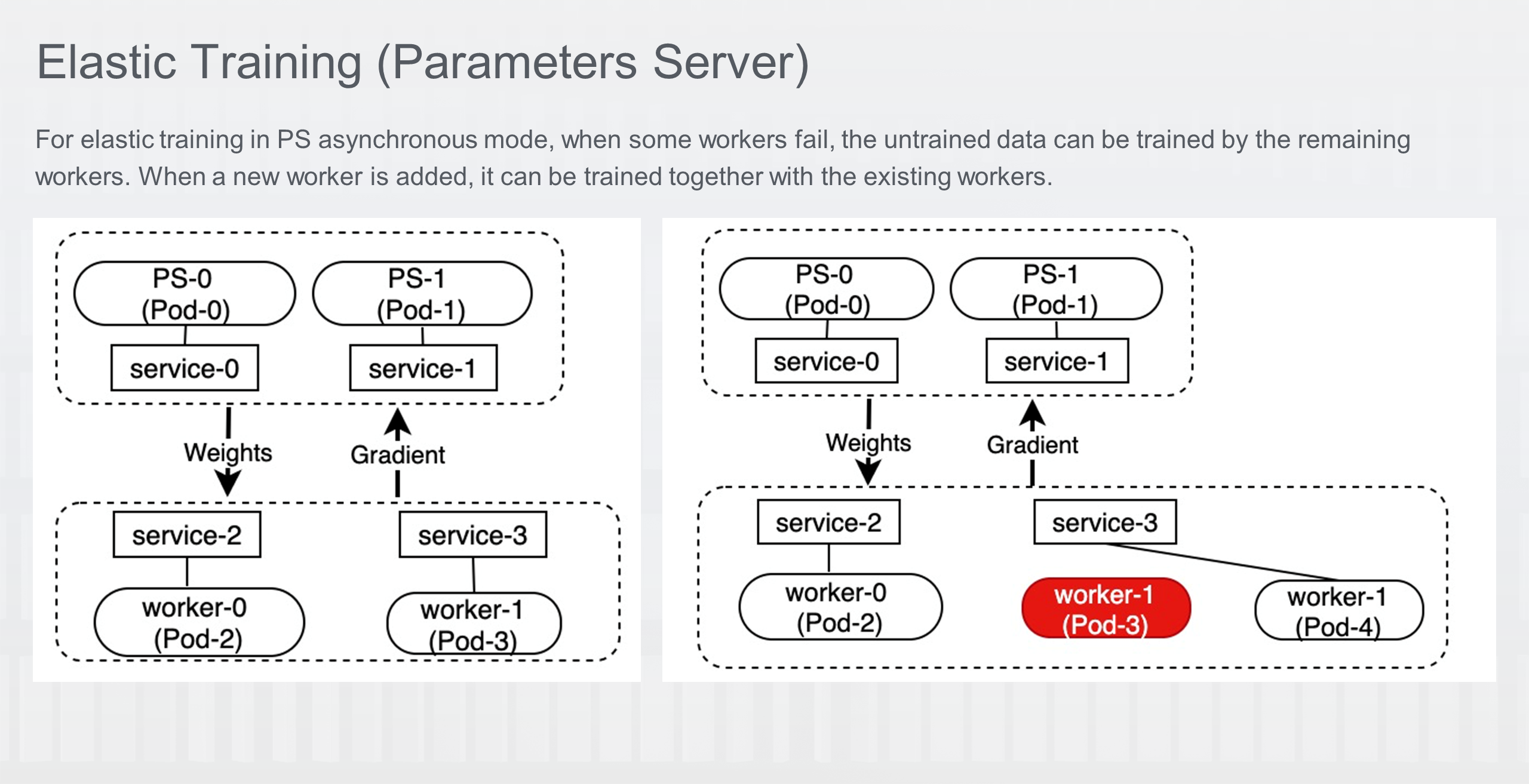

Since elastic training in PS mode is asynchronous, the key to elasticity lies in how the training data is divided. When some workers fail, the data that has not yet been trained on, including the data that was assigned to the failed workers, can be picked up by the remaining workers to continue training. When a new worker is added, it can join the existing workers in training.

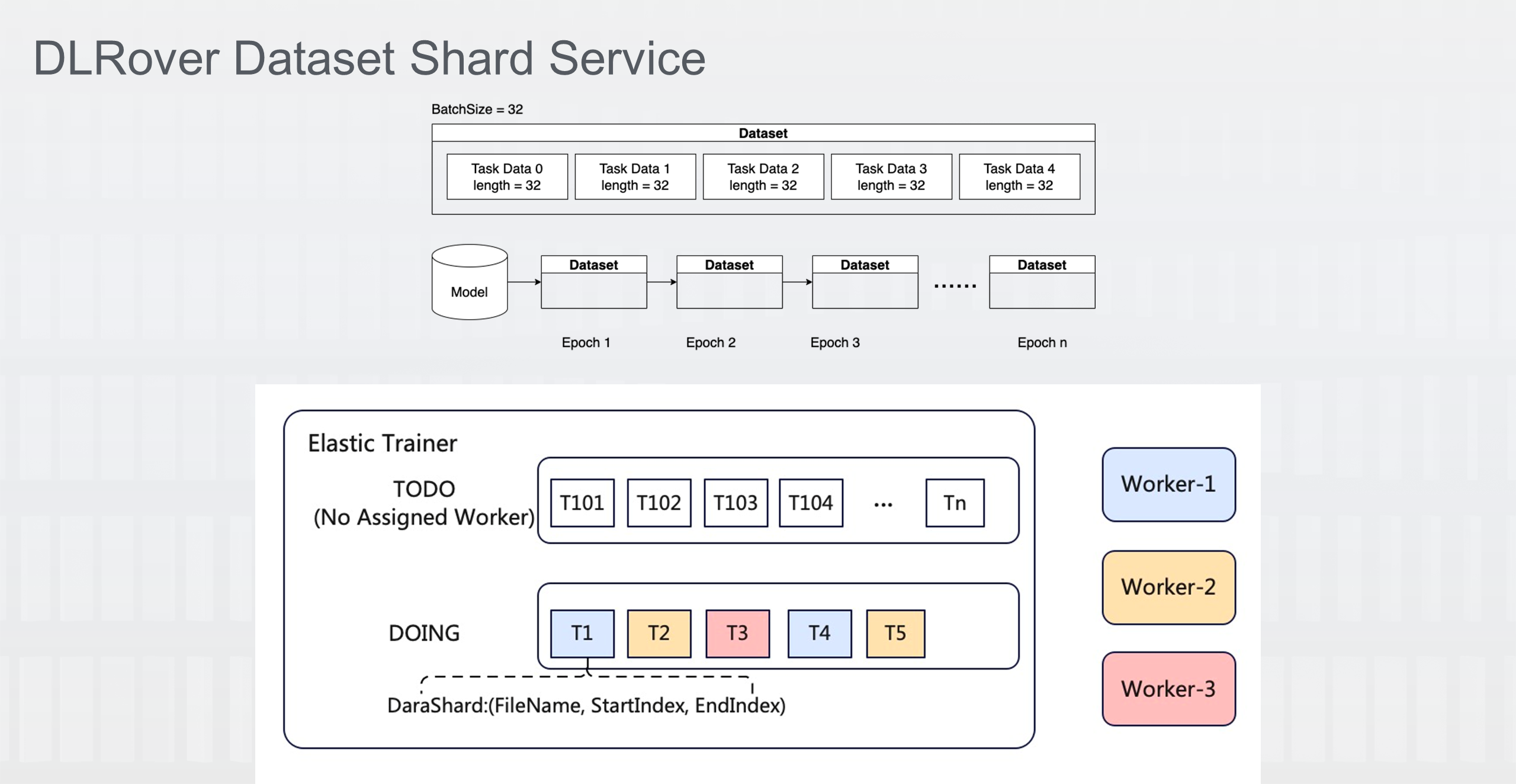

In DLRover, an open-source project from the Ant AI Infra team, a Training Master coordinates elastic training. The Training Master is responsible for monitoring tasks, dividing datasets, and elastically scaling the resources of each role. Dividing the dataset is the key to elastic training, and the Master contains a Dataset Shard Service role dedicated to it.

The Dataset Shard Service splits the entire dataset into tasks according to the batch size and places them in a queue for the training workers to consume. It maintains two queues: tasks that have not yet been consumed are in the TODO queue, and tasks that are currently being trained on are in the DOING queue. A task is removed for good only when the worker signals that training on it has finished. If a training worker exits unexpectedly midway, its task times out and re-enters the TODO queue for a healthy worker to train on.
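The TODO and DOING queues can be sketched as follows. This is an illustrative model of the behavior described above, not DLRover's actual code: the class name, method names, the `shard_size` and `timeout` parameters, and the explicit `now` argument (used instead of a wall clock to keep the example deterministic) are all assumptions.

```python
from collections import deque


class DatasetShardService:
    def __init__(self, dataset_size, shard_size, timeout):
        # TODO queue: (start, end) index ranges that nobody has trained on yet.
        self.todo = deque(
            (start, min(start + shard_size, dataset_size))
            for start in range(0, dataset_size, shard_size)
        )
        # DOING queue: shard -> (worker id, time the shard was handed out).
        self.doing = {}
        self.timeout = timeout

    def get_task(self, worker_id, now):
        self._requeue_timed_out(now)
        if not self.todo:
            return None
        shard = self.todo.popleft()
        self.doing[shard] = (worker_id, now)
        return shard

    def report_done(self, shard):
        # Only an explicit completion signal removes a shard for good.
        self.doing.pop(shard, None)

    def _requeue_timed_out(self, now):
        for shard, (_, started) in list(self.doing.items()):
            if now - started > self.timeout:
                del self.doing[shard]
                self.todo.append(shard)

    def finished(self):
        return not self.todo and not self.doing


svc = DatasetShardService(dataset_size=10, shard_size=4, timeout=5)
a = svc.get_task("worker-0", now=0)   # (0, 4)
b = svc.get_task("worker-1", now=0)   # (4, 8)
svc.report_done(a)
# worker-1 crashes and never reports; its shard times out and is re-queued.
c = svc.get_task("worker-0", now=10)  # (8, 10)
d = svc.get_task("worker-0", now=11)  # (4, 8), recovered from worker-1
```

Because a shard leaves the DOING queue only on completion or timeout, every sample is eventually trained on even if workers come and go.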

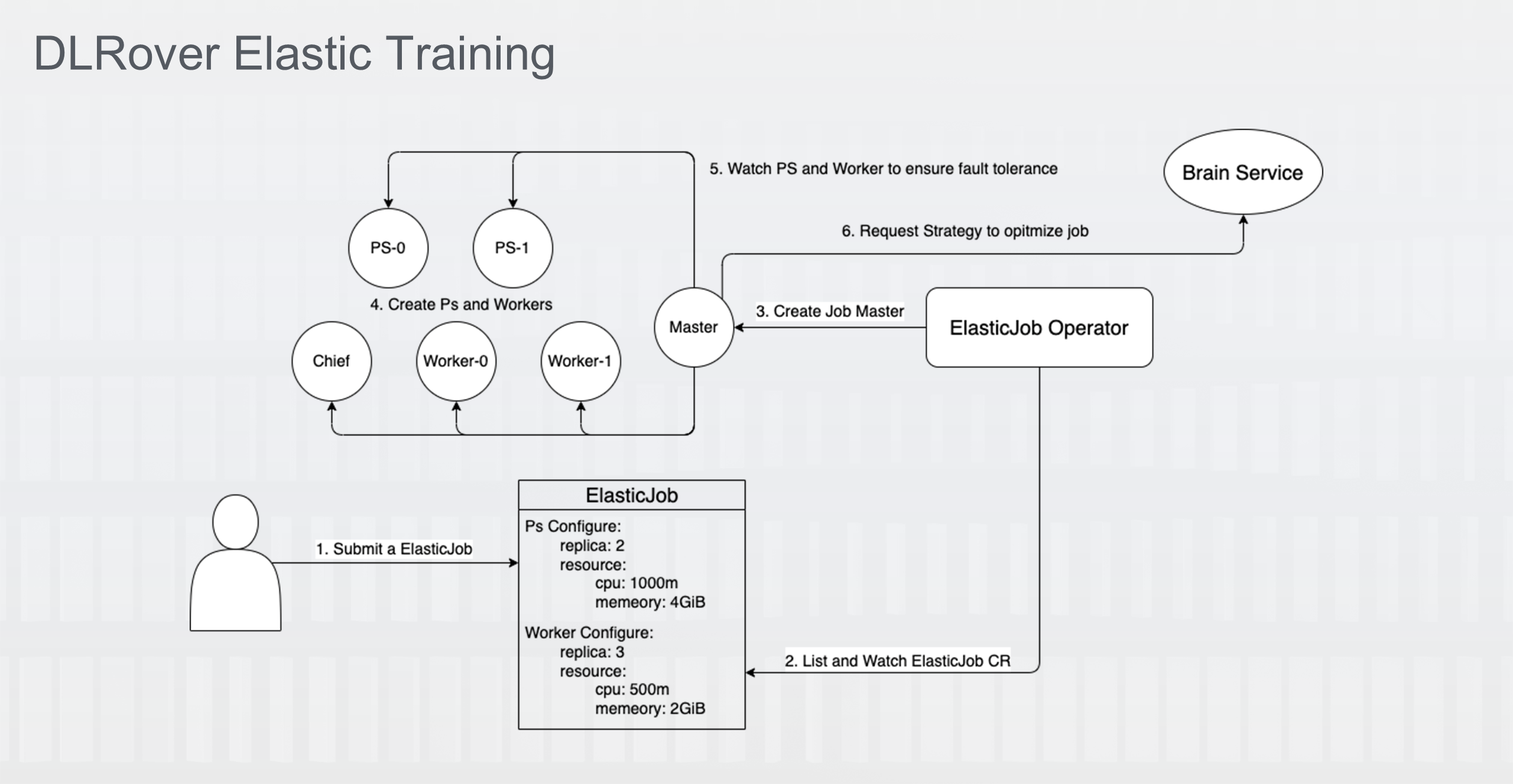

DLRover defines an ElasticJob CRD on Kubernetes. The ElasticJob controller watches ElasticJob resources and creates a DLRover Master for each job. The Master then creates the PS and worker pods and controls their elasticity.

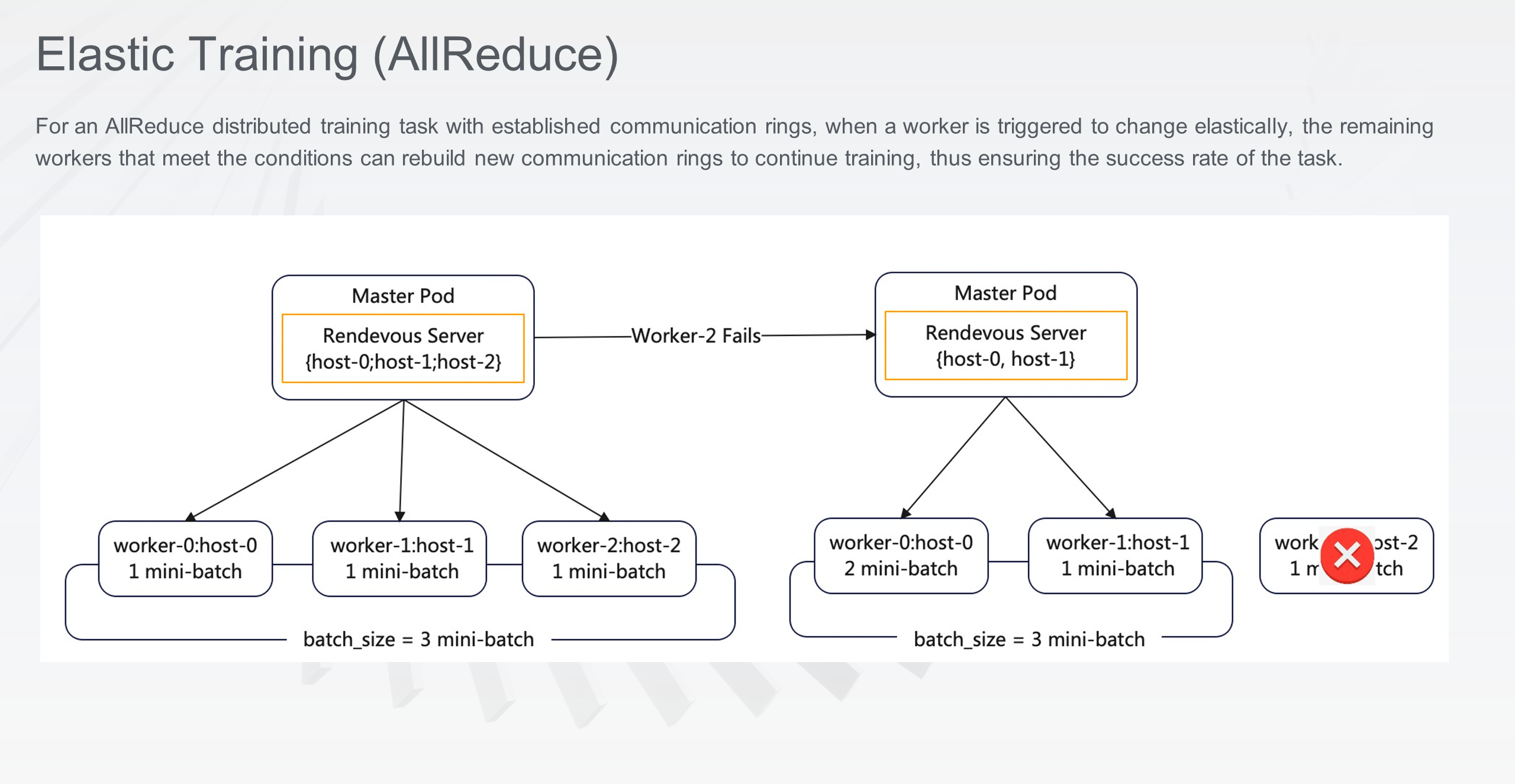

Elastic training in AllReduce mode is synchronous. The key to elasticity lies in how to ensure the synchronization of training and the maintenance of the communication ring established to synchronize gradients. When some of the workers fail, the remaining workers can rebuild the communication ring to continue training. When new workers join in, they can rebuild communication rings with the existing workers for training.
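Rebuilding the ring after a membership change amounts to reassigning ranks and neighbors over the workers that are currently alive. The sketch below shows only that bookkeeping; the `build_ring` helper and the worker names are hypothetical, and real frameworks also have to re-establish connections and resynchronize model state.

```python
def build_ring(worker_ids):
    """Assign ranks to the live workers and link each to its ring neighbours."""
    ordered = sorted(worker_ids)
    n = len(ordered)
    return {
        worker: {
            "rank": rank,
            "left": ordered[(rank - 1) % n],
            "right": ordered[(rank + 1) % n],
        }
        for rank, worker in enumerate(ordered)
    }


workers = {"w0", "w1", "w2", "w3"}
ring = build_ring(workers)

workers.discard("w2")        # w2 fails
ring = build_ring(workers)   # w1 and w3 become direct neighbours

workers.add("w4")            # a new worker joins
ring = build_ring(workers)   # ranks are reassigned over w0, w1, w3, w4
```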

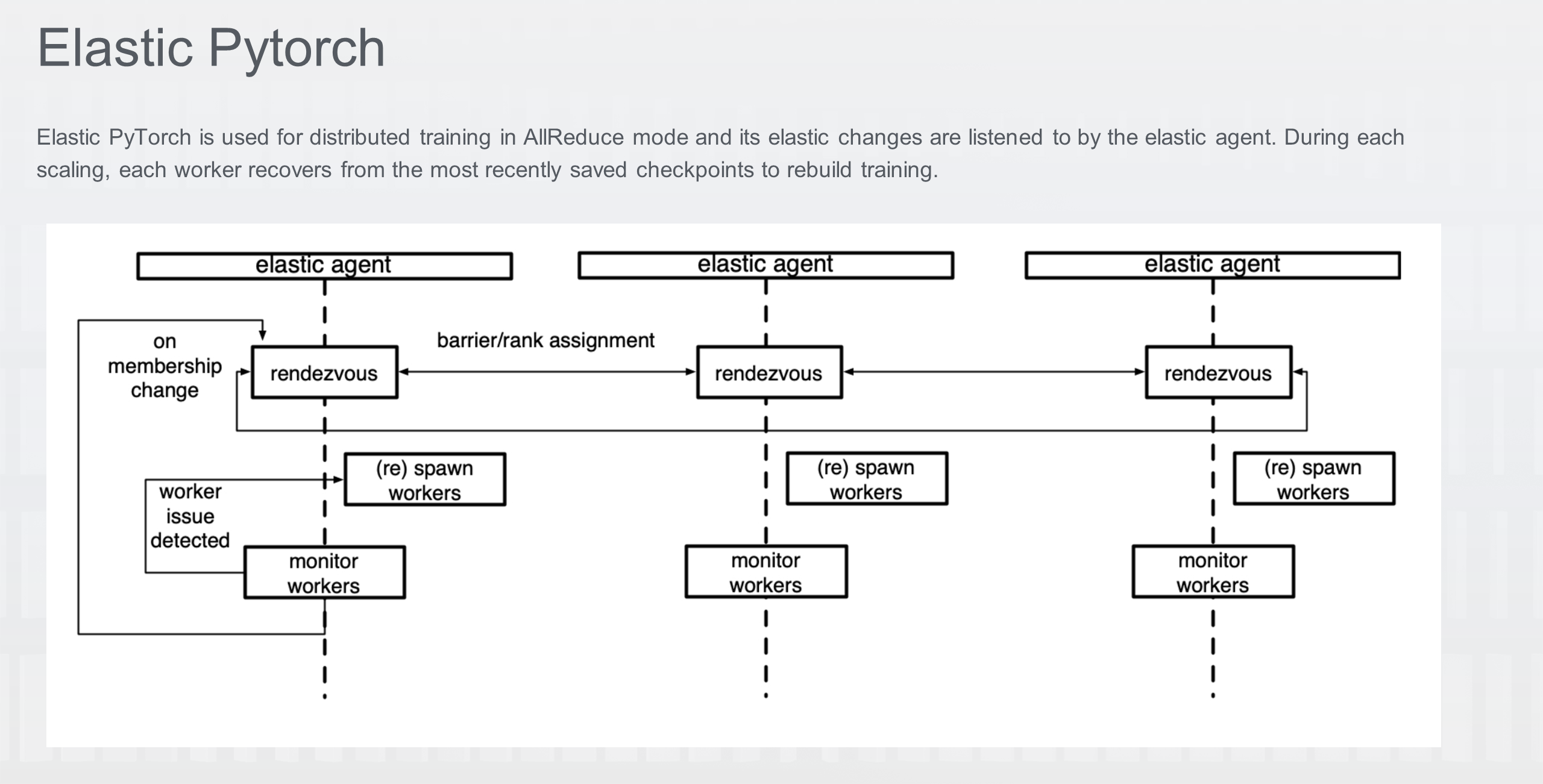

Elastic PyTorch is PyTorch's elastic distributed training engine for AllReduce mode. In essence, an elastic agent is started on each worker, the agent's monitor watches each training process, and worker information is dynamically registered with the rendezvous server in the Master depending on whether the worker process is healthy. This is what makes the whole training process elastic. Currently, it can be run on Kubernetes by using the PyTorch Operator.

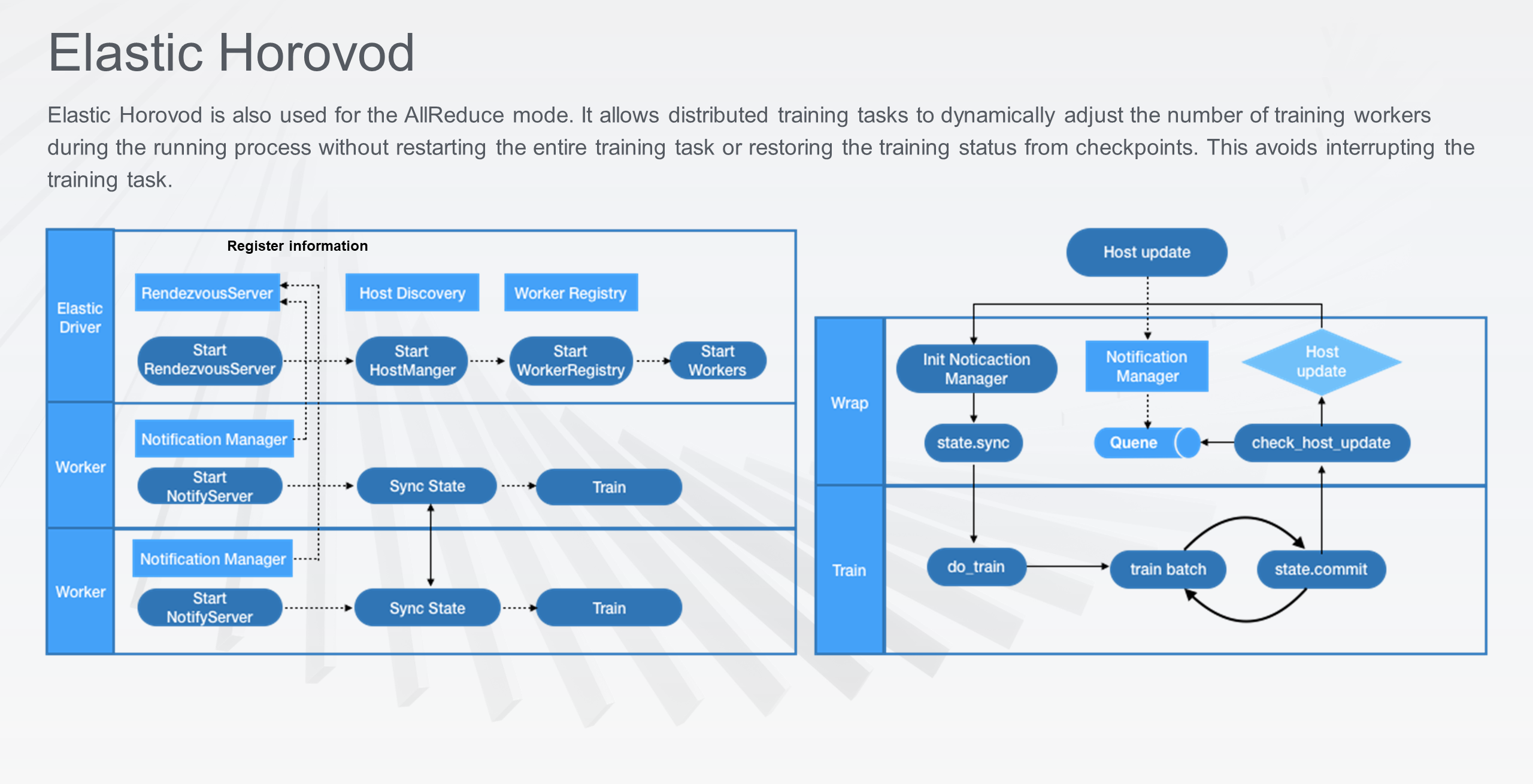

Horovod is a distributed training framework that can be used with TensorFlow or PyTorch. Elastic Horovod also performs distributed training in AllReduce mode. It can automatically adjust the number of training workers at run time without restarting the entire training task or restoring the training state from persistent checkpoints, so the training task is not interrupted.

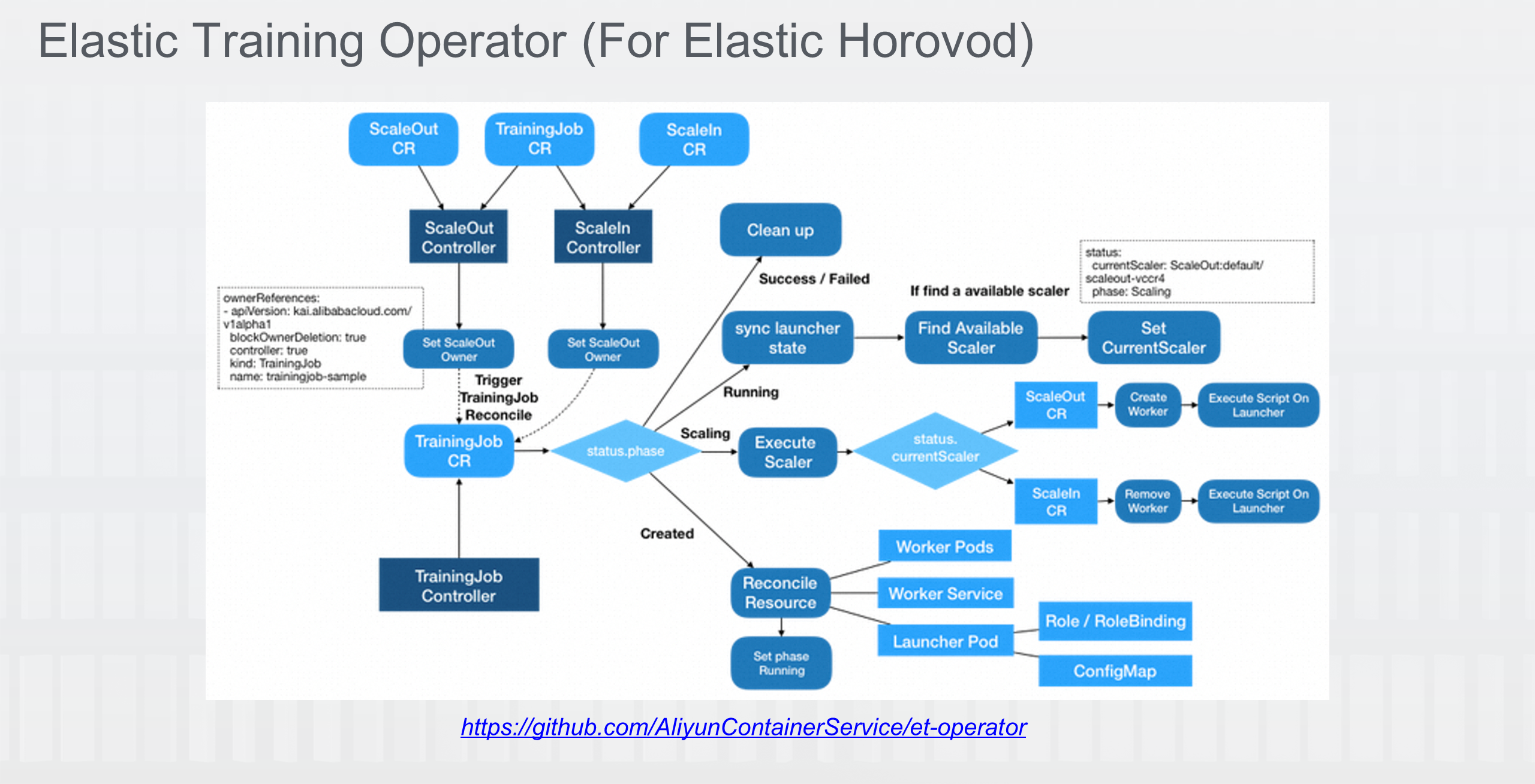

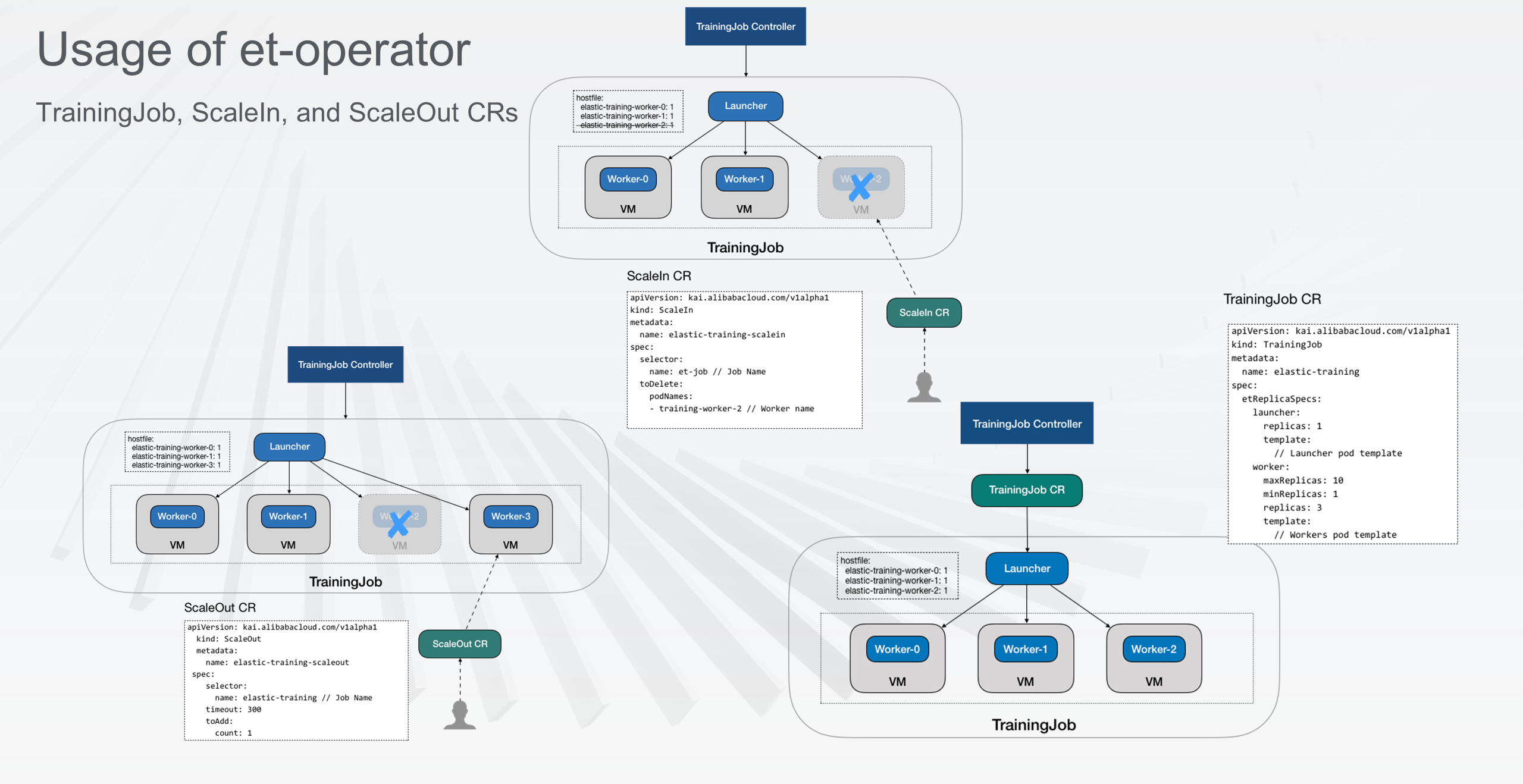

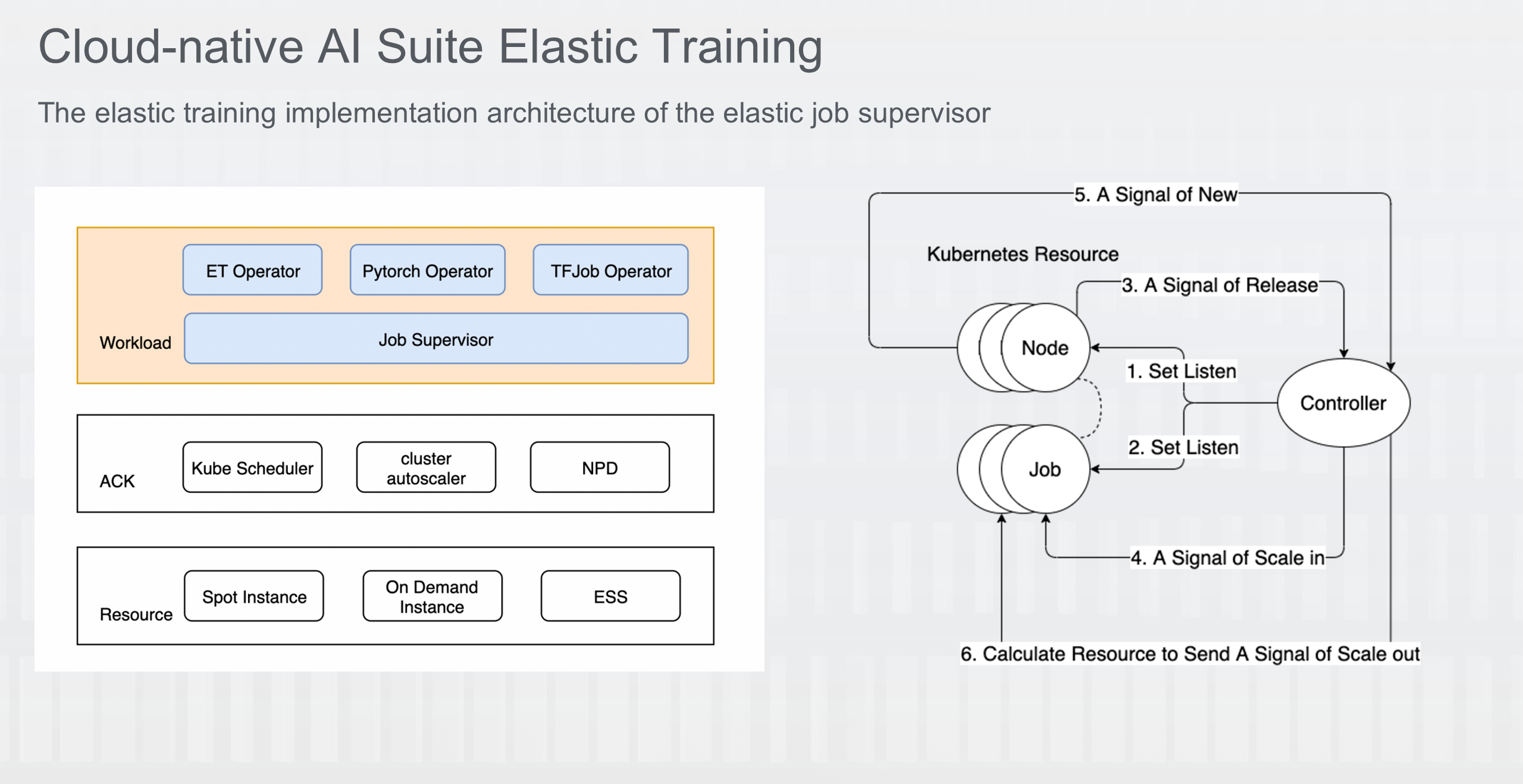

To run Elastic Horovod on Kubernetes, the Alibaba Cloud Container Service for Kubernetes (ACK) team has implemented the Elastic Training Operator with three CRs. TrainingJob hosts the tasks that run Elastic Horovod, while ScaleIn and ScaleOut describe scale-in and scale-out actions respectively. Together, these three CRs cover the entire Elastic Horovod process.

You submit an Elastic Horovod task by creating a TrainingJob, and you trigger scale-in and scale-out of that task by creating ScaleIn and ScaleOut resources.

Building on the preceding elastic training frameworks on Kubernetes (DLRover, Elastic PyTorch, and Elastic Horovod), the ACK cloud-native AI suite proposes a cloud-native AI elastic training solution for spot scenarios.

As models continue to grow and AI training costs continue to rise, saving cost is becoming a key goal across industries. For cost-sensitive customers who train AI models on ACK, the ACK cloud-native AI suite promotes a cloud-native elastic training solution that uses an elastic node pool of spot instances as the underlying training resource.

The overall solution aims at the following targets:

1) Run more types of AI training tasks, across more training scenarios, elastically on lower-cost preemptible instances in the cluster wherever possible.

2) Let these training tasks dynamically occupy idle resources in the cluster according to customer needs, improving resource utilization.

3) Keep the impact of elastic training on the accuracy of the customer's AI training task within an acceptable range, so that final performance is not affected.

4) Prevent resource reclamation or other causes from interrupting the whole training task and ultimately losing the training results.

In ACK, the cloud-native AI suite supports Elastic Horovod, DLRover (TensorFlow PS), and Elastic PyTorch. It supports AI training tasks in NLP, CV, and search and promotion scenarios, covering most AI training scenarios currently on the market.

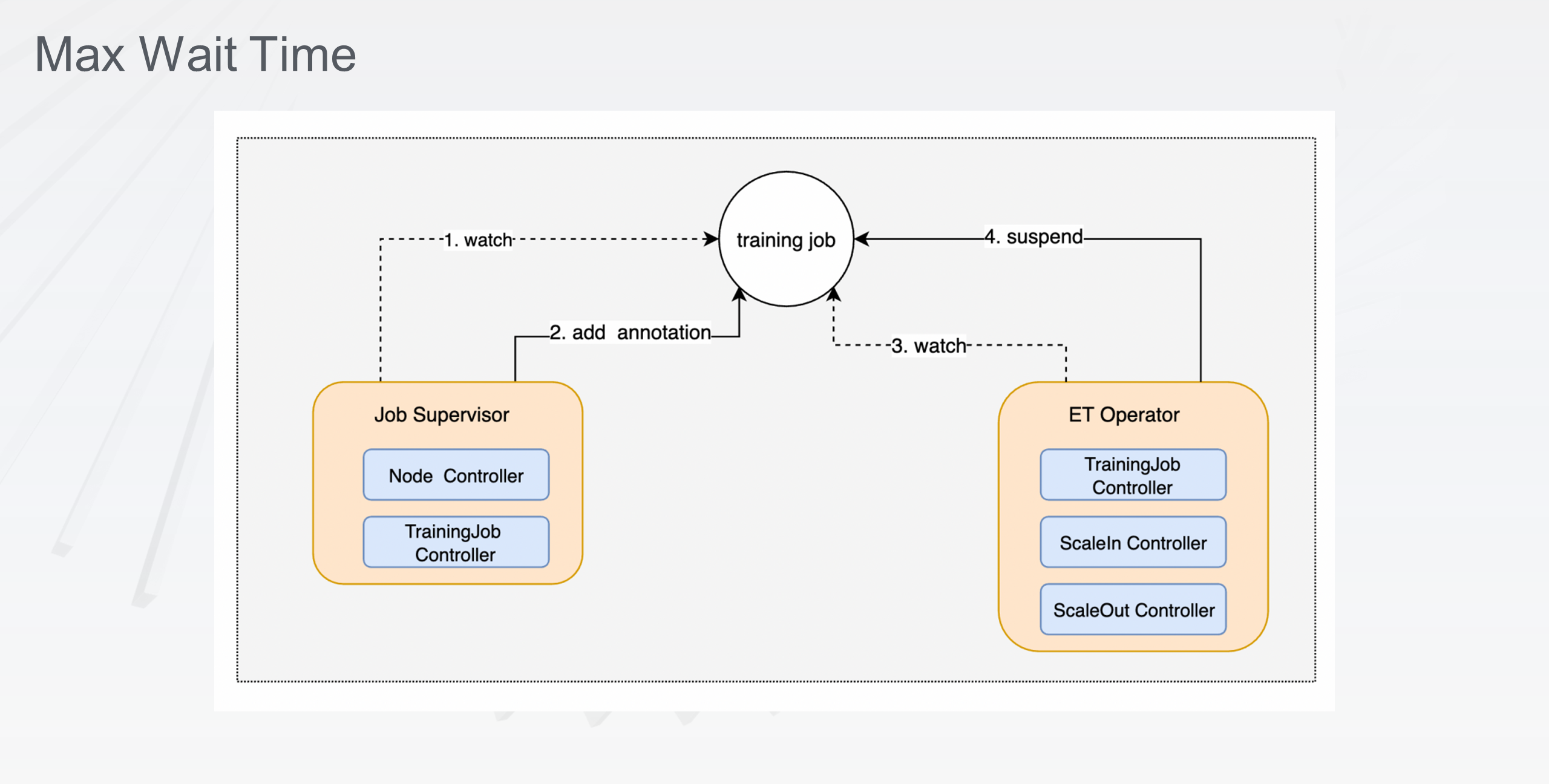

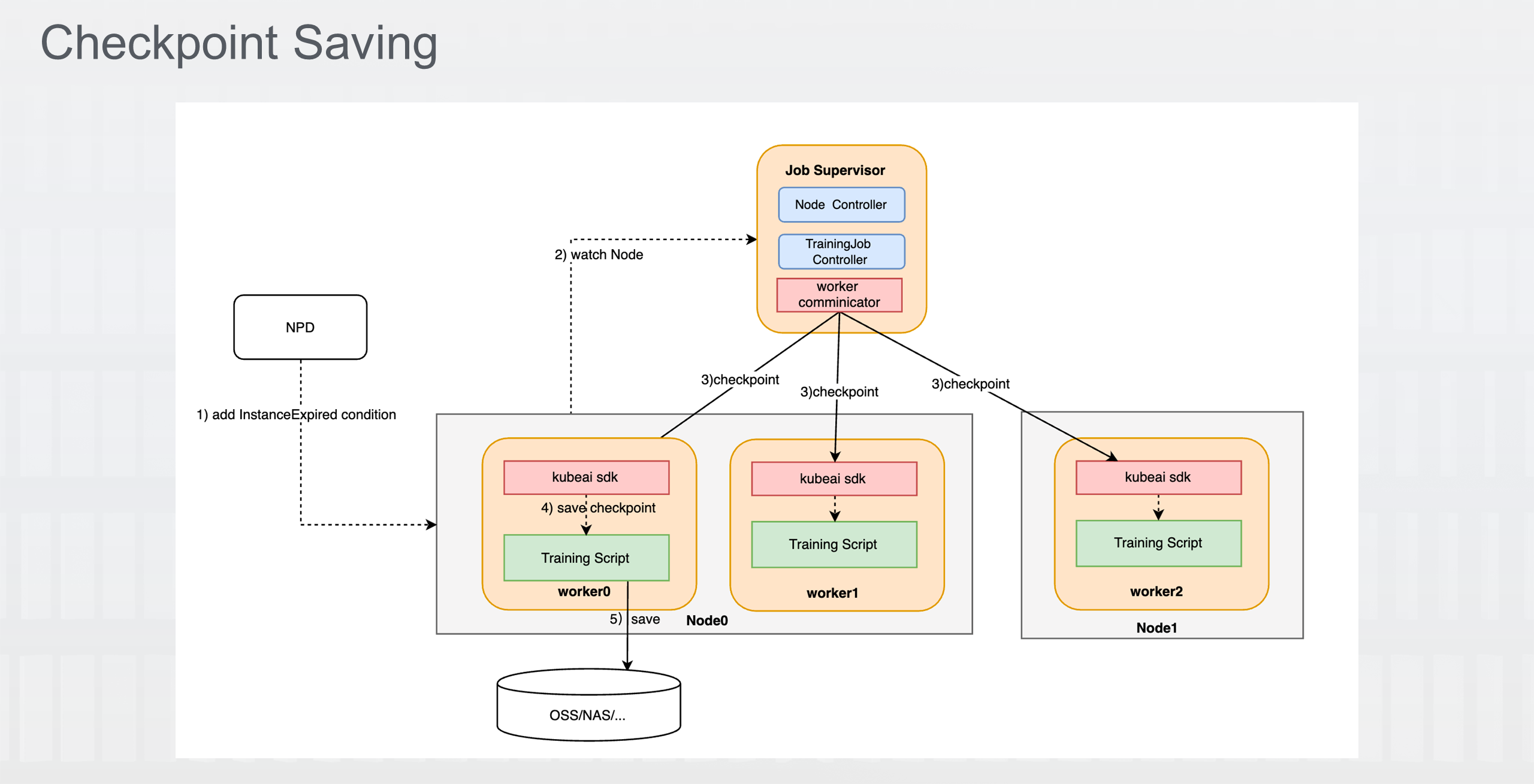

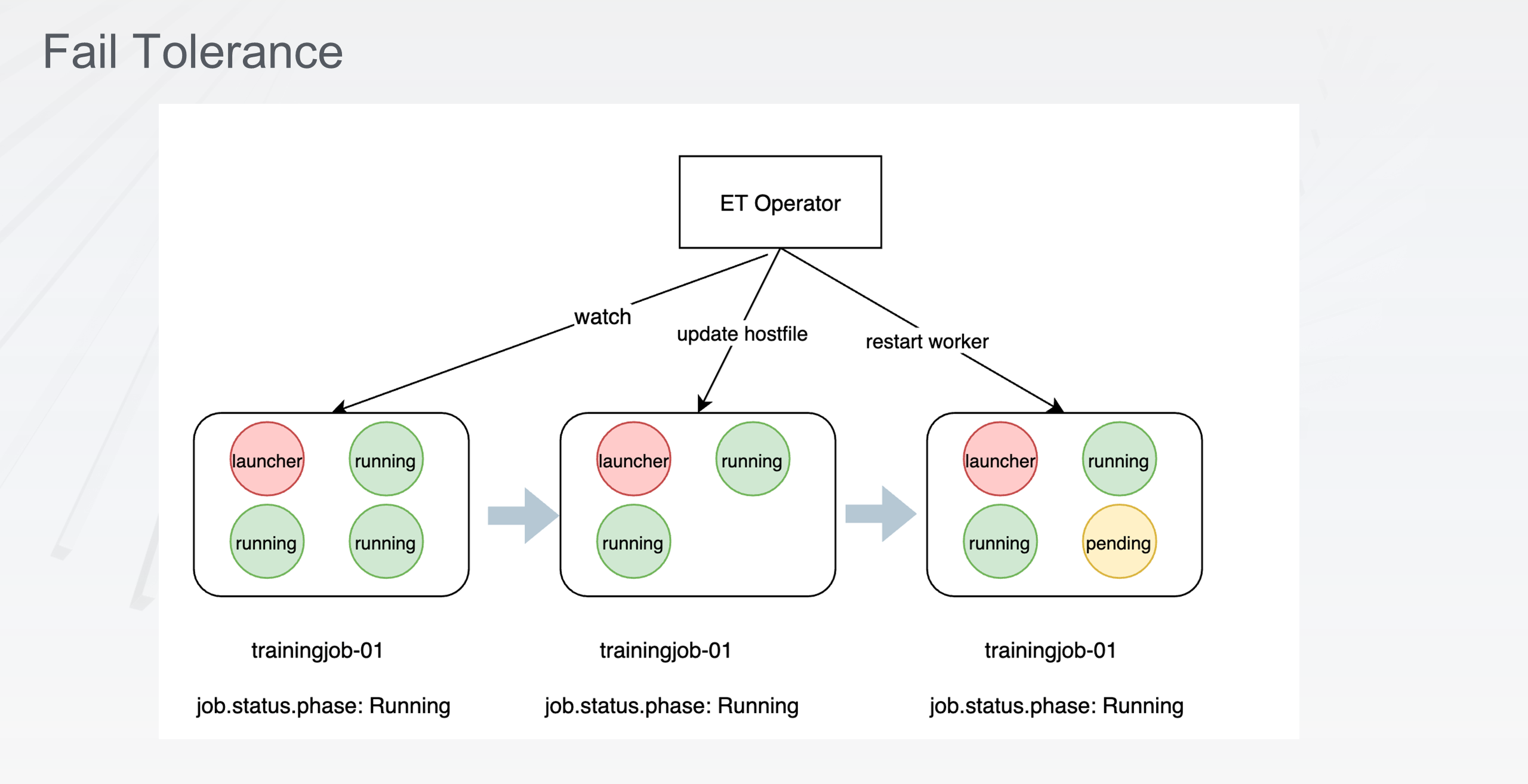

The ACK cloud-native AI suite provides an elastic training control component, elastic job supervisor, which currently controls elastic training across the different spot scenarios. It provides the following elastic training capabilities:

Max wait time: If the resource requests of a training task cannot be met within the maximum waiting time, the task stops waiting for resources. This avoids the waste that occurs when only some of the workers obtain resources and hold them while the task cannot start.
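A max-wait policy of this kind can be sketched as a polling loop with a deadline. The function below is a hypothetical illustration, not the elastic job supervisor's implementation: `poll_available` stands in for whatever query reports how many workers could currently be scheduled, and the clock and sleep functions are injectable so the logic can be exercised without real waiting.

```python
import time


def wait_for_resources(required, poll_available, max_wait_s,
                       poll_interval_s=1.0,
                       clock=time.monotonic, sleep=time.sleep):
    """Return True once `required` workers fit, False if the wait times out."""
    deadline = clock() + max_wait_s
    while True:
        if poll_available() >= required:
            return True
        if clock() >= deadline:
            # Give up so that partially granted resources can be released.
            return False
        sleep(poll_interval_s)


# Example with a fake clock: capacity never reaches 4, so the wait times out.
fake_now = [0.0]
timed_out = not wait_for_resources(
    required=4,
    poll_available=lambda: 2,
    max_wait_s=3,
    clock=lambda: fake_now[0],
    sleep=lambda s: fake_now.__setitem__(0, fake_now[0] + s),
)
```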

Checkpoint saving: An instance reclaim notification mechanism lets the training task automatically save a checkpoint when it receives a preemptible instance reclaim notification, so that training results are not lost.
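The save-on-notice pattern can be sketched as a training loop that checks for a reclaim notice after each step and resumes from the last checkpoint on restart. Everything here is an illustrative assumption: the notice is modeled as a callable, the "training step" is a placeholder update, and the checkpoint is a small JSON file rather than real model state.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    # Write to a temporary file first, then rename, so a crash mid-write
    # never leaves a truncated checkpoint behind.
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    if not os.path.exists(path):
        return {"step": 0, "w": 0.0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, reclaim_notice):
    """Train until done; on a reclaim notice, checkpoint and return False."""
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        state["w"] += 0.5            # placeholder for a real training step
        state["step"] += 1
        if reclaim_notice(state["step"]):
            save_checkpoint(path, state)
            return False
    save_checkpoint(path, state)
    return True


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
finished_first = train(ckpt, 10, reclaim_notice=lambda step: step == 4)
finished_second = train(ckpt, 10, reclaim_notice=lambda step: False)
final_state = load_checkpoint(ckpt)
```

The second call picks up at step 4 instead of starting over, which is the behavior that prevents a reclaimed instance from costing the whole run.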

Fault tolerance: When some instances of a submitted distributed elastic training task are reclaimed, the task continues to run on the remaining workers without being interrupted.

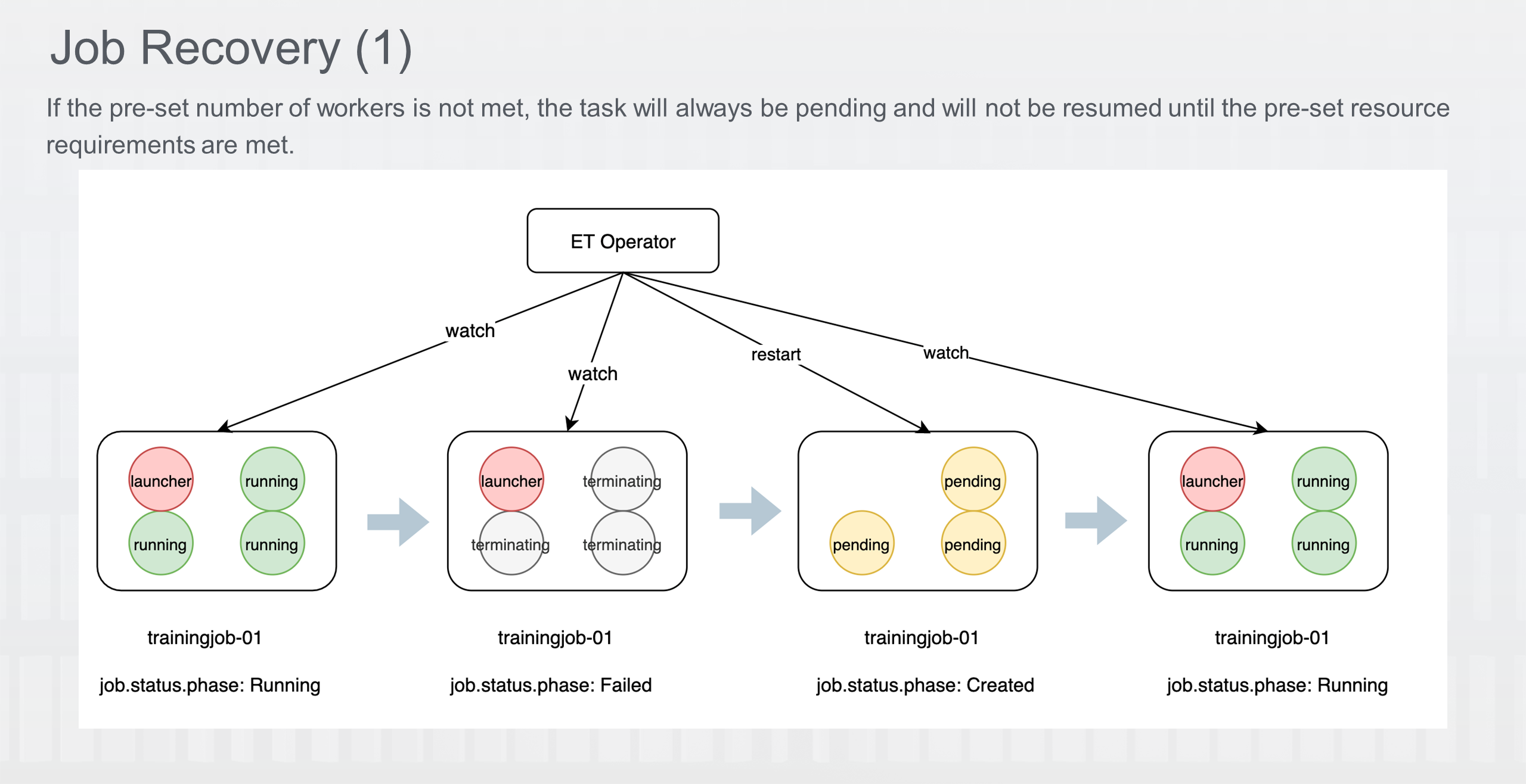

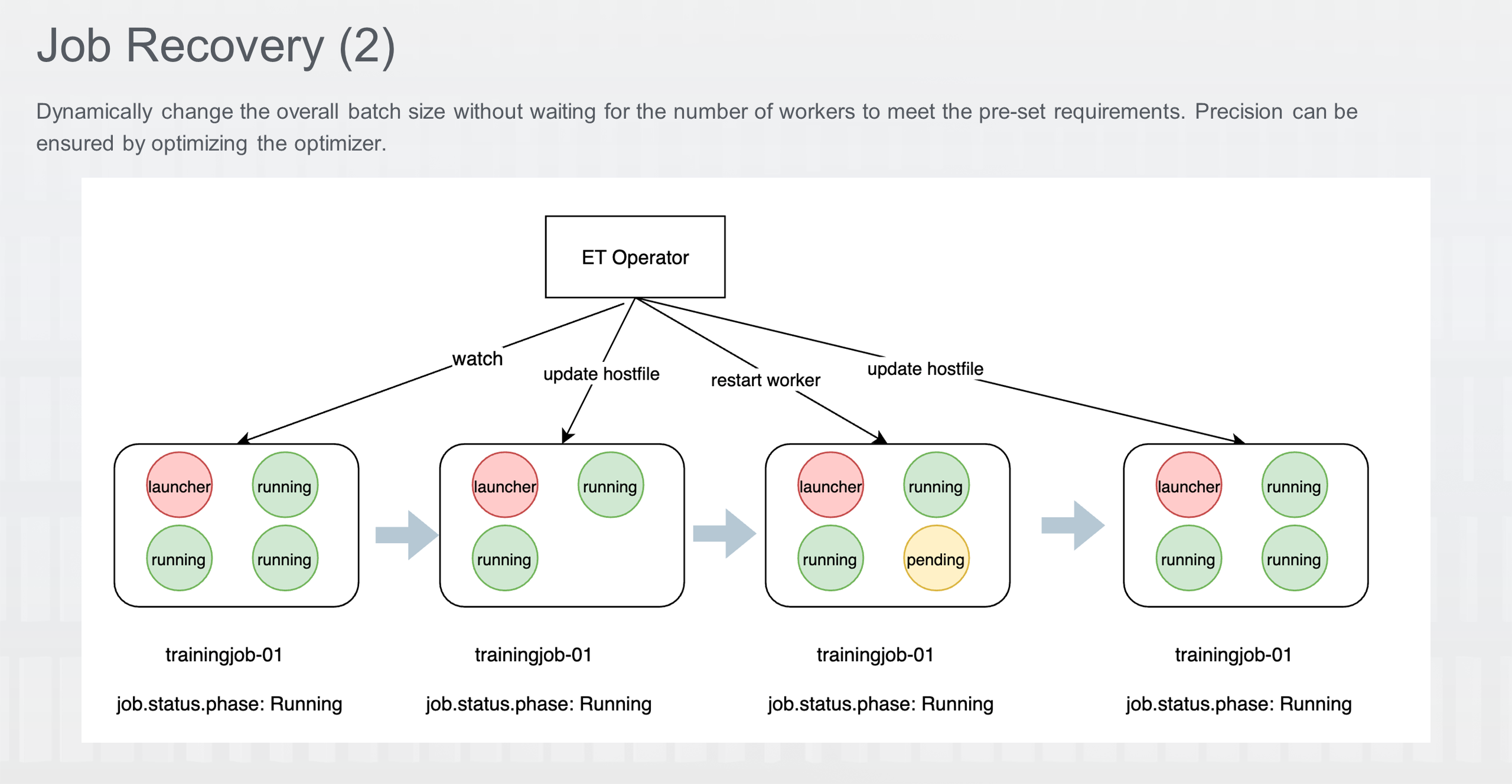

Job recovery: When training resources become available in the cluster again, tasks that were suspended because of insufficient resources can be started again to continue training, and distributed training tasks that were scaled in can be automatically scaled back out to their preset number of replicas. There are two strategies:

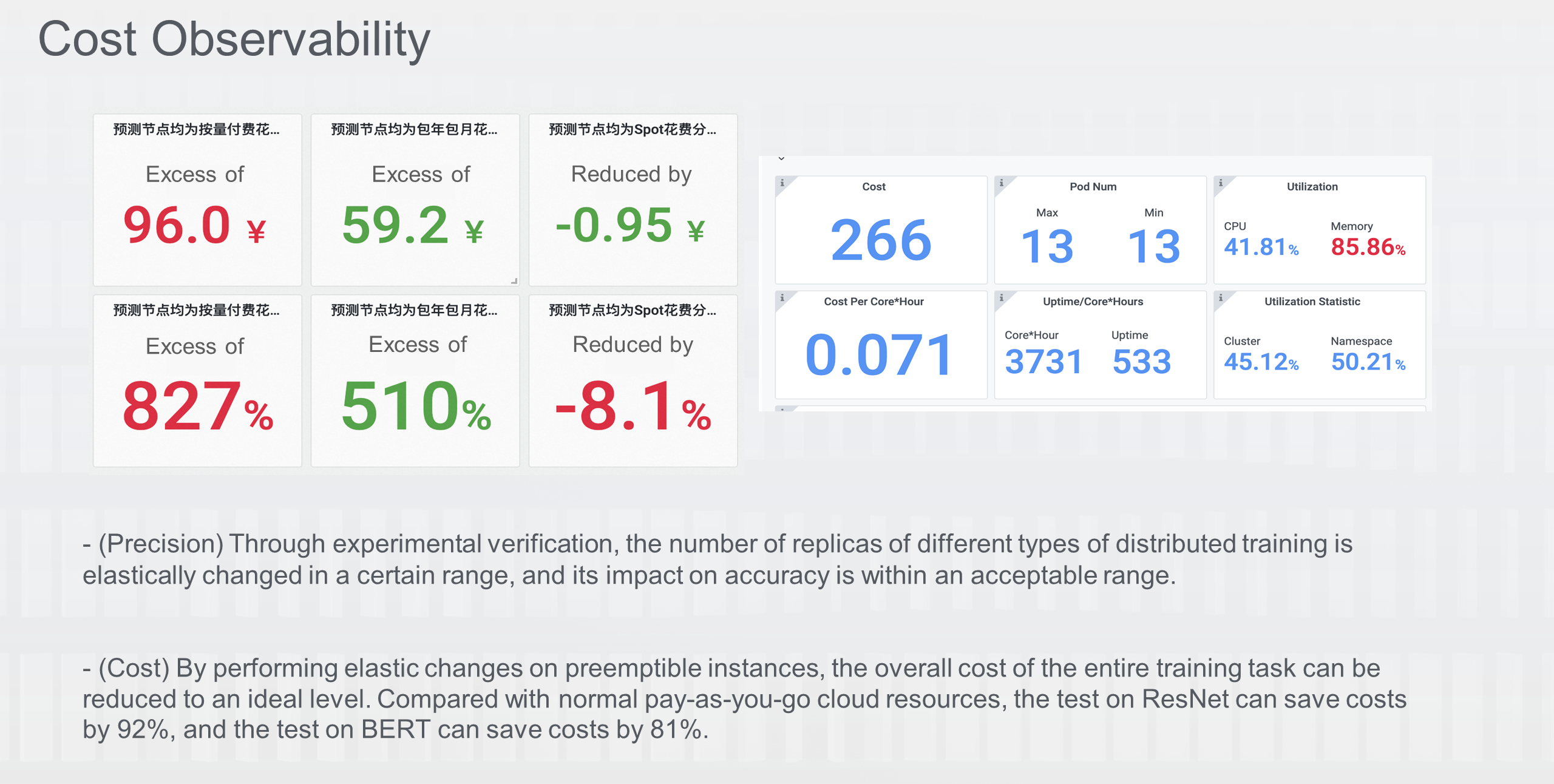

Cost observability: When you use preemptible instances for training, you can use the FinOps feature of ACK to monitor and calculate the overall training cost and show the cost savings of the elastic training based on spot instances.

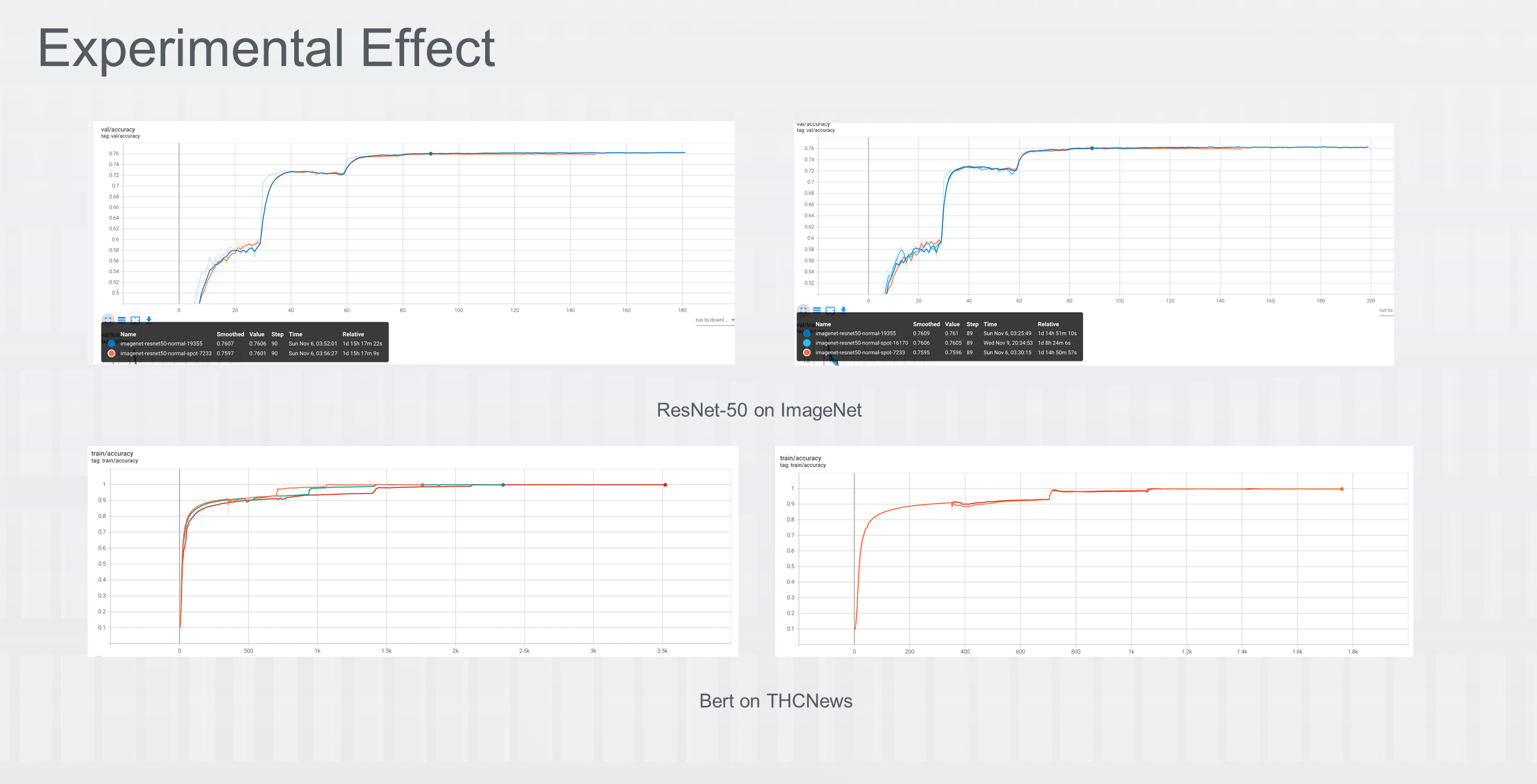

Experiments show that the number of replicas in different types of distributed training can be adjusted elastically within a certain range. With the relevant optimizations, the impact on precision remains within an acceptable range. In terms of cost, dynamically changing the number of workers on spot instances significantly reduces the overall cost of a training task. In tests on ResNet, the cost savings reached 92%, and for BERT they reached 81%, compared with normal pay-as-you-go cloud resources.
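For readers who want to estimate savings for their own jobs, the comparison reduces to simple arithmetic over price and worker-hours. The helper below is a sketch; the prices and hours in the example are hypothetical and are not the figures behind the ResNet or BERT results above.

```python
def spot_savings(payg_price, payg_worker_hours, spot_price, spot_worker_hours):
    """Fraction of cost saved by training on spot instead of pay-as-you-go."""
    payg_cost = payg_price * payg_worker_hours
    spot_cost = spot_price * spot_worker_hours
    return 1.0 - spot_cost / payg_cost


# Hypothetical inputs: the elastic spot run needs more worker-hours because
# of reclaims and rescaling, but each worker-hour is much cheaper.
saving = spot_savings(
    payg_price=1.00, payg_worker_hours=100,
    spot_price=0.20, spot_worker_hours=120,
)
print(f"{saving:.0%}")
```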

In the popular LLM scenarios, the ACK cloud-native AI suite is actively exploring elastic training solutions based on LLM training frameworks, such as DeepSpeed. The aim is to reduce costs, improve training success rates, and enhance resource utilization for LLM training.
