By Wang Qingcan (Li Fan) and Zhang Kai

With years of experience in supporting Kubernetes products and customers, the Alibaba Cloud Container Service for Kubernetes Team has significantly optimized and extended Kube-scheduler to stably and efficiently schedule various complex workloads in different scenarios. This series of articles, entitled “The Burgeoning Kubernetes Scheduling System,” summarizes our experience, technical thinking, and specific implementation methods for Kubernetes users and developers. We hope these articles help you better understand the powerful capabilities and future trends of the Kubernetes scheduling system.

Kubernetes has now become the de facto standard platform for managing container clusters. It automates deployment, O&M, and resource scheduling throughout the lifecycle of containerized applications. After more than three years of rapid development, Kubernetes has made great progress in stability, scalability, and scale. In particular, the core components of the Kubernetes control plane are maturing. As the scheduler that determines whether a container can run in a cluster, Kube-scheduler has been providing stable performance for a long time and can meet the requirements of most pod scheduling scenarios. As a result, developers barely think about Kube-scheduler anymore.

As Kubernetes is widely used on public clouds and in enterprise IT systems, more developers are trying to use Kubernetes to run and manage workloads outside of web applications and microservices. Typical scenarios include training jobs for machine learning and deep learning, high-performance computing jobs, genetic computing workflows, and conventional big data processing jobs. Kubernetes clusters can manage a wide range of resources, including graphics processing units (GPUs), Tensor processing units (TPUs), field-programmable gate arrays (FPGAs), and Remote Direct Memory Access (RDMA) high-performance networks. Kubernetes clusters can also manage various custom accelerators for jobs in various fields, such as artificial intelligence (AI) chips, neural processing units (NPUs), and video codecs. Developers want to use various heterogeneous devices in Kubernetes clusters in a simple way, just as they use the CPU and memory.

Generally, the trend in cloud-native technology is to build a container service platform based on Kubernetes, uniformly manage all kinds of new computing resources, elastically run various types of applications, and deliver services on-demand to a variety of runtime environments, including public clouds, data centers, edge nodes, and terminals.

First, let's take a look at some solutions that the Kubernetes community has used to improve the scalability of schedulers.

Kube-scheduler was the first to face the challenge of managing and scheduling heterogeneous resources and more types of complex workloads in a unified manner. The Kubernetes community has been constantly discussing how to improve the scalability of schedulers. The conclusion provided by sig-scheduling is that, if more features are added to a scheduler, a larger amount of code will be defined in the scheduler and the logic of the scheduler will become more complex. As a result, maintaining the scheduler, and finding and handling bugs become more difficult. In addition, users that use custom scheduling are hard-pressed to catch up with every feature update of the scheduler.

Alibaba Cloud users face the same challenges. The native Kubernetes scheduler runs a fixed scheduling loop that handles one pod at a time. As a result, it cannot meet user requirements in different scenarios in a timely and simple manner. To address this problem, we extend our scheduling policies for specific scenarios based on the native Kube-scheduler.

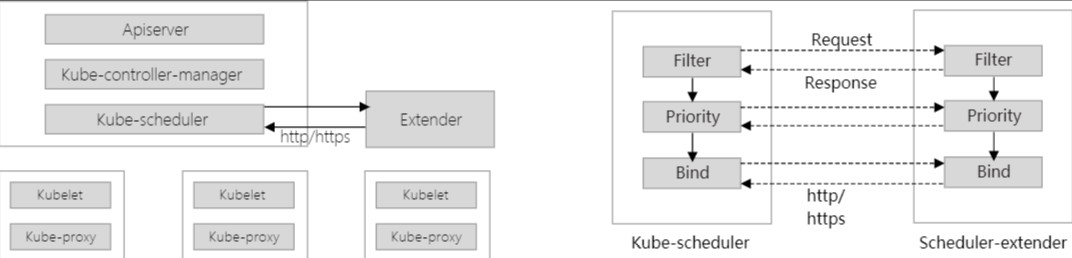

Initially, we extended Kube-scheduler by using a scheduler extender or multiple schedulers. The following sections describe and compare the two methods.

The initial solution proposed by the community was to use an extender to extend the scheduler. An extender is an external service that can hook into the Filter, Preempt, Prioritize, and Bind phases. When the scheduler enters one of these phases, it calls the webhook registered by the extender to run the extension logic. This way, the scheduling decision made in that phase can be changed.

Let's use the Filter phase as an example. The filtering process varies with the execution result:

As you can see, the extender has the following problems:

Based on the preceding description, extending a scheduler using an extender is a flexible extension solution for small clusters with low scheduling efficiency requirements. However, for large clusters in normal production environments, extenders cannot support high throughput, which results in poor performance.
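To make the webhook mechanism more concrete, the following is a minimal sketch of what a Filter webhook could look like. The `FilterArgs` and `FilterResult` types, the `gpuNodes` allow list, and the node names are hypothetical simplifications for illustration, not the real Kubernetes extender API types. The point is that every filtering decision costs an HTTP round trip plus JSON encoding and decoding.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// FilterArgs and FilterResult are simplified, hypothetical stand-ins for the
// JSON bodies a scheduler and an extender exchange in the Filter phase.
type FilterArgs struct {
	PodName   string   `json:"podName"`
	NodeNames []string `json:"nodeNames"`
}

type FilterResult struct {
	NodeNames   []string          `json:"nodeNames"`
	FailedNodes map[string]string `json:"failedNodes"`
}

// gpuNodes is a hypothetical allow list used as the custom filtering policy.
var gpuNodes = map[string]bool{"node-a": true, "node-c": true}

// filterNodes keeps the candidate nodes that satisfy the custom policy and
// records a reason for every node it rejects.
func filterNodes(args FilterArgs) FilterResult {
	res := FilterResult{NodeNames: []string{}, FailedNodes: map[string]string{}}
	for _, n := range args.NodeNames {
		if gpuNodes[n] {
			res.NodeNames = append(res.NodeNames, n)
		} else {
			res.FailedNodes[n] = "node has no GPU"
		}
	}
	return res
}

// filterHandler is the webhook: it decodes the request, applies the policy,
// and encodes the result back to the caller.
func filterHandler(w http.ResponseWriter, r *http.Request) {
	var args FilterArgs
	if err := json.NewDecoder(r.Body).Decode(&args); err != nil {
		http.Error(w, err.Error(), http.StatusBadRequest)
		return
	}
	w.Header().Set("Content-Type", "application/json")
	json.NewEncoder(w).Encode(filterNodes(args))
}

func main() {
	// A local test server plays the role of the deployed extender service.
	srv := httptest.NewServer(http.HandlerFunc(filterHandler))
	defer srv.Close()

	body, _ := json.Marshal(FilterArgs{
		PodName:   "train-job",
		NodeNames: []string{"node-a", "node-b", "node-c"},
	})
	resp, err := http.Post(srv.URL, "application/json", bytes.NewReader(body))
	if err != nil {
		panic(err)
	}
	defer resp.Body.Close()

	var res FilterResult
	if err := json.NewDecoder(resp.Body).Decode(&res); err != nil {
		panic(err)
	}
	fmt.Println("feasible:", res.NodeNames, "rejected:", res.FailedNodes)
}
```

Even in this toy version, the serialization and network hop sit on the scheduling path for every pod, which is consistent with the throughput concern raised above.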

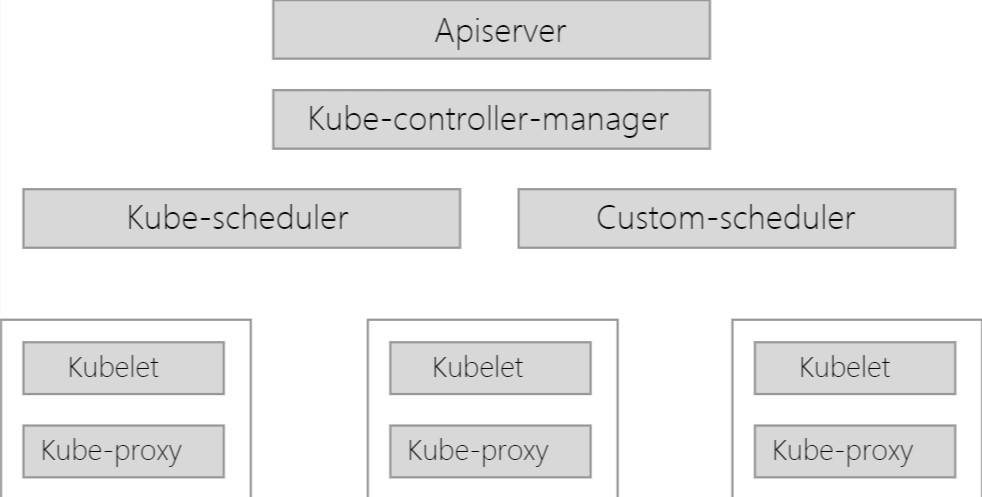

In Kubernetes clusters, a scheduler is similar to a special controller. It watches pod and node information, selects the best node for each pod, and updates the pod's spec.nodeName field to synchronize the scheduling result to the node. Therefore, to meet special scheduling requirements, some developers have written a custom scheduler that performs the preceding process and deployed it alongside the default scheduler.

A custom scheduler has the following problems:

In conclusion, a scheduler extender requires low maintenance costs but has poor performance, while a custom scheduler has high performance but incurs excessively high development and maintenance costs. This presents developers with a dilemma. At this time, Kubernetes Scheduling Framework V2 emerged, giving developers the best of both worlds.

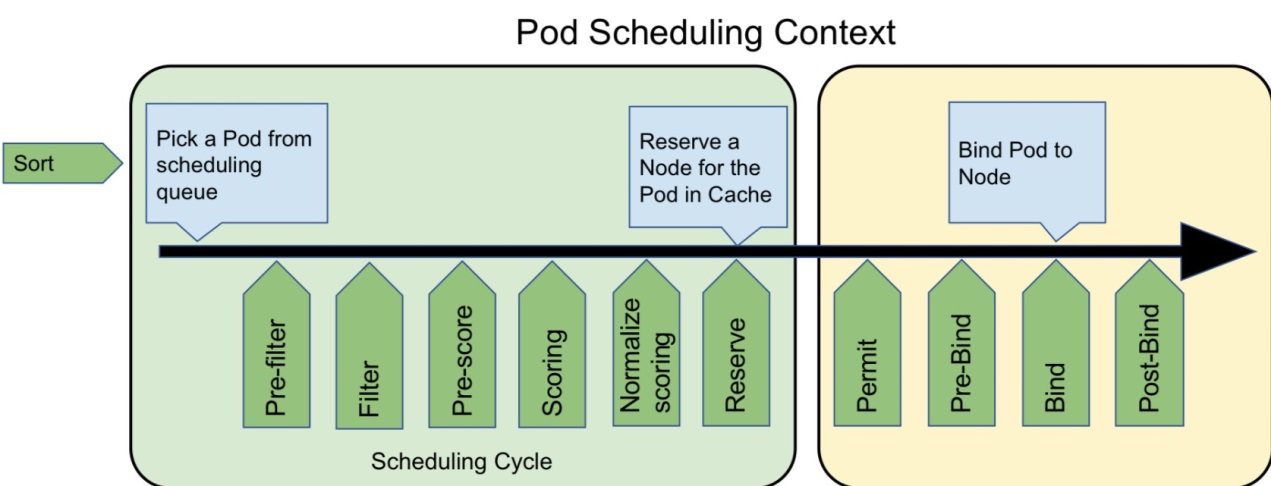

The community gradually noticed the difficulties faced by developers. To resolve the preceding problems and make Kube-scheduler more scalable and concise, the community built the Kubernetes Scheduling Framework after the release of Kubernetes 1.16.

The Kubernetes Scheduling Framework defines a variety of APIs for extension points based on the original scheduling process. Developers can implement plug-ins by implementing the APIs defined for the extension points and registering the plug-ins with the extension points. When the Scheduling Framework runs at the corresponding extension point during scheduling, the Scheduling Framework calls a registered plug-in to change a scheduling decision. In this way, your scheduling logic is integrated into the Scheduling Framework.

The scheduling process of the Scheduling Framework is divided into two phases: the scheduling cycle and the binding cycle. Scheduling cycles are executed synchronously and only one scheduling cycle runs at a time, which is thread-safe. Binding cycles are executed asynchronously. Multiple binding cycles may run at the same time, which is thread-unsafe.
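The split between the two cycles can be sketched roughly as follows. This is an illustrative model only, not the framework's code: `scheduleAll` is a hypothetical function, the round-robin node choice is a placeholder for real scheduling logic, and the mutex stands in for whatever synchronization a plug-in needs when binding cycles overlap.

```go
package main

import (
	"fmt"
	"sync"
)

// scheduleAll models the two cycles: node selection runs serially in the
// loop body, while each bind runs in its own goroutine.
func scheduleAll(pods, nodes []string) map[string]string {
	var (
		mu    sync.Mutex
		wg    sync.WaitGroup
		bound = make(map[string]string)
	)
	for i, pod := range pods {
		// Scheduling cycle: only one runs at a time, so no locking is
		// needed here. Round-robin is a placeholder decision.
		node := nodes[i%len(nodes)]

		// Binding cycle: runs asynchronously and may overlap with other
		// binding cycles, so shared state must be protected.
		wg.Add(1)
		go func(pod, node string) {
			defer wg.Done()
			mu.Lock()
			defer mu.Unlock()
			bound[pod] = node
		}(pod, node)
	}
	wg.Wait()
	return bound
}

func main() {
	result := scheduleAll([]string{"p1", "p2", "p3"}, []string{"n1", "n2"})
	fmt.Println(result)
}
```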

A scheduling cycle is the core of a scheduling process. Its main task is to make scheduling decisions and select a single node for the pod.

```go
// QueueSortPlugin is an interface that must be implemented by "QueueSort" plugins.
// These plugins are used to sort pods in the scheduling queue. Only one queue sort
// plugin may be enabled at a time.
type QueueSortPlugin interface {
	Plugin
	// Less are used to sort pods in the scheduling queue.
	Less(*PodInfo, *PodInfo) bool
}
```

The priority queue of the scheduler is implemented using a heap. You can define a comparison function for the heap in the QueueSort plug-in to determine the sorting in the priority queue. Note: Only one comparison function for the heap exists at a given time. Therefore, only one QueueSort plug-in may be enabled at a time. If you enable two QueueSort plug-ins, the scheduler reports an error and exits upon startup. The following code shows the default comparison function for your reference:

```go
// Less is the function used by the activeQ heap algorithm to sort pods.
// It sorts pods based on their priority. When priorities are equal, it uses
// PodQueueInfo.timestamp.
func (pl *PrioritySort) Less(pInfo1, pInfo2 *framework.QueuedPodInfo) bool {
	p1 := pod.GetPodPriority(pInfo1.Pod)
	p2 := pod.GetPodPriority(pInfo2.Pod)
	return (p1 > p2) || (p1 == p2 && pInfo1.Timestamp.Before(pInfo2.Timestamp))
}
```

The PreFilter plug-in is called when a scheduling cycle starts. The scheduling cycle enters the next phase only when all the PreFilter plug-ins return success. Otherwise, the pod is rejected, indicating that the scheduling process fails. The PreFilter plug-in can preprocess pod information before the scheduling process starts. In addition, the PreFilter plug-in can check certain preconditions and pod requirements that the cluster must meet.

The Filter plug-in corresponds to the predicate logic in Scheduler V1. It is used to filter out nodes that cannot run the pod. To improve efficiency, you can configure the execution order of Filter plug-ins so that policies that eliminate a large number of nodes run first, which minimizes the number of policies executed afterward. For example, you can run the NodeSelector filtering policy first to filter out a large number of nodes. The scheduler evaluates nodes concurrently, so Filter plug-ins are called multiple times in a scheduling cycle.
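The effect of filter ordering can be illustrated with a small sketch. The `Node`, `Pod`, and `Filter` types and the `feasibleNodes` helper are hypothetical and much simpler than the framework's real interfaces, and nodes are evaluated sequentially here for readability. Because evaluation stops at the first failing filter, placing the selective node-selector check first means the later CPU check runs on fewer nodes.

```go
package main

import "fmt"

// Node and Pod are simplified, hypothetical models of cluster objects.
type Node struct {
	Name    string
	Labels  map[string]string
	FreeCPU int // free CPU in millicores
}

type Pod struct {
	NodeSelector map[string]string
	CPURequest   int // requested CPU in millicores
}

// Filter is a named predicate that reports whether a node can run a pod.
type Filter struct {
	Name string
	Fn   func(Pod, Node) bool
}

func matchesNodeSelector(p Pod, n Node) bool {
	for k, v := range p.NodeSelector {
		if n.Labels[k] != v {
			return false
		}
	}
	return true
}

func hasEnoughCPU(p Pod, n Node) bool {
	return n.FreeCPU >= p.CPURequest
}

// feasibleNodes runs the filters in order for every node and stops at the
// first failure. It also counts how often each filter was invoked.
func feasibleNodes(p Pod, nodes []Node, filters []Filter) ([]string, map[string]int) {
	calls := map[string]int{}
	var feasible []string
	for _, n := range nodes {
		ok := true
		for _, f := range filters {
			calls[f.Name]++
			if !f.Fn(p, n) {
				ok = false
				break
			}
		}
		if ok {
			feasible = append(feasible, n.Name)
		}
	}
	return feasible, calls
}

func main() {
	nodes := []Node{
		{Name: "n1", Labels: map[string]string{"gpu": "true"}, FreeCPU: 4000},
		{Name: "n2", Labels: map[string]string{}, FreeCPU: 8000},
		{Name: "n3", Labels: map[string]string{}, FreeCPU: 1000},
	}
	pod := Pod{NodeSelector: map[string]string{"gpu": "true"}, CPURequest: 2000}
	filters := []Filter{
		{Name: "NodeSelector", Fn: matchesNodeSelector},
		{Name: "CPU", Fn: hasEnoughCPU},
	}
	feasible, calls := feasibleNodes(pod, nodes, filters)
	fmt.Println(feasible, calls)
}
```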

The new PostFilter API will be released in Kubernetes 1.19. This API is mainly used to process the operations performed after a pod fails in the Filter phase, such as preemption and auto scaling triggering.

The PreScore plug-in was named the PostFilter plug-in in previous versions. It is used to generate information used by Score plug-ins. In this phase, you will obtain a list of nodes that have passed the Filter phase. You can also preprocess some information or generate some logs or monitoring information in this phase.

The Scoring extension point is the priority logic in Scheduler V1. After nodes are filtered using the Filter plug-ins, the scheduler selects the optimal node from the remaining nodes in this phase based on the policy defined by the Scoring extension point.

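Operations at the Scoring extension point are divided into two phases: scoring, in which each Score plug-in ranks the nodes that passed the Filter phase, and score normalization, in which the scores of each plug-in are adjusted to a common range before the scheduler combines them.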
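A rough sketch of scoring followed by normalization is shown below. It assumes a node score range of 0 to 100 and uses a hypothetical raw score (free memory in GiB) and hypothetical helper names. It is an illustration of the idea rather than the framework's implementation.

```go
package main

import (
	"fmt"
	"sort"
)

// Assumed score range for normalized node scores.
const (
	minNodeScore int64 = 0
	maxNodeScore int64 = 100
)

// normalize rescales raw scores so that the best node gets maxNodeScore and
// the others are scaled proportionally.
func normalize(raw map[string]int64) map[string]int64 {
	var highest int64
	for _, s := range raw {
		if s > highest {
			highest = s
		}
	}
	out := make(map[string]int64, len(raw))
	for n, s := range raw {
		if highest == 0 {
			out[n] = minNodeScore
			continue
		}
		out[n] = s * maxNodeScore / highest
	}
	return out
}

// pickNode returns the node with the highest score. Names are sorted first
// so that ties are broken deterministically.
func pickNode(scores map[string]int64) string {
	names := make([]string, 0, len(scores))
	for n := range scores {
		names = append(names, n)
	}
	sort.Strings(names)
	best := ""
	var bestScore int64 = -1
	for _, n := range names {
		if scores[n] > bestScore {
			best, bestScore = n, scores[n]
		}
	}
	return best
}

func main() {
	// Hypothetical raw scores: free memory in GiB on each filtered node.
	raw := map[string]int64{"n1": 8, "n2": 4, "n3": 2}
	scores := normalize(raw)
	fmt.Println(scores, "selected:", pickNode(scores))
}
```

Normalizing to a shared range matters because several Score plug-ins with different raw units would otherwise be impossible to combine fairly.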

The Reserve extension point is the assume operation in Scheduler V1. The scheduler caches scheduling results in this phase. If an error or failure occurs in any subsequent phase, the scheduler will enter the Unreserve phase to roll back the data.

The Permit extension point is a new feature introduced in the Scheduler Framework V2. Developers can define their policies to intercept a pod at the Permit extension point after the scheduler reserves resources for the pod in the Reserve phase and before the scheduler performs a Bind operation. Then, based on conditions, developers can perform approve, deny, and wait operations on pods that pass the Permit phase. Specifically, "approve" indicates that the pod is allowed to pass the Permit phase. "Deny" indicates that the pod is denied and does not pass the Permit phase, so the pod fails to be scheduled. "Wait" indicates that the pod is in the waiting state. Developers can set a timeout period for wait operations.
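The three outcomes can be sketched with a gang-style policy in which a pod waits until enough members of its group have reserved resources. All names here (`Decision`, `permit`, `waitForPermit`, `defaultWait`) are hypothetical, and the sketch treats a timed-out wait as a denial, which is an assumption about how the wait should end.

```go
package main

import (
	"fmt"
	"time"
)

// Decision models the three outcomes available at the Permit extension point.
type Decision int

const (
	Approve Decision = iota
	Deny
	Wait
)

// defaultWait is a hypothetical timeout for waiting pods.
const defaultWait = 50 * time.Millisecond

// permit approves a pod once enough members of its group have reserved
// resources, and otherwise asks the pod to wait for a bounded time.
func permit(reservedInGroup, minMembers int) (Decision, time.Duration) {
	if minMembers <= 0 {
		return Deny, 0
	}
	if reservedInGroup >= minMembers {
		return Approve, 0
	}
	return Wait, defaultWait
}

// waitForPermit blocks a waiting pod until it is allowed or the timeout
// expires. In this sketch a timeout turns the wait into a denial.
func waitForPermit(allow <-chan struct{}, timeout time.Duration) Decision {
	select {
	case <-allow:
		return Approve
	case <-time.After(timeout):
		return Deny
	}
}

func main() {
	decision, timeout := permit(1, 3)
	fmt.Println("first pod waits:", decision == Wait)

	// The rest of the group arrives and releases the waiting pod.
	allow := make(chan struct{})
	close(allow)
	fmt.Println("released:", waitForPermit(allow, timeout) == Approve)
}
```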

A binding cycle involves calls to the API operations provided by Kube-apiserver and is therefore time-consuming. To improve scheduling efficiency, binding cycles run asynchronously. Therefore, this phase is thread-unsafe.

The Bind extension point is the Bind operation in Scheduler V1. It calls the API operations provided by Kube-apiserver to bind a pod to the corresponding node.

Developers can execute PreBind and PostBind before and after the Bind operation, respectively. You can obtain and update data information in these two phases.

The UnReserve extension point is used to clear the caches generated in the Reserve phase and roll back the data to the initial state. UnReserve and Reserve are defined separately in the current version. In the future, we will unify them and require developers to define both Reserve and UnReserve to ensure that data can be cleared without leaving any dirty data.
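The pairing of Reserve and UnReserve can be sketched as a cache update with a rollback on failure. The `Cache` type, the `schedule` helper, and the two bind functions are hypothetical and exist only to show why the two operations need to mirror each other.

```go
package main

import "fmt"

// Cache is a hypothetical scheduler cache tracking free CPU per node.
type Cache struct {
	freeCPU map[string]int // millicores
}

// Reserve claims resources on the node in the cache before binding.
func (c *Cache) Reserve(node string, cpu int) error {
	if c.freeCPU[node] < cpu {
		return fmt.Errorf("node %s: not enough free CPU", node)
	}
	c.freeCPU[node] -= cpu
	return nil
}

// Unreserve undoes exactly what Reserve did, so no stale data is left.
func (c *Cache) Unreserve(node string, cpu int) {
	c.freeCPU[node] += cpu
}

// schedule reserves resources, attempts the bind, and rolls back the
// reservation if the bind fails.
func schedule(c *Cache, node string, cpu int, bind func() error) error {
	if err := c.Reserve(node, cpu); err != nil {
		return err
	}
	if err := bind(); err != nil {
		c.Unreserve(node, cpu)
		return err
	}
	return nil
}

// okBind and failingBind are stand-ins for a call to the API server.
func okBind() error      { return nil }
func failingBind() error { return fmt.Errorf("bind failed") }

func main() {
	c := &Cache{freeCPU: map[string]int{"n1": 4000}}
	fmt.Println(schedule(c, "n1", 2000, failingBind), c.freeCPU["n1"])
	fmt.Println(schedule(c, "n1", 2000, okBind), c.freeCPU["n1"])
}
```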

To facilitate the management of different scheduling-related plug-ins, the sig-scheduling team, which is responsible for Kube-scheduler in Kubernetes, created a project named scheduler-plugins. You can define your plug-ins based on this project. Next, let's use the QoS plug-in as an example to demonstrate how to develop your plug-ins.

The QoS plug-in is implemented mainly based on the Quality of Service (QoS) class of pods. It is used to determine the scheduling order of pods that have the same priority. The scheduling order is as follows: Guaranteed pods first, then Burstable pods, and finally BestEffort pods.

First, define an object and a constructor for your plug-in.

```go
// Sort is a plugin that implements QoS class based sorting.
type Sort struct{}

// New initializes a new plugin and returns it.
func New(_ *runtime.Unknown, _ framework.FrameworkHandle) (framework.Plugin, error) {
	return &Sort{}, nil
}
```

Then, implement the API of the extension point that the plug-in targets. QoS is a QueueSort plug-in. Therefore, you need to implement the QueueSort plug-in API. As shown in the following code, the QueueSort plug-in API defines only the Less function, so this is the only function you need to implement.

```go
// QueueSortPlugin is an interface that must be implemented by "QueueSort" plugins.
// These plugins are used to sort pods in the scheduling queue. Only one queue sort
// plugin may be enabled at a time.
type QueueSortPlugin interface {
	Plugin
	// Less are used to sort pods in the scheduling queue.
	Less(*PodInfo, *PodInfo) bool
}
```

The following code shows the function that is implemented. The default QueueSort plug-in compares the priorities of pods first and, if the priorities are equal, compares the timestamps of the pods. We redefined the Less function so that, when the priorities are equal, the order is determined by comparing QoS classes instead.

```go
// Less is the function used by the activeQ heap algorithm to sort pods.
// It sorts pods based on their priority. When priorities are equal, it uses
// the QoS class of the pods.
func (*Sort) Less(pInfo1, pInfo2 *framework.PodInfo) bool {
	p1 := pod.GetPodPriority(pInfo1.Pod)
	p2 := pod.GetPodPriority(pInfo2.Pod)
	return (p1 > p2) || (p1 == p2 && compQOS(pInfo1.Pod, pInfo2.Pod))
}

// compQOS reports whether p1 should be ordered before p2 based on QoS class:
// Guaranteed first, then Burstable, then BestEffort.
func compQOS(p1, p2 *v1.Pod) bool {
	p1QOS, p2QOS := v1qos.GetPodQOS(p1), v1qos.GetPodQOS(p2)
	if p1QOS == v1.PodQOSGuaranteed {
		return true
	} else if p1QOS == v1.PodQOSBurstable {
		return p2QOS != v1.PodQOSGuaranteed
	} else {
		return p2QOS == v1.PodQOSBestEffort
	}
}
```

Register the defined plug-in and corresponding constructor in the main function.

```go
// cmd/main.go
func main() {
	rand.Seed(time.Now().UnixNano())
	command := app.NewSchedulerCommand(
		app.WithPlugin(qos.Name, qos.New),
	)
	if err := command.Execute(); err != nil {
		os.Exit(1)
	}
}
```

Build the code:

```shell
$ make
```

Start kube-scheduler. Before startup, set the kubeconfig path in the ./manifests/qos/scheduler-config.yaml file. Then pass the kubeconfig file of the cluster and the configuration file of the plug-in on the command line:

```shell
$ bin/kube-scheduler --kubeconfig=scheduler.conf --config=./manifests/qos/scheduler-config.yaml
```

Now, you should have a good understanding of the architecture and development method of the Kubernetes Scheduling Framework based on the preceding introduction and examples.

As a new architecture for schedulers, Kubernetes Scheduling Framework has made great progress in scalability and customization. This will allow Kubernetes to gradually support more types of application loads and evolve into a single platform with one IT architecture and technology stack. To better support the migration of data computing jobs to the Kubernetes platform, we are also making efforts to integrate the following common features in the data computing field into the native Kube-scheduler using the plug-in system of the Scheduling Framework: coscheduling, gang scheduling, capacity scheduling, dominant resource fairness, and multi-queue management.

In the future, this series of articles will focus on AI, big data processing, and high-performance computing (HPC) clusters, and describe how we develop the corresponding scheduler plug-ins. Stay tuned for more!
