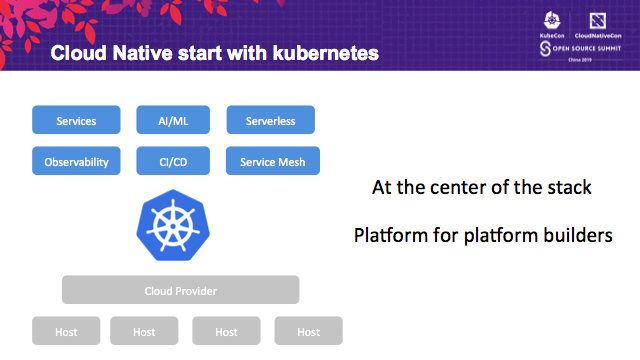

As more and more developers embrace the design principles of cloud-native applications, Kubernetes has become the center of the entire cloud-native technology stack. Cloud service capabilities are exposed to the service layer through standard Kubernetes interfaces, by way of the Cloud Provider, CRD controllers, and Operators. Developers build their own cloud-native applications and platforms on top of Kubernetes, which makes Kubernetes a platform for building platforms. This article explains how a cloud-native application can seamlessly integrate monitoring and autoscaling capabilities in Kubernetes.

This article is compiled from excerpts of a speech titled "Cloud-native Application Monitoring and Autoscaling in Kubernetes" given by Liu Zhongwei (Mo Yuan) at KubeCon. Liu is a technical expert at Alibaba Cloud Container Platform.

Alibaba Cloud Container Service for Kubernetes mainly supports the following two types of integration.

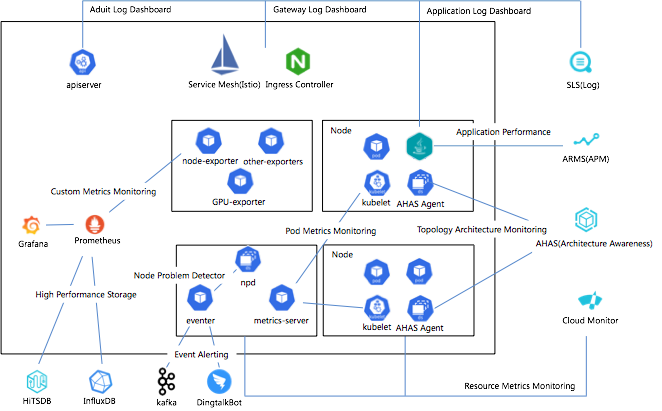

Alibaba Cloud Container Service for Kubernetes integrates with four cloud monitoring services: Simple Log Service (SLS), Application Real-Time Monitoring Service (ARMS), Application High Availability Service (AHAS), and CloudMonitor.

SLS is mainly responsible for collecting and analyzing logs. In Alibaba Cloud Container Service for Kubernetes, SLS collects three different types of logs:

In addition to the standard log collection pipeline, SLS provides upper-layer log analysis capabilities. By default, it offers audit analysis based on APIServer logs, observability dashboards for the access layer, and log analysis for the application layer. In Alibaba Cloud Container Service for Kubernetes, the log component is installed by default, and developers only need to select it when creating the cluster.
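Access-layer observability typically boils down to aggregating request logs into a few indicators such as request count, server error count, and latency. The sketch below shows that aggregation step in Go under stated assumptions: the log format `METHOD PATH STATUS LATENCY_MS` and the `summarize` and `AccessStats` names are hypothetical simplifications, not the SLS query syntax or the real Ingress log format.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// AccessStats summarizes a batch of access-layer log lines.
type AccessStats struct {
	Total        int     // number of successfully parsed requests
	ServerErrors int     // requests that returned a 5xx status code
	AvgLatencyMs float64 // mean request latency in milliseconds
}

// summarize parses lines in a hypothetical simplified format
// "METHOD PATH STATUS LATENCY_MS" and silently skips lines it cannot parse.
func summarize(lines []string) AccessStats {
	var s AccessStats
	var latencySum float64
	for _, line := range lines {
		fields := strings.Fields(line)
		if len(fields) != 4 {
			continue
		}
		status, err1 := strconv.Atoi(fields[2])
		latency, err2 := strconv.ParseFloat(fields[3], 64)
		if err1 != nil || err2 != nil {
			continue
		}
		s.Total++
		latencySum += latency
		if status >= 500 && status <= 599 {
			s.ServerErrors++
		}
	}
	if s.Total > 0 {
		s.AvgLatencyMs = latencySum / float64(s.Total)
	}
	return s
}

func main() {
	logs := []string{
		"GET /api/items 200 12",
		"GET /api/items 503 40",
		"POST /api/orders 201 20",
		"malformed line",
	}
	s := summarize(logs)
	fmt.Printf("requests=%d 5xx=%d avg=%.1fms\n", s.Total, s.ServerErrors, s.AvgLatencyMs)
}
```

Indicators like these are the kind of access-layer signal that can later be exposed to the autoscaler, as in the demo described at the end of this article.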

ARMS is mainly responsible for collecting, analyzing, and displaying application performance metrics. It currently supports Java and PHP applications, and collects JVM-level metrics such as GC counts, along with slow SQL queries and call stacks. It plays an important role in performance tuning.

AHAS is an architecture-aware monitoring service. Most workloads in a Kubernetes cluster are microservices, and the call topology between microservices is complex. Therefore, when a network link in the cluster has problems, the biggest challenge is to quickly locate, discover, and diagnose the problem. AHAS displays the cluster topology based on network traffic and its trends, which enables problem diagnosis at a higher level.
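To make the idea of "topology from traffic" concrete, the following Go sketch aggregates individual flow records into directed caller-to-callee edges. It is a generic illustration of the technique, not how AHAS is implemented; the `Flow` and `Edge` types and the `buildTopology` function are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// Flow is a hypothetical network flow record: one call from Src to Dst.
type Flow struct {
	Src, Dst string
	Bytes    int64
}

// Edge is an aggregated caller -> callee relationship in the topology.
type Edge struct {
	Src, Dst string
	Calls    int
	Bytes    int64
}

// buildTopology merges flows with the same (Src, Dst) pair into one edge and
// returns the edges sorted by call count (descending), then by name.
func buildTopology(flows []Flow) []Edge {
	type key struct{ src, dst string }
	agg := make(map[key]*Edge)
	for _, f := range flows {
		k := key{f.Src, f.Dst}
		e, ok := agg[k]
		if !ok {
			e = &Edge{Src: f.Src, Dst: f.Dst}
			agg[k] = e
		}
		e.Calls++
		e.Bytes += f.Bytes
	}
	edges := make([]Edge, 0, len(agg))
	for _, e := range agg {
		edges = append(edges, *e)
	}
	sort.Slice(edges, func(i, j int) bool {
		if edges[i].Calls != edges[j].Calls {
			return edges[i].Calls > edges[j].Calls
		}
		if edges[i].Src != edges[j].Src {
			return edges[i].Src < edges[j].Src
		}
		return edges[i].Dst < edges[j].Dst
	})
	return edges
}

func main() {
	flows := []Flow{
		{"ingress", "api", 512},
		{"api", "sub-api", 256},
		{"api", "sub-api", 128},
		{"sub-api", "db", 1024},
	}
	for _, e := range buildTopology(flows) {
		fmt.Printf("%s -> %s calls=%d bytes=%d\n", e.Src, e.Dst, e.Calls, e.Bytes)
	}
}
```

Sorting by call volume surfaces the busiest links first, which is usually where diagnosis starts when a network problem affects many services at once.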

Compatibility with and integration of open-source solutions are also part of the monitoring capabilities of Alibaba Cloud Container Service for Kubernetes. They mainly include the following two parts.

In the Kubernetes community, heapster/metrics-server is the built-in monitoring solution, and core components such as Dashboard and HPA depend on the metrics it provides. Because the release cycles of components in the Kubernetes ecosystem are not always synchronized with Kubernetes releases, some components that consume monitoring data run into problems on certain Kubernetes versions. To address this, Alibaba Cloud enhanced metrics-server to provide version compatibility.

For node diagnosis, Alibaba Cloud Container Service extends the coverage of NPD (Node Problem Detector) with file descriptor (FD) handle monitoring, NTP time synchronization checks, and inbound/outbound network connectivity checks. It has also open-sourced eventer, which ships Kubernetes event data out of the cluster to SLS, Kafka, and DingTalk, enabling ChatOps.
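As an illustration of what an FD handle check can look at, the Go sketch below reads the Linux file `/proc/sys/fs/file-nr`, whose three fields are the number of allocated file handles, the number of allocated but unused handles, and the system-wide maximum. This is a minimal sketch, not NPD's implementation; the `fdUsage` function name and the 0.9 alert threshold are hypothetical.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"strings"
)

// fdUsage parses the contents of /proc/sys/fs/file-nr ("allocated unused max")
// and returns the fraction of the system-wide maximum that is in use.
func fdUsage(content string) (float64, error) {
	fields := strings.Fields(content)
	if len(fields) != 3 {
		return 0, fmt.Errorf("unexpected file-nr format: %q", content)
	}
	var v [3]int64
	for i, f := range fields {
		n, err := strconv.ParseInt(f, 10, 64)
		if err != nil {
			return 0, err
		}
		v[i] = n
	}
	if v[2] <= 0 {
		return 0, fmt.Errorf("invalid maximum: %d", v[2])
	}
	return float64(v[0]-v[1]) / float64(v[2]), nil
}

func main() {
	// Hypothetical alert threshold, chosen for illustration only.
	const threshold = 0.9
	data, err := os.ReadFile("/proc/sys/fs/file-nr")
	if err != nil {
		fmt.Println("cannot read file-nr (not running on Linux?):", err)
		return
	}
	usage, err := fdUsage(string(data))
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Printf("fd usage: %.4f%%, over threshold: %v\n", usage*100, usage > threshold)
}
```

Keeping the parsing in a pure function separate from the file read makes the check easy to test without a real node.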

To support Prometheus, the standard third-party monitoring platform in the Kubernetes ecosystem, Alibaba Cloud Container Service provides integrated charts that developers can install with one click. In addition, it provides enhancements at the following three levels:

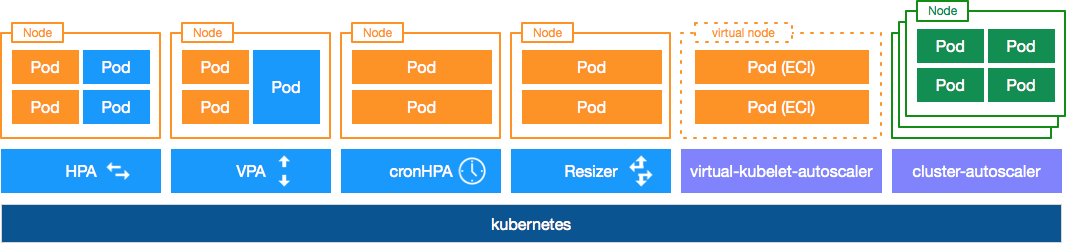

Alibaba Cloud Container Service for Kubernetes mainly includes the following two types of autoscaling components.

Scheduling-layer autoscaling components operate only on pods and do not take the underlying resources into account.

Resource-layer autoscaling components handle the relationship between pods and the underlying resources, such as whether the cluster has enough capacity to run the pods.
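The question a resource-layer component answers can be sketched as "which pending pods do not fit on the existing nodes?" The Go example below uses a hypothetical first-fit placement that considers only CPU requests in millicores; it is a deliberate simplification, not the algorithm used by the Kubernetes scheduler or by any Alibaba Cloud autoscaler, and the `placePending` name is an assumption.

```go
package main

import "fmt"

// placePending tries to fit each pending pod's CPU request (millicores) onto
// nodes with free capacity using first-fit, and returns the indices of pods
// that fit nowhere. A resource-layer autoscaler would react to such pods by
// adding capacity.
func placePending(requests []int, freeCapacity []int) (unplaced []int) {
	free := append([]int(nil), freeCapacity...) // copy so the caller's slice is untouched
	for i, req := range requests {
		placed := false
		for n := range free {
			if free[n] >= req {
				free[n] -= req
				placed = true
				break
			}
		}
		if !placed {
			unplaced = append(unplaced, i)
		}
	}
	return unplaced
}

func main() {
	pending := []int{500, 1000, 1500, 250}
	nodesFree := []int{1200, 800}
	// Prints: pods needing new capacity: [1 2]
	fmt.Println("pods needing new capacity:", placePending(pending, nodesFree))
}
```

The scheduling layer decides how many pods there should be; this kind of check decides whether the cluster can actually host them.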

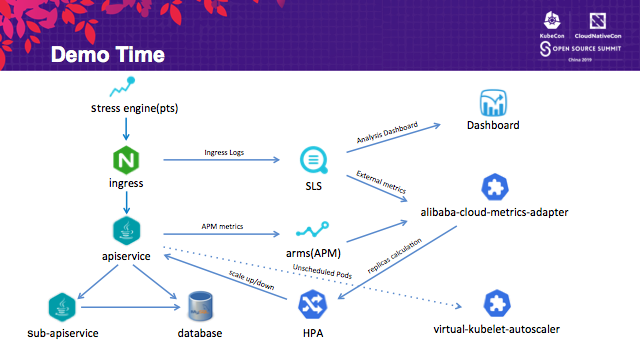

The preceding diagram shows a simple demo in which the main application is APIservice. APIservice calls the database through a sub-APIservice, and the access layer is managed through Ingress. PTS (Performance Testing Service) simulates upstream traffic, SLS collects access-layer logs, and ARMS collects application performance metrics. These metrics are then exposed as external metrics through alibaba-cloud-metrics-adapter, which triggers HPA to recalculate the number of workload replicas. When the scaled-out pods consume all the cluster resources, virtual-kubelet-autoscaler is triggered to create ECI (Elastic Container Instance) instances that host the workloads exceeding the cluster's planned capacity.
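The replica recalculation that HPA performs follows the rule documented for the Kubernetes Horizontal Pod Autoscaler: `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance (0.1 by default). The Go sketch below implements that rule plus min/max clamping. It is simplified: it ignores stabilization windows, pod readiness, and missing metrics, and the `desiredReplicas` function name is hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

// tolerance mirrors the documented default of the
// --horizontal-pod-autoscaler-tolerance flag (0.1).
const tolerance = 0.1

// desiredReplicas applies desired = ceil(current * currentMetric / targetMetric),
// skips scaling when the ratio is within tolerance of 1.0, and clamps the
// result to [minR, maxR].
func desiredReplicas(current int, currentMetric, targetMetric float64, minR, maxR int) int {
	ratio := currentMetric / targetMetric
	desired := current
	if math.Abs(ratio-1.0) > tolerance {
		desired = int(math.Ceil(float64(current) * ratio))
	}
	if desired < minR {
		desired = minR
	}
	if desired > maxR {
		desired = maxR
	}
	return desired
}

func main() {
	// An external metric (for example, per-pod QPS from access-layer logs)
	// observed at 300 against a target of 100: scale 2 -> 6 replicas.
	fmt.Println(desiredReplicas(2, 300, 100, 1, 10))
	// Ratio 1.05 is within tolerance, so the replica count stays at 4.
	fmt.Println(desiredReplicas(4, 105, 100, 1, 10))
}
```

If the resulting replicas cannot all be scheduled on existing nodes, the resource layer (virtual-kubelet-autoscaler in this demo) takes over.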

Using the monitoring and autoscaling capabilities of Alibaba Cloud Container Service for Kubernetes is simple. Developers only need to install the corresponding component chart with one click to get full access. With multi-dimensional monitoring and autoscaling capabilities, cloud-native applications achieve higher stability and robustness at the lowest cost.
