By Wang Zhuoran, nicknamed Zhuoran at Alibaba.

The term data replication refers to the process of storing replicas of data on multiple servers that are connected through a network. A data replication solution is important because it helps to achieve the following:

Data replication is relatively easy to implement when the data concerned never changes, because such data only needs to be copied to each node once. However, it is very challenging to correctly and efficiently replicate data that is constantly changing.

Alibaba Cloud Tablestore is a NoSQL multi-model database service from Alibaba Cloud. It stores huge volumes of structured data and provides fast query and analysis services. Its distributed storage and powerful index engine support petabytes of storage, tens of millions of transactions per second (TPS), and latency as low as a few milliseconds.

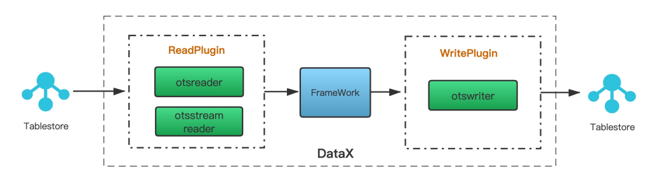

DataX is an offline data synchronization tool widely used within the Alibaba Group. As a data synchronization framework, DataX abstracts the synchronization of different data sources into a Reader plug-in that reads data from various data sources and a Writer plug-in that writes data to the destination database.
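The Reader/Writer split described above can be pictured with a minimal sketch. The interface and class names (`RecordReader`, `RecordWriter`, `SyncJob`) are hypothetical and chosen only for illustration; they are not the DataX plug-in API.

```java
import java.util.Iterator;

/** Hypothetical reader side: yields records from some data source. */
interface RecordReader<T> {
    Iterator<T> read();
}

/** Hypothetical writer side: stores one record in some destination. */
interface RecordWriter<T> {
    void write(T record);
}

final class SyncJob {
    /** Copies every record from the reader to the writer and returns the count. */
    static <T> int run(RecordReader<T> reader, RecordWriter<T> writer) {
        int copied = 0;
        Iterator<T> it = reader.read();
        while (it.hasNext()) {
            writer.write(it.next());
            copied++;
        }
        return copied;
    }
}
```

Because the framework only sees the two interfaces, any source can be paired with any destination, for example `SyncJob.<String>run(sourceList::iterator, destList::add)`.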

The following figure shows how to use DataX to replicate a table in Alibaba Cloud Tablestore. The OTSreader plug-in reads data from Tablestore and can extract incremental data within a specified extraction scope. The OTSstreamreader plug-in exports incremental data from Tablestore. The otswriter plug-in writes data to Tablestore. To replicate the tables in Tablestore, configure the Tablestore-related Reader and Writer plug-ins in DataX.

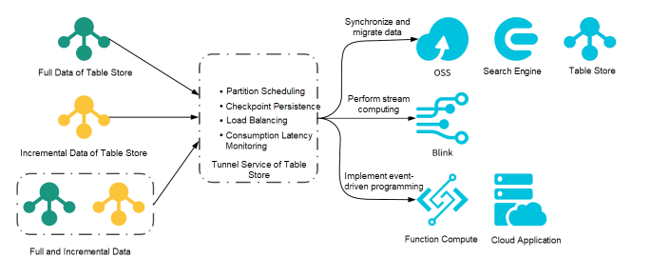

Alibaba Cloud's Tunnel Service is an integrated service for full and incremental data consumption based on the Tablestore API. It provides real-time consumption tunnels for distributed data in three modes: incremental data, full data, and full plus incremental data. After you create a tunnel for a table, you can consume the historical and incremental data in that table.

You can use a full-incremental tunnel of Tunnel Service to build an efficient and elastic data replication solution. This article describes how to use Tunnel Service for data replication in Tablestore. For the complete open-source code, refer to the tablestore-examples project on GitHub. The practice demonstrated in this article is based on the Java SDK of Tunnel Service. For more details, read the Overview and Quick Start documents.

To begin, specify the data replication scope. The sample replicates all table data written before a specified end time, which can be the current time or a future time point. The following snippet shows the configuration, where the OTSreader plug-in (ots-reader) records the configuration of the source table, and the OTSwriter plug-in (ots-writer) records the configuration of the destination table.

```json
{
  "ots-reader": {
    "endpoint": "https://zhuoran-high.cn-hangzhou.ots.aliyuncs.com",
    "instanceName": "zhuoran-high",
    "tableName": "testSrcTable",
    "accessId": "",
    "accessKey": "",
    "tunnelName": "testTunnel",
    "endTime": "2019-06-19 17:00:00"
  },
  "ots-writer": {
    "endpoint": "https://zhuoran-search.cn-hangzhou.ots.aliyuncs.com",
    "instanceName": "zhuoran-search",
    "tableName": "testDstTable",
    "accessId": "",
    "accessKey": "",
    "batchWriteCount": 100
  }
}
```

Parameters of the OTSreader plug-in are as follows:

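- endpoint: the endpoint of the Tablestore instance.
- instanceName: the name of the instance.
- tableName: the name of the table.
- accessId and accessKey: the credentials used to access the instance.
- tunnelName: the name of the tunnel.
- endTime: the end time of the backup, in the format yyyy-MM-dd HH:mm:ss.

Parameters of the otswriter plug-in (with the same parameters omitted):

- batchWriteCount: the number of rows written in each batch write.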

Note: More functions will be available in the future, such as data replication within a specified time range.
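The sample code compares `endTime` with timestamps numerically, so the `yyyy-MM-dd HH:mm:ss` string has to be converted to a number at some point. The following is a minimal sketch of one way to do that with `java.time`. The class name `EndTimeParser` is hypothetical, and the time zone is an assumption: the configuration does not state one, so the sketch takes it as an explicit argument.

```java
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

final class EndTimeParser {
    // Same pattern as the endTime value in the configuration.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    /** Converts an endTime string to epoch milliseconds in the given zone. */
    static long toEpochMillis(String endTime, ZoneId zone) {
        return LocalDateTime.parse(endTime, FORMAT)
                .atZone(zone)
                .toInstant()
                .toEpochMilli();
    }

    public static void main(String[] args) {
        // Assumption: the end time is interpreted in the Asia/Shanghai zone.
        System.out.println(toEpochMillis("2019-06-19 17:00:00", ZoneId.of("Asia/Shanghai")));
    }
}
```

Passing the zone explicitly avoids depending on the default time zone of the machine that runs the replication program.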

The main logic of data replication consists of four steps. Note that all four steps execute during the first run, while only the third and fourth steps execute when the program restarts or resumes from a checkpoint.

1. Create a Destination Table for Data Replication

In this step, call the DescribeTable operation to obtain the schema of the source table, and then create the destination table with the same schema. Set the max version offset (valid version deviation) of the destination table to a sufficiently large value; the default is 86,400 seconds. When processing a write request, the server checks the version number (timestamp) of each attribute column and accepts only versions that fall within a certain range. Existing (full) data in the source table usually carries old timestamps, so with the default offset the server would filter these writes out and the synchronized data would be lost.
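To illustrate why the offset matters, here is a simplified model of the version check. It assumes that a version is accepted when it lies within the configured offset of the current time; the class `VersionWindow`, the method name, and the exact boundary handling are assumptions for illustration, not the server's implementation.

```java
final class VersionWindow {
    /**
     * Simplified model (an assumption): a version is accepted when it falls in
     * [now - offset, now + offset). Versions and "now" are in milliseconds,
     * the offset is in seconds.
     */
    static boolean isAccepted(long versionMillis, long nowMillis, long maxOffsetSeconds) {
        long offsetMillis = Math.multiplyExact(maxOffsetSeconds, 1000L);
        return versionMillis >= nowMillis - offsetMillis
                && versionMillis < nowMillis + offsetMillis;
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        long weekOld = now - 7L * 86_400L * 1000L;
        // With the default offset of one day, week-old data is rejected.
        System.out.println(isAccepted(weekOld, now, 86_400L));
        // With a very large offset, the same data is accepted.
        System.out.println(isAccepted(weekOld, now, Long.MAX_VALUE / 1_000_000));
    }
}
```

Under this model, a row written a week ago is rejected with the default offset but accepted once the offset is enlarged, which is why the destination table is created with a very large value.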

```java
// Create clients for the source and destination instances.
sourceClient = new SyncClient(config.getReadConf().getEndpoint(), config.getReadConf().getAccessId(),
    config.getReadConf().getAccessKey(), config.getReadConf().getInstanceName());
destClient = new SyncClient(config.getWriteConf().getEndpoint(), config.getWriteConf().getAccessId(),
    config.getWriteConf().getAccessKey(), config.getWriteConf().getInstanceName());

if (destClient.listTable().getTableNames().contains(config.getWriteConf().getTableName())) {
    System.out.println("Table already exists: " + config.getWriteConf().getTableName());
} else {
    // Reuse the schema and options of the source table for the destination table.
    DescribeTableResponse describeTableResponse = sourceClient.describeTable(
        new DescribeTableRequest(config.getReadConf().getTableName()));
    describeTableResponse.getTableMeta().setTableName(config.getWriteConf().getTableName());
    // Use a very large max version offset so that rows with old timestamps are accepted.
    describeTableResponse.getTableOptions().setMaxTimeDeviation(Long.MAX_VALUE / 1000000);
    CreateTableRequest createTableRequest = new CreateTableRequest(describeTableResponse.getTableMeta(),
        describeTableResponse.getTableOptions(),
        new ReservedThroughput(describeTableResponse.getReservedThroughputDetails().getCapacityUnit()));
    destClient.createTable(createTableRequest);
    System.out.println("Create table success: " + config.getWriteConf().getTableName());
}
```

2. Create a Tunnel in the Source Table

Call the CreateTunnel operation of Tunnel Service to create a tunnel. The following snippet shows how to create a tunnel of the full-incremental type (TunnelType.BaseAndStream).

```java
sourceTunnelClient = new TunnelClient(config.getReadConf().getEndpoint(), config.getReadConf().getAccessId(),
    config.getReadConf().getAccessKey(), config.getReadConf().getInstanceName());

// Look for an existing tunnel with the configured name before creating a new one.
List<TunnelInfo> tunnelInfos = sourceTunnelClient.listTunnel(
    new ListTunnelRequest(config.getReadConf().getTableName())).getTunnelInfos();
String tunnelId = null;
TunnelInfo tunnelInfo = getTunnelInfo(config.getReadConf().getTunnelName(), tunnelInfos);
if (tunnelInfo != null) {
    tunnelId = tunnelInfo.getTunnelId();
    System.out.println(String.format("Tunnel already exists, TunnelName: %s, TunnelId: %s",
        config.getReadConf().getTunnelName(), tunnelId));
} else {
    CreateTunnelResponse createTunnelResponse = sourceTunnelClient.createTunnel(
        new CreateTunnelRequest(config.getReadConf().getTableName(),
            config.getReadConf().getTunnelName(), TunnelType.BaseAndStream));
    // Keep the new tunnel ID so that the replication step can start on the first run.
    tunnelId = createTunnelResponse.getTunnelId();
    System.out.println("Create tunnel success: " + tunnelId);
}
```

3. Start a Scheduled Task to Monitor the Backup Progress

Next, call the DescribeTunnel operation to monitor the backup progress. DescribeTunnel returns the latest consumption time point of the tunnel. Comparing this time with the configured backup end time shows the current synchronization progress. Once the consumption time point passes the end time, the program exits the backup.
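The comparison described above can be isolated from the SDK calls. The following sketch polls a consumption time point at a fixed period and stops its own executor once the end time has passed. The class `ProgressMonitor` and the `LongSupplier` that stands in for the DescribeTunnel call are hypothetical names used only for illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.LongSupplier;

final class ProgressMonitor {
    /** True once the consumption time point has passed the configured end time. */
    static boolean isFinished(long consumePointMillis, long endTimeMillis) {
        return consumePointMillis > endTimeMillis;
    }

    /**
     * Polls consumePoint every periodMillis. The returned latch is released
     * when the copy is finished, and the polling executor is shut down.
     */
    static CountDownLatch watch(LongSupplier consumePoint, long endTimeMillis, long periodMillis) {
        CountDownLatch done = new CountDownLatch(1);
        ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();
        executor.scheduleAtFixedRate(() -> {
            if (isFinished(consumePoint.getAsLong(), endTimeMillis)) {
                done.countDown();
                executor.shutdown();
            }
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
        return done;
    }
}
```

A caller can block on the returned latch, for example with `latch.await()`, and then stop the replication worker.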

```java
backgroundExecutor = Executors.newScheduledThreadPool(2, new ThreadFactory() {
    private final AtomicInteger counter = new AtomicInteger(0);

    @Override
    public Thread newThread(Runnable r) {
        return new Thread(r, "background-checker-" + counter.getAndIncrement());
    }
});
// Check the consumption progress every two seconds.
backgroundExecutor.scheduleAtFixedRate(new Runnable() {
    @Override
    public void run() {
        DescribeTunnelResponse resp = sourceTunnelClient.describeTunnel(new DescribeTunnelRequest(
            config.getReadConf().getTableName(), config.getReadConf().getTunnelName()
        ));
        // Synchronization is complete.
        if (resp.getTunnelConsumePoint().getTime() > config.getReadConf().getEndTime()) {
            System.out.println("Table copy finished, program exit!");
            // Exit the backup program.
            shutdown();
        }
    }
}, 0, 2, TimeUnit.SECONDS);
```

4. Start Data Replication

Start the automatic consumption framework of the Tunnel Service to initiate automatic data synchronization. OtsReaderProcessor parses data in the source table and writes data to the destination table. The processing logic will be described later in this article.

```java
if (tunnelId != null) {
    // OtsReaderProcessor holds the logic that copies records to the destination table.
    sourceWorkerConfig = new TunnelWorkerConfig(
        new OtsReaderProcessor(config.getReadConf(), config.getWriteConf(), destClient));
    sourceWorkerConfig.setHeartbeatIntervalInSec(15);
    sourceWorker = new TunnelWorker(tunnelId, sourceTunnelClient, sourceWorkerConfig);
    sourceWorker.connectAndWorking();
}
```

To use Tunnel Service, you need to implement the process logic and the shutdown logic for data. The core of data synchronization is to parse the data and write it to the destination table. The complete data-processing code of the sample is listed in this section. The main logic checks whether the record timestamp is within the configured time range, converts each StreamRecord into the corresponding row change for BatchWriteRow, and then writes the batches to the destination table sequentially.
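Before reading the full process method, it may help to see its batching pattern on its own. The sketch below skips records newer than the end time, flushes a batch whenever it reaches the configured size, and flushes the remainder at the end. `BatchingSketch`, `Rec`, and the `sink` callback are hypothetical stand-ins for the SDK types, and the microsecond unit of the record timestamp is an assumption inferred from the division by 1000 in the sample code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

final class BatchingSketch {
    /** Stand-in for a stream record; the timestamp is assumed to be in microseconds. */
    static final class Rec {
        final String key;
        final long timestampMicros;

        Rec(String key, long timestampMicros) {
            this.key = key;
            this.timestampMicros = timestampMicros;
        }
    }

    /** Sends records to the sink in batches of at most batchSize; returns the number of flushes. */
    static int copy(List<Rec> records, long endTimeMillis, int batchSize, Consumer<List<Rec>> sink) {
        List<Rec> batch = new ArrayList<>();
        int flushes = 0;
        for (Rec record : records) {
            // Skip records written after the configured end time.
            if (record.timestampMicros / 1000 > endTimeMillis) {
                continue;
            }
            batch.add(record);
            if (batch.size() == batchSize) {
                sink.accept(batch);
                batch = new ArrayList<>();
                flushes++;
            }
        }
        // Write the last, partially filled batch.
        if (!batch.isEmpty()) {
            sink.accept(batch);
            flushes++;
        }
        return flushes;
    }
}
```

Counting the rows actually held in the batch, rather than the records seen, keeps the flush condition accurate even when some records produce no row change.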

```java
public void process(ProcessRecordsInput input) {
    System.out.println(String.format("Begin process %d records.", input.getRecords().size()));
    BatchWriteRowRequest batchWriteRowRequest = new BatchWriteRowRequest();
    int count = 0;
    for (StreamRecord record : input.getRecords()) {
        // Skip records that were written after the configured end time.
        if (record.getSequenceInfo().getTimestamp() / 1000 > readConf.getEndTime()) {
            System.out.println(String.format("skip record timestamp %d larger than endTime %d",
                record.getSequenceInfo().getTimestamp() / 1000, readConf.getEndTime()));
            continue;
        }
        count++;
        // Convert the stream record into the matching row change.
        switch (record.getRecordType()) {
            case PUT:
                RowPutChange putChange = new RowPutChange(writeConf.getTableName(), record.getPrimaryKey());
                putChange.addColumns(getColumns(record));
                batchWriteRowRequest.addRowChange(putChange);
                break;
            case UPDATE:
                RowUpdateChange updateChange = new RowUpdateChange(writeConf.getTableName(),
                    record.getPrimaryKey());
                for (RecordColumn column : record.getColumns()) {
                    switch (column.getColumnType()) {
                        case PUT:
                            updateChange.put(column.getColumn());
                            break;
                        case DELETE_ONE_VERSION:
                            updateChange.deleteColumn(column.getColumn().getName(),
                                column.getColumn().getTimestamp());
                            break;
                        case DELETE_ALL_VERSION:
                            updateChange.deleteColumns(column.getColumn().getName());
                            break;
                        default:
                            break;
                    }
                }
                batchWriteRowRequest.addRowChange(updateChange);
                break;
            case DELETE:
                RowDeleteChange deleteChange = new RowDeleteChange(writeConf.getTableName(),
                    record.getPrimaryKey());
                batchWriteRowRequest.addRowChange(deleteChange);
                break;
            default:
                break;
        }

        // Flush the batch once it reaches the configured size.
        if (count == writeConf.getBatchWriteCount()) {
            System.out.println("BatchWriteRow: " + count);
            writeClient.batchWriteRow(batchWriteRowRequest);
            batchWriteRowRequest = new BatchWriteRowRequest();
            count = 0;
        }
    }

    // Write the last batch of data.
    if (!batchWriteRowRequest.isEmpty()) {
        System.out.println("BatchWriteRow: " + count);
        writeClient.batchWriteRow(batchWriteRowRequest);
    }
}
```

Let's take a look at the most common questions about using Tunnel Service for data replication in Alibaba Cloud Tablestore.

Backup is divided into full (existing) data backup and incremental data backup. For full data backup, Tunnel Service logically splits all data in the table into several shards, each close to a specified size. The overall parallelism of full data synchronization depends on the number of shards, which effectively ensures throughput. For incremental data backup, the data within a single partition is processed sequentially to preserve ordering. The performance of incremental data backup is therefore directly proportional to the number of partitions (refer to the Incremental Synchronization Performance White Paper). To speed up incremental backup by adding partitions, contact the customer service representatives of Alibaba Cloud Tablestore.
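The combination of parallelism across partitions and ordering within a partition can be sketched as follows. This is an illustration under simplifying assumptions, not how Tunnel Service is implemented: each partition is modeled as an in-memory list, and `PartitionedReplay` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

final class PartitionedReplay {
    /** Replays partitions in parallel while keeping the record order inside each partition. */
    static Map<String, List<Integer>> replay(Map<String, List<Integer>> partitions) {
        Map<String, List<Integer>> applied = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, partitions.size()));
        try {
            List<CompletableFuture<Void>> tasks = new ArrayList<>();
            for (Map.Entry<String, List<Integer>> partition : partitions.entrySet()) {
                // One task per partition: tasks run concurrently with each other.
                tasks.add(CompletableFuture.runAsync(() -> {
                    List<Integer> out = new ArrayList<>();
                    // Inside a partition, records are applied strictly one after another.
                    for (Integer record : partition.getValue()) {
                        out.add(record);
                    }
                    applied.put(partition.getKey(), out);
                }, pool));
            }
            CompletableFuture.allOf(tasks.toArray(new CompletableFuture[0])).join();
        } finally {
            pool.shutdown();
        }
        return applied;
    }
}
```

In this model, adding partitions adds independent tasks, which is why throughput grows with the partition count while per-partition order is unaffected.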

Assume that multiple Tunnel Workers (clients) consume data from the same tunnel (with the same tunnel ID). When the Tunnel Workers send heartbeats, the tunnel server automatically redistributes channel resources so that active channels are evenly distributed across the Tunnel Workers, which balances the load. To scale out, add Tunnel Workers. Tunnel Workers can run on one or more instances. For more information, see Description of the data consumption framework.
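As a rough illustration of what "evenly distributed" means, the sketch below assigns channels to workers round-robin. The actual redistribution algorithm of the tunnel server is not described in this article, so this is only an assumed stand-in, and `ChannelBalancer` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class ChannelBalancer {
    /** Assigns channels to workers round-robin so that counts differ by at most one. */
    static Map<String, List<String>> assign(List<String> channels, List<String> workers) {
        if (workers.isEmpty()) {
            throw new IllegalArgumentException("at least one worker is required");
        }
        Map<String, List<String>> plan = new LinkedHashMap<>();
        for (String worker : workers) {
            plan.put(worker, new ArrayList<>());
        }
        for (int i = 0; i < channels.size(); i++) {
            String worker = workers.get(i % workers.size());
            plan.get(worker).add(channels.get(i));
        }
        return plan;
    }
}
```

With five channels, two workers receive three and two channels; after a third worker joins, recomputing the plan yields two, two, and one, which is the scale-out effect described above.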

Data consistency is based on the order-preserving protocol of the Tunnel Service. The idempotence of full and incremental data synchronization ensures the consistency of backup data.
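The role of idempotence can be shown with a small model: when changes are applied in order and each change sets or removes an absolute value, replaying all or part of the log leaves the table in the same state. The `IdempotentApply` class and its `Change` type are hypothetical and only model PUT and DELETE on an in-memory map.

```java
import java.util.List;
import java.util.Map;

final class IdempotentApply {
    enum Type { PUT, DELETE }

    /** A single row change: PUT sets the value for a key, DELETE removes the key. */
    static final class Change {
        final Type type;
        final String key;
        final String value;

        private Change(Type type, String key, String value) {
            this.type = type;
            this.key = key;
            this.value = value;
        }

        static Change put(String key, String value) {
            return new Change(Type.PUT, key, value);
        }

        static Change delete(String key) {
            return new Change(Type.DELETE, key, null);
        }
    }

    /** Applies the changes to the table in order. */
    static void apply(Map<String, String> table, List<Change> changes) {
        for (Change change : changes) {
            if (change.type == Type.PUT) {
                table.put(change.key, change.value);
            } else {
                table.remove(change.key);
            }
        }
    }
}
```

Because of this property, a worker that re-sends a batch after a retry or restart does not corrupt the destination table, as long as the order within the log is preserved.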

The client of Tunnel Service regularly sends the time points of synchronized (consumed) data to the server for persistence. After a failover or program restart, data consumption resumes from the recorded checkpoint, so no data is lost.
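The checkpoint mechanism can be modeled in a few lines. In this sketch an in-memory `CheckpointStore` stands in for the server-side persistence, and each call to `consume` plays the role of one run of the program; both class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Stand-in for the server-side checkpoint: the index of the next record to consume. */
final class CheckpointStore {
    private int nextIndex;

    int load() {
        return nextIndex;
    }

    void save(int nextIndex) {
        this.nextIndex = nextIndex;
    }
}

final class ResumableConsumer {
    /** Consumes up to maxRecords starting at the stored checkpoint, then records the new position. */
    static List<String> consume(List<String> log, CheckpointStore store, int maxRecords) {
        int start = store.load();
        int end = Math.min(log.size(), start + maxRecords);
        List<String> consumed = new ArrayList<>(log.subList(start, end));
        // The checkpoint is saved only after the records have been handled.
        store.save(end);
        return consumed;
    }
}
```

A second call after a simulated restart continues with the first record that was not yet consumed, which mirrors how consumption resumes from the recorded checkpoint.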

This tutorial has outlined how to use Tunnel Service to build a simple and effective data replication solution that replicates table data up to a specified time. With the sample code from this practice, you only need to set the parameters for the source and destination tables to efficiently replicate and migrate table data.

In the future, Tunnel Service will also support creating tunnels for a specified time range. This will allow more flexibility in developing a data backup plan and enable more functions, such as continuous backup and time-based recovery.
