By Zhuoran

In the era of big data, data is used in every sector and by every business function in today's society. It has become an essential factor of production. As business and data grow continuously, the trade-off between performance and cost presents a serious challenge to the design of big data systems. In some cases, this can lead to reforms of the original architecture or migration to a new system. When we begin to design an architecture, it is imperative to consider its overall cost. This involves a consideration of tiered data storage and the selection of storage and computing engines.

As the new data lake solution released by DataBricks, Delta Lake provides a series of features for data ingestion, data structure management, data query, and data outflow as well as ACID (atomicity, consistency, isolation, and durability) and CRUD (create, read, update, and delete) for data operations. Delta Lake, when used together with upstream and downstream components, can build a convenient, easy-to-use, and secure data lake architecture. We generally adopt the concept of hybrid transaction/analytical processing (HTAP) to design a data lake architecture. Tiered storage components and computing engines, when selected appropriately, can support the analysis of massive data and fast transactional updates. They can also effectively separate hot and cold data to reduce costs. This article discusses hierarchical data storage. For more information about cold and hot data separation on ApsaraDB for HBase, please see this document.

Data can be divided into hot data, warm data, and cold data based on its access frequency. You will have a large volume of cold data that is rarely accessed during the entire data life cycle. Its retention for a specified time mainly serves to satisfy business compliance requirements or the needs of specific scenarios. Generally, hot and cold data can be distinguished in two ways:

This article also discusses how to separate hot data from cold data by data creation time.

In relation to hot data, cold data exhibits the following characteristics:

The characteristics of cold data make it easy to conceive of scenarios in which hot-cold data separation can be applied:

When designing a hot-cold data separation solution, we need to answer a few key questions.

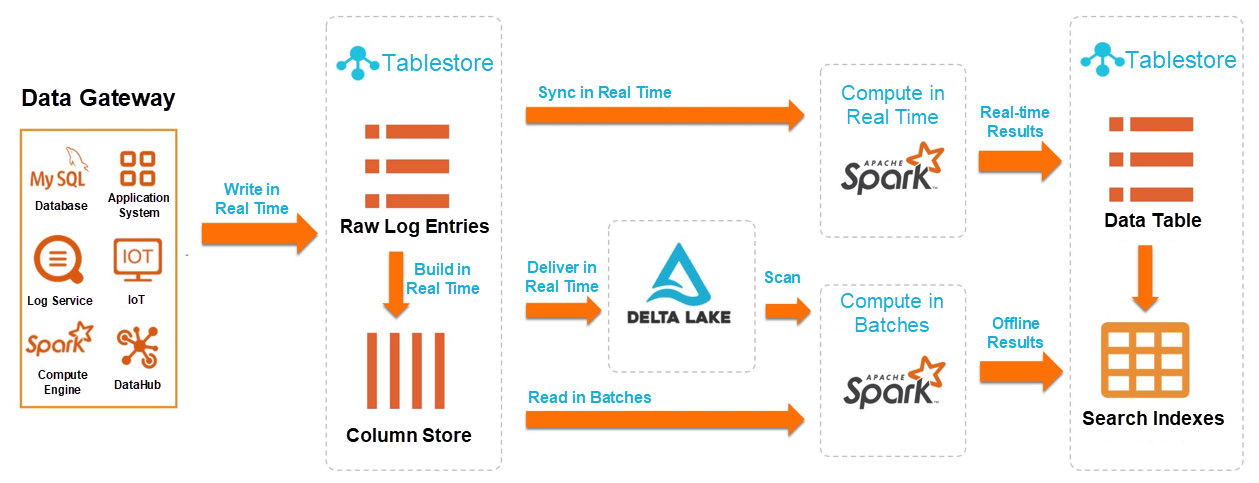

To design a solution that would achieve a separation between hot and cold structured data, the TableStore Team [Note 1] and the Elastic MapReduce (EMR) Team jointly developed a Delta Lake architecture for the separation of massive structured data. This solution effectively addresses the issues involved in hot-cold data separation. The tunnel service of TableStore [Note 2] can utilize CDC technology to derive hot and cold data from raw data and ship the data to various storage components, such as Delta Lake and the column store inherent in the TableStore engine. These components can perform subsequent separation and heterogeneous storage. To reduce costs, the flexible upstream data gateways and Time to Live (TTL) features of TableStore allow users to customize the lifecycle of hot data and continuously deliver cold data to Delta Lake and the column store in real-time. Finally, on the computing and query layers, TableStore and Spark can be used together for full and incremental customized computing of hot data and cold data. Then, they can save the computing results in the index engine of TableStore for a unified query.

Note 1: TableStore is a serverless NoSQL multi-model database independently developed by Alibaba Cloud for storing massive structured data. It is used in a wide range of business scenarios, such as social networking, Internet of Things (IoT), artificial intelligence (AI), metadata, and big data. This solution also offers the Wide Column model, the message model Timeline, and the spatial-temporal model Timestream, all of which are compatible with HBase, for petabyte storage, tens of millions of TPS, and millisecond latency.

Note 2: The tunnel service is an integrated service built on the TableStore data interface for full and incremental data. It creates tunnels for data tables, so users can easily consume historical data and new data in the tables.

This section describes how to put the TableStore and Delta Lake into practice for hot and cold data separation in a data lake.

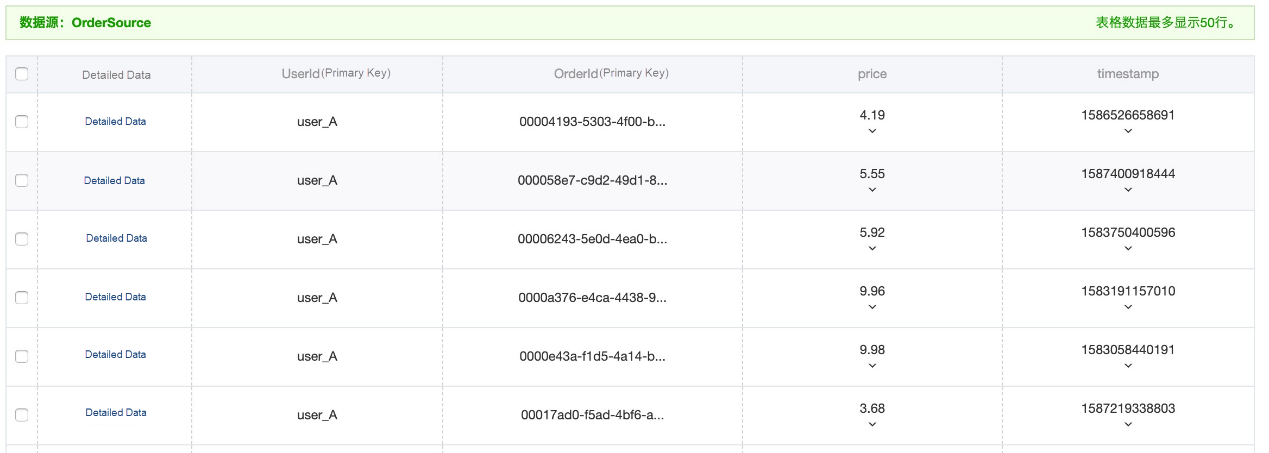

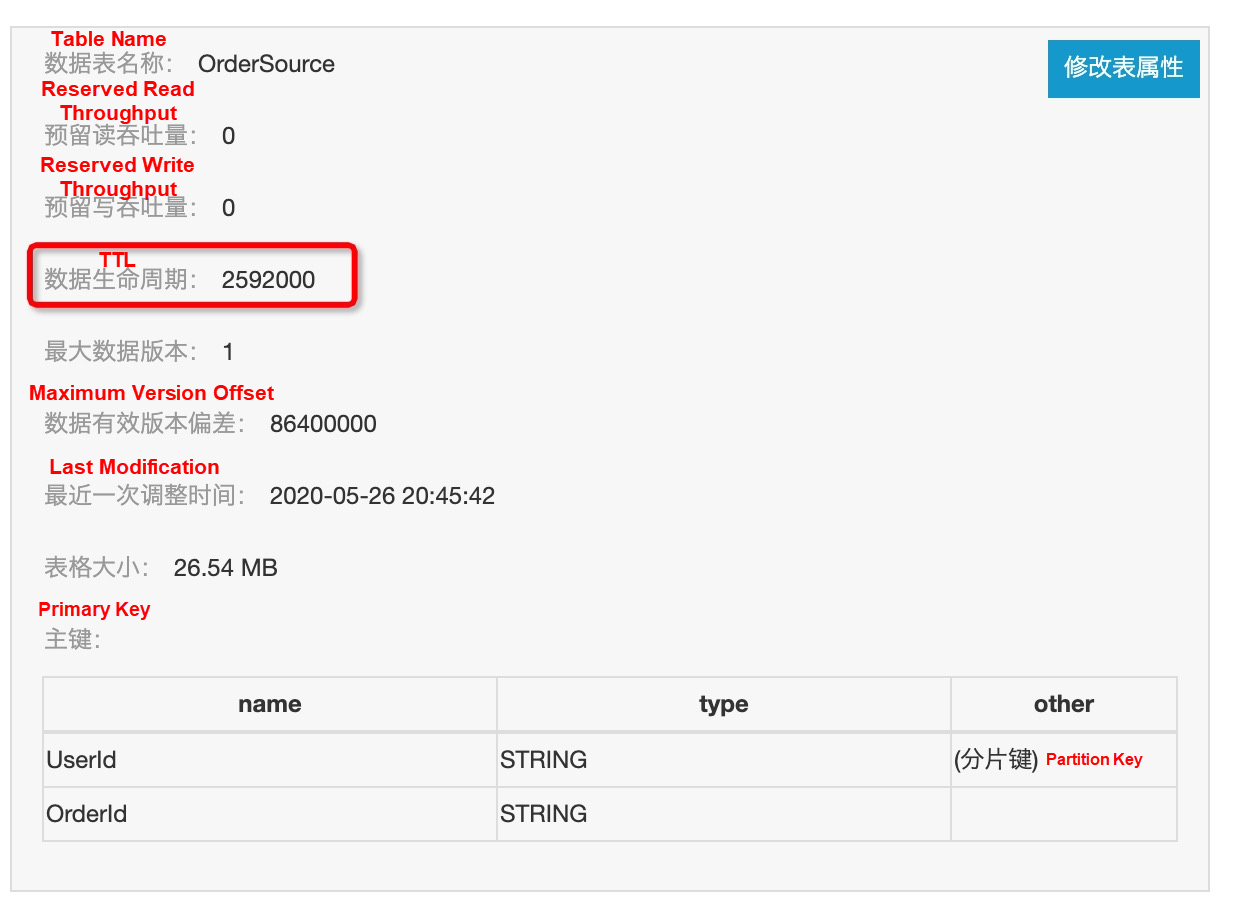

The data source, in this case, is a simple original order table OrderSource, which has two primary keys, UserId (user ID) and OrderId (order ID), and two attribute columns, price, and timestamp. We write the order data through the BatchWrite interface of the TableStore SDK and set the time range of order timestamps to the last 90 days. This simulation uses the time range of February 26, 2020 to May 26, 2020. A total of 3,112,400 entries are written. The following diagram shows a sample of the original data.

When writing a simulated order, the version number recorded in the attribute column in the TableStore table is also set to contain the corresponding timestamp. When the retention period of the written data exceeds the specified TTL attribute in the configuration table, the system automatically cleans up the data with the corresponding version number.

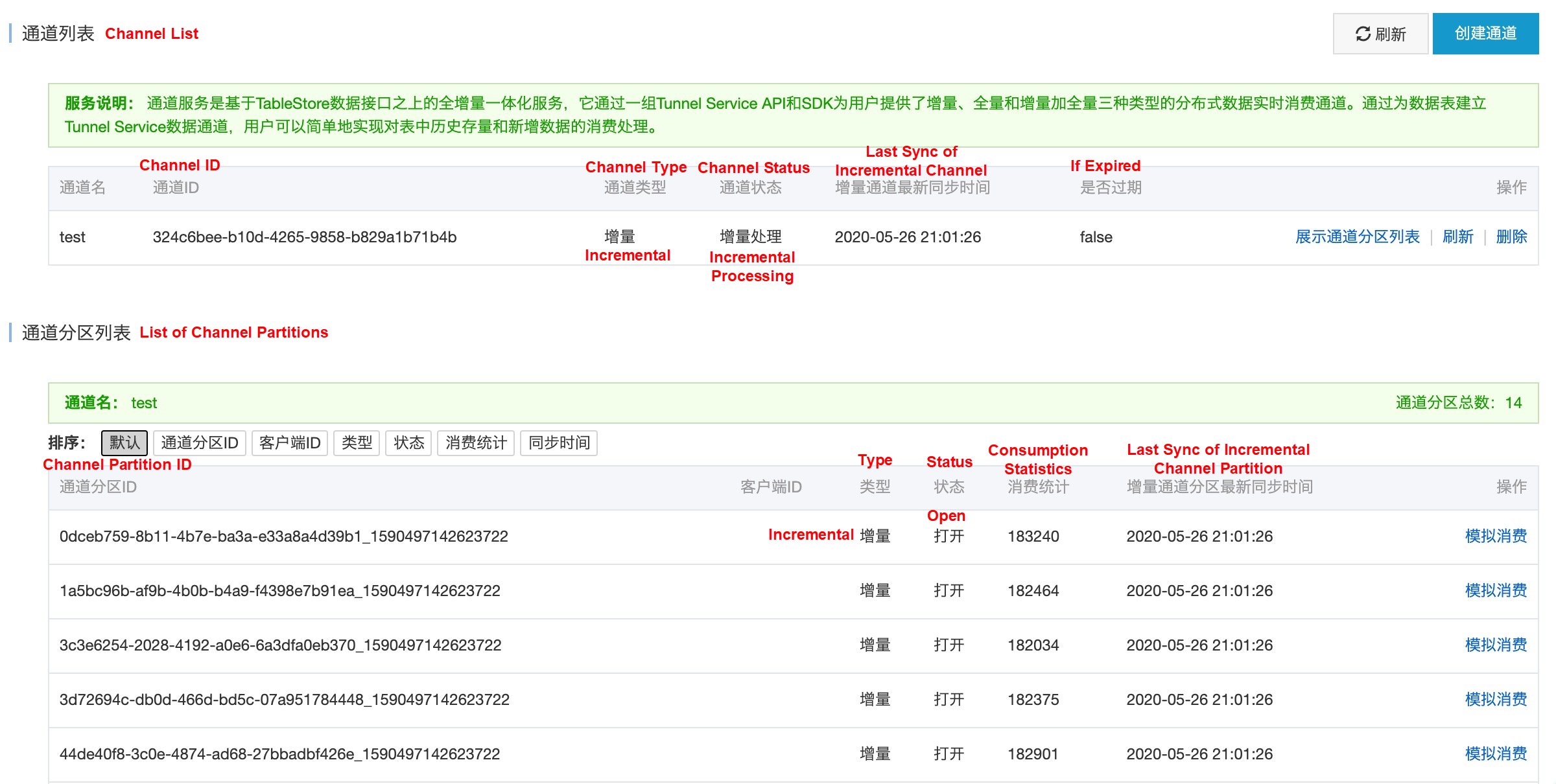

1. When creating a data source table, users can also establish an incremental tunnel in the TableStore console and rely on the CDC technology provided by the tunnel to continuously synchronize new primary table data to the Delta Lake. The ID of the new tunnel will be used for subsequent SQL configuration.

2. First, launch the streaming-sql interactive command line interface (CLI) on the Header node of the Elastic MapReduce cluster.

streaming-sql --master yarn --use-emr-datasource --num-executors 16 --executor-memory 4g --executor-cores 43. Run the following commands sequentially in streaming-sql launched in Step 2.

order_source. The catalog in the OPTIONS parameter is the schema definition of the source table fields. In this example, the table fields are four columns: UserId, OrderId, price, and timestamp.delta_orders, for the data synchronized to Delta. LOCATION indicates the location where the Delta files are stored.incremental_orders. tunnel.id in the OPTIONS parameter is the ID of the incremental data channel created in Step 1, and maxoffsetsperchannel indicates the maximum amount of data written to each partition of the channel (in every Spark micro-batch).__ots_record_type__ is the predefined column provided by the TableStore streaming source, indicating the type of row operation.// Source Table and Destination Table

// 1. Create the source table

DROP TABLE IF EXISTS order_source;

CREATE TABLE order_source

USING tablestore

OPTIONS(

endpoint="http://vehicle-test.cn-hangzhou.vpc.tablestore.aliyuncs.com",

access.key.id="",

access.key.secret="",

instance.name="vehicle-test",

table.name="OrderSource",

catalog='{"columns": {"UserId": {"col": "UserId", "type": "string"}, "OrderId": {"col": "OrderId", "type": "string"},"price": {"col": "price", "type": "double"}, "timestamp": {"col": "timestamp", "type": "long"}}}',

);

// 2. Create Delta Lake Sink: delta_orders

DROP TABLE IF EXISTS delta_orders;

CREATE TABLE delta_orders(

UserId string,

OrderId string,

price double,

timestamp long

)

USING delta

LOCATION '/delta/orders';

// 3. Create an incremental SCAN view on the source table

CREATE SCAN incremental_orders ON order_source USING STREAM

OPTIONS(

tunnel.id="324c6bee-b10d-4265-9858-b829a1b71b4b",

maxoffsetsperchannel="10000");

// 4. Launch the stream job and synchronize the Tablestore CDC data to Delta Lake in real time.

CREATE STREAM orders_job

OPTIONS (

checkpointLocation='/delta/orders_checkpoint',

triggerIntervalMs='3000'

)

MERGE INTO delta_orders

USING incremental_orders AS delta_source

ON delta_orders.UserId=delta_source.UserId AND delta_orders.OrderId=delta_source.OrderId

WHEN MATCHED AND delta_source.__ots_record_type__='DELETE' THEN

DELETE

WHEN MATCHED AND delta_source.__ots_record_type__='UPDATE' THEN

UPDATE SET UserId=delta_source.UserId, OrderId=delta_source.OrderId, price=delta_source.price, timestamp=delta_source.timestamp

WHEN NOT MATCHED AND delta_source.__ots_record_type__='PUT' THEN

INSERT (UserId, OrderId, price, timestamp) values (delta_source.UserId, delta_source.OrderId, delta_source.price, delta_source.timestamp);In actual designs, hot data is usually stored in TableStore tables for efficient transaction processing queries, while cold data or full data is stored in Delta. You can flexibly control the amount of hot data by configuring the TTL of the TableStore table.

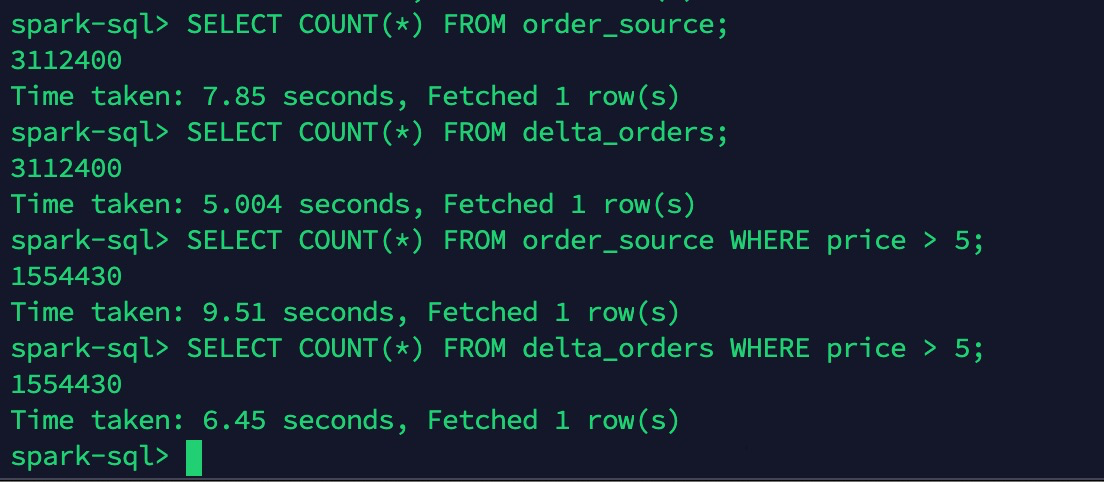

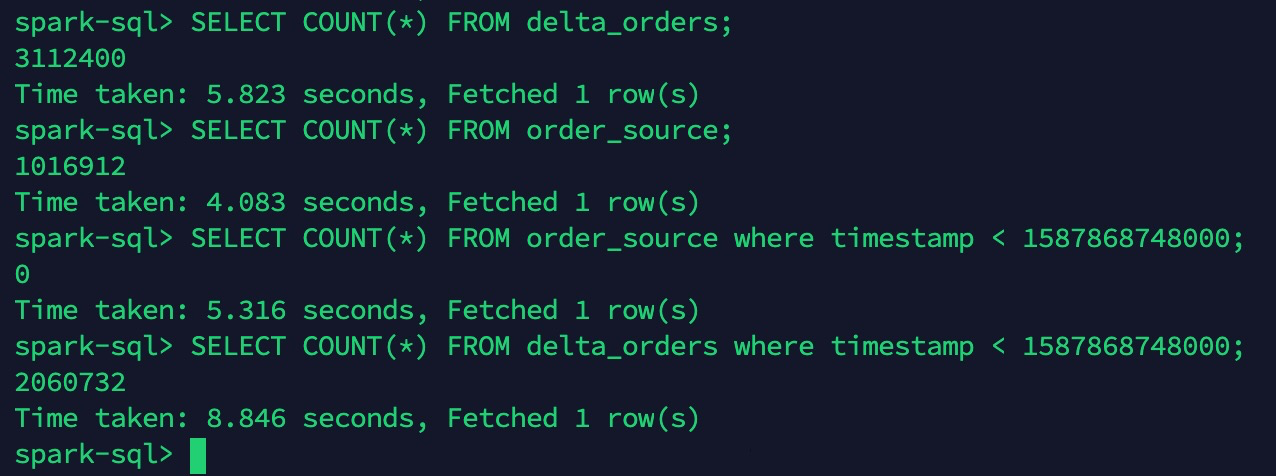

First, before configuring the TTL of the primary table, you need to perform some queries on the source table (order_source) and the destination table (delta_orders) and make sure the query results from the primary and secondary tables are consistent.

For hot-cold data separation, we set the TTL of the TableStore table to the last 30 days, so only data from the last 30 days (hot data) is stored in TableStore, while Delta still keeps the full data.

Finally, you can perform some simple queries after data separation below. The specific query routes are selected according to your business logic. After separation, there are 1,017,004 hot data entries and 3,112,400 cold data entries (full data).

This article introduces the characteristics and applicable scenarios of hot and cold data, describes the design of a hot-cold data separation solution and architecture for massive structured data, and finishes up with a practical example of using TableStore and Delta Lake for hot-cold data separation. We hope this article will help you make full use of your computing and storage resources so you can provide better services at a lower cost.

ApsaraDB - October 20, 2020

ApsaraDB - June 4, 2020

ApsaraDB - October 29, 2024

ApsaraDB - July 3, 2019

ApsaraDB - November 26, 2024

Alibaba Cloud Storage - February 27, 2020

ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn More