By Zhang Lei, Senior Technical Expert at Alibaba Cloud Container Platform and CNCF Ambassador

A pod, the atomic unit of scheduling, is a very important concept in Kubernetes. However, no such concept exists when you work with Docker containers alone. To understand pods, it's important to understand containers first.

A container is a process with an isolated view and restricted resources.

The process with process ID (PID) 1 in a container is the application itself. Managing a virtual machine is therefore equivalent to managing infrastructure, whereas managing a container is equivalent to managing the application. This is one of the best practices of immutable infrastructure: the application is the infrastructure, and it is immutable.

Based on the preceding example, what is Kubernetes? Many people say that Kubernetes is the operating system of the cloud era. If this is true, container images are considered to be the software installation packages of the operating system.

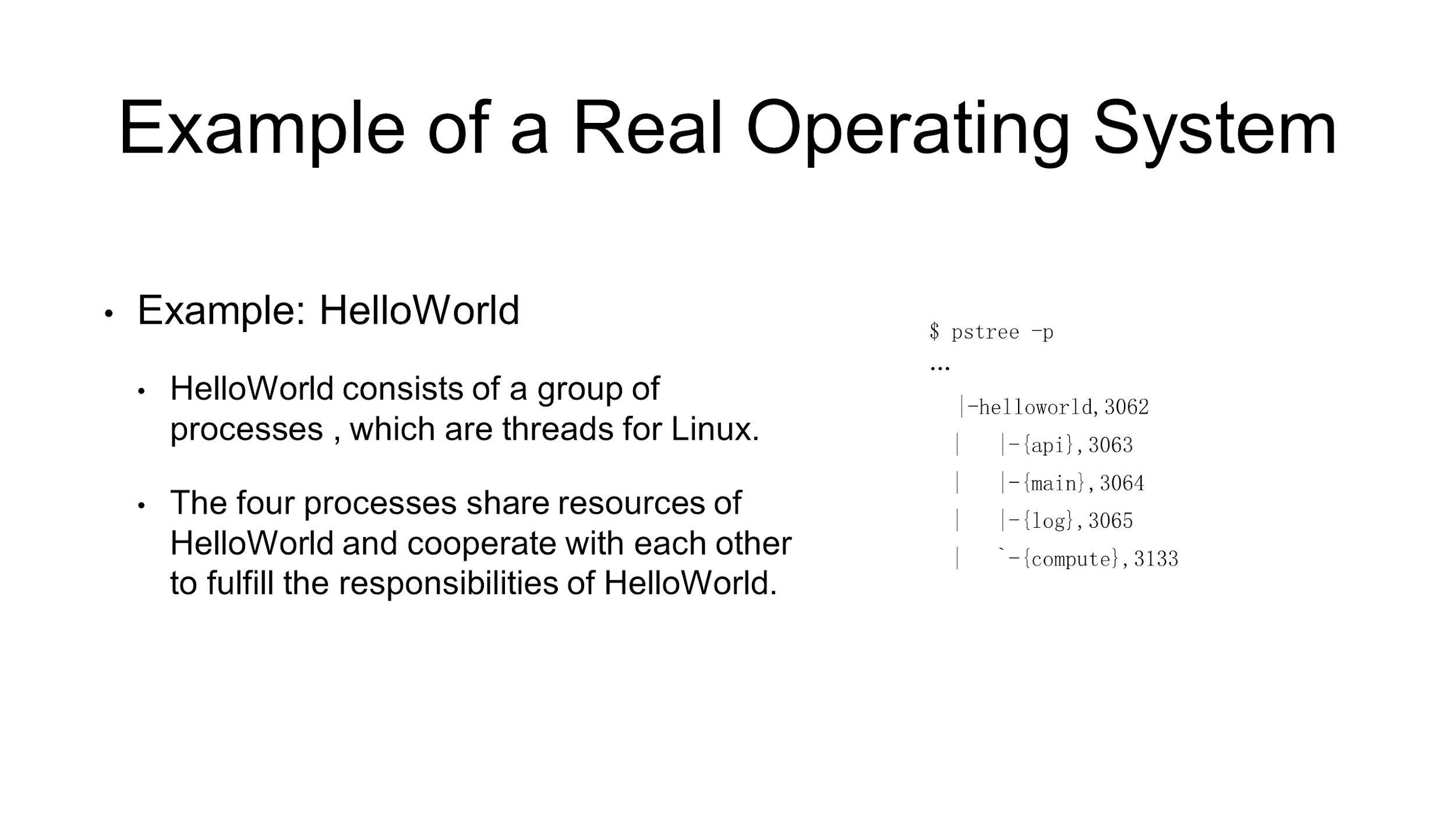

An application named HelloWorld comprises a group of processes. Here, note that the processes are equivalent to threads on Linux.

Threads on Linux are lightweight processes. If you run pstree on HelloWorld in Linux, you can see that HelloWorld consists of four threads: api, main, log, and compute. These threads cooperate with each other and share the resources of HelloWorld.

The preceding is a real example of a process group or thread group in the operating system.

In a real operating system, a program is usually managed as a process group. If Kubernetes is compared to an operating system such as Linux, a container is comparable to a process (a Linux thread, in the preceding example), and a pod is comparable to a process group (a Linux thread group).

Before understanding the concept of process groups, let's understand the concept of a process.

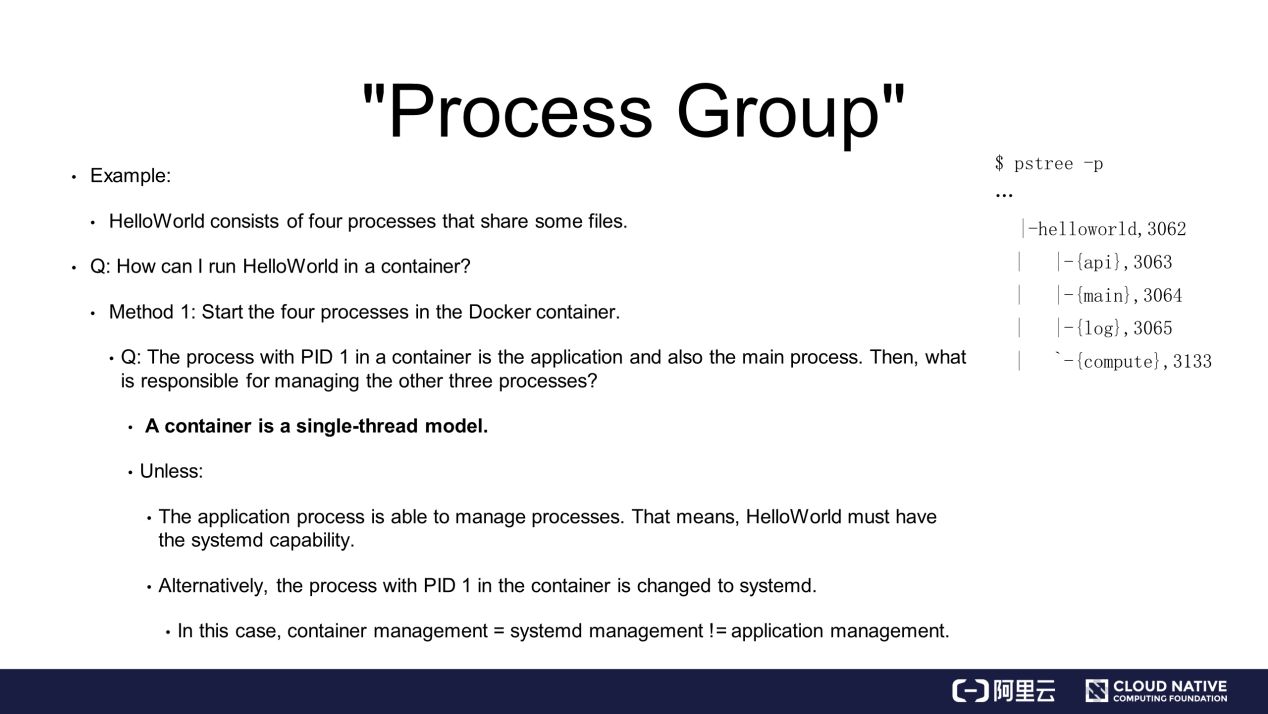

In the preceding example, HelloWorld consists of four processes that share some resources and files. How, then, can HelloWorld run in a container?

The most common way is to start a Docker container that runs the four processes. In this case, it's critical to know which process in the container is the one with PID 1. For example, if the PID of the main process is 1, which one is responsible for managing the other three processes?

The container is designed around a single-process model. This does not mean that only one process can be started in a container. Rather, because the containerized application is a process, the container manages only the process with PID 1, and any other processes started in the container are merely hosted by it. The application process therefore needs process management capability of its own.

For example, either HelloWorld itself must have systemd-like capability, or the process with PID 1 in the container must be changed to systemd. Otherwise, neither the application nor the container can manage multiple processes. If a user kills the process with PID 1, or it crashes at run time, no process is responsible for reclaiming the resources of the other three processes, which is a serious problem.

Conversely, if you change the application into systemd or run systemd in the container, container management becomes systemd management rather than application management. For example, if systemd runs in the container, has the application exited? Has an exception or failure occurred? You cannot tell directly, because the container manages systemd, not the application. This is one of the reasons why it is difficult to run a complex program in a container.

To summarize, a container is actually a single-process model. Therefore, if you start multiple processes in a container, only one process has the PID of 1. If this process fails or exits, the other processes become orphan processes, and their resources cannot be reclaimed.
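To make the PID 1 responsibility more concrete, the following Python sketch shows the kind of process management an application would have to implement itself if it started the other processes in the same container. The `supervise` and `demo` functions and the commands they run are hypothetical stand-ins, not part of HelloWorld, and the sketch leaves out signal forwarding and the reaping of orphaned grandchildren that a real init process also handles.

```python
import subprocess
import sys


def supervise(commands):
    """Start every command, wait for each one, and return the exit codes.

    Waiting on a child is what reclaims its process-table entry. If the
    parent dies while children are still running, they become orphans.
    """
    children = [subprocess.Popen(cmd) for cmd in commands]
    try:
        return [child.wait() for child in children]
    finally:
        # If waiting was interrupted, stop whatever is still running.
        for child in children:
            if child.poll() is None:
                child.terminate()
                child.wait()


def demo():
    """Run two short-lived stand-ins for the helper processes."""
    ok = [sys.executable, "-c", "pass"]
    failing = [sys.executable, "-c", "raise SystemExit(3)"]
    return supervise([ok, failing])
```

Calling `demo()` returns one exit code per child. An ordinary business application rarely contains logic like this, which is why extra processes in a single container tend to go unmanaged.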

In the single-process model of the Linux container, the lifecycle of the container is the same as that of the process with PID 1, in other words, the container application process. This does not mean multiple processes cannot be created in the container. Generally, a container application process cannot manage processes. Therefore, other processes that are created in the container by using exec or SSH may become orphan processes upon unexpected exit, for example, SSH termination.

Alternatively, run systemd in a container to manage all other processes. In this case, applications cannot be directly managed because they are taken over by systemd. Therefore, the lifecycle of the applications is different from that of the container. The management model is quite complex.

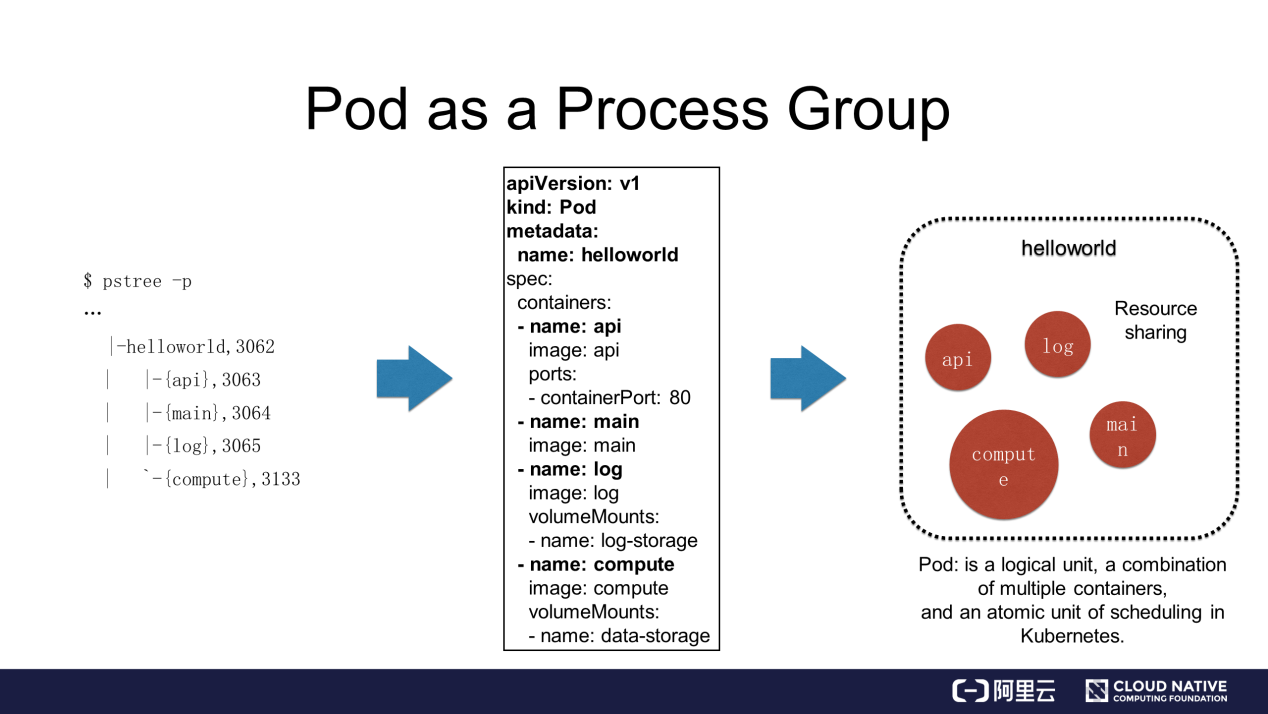

A pod is abstracted by Kubernetes and is similar to a process group.

As mentioned previously, the HelloWorld application consists of four processes. This application is defined as a pod with four containers in Kubernetes. This concept is very important.

In this case, the four processes that cooperate with each other and implement different functions need to run in containers. In Kubernetes, they are placed in different containers to prevent the preceding two problems. Then, how do these processes run in Kubernetes? The four processes are started separately in four independent containers but are defined in the same pod.

When Kubernetes starts HelloWorld, it actually starts four containers that share some of the resources of the pod. Therefore, in Kubernetes, the pod is only a logical unit and does not correspond to any physical entity. The four containers are what exist physically, and the combination of these containers is called a pod. In addition, a pod is the unit of resource allocation in Kubernetes because the containers in a pod need to share some resources. For the same reason, a pod is also the atomic unit of scheduling in Kubernetes.
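As a rough illustration, a pod for HelloWorld could be described by a manifest with one container per process. The sketch below builds such a manifest as a Python dictionary and renders it as JSON, which kubectl accepts in addition to YAML. The pod name and the `example.com/...` image names are placeholders assumed for this example only.

```python
import json


def helloworld_pod():
    """Build a pod manifest with one container per HelloWorld process."""
    names = ["api", "main", "log", "compute"]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "helloworld"},
        "spec": {
            "containers": [
                # Image names are placeholders, not real images.
                {"name": name, "image": f"example.com/helloworld-{name}:1.0"}
                for name in names
            ]
        },
    }


def to_json(manifest):
    """Render the manifest as JSON text."""
    return json.dumps(manifest, indent=2)
```

The four entries under `spec.containers` are the physical containers, while the surrounding `Pod` object is the logical unit that Kubernetes schedules.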

The pod design did not originate in Kubernetes. Google engineers discovered it while developing Borg, and it is described in detail in the Borg paper. In short, Google engineers found relationships similar to those between processes and process groups in many scenarios when deploying applications in Borg. More specifically, these applications cooperate so closely that they must be deployed on the same server and share certain information.

After understanding that a pod is a process group, one might have the following questions:

To answer these questions, let's consider the following example.

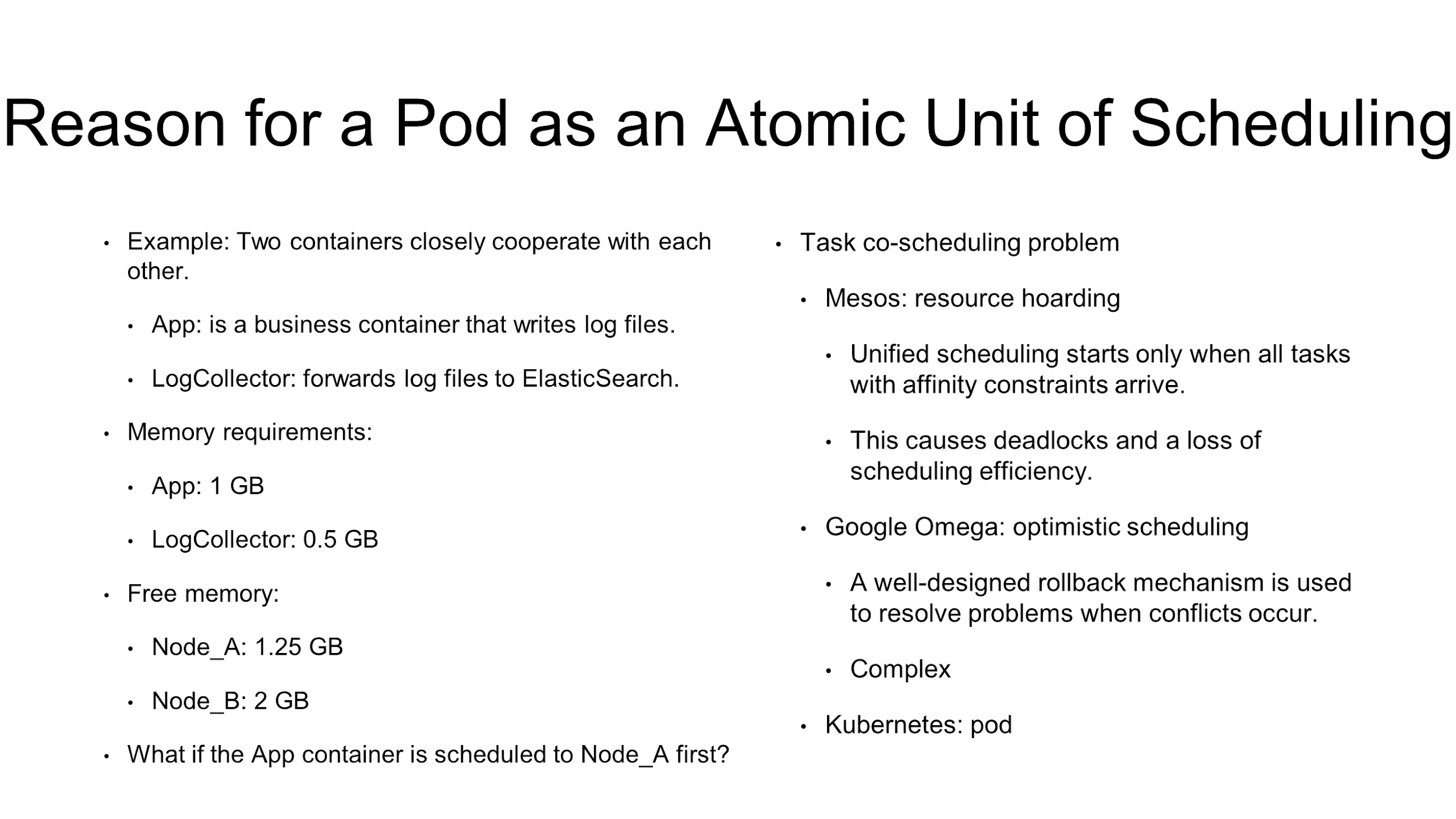

Two containers closely cooperate with each other. Therefore, they must be deployed in the same pod. Specifically, the first container is named App, which is a business container and generates log files. The other container is named LogCollector, which forwards log files generated by the App container to ElasticSearch at the backend.

The App container requires 1 GB of memory, and the LogCollector container requires 0.5 GB of memory. In the current cluster environment, 1.25 GB of memory is available on Node_A and 2 GB on Node_B.

What would happen if the pod concept did not exist in this case? The two containers work closely together, so they must run on the same server. If the scheduler first schedules the App container to Node_A, the LogCollector container can no longer be scheduled to Node_A because only 0.25 GB of memory remains there. In this case, the application as a whole cannot work and the scheduling fails.

The preceding example is a typical case of task co-scheduling failure. This problem can be solved in many ways.

For example, Mesos implements resource hoarding. This implies that unified scheduling starts only after all tasks with affinity constraints are in place. This is a typical solution to the problem.

Therefore, in Mesos the App and LogCollector containers are scheduled together only after both containers have been submitted. However, this introduces new problems. First, scheduling efficiency is reduced because of the waiting. Second, deadlocks may occur because of mutual waiting. Solving these problems in Mesos is quite complex.

Another solution is Google's optimistic scheduling in the Omega system, the next generation of Borg, which is a very complex but useful solution. Scheduling is performed regardless of conflicts, and a well-designed rollback mechanism resolves any conflicts afterward. This method is more graceful and efficient, but its implementation is very complex. As is well known, pessimistic locks are simpler to implement than optimistic locks.

In Kubernetes, by contrast, pods solve the task co-scheduling problem directly. The App and LogCollector containers belong to the same pod and are scheduled together as a pod. Therefore, the task co-scheduling problem does not occur.
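The difference between the two approaches can be reproduced with a toy model that uses the memory figures from the example. The following Python sketch is not the Kubernetes scheduler. The function names are hypothetical, and memory is the only resource considered.

```python
def schedule_containers_one_by_one(free_memory_gb, containers, first_choice):
    """Place each container separately on the node picked for the first one.

    Returns the containers that fit and the first one that does not (or None).
    """
    free = free_memory_gb[first_choice]
    placed = []
    for name, needed in containers.items():
        if needed > free:
            return placed, name
        free -= needed
        placed.append(name)
    return placed, None


def schedule_pod(free_memory_gb, containers):
    """Treat the pod as one unit: pick a node that fits the summed request."""
    needed = sum(containers.values())
    for node, free in free_memory_gb.items():
        if free >= needed:
            return node
    return None


# Figures from the example, in GB of memory.
NODES = {"Node_A": 1.25, "Node_B": 2.0}
POD = {"App": 1.0, "LogCollector": 0.5}
```

With these figures, `schedule_containers_one_by_one(NODES, POD, "Node_A")` returns `(["App"], "LogCollector")`, meaning that LogCollector is stranded, whereas `schedule_pod(NODES, POD)` returns `"Node_B"` because the combined request of 1.5 GB is evaluated up front.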

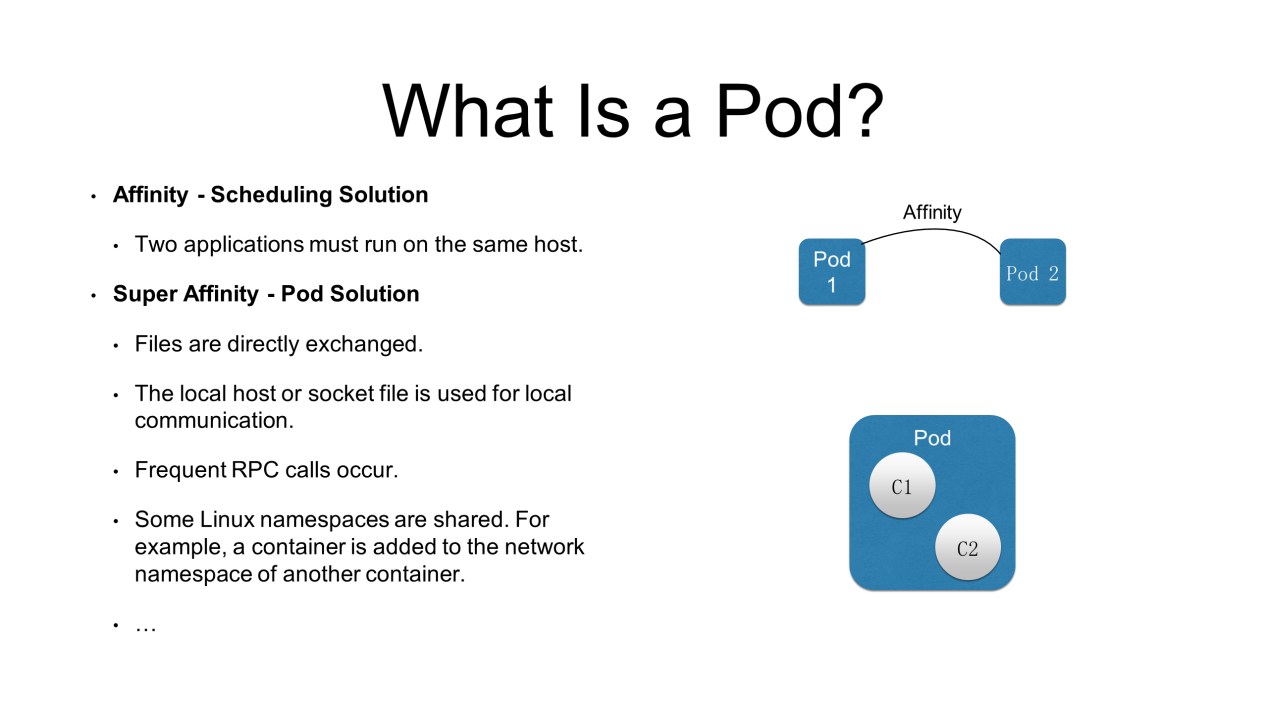

This section helps you further understand pods. Containers in a pod have a super affinity with each other. Generally, an affinity problem is solved by scheduling.

For example, two pods must run on the same host. This is an affinity, and the scheduler can satisfy it. A super affinity, however, can be satisfied only by a pod. If a super affinity is not satisfied, the pod or the entire application cannot start.

Now, what is a super affinity? It can be divided into the following categories:

All the preceding relationships are super affinities and are solved by using pods in Kubernetes.

To conclude why pods are required, it's important to understand that pods help to:

1) Describe a super affinity

2) Schedule containers or businesses with a super affinity in a centralized manner

A pod is a logical concept. The following explains how to implement a pod on a server:

The core problem is how to efficiently share certain resources and data among multiple containers in a pod.

Containers are separated by Linux namespaces and control groups (cgroups). Therefore, the solution is to remove the separation and share resources and data among containers, which is the major concern for pod design.

The specific solution involves two parts: network and storage.

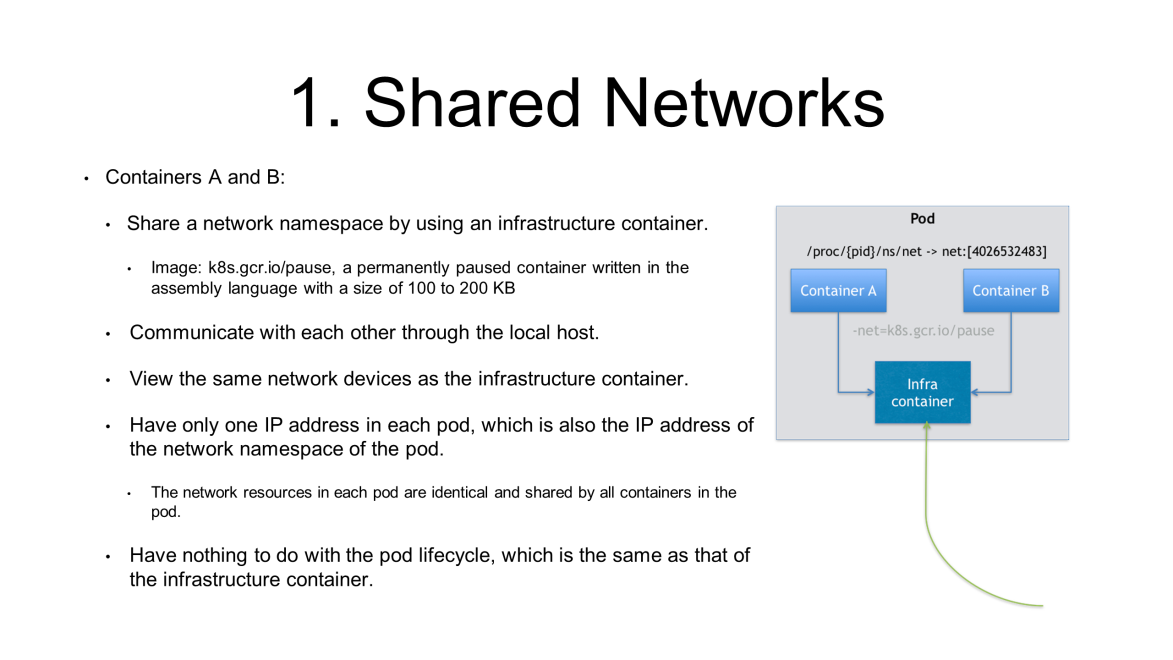

First, let's figure out how multiple containers in a pod share the network.

For example, a pod contains container A and container B and they need to share the network namespace. In Kubernetes, an infrastructure container is created in each pod to share the network namespace of the pod.

The infrastructure container is a small image with a size of 100 to 200 KB. It is a permanently paused container written in the assembly language. All the other containers join the network namespace of the infrastructure container.

Therefore, the network view is identical for all containers in a pod. That is, the network-related information, such as the network device, IP address, and MAC address, is the same for every container in the pod. This information comes from the infrastructure container, which is the first container created in the pod. This is how network sharing is implemented in a pod.

A pod has only one IP address, which is the IP address of the network namespace of the pod and also the IP address of the infrastructure container. Meanwhile, all other network resources are identical and shared by all containers in each pod. This is how sharing is implemented in a pod.

Because this intermediate container is required, the infrastructure container must be the first container started in a pod. In addition, the lifecycle of a pod is the same as that of the infrastructure container and is independent of containers A and B. This is why Kubernetes allows you to update a single image in a pod: the operation does not recreate or restart the entire pod, which is a very important design idea.

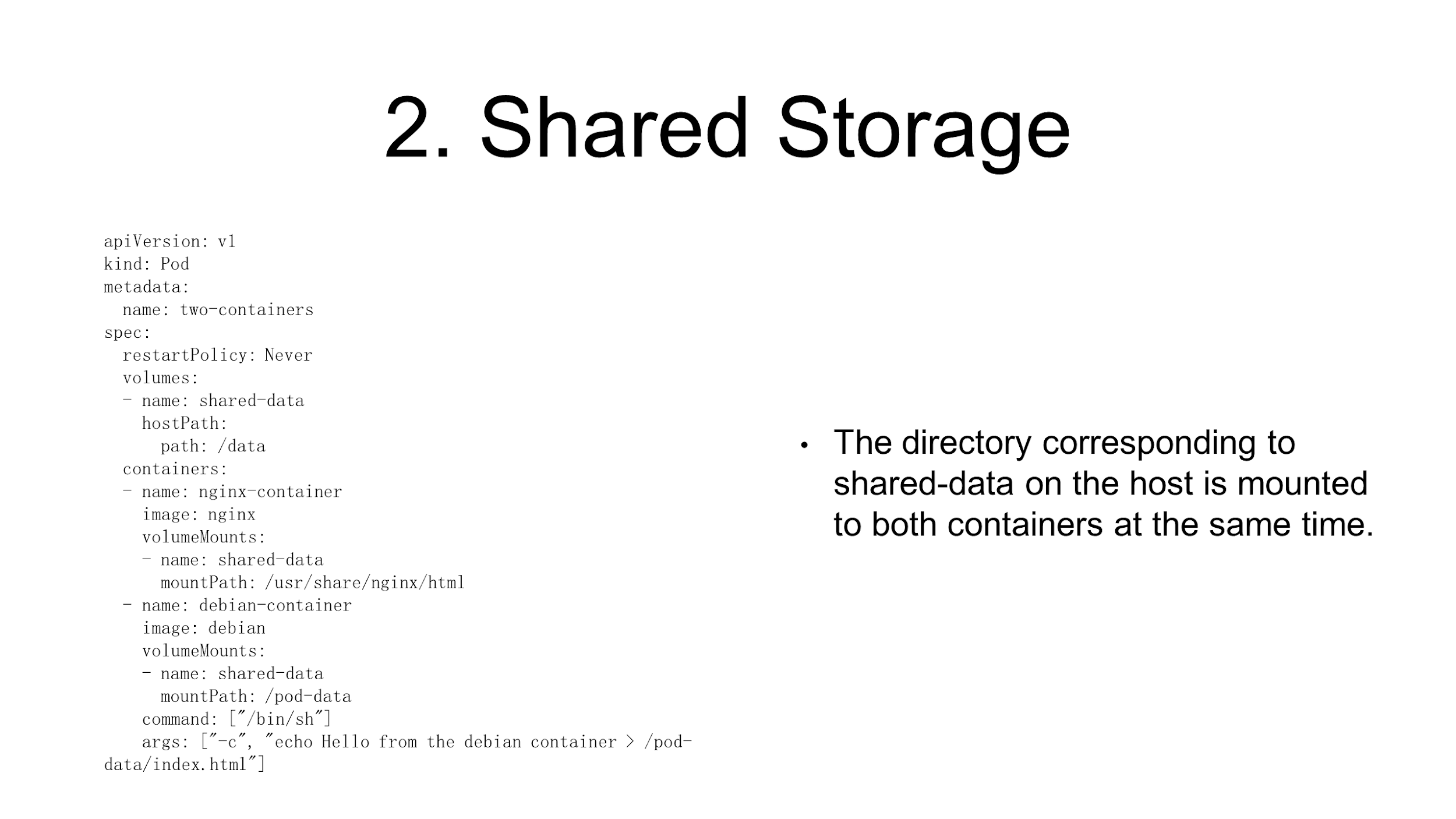

How do containers share storage in a pod? This is quite simple.

For example, a pod contains an Nginx container and a common container. To make files from the common container accessible through Nginx, the two containers must share a directory. Sharing files or directories in a pod is simple: volumes are defined at the pod level, and all containers in the pod can share all of its volumes.

As shown in the preceding figure, the volume is named shared-data and is located at the pod level. The shared-data volume is shared by containers after being mounted into the containers. This is how Kubernetes shares storage for containers in a pod.

In the preceding example, the App container writes logs into a volume. The volume is immediately visible to the LogCollector container after being mounted to the container. This is how a pod implements storage sharing.
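A minimal sketch of such a pod, built as a Python dictionary that can be rendered as a JSON manifest, might look as follows. The volume name `shared-data` comes from the figure. The `emptyDir` volume type, the mount path, the pod name, and the image names are assumptions made for illustration.

```python
import json


def shared_volume_pod():
    """Build a pod whose two containers mount the same pod-level volume."""
    mount = {"name": "shared-data", "mountPath": "/var/log/app"}  # assumed path
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "app-with-log-collector"},
        "spec": {
            # The volume is declared once, at the pod level.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                # Placeholder images; each container mounts the same volume.
                {"name": "app", "image": "example.com/app:1.0",
                 "volumeMounts": [mount]},
                {"name": "log-collector",
                 "image": "example.com/log-collector:1.0",
                 "volumeMounts": [mount]},
            ],
        },
    }


def to_json(manifest):
    """Render the manifest as JSON text."""
    return json.dumps(manifest, indent=2)
```

Both containers refer to the volume by name in `volumeMounts`, so a file written by one container under the mount path is visible to the other.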

Now, after understanding why a pod is required and how pods are implemented, let's deep dive into the container design mode, which is advocated by Kubernetes.

For example, to release an application written in Java, you must place a WAR package in the webapps directory of Tomcat to start the application. Several methods are available to do this.

However, this method requires you to maintain a distributed storage system because a container is migratable and its location is not fixed. The container may start on host A the first time but on host B the second time. A distributed storage system is therefore required so that the container finds the WAR package regardless of whether it runs on host A or B.

Even with a distributed storage system backing the volume, you still have to maintain the WAR package in the volume. For example, you may need to develop a Kubernetes volume plug-in that downloads the WAR package required for application startup to the volume before a pod starts. Only then can the WAR package be mounted and used.

This operation is complex, and the container must depend on a persistent storage plug-in for managing the WAR package content in the volume.

A more general method is needed, one that allows you to use or release an application even if no distributed storage is available in the local Kubernetes instance.

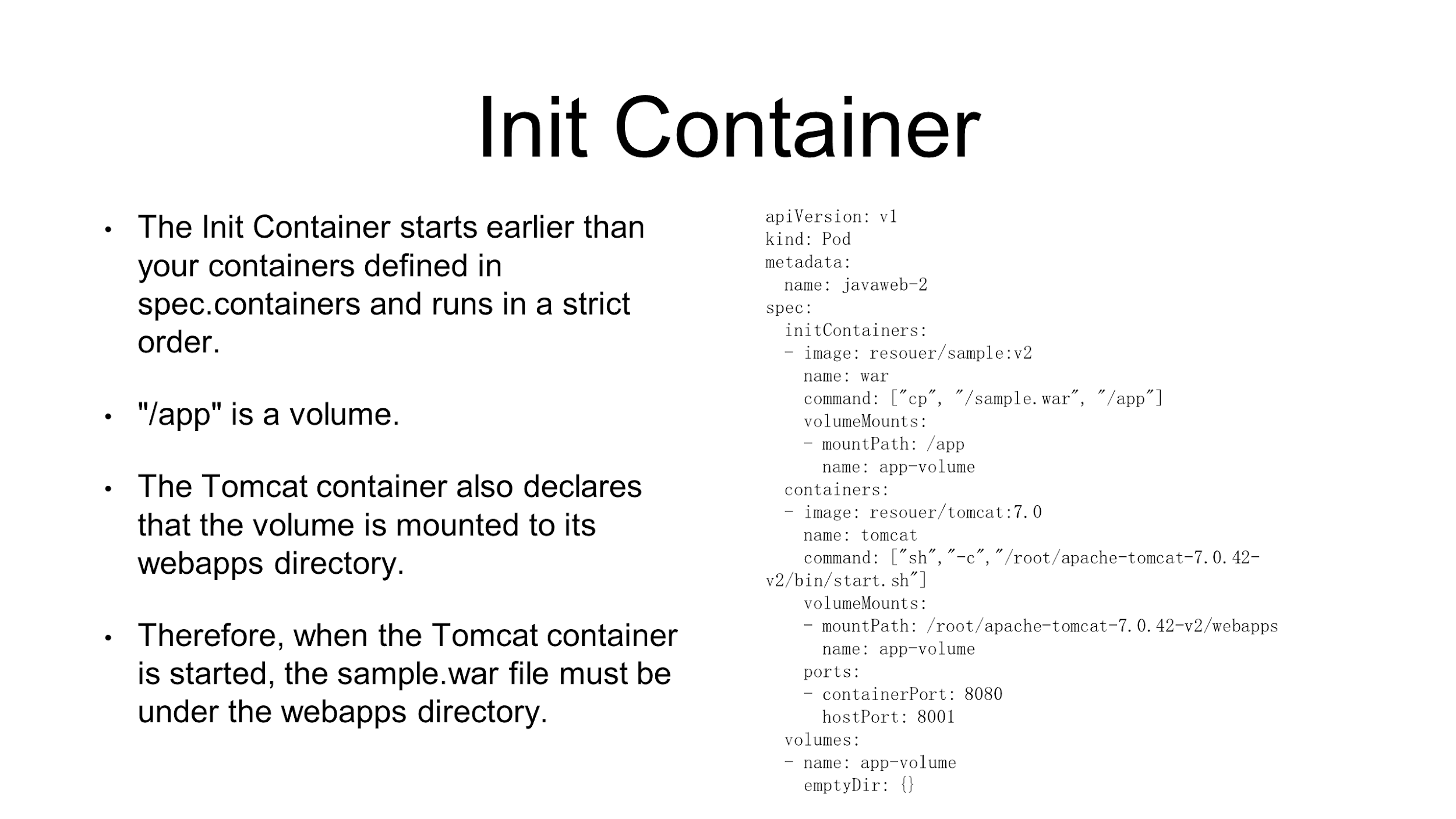

In Kubernetes, the Init Container is exactly such a method.

For example, in the YAML file shown in the preceding figure, an Init Container is defined only to copy the WAR package from an image to a volume. After the operation, the Init Container exits. Init Containers start before the user containers and run in a strict order.

The app directory to which the WAR package is copied is actually a volume. As mentioned previously, the containers in a pod can share a volume. Therefore, the Tomcat container is built from an image that packages only Tomcat. Before startup, however, the container must declare the app directory as its volume and mount it to the webapps directory.

At this point, because the Init Container has already run and copied the data, the volume must contain the sample.war package of the application. When the Tomcat container starts and mounts the volume, it finds the sample.war package there.

Therefore, the pod is self-contained and can start in any Kubernetes instance in the world. It can be released regardless of whether a distributed storage system is present or whether the volume is persistent.
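The arrangement described above can be sketched as a manifest built in Python and rendered as JSON. This is not the YAML file from the figure. The image names, the pod and volume names, the `emptyDir` volume type, and the Tomcat webapps path are assumptions. Only the `sample.war` file name and the `/app` directory follow the text.

```python
import json


def javaweb_pod():
    """Build a pod in which an Init Container copies the WAR into a volume."""
    volume_name = "app-volume"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "javaweb"},
        "spec": {
            "volumes": [{"name": volume_name, "emptyDir": {}}],
            # Runs to completion before the Tomcat container starts.
            "initContainers": [{
                "name": "war",
                "image": "example.com/sample-war:1.0",  # placeholder image
                "command": ["cp", "/sample.war", "/app"],
                "volumeMounts": [{"name": volume_name, "mountPath": "/app"}],
            }],
            "containers": [{
                "name": "tomcat",
                "image": "example.com/tomcat:1.0",  # placeholder image
                "volumeMounts": [{
                    "name": volume_name,
                    # Assumed location of the webapps directory.
                    "mountPath": "/usr/local/tomcat/webapps",
                }],
            }],
        },
    }


def to_json(manifest):
    """Render the manifest as JSON text."""
    return json.dumps(manifest, indent=2)
```

Because the WAR package travels inside an image and the volume lives only as long as the pod, nothing outside the pod has to be prepared before it starts.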

In this typical example, two containers that play different roles are combined in one pod, and the Init Container is used to package the application. This is a typical container design mode in Kubernetes, which is named Sidecar.

It implies defining some special containers in a pod to complete certain auxiliary work for the main business container. In the preceding example, the Init Container is a Sidecar that only copies the WAR package from an image to the shared directory for the Tomcat container.

The sidecar also performs some other operations.

One obvious advantage of this practice is that it actually decouples the auxiliary functions from the business container, allowing the Sidecar container to be released independently. More importantly, this capability can be reused. That is, the monitoring Sidecar or log Sidecar may be shared throughout the company. This is the strength of the design mode.

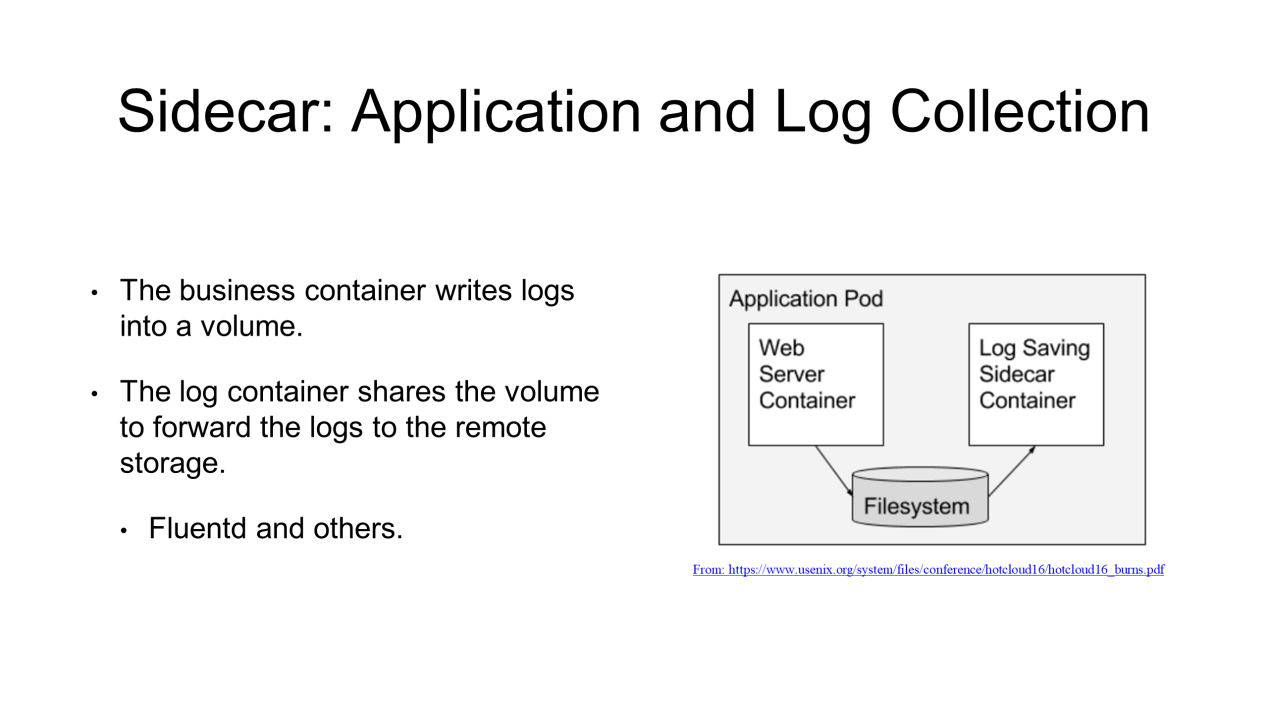

This section introduces other application scenarios of Sidecar.

For example, in the case of application log collection, the business container writes logs to a volume that is shared in the pod. The log container (the Sidecar container) mounts the same volume, reads the log files, and then stores them in remote storage or forwards them elsewhere. Fluentd and other log components that are commonly used in the industry work in basically the same way.
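The reading side of such a log Sidecar can be sketched in a few lines of Python. This is only an illustration of the idea, not how Fluentd is implemented. The `read_new_lines` and `demo` functions are hypothetical, and a temporary directory stands in for the shared volume.

```python
import os
import tempfile


def read_new_lines(log_path, offset):
    """Return the complete lines appended after `offset` and the new offset."""
    with open(log_path, "rb") as log_file:
        log_file.seek(offset)
        data = log_file.read()
    # Only consume up to the last newline so a half-written line is retried.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()
    return lines, offset + end


def demo():
    """Simulate the business container writing and the Sidecar reading."""
    with tempfile.TemporaryDirectory() as shared_volume:
        log_path = os.path.join(shared_volume, "app.log")
        with open(log_path, "w", encoding="utf-8", newline="\n") as log_file:
            log_file.write("started\nhandled request 1\npartial")
        first, offset = read_new_lines(log_path, 0)
        with open(log_path, "a", encoding="utf-8", newline="\n") as log_file:
            log_file.write(" line done\n")
        second, offset = read_new_lines(log_path, offset)
        return first, second
```

A real Sidecar would call `read_new_lines` in a loop and forward each batch to remote storage. Because the offset is tracked in the Sidecar, the business container needs no changes.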

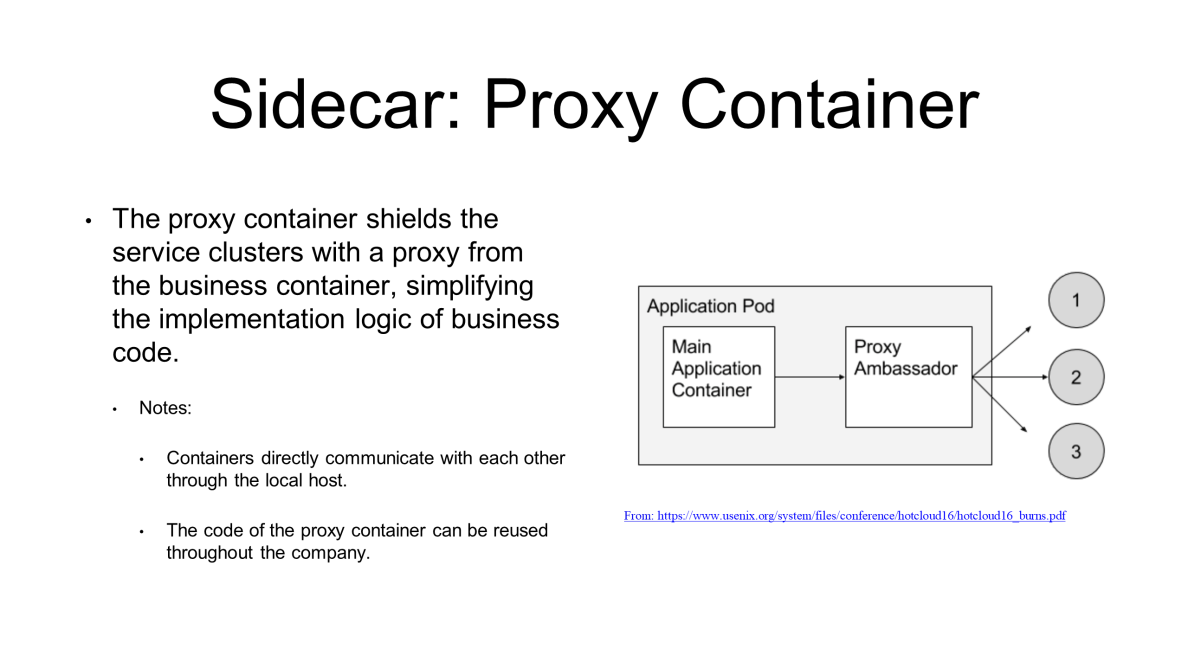

Sidecar also functions as a proxy container. What is a proxy container?

Assume a pod needs to access an external system or some external services, but the external system is a cluster. In this case, how can the whole cluster be accessed through a single IP address? One solution is to modify the code so that it records the addresses of the cluster members. Alternatively, use a decoupling method: the Sidecar proxy container.

For this method, develop a small proxy to connect to external service clusters, with only the IP address of the proxy exposed. In this case, the business container connects to the proxy, which then connects to the service clusters. Containers in a pod directly communicate with each other through the localhost without any performance degradation, as they share the same network namespace and network view.

Therefore, the proxy container does not compromise performance upon decoupling. More importantly, the code of this proxy container is reused throughout the company.
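A proxy of this kind can be very small. The Python sketch below keeps the list of backend addresses inside the proxy and forwards each request to the next backend in turn. The `RoundRobinProxy` class is hypothetical, and the round-robin selection is an assumption of this example, since the text does not prescribe how the proxy chooses a backend.

```python
import itertools
import socket


class RoundRobinProxy:
    """Hide a list of backend addresses behind one local entry point."""

    def __init__(self, backends):
        # Each backend is a (host, port) pair known only to the proxy.
        self._backends = itertools.cycle(list(backends))

    def next_backend(self):
        """Return the backend that should receive the next request."""
        return next(self._backends)

    def forward(self, payload):
        """Send the payload to the next backend and return its full reply."""
        host, port = self.next_backend()
        with socket.create_connection((host, port), timeout=5) as conn:
            conn.sendall(payload)
            conn.shutdown(socket.SHUT_WR)  # signal that the request is complete
            chunks = []
            while True:
                chunk = conn.recv(4096)
                if not chunk:
                    break
                chunks.append(chunk)
        return b"".join(chunks)
```

The business container would only ever talk to the proxy over localhost, so changes to the backend list stay inside the Sidecar.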

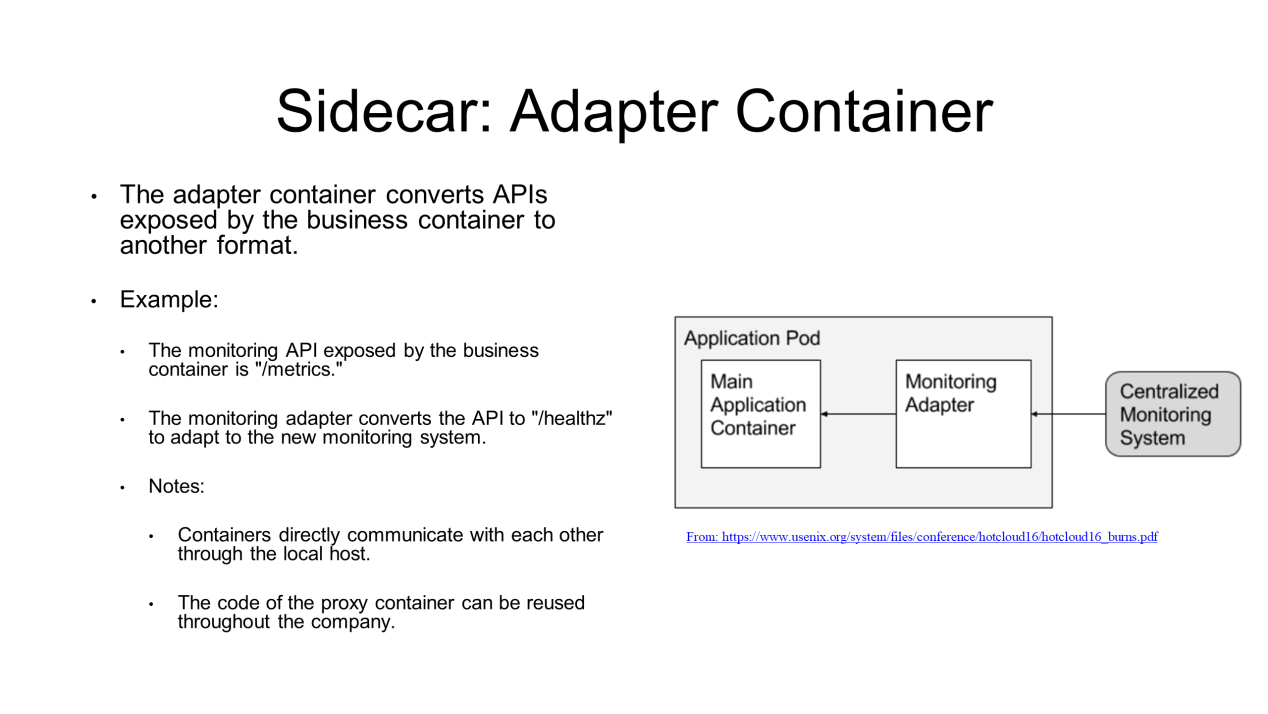

An adapter container is the third design mode of Sidecar.

For example, the business container exposes an API in format A, but an external system that needs to access the business container recognizes only API format B. One option is to modify the code of the business container. In practice, however, you can use an adapter to perform the conversion instead.

For example, the business container exposes its monitoring API at /metrics, and you can access the API through the /metrics URL of the container. However, the monitoring system has been upgraded and now accesses the /healthz URL. In this case, monitoring is unavailable because the monitoring system requests /healthz, whereas the business container exposes only /metrics. To address this, either modify the code or develop an adapter that forwards the requests destined for /healthz to /metrics. The adapter then exposes the /healthz URL to the external system, and the business container continues to work unchanged.

Here, the point is that containers in a pod directly communicate with each other through the localhost without any performance degradation. In addition, this adapter container might be reused throughout the company. These are all benefits of the design mode.
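The /healthz to /metrics adapter can be sketched with the Python standard library. In this sketch two local HTTP servers stand in for the business container and the adapter container. The handler names, the helper functions, and the sample metrics body are all made up for illustration.

```python
import http.client
import http.server
import threading


def fetch(port, path):
    """GET a path from a server on localhost and return (status, body)."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()


class BusinessHandler(http.server.BaseHTTPRequestHandler):
    """Stands in for the business container, which only serves /metrics."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = b"requests_total 42\n"  # made-up sample data
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example quiet


def make_adapter_handler(business_port):
    """Create a handler that answers /healthz by calling /metrics locally."""

    class AdapterHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            if self.path != "/healthz":
                self.send_error(404)
                return
            status, body = fetch(business_port, "/metrics")
            self.send_response(status)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, *args):
            pass

    return AdapterHandler


def start_server(handler):
    """Serve on a free localhost port in a background thread."""
    server = http.server.HTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

To try it, start the business server with `start_server(BusinessHandler)`, start the adapter with `start_server(make_adapter_handler(business.server_port))`, and request `/healthz` from the adapter's port with `fetch`. The business handler is never modified.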

To sum up:

Pods and container design modes are fundamentals of the Kubernetes system. When you review how pods are used in your company or team, you might find that the so-called "rich container" design is in use. However, this design is suitable only as an intermediate transition because it fosters many bad O&M habits. We strongly recommend gradually adopting the container design mode to decouple rich containers and split each of them into smaller pods. This is also an important part of Alibaba's full migration to the cloud.
