By Huang Ke (Zhitian), Senior R&D Engineer at Alibaba

Pod volumes are used in the following scenarios:

Now, let's take a look at the common types of pod volumes.

1) Local storage: for example, emptyDir and hostPath.

2) Network storage: it is implemented in either the in-tree or the out-of-tree mode. In the in-tree mode, the implementation code lives in the Kubernetes code repository. As Kubernetes supports more and more storage types, this mode makes Kubernetes increasingly hard to maintain and develop. In the out-of-tree mode, the drivers for different storage devices are decoupled from the Kubernetes code repository through abstract APIs. Therefore, we recommend the out-of-tree mode for implementing network storage plug-ins.

Next, it's important to understand the following volume concepts:

1) Projected Volumes: Configuration objects such as Secrets and ConfigMaps are mounted into a container as volumes, so that programs in the container can read them as files through the Portable Operating System Interface (POSIX).

2) Persistent Volumes (PVs) and Persistent Volume Claims (PVCs): This article focuses on these volumes in the following sections.

Why are PVs needed when pod volumes are already available? The lifecycle of a volume declared in a pod is the same as the lifecycle of the pod. The common scenarios are as follows:

In the preceding scenarios, it is difficult to express reuse and sharing semantics accurately through pod volumes, and it is also difficult to extend their functions. Therefore, Kubernetes introduces PVs. PVs separate storage from computing, manage storage resources and computing resources with different components, and decouple the volume lifecycle from the pod lifecycle. As a result, after a pod is deleted, its PV still exists and can be reused by new pods.

In practice, users consume PVs through PVCs. So why are PVCs needed if PVs are available? PVCs simplify storage usage for Kubernetes users and decouple their storage requirements from implementation details. Generally, when using storage, you only need to declare the required storage size and access mode.

The access mode indicates whether the storage is shared by multiple nodes (not pods) or used exclusively by one node (not one pod), and whether it is read-only or read-write. These are the only things users need to care about.

PVCs and PVs decouple requirements from implementation details. Users declare their storage requirements through PVCs, while PVs are managed in a unified manner by cluster administrators and storage teams, which simplifies storage usage. The relationship between PVCs and PVs resembles the relationship between an object-oriented interface and its implementation: callers do not need to know the implementation details and only focus on the interface.

The following section deals with how PVs are generated.

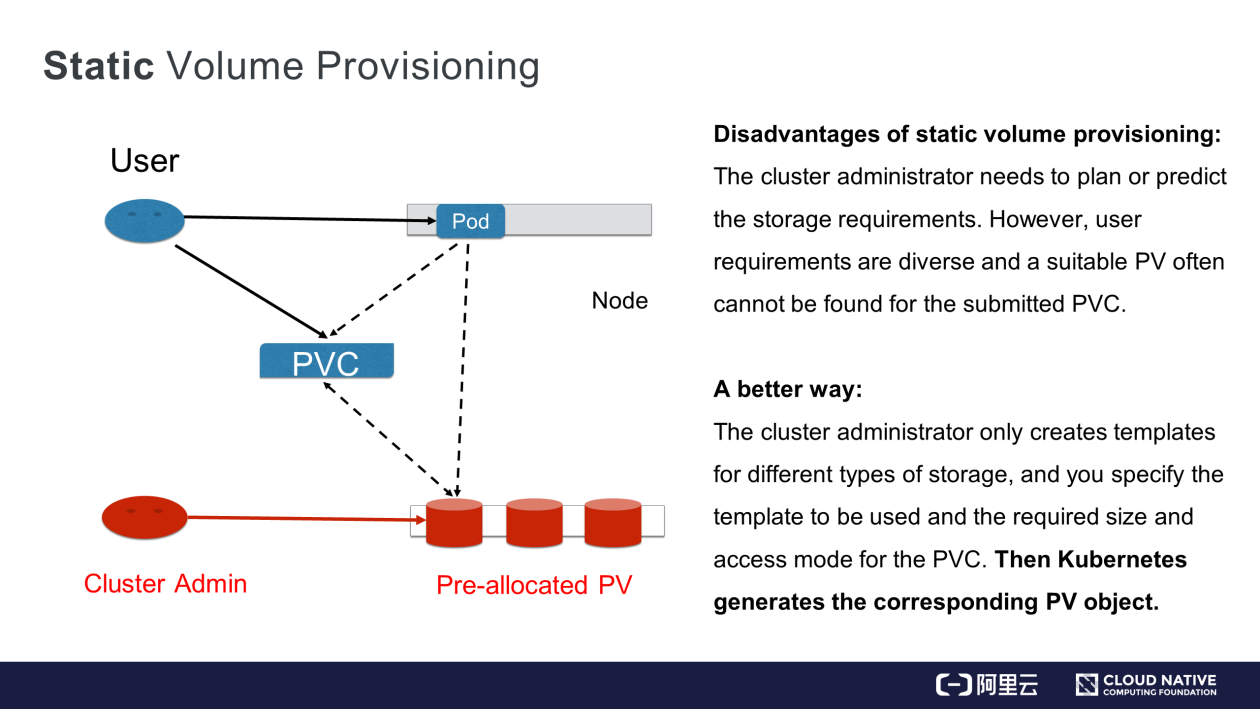

In static provisioning mode, the cluster administrator plans in advance how cluster users will use storage and pre-creates some PVs. After users submit their PVCs, Kubernetes components bind the PVCs to the PVs. When pods need storage, they locate the bound PVs through the PVCs and use them.

What are the shortcomings of static volume provisioning? The cluster administrator must pre-create PVs, but it is difficult to predict what users will actually need. For example, if a user requires a 20 GB volume but the cluster administrator has only pre-created 80 GB or 100 GB PVs, the binding either wastes most of the capacity or does not match the user's actual requirement.

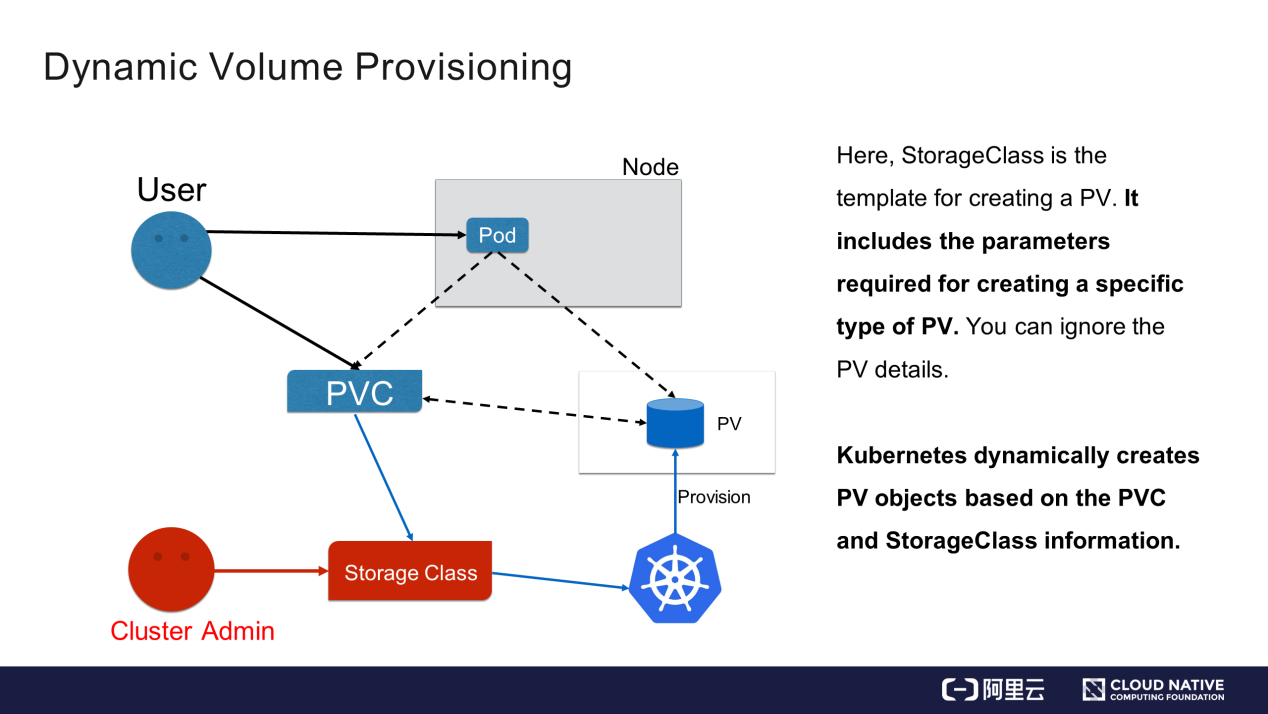

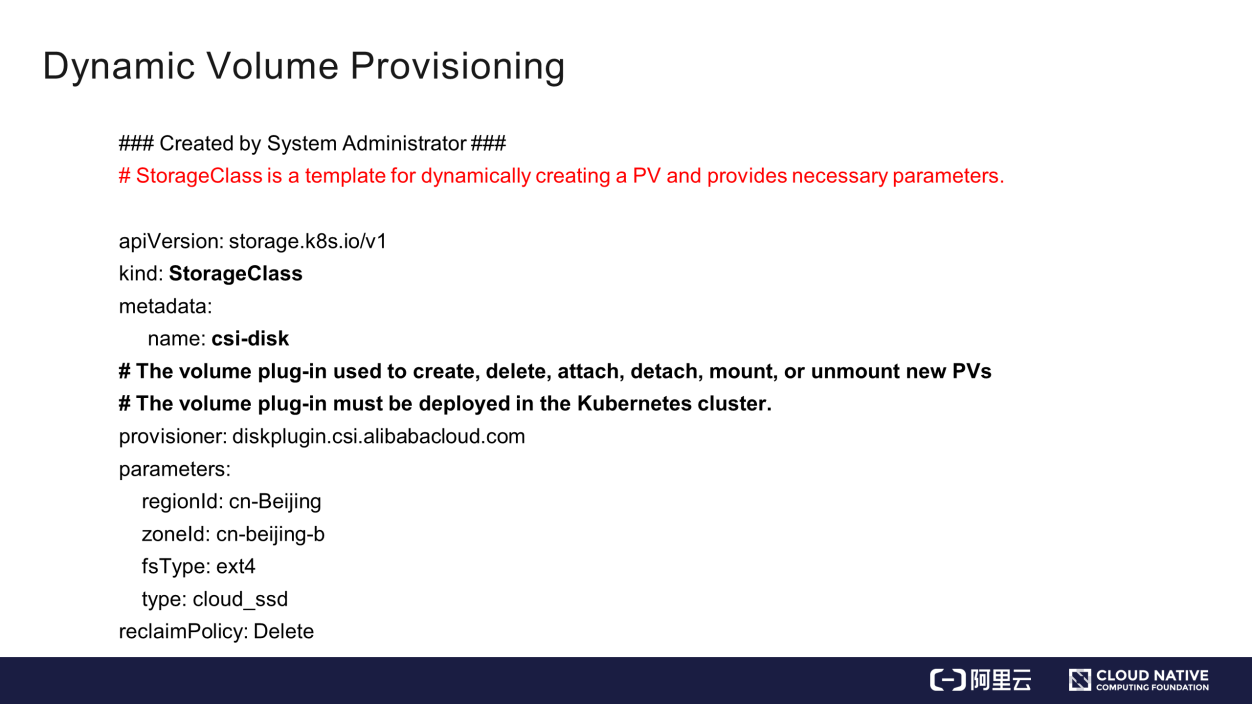

In dynamic provisioning mode, the cluster administrator does not pre-create PVs. Instead, the administrator creates a template that contains the parameters required by a certain type of storage device, such as Block Storage or NAS. These parameters relate to the storage implementation and are irrelevant to users. Users only need to submit their PVCs and specify the storage template (StorageClass) in them.

The control component in the Kubernetes cluster creates the storage (PVs) that users require based on the PVCs and the StorageClass they reference. After the PVCs and PVs are bound, pods can use the PVs. Because the control component uses the storage template (StorageClass) to create PVs dynamically from user requirements, storage is allocated on demand. This reduces the administrator's O&M work without making storage harder for users to consume.

This section describes how to use pod volumes, PVs, PVCs, and StorageClass.

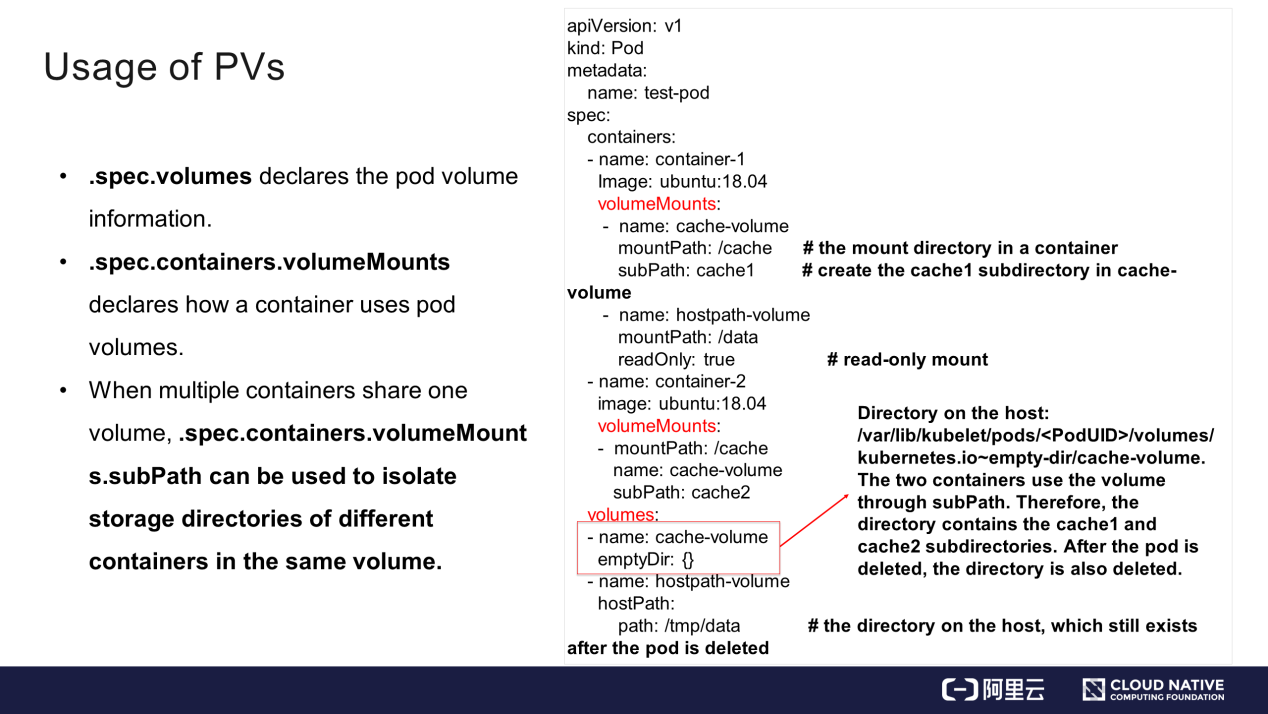

As shown on the left of the preceding figure, declare volume names and types in the "volumes" field of the pod's YAML file. One volume is of the emptyDir type and the other of the hostPath type; both are local volumes. Containers use the volumes through the "volumeMounts" field. The "name" in "volumeMounts" is the name of the volume to use, and "mountPath" is the mount directory inside the container.

There is also a "subPath" field.

Both containers use the same volume named cache-volume. When multiple containers share the same volume, use "subPath" to isolate data. This creates two subdirectories in the volume. The data written by container-1 to the cache is actually written to the cache1 subdirectory, and the data written by container-2 is actually written to the cache2 subdirectory.

The "readOnly" field disables writing data to the mount point.
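To make the fields above concrete, here is a minimal sketch of such a pod built as a Python dict and printed as JSON (Kubernetes accepts JSON manifests, so the output could be piped to `kubectl apply -f -`). The pod name, images, mount paths, and host path are hypothetical placeholders, not values taken from the figure.

```python
import json


def build_pod() -> dict:
    """Pod with an emptyDir shared via subPath and a read-only hostPath mount."""
    volumes = [
        # emptyDir: created with the pod and deleted together with it.
        {"name": "cache-volume", "emptyDir": {}},
        # hostPath: a directory on the node that outlives the pod (hypothetical path).
        {"name": "hostpath-volume",
         "hostPath": {"path": "/tmp/data", "type": "DirectoryOrCreate"}},
    ]

    def container(name: str, sub_path: str) -> dict:
        return {
            "name": name,
            "image": "busybox",
            "command": ["sleep", "3600"],
            "volumeMounts": [
                # Both containers share cache-volume; subPath gives each its own subdirectory.
                {"name": "cache-volume", "mountPath": "/cache", "subPath": sub_path},
                # readOnly blocks writes through this mount point.
                {"name": "hostpath-volume", "mountPath": "/data", "readOnly": True},
            ],
        }

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "volume-demo"},
        "spec": {
            "containers": [container("container-1", "cache1"),
                           container("container-2", "cache2")],
            "volumes": volumes,
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pod(), indent=2))
```

Writes from container-1 to /cache land in cache-volume/cache1, and writes from container-2 land in cache-volume/cache2.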

Now, what are the differences between emptyDir and hostPath? emptyDir is a temporary directory created when a pod is created. When the pod is deleted, the directory and its data are all deleted. hostPath is a directory on the host. After the pod is deleted, the directory and its data still exist.

Static PVs are created by the administrator. This article uses Network Attached Storage (NAS) as an example. Create a NAS file system in the Alibaba Cloud NAS console, and then add its information to a PV object. After the PV object is pre-created, declare your storage requirements with a PVC and then create pods. When creating a pod, mount the storage to a mount point in a container by using the fields described above.

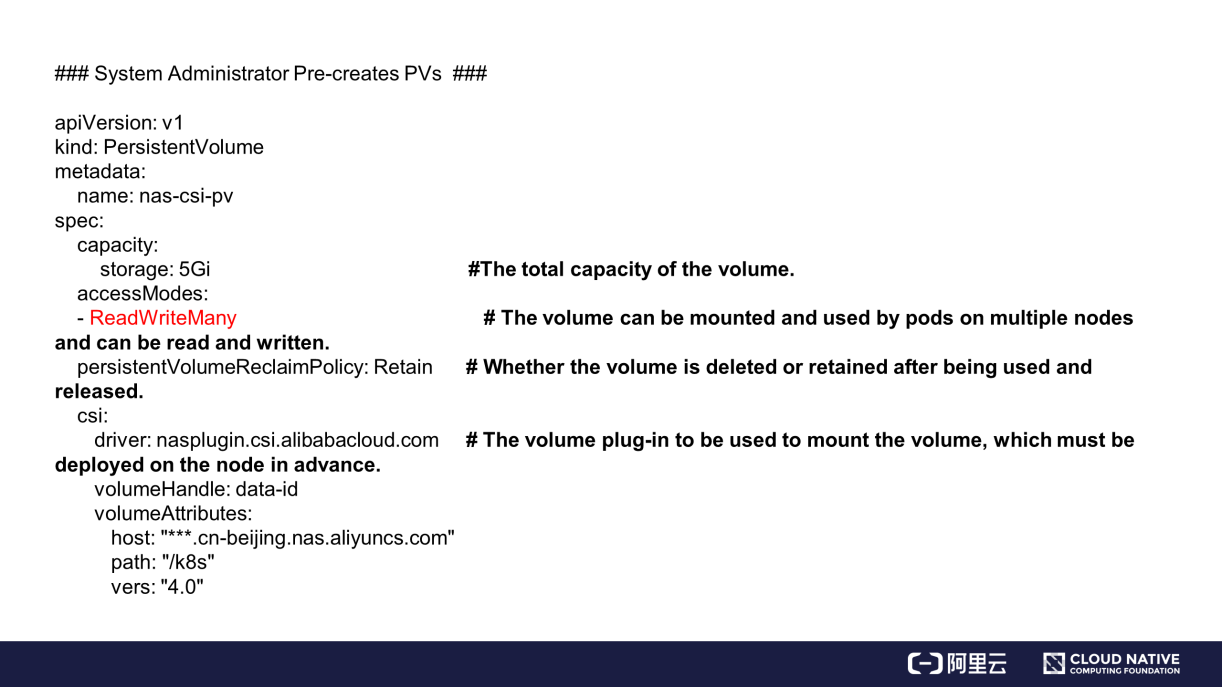

The cluster administrator first creates the storage on the cloud storage vendor side and then adds the related information to the PV object's YAML file.

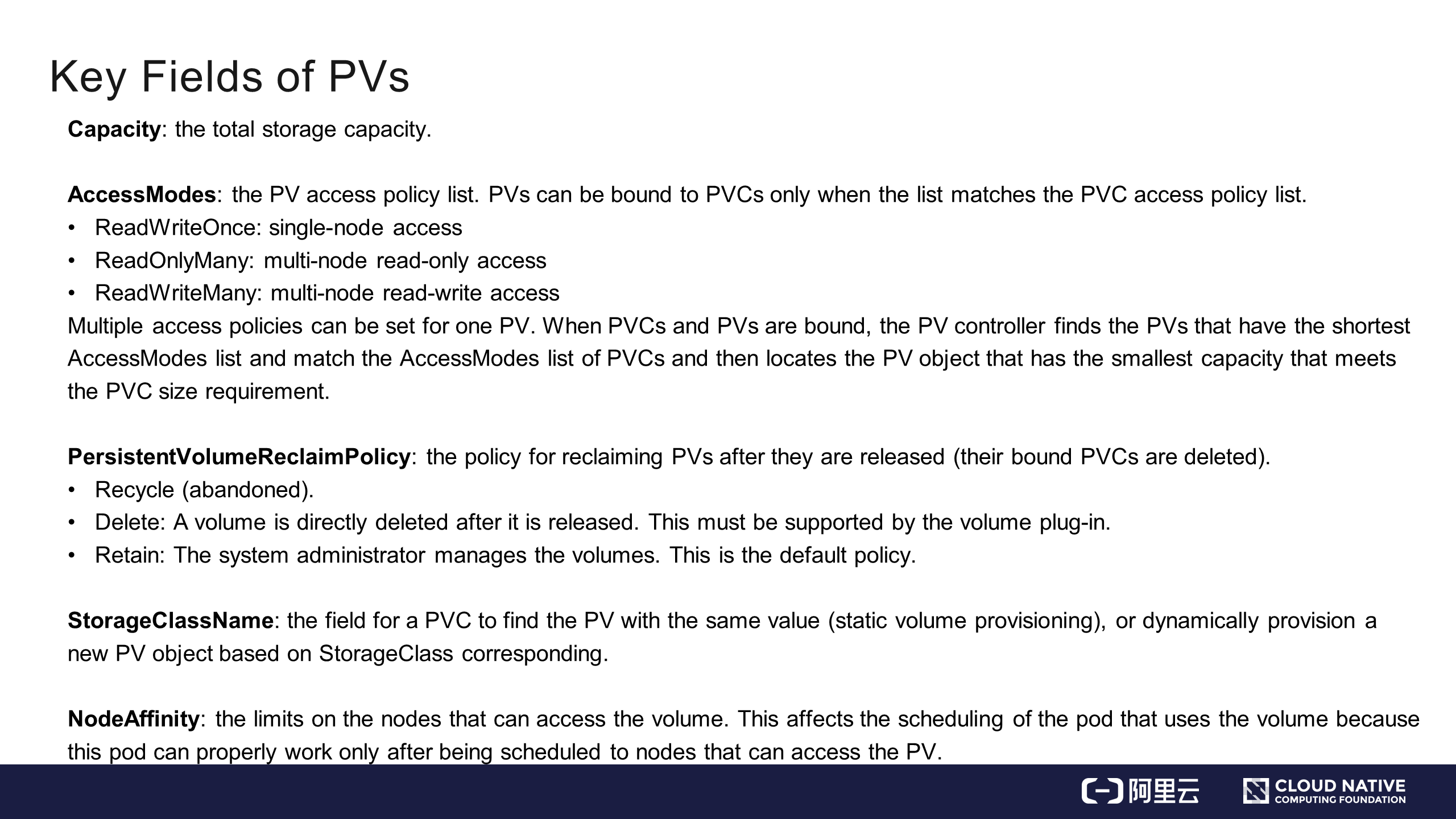

Note several important fields of the PV that represents the created NAS. The "capacity" field indicates the size of the created storage, and the "accessModes" field indicates its access mode.

The "persistentVolumeReclaimPolicy" field indicates the reclaim policy of the PV, that is, whether to delete or retain the PV after the pod using the PV and the PVC are deleted.

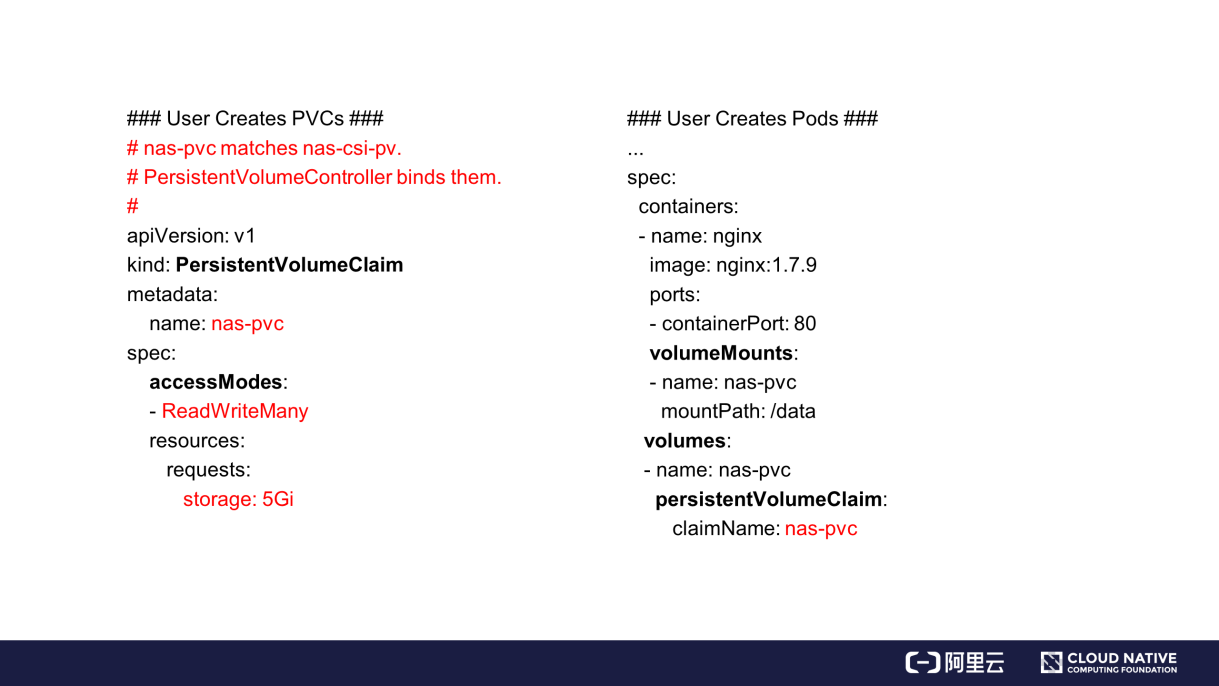

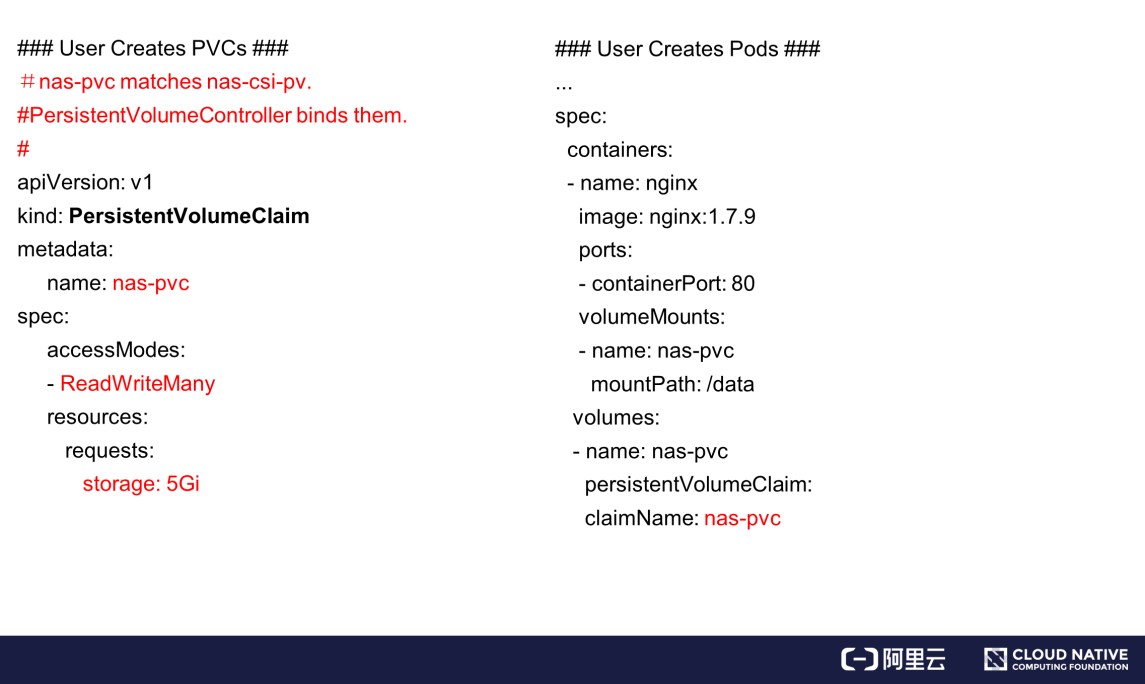

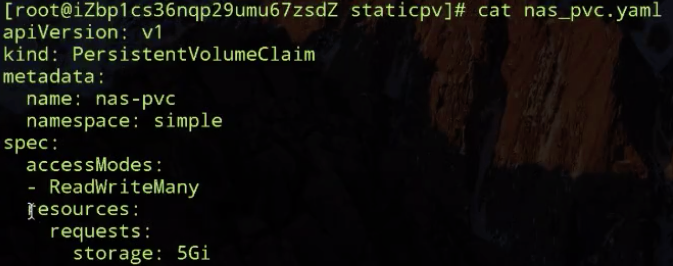

Before using storage, create a PVC object. It only specifies the storage requirements, not the implementation details of the storage. The requirements are resources.requests.storage (the required storage size) and "accessModes" (the access mode of the storage). Here the access mode is ReadWriteMany, meaning that multiple nodes can read and write the storage, which is a typical feature of NAS.

The size and access mode shown on the left of the preceding figure match those of the created static PV. Therefore, once the PVC is submitted, a Kubernetes component binds the PV and the PVC. Then, in the pod's YAML file, set claimName in the volume to declare the required PVC; the mount method is the same as described above. After the pod's YAML file is submitted, Kubernetes finds the bound PV through the PVC, and the storage can be used. This is how a PV is statically provisioned and used by a pod.
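A minimal sketch of the claim and the pod that consumes it, again as Python dicts. The 5Gi size mirrors the demo PV later in this article; the pod name, volume name, and image are hypothetical. Setting `storageClassName` to an empty string is standard Kubernetes behavior for opting out of the default StorageClass, which keeps the claim bound to a pre-created PV rather than triggering dynamic provisioning.

```python
import json


def build_static_pvc(name: str = "nas-pvc", size: str = "5Gi") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            # "" means "no class": only PVs without a storageClassName can be bound.
            "storageClassName": "",
            "resources": {"requests": {"storage": size}},
        },
    }


def build_pod_using_claim(claim_name: str, mount_path: str = "/data") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "nas-pod"},  # hypothetical name
        "spec": {
            "containers": [{
                "name": "nas-container",
                "image": "nginx",
                "volumeMounts": [{"name": "nas-volume", "mountPath": mount_path}],
            }],
            "volumes": [{
                "name": "nas-volume",
                # The pod names only the claim; Kubernetes resolves the bound PV.
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


pvc = build_static_pvc()
print(json.dumps([pvc, build_pod_using_claim(pvc["metadata"]["name"])], indent=2))
```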

In dynamic provisioning, the system administrator creates a template file instead of pre-allocating PVs.

The template is the StorageClass, which specifies some important information, such as the provisioner. The provisioner specifies the storage plug-in used to create a PV and its underlying storage.

The StorageClass also carries parameters, such as regionId, zoneId, fsType, and type, that must be specified when Kubernetes creates the storage. They are specific to the storage plug-in and can be ignored here. The reclaimPolicy parameter indicates how a dynamically created PV is handled after its pod and PVC are deleted. The value "Delete" means the PV is deleted when the pod and PVC are deleted.
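A minimal sketch of such a StorageClass as a Python dict. The name csi-disk and the Delete policy come from this article; the provisioner string `diskplugin.csi.alibabacloud.com` and all parameter values are assumptions for illustration only, so check the actual plug-in's documentation before using them.

```python
def build_disk_storage_class(region_id: str, zone_id: str) -> dict:
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": "csi-disk"},
        # Assumed driver name of the Alibaba Cloud disk CSI plug-in.
        "provisioner": "diskplugin.csi.alibabacloud.com",
        # Plug-in specific parameters; values must be strings. Placeholders only.
        "parameters": {
            "regionId": region_id,
            "zoneId": zone_id,
            "fsType": "ext4",
            "type": "cloud_ssd",
        },
        # Delete: dynamically created PVs are removed together with their PVCs.
        "reclaimPolicy": "Delete",
    }
```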

After the cluster administrator submits the StorageClass (the PV creation template), users create a PVC.

The storage size and access mode in the PVC remain unchanged. In addition, the storageClassName field specifies the name of the template used to create the dynamic PV. Here, storageClassName is set to csi-disk.

After the PVC is submitted, a Kubernetes component dynamically creates a PV based on the PVC and its StorageClass, and then binds the PV to the PVC. After the pod's YAML file is submitted, Kubernetes finds the dynamic PV through the PVC and mounts it to the corresponding container.

The following access modes are available:

1) ReadWriteOnce: Single-node read-write mode

2) ReadOnlyMany: Multi-node read-only mode, which is a common data sharing mode

3) ReadWriteMany: Multi-node read-write mode
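Because access modes are defined per node rather than per pod, a minimal sketch of the check could look like the function below. This is a simplified model: the function name is hypothetical, and real enforcement also depends on the storage plug-in.

```python
from collections.abc import Iterable


def mount_allowed(access_mode: str, nodes: Iterable[str], wants_write: bool) -> bool:
    """Can pods on `nodes` use a volume with `access_mode`? (simplified model)"""
    distinct_nodes = set(nodes)
    if access_mode == "ReadWriteOnce":
        # Several pods may share it, but only if they all run on one node.
        return len(distinct_nodes) <= 1
    if access_mode == "ReadOnlyMany":
        # Any number of nodes, but only for reading.
        return not wants_write
    if access_mode == "ReadWriteMany":
        return True
    raise ValueError(f"unknown access mode: {access_mode}")
```

For example, two pods on node-a can both write to a ReadWriteOnce disk, but one pod on node-a and one on node-b cannot.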

The Capacity and AccessModes fields are the most important fields in a submitted PVC. After the PVC is submitted, the component in the Kubernetes cluster finds a proper PV as follows:

The component first uses the AccessModes index built for the PVs to list all PVs whose access modes satisfy the PVC's AccessModes field, and then filters them by the PVC's Capacity, StorageClassName, and Label Selector values. If multiple PVs meet the conditions, it chooses the PV with the smallest capacity and the shortest access mode list.

The persistentVolumeReclaimPolicy field supports the following values:

1) Recycle: Deprecated.

2) Delete: The PV is deleted when the PVC is deleted.

3) Retain: The PV is retained after the PVC is deleted and must then be handled manually by the system administrator.

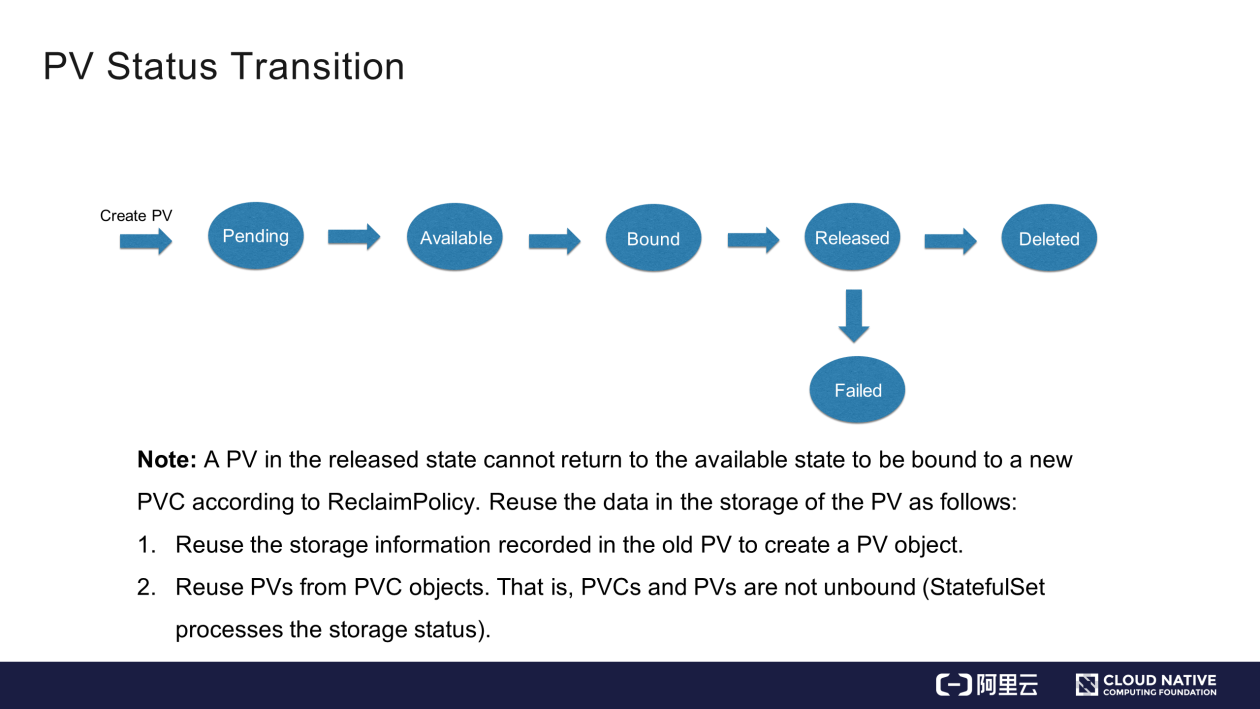

After a PV object is created, it is briefly in the pending state. Once the underlying storage is ready, the PV enters the available state.

After the PVC is submitted, the relevant Kubernetes component binds the PV to the PVC, and both enter the bound state. When the PVC is no longer needed and is deleted, the PV enters the released state. The persistentVolumeReclaimPolicy field then determines whether the PV is deleted or retained.

When the PV is in the released state, it cannot be directly returned to the available state, which means it cannot be bound to a new PVC. To reuse a released PV, use one of the following methods.
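The lifecycle described above can be sketched as a small state machine. This is a simplified model of the phases named in this article: the Failed phase, Recycle, and manual edits are left out, and DELETED is not a real Kubernetes phase but only marks that the PV object is gone. The event names are hypothetical.

```python
from enum import Enum


class Phase(Enum):
    PENDING = "Pending"
    AVAILABLE = "Available"
    BOUND = "Bound"
    RELEASED = "Released"
    DELETED = "(deleted)"  # not a real phase: the PV object no longer exists


TRANSITIONS = {
    (Phase.PENDING, "provisioned"): Phase.AVAILABLE,
    (Phase.AVAILABLE, "bind"): Phase.BOUND,
    (Phase.BOUND, "claim_deleted"): Phase.RELEASED,
}


def next_phase(phase: Phase, event: str, reclaim_policy: str = "Retain") -> Phase:
    if phase is Phase.RELEASED and event == "reclaim":
        # Delete removes the PV; Retain leaves it Released for the administrator.
        return Phase.DELETED if reclaim_policy == "Delete" else Phase.RELEASED
    try:
        return TRANSITIONS[(phase, event)]
    except KeyError:
        # e.g. a Released PV cannot simply be bound to a new PVC.
        raise ValueError(f"no transition from {phase.value} on {event!r}") from None
```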

Method 1: Create a PV object, add the field information of the released PV to it, and then bind the PV to a new PVC.

Method 2: Delete the pod but retain the PVC object. In this case, the PVC bound to the PV still exists, and a pod directly reuses the PV based on the PVC. This is the volume-based pod migration managed by StatefulSet in Kubernetes.
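Method 1 can be sketched as a function that derives a fresh PV object from a released one. It copies the storage details and drops the stale claimRef, the status, and the server-managed metadata. This is an illustrative helper, not a Kubernetes API. It assumes the old PV object is deleted first; with the Retain policy, the underlying storage survives that deletion.

```python
import copy


def pv_for_reuse(released_pv: dict, new_name: str) -> dict:
    """Build a new PV manifest that points at the same storage as a released PV."""
    pv = copy.deepcopy(released_pv)  # leave the original dict untouched
    meta = pv["metadata"]
    for key in ("uid", "resourceVersion", "creationTimestamp", "managedFields"):
        meta.pop(key, None)  # assigned by the API server, not by users
    meta["name"] = new_name
    pv["spec"].pop("claimRef", None)  # stale reference to the deleted PVC
    pv.pop("status", None)            # status is reported by the cluster
    return pv
```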

Next, let's see how to perform static volume provisioning and dynamic volume provisioning in the actual environment.

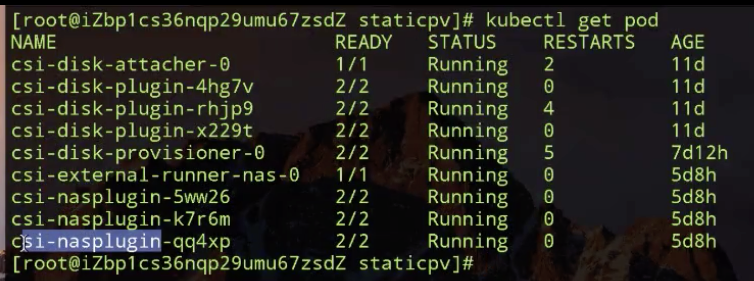

Alibaba Cloud NAS is used for static volume provisioning, while Alibaba Cloud disks are used for dynamic volume provisioning. The required storage plug-ins have been deployed in the Kubernetes cluster, including csi-nasplugin for Alibaba Cloud NAS and csi-disk for Alibaba Cloud disks.

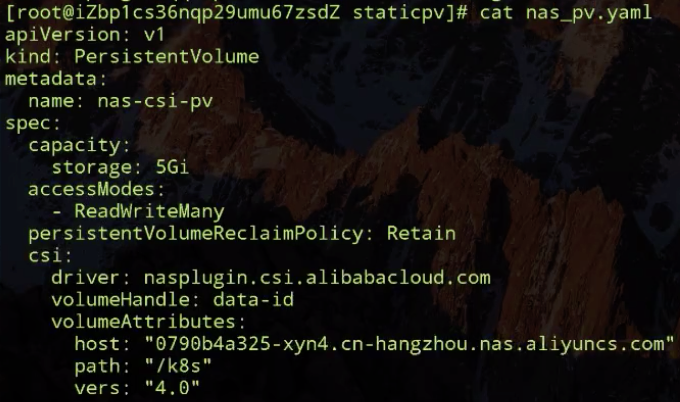

The following figure shows the YAML file of a statically provisioned PV.

volumeAttributes contains information about the NAS file system pre-created in the Alibaba Cloud NAS console. The storage field under capacity is set to 5Gi, accessModes is set to ReadWriteMany, persistentVolumeReclaimPolicy is set to Retain, and the driver field specifies the storage plug-in used by the volume.
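A minimal sketch of this statically provisioned PV as a Python dict. Capacity, access mode, and reclaim policy follow the description above. The PV name, the driver string `nasplugin.csi.alibabacloud.com`, the volumeHandle value, and the `server`/`path` attribute keys are assumptions for illustration; the actual values are in the figure and the plug-in's documentation.

```python
def build_nas_pv(server: str, path: str = "/") -> dict:
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": "nas-csi-pv"},  # hypothetical name
        "spec": {
            "capacity": {"storage": "5Gi"},
            "accessModes": ["ReadWriteMany"],
            # Retain: the PV and its data survive deletion of the PVC.
            "persistentVolumeReclaimPolicy": "Retain",
            "csi": {
                "driver": "nasplugin.csi.alibabacloud.com",  # assumed driver name
                "volumeHandle": "nas-csi-pv",                 # must be unique per volume
                # Hypothetical keys describing the pre-created NAS file system.
                "volumeAttributes": {"server": server, "path": path},
            },
        },
    }
```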

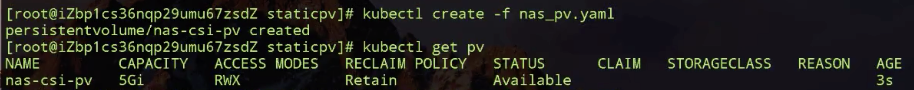

Now, create the PV.

As shown in the preceding figure, the PV is in the available state.

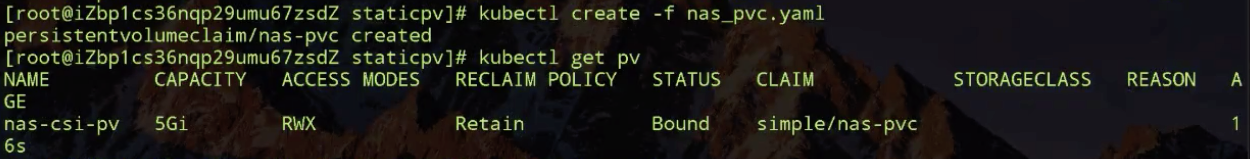

Next, create nas-pvc.

Now, the PVC has been created and bound to the created PV.

The YAML file of the PVC records the required storage size and access modes. After the PVC is submitted, it is bound to a matching PV in the cluster.

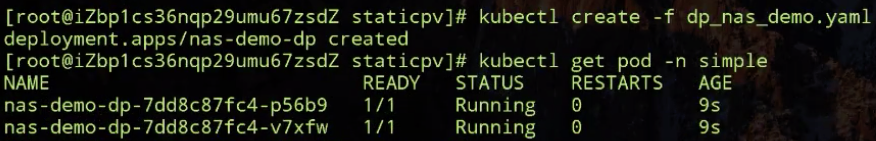

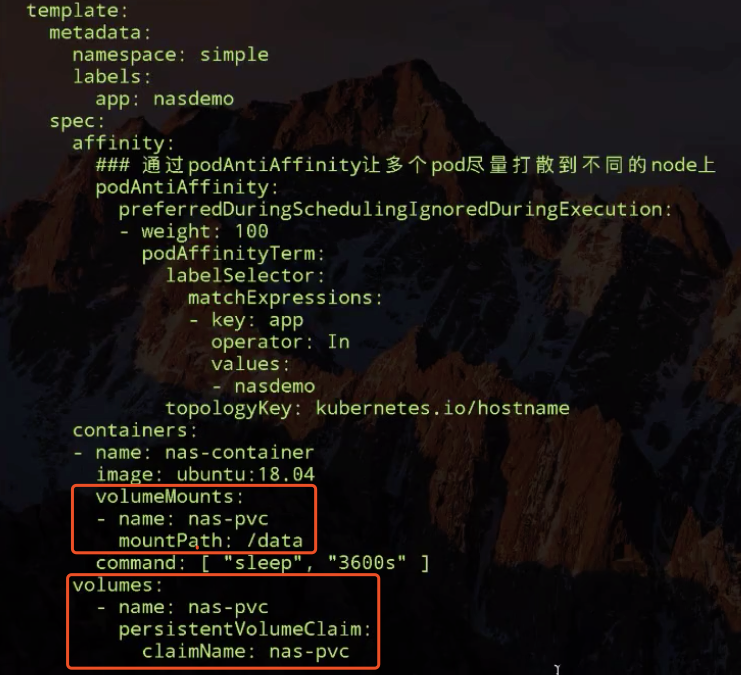

Then, create the pod that uses nas-fs.

As shown in the preceding figure, the two pods are in the running state.

The pod's YAML file declares the created PVC, which is mounted to the /data directory of the nas-container container. The Deployment creates two pod replicas, which are scheduled to different nodes through pod anti-affinity.
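A minimal sketch of such a Deployment as a Python dict: two replicas, required pod anti-affinity on the node hostname so that the replicas land on different nodes, and the PVC mounted at /data in nas-container. The Deployment name, label, and image are hypothetical.

```python
def build_nas_deployment(claim_name: str) -> dict:
    labels = {"app": "nas-test"}  # hypothetical label shared by the replicas
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "nas-test"},
        "spec": {
            "replicas": 2,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "affinity": {
                        "podAntiAffinity": {
                            "requiredDuringSchedulingIgnoredDuringExecution": [{
                                "labelSelector": {"matchLabels": labels},
                                # No two replicas on the same node.
                                "topologyKey": "kubernetes.io/hostname",
                            }],
                        },
                    },
                    "containers": [{
                        "name": "nas-container",
                        "image": "nginx",
                        "volumeMounts": [{"name": "nas-volume", "mountPath": "/data"}],
                    }],
                    "volumes": [{
                        "name": "nas-volume",
                        "persistentVolumeClaim": {"claimName": claim_name},
                    }],
                },
            },
        },
    }
```

Because the claim is ReadWriteMany, both replicas can mount it even though they run on different nodes.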

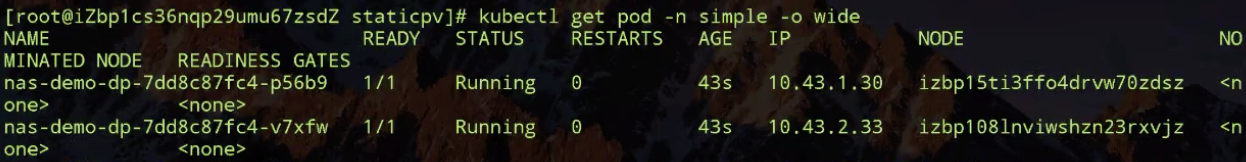

The preceding figure shows that the two pods are located on different hosts.

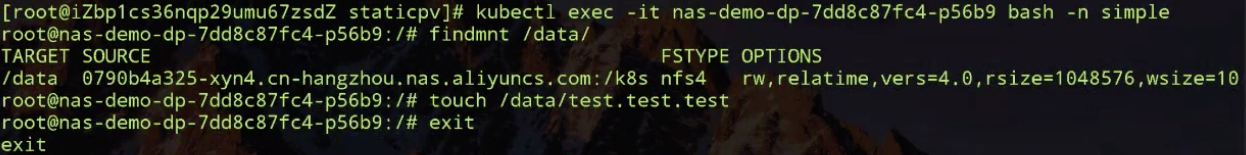

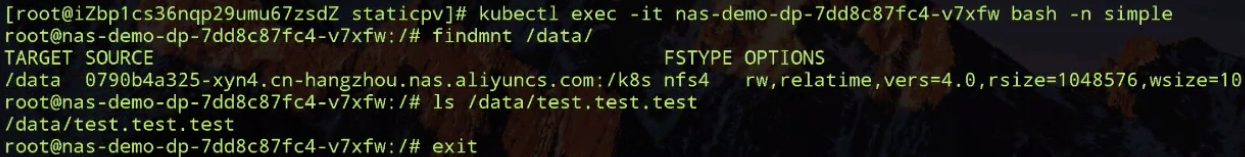

As shown in the following figure, log on to the first container and run the findmnt command to view its mount information. It is mounted to the declared nas-fs. Then, create a file named test.test.test with the touch command. Next, log on to the other container and check whether the file is shared.

Exit and then log on to the first container again.

As shown in the following figure, the findmnt output shows that the two pods mount the same remote directory, which means they use the same NAS PV. The test file is visible in both pods, indicating that the two pods running on different nodes share the NAS.

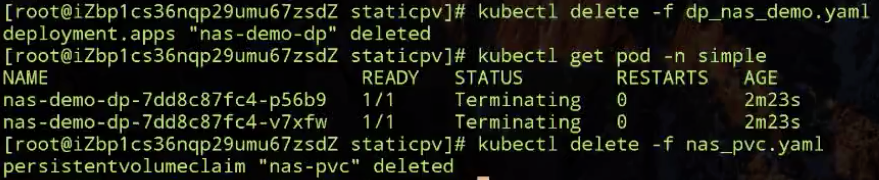

Delete the pods first and then the corresponding PVC, because a PVC that is still in use by a pod cannot be deleted.

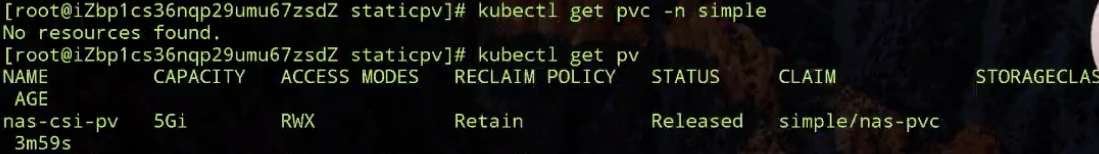

As shown in the following figure, the PVC is deleted.

The NAS PV still exists in the released state, indicating that the PVC that uses it has been deleted and it is released. The PV is retained because RECLAIM POLICY is set to Retain.

Manually delete the retained PV. Now there is no PV left in the cluster.

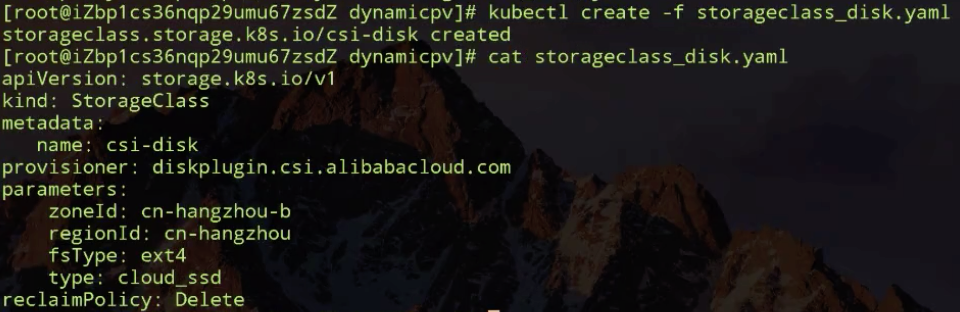

Create the StorageClass template file to generate PVs and view the content in the file.

As shown in the preceding figure, the file specifies the volume plug-in used to create the storage (the Alibaba Cloud disk plug-in developed by the Alibaba Cloud team). The parameters section lists the parameters required for creating the storage and can be ignored here. The reclaimPolicy parameter is set to Delete, indicating that a PV created from this StorageClass will be deleted after its bound PVC is deleted.

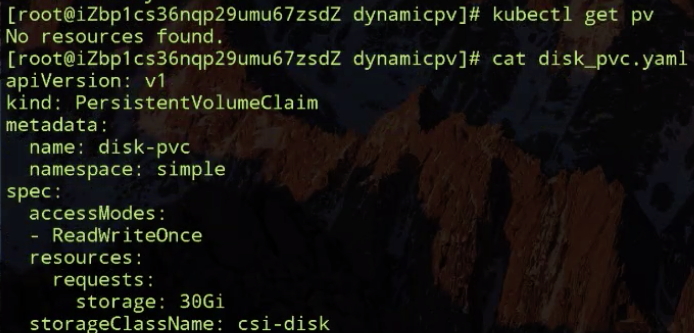

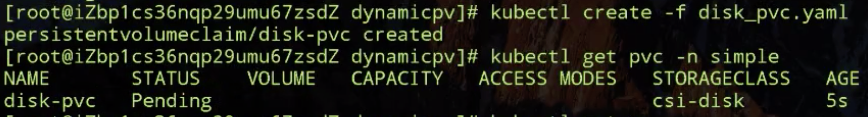

As shown in the preceding figure, the cluster does not contain any PV. Submit a PVC file. In the PVC file, accessModes is ReadWriteOnce (Alibaba Cloud disks only support the single-node read-write mode), storage is 30Gi, and StorageClassName is csi-disk, which is the StorageClass we just created.

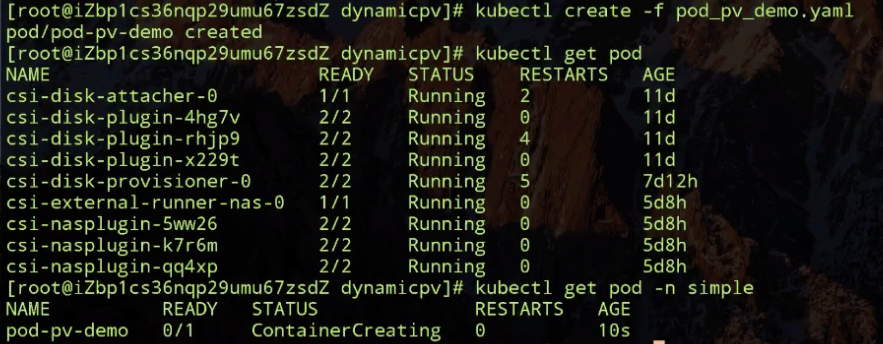

The PVC is in the pending state, indicating that its corresponding PV is being created.

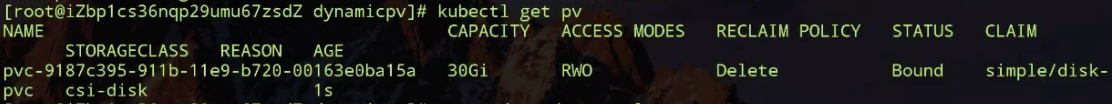

After a while, a new PV appears. This PV is dynamically created based on the submitted PVC and the StorageClass specified in it. Kubernetes then binds the new PV to the submitted PVC (disk-pvc). Now, use it by creating a pod.

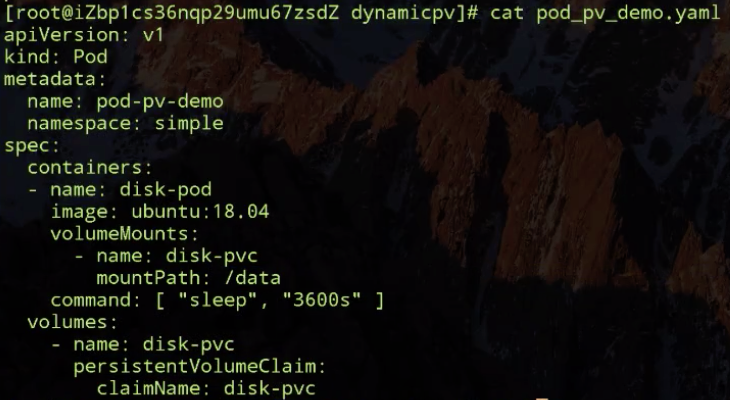

The YAML of the pod declares the used PVC. Next, create a mount point.

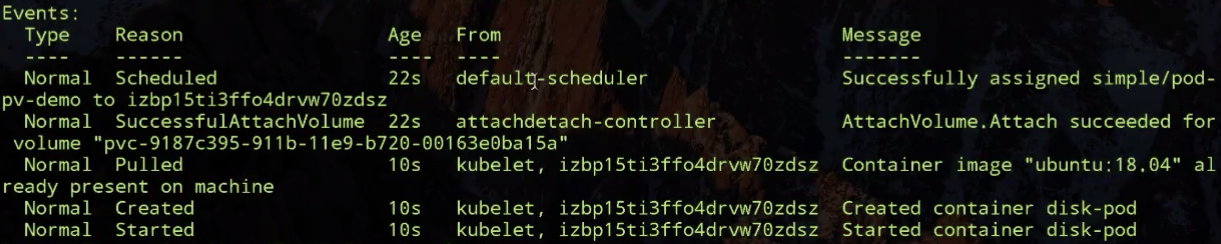

The following figure shows the events. The pod is scheduled to a node, and then the attachdetach controller attaches the disk; that is, it attaches the disk backing the PV to the node selected by the scheduler. Then, the container in the pod starts and uses the disk.

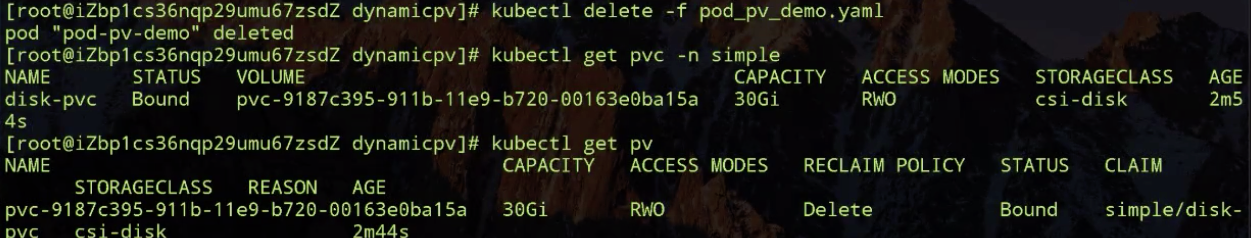

At this point, both the PVC and its corresponding PV exist.

Now, delete the PVC. The PV is deleted as well, because its reclaimPolicy is Delete.

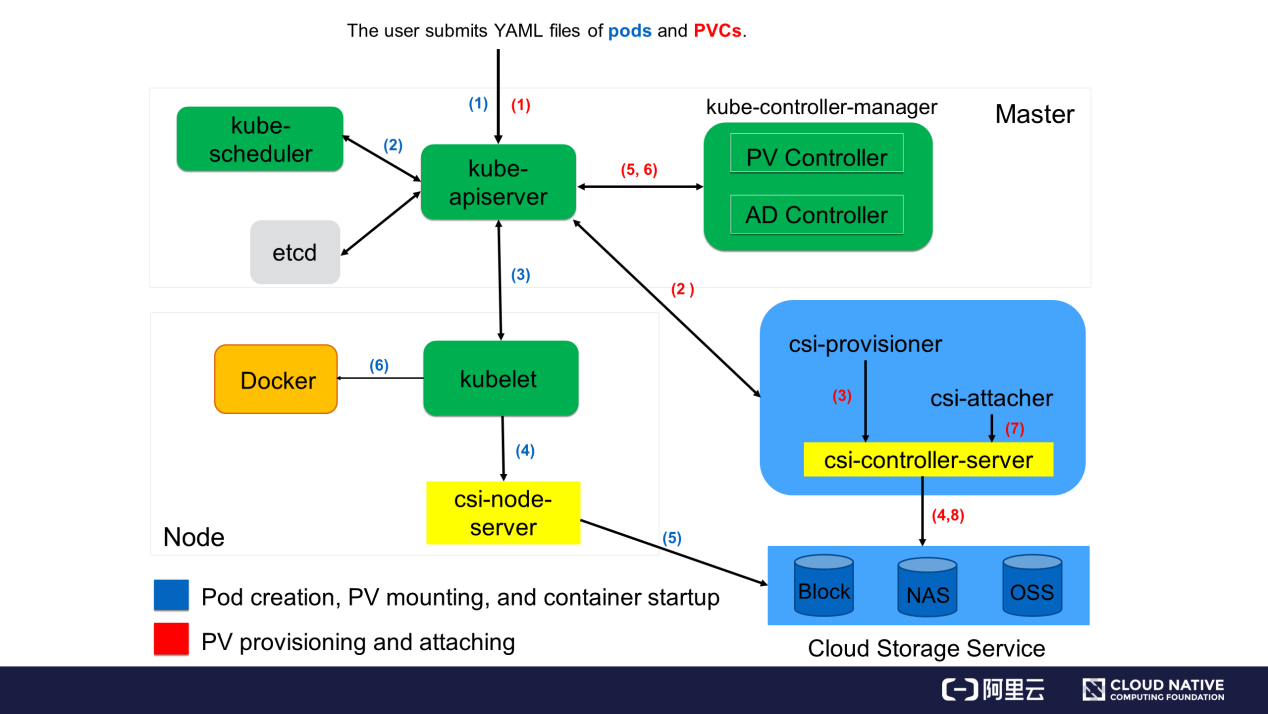

Let's look at the container storage interface (CSI) in the lower-right part of the following figure.

The Kubernetes community recommends CSI for the out-of-tree mode. The CSI implementation is divided into two parts, csi-controller-server and csi-node-server in the figure. Together they connect to the OpenAPI of the cloud storage vendor to implement storage operations such as create, delete, mount, and unmount.

When the YAML file of a PVC is submitted, a PVC object is generated in the cluster and watched by the csi-provisioner controller. Based on the PVC object and the StorageClass it declares, csi-provisioner calls csi-controller-server through gRPC to create the real storage in the cloud storage service and then creates a PV object. Finally, the PV controller in the cluster binds the PVC and the PV, after which the PV can be used.

After a pod is submitted, the scheduler selects a suitable node for it. During pod creation, the kubelet on that node mounts the created PV to a directory available to the pod through csi-node-server, and then creates and starts all containers in the pod.
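The provisioning step can be sketched as a function that turns a PVC and its StorageClass into a PV. It is a simplified model of what an external provisioner such as csi-provisioner does. `create_volume` is a hypothetical callable standing in for the CreateVolume gRPC call to csi-controller-server. The `pvc-<uid>` naming follows the common default of the external provisioner, and the claimRef pre-binds the new PV so the PV controller can complete the binding.

```python
from typing import Callable


def provision(pvc: dict, storage_class: dict,
              create_volume: Callable[[str, str, dict], str]) -> dict:
    pv_name = "pvc-" + pvc["metadata"]["uid"]
    size = pvc["spec"]["resources"]["requests"]["storage"]
    # Stand-in for CreateVolume: creates the real storage, returns its ID.
    volume_id = create_volume(pv_name, size, storage_class.get("parameters", {}))
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": list(pvc["spec"]["accessModes"]),
            # StorageClass reclaimPolicy defaults to Delete when unset.
            "persistentVolumeReclaimPolicy": storage_class.get("reclaimPolicy", "Delete"),
            "storageClassName": storage_class["metadata"]["name"],
            # Pre-bind to the claim that triggered provisioning.
            "claimRef": {
                "namespace": pvc["metadata"].get("namespace", "default"),
                "name": pvc["metadata"]["name"],
                "uid": pvc["metadata"]["uid"],
            },
            "csi": {"driver": storage_class["provisioner"], "volumeHandle": volume_id},
        },
    }
```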

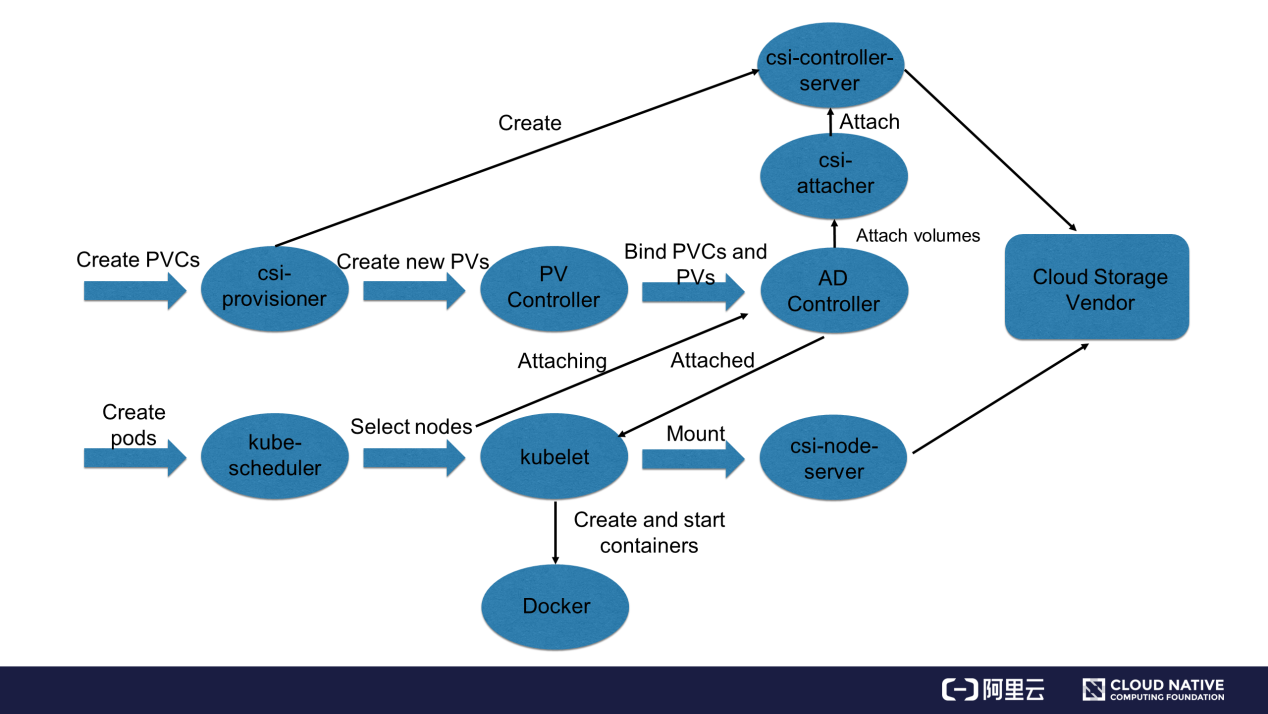

The following figure shows the PVs, PVCs, and how to use storage through CSI.

The entire process is divided into three stages: Create, Attach, and Mount. In the Attach stage, csi-controller-server performs the real attach operation through the OpenAPI of the cloud storage vendor, attaching the storage to the node on which the pod will run.

In summary, in the Create stage, storage is created. In the Attach stage, the storage is attached to a node (usually appearing under /dev on the node). In the Mount stage, the storage is mounted to a directory accessible to the pod.
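The ordering constraint between the three stages can be sketched as follows. The component descriptions summarize this article (the attachdetach controller appears in the disk demo events). The class and mapping are hypothetical illustrations, not part of any Kubernetes API.

```python
RESPONSIBLE = {
    "Create": "csi-provisioner calls csi-controller-server to create the storage",
    "Attach": "attachdetach controller has csi-controller-server attach it to the node",
    "Mount": "kubelet calls csi-node-server to mount it into the pod's directory",
}


class VolumeLifecycle:
    ORDER = ("Create", "Attach", "Mount")

    def __init__(self) -> None:
        self.completed: list[str] = []

    def advance(self, stage: str) -> str:
        """Run the next stage; each stage requires the previous one."""
        if len(self.completed) == len(self.ORDER):
            raise RuntimeError("all stages already completed")
        expected = self.ORDER[len(self.completed)]
        if stage != expected:
            raise RuntimeError(f"cannot run {stage} before {expected}")
        self.completed.append(stage)
        return RESPONSIBLE[stage]
```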

This article describes the application scenarios and limitations of Kubernetes volumes and introduces the PV and PVC system, which enhances Kubernetes volumes in scenarios such as multi-pod sharing, migration, and storage expansion. It then explains the two PV provisioning modes (static and dynamic) and how each mode provisions the storage that pods in a cluster require. Finally, it describes the complete processing procedure of PVCs and PVs in Kubernetes to help users understand how they work.
