First of all, let's define Kubernetes. This section will explain Kubernetes from four different perspectives.

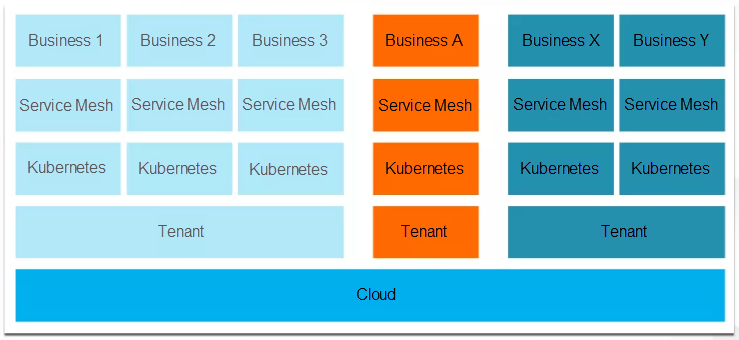

The preceding figure shows the backend IT infrastructure architecture that we expect most companies to adopt. We believe that all companies will eventually deploy their IT infrastructure on the cloud. Kubernetes allows you to divide the underlying cloud resources into clusters dedicated to different businesses. As microservice architectures become the norm, the service governance logic provided by service mesh will follow the two layers beneath it and become part of the infrastructure.

Currently, almost all Alibaba businesses run on the cloud, and almost half of them have been migrated to custom Kubernetes clusters. As I understand it, Alibaba plans to deploy all of its businesses on Kubernetes clusters this year.

In some Alibaba divisions, such as Ant Financial, service mesh is already used by online businesses.

Although you may think I am exaggerating, the trend toward Kubernetes is very obvious to me. In the next few years, Kubernetes will be as popular as Linux and serve as the operating system for clusters everywhere.

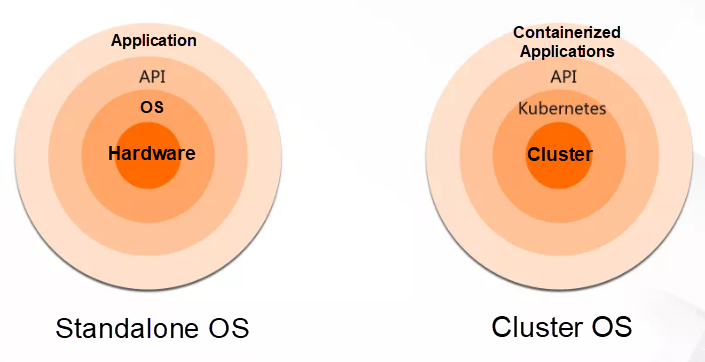

The preceding figure compares a traditional operating system with Kubernetes. Traditional operating systems, like Linux or Windows, serve to abstract underlying hardware. They are designed to manage computer hardware resources beneath them, such as the memory and CPU. Then, they abstract the underlying hardware resources into easy-to-use interfaces to provide support for the application layer above them.

Similarly, we can see Kubernetes as an operating system. In simple terms, an operating system is an abstraction layer. In Kubernetes' case, the managed lower-layer hardware is not memory or CPU resources, but a cluster composed of multiple computers. These computers are ordinary standalone systems with their own operating systems and hardware. Kubernetes manages these computers as a resource pool to provide support for upper-layer applications.
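To make the resource pool idea concrete, here is a minimal Python sketch. It is not Kubernetes code: the `Node` class, the `pool_capacity` function, and the node names and sizes are all hypothetical, chosen only to show how several standalone machines can be presented to applications as one pool of CPU and memory.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """One standalone computer in the cluster (hypothetical model)."""
    name: str
    cpu_cores: int
    memory_gib: int


def pool_capacity(nodes):
    """Add up per-node capacity into a single cluster-wide view."""
    return {
        "cpu_cores": sum(n.cpu_cores for n in nodes),
        "memory_gib": sum(n.memory_gib for n in nodes),
    }


# Three made-up machines, each with its own hardware.
nodes = [Node("node-a", 4, 16), Node("node-b", 8, 32), Node("node-c", 4, 16)]
capacity = pool_capacity(nodes)
print(capacity)
```

An application that asks for resources sees only the pooled totals, much as a process on Linux asks for memory without caring which physical memory module provides it.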

The applications that run on Kubernetes are containerized applications. If you are not familiar with containers, you can think of a container as an application installer that packages all the dependencies the application requires, such as libc files. As a result, applications on Kubernetes do not depend on the library files of the underlying operating system.

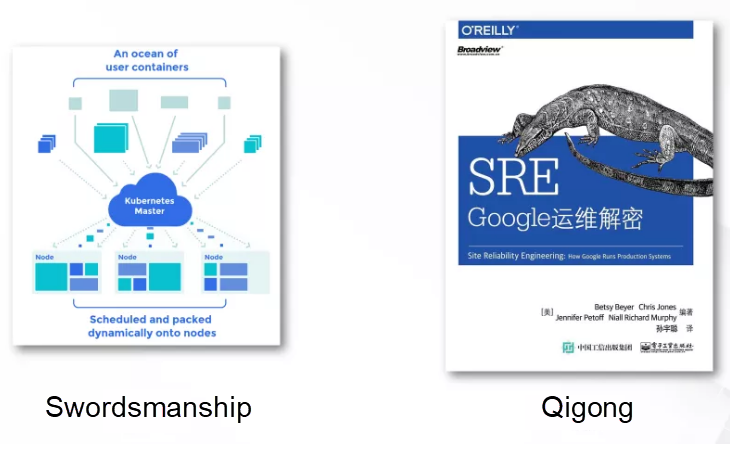

In the preceding figure, a Kubernetes cluster is shown on the left and a famous book, Site Reliability Engineering: How Google Runs Production Systems, is shown on the right. Many of you may have read this book, and many companies are currently practicing the methods it describes, such as fault management and O&M scheduling.

The relationship between Kubernetes and this book is something like the relationship between swordsmanship and the Chinese martial art Qigong. I do not know how many of you have read The Smiling, Proud Wanderer, but it is a book about a legendary swordsman. In the book, there is a school of warriors that is divided into two sections: one practices the martial art Qigong, and the other practices swordsmanship. Qigong is a philosophical approach that seeks to coordinate the body, breath, and mind, whereas swordsmanship emphasizes skills with the sword. The school is split this way because two disciples learned from the same secret book, but each disciple learned only one part of it.

Kubernetes is derived from Google's cluster automation management and scheduling system, Borg. This system is also the subject of the book, Site Reliability Engineering: How Google Runs Production Systems. The Borg system and the various O&M methods described in the book can be seen as two sides of the same thing. If a company only learns the O&M methods, such as establishing an SRE post, but does not understand the system managed by these methods, it's like only learning one part of the whole system.

Borg is an internal system of Google that is not open to the public. In contrast, Kubernetes is open source and inherits some of Borg's key methods for automated cluster management. Therefore, if you have read this book and think it is awesome or want to practice the methods it describes, you must first have a deep understanding of Kubernetes.

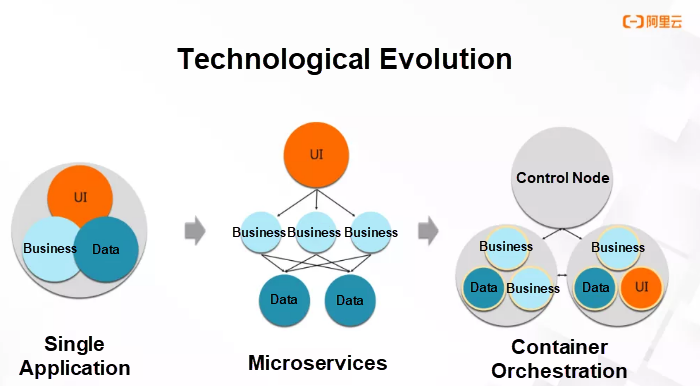

In the early days, when we built a website backend, we could combine all the modules into one executable file. As shown in the preceding figure, we would have three modules: UI, data, and business. These modules would be compiled into a single executable file and run on one server.

However, if our business volume grew significantly, we could not keep expanding the capacity of the application simply by upgrading the server configuration. For this reason, we would have to break up the application into microservices.

Microservices split a single application into smaller, loosely coupled applications. Each of these small applications is responsible for one business function. Each application has a dedicated server, and the applications call each other over network connections.

The major advantage of this architecture is that we can scale out the small applications by increasing the number of instances. This works around the limit on how far an individual server can be upgraded.

Microservices introduce a new problem: a single instance occupies a single server. This deployment pattern wastes a lot of resources. The solution is to deploy multiple instances together on the same underlying servers.
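The saving from deploying instances together can be shown with a small Python sketch. This is only an illustration under assumptions: the instance sizes and the 4-core server size are made up, and the first-fit placement used here is a simple textbook strategy, not the way Kubernetes actually places workloads.

```python
def servers_needed_dedicated(instance_cpus):
    """One instance per server: the number of servers equals the number of instances."""
    return len(instance_cpus)


def servers_needed_shared(instance_cpus, server_cpu):
    """First-fit placement: put each instance on the first server that has room."""
    free = []  # remaining CPU cores on each server already in use
    for need in instance_cpus:
        if need > server_cpu:
            raise ValueError("instance is larger than a whole server")
        for i, remaining in enumerate(free):
            if need <= remaining:
                free[i] = remaining - need
                break
        else:
            # No existing server has room, so bring a new one into use.
            free.append(server_cpu - need)
    return len(free)


# Hypothetical CPU needs (in cores) of five microservice instances.
instances = [1, 2, 1, 3, 1]
dedicated = servers_needed_dedicated(instances)
shared = servers_needed_shared(instances, server_cpu=4)
print(dedicated, shared)
```

With these made-up numbers, the dedicated pattern uses five servers while the shared pattern fits the same instances on two.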

However, such hybrid deployment introduces two more problems. First, we will encounter problems with the compatibility of dependency libraries. The versions of the library files on which these applications depend may be completely different, resulting in errors when they are installed in the same operating system. The other problem involves application scheduling and cluster resource management.

For example, when a new application is created, we need to consider the target server to host this application, and whether the resources of the server will be sufficient after the application is scheduled to it.
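The two questions above (which server, and whether it has enough resources) can be sketched as a filter step followed by a choice step. The Python below is a simplified illustration, not the real Kubernetes scheduler: the `Server` class, the `pick_server` function, and the "most CPU left over" rule are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Free resources on one server (hypothetical model)."""
    name: str
    free_cpu: int
    free_mem_gib: int


def pick_server(servers, cpu, mem_gib):
    """Filter out servers that cannot fit the request, then choose the one
    that would have the most CPU (then memory) left after placement."""
    candidates = [
        s for s in servers if s.free_cpu >= cpu and s.free_mem_gib >= mem_gib
    ]
    if not candidates:
        return None  # no server has sufficient resources
    best = max(candidates, key=lambda s: (s.free_cpu - cpu, s.free_mem_gib - mem_gib))
    # Reserve the resources so the next decision sees the updated state.
    best.free_cpu -= cpu
    best.free_mem_gib -= mem_gib
    return best.name


servers = [Server("s1", 1, 8), Server("s2", 4, 4), Server("s3", 3, 16)]
first = pick_server(servers, cpu=2, mem_gib=4)
second = pick_server(servers, cpu=2, mem_gib=4)
third = pick_server(servers, cpu=2, mem_gib=4)
print(first, second, third)
```

Each placement changes what is left for the next one, which is why this bookkeeping needs to be done by one system for the whole cluster rather than by hand.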

The compatibility of dependency libraries can be solved by containerizing applications. This means that each application comes with its own dependency libraries and only shares the kernel with other applications on the same server. Kubernetes is designed in part to address the scheduling and resource management problems.
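The dependency problem and the container answer can be illustrated with a toy Python check. Everything here is hypothetical: the application names, the library names and versions, and the `shared_host_conflicts` function exist only for this sketch and do not correspond to any real tool.

```python
def shared_host_conflicts(apps):
    """Return the libraries that a group of applications sharing one set of
    library files would need in more than one version."""
    versions = {}
    for requirements in apps.values():
        for lib, version in requirements.items():
            versions.setdefault(lib, set()).add(version)
    return {lib: sorted(v) for lib, v in versions.items() if len(v) > 1}


# Hypothetical applications and the library versions they were built against.
apps = {
    "orders": {"libssl": "1.1", "libc": "2.31"},
    "billing": {"libssl": "3.0", "libc": "2.31"},
}

# Installed side by side in one operating system, the two apps collide.
conflicts = shared_host_conflicts(apps)

# Packaged one per container, each app only ever sees its own libraries.
per_container = {
    name: shared_host_conflicts({name: reqs}) for name, reqs in apps.items()
}
print(conflicts, per_container)
```

When each application brings its own libraries, the check is run per application and can never find a clash, while the kernel remains the only thing the applications share.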

Incidentally, when too many applications are deployed in a cluster and their relationships are complex, it becomes very difficult to troubleshoot problems such as slow responses to requests. For this reason, service governance technologies such as service mesh will become the trend of the future.

This article also provides suggestions on the best way to learn Kubernetes, and shares experiences in troubleshooting Kubernetes cluster problems.

It's no secret that Twitter has decided to move its infrastructure from Mesos to Kubernetes. But what reasons and motivations lie behind this major change? This blog outlines how Twitter's switch from Mesos to Kubernetes once again proves that Kubernetes is the industry-wide standard when it comes to all things containers.

This article will take a look at some of the problems and challenges that Alibaba and its ecosystem partner Ant Financial had to overcome for Kubernetes to function properly at mass scale, and will cover the solutions proposed to the various problems the Alibaba engineers encountered. Some of these solutions include improvements to the underlying architecture of the Kubernetes deployment, such as enhancements to the performance and stability of etcd, the kube-apiserver, and kube-controller. Take a look at how Alibaba Ensured the Performance of System Components in a 10,000-node Kubernetes Cluster.

Container Service for Kubernetes (ACK) is a fully managed service. ACK is integrated with services such as virtualization, storage, network, and security, providing users with a high-performance and scalable Kubernetes environment for containerized applications. Alibaba Cloud is a Kubernetes Certified Service Provider (KCSP), and ACK is certified by the Certified Kubernetes Conformance Program, which ensures a consistent Kubernetes experience and workload portability.

This course aims to help IT companies that want to containerize their business applications, as well as cloud computing engineers and enthusiasts who want to learn container technology and Kubernetes. By taking this course, you can fully understand what Kubernetes is, why we need Kubernetes, the basic architecture of Kubernetes, some core concepts and terms of Kubernetes, and how to build a Kubernetes cluster on the Alibaba Cloud platform, so as to provide a reference for the evaluation, design, and implementation of application containerization.

