A comprehensive technical exploration of Apache Flink's evolution from embedded state management to cloud-native disaggregated architecture and future incremental computing paradigms

Author: Yuan Mei, Director of Engineering at Alibaba Cloud, Apache Flink PMC

Event: Flink Forward Asia 2025 - Real-Time Analytics Track

Apache Flink has established itself as the standard for real-time processing, delivering high performance across distributed large-scale streaming environments. But what truly powers Flink's capabilities? The answer lies in its sophisticated state management system—the memory that enables streaming applications to remember past events and influence future processing.

In this comprehensive exploration, we'll journey through the evolution of Flink's state management, from its foundational core primitives to the revolutionary cloud-native architecture of Flink 2.0, and finally to the promising horizon of next-generation incremental computation.

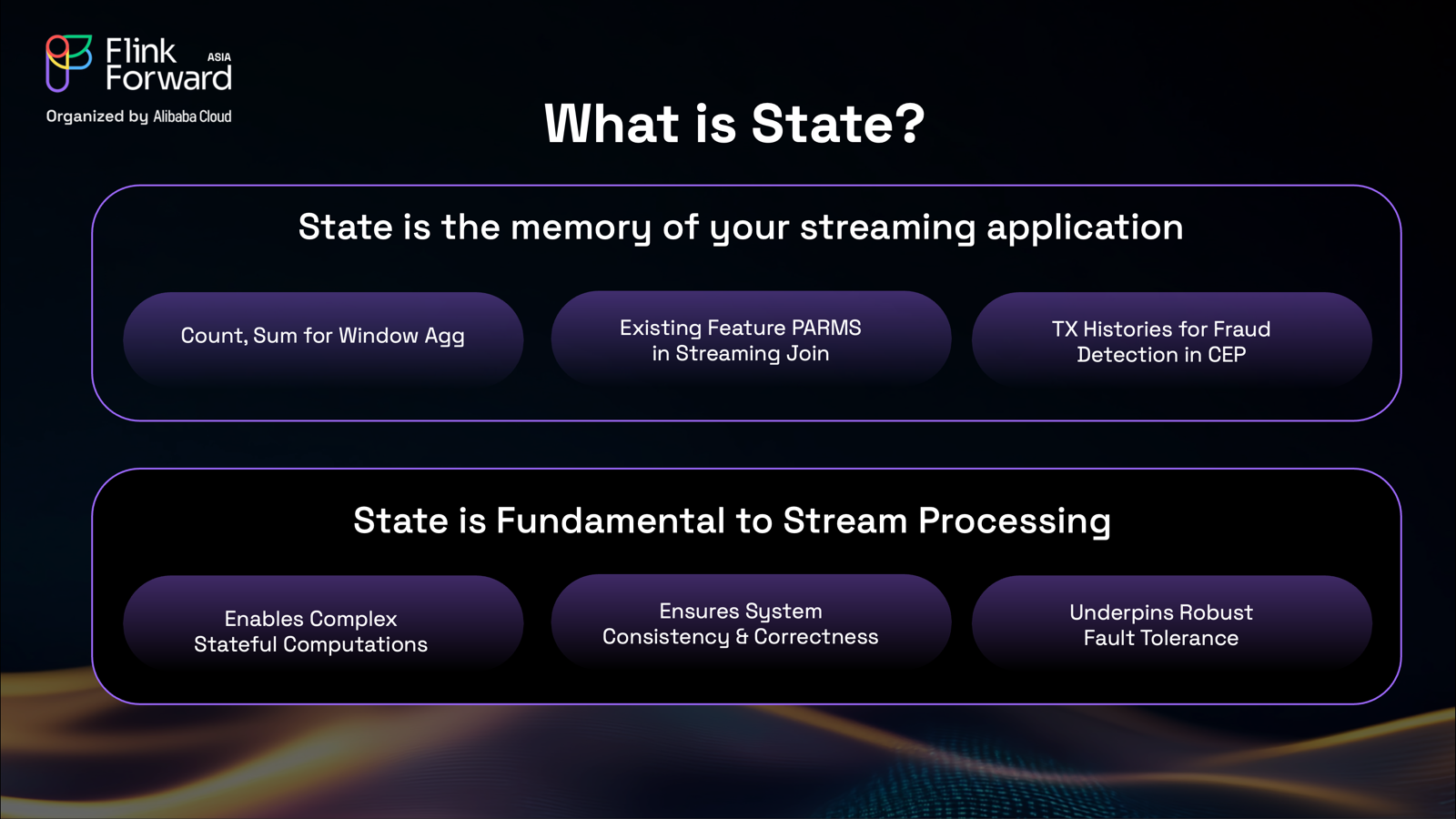

State represents the memory of your streaming applications. It's the fundamental mechanism that enables applications to remember past events and use that historical context to influence future processing decisions. Without state management, streaming systems would be limited to simple ETL operations—unable to perform the complex correlations and analytics that modern real-time applications demand.

State takes various forms across streaming applications. It can represent accumulated values for window aggregations such as sums, counts, and averages. State also makes it possible to correlate streams with historical data (joins), maintain transaction histories for fraud detection in Complex Event Processing (CEP), and preserve machine learning model parameters for real-time inference.
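
As a minimal illustration of state as memory, the Python sketch below keeps a running count and sum per key, the kind of accumulated value an aggregation would hold. The class and its names are hypothetical and do not use Flink's API.

```python
from collections import defaultdict


class RunningAverage:
    """Toy keyed state: remembers a count and a sum for every key."""

    def __init__(self):
        # state[key] = [count, total]; this dict plays the role of keyed state
        self.state = defaultdict(lambda: [0, 0.0])

    def process(self, key, value):
        acc = self.state[key]
        acc[0] += 1
        acc[1] += value
        # The output depends on every earlier event seen for this key
        return acc[1] / acc[0]


op = RunningAverage()
results = [op.process(k, v) for k, v in [("a", 10), ("b", 4), ("a", 20)]]
print(results)  # [10.0, 4.0, 15.0]
```

Without the remembered count and sum, the third event could only report its own value, not the average for key `a`.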

The introduction of robust state management in Flink marked a pivotal transformation in stream processing capabilities. Before this innovation, developers had to rely on external databases to correlate historical data, which created deployment complexity, maintenance overhead, and data consistency challenges.

Flink's self-maintained state management mechanism changed everything by enabling the system to memorize information internally, eliminating dependencies on external storage systems while ensuring data correctness and consistency.

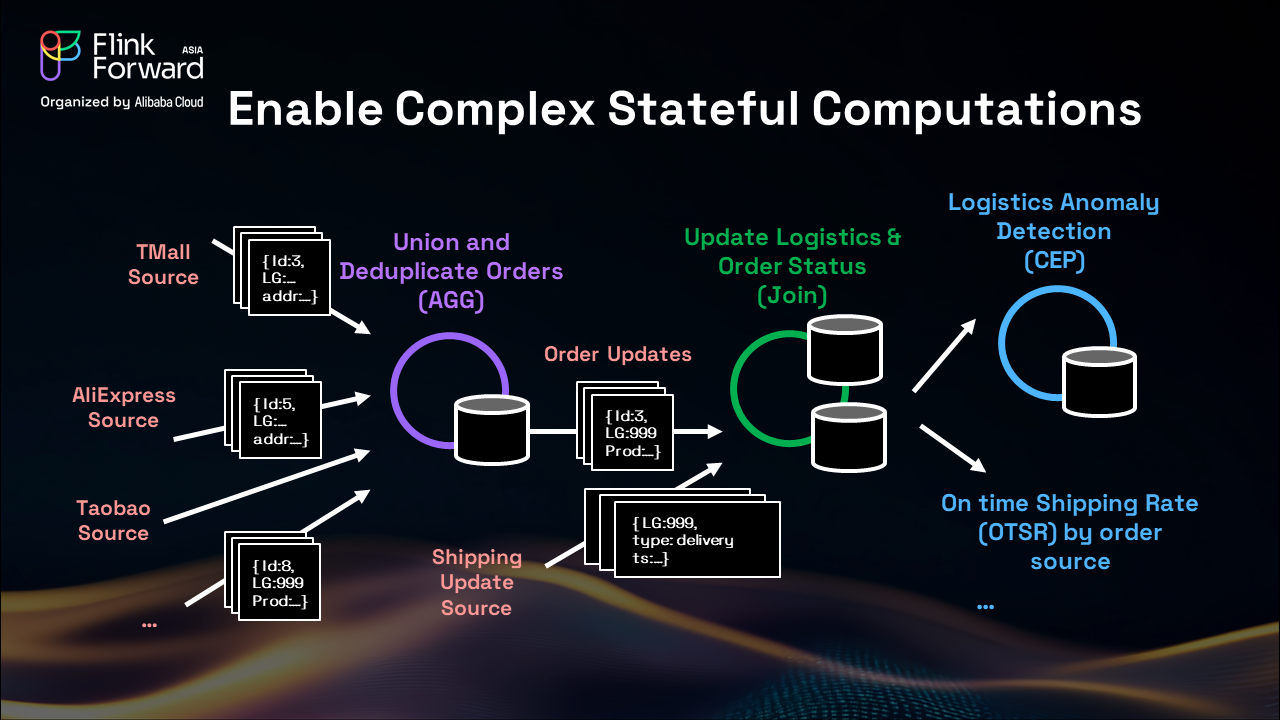

To understand the true power of Flink's state management, let's examine a complex real-world implementation: Alibaba's real-time logistics tracking system for Cainiao Logistics.

This system processes package order events from multiple e-commerce platforms (TMall, Taobao, AliExpress) through a sophisticated pipeline.

This use case demonstrates how Flink's state management enables complex stateful computations that would be impossible with traditional stateless processing.

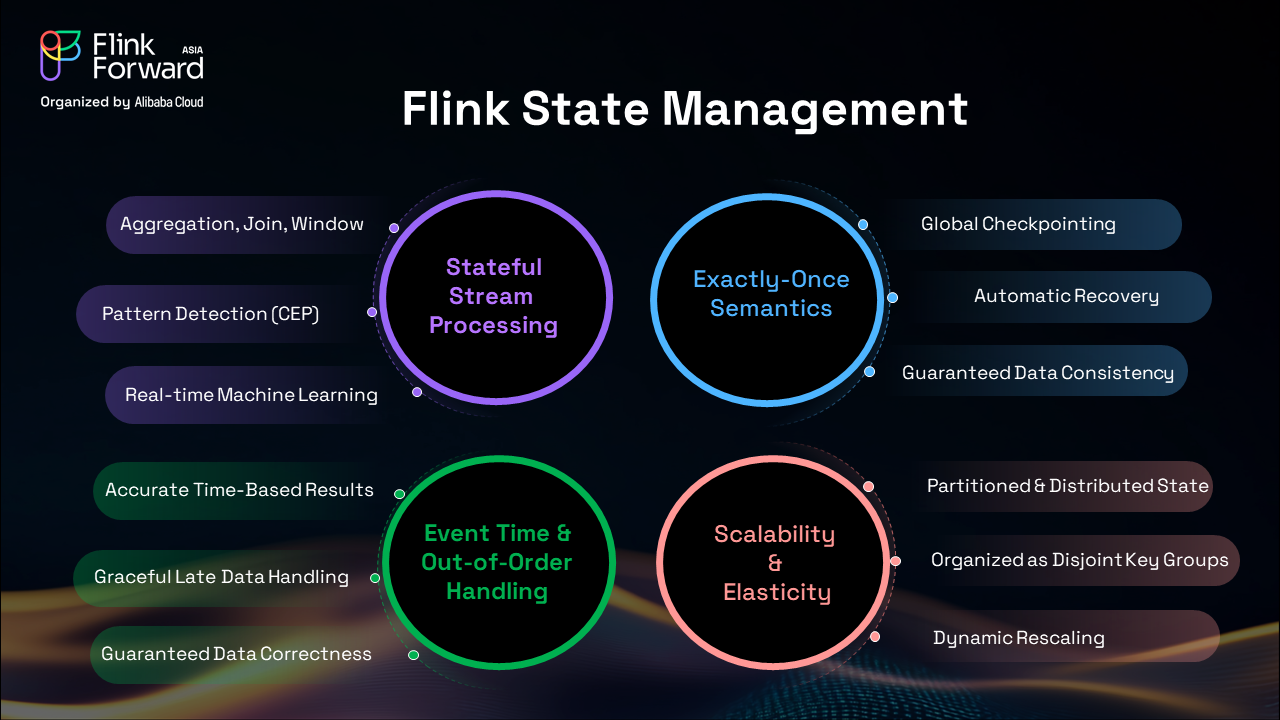

Flink's state management system delivers four critical capabilities that set it apart from other streaming systems.

Flink guarantees exactly-once processing through a global checkpointing mechanism that creates consistent snapshots across distributed state. When failures occur, the system performs atomic recovery to ensure data consistency, maintaining guaranteed data integrity through consistent state snapshots across the entire distributed topology.
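
The idea of a consistent snapshot can be sketched in a few lines of Python. This is a single-process toy, assuming a replayable source; the `CountingJob` class is hypothetical, and Flink's actual mechanism coordinates snapshots across distributed operators. The point it shows is that the source position and the operator state are captured together, so replay after a failure affects state exactly once.

```python
import copy

events = ["a", "b", "a", "c", "a"]   # a replayable source


class CountingJob:
    def __init__(self):
        self.offset = 0          # source position
        self.counts = {}         # operator state

    def step(self):
        key = events[self.offset]
        self.counts[key] = self.counts.get(key, 0) + 1
        self.offset += 1

    def snapshot(self):
        # Source offset and operator state are captured together
        return {"offset": self.offset, "counts": copy.deepcopy(self.counts)}

    def restore(self, snap):
        self.offset = snap["offset"]
        self.counts = copy.deepcopy(snap["counts"])


job = CountingJob()
job.step(); job.step()
checkpoint = job.snapshot()      # consistent snapshot after 2 events
job.step(); job.step()           # progress that is lost in the "failure"

job = CountingJob()              # simulated crash: start from scratch
job.restore(checkpoint)
while job.offset < len(events):
    job.step()                   # replay from the checkpointed offset
print(job.counts)  # {'a': 3, 'b': 1, 'c': 1}
```

The final counts match a failure-free run even though two events were processed twice in wall-clock terms.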

Real-world data streams rarely arrive in perfect order, yet Flink delivers accurate time-based results despite significant data arrival variations. The system gracefully handles late data while maintaining processing correctness through watermark coordination that ensures temporal consistency across distributed operators.
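
A simplified sketch of watermark-driven windows, assuming tumbling windows of 10 time units and a watermark that trails the highest timestamp seen by a fixed bound. The constants and the `run` function are illustrative choices, not Flink's API, and late records are simply dropped here.

```python
from collections import defaultdict

WINDOW = 10          # tumbling window size (time units)
MAX_DELAY = 3        # assumed bound on out-of-orderness


def run(events):
    """events: iterable of (event_time, value) in arrival order."""
    windows = defaultdict(int)   # window start -> running sum
    watermark = float("-inf")
    fired, dropped = [], []
    for ts, value in events:
        start = ts - ts % WINDOW
        if start + WINDOW <= watermark:
            dropped.append((ts, value))      # window already fired: late data
        else:
            windows[start] += value
        # The watermark trails the highest timestamp seen by MAX_DELAY
        watermark = max(watermark, ts - MAX_DELAY)
        for w in sorted(w for w in windows if w + WINDOW <= watermark):
            fired.append((w, windows.pop(w)))
    return fired, dropped


fired, dropped = run([(1, 1), (7, 1), (12, 1), (9, 1), (14, 1), (5, 1), (25, 1)])
print(fired)    # [(0, 3), (10, 2)]
print(dropped)  # [(5, 1)]
```

The record with timestamp 9 arrives after timestamp 12 yet is still counted in window 0, because the watermark has not passed the window end. The record with timestamp 5 arrives after the window fired and is treated as late.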

Flink's state architecture partitions and distributes state across compute nodes using disjoint key groups that enable independent scaling decisions by repartitioning these key groups. This design supports dynamic rescaling and allows applications to adapt to changing workloads seamlessly.
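
The key-group idea can be sketched as follows. This is an illustration under assumptions: `zlib.crc32` stands in for the hash function Flink actually applies, and the function names are hypothetical. What matters is that a key always maps to the same key group, and rescaling only reassigns whole, contiguous ranges of key groups to subtasks.

```python
import zlib

MAX_PARALLELISM = 128   # number of key groups, fixed for the job's lifetime


def key_group(key: str) -> int:
    # Stand-in hash; Flink applies its own hash function here
    return zlib.crc32(key.encode()) % MAX_PARALLELISM


def owner(group: int, parallelism: int) -> int:
    # Contiguous ranges of key groups map to operator subtasks
    return group * parallelism // MAX_PARALLELISM


def groups_of(subtask: int, parallelism: int) -> range:
    start = -(-subtask * MAX_PARALLELISM // parallelism)         # ceil division
    end = ((subtask + 1) * MAX_PARALLELISM - 1) // parallelism   # inclusive
    return range(start, end + 1)


for p in (2, 4):
    print(p, [(r.start, r.stop - 1) for r in (groups_of(i, p) for i in range(p))])
# 2 [(0, 63), (64, 127)]
# 4 [(0, 31), (32, 63), (64, 95), (96, 127)]
```

Scaling from 2 to 4 subtasks splits each range in half; no individual key needs to be rehashed, only key-group ranges change hands.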

The system provides low-latency state access essential for real-time requirements while maintaining robust fault tolerance through consistent snapshots. This combination ensures performance under varying load conditions, making Flink suitable for demanding production environments.

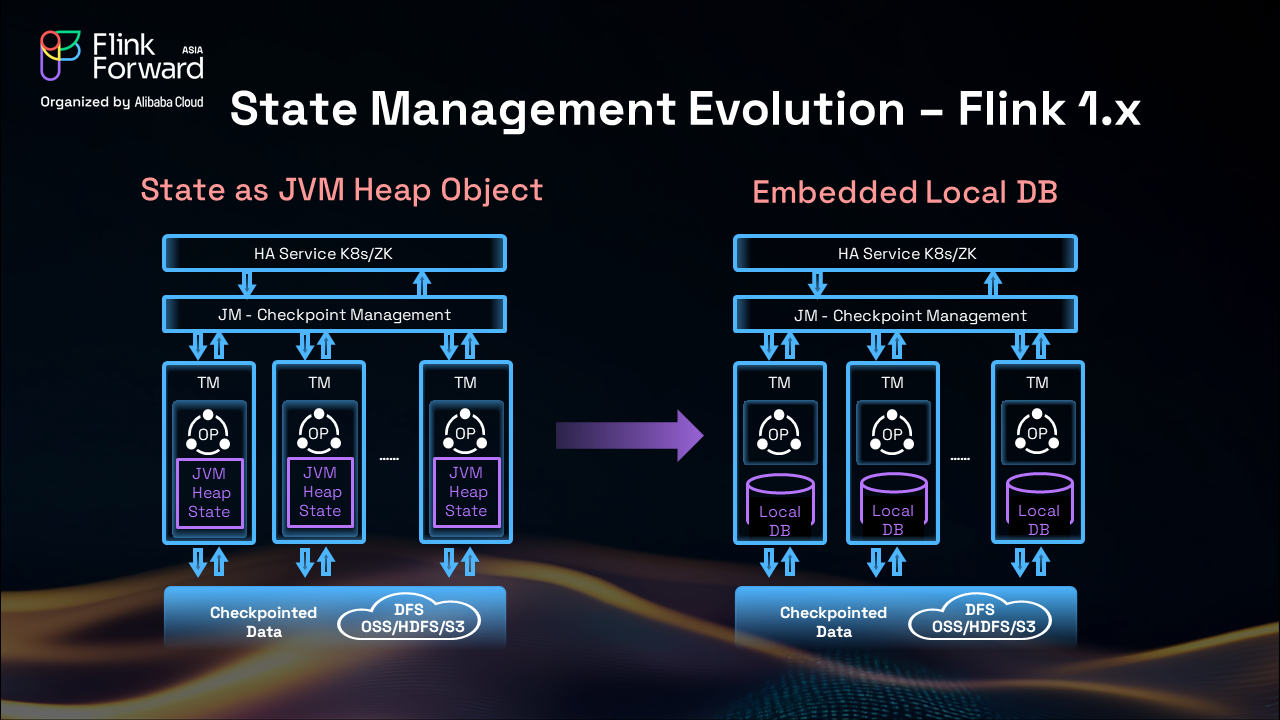

JVM Heap State: the initial approach stored state as objects on the JVM heap within TaskManager memory. While effective for smaller datasets, keeping all state in memory became costly and unstable once state grew beyond what memory could hold.

Embedded Local Database: to address growing state sizes, an embedded state backend that uses local disk was introduced. State was colocated with compute, using local SSDs for fast access while maintaining periodic DFS snapshots for consistency. For failure recovery and rescaling, the checkpointed files are loaded back to rebuild the state on each node.

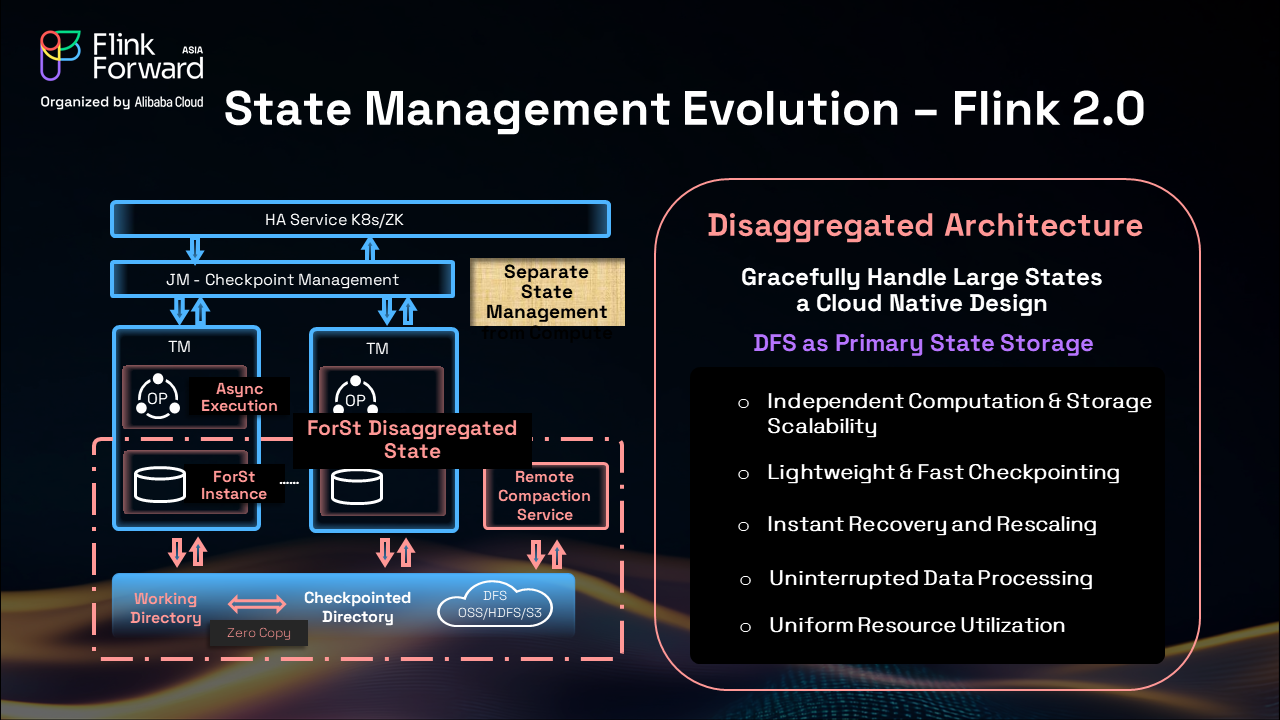

Apache Flink 2.0 introduces a revolutionary disaggregated architecture that fundamentally separates compute and state management:

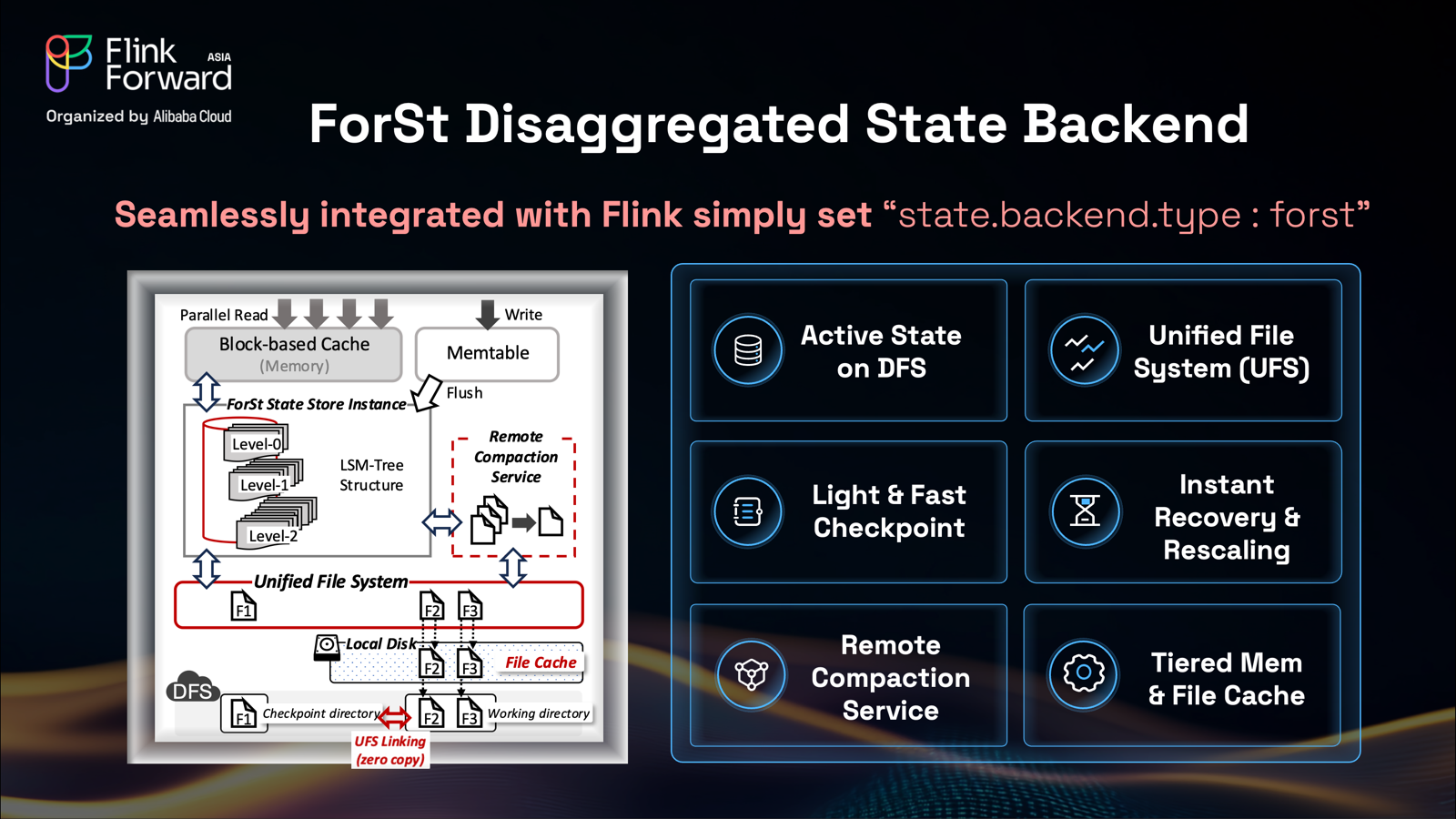

The ForSt Disaggregated State Backend represents a fundamental shift in how Flink manages state.

This architecture enables truly independent scalability where compute and storage dimensions can be optimized separately. Processing capacity can be adjusted independently of state size, while storage can be expanded without requiring changes to compute resources. This separation leads to significant resource optimization and efficient utilization across both dimensions.

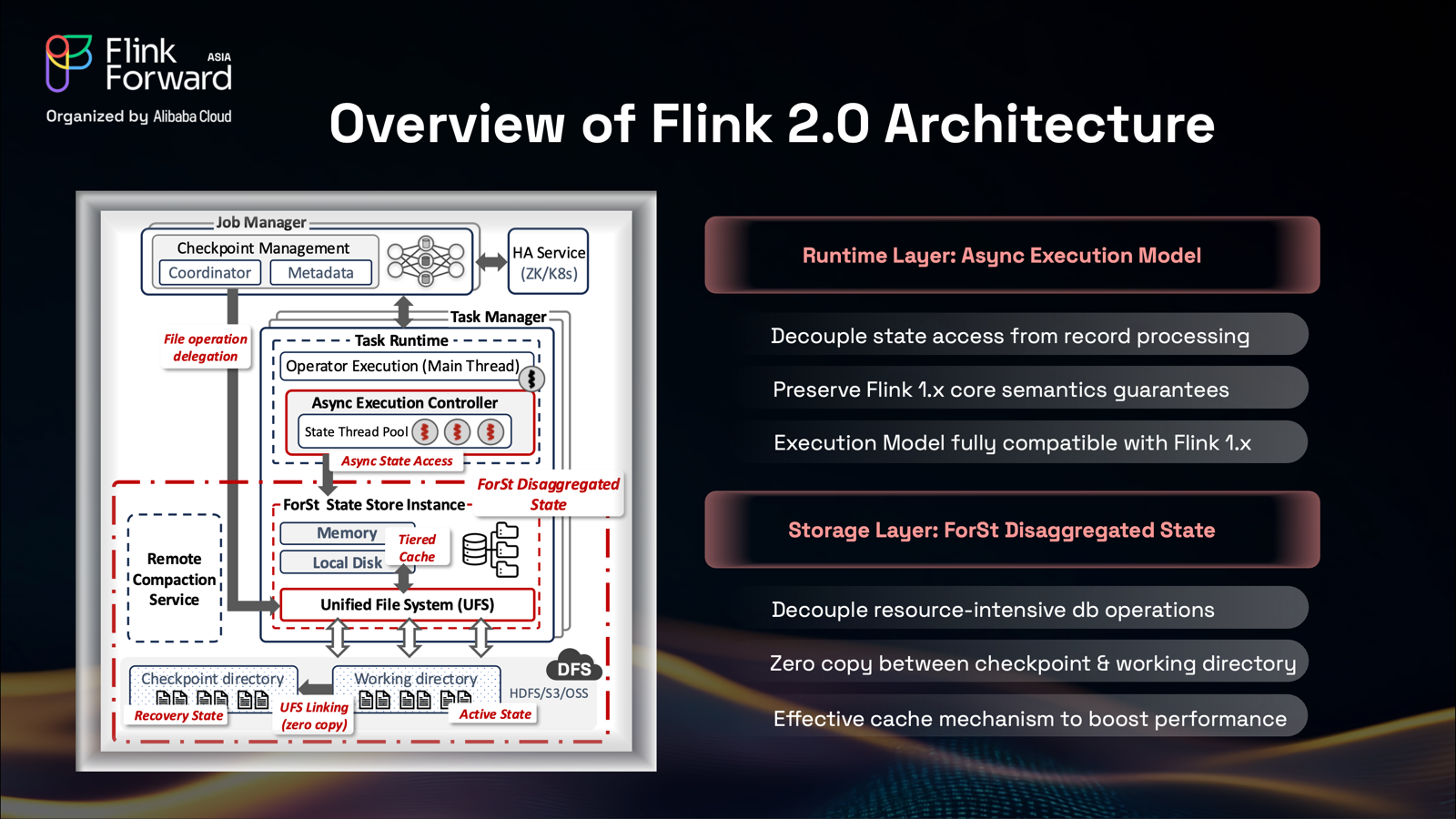

The innovation spans two critical layers:

The runtime layer introduces an asynchronous execution model that decouples state access from record processing, preventing state operations from blocking the main processing pipeline. This innovation preserves full semantic compatibility with Flink 1.x guarantees while providing a seamless migration path for existing applications.

Remote DFS access is approximately 100 times slower than local reads. The asynchronous execution model addresses this by redefining the record processing lifecycle:

The system separates processing into three distinct phases: non-state transformations that are CPU-intensive and executed in task threads, state access operations that are I/O-intensive and handled by separate thread pools, and post-state callbacks that return CPU-intensive work to task threads.

The Asynchronous Execution Controller (AEC) is introduced in Flink 2.0 to orchestrate this complex workflow while preserving the fundamental guarantees that make stream processing reliable. Per-key FIFO ordering provides the foundation for stream processing correctness, exactly-once processing maintains data consistency guarantees, and event time semantics ensure temporal processing accuracy.
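
The ordering guarantee can be illustrated with a small `asyncio` sketch. It is not the AEC implementation; the function names and the simulated delays are assumptions. Records for different keys overlap their slow state reads, while a record for a key waits for the previous record of the same key to finish.

```python
import asyncio

state = {}      # stands in for remote state
log = []        # order in which post-state callbacks ran


async def remote_get(key, delay):
    await asyncio.sleep(delay)          # simulated slow I/O
    return state.get(key, 0)


async def process(key, value, delay, prev):
    if prev is not None:
        await prev                      # same-key predecessor must finish first
    current = await remote_get(key, delay)
    state[key] = current + value        # post-state callback
    log.append((key, value))


async def main(records):
    in_flight = {}                      # key -> latest task for that key
    for key, value, delay in records:
        task = asyncio.create_task(process(key, value, delay, in_flight.get(key)))
        in_flight[key] = task
    await asyncio.gather(*in_flight.values())


asyncio.run(main([("a", 1, 0.05), ("b", 10, 0.01), ("a", 2, 0.0)]))
print(state)  # {'b': 10, 'a': 3}
print(log)    # [('b', 10), ('a', 1), ('a', 2)]
```

Key `b` completes first because its read is faster, but the two records for key `a` are applied in arrival order even though the second one has no I/O delay of its own.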

The storage layer implements zero-copy optimization that eliminates expensive data transfers, while resource separation ensures that intensive state database operations remain isolated from core processing workloads. A sophisticated tiered memory and file cache system optimizes performance across different access patterns.

The ForSt backend maintains active state directly on distributed file systems and implements zero-copy optimization, physically sharing the active working files and the checkpointed files through a unified file system view. This makes lightweight checkpointing possible by eliminating expensive data transfer and copy operations. Because active state is accessed directly on DFS, the traditional local download step disappears and recovery becomes near-instant. Remote compaction separates heavy database operations from the critical processing path, and tiered caching optimizes both memory and file access patterns.
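
A toy model of the file-sharing idea, assuming immutable state files on a shared store. The `SharedFileStore` class and the helper functions are hypothetical and only illustrate why a checkpoint can be metadata-only when working files and checkpoint files are the same physical files.

```python
class SharedFileStore:
    """Toy DFS: immutable files shared by the working state and checkpoints."""

    def __init__(self):
        self.files = {}       # file name -> contents
        self.bytes_copied = 0

    def write(self, name, data):
        self.files[name] = data
        self.bytes_copied += len(data)


dfs = SharedFileStore()
working_set = []              # files that make up the live state


def flush(name, data):
    dfs.write(name, data)     # the only time the data is physically written
    working_set.append(name)


def checkpoint():
    # Metadata-only: record which files are live, copy no data
    return tuple(working_set)


def recover(manifest):
    # Reopen the same files in place; nothing is downloaded
    return {name: dfs.files[name] for name in manifest}


flush("sst-1", b"k1=v1")
flush("sst-2", b"k2=v2")
before = dfs.bytes_copied
cp = checkpoint()
print(dfs.bytes_copied - before)   # 0
flush("sst-3", b"k3=v3")           # later writes do not affect the checkpoint
print(sorted(recover(cp)))         # ['sst-1', 'sst-2']
```

A real system also has to keep checkpointed files alive until no checkpoint references them; that bookkeeping is omitted here.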

Despite this sophisticated architecture, integration remains simple through a single configuration parameter (state.backend.type: forst).

The results demonstrate remarkable cost-efficiency improvements in a modern, containerized Kubernetes deployment. The test setup is based on the Alibaba Cloud public pricing model, with 1 CU = 1 core, 4 GB of memory, and a 20 GB ESSD PL1 disk. With a total state size of 290 GB, Flink 2.0 cuts total cost by 50%. Operational improvements are equally impressive: recovery, rescaling, and scale-out now complete in under 10 seconds, a 40x speedup over Flink 1.x. Checkpointing is lightweight and consistently finishes within 3-4 seconds regardless of state size. Finally, Flink 2.0 shows uniform, smooth resource utilization.

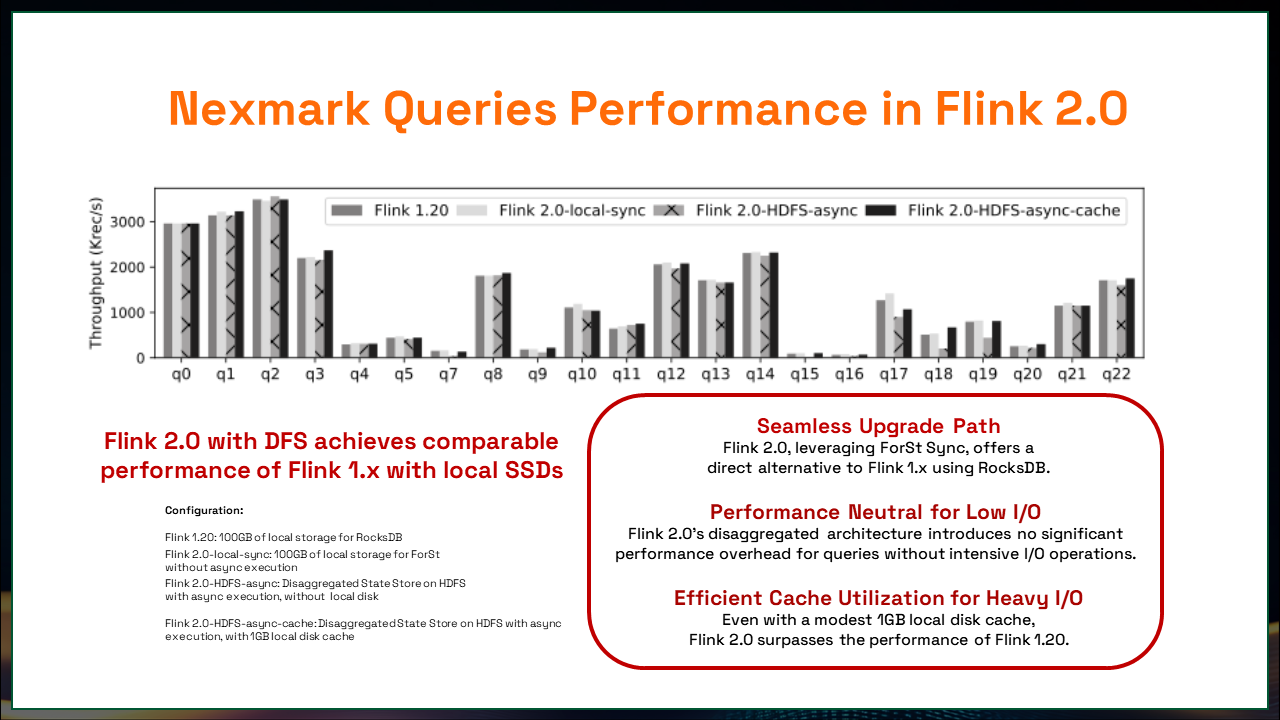

Given these benefits, how does Flink 2.0 perform?

Standardized NEXMark streaming benchmark results show that Flink 2.0 with active state on DFS delivers performance comparable to Flink 1.x on local SSDs:

Flink 2.0's disaggregated architecture introduces no significant overhead for queries without intensive I/O, so it is performance-neutral for low-I/O queries. Stateless operators bypass the async framework entirely and incur no overhead. In heavy-I/O scenarios, Flink 2.0 can outperform Flink 1.x even with a modest 1 GB cache. As a result, users should not see obvious performance differences after migrating their applications to Flink 2.0. The upgrade path from Flink 1.x also remains seamless: ForSt DB with synchronous execution offers a direct alternative to Flink 1.x with RocksDB.

With large state challenges solved, the next frontier focuses on making real-time processing affordable for everyone through generic incremental computation.

Incremental compute is not new; the idea has been around for roughly ten years, and it has been raised again and again in recent years. Its advantages are clear: near-real-time results, reduced cost, and unified stream and batch processing. The real difficulty has been making incremental compute generic. Solving problems only for specific simple scenarios does not provide a systematic solution.

In this talk, let's step back and look at what incremental compute is and what a systematic solution should look like.

Batch/Full Compute:

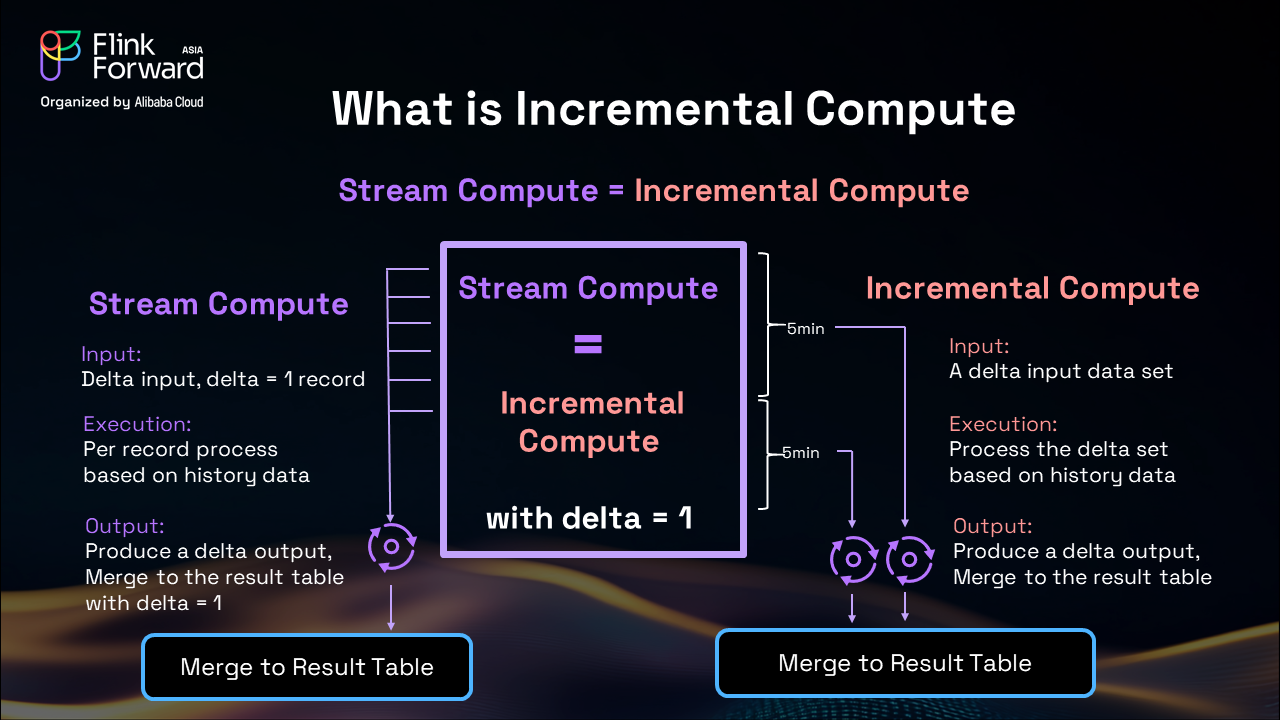

Let's start with what incremental compute is by comparing it with full (batch) compute.

Full Compute: the input is a complete data set, which is processed all at once to produce a full output that overwrites the result table.

Incremental Compute: processes only a delta input data set, for example the last 5 minutes of data. This delta is executed together with memorized historical data and produces a delta output that must be merged into the existing result table.

Incremental compute differs from batch compute at every stage: input, execution, and output.

Stream Compute: processes a delta input, typically one record at a time, based on history, and merges a delta output to the result table.

Key Insight: Stream compute equals incremental compute with delta = 1, revealing the natural foundation for generic incremental processing.
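
The relationship between the three models can be checked with a small Python sketch. It uses a per-key sum as the query; the function names and sample data are illustrative only.

```python
def full_compute(dataset):
    """Reprocess everything and overwrite the result."""
    result = {}
    for key, amount in dataset:
        result[key] = result.get(key, 0) + amount
    return result


def incremental_compute(result, delta):
    """Fold only the new records into the remembered result."""
    for key, amount in delta:
        result[key] = result.get(key, 0) + amount
    return result


data = [("a", 1), ("b", 2), ("a", 3), ("c", 4), ("b", 5), ("a", 6)]


def run_incremental(batch_size):
    result = {}   # the memorized history
    for i in range(0, len(data), batch_size):
        incremental_compute(result, data[i:i + batch_size])
    return result


print(full_compute(data))                         # {'a': 10, 'b': 7, 'c': 4}
print(run_incremental(3) == full_compute(data))   # True
print(run_incremental(1) == full_compute(data))   # True: delta = 1 is streaming
```

The same `incremental_compute` function serves both the micro-batch case and the record-at-a-time case; only the delta size changes.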

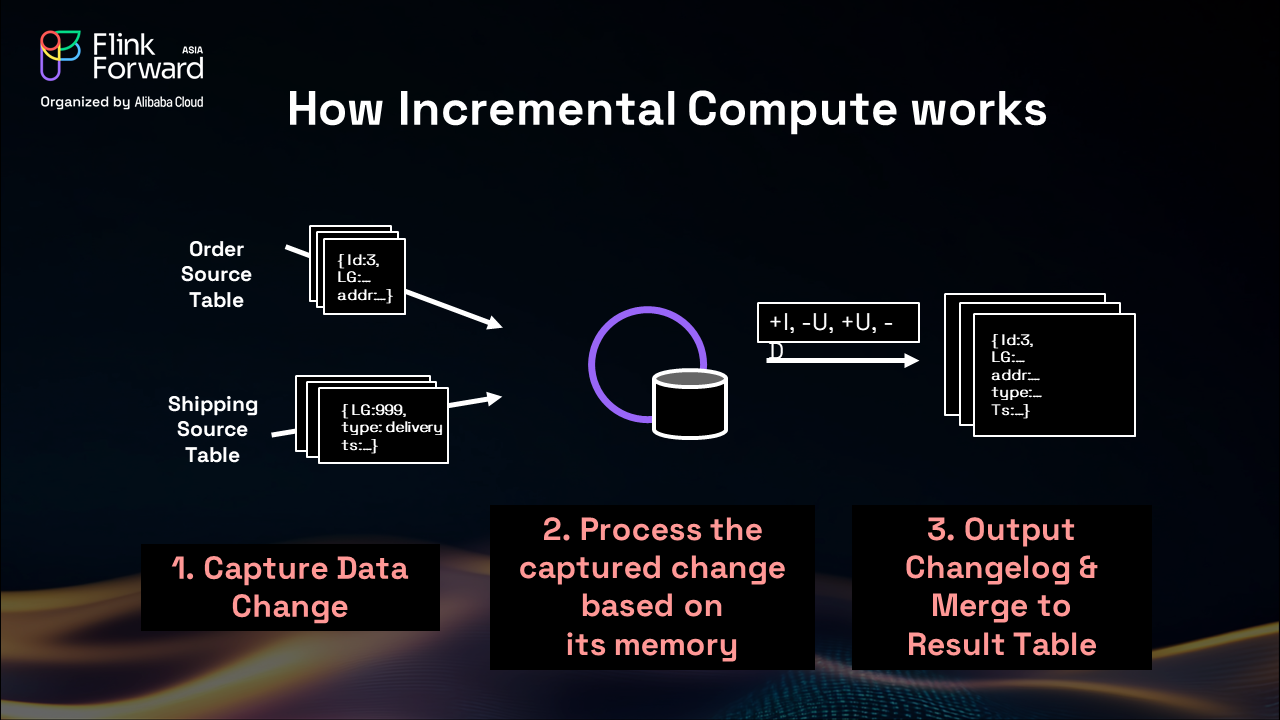

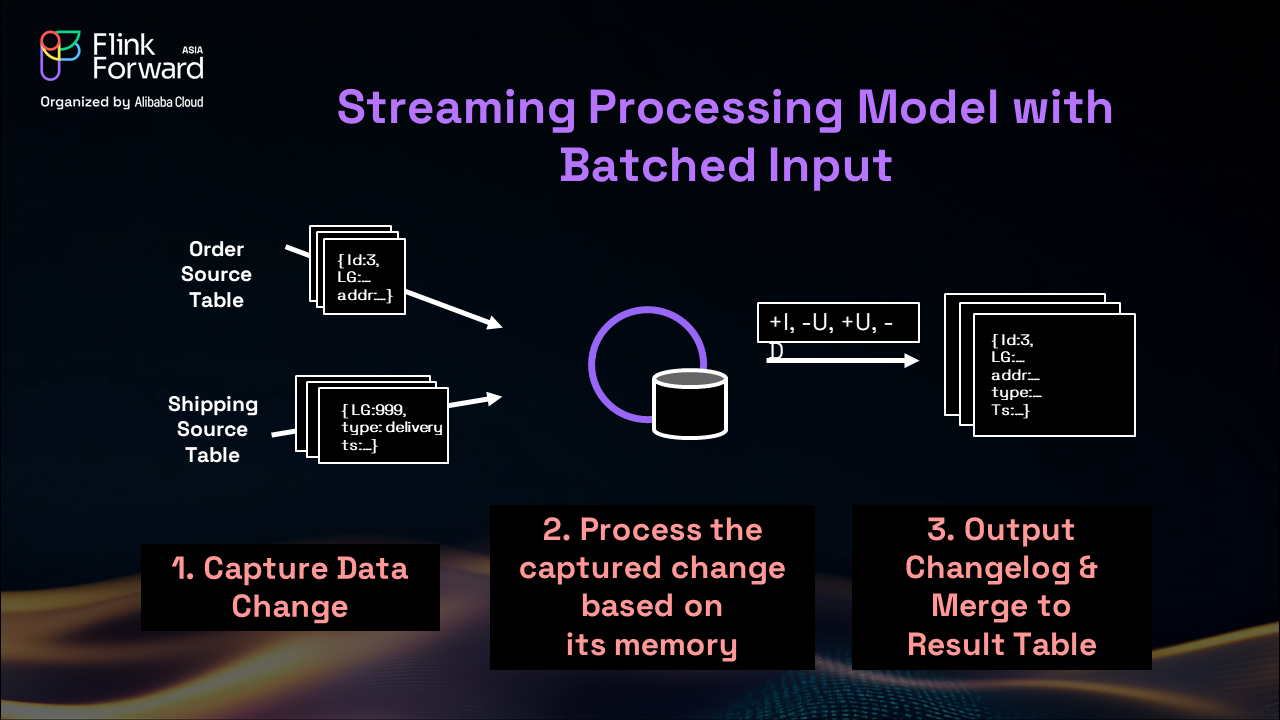

Incremental computation requires three core capabilities that distinguish it from traditional batch processing. First, the system must capture data changes by identifying and collecting delta inputs that represent modifications since the last processing cycle. Second, it must process these changes with historical data, correlating new information with historical state to maintain processing accuracy. Finally, it must output changelog information that generates merge-compatible results using standard change data capture semantics (+I for inserts, -U for update deletions, +U for update insertions, -D for deletions).
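
A sketch of how changelog rows could be produced and merged for a per-key count, using the +I, -U, and +U markers described above. The two functions are hypothetical helpers, and the merge assumes the result table is keyed by the grouping key.

```python
def count_changelog(counts, delta_keys):
    """Update per-key counts and emit (+I, -U, +U) changelog rows."""
    out = []
    for key in delta_keys:
        old = counts.get(key)
        new = (old or 0) + 1
        if old is None:
            out.append(("+I", key, new))
        else:
            out.append(("-U", key, old))   # retract the previous row
            out.append(("+U", key, new))   # emit the updated row
        counts[key] = new
    return out


def merge(table, changelog):
    """Apply a changelog to a result table keyed by `key`."""
    for op, key, value in changelog:
        if op in ("+I", "+U"):
            table[key] = value
        elif op in ("-U", "-D"):
            table.pop(key, None)
    return table


counts, table = {}, {}
log1 = count_changelog(counts, ["a", "b"])
log2 = count_changelog(counts, ["a"])
merge(table, log1)
merge(table, log2)
print(log2)   # [('-U', 'a', 1), ('+U', 'a', 2)]
print(table)  # {'b': 1, 'a': 2}
```

Because the output is a changelog rather than a full result, a downstream table or operator can apply it without recomputing anything.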

These capabilities already exist in streaming processing models—the innovation lies in handling batched input through pipeline processing.

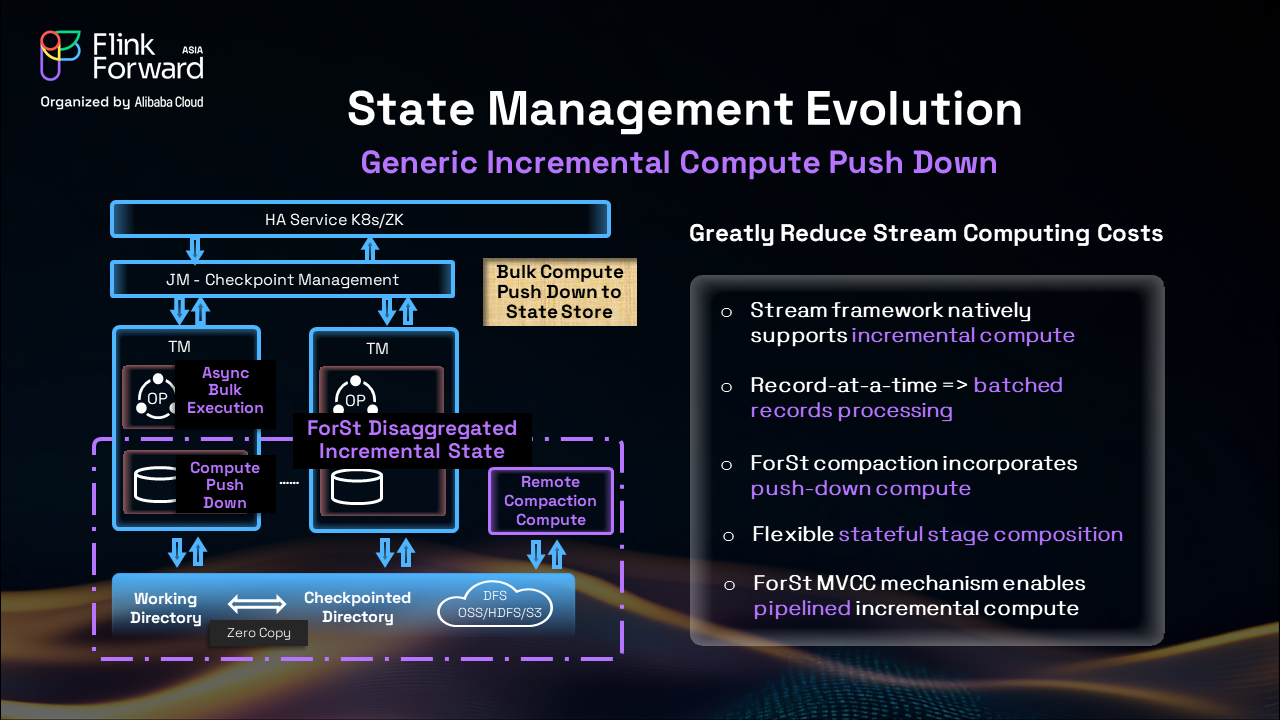

Extending the disaggregated architecture enables three advanced capabilities that push the boundaries of what's possible in incremental processing.

Compute pushdown incorporates computation directly within the compaction service, avoiding unnecessary intermediate calculation and output.

Async bulk execution provides flexible state composition that enables richer query coverage beyond simple aggregations, such as COUNT DISTINCT (a distinct operation followed by a count).
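
To make the COUNT DISTINCT example concrete, here is a minimal sketch of composing a distinct step with a count step over a delta batch. It only illustrates the state composition, not async bulk execution itself, and the function name is hypothetical.

```python
def count_distinct_delta(seen, delta):
    """seen: group -> set of values already observed (the 'distinct' state).

    Returns how much each group's distinct count grows for this delta.
    """
    changes = {}
    for group, value in delta:
        values = seen.setdefault(group, set())
        if value not in values:                          # distinct step
            values.add(value)
            changes[group] = changes.get(group, 0) + 1   # count step
    return changes


seen = {}
first = count_distinct_delta(seen, [("g1", "u1"), ("g1", "u2"), ("g1", "u1")])
second = count_distinct_delta(seen, [("g1", "u2"), ("g2", "u9")])
print(first)   # {'g1': 2}
print(second)  # {'g2': 1}
```

The second batch contributes nothing for `g1` because `u2` is already in the remembered set, which is why the distinct state must be kept alongside the count.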

The MVCC (Multi-Version Concurrency Control) mechanism enables pipelined incremental computation with concurrent input accumulation, allowing the system to accept new changes while previous incremental batches are still being processed.
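
The multi-version idea can be sketched with a toy store in which a running batch reads a pinned version while new input keeps accumulating under the next version. The `VersionedState` class is a hypothetical illustration, not the mechanism's actual design.

```python
class VersionedState:
    """Toy multi-version store: readers pin a version, writers append new ones."""

    def __init__(self):
        self.versions = {}     # key -> list of (version, value), ascending
        self.current = 0

    def put(self, key, value):
        self.versions.setdefault(key, []).append((self.current, value))

    def commit(self):
        # Seal the current version; later writes belong to the next one
        sealed, self.current = self.current, self.current + 1
        return sealed

    def get(self, key, version):
        # Latest value written at or before the pinned version
        for v, value in reversed(self.versions.get(key, [])):
            if v <= version:
                return value
        return None


s = VersionedState()
s.put("k", "batch-1")
pinned = s.commit()            # batch 1 starts processing against version 0
s.put("k", "batch-2")          # new input accumulates concurrently
print(s.get("k", pinned))      # batch-1
print(s.get("k", s.current))   # batch-2
```

The in-flight batch never observes the newer write, so it can finish against a stable view while the next batch's input is already being collected.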

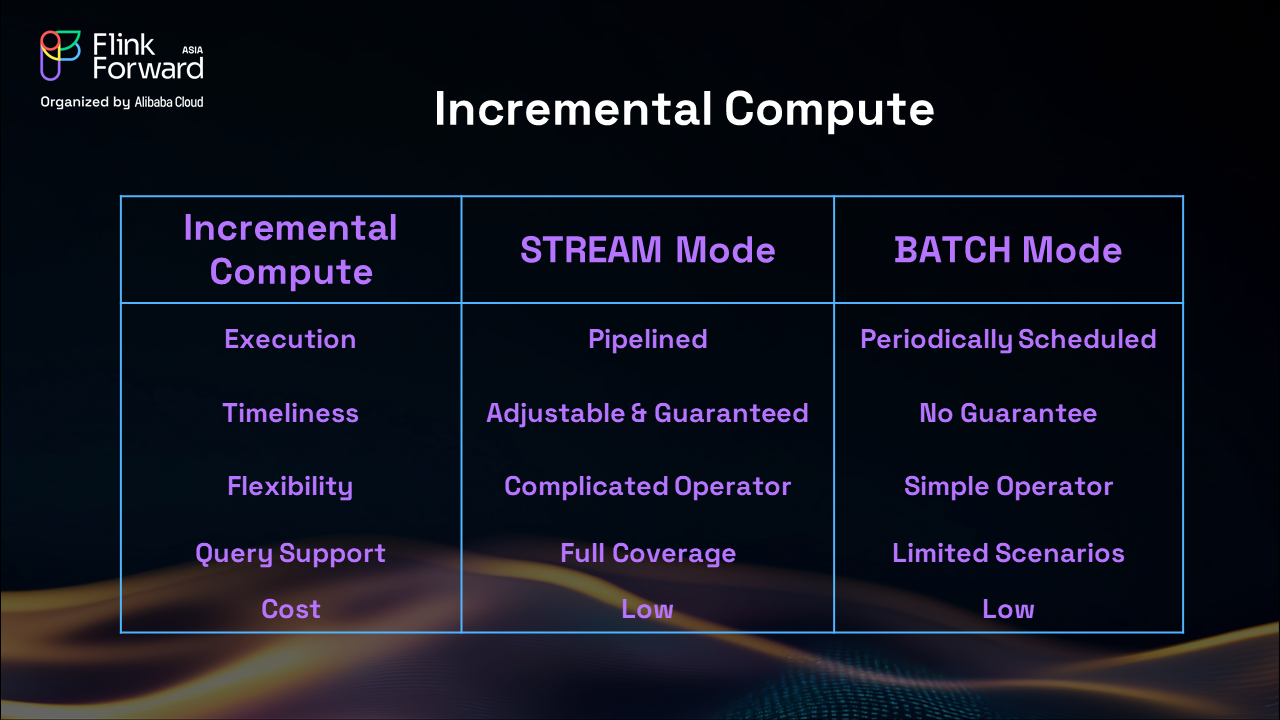

Depending on the execution model, incremental computation can be implemented in two primary modes: streaming (STREAM mode) and batch processing (BATCH mode). These modes differ significantly in execution behavior, latency guarantees, flexibility, query capabilities, and cost efficiency, as shown in the table below.

Flink's state management serves as an application's memory, providing persistent context that enables streaming applications to maintain complex historical relationships. This capability enables sophisticated processing correlations that would be impossible with stateless systems, while abstracting the underlying complexities of data consistency and correctness from application developers.

The ForSt disaggregated architecture fundamentally solves large state challenges by separating state and compute scaling dimensions. Checkpoints now complete within a few seconds regardless of state size, while recovery and rescaling finish in under 10 seconds. In the test reported earlier, these improvements translated into a 50% reduction in total cost.

Generic incremental computation represents the next major evolution, promising a unified stream and batch processing paradigm that combines the best of both worlds. Pushdown optimization integrates computation directly with state storage for maximum efficiency, while cost accessibility initiatives aim to make real-time processing affordable for organizations of all sizes.

Apache Flink's journey from embedded state management to disaggregated architecture represents a fundamental shift toward cloud-native stream processing. The innovations in Flink 2.0—particularly the ForSt disaggregated state backend and asynchronous execution model—solve long-standing challenges around large state management while maintaining the semantic guarantees that make Flink reliable.

Looking ahead, generic incremental computation promises to bridge the gap between stream and batch processing, offering the best of both worlds: the real-time capabilities of streaming with the cost efficiency of batch processing. This evolution positions Apache Flink not just as a streaming engine, but as a comprehensive platform for all real-time data processing needs.

The future of stream processing lies in making powerful real-time analytics accessible to every organization, regardless of scale or budget. With these architectural innovations, that future is rapidly becoming reality.

Yuan Mei is the Director of Engineering at Alibaba Cloud and a Project Management Committee member of Apache Flink. She leads the development of cloud-native stream processing technologies and has been instrumental in advancing Flink's state management capabilities. Her recent paper, "Disaggregated State Management in Apache Flink® 2.0", was published at VLDB 2025, a top-tier database conference.
