By Wang Feng, compiled by Han Fei.

This article is based on the speech given at the Developer Summit of the Yunqi Computing Conference by Wang Feng, a senior technical expert at Alibaba Computing Platform Division.

With data growing at a massive scale, today can more than ever be called the data age. The explosion of data has pushed the boundaries of computing far beyond where they used to be, and the value of that data is hard to measure. When it comes to big data processing, the next-generation big data computing engine Apache Flink cannot go unmentioned. This blog looks at how Flink has developed and explains why Alibaba chose Flink over its competitors.

With the advent of the AI era and the explosive growth of data, data processing in typical big data business scenarios usually follows the same pattern: batch processing handles the full data set, and stream computing handles real-time incremental data. In most business scenarios, the user's business logic is the same for batch processing and stream processing. However, the computing engines for batch and stream processing are quite different, so the user generally has to write two sets of code, which adds burden and cost. At Alibaba, processing goods data for the various e-commerce platforms, including Taobao, Tmall, and AliExpress, often requires two separate business processes, one for full data and one for incremental data. Because of this, the technical experts at Alibaba have often wondered whether an integrated set of big data processing technologies could let us write one set of code based on our own business logic, so that a single solution supports full data as well as incremental (real-time) data. This is the reason why Alibaba chose Flink.

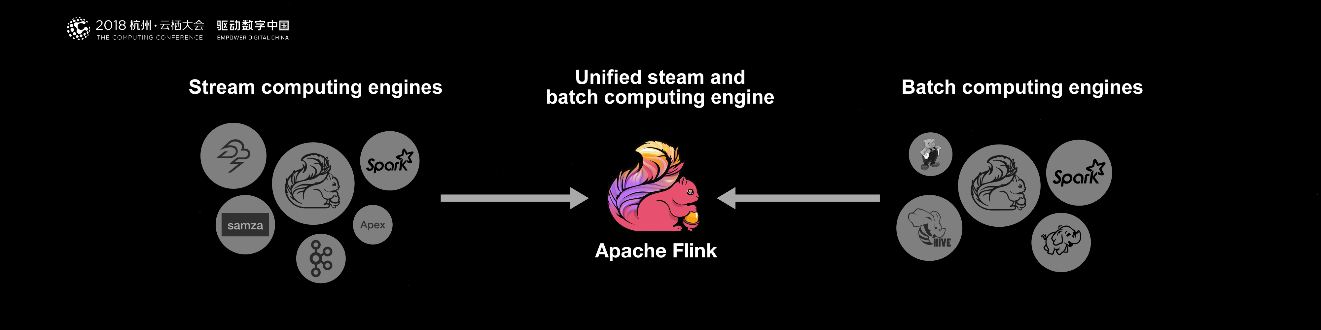

Currently, many open-source big data computing engines are available, such as Storm, Samza, Flink, and Kafka Streams for stream computing, as well as Spark, Hive, Pig, and Flink for batch processing. However, among all of those computing engines, only two support both stream processing and batch processing: Apache Spark and Apache Flink.

Now let's compare the two engines across factors such as technology and ecosystem. In terms of technical philosophy, Spark simulates stream computing on top of batch computing. Flink is the opposite: it simulates batch computing on top of stream computing.

From the perspective of technical development, simulating stream computing on top of batch computing has technical limitations that may be difficult to overcome. Flink, by contrast, shows better scalability because it simulates batch computing on top of stream computing. For this reason, Alibaba decided to use Flink to implement a unified, general-purpose big data engine in the long term.
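
The two philosophies can be illustrated with a minimal Python sketch. This is not Spark or Flink code: the function names (`stream_as_micro_batches`, `batch_as_bounded_stream`, and so on) are hypothetical and only model the idea with a word count.

```python
from itertools import islice


def word_count_batch(records):
    """A batch job: sees the whole input and returns one final result."""
    counts = {}
    for word in records:
        counts[word] = counts.get(word, 0) + 1
    return counts


def stream_as_micro_batches(stream, batch_size):
    """Streaming simulated on a batch engine: cut the stream into small
    batches and run the batch job on each one."""
    iterator = iter(stream)
    while True:
        chunk = list(islice(iterator, batch_size))
        if not chunk:
            return
        yield word_count_batch(chunk)


def word_count_streaming(stream):
    """A streaming operator: updates its state per record and emits
    every update immediately."""
    counts = {}
    for word in stream:
        counts[word] = counts.get(word, 0) + 1
        yield word, counts[word]


def batch_as_bounded_stream(records):
    """Batch simulated on a streaming engine: run the streaming operator
    over a finite input and keep only the final value per key."""
    final = {}
    for word, count in word_count_streaming(records):
        final[word] = count
    return final
```

In this sketch, the micro-batch version can only emit a result once per batch, so its latency is bounded below by the batch interval, while the bounded-stream version produces the same answer as the batch job without any change to the operator.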

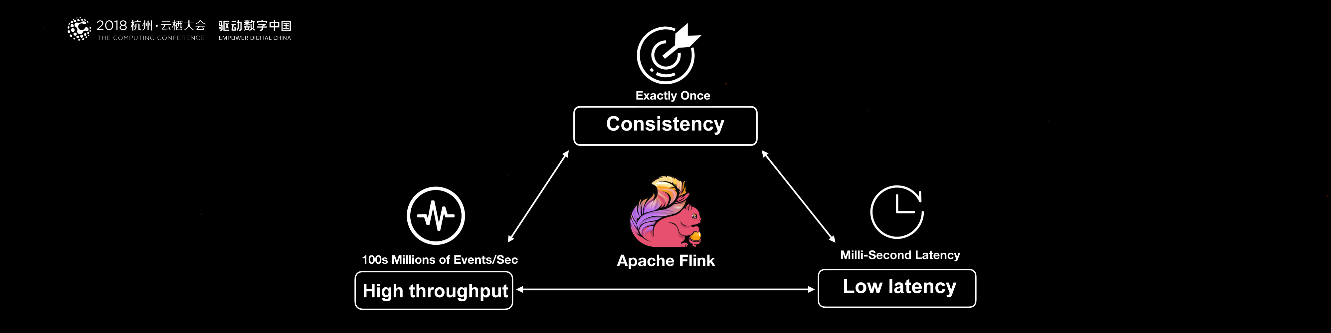

Flink is a unified big data computing engine with low latency and high throughput. In production at Alibaba, Flink can process hundreds of millions of messages or events every second with only milliseconds of latency. Flink also provides exactly-once consistency semantics, which guarantees the correctness of data. This allows Flink to process data in financial scenarios.

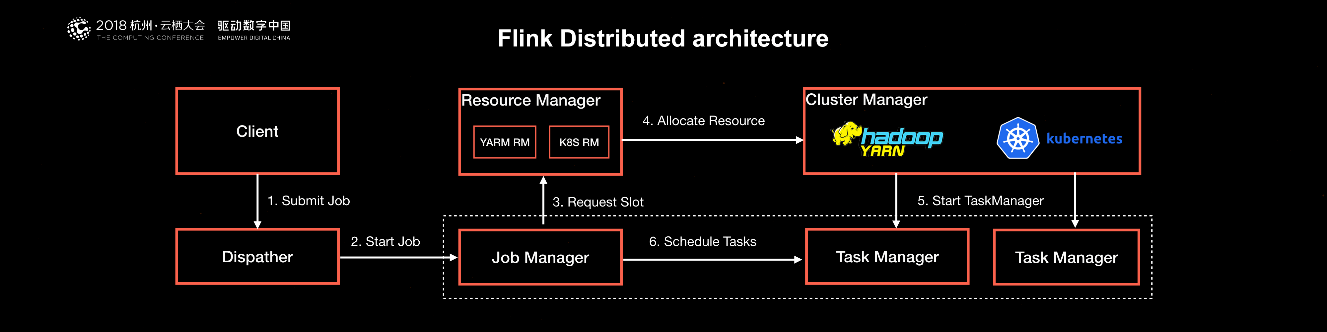

A platform built on Apache Flink was officially launched at Alibaba in 2016 and was first used in two major scenarios: search and recommendation. Currently, all Alibaba services, including those of all Alibaba subsidiaries, use this Flink-based real-time computing platform. The Flink computing engine runs on open-source Hadoop clusters: Hadoop YARN is used for resource management and scheduling, and HDFS is used for data storage. Therefore, Flink integrates seamlessly with the open-source big data software Hadoop.

This Flink-based real-time computing engine not only provides internal services at Alibaba, but also supports Flink-based cloud products in the entire developer ecosystem by using cloud product APIs at Alibaba Cloud.

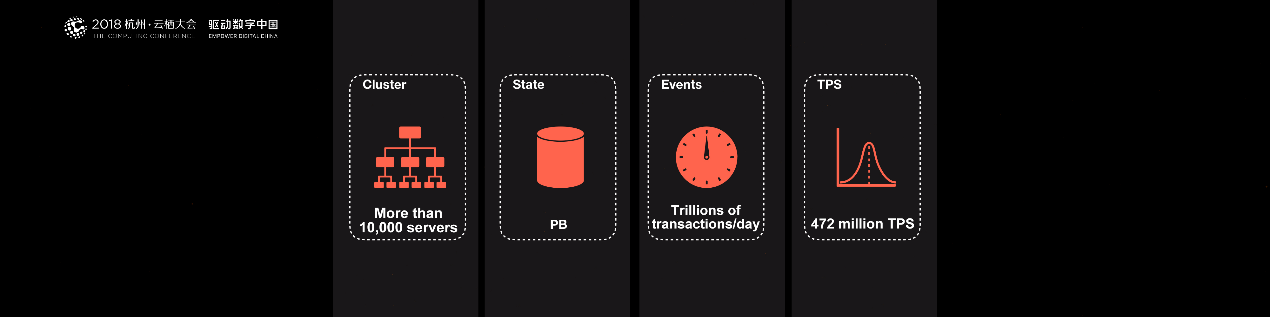

The performance of Flink at Alibaba can be understood in terms of scale, size, events, and speed, as summarized in the figure shown here.

Scale is an important metric for judging whether a system is mature. When Flink was first deployed at Alibaba, it ran on only a few hundred servers, a small fraction of those at Alibaba. Now it runs on tens of thousands of servers, a scale that is rare anywhere in the world. So far, Alibaba has accumulated petabytes of state data in Flink. More than one trillion events are processed on Flink each day, and Flink can process over 472 million transactions per second during business peaks. One of the most notable application scenarios is the Alibaba data dashboard during Double 11 events.

Next, I am going to talk more about Apache Flink from the open-source development perspective. How was Apache Flink born? How did it gradually mature into what it is now? Why did Alibaba adopt Flink along the way? And what contributions and support has Alibaba given to Flink?

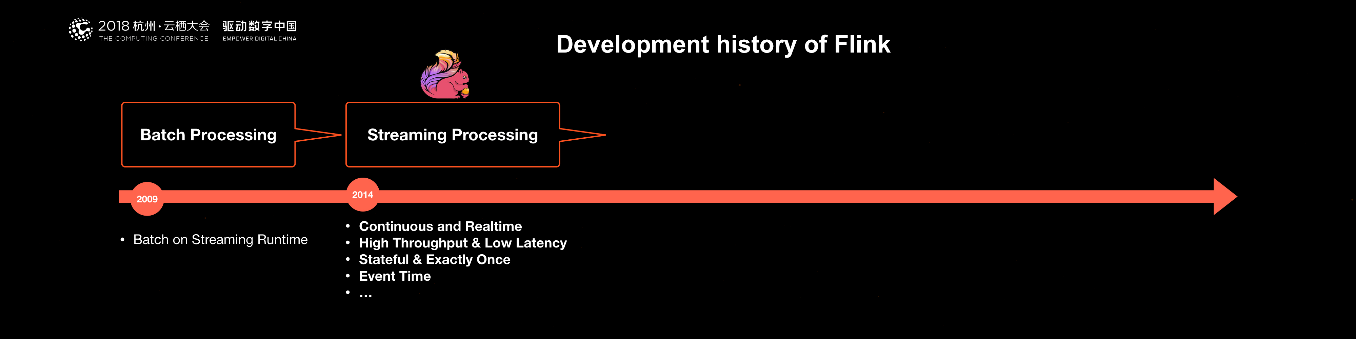

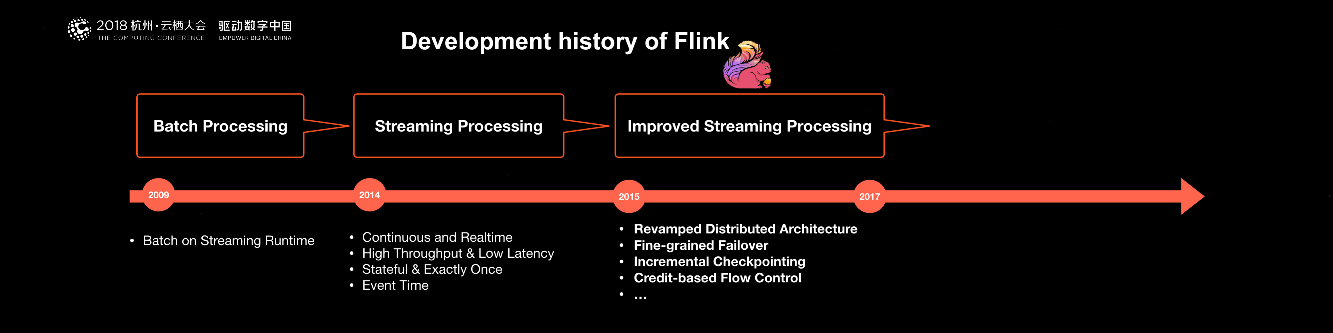

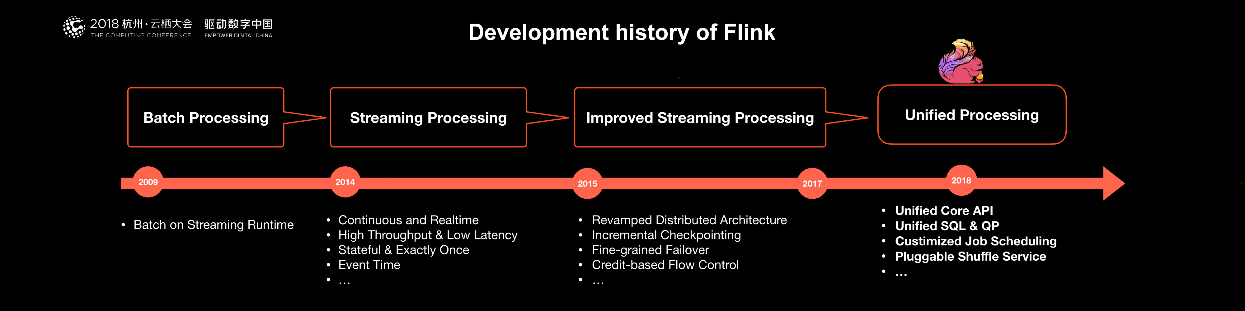

Flink originated from Stratosphere, a big data research project launched by the Technical University of Berlin. Originally, Flink was developed for batch computing. In 2014, core members of the Stratosphere team incubated Flink and contributed it to Apache in the same year. Flink became a top-level Apache big data project soon after that. In the meantime, streaming became the primary direction of the Flink project: using stream computing for all big data computations. This is the history of how Flink came to be what it is today.

In 2014, Flink emerged in the open-source big data field as an engine focused primarily on stream computing. Unlike Storm, Spark Streaming, and other stream computing engines, Flink was a high-throughput, low-latency computing engine that also provided many advanced features. For example, Flink supported stateful computations with state management and strong consistency semantics, and it provided Event Time and WaterMark for out-of-order message processing.

The most distinguishing feature of Flink is state management.

So you may ask, what is state? Stream computing systems and data processing tasks often need statistical values such as Sum, Count, Min, and Max. These values need to be stored, and because they are constantly updated as new data arrives, the stored values and variables can be seen as state. Similarly, if the data source reads from Kafka or RocketMQ, it may be necessary to record the positions (offsets) of the data that has been read. These offset variables are also state.
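
As a minimal illustration in plain Python (not the Flink state API), the sketch below keeps exactly these two kinds of state: running statistics per key and the offset of the last record read. The class names `RunningStats` and `StatefulCounter` and the sample events are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RunningStats:
    """State kept for one key: updated by every event, never recomputed."""
    count: int = 0
    total: float = 0.0
    minimum: float = float("inf")
    maximum: float = float("-inf")

    def update(self, value: float) -> None:
        self.count += 1
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)


@dataclass
class StatefulCounter:
    """Hypothetical operator: per-key statistics plus the source offset."""
    stats: dict = field(default_factory=dict)
    offset: int = -1  # position of the last record consumed from the source

    def process(self, offset: int, key: str, value: float) -> None:
        self.stats.setdefault(key, RunningStats()).update(value)
        self.offset = offset


# Sample (key, value) events; the list index stands in for the source offset.
events = [("shoes", 30.0), ("books", 12.5), ("shoes", 45.0)]
op = StatefulCounter()
for offset, (key, value) in enumerate(events):
    op.process(offset, key, value)
```

After the loop, `op.stats` and `op.offset` together are everything the operator would need to resume from where it stopped.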

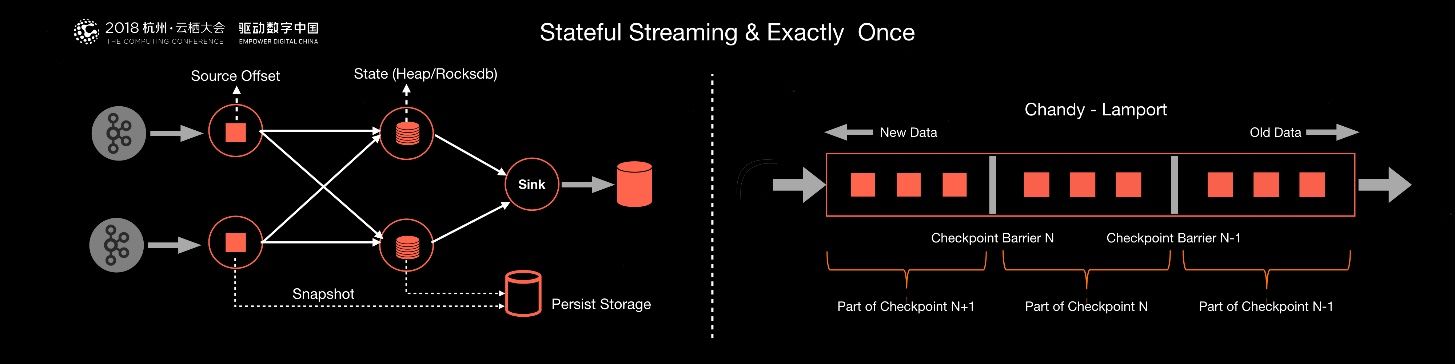

Flink provides built-in state management, which allows state to be stored inside Flink instead of in external systems. On the one hand, this reduces the computing engine's dependency on external systems and the deployment complexity, which makes maintenance easier. On the other hand, it significantly improves performance. If state is kept in external systems such as Redis or HBase, every access goes through the network and RPCs. If state is kept inside Flink, these variables are accessed within Flink's own processes. Flink also regularly persists state as checkpoints and stores the checkpoints in a distributed persistent storage system such as HDFS. If a Flink task fails, the state of the entire stream is recovered from the last checkpoint and stream processing for that task continues. This does not impact users' data at all.

How does Flink use checkpoints to ensure that no data is lost or duplicated during recovery? How does Flink ensure accurate computations?
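
The recovery behavior can be sketched in a few lines of plain Python. This is a simplified model under stated assumptions, not Flink's implementation: the `run` function is hypothetical, a dictionary stands in for the durable checkpoint store, and the source is assumed to be replayable from a saved offset.

```python
import copy


def run(records, checkpoint_every, fail_at=None):
    """Count words, snapshot (state, offset) periodically, and recover
    once from the last snapshot if a failure is injected at `fail_at`."""
    durable = {"counts": {}, "offset": 0}  # stand-in for a checkpoint store
    counts, offset = {}, 0
    failed = False
    while offset < len(records):
        if fail_at is not None and offset == fail_at and not failed:
            failed = True
            # Crash: in-memory state is lost, so restore the last checkpoint
            # and rewind the source to the offset saved with it.
            counts = copy.deepcopy(durable["counts"])
            offset = durable["offset"]
            continue
        word = records[offset]
        counts[word] = counts.get(word, 0) + 1
        offset += 1
        if offset % checkpoint_every == 0:
            durable = {"counts": copy.deepcopy(counts), "offset": offset}
    return counts
```

Because the state and the source offset are saved together, the records processed after the last checkpoint are replayed exactly once on top of the restored state, so the run with a failure returns the same counts as the run without one.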

The answer to these questions is that Flink uses the classic Chandy-Lamport algorithm. The core of this algorithm is to treat the stream computation as a stream topology, regularly insert special barriers at the source nodes of the topology, and broadcast the barriers from upstream to downstream. When a node has received the barriers from all of its inputs, it takes a snapshot of its state. When every node has completed its snapshot, the entire topology has completed one checkpoint. If a fault occurs later, recovery is always performed from the last checkpoint.
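
The barrier step for a single node can be sketched as follows. This is a simplified model in plain Python rather than Flink's implementation; `SumOperator`, the `("BARRIER", id)` tuple encoding, and the channel numbering are assumptions made for illustration.

```python
from collections import deque

BARRIER = "BARRIER"


class SumOperator:
    """Hypothetical node with several input channels that sums integers
    and snapshots its state once a barrier has arrived on every channel."""

    def __init__(self, num_inputs):
        self.total = 0
        self.num_inputs = num_inputs
        self.blocked = set()      # channels whose barrier already arrived
        self.buffered = deque()   # records that arrived behind a barrier
        self.snapshots = {}       # checkpoint id -> state at that checkpoint

    def on_element(self, channel, element):
        if isinstance(element, tuple) and element[0] == BARRIER:
            self.blocked.add(channel)
            if len(self.blocked) == self.num_inputs:
                # Barriers from all inputs received: take the snapshot,
                # then release the records that were held back.
                self.snapshots[element[1]] = self.total
                self.blocked.clear()
                pending, self.buffered = self.buffered, deque()
                for ch, el in pending:
                    self.on_element(ch, el)
        elif channel in self.blocked:
            # This record belongs to the next checkpoint; hold it back.
            self.buffered.append((channel, element))
        else:
            self.total += element


op = SumOperator(num_inputs=2)
for channel, element in [
    (0, 1), (1, 10),
    (0, (BARRIER, 1)),   # barrier arrives on channel 0 first
    (0, 2),              # arrives after the barrier: not part of checkpoint 1
    (1, 20),
    (1, (BARRIER, 1)),   # barrier arrives on channel 1: snapshot is taken
    (1, 30),
]:
    op.on_element(channel, element)
```

Holding back the records that arrive behind a barrier is what keeps the snapshot consistent: checkpoint 1 contains only the effect of records that were sent before the barrier on every input.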

Flink uses this classic algorithm to ensure strongly consistent semantics. This is also the core difference between Flink and other stateful stream computing engines.

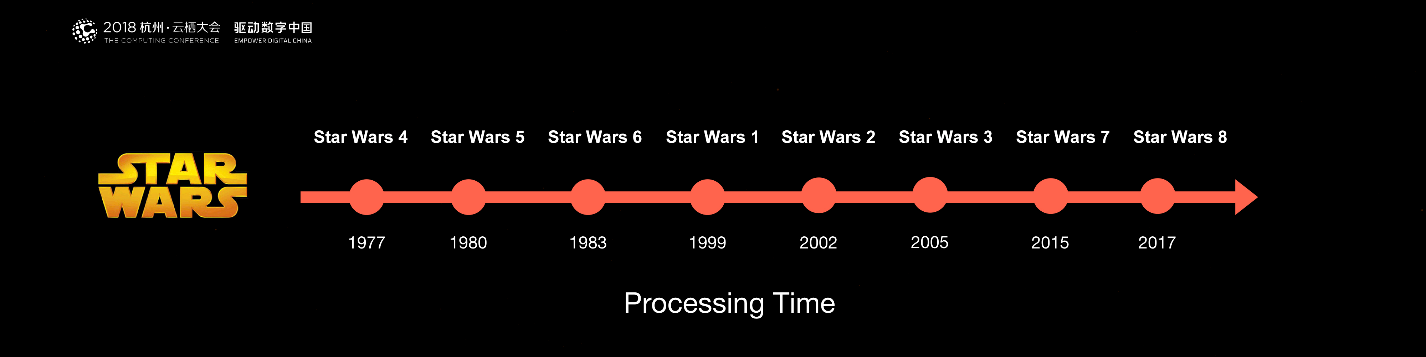

Next, I will explain how Flink processes out-of-order data. For example, if you watch the Star Wars movies in order of release date, you may find that the plot is not told in chronological order.

In stream computing, many scenarios are very similar. For example, the time at which messages arrive may be inconsistent with the time at which they were generated at the source or recorded in the logs of the online system. In stream computing, messages are expected to be processed in order of their generation time at the source rather than the time when they finally arrive at the application. Flink provides powerful features such as Event Time and WaterMark for processing out-of-order data, so users can process messages in an orderly manner. This is an important feature of Flink.
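
A minimal sketch of the idea in plain Python, assuming integer event timestamps, tumbling windows, and a watermark that trails the largest event time seen so far by a fixed `max_delay`. The function `tumbling_window_sums` is hypothetical and does not use the Flink API.

```python
def tumbling_window_sums(events, window_size, max_delay):
    """Sum values per event-time window. A window fires once the watermark
    (largest event time seen minus max_delay) passes the end of the window."""
    windows = {}                  # window start -> running sum
    fired = []                    # (window start, sum) in firing order
    watermark = float("-inf")
    for event_time, value in events:
        start = event_time - event_time % window_size
        if start + window_size <= watermark:
            continue              # window already fired: the event is too late
        windows[start] = windows.get(start, 0) + value
        watermark = max(watermark, event_time - max_delay)
        ready = sorted(s for s in windows if s + window_size <= watermark)
        for s in ready:
            fired.append((s, windows.pop(s)))
    # End of input: flush the windows that are still open.
    for s in sorted(windows):
        fired.append((s, windows.pop(s)))
    return fired


# Events arrive out of order; each tuple is (event_time, value).
arrivals = [(1, 1), (4, 1), (3, 1), (12, 1), (8, 1), (2, 1), (25, 1), (6, 1)]
result = tumbling_window_sums(arrivals, window_size=10, max_delay=5)
```

In this run, the events with timestamps 8 and 2 arrive after the event with timestamp 12 but are still counted in the first window, because the watermark has not yet passed the end of that window. The final event with timestamp 6 arrives after the window has fired and is dropped.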

The above describes the core concepts and ideas of Flink in its initial stage (the first development phase). The second development phase of Flink is the period from 2015 to 2017, and Alibaba joined the project during this time. The adoption of Flink at Alibaba started with a survey in our search division in mid-2015. At that time, Alibaba already had its own batch processing and stream processing tools, both in-house and open-source. However, Alibaba also did a lot of research on the development direction and future trends of next-generation big data engines.

Based on this research, Alibaba concluded that big data technologies would evolve towards computing engines that meet general-purpose big data computation requirements and support both batch and stream processing. This is why Alibaba chose Flink.

However, Flink in 2015 was not mature enough. Neither its scale nor its stability had been proven in practical scenarios. As a result, Alibaba chose to create an internal branch of Flink and made many modifications and improvements so that the branch could support ultra-large-scale business scenarios at Alibaba. In this process, our team made many improvements and optimizations to performance and stability. In addition, we implemented many core architecture and feature innovations, which were also contributed to the community, including the new distributed architecture in Flink, the incremental checkpoint mechanism, credit-based network flow control, and Streaming SQL.

Let me show you two design cases. The first is that Alibaba restructured the distributed architecture of Flink and clearly layered and decoupled job scheduling from resource management. The main advantage of this design is that Flink can run natively on different open-source resource managers. With the improved distributed architecture, Flink can run natively on Hadoop YARN and Kubernetes, two common resource management systems. In addition, the centralized task scheduling in Flink was changed to distributed scheduling so that Flink can support larger clusters and better resource isolation.

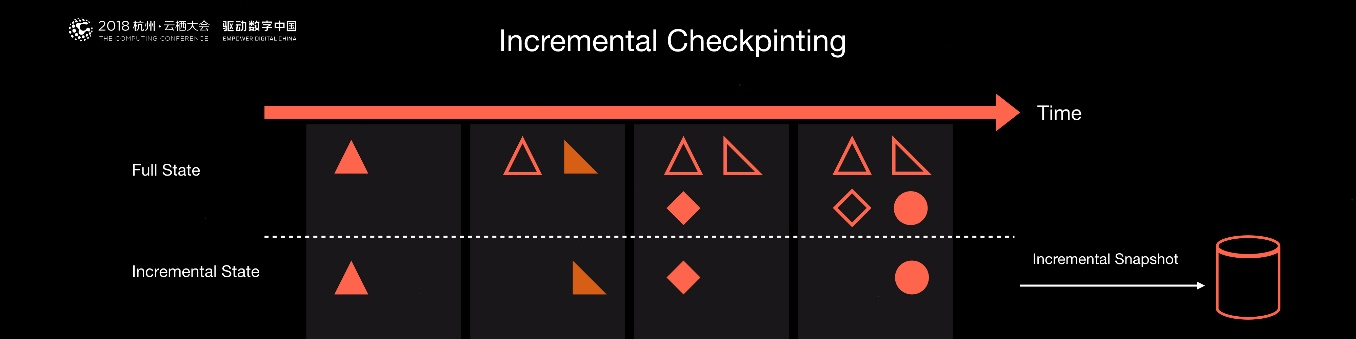

The other is the implementation of the incremental checkpoint mechanism. Flink provides stateful computations and a regular checkpointing mechanism. If the internal state keeps growing and every checkpoint persists all of it, checkpoints become larger and larger, and checkpointing may eventually become impossible. With incremental checkpoints, Flink automatically detects which data has changed since the previous checkpoint and persists only the modified data. With this mechanism, checkpointing does not become harder over time, and the performance of the entire system stays stable. This is also an important feature that we have contributed to the community.
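
The principle can be sketched in plain Python by tracking which keys were modified since the previous checkpoint. This only illustrates the idea and is not how Flink implements it internally; the `IncrementalState` class is hypothetical, a list stands in for durable storage, and key deletion is left out for brevity.

```python
class IncrementalState:
    """Hypothetical keyed state that persists only the entries changed
    since the previous checkpoint."""

    def __init__(self):
        self.data = {}
        self.dirty = set()   # keys modified since the last checkpoint
        self.deltas = []     # stand-in for durable checkpoint storage

    def put(self, key, value):
        self.data[key] = value
        self.dirty.add(key)

    def checkpoint(self):
        """Persist only the modified entries and return how many were written."""
        delta = {key: self.data[key] for key in self.dirty}
        self.deltas.append(delta)
        self.dirty.clear()
        return len(delta)

    @staticmethod
    def restore(deltas):
        """Rebuild the full state by applying the deltas in order."""
        state = {}
        for delta in deltas:
            state.update(delta)
        return state


state = IncrementalState()
for i in range(1000):
    state.put(f"user-{i}", 0)
first = state.checkpoint()    # every key is new, so all 1000 are written
state.put("user-7", 5)
second = state.checkpoint()   # only the single modified key is written
```

The cost of the second checkpoint depends on how much changed, not on how large the state has become, which is the property described above.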

The improvements to Flink Streaming from 2015 to 2017 gradually matured the Flink community, and Flink has become the most popular computing engine in the streaming field. However, Flink was originally designed to be a big data engine for unified batch and stream computing. Efforts towards this design goal started in 2018. To achieve this goal, Alibaba established a new, unified API architecture and solution. Now that the stream computing features have been improved in many ways, we think that batch computing needs improvement as well. Many improvements are needed in the task scheduling layer, the data shuffle layer, fault tolerance, and usability.

Due to limited space, I will share just two improvements with you:

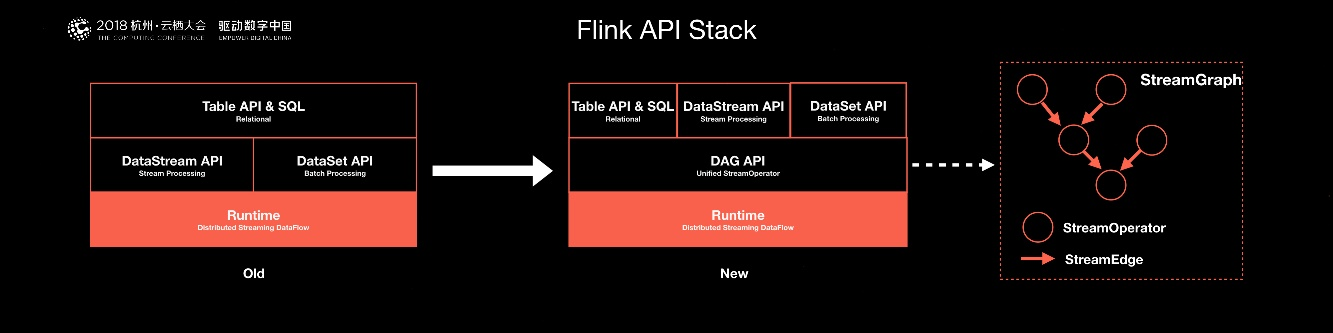

Let's first look at the current state of the Flink API stack. Developers who have researched or used Flink may already know this. Flink provides two sets of basic APIs: DataStream and DataSet. The DataStream API is provided for stream processing, while the DataSet API is provided for batch processing. However, the two APIs have completely different execution paths and even generate different tasks to run. This conflicts with the idea of a unified API and is not a final solution. A basic API layer for unified batch and stream processing is required on top of the Runtime.

Therefore, we use a DAG API in the new architecture as an API layer for unified batch and stream processing. In this DAG, batch computing and stream computing do not have to be expressed distinctly. Developers only need to define different attributes on different nodes and edges to decide whether data has the stream attribute or the batch attribute. The entire topology can merge the semantic expressions of batch and stream computing. During the computation process, developers only need to express their needs, without having to distinguish between batch computing and stream computing. With this API, a unified API Stack can be implemented in Flink.

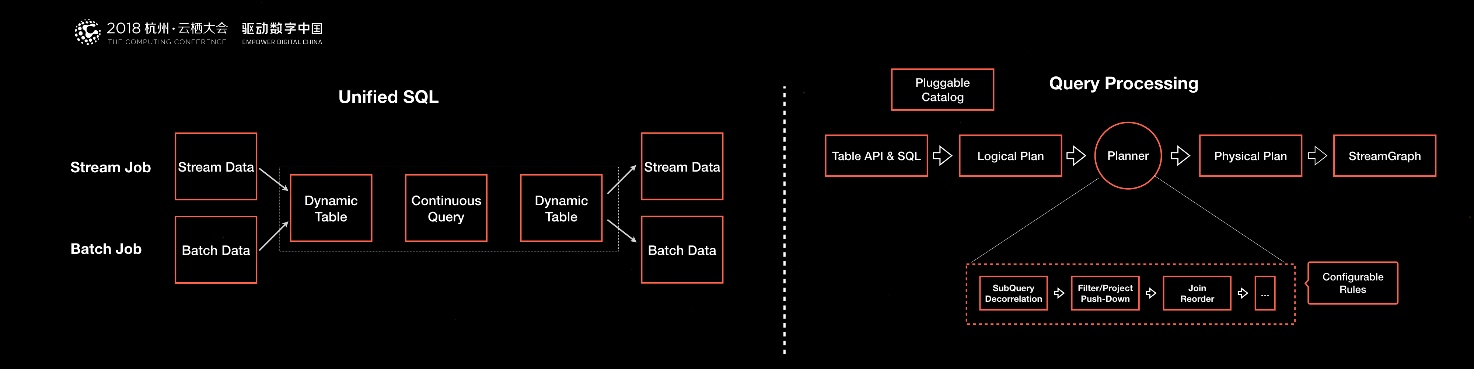

In addition to a unified basic API layer and API stack, a unified SQL solution in the upper layer is also required. For batch and stream SQL, we can consider that both batch computing and stream computing have a data source, and we can model both sources as data tables. The data source of stream data can be considered a continuously updated table, and the data source of batch data can be considered a relatively static table that is not updated. All of the data processing can be seen as an SQL query, and the final results can also be modeled as a result table.

The result table for stream computing is a continuously updated table. The result table for batch computing is a table that contains the result of a single update. From the perspective of SQL semantics, batch and stream computing can therefore be unified. In addition, both stream processing SQL and batch processing SQL can be expressed and reused through the same query. This allows both batch and stream processing tasks to be parsed and optimized by using the same query, and many operators can be reused for batch and stream processing.
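
The table view can be illustrated with a short Python sketch in which one aggregation step, standing in for a query such as a sum grouped by category, is shared by a batch run and a streaming run. The function names and the sample rows are hypothetical, and the sketch does not use Flink SQL.

```python
def apply_row(result, row):
    """Shared operator: fold one (category, amount) row into the result table."""
    category, amount = row
    result[category] = result.get(category, 0) + amount
    return result


def batch_query(table):
    """Batch: the source is a static table, so the result is produced once."""
    result = {}
    for row in table:
        apply_row(result, row)
    return result


def stream_query(stream):
    """Streaming: the source keeps growing, so the result table is
    updated (and emitted) after every arriving row."""
    result = {}
    for row in stream:
        yield dict(apply_row(result, row))


orders = [("shoes", 30), ("books", 12), ("shoes", 45)]
batch_result = batch_query(orders)
versions = list(stream_query(iter(orders)))
```

Each element of `versions` is one version of the continuously updated result table, and the last version equals the batch result, which is why the same query and the same operator can serve both modes.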

First, building on the nature of Flink, Alibaba will make it a general-purpose, unified big data computing engine. Alibaba will build a better Flink ecosystem and make Flink suitable for many scenarios. Flink has already become a popular stream computing engine, and many Internet companies have reached a consensus that Flink is the future of big data and the best stream computing engine. The next important step is to make breakthroughs in batch computing. We need to make Flink applicable in more scenarios and make it a popular engine for batch computing as well. This will allow seamless switching between stream and batch processing, and the boundary between them will become less distinct. With Flink, a single computing task can include both stream computing and batch computing.

The second development direction is to make the Flink ecosystem support more languages, not only Java and Scala but also languages such as Python and Go, which are used for machine learning. In the future, we expect that more programming languages can be used to develop Flink computing tasks and describe computing logic so that Flink can connect with more ecosystems.

Finally, AI is also worth mentioning, because many big data computing requirements and data volumes today serve popular AI scenarios. Therefore, it is also necessary to improve the machine learning algorithm library in the upper layer of Flink and integrate Flink with mature machine learning and deep learning algorithms. For example, TensorFlow on Flink can integrate ETL in big data, feature computation in machine learning, and computation for training, so that developers can benefit from multiple ecosystems at once.

For more information, visit Apache Flink Community China
