By Xintong Song

This article is based on a keynote speech given by SONG Xintong during Flink Forward Asia 2023. SONG leads a team that mainly works on Apache Flink's distributed runtime at Alibaba Cloud Intelligence, and is also an Apache Flink Project Management Committee (PMC) member and the release manager of Apache Flink 2.0. In the speech, SONG introduced the main technical directions and plans of the Apache Flink community in 2024, as well as the preparations for releasing Apache Flink 2.0.

The following aspects make up the current and future focus of the Apache Flink community:

This section describes the future plans of the Apache Flink community in the field of stream computing.

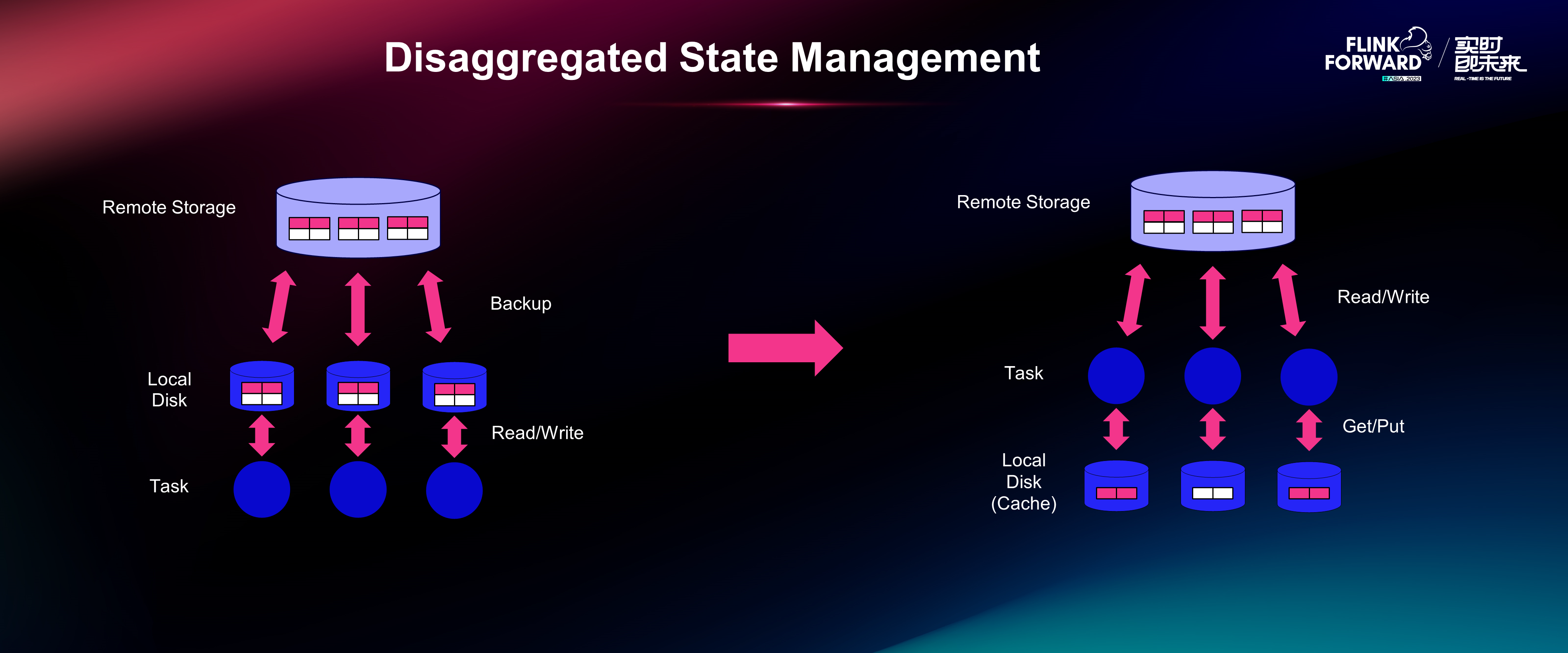

The most important technical endeavor of Apache Flink in streaming is to advance the compute-storage disaggregated architecture for better state management. Because Apache Flink is a stateful engine, state management has always been one of its core capabilities. In the cloud native era, as massive amounts of data migrate to the cloud, Apache Flink faces new challenges. Cloud workloads typically run in a unified resource pool with pod/container-level resource isolation, including isolation of disk space. If the state of an Apache Flink job is stored on local disks, the disk space each pod needs cannot be determined in advance because the state size is unpredictable, which makes disk resources difficult to manage. In addition, one of the main advantages of the cloud is resource elasticity. Both the Apache Flink community and commercial vendors have launched features, such as Auto-scale and Auto-pilot, that let Apache Flink scale automatically as data traffic changes. However, with these features the job still has to be suspended and restarted during re-scaling. If the state is stored on local disks, Apache Flink must download the state files to local disks when the job resumes, which prolongs job downtime during re-scaling.

To address these issues, the Apache Flink community has dedicated significant effort to designing and implementing a next-generation disaggregated state management architecture.
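To make the idea concrete, here is a minimal Python sketch of disaggregated keyed state, assuming a simple design in which a remote store (a plain `dict` standing in for a distributed file system or object storage) holds the authoritative state and local storage is only a bounded LRU cache. The `DisaggregatedState` class and its write-through behavior are hypothetical illustrations, not Flink's actual state backend.

```python
from collections import OrderedDict


class DisaggregatedState:
    """Hypothetical keyed state: the remote store is the source of truth,
    and local storage is only a bounded LRU cache."""

    def __init__(self, remote, cache_capacity):
        self.remote = remote              # shared remote store (stand-in for DFS)
        self.cache = OrderedDict()        # local cache, never larger than capacity
        self.capacity = cache_capacity
        self.remote_reads = 0

    def get(self, key):
        if key in self.cache:
            self.cache.move_to_end(key)   # mark as most recently used
            return self.cache[key]
        self.remote_reads += 1            # cache miss: read from remote storage
        value = self.remote.get(key)
        self._put_local(key, value)
        return value

    def put(self, key, value):
        self.remote[key] = value          # write-through to the remote store
        self._put_local(key, value)

    def _put_local(self, key, value):
        self.cache[key] = value
        self.cache.move_to_end(key)
        while len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used entry


remote = {}
state = DisaggregatedState(remote, cache_capacity=2)
for k in range(100):
    state.put(k, k * k)
print(len(state.cache), len(remote))  # 2 100

# After a restart or rescale, a new instance attaches to the same remote store.
restored = DisaggregatedState(remote, cache_capacity=2)
print(restored.get(5))  # 25
```

Local disk usage stays bounded by the cache capacity no matter how large the state grows, and a restored instance can serve reads immediately instead of first downloading every state file. The trade-off in this sketch is a remote read on each cache miss.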

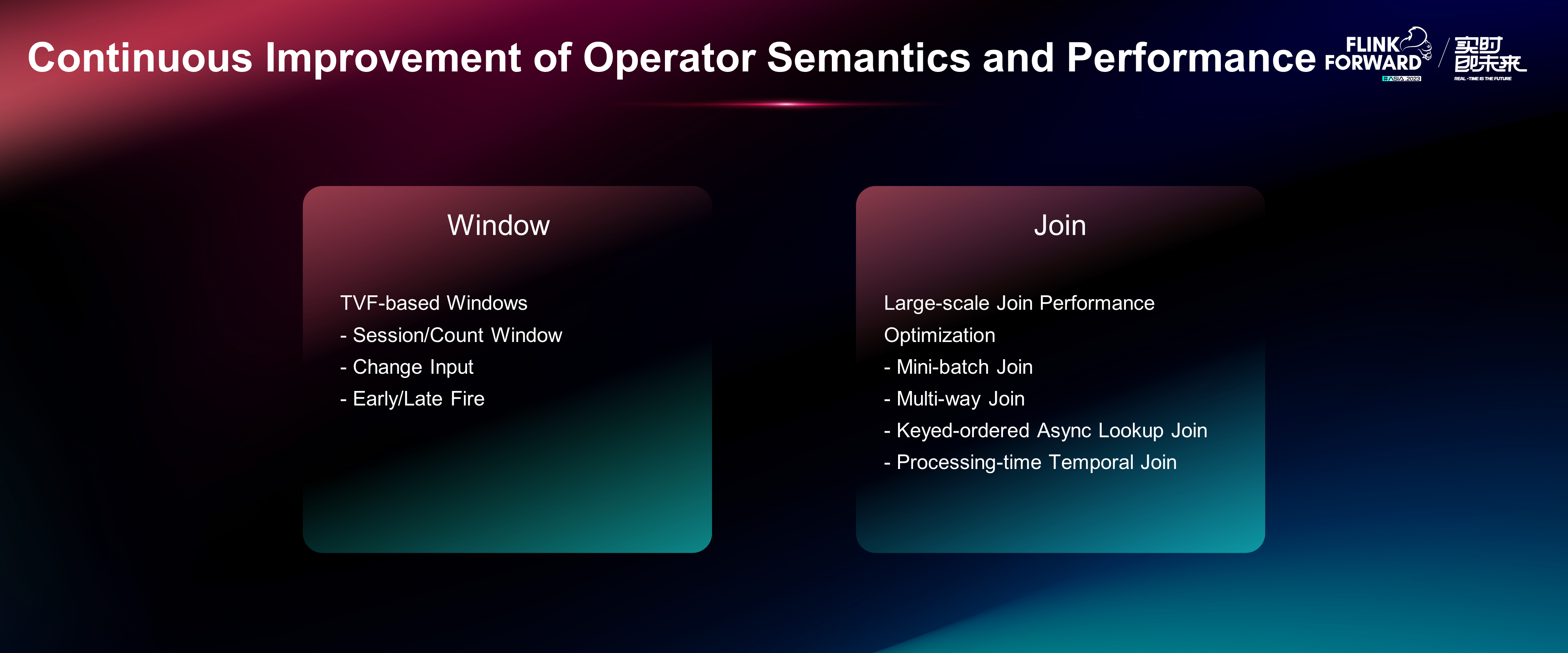

In addition to state management, the Apache Flink community has been optimizing the semantics and features of some key operators in stream processing. This section describes two of them: window and join.

Window is a unique concept in stream processing and is mainly used for aggregation. In Flink SQL, we recommend that users use table-valued functions to define windows. The Apache Flink community will continue to improve the window capabilities based on table-valued functions, such as support for more window types, change inputs, and early or late firing.
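For readers new to window table-valued functions, the following Python sketch mimics what a tumbling window TVF does: it appends `window_start` and `window_end` columns to each row, after which an ordinary `GROUP BY` aggregates per window. The equivalent Flink SQL query is shown in the comment; the table `orders` and its columns `ts` and `amount` are hypothetical, and the sketch ignores watermarks and late data, so it models only the final result over a finite input.

```python
from collections import defaultdict

# Flink SQL equivalent (for comparison):
#   SELECT window_start, window_end, SUM(amount)
#   FROM TABLE(TUMBLE(TABLE orders, DESCRIPTOR(ts), INTERVAL '10' MINUTES))
#   GROUP BY window_start, window_end;


def tumble(rows, size):
    """Append window_start/window_end to every row, like a tumbling window TVF."""
    for row in rows:
        start = row["ts"] - row["ts"] % size  # align to the window boundary
        yield {**row, "window_start": start, "window_end": start + size}


def sum_per_window(rows, size):
    """GROUP BY window_start, window_end and SUM(amount)."""
    totals = defaultdict(int)
    for row in tumble(rows, size):
        totals[(row["window_start"], row["window_end"])] += row["amount"]
    return dict(totals)


orders = [{"ts": 1, "amount": 10}, {"ts": 4, "amount": 5}, {"ts": 12, "amount": 7}]
print(sum_per_window(orders, size=10))  # {(0, 10): 15, (10, 20): 7}
```

Separating window assignment (the TVF) from aggregation (`GROUP BY`) is what makes this style composable: other window types only change how the window columns are assigned.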

Join is commonly used in data analytics, and is performance critical in large-scale data processing. The Apache Flink community is exploring various performance optimization technologies for the join operator, such as minibatch join and multi-way join.
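A minimal Python sketch of the general mini-batch idea follows, under simplifying assumptions: only the left input is streaming, the right side is a pre-populated keyed state, and records are buffered into fixed-size batches. The `CountingState` class and both join functions are hypothetical; Flink's actual minibatch join also handles changelog records and maintains state for both inputs. The point is that grouping buffered records by key lets the operator read state once per distinct key rather than once per record, at the cost of extra latency while a batch fills.

```python
from collections import OrderedDict


class CountingState:
    """Hypothetical keyed state for the right input that counts state reads."""

    def __init__(self, data):
        self.data = data
        self.reads = 0

    def get(self, key):
        self.reads += 1
        return self.data.get(key, [])


def join_per_record(left_rows, right_state):
    """Look up the right-side state once for every incoming left record."""
    out = []
    for key, value in left_rows:
        for other in right_state.get(key):
            out.append((key, value, other))
    return out


def join_mini_batch(left_rows, right_state, batch_size):
    """Buffer left records, group them by key, then read state once per key."""
    out = []
    for i in range(0, len(left_rows), batch_size):
        batch = OrderedDict()
        for key, value in left_rows[i:i + batch_size]:
            batch.setdefault(key, []).append(value)
        for key, values in batch.items():
            matches = right_state.get(key)  # one state read per distinct key
            for value in values:
                for other in matches:
                    out.append((key, value, other))
    return out


left = [("a", 1), ("a", 2), ("b", 3), ("a", 4)]
per_record_state = CountingState({"a": ["x"], "b": ["y"]})
mini_batch_state = CountingState({"a": ["x"], "b": ["y"]})
per_record = join_per_record(left, per_record_state)
mini_batch = join_mini_batch(left, mini_batch_state, batch_size=4)
print(per_record_state.reads, mini_batch_state.reads)  # 4 2
```

Both variants produce the same joined rows (possibly in a different order); only the number of state accesses differs.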

This section describes the future plan of the Apache Flink community for unifying batch and stream processing.

Unified batch and stream processing allows you to use a single set of APIs to develop streaming and batch jobs and execute the jobs with the same engine and operators to ensure data consistency. Unified APIs are a crucial prerequisite for achieving unified batch and stream processing. Apache Flink provides the unified SQL API and DataStream API for this purpose, but it is not always feasible to write code once and switch dynamically between the streaming and batch execution modes.

In this context, the Apache Flink community is exploring new unified syntax and semantics for Flink SQL. The new solution borrows ideas from materialized views so that users can write code that runs in both modes. For the DataStream API, however, masking the differences between the streaming and batch modes is harder, because it is a procedural API that exposes many low-level details. The Apache Flink community's approach is to identify which operators and capabilities support both batch and stream processing and which do not, so that users can make more informed decisions when developing their business logic.

In addition to unified APIs, the engine must also have the capability and performance to handle both batch and streaming. At present, Apache Flink is already a leading stream processing engine, and the Apache Flink community is working hard to elevate its batch processing capabilities to the same level.

The community has put efforts into the following aspects:

After developing excellent stream and batch processing capabilities, the Apache Flink community plans to achieve the integration of the two modes.

The streaming and batch modes have the same data processing logic but different performance preferences during runtime.

In streaming mode, low latency is preferred, so that the system can generate an output in real time upon receiving each data record. However, low-latency data processing may result in low resource utilization and low computing efficiency.

The batch mode has lower requirements on latency. The time and resources it takes to complete the data processing for an entire dataset are more important. Therefore, high throughput is preferred for data processing in batch mode.
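The difference in preferences can be illustrated with a toy word count in Python: the same counting logic either emits an updated result for every record, as a streaming job would, or emits each final count once after consuming a bounded input, as a batch job would. This is a simplified illustration, not how Flink's runtime is implemented.

```python
from collections import Counter


def word_count_streaming(words):
    """Emit (word, count) after every record: low latency, many outputs."""
    counts = Counter()
    for word in words:
        counts[word] += 1
        yield word, counts[word]


def word_count_batch(words):
    """Read the whole bounded input first, then emit each final count once."""
    return dict(Counter(words))


words = ["flink", "paimon", "flink"]
print(list(word_count_streaming(words)))  # [('flink', 1), ('paimon', 1), ('flink', 2)]
print(word_count_batch(words))            # {'flink': 2, 'paimon': 1}
```

The streaming variant produces an output for every record, so downstream consumers must treat earlier counts as superseded; the batch variant produces one row per word, but only after all input has been seen.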

In common data processing scenarios:

To address the preceding issue, we introduced the concept of mixed batch and stream processing. It enables the engine to automatically determine whether high throughput or low latency is required and to select the batch or streaming mode accordingly. If the job's status or performance preferences change while the job is running, the engine can also switch modes dynamically.
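The article does not describe how the engine makes this decision. As one possible illustration, the Python sketch below assumes the signal is the size of the unprocessed backlog: while a job is catching up on a large backlog it favors throughput (batch-style execution), and once it has caught up it favors latency (streaming). The `Mode` enum, the `plan_modes` function, and both thresholds are hypothetical; two thresholds are used so the mode does not flap when the backlog hovers around a single cut-off.

```python
from enum import Enum


class Mode(Enum):
    BATCH = "batch"          # favor throughput
    STREAMING = "streaming"  # favor latency


def plan_modes(backlogs, high, low):
    """Pick a mode per observation: enter BATCH above `high`, return to STREAMING below `low`."""
    mode = Mode.STREAMING
    modes = []
    for backlog in backlogs:
        if mode is Mode.STREAMING and backlog > high:
            mode = Mode.BATCH
        elif mode is Mode.BATCH and backlog < low:
            mode = Mode.STREAMING
        modes.append(mode)
    return modes


# Backlog (records not yet processed) observed at successive checks.
backlogs = [1_000_000, 400_000, 50_000, 500, 20, 30]
print([m.value for m in plan_modes(backlogs, high=100_000, low=1_000)])
# ['batch', 'batch', 'batch', 'streaming', 'streaming', 'streaming']
```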

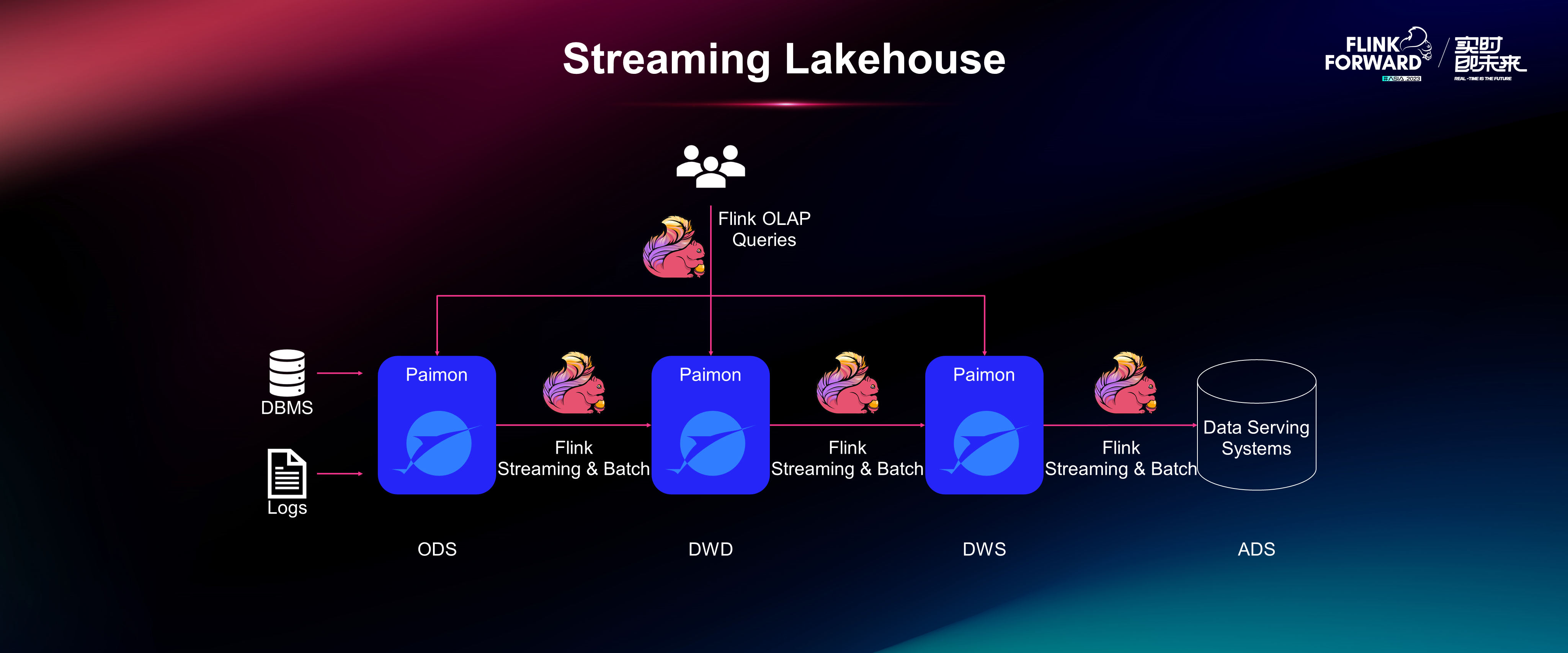

The Apache Flink community continues to improve the streaming lakehouse architecture. The preceding figure shows the streaming lakehouse architecture that is built by integrating the unified computing capability of Apache Flink with the unified storage capability of Apache Paimon.

In addition to the aspects above, the Apache Flink community also needs to improve the performance of short online analytical processing (OLAP) queries, enhance the features of SQL Gateway, and strengthen the data management and metadata management capabilities of Flink SQL in lake storage scenarios.

This section describes the improvements that can be made in terms of user experience.

Upgrading a SQL job often changes the job's topology, which may lead to state compatibility issues. The Apache Flink community has proposed a solution that is currently in the minimum viable product (MVP) phase and will continue to be improved.

In terms of serialization, Apache Flink offers comprehensive features, including a powerful type and serialization system that supports various serializers. However, its usability still has room for improvement. For example, changing the mappings between data types and serializers, or the rules for automatically selecting serializers, requires code changes. To address this issue, the Apache Flink community is developing a serialization management solution based on configuration files.
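As a rough illustration of why configuration-driven serializer selection helps, the following Python sketch looks up the serializer for each type in a mapping loaded from configuration text and falls back to a default. The JSON layout, the `serializers` key, and the serializer names are hypothetical and do not reflect the format the Apache Flink community is designing; the point is only that switching `Point` from one serializer to another requires editing configuration, not code.

```python
import json
from dataclasses import astuple, dataclass


@dataclass
class Point:
    x: int
    y: int


# Available serializers, keyed by the name a configuration file can refer to.
SERIALIZERS = {
    "json": lambda obj: json.dumps(obj.__dict__, sort_keys=True).encode(),
    "csv": lambda obj: ",".join(str(v) for v in astuple(obj)).encode(),
}


def load_registry(config_text):
    """Map type names to serializers as declared in the (hypothetical) config."""
    mapping = json.loads(config_text)["serializers"]
    return {type_name: SERIALIZERS[name] for type_name, name in mapping.items()}


def serialize(obj, registry, default="json"):
    """Use the configured serializer for the object's type, else the default."""
    serializer = registry.get(type(obj).__name__, SERIALIZERS[default])
    return serializer(obj)


print(serialize(Point(1, 2), load_registry('{"serializers": {"Point": "csv"}}')))  # b'1,2'
print(serialize(Point(1, 2), load_registry('{"serializers": {}}')))  # b'{"x": 1, "y": 2}'
```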

In terms of the configuration system, Apache Flink provides a large number of configuration options. However, the options are not free of issues, such as improper default values, unclear semantics and scope, and exposure of internal details. The Apache Flink community is reworking its configuration mechanism, including the re-evaluation of all existing configuration items.

In terms of APIs, outdated APIs, including the DataSet API, Scala API, Legacy Table Source API, and Legacy Table Sink API, will be removed. Existing major APIs, such as the DataStream API and REST API, as well as metrics, will continue to be improved.

The optimizations described above involve public interfaces, so they cannot be made without breaking backward compatibility. Apache Flink 2.0 is being introduced to accommodate these changes.

Apache Flink 1.0 was released in 2016, and Apache Flink 1.18 was released in October 2023. Over those seven and a half years, 19 minor versions were released. To introduce the compatibility-breaking changes described above, we plan to bump the major version and release Apache Flink 2.0.

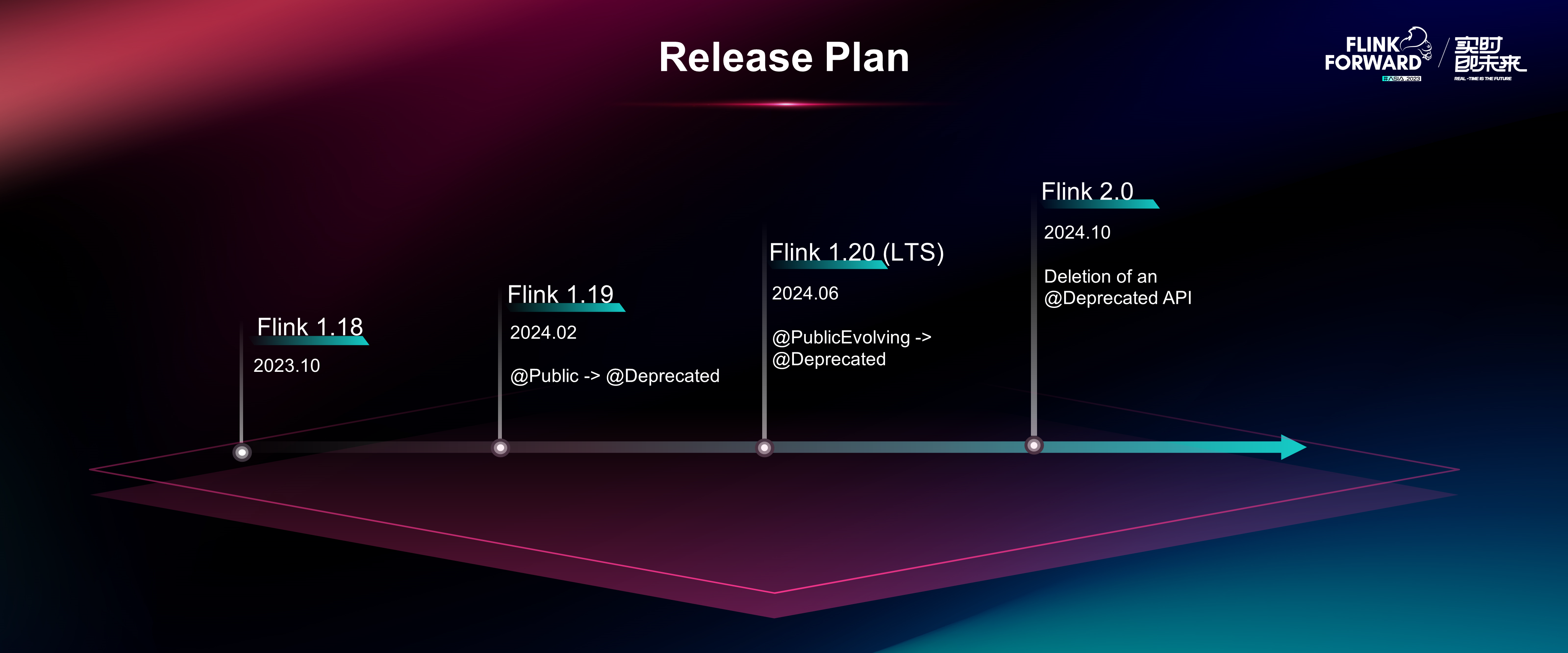

The Apache Flink community has defined API compatibility guarantees based on three API annotations: @Public, @PublicEvolving, and @Experimental.

For @Public APIs, compatibility is guaranteed within the same major version, which is the first number in a version number. For @PublicEvolving APIs, compatibility is guaranteed within the same minor version, which is the second number in a version number. For @Experimental APIs, compatibility is not guaranteed.

In addition to these compatibility guarantees, the Apache Flink community recently proposed a requirement for the API migration period: the minimum time from when an API is marked @Deprecated to when it can be removed. An @Public API must remain deprecated for at least two minor releases before removal, and an @PublicEvolving API for at least one. An API can be changed only when both the compatibility guarantee and the migration period requirement are met. For example, to change an @Public API that exists in Flink 1.18, we need to deprecate it in Flink 1.19, keep it through Flink 1.20, and then remove it in Apache Flink 2.0.
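The compatibility guarantees and migration periods above can be encoded as a small Python check. This is a sketch under stated assumptions: versions are plain `major.minor` strings, the release in which an API is first marked @Deprecated counts toward its migration period, and an API is removed only in a release later than the one that deprecated it. The function names are hypothetical and not part of any Flink tooling.

```python
from typing import List, Optional, Tuple

# Minimum number of releases that must ship an API marked @Deprecated before it
# can be removed (assumption: the release that adds @Deprecated counts).
MIN_DEPRECATED_RELEASES = {"Public": 2, "PublicEvolving": 1, "Experimental": 0}


def parse(version: str) -> Tuple[int, int]:
    """Turn 'major.minor' into a comparable (major, minor) tuple."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def breaking_change_allowed(annotation: str, before: str, after: str) -> bool:
    """Compatibility guarantee between two consecutive releases."""
    old, new = parse(before), parse(after)
    if annotation == "Public":
        return new[0] != old[0]  # stable within a major version
    if annotation == "PublicEvolving":
        return new != old        # stable within a minor version
    return True                  # @Experimental: no guarantee


def earliest_removal(annotation: str, deprecated_in: str, releases: List[str]) -> Optional[str]:
    """Return the first release in `releases` that may drop the deprecated API."""
    start = releases.index(deprecated_in)
    for i in range(start + 1, len(releases)):
        shipped_deprecated = i - start  # releases that carried the @Deprecated API
        if (shipped_deprecated >= MIN_DEPRECATED_RELEASES[annotation]
                and breaking_change_allowed(annotation, releases[i - 1], releases[i])):
            return releases[i]
    return None


releases = ["1.18", "1.19", "1.20", "2.0"]
print(earliest_removal("Public", "1.19", releases))          # 2.0
print(earliest_removal("PublicEvolving", "1.19", releases))  # 1.20
```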

The following figure shows the schedule for releasing Apache Flink 2.0 based on the preceding API compatibility requirements.

The Apache Flink community released Apache Flink 1.18 in October 2023 and planned to release Apache Flink 1.19 and 1.20 in February and June 2024, respectively. Apache Flink 1.20 is expected to be the last release of the Apache Flink 1.x series. Considering the complexity and effort of upgrading to Apache Flink 2.0, Apache Flink 1.20 is planned to be a long-term support (LTS) version that will continue to receive bug fixes. Apache Flink 2.0 is planned for release in October 2024. Among the future work items described above, all API changes that break compatibility must strictly follow this release plan, while improvements that do not involve API changes are not tied to any specific version.
