This article was compiled from the talks given by Lin Dong (Alibaba Senior Technical Expert) and Gao Yun (Yunqian) (Alibaba Technical Expert) during the core technology session at Flink Forward Asia 2021. It covers the new Flink ML API, the unified stream/batch iteration engine, and the Flink ML ecosystem.

The Flink ML API is the interface through which users apply algorithms. Packaging all algorithms behind a unified API gives users a consistent experience, lowers the cost of learning and understanding algorithms, and improves interoperability and compatibility between algorithms.

For example, the Flink ML API provides basic function classes that can combine different operators into higher-level operators, which significantly improves the efficiency of algorithm development. In addition, all data is passed in Table format through the unified Table API, so algorithms developed by different companies are compatible with each other. This spares companies from repeatedly developing the same operators and makes it easier to collaborate on algorithms.

The previous version of the Flink ML API still had many shortcomings.

Given the impact of these problems, we decided to improve the Flink ML API.

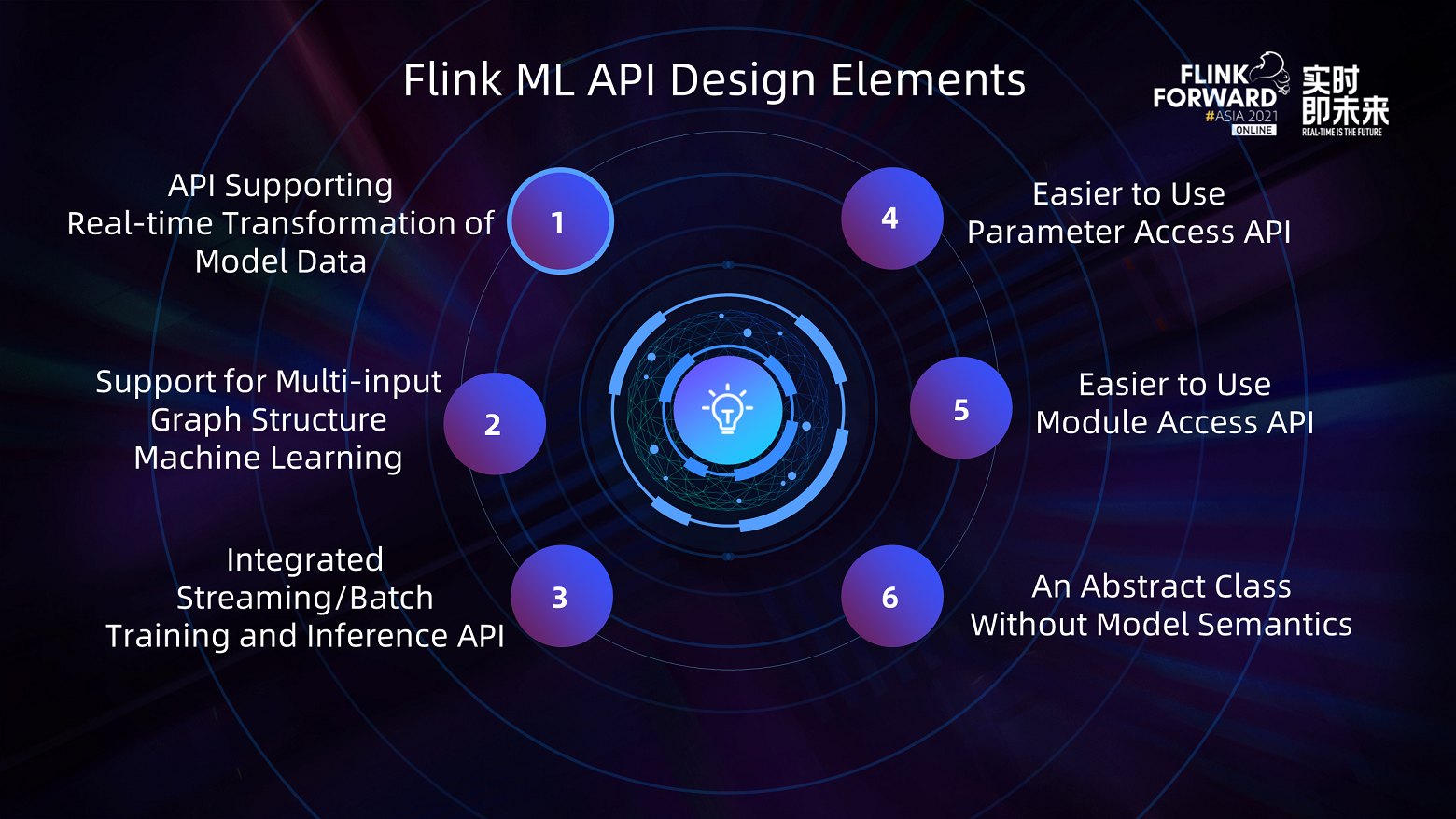

After much discussion and consideration, we designed the new API with the following features to address these problems:

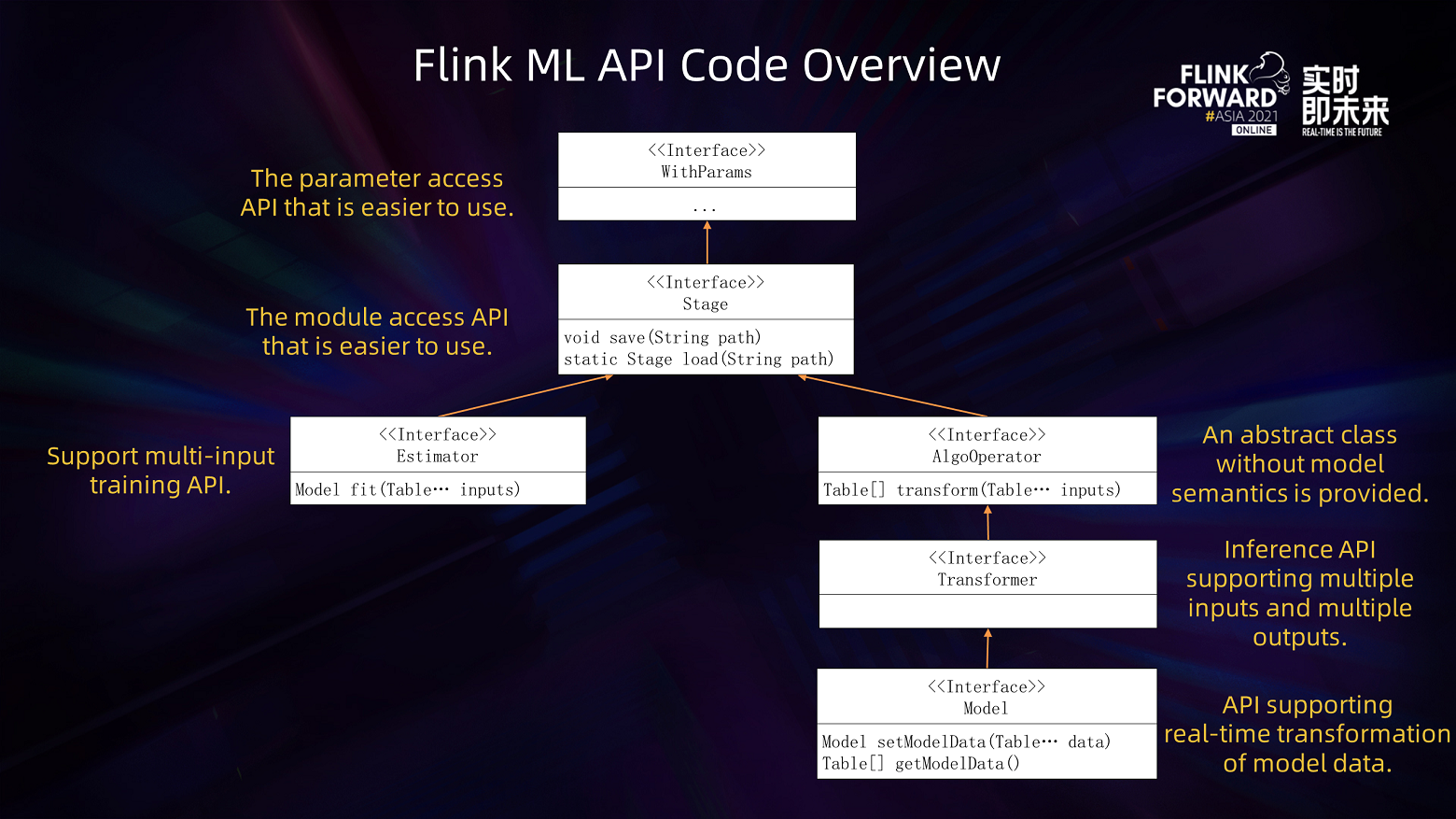

The figure above shows the latest API architecture. At the top is the WithParams interface, which provides the API for accessing parameters. We have improved this interface so that users no longer need to declare attributes such as isOptional. Below it is the Stage interface, which covers all algorithm modules and provides the persistence API, save() and load(). save() stores the model data and parameters, and load() reads them back and restores the original Stage instance. Users therefore do not need to deal with the complexity of parameter access.
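To make the parameter and persistence ideas concrete, here is a minimal plain-Java sketch. It is not the Flink ML API: `Param`, `ParamStage`, `saveParams()`, and `loadParams()` are hypothetical names chosen for illustration, and Java `Properties` text stands in for whatever format the real save() writes. It shows that when each parameter carries its own default value, no separate isOptional flag is needed, and that a save/load round trip restores an equivalent instance.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;
import java.util.Properties;
import java.util.function.Function;

/** Hypothetical typed parameter: it carries its own default, so no isOptional flag is needed. */
final class Param<T> {
    final String name;
    final T defaultValue;
    final Function<String, T> parser;

    Param(String name, T defaultValue, Function<String, T> parser) {
        this.name = name;
        this.defaultValue = defaultValue;
        this.parser = parser;
    }
}

/** Hypothetical stage: typed get/set plus save/load of its parameters. */
class ParamStage {
    static final Param<Integer> MAX_ITER = new Param<>("maxIter", 20, Integer::parseInt);
    static final Param<Double> LEARNING_RATE = new Param<>("learningRate", 0.1, Double::parseDouble);

    private final Map<String, String> values = new HashMap<>();

    <T> ParamStage set(Param<T> param, T value) {
        values.put(param.name, String.valueOf(value));
        return this;
    }

    /** Returns the explicitly set value, or the parameter's default if it was never set. */
    <T> T get(Param<T> param) {
        String raw = values.get(param.name);
        return raw == null ? param.defaultValue : param.parser.apply(raw);
    }

    /** Serializes the explicitly set parameter values as Java properties text. */
    String saveParams() {
        Properties props = new Properties();
        props.putAll(values);
        StringWriter out = new StringWriter();
        try {
            props.store(out, null);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toString();
    }

    /** Restores an equivalent stage from the text produced by saveParams(). */
    static ParamStage loadParams(String saved) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(saved));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        ParamStage stage = new ParamStage();
        for (String key : props.stringPropertyNames()) {
            stage.values.put(key, props.getProperty(key));
        }
        return stage;
    }
}
```

Only explicitly set values are written; anything left unset falls back to its default after loading.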

Stage has two branches: Estimator, which expresses training logic, and AlgoOperator together with the Model class, which express inference logic. The core API of Estimator is fit(). Unlike traditional APIs, fit() accepts multiple tables as input, so it can express logic that consumes several tables, such as feature concatenation. Estimator::fit() outputs a Model, and a Model is a kind of AlgoOperator. AlgoOperator expresses computational logic and supports multiple input and output tables. Each table is a data source, so AlgoOperator can express common computational logic.

Transformer sits below AlgoOperator and expresses data transformation logic. It has the same API signature as AlgoOperator, but the two abstractions differ: a Transformer is a data transformation with model semantics. Data preprocessing often involves more general operations that combine and convert different data, such as filtering, for which Transformer may not be a good fit. We therefore introduced AlgoOperator specifically to make such operations easier to understand and use.
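The hierarchy can be sketched in plain Java as follows. This is a simplified, assumed model rather than the real Flink ML interfaces: `SimpleTable` (a list of `double[]` rows) stands in for a Flink `Table`, and all class names are hypothetical. Feature concatenation is written as a plain AlgoOperator with two input tables, and a mean-centering Estimator produces a Model, which is also an AlgoOperator.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a Flink Table: a list of numeric rows. */
final class SimpleTable {
    final List<double[]> rows;

    SimpleTable(List<double[]> rows) {
        this.rows = rows;
    }
}

/** General computation with any number of input and output tables. */
interface SketchAlgoOperator {
    SimpleTable[] transform(SimpleTable... inputs);
}

/** Same signature as AlgoOperator, but with model-like transformation semantics. */
interface SketchTransformer extends SketchAlgoOperator {}

/** A Transformer whose behavior is determined by learned model data. */
interface SketchModel extends SketchTransformer {}

/** Training logic: consumes one or more tables and produces a Model. */
interface SketchEstimator<M extends SketchModel> {
    M fit(SimpleTable... inputs);
}

/** Preprocessing as a plain AlgoOperator: concatenates the features of two tables row by row. */
final class ConcatFeatures implements SketchAlgoOperator {
    @Override
    public SimpleTable[] transform(SimpleTable... inputs) {
        List<double[]> out = new ArrayList<>();
        for (int i = 0; i < inputs[0].rows.size(); i++) {
            double[] a = inputs[0].rows.get(i);
            double[] b = inputs[1].rows.get(i);
            double[] row = new double[a.length + b.length];
            System.arraycopy(a, 0, row, 0, a.length);
            System.arraycopy(b, 0, row, a.length, b.length);
            out.add(row);
        }
        return new SimpleTable[] {new SimpleTable(out)};
    }
}

/** Model that subtracts learned per-column means. */
final class MeanCenterModel implements SketchModel {
    private final double[] mean;

    MeanCenterModel(double[] mean) {
        this.mean = mean;
    }

    @Override
    public SimpleTable[] transform(SimpleTable... inputs) {
        List<double[]> out = new ArrayList<>();
        for (double[] r : inputs[0].rows) {
            double[] centered = new double[r.length];
            for (int j = 0; j < r.length; j++) {
                centered[j] = r[j] - mean[j];
            }
            out.add(centered);
        }
        return new SimpleTable[] {new SimpleTable(out)};
    }
}

/** Estimator that learns per-column means from its input table. */
final class MeanCenterEstimator implements SketchEstimator<MeanCenterModel> {
    @Override
    public MeanCenterModel fit(SimpleTable... inputs) {
        List<double[]> rows = inputs[0].rows;
        double[] mean = new double[rows.get(0).length];
        for (double[] r : rows) {
            for (int j = 0; j < r.length; j++) {
                mean[j] += r[j] / rows.size();
            }
        }
        return new MeanCenterModel(mean);
    }
}
```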

Below Transformer is the Model class, to which we added the setModelData() and getModelData() APIs. These two APIs are designed for real-time machine learning scenarios: they let users export model data in real time to multiple remote servers for online inference.

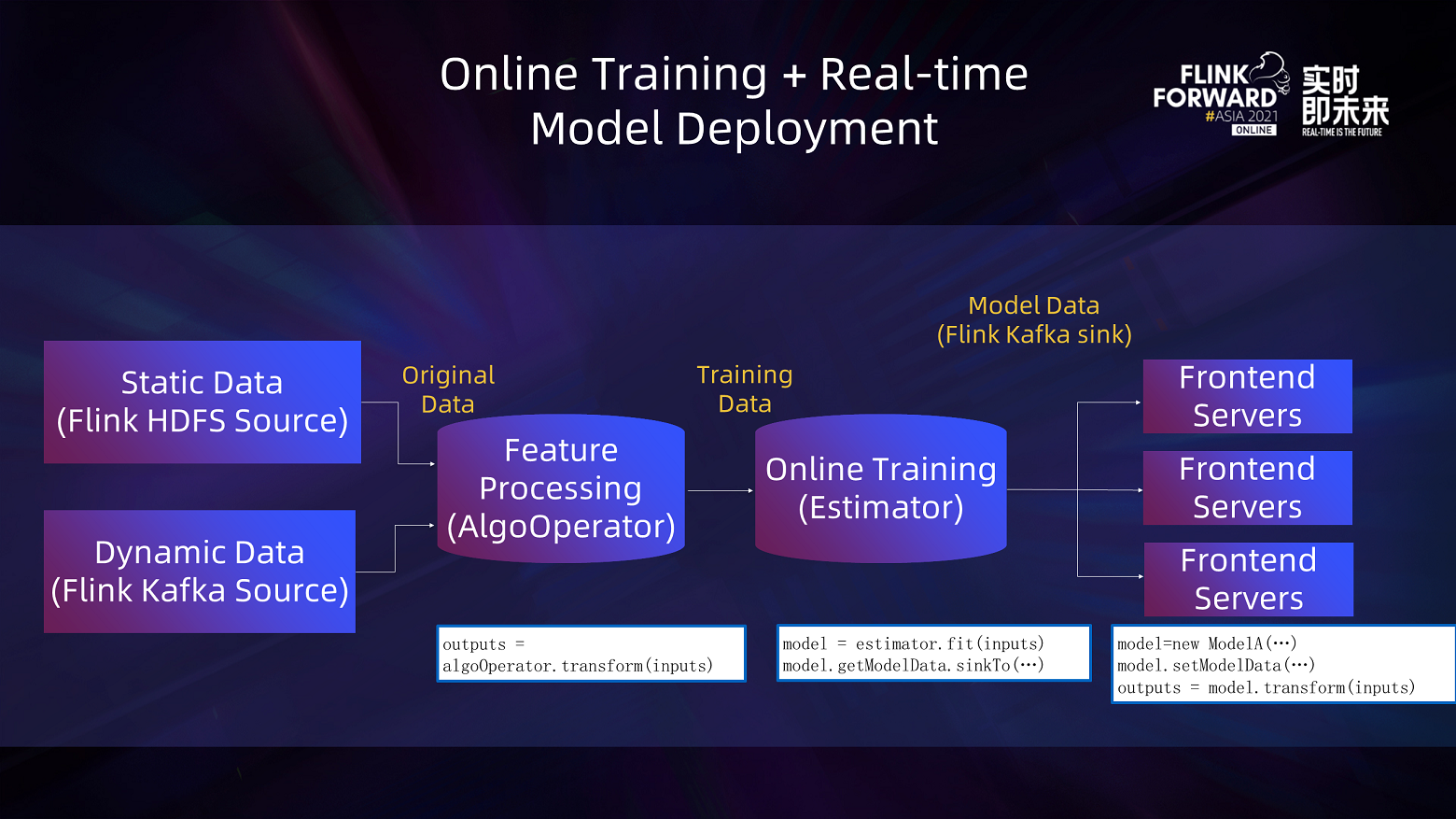

The preceding figure shows a simplified but typical real-time machine learning scenario.

There are two main data sources: static data from HDFS and dynamic data from Kafka. An AlgoOperator reads data from both sources, merges it into a table, and feeds the table to an Estimator. The Estimator reads the table and produces a model. You can then call getModelData() to obtain a table of model data and write it to a Kafka topic through the sink() API. Finally, programs run on multiple frontend servers. Each program creates a model instance directly, reads the model data from Kafka into a table, passes the table to the model through setModelData(), and uses the resulting model for online inference.
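A minimal sketch of this flow, assuming plain Java stand-ins: `ModelDataTopic` (an append-only list) plays the role of the Kafka topic, and `LinearModel` is a hypothetical model whose `getModelData()`/`setModelData()` exchange a `double[]` instead of the tables the real API uses. Training is replaced by hard-coded weights to keep the example short.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

/** Hypothetical model whose behavior is fully determined by its model data (linear weights). */
class LinearModel {
    private double[] weights = new double[0];

    /** Exports a copy of the current model data. */
    double[] getModelData() {
        return weights.clone();
    }

    /** Replaces the model data, e.g. with the newest version read from the topic. */
    LinearModel setModelData(double[] modelData) {
        this.weights = modelData.clone();
        return this;
    }

    double predict(double[] features) {
        double sum = 0.0;
        for (int i = 0; i < weights.length; i++) {
            sum += weights[i] * features[i];
        }
        return sum;
    }
}

/** Stand-in for a Kafka topic: an append-only log that every frontend can read. */
class ModelDataTopic {
    private final List<double[]> log = new CopyOnWriteArrayList<>();

    void send(double[] modelData) {
        log.add(modelData.clone());
    }

    /** Latest published model data; a real consumer would track offsets instead. */
    double[] latest() {
        return log.get(log.size() - 1);
    }
}
```

Because a frontend only needs the model data, switching inference to a newer model amounts to calling setModelData() again with the latest record from the topic.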

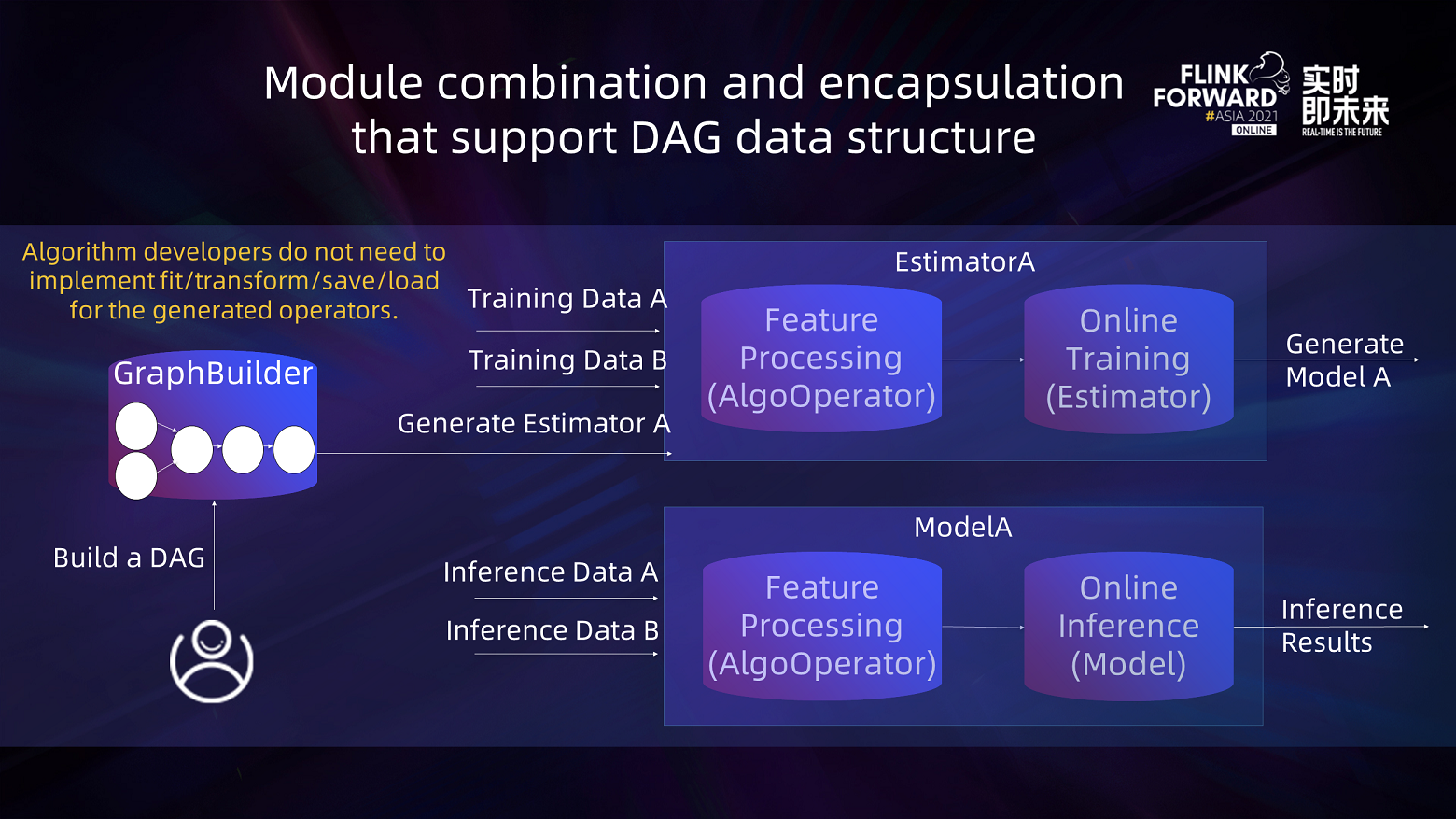

In addition to online training and online inference, we provide basic components that help users compose complex operators from simple ones. One such component is GraphBuilder, proposed in FLIP-175.

Assume the input comes from the same two data sources as above and the job produces a single output. The core computation can be divided into two steps. The first step is data preprocessing, such as merging features: data from the two sources is read, merged, and fed to the Estimator as a table. The Estimator then performs the second step, training. We want to run the training operators first to obtain a model, and then connect the preprocessing operator and the model to express the online inference logic.

You only need to connect these steps through the GraphBuilder API; there is no need to write separate connection logic for online inference. GraphBuilder automatically generates the inference graph from the training DAG, with a one-to-one correspondence between the operators in the two graphs. An AlgoOperator in the training graph is carried over directly as an operator in the inference graph, and the Model produced by the Estimator in the training graph becomes the corresponding node in the inference graph. Connecting these nodes yields the final ModelA, which is used for online inference.
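The idea of deriving the inference graph from the training graph can be sketched as follows. This is a hypothetical simplification, not the FLIP-175 GraphBuilder API: the graph is a linear chain, data is a `List<Double>`, and `OperatorNode`, `EstimatorNode`, and `ChainBuilder` are illustrative names. Operator nodes are reused as-is, while each estimator node is replaced by the model it fits on the output of the preceding nodes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.DoubleUnaryOperator;
import java.util.function.Function;
import java.util.function.UnaryOperator;

/** Hypothetical node of a training chain. */
abstract class StageNode {}

/** Operator node: reused unchanged in the inference chain. */
final class OperatorNode extends StageNode {
    final UnaryOperator<List<Double>> op;

    OperatorNode(UnaryOperator<List<Double>> op) {
        this.op = op;
    }
}

/** Estimator node: fit turns training data into a model, which replaces it for inference. */
final class EstimatorNode extends StageNode {
    final Function<List<Double>, UnaryOperator<List<Double>>> fit;

    EstimatorNode(Function<List<Double>, UnaryOperator<List<Double>>> fit) {
        this.fit = fit;
    }
}

final class ChainBuilder {
    /** Runs the training chain once and returns the inference chain, node by node. */
    static List<UnaryOperator<List<Double>>> buildInferenceChain(
            List<StageNode> training, List<Double> trainingData) {
        List<UnaryOperator<List<Double>>> inference = new ArrayList<>();
        List<Double> current = trainingData;
        for (StageNode node : training) {
            UnaryOperator<List<Double>> step = node instanceof OperatorNode
                    ? ((OperatorNode) node).op
                    : ((EstimatorNode) node).fit.apply(current);
            inference.add(step);           // one inference node per training node
            current = step.apply(current); // downstream estimators train on transformed data
        }
        return inference;
    }

    static List<Double> run(List<UnaryOperator<List<Double>>> chain, List<Double> input) {
        List<Double> current = input;
        for (UnaryOperator<List<Double>> step : chain) {
            current = step.apply(current);
        }
        return current;
    }

    /** Helper: an element-wise operator. */
    static UnaryOperator<List<Double>> mapEach(DoubleUnaryOperator f) {
        return xs -> {
            List<Double> out = new ArrayList<>();
            for (double x : xs) {
                out.add(f.applyAsDouble(x));
            }
            return out;
        };
    }

    /** Helper: an estimator that learns the mean and yields a mean-centering model. */
    static Function<List<Double>, UnaryOperator<List<Double>>> meanCenterFit() {
        return xs -> {
            double mean = xs.stream().mapToDouble(Double::doubleValue).average().orElse(0.0);
            return mapEach(x -> x - mean);
        };
    }
}
```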

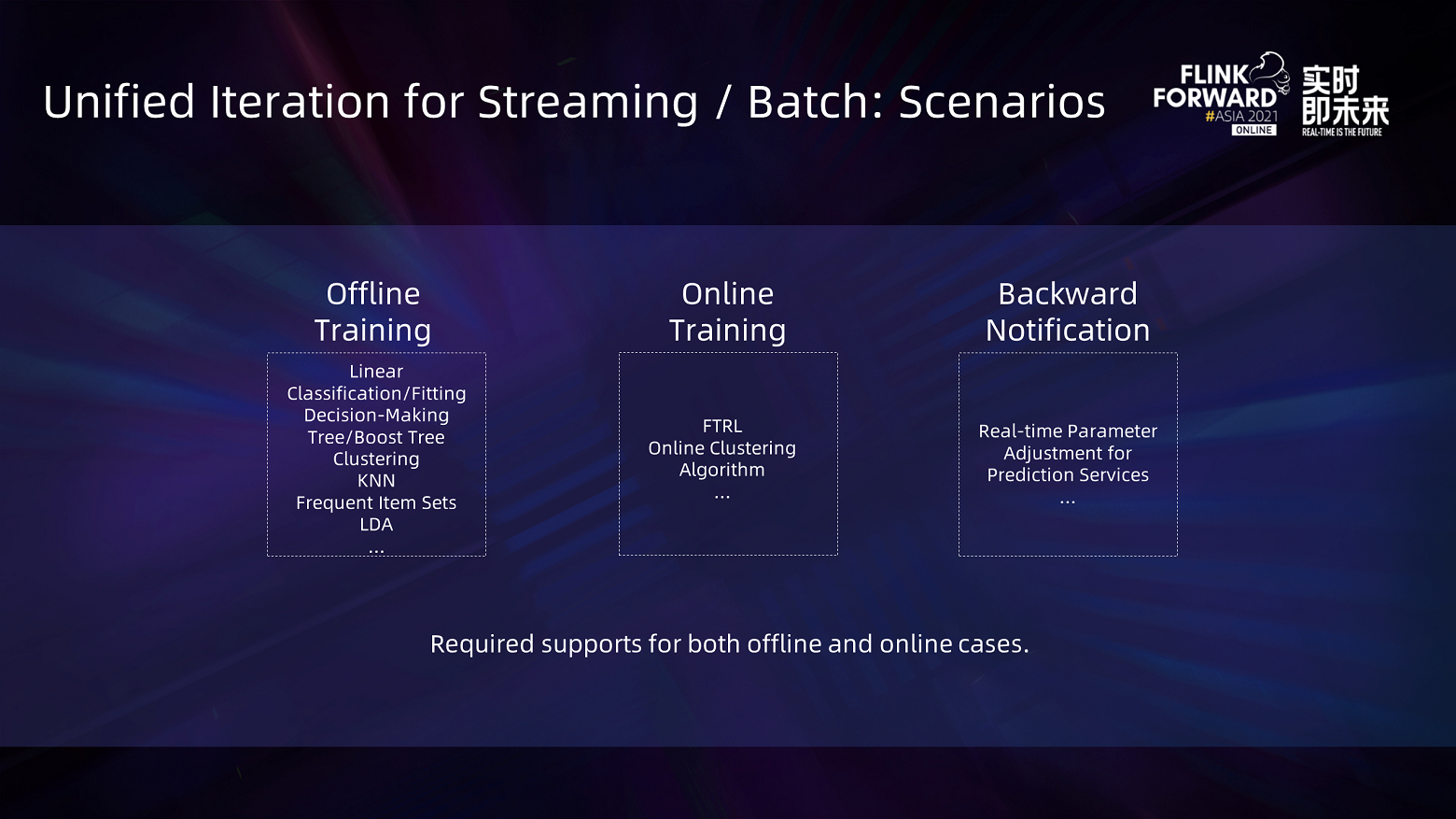

Flink is a unified stream/batch processing engine that describes execution logic as a DAG. However, many scenarios, especially in machine learning and graph processing, also require iterative data processing. For example, offline and online training of algorithms, as well as dynamic adjustment of model parameters based on results after deployment, all require iteration.

Real-world applications involve both offline and online processing, so iterative processing must be supported in both cases.

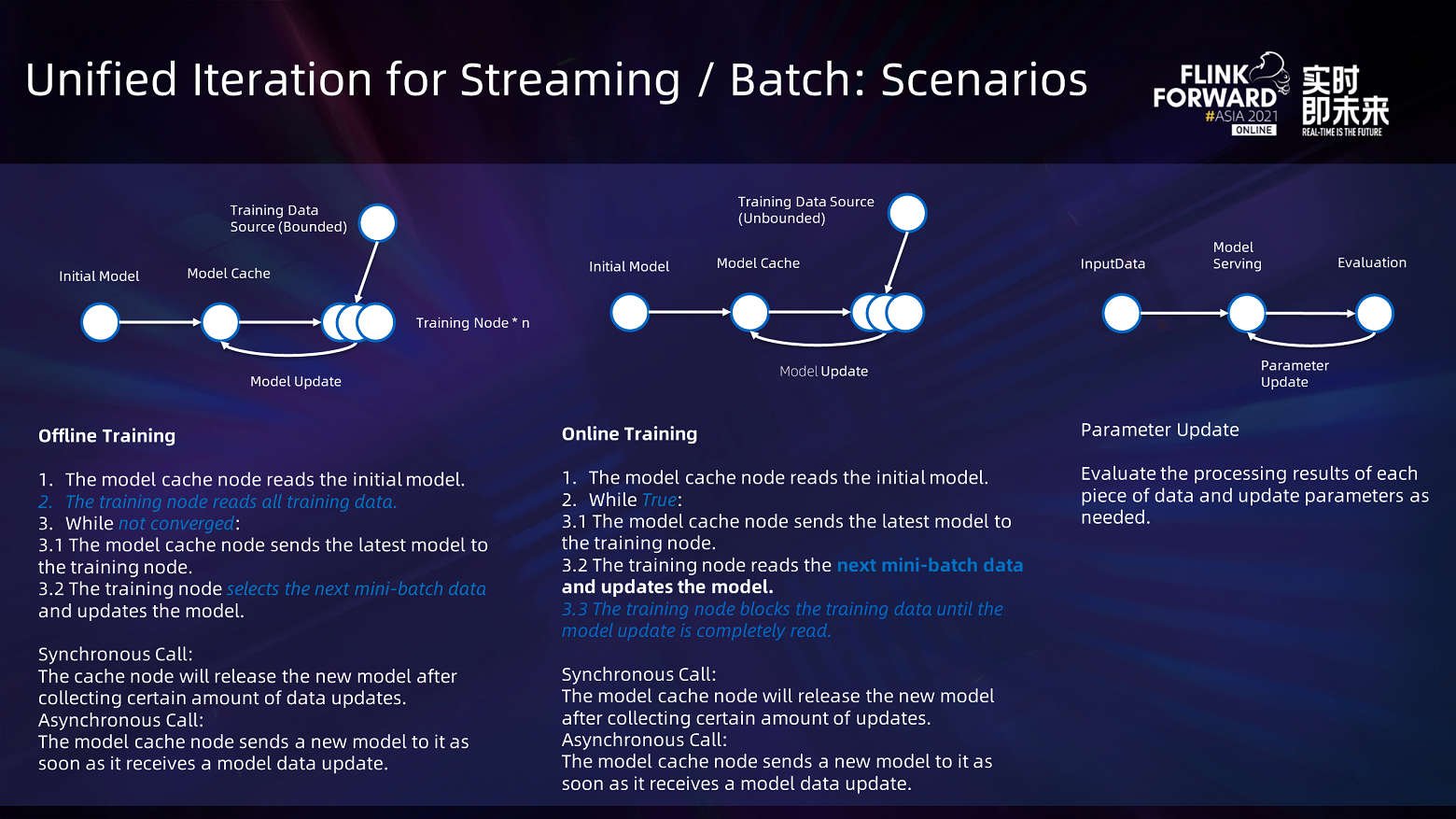

There are differences and commonalities in the processing logic of the three scenarios mentioned above.

Take offline training of logistic regression as an example. A model cache node caches the entire model and sends the latest model to the training node, which reads the entire dataset before the iteration starts. Each time the training node receives the latest model, it selects a mini-batch from the dataset, updates the model, and sends the result back to the model cache node.
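The offline loop can be simulated in a single process, as a sketch. `TrainingNode`, `ModelCacheNode`, and `OfflineTraining` are hypothetical names; in Flink, the two nodes would be separate operators connected by a back edge. Mini-batches are taken round-robin so that the result is deterministic, and the model is plain logistic regression trained with mini-batch gradient descent.

```java
import java.util.List;

/** Hypothetical training node: caches the dataset up front, then trains one mini-batch per model received. */
class TrainingNode {
    private final List<double[]> features;
    private final List<Double> labels; // 0.0 or 1.0
    private final int batchSize;
    private final double learningRate;
    private int cursor = 0;

    TrainingNode(List<double[]> features, List<Double> labels, int batchSize, double learningRate) {
        this.features = features;
        this.labels = labels;
        this.batchSize = batchSize;
        this.learningRate = learningRate;
    }

    /** Receives the latest model, selects the next mini-batch, and returns a model update (delta). */
    double[] onModel(double[] weights) {
        double[] gradient = new double[weights.length];
        for (int k = 0; k < batchSize; k++) {
            int i = (cursor + k) % features.size();
            double[] x = features.get(i);
            double error = sigmoid(dot(weights, x)) - labels.get(i);
            for (int j = 0; j < x.length; j++) {
                gradient[j] += error * x[j] / batchSize;
            }
        }
        cursor = (cursor + batchSize) % features.size(); // deterministic round-robin mini-batches
        double[] delta = new double[weights.length];
        for (int j = 0; j < delta.length; j++) {
            delta[j] = -learningRate * gradient[j];
        }
        return delta;
    }

    private static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    private static double dot(double[] a, double[] b) {
        double s = 0.0;
        for (int j = 0; j < a.length; j++) {
            s += a[j] * b[j];
        }
        return s;
    }
}

/** Hypothetical model cache node: holds the whole model and applies the updates sent back to it. */
class ModelCacheNode {
    final double[] weights;

    ModelCacheNode(int dim) {
        weights = new double[dim];
    }

    void applyUpdate(double[] delta) {
        for (int j = 0; j < weights.length; j++) {
            weights[j] += delta[j];
        }
    }
}

class OfflineTraining {
    /** Each round: the cache sends the model, and the trainer replies with an update over the back edge. */
    static double[] train(TrainingNode trainer, int dim, int rounds) {
        ModelCacheNode cache = new ModelCacheNode(dim);
        for (int round = 0; round < rounds; round++) {
            double[] update = trainer.onModel(cache.weights.clone());
            cache.applyUpdate(update);
        }
        return cache.weights.clone();
    }
}
```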

For online training, the training data is unbounded, so it cannot all be read in advance. The usual approach is to read one mini-batch at a time: the training node updates the model with it, sends the result to the model cache node, and reads the next mini-batch only after the model cache node has sent back the updated model. This requires the training node to control the order in which it reads its inputs, so that mini-batches are processed strictly one by one.

Both offline and online training can run in synchronous or asynchronous mode, depending on whether the model cache node must collect all updates before the next round of training starts. In addition, some models update their parameters dynamically during prediction: after each record is processed, the model evaluates whether its parameters need to be updated and, if so, initiates the update immediately.
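The difference between the two modes lies in the model cache node, which can be sketched as follows. `ModeAwareModelCache` is hypothetical and assumes a fixed number of parallel trainers that each send one additive update per round: in synchronous mode it buffers updates until every trainer has reported, and in asynchronous mode it applies each update and broadcasts a new model immediately.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model cache supporting synchronous and asynchronous updates from parallel trainers. */
class ModeAwareModelCache {
    private final double[] weights;
    private final int numTrainers;
    private final boolean synchronous;
    private final List<double[]> pending = new ArrayList<>();
    private int version = 0; // number of model versions broadcast to the trainers

    ModeAwareModelCache(int dim, int numTrainers, boolean synchronous) {
        this.weights = new double[dim];
        this.numTrainers = numTrainers;
        this.synchronous = synchronous;
    }

    /** Handles one trainer's update; returns true if a new model version is broadcast. */
    boolean onUpdate(double[] delta) {
        if (!synchronous) {
            apply(delta); // async: each update immediately produces a new model
            version++;
            return true;
        }
        pending.add(delta);
        if (pending.size() < numTrainers) {
            return false; // sync: wait until every trainer has reported for this round
        }
        for (double[] d : pending) {
            apply(d);
        }
        pending.clear();
        version++;
        return true;
    }

    private void apply(double[] delta) {
        for (int j = 0; j < weights.length; j++) {
            weights[j] += delta[j];
        }
    }

    int version() {
        return version;
    }

    double[] weights() {
        return weights.clone();
    }
}
```

Both modes end up with the same sum here; they differ in how many intermediate model versions trainers see, which is the trade-off between consistency and waiting time.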

The computation in these scenarios has some things in common. First, an iterative structure is needed to process data in a loop. Second, after each pass, the system must decide whether to terminate the iteration. Third, operators need to be notified when a round of data has been fully received, so that this notification can trigger specific computation. In offline training, for example, the system starts the next round of computation after it has received the data of the complete model.

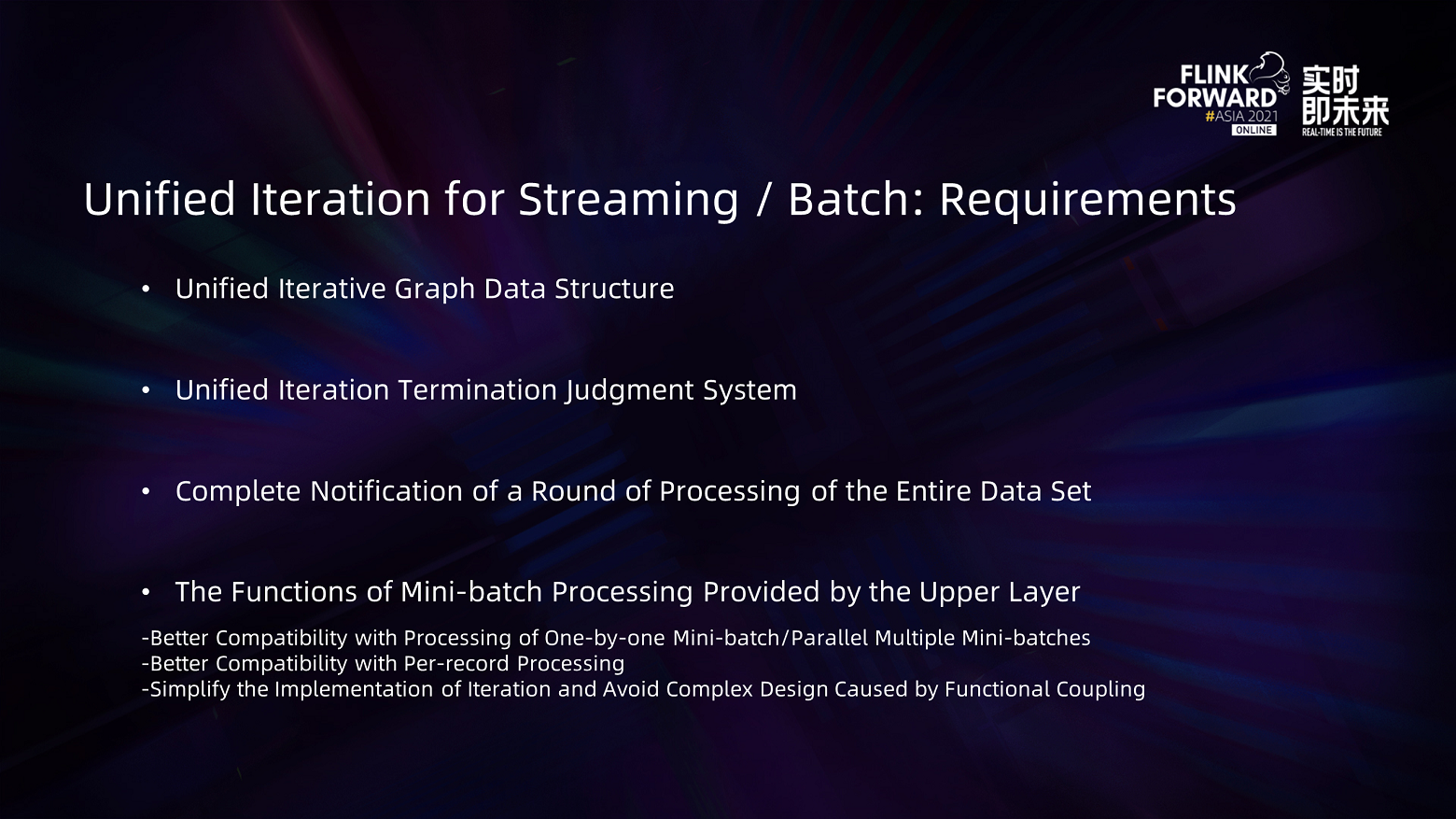

There are two options here. The first is to divide the dataset directly into mini-batches and iterate over them, processing one mini-batch at a time. Logically, mini-batches apply to all types of iteration. However, to support both processing mini-batches one by one and processing several mini-batches in parallel, a new set of interfaces for streaming mini-batches would have to be introduced, and both processing modes would have to be implemented.

In addition, online and offline training generate mini-batches in different ways. In offline training, all data is first read and cached locally, and a mini-batch is formed in each round of processing. In online training, the training system reads a certain amount of data from an external source, and the mini-batch is formed as the data is read. Supporting both kinds of mini-batches through one unified interface would therefore further increase interface complexity and implementation difficulty.

Finally, to also support per-record processing, the system would need either an unbounded mini-batch or to treat each record as a separate mini-batch. The former would make the interfaces more complex, while the latter would require notifying operators after every record, which increases runtime overhead.

Considering the above, the iteration only provides notifications at the level of the entire dataset (once per round) and leaves mini-batch selection to the user logic built on top of the iteration. In offline training, users can efficiently select a mini-batch by taking a portion of the cached dataset in each round. In online training, users can rely on Flink's existing operators to feed mini-batches into the training operator one by one.

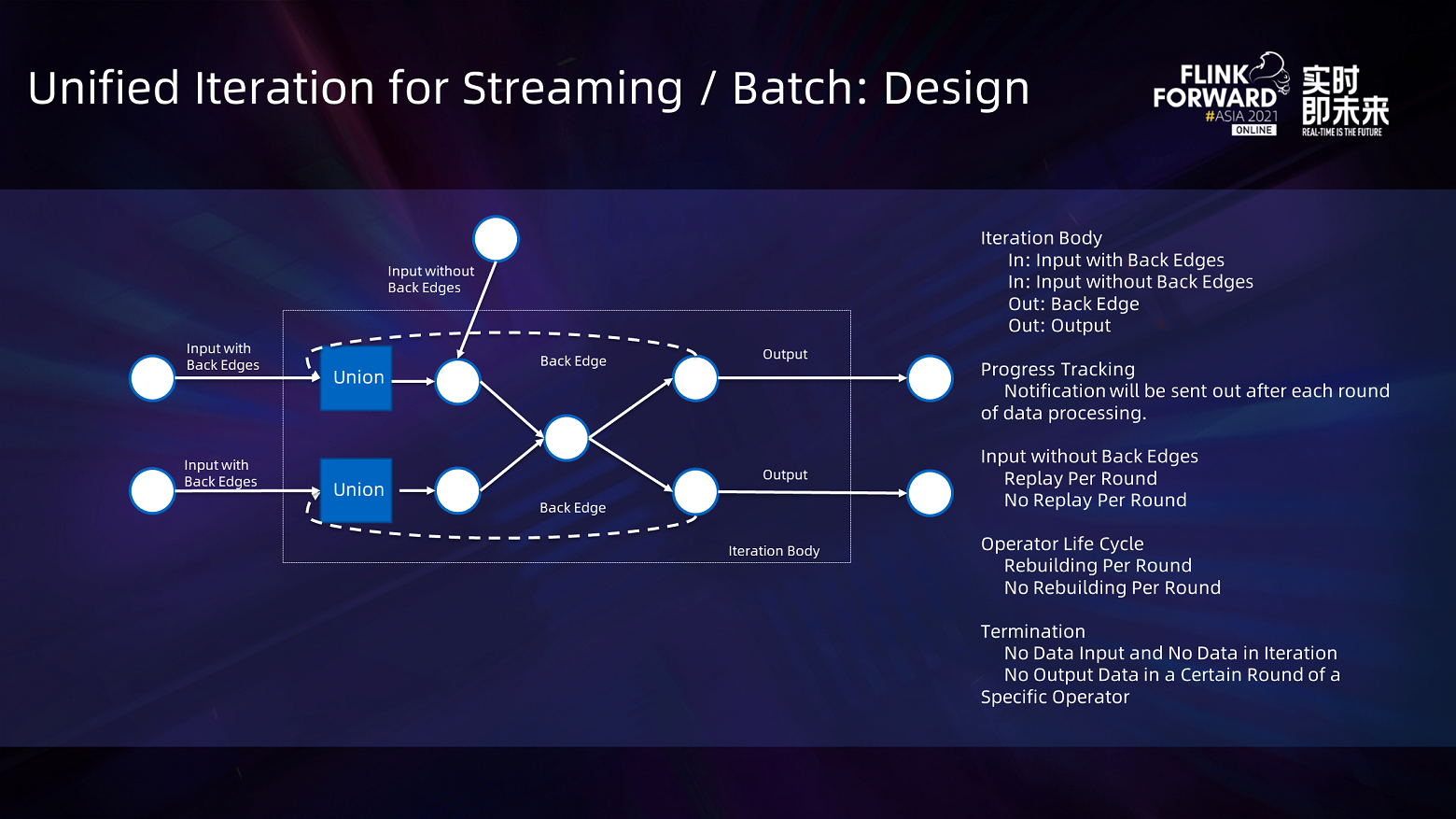

Based on these ideas, the overall iteration design is shown in the preceding figure and consists of four parts. The first is the inputs with back edges: data returned through the back edges is unioned with the initial data of these inputs, which realizes iterative processing.

The second part is progress tracking: the iteration system notifies users when a round of the dataset has been completely processed. Users can rely on this to perform specific operations after a round finishes. For example, in offline training, an operator can compute the next round of model updates once it has received all updates to the model.

The third part concerns inputs without back edges and operator lifecycles. For inputs without back edges, users can decide whether the data is replayed in every round. For operators, users can choose between processing the data of all rounds with a single operator instance and creating a new operator instance in every round. With a single instance, users can cache data across rounds without rebuilding operators. Alternatively, if the input is replayed and the operators are rebuilt in every round, common operators used outside iteration, such as Reduce and Join, can be reused directly inside the iteration.

Finally, there are two ways to terminate the iteration. In the first, the iteration terminates when no data remains to be processed anywhere in the iteration. In the second, which applies when the data streams are bounded, users can designate a specific data stream; if that stream produces no new data in a round, the entire iteration terminates early.
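These semantics can be sketched as a single-threaded driver. This is an illustrative model, not the Flink ML `Iterations` API: `IterationSketch`, `RoundBody`, and `RoundResult` are hypothetical, each round is processed as a whole list, and the initial variables stand for round 0 while fed-back records form later rounds. It shows replay of inputs without back edges and both termination conditions.

```java
import java.util.ArrayList;
import java.util.List;

/** Output of one round: records for the back edge and (optionally) termination-criteria records. */
final class RoundResult<V> {
    final List<V> feedback;
    final List<?> terminationCriteria; // null means "no termination-criteria stream declared"

    RoundResult(List<V> feedback, List<?> terminationCriteria) {
        this.feedback = feedback;
        this.terminationCriteria = terminationCriteria;
    }
}

/** Hypothetical iteration body; {@code data} is null in rounds where the input is not replayed. */
interface RoundBody<V, D> {
    RoundResult<V> process(int round, List<V> variables, List<D> data);
}

final class IterationSketch {
    /**
     * Runs rounds until (1) no record is fed back, or (2) a termination-criteria stream is
     * declared and stays empty in a round. Returns the number of completed rounds.
     */
    static <V, D> int iterate(List<V> initVariables, List<D> data, boolean replay, RoundBody<V, D> body) {
        List<V> variables = new ArrayList<>(initVariables);
        int round = 0;
        while (true) {
            List<D> roundData = (replay || round == 0) ? data : null;
            RoundResult<V> result = body.process(round, variables, roundData);
            round++; // progress tracking: this round is now fully processed
            if (result.feedback.isEmpty()) {
                return round; // condition 1: nothing left to process
            }
            if (result.terminationCriteria != null && result.terminationCriteria.isEmpty()) {
                return round; // condition 2: the designated stream produced no data
            }
            variables = new ArrayList<>(result.feedback); // back edge into the next round
        }
    }
}
```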

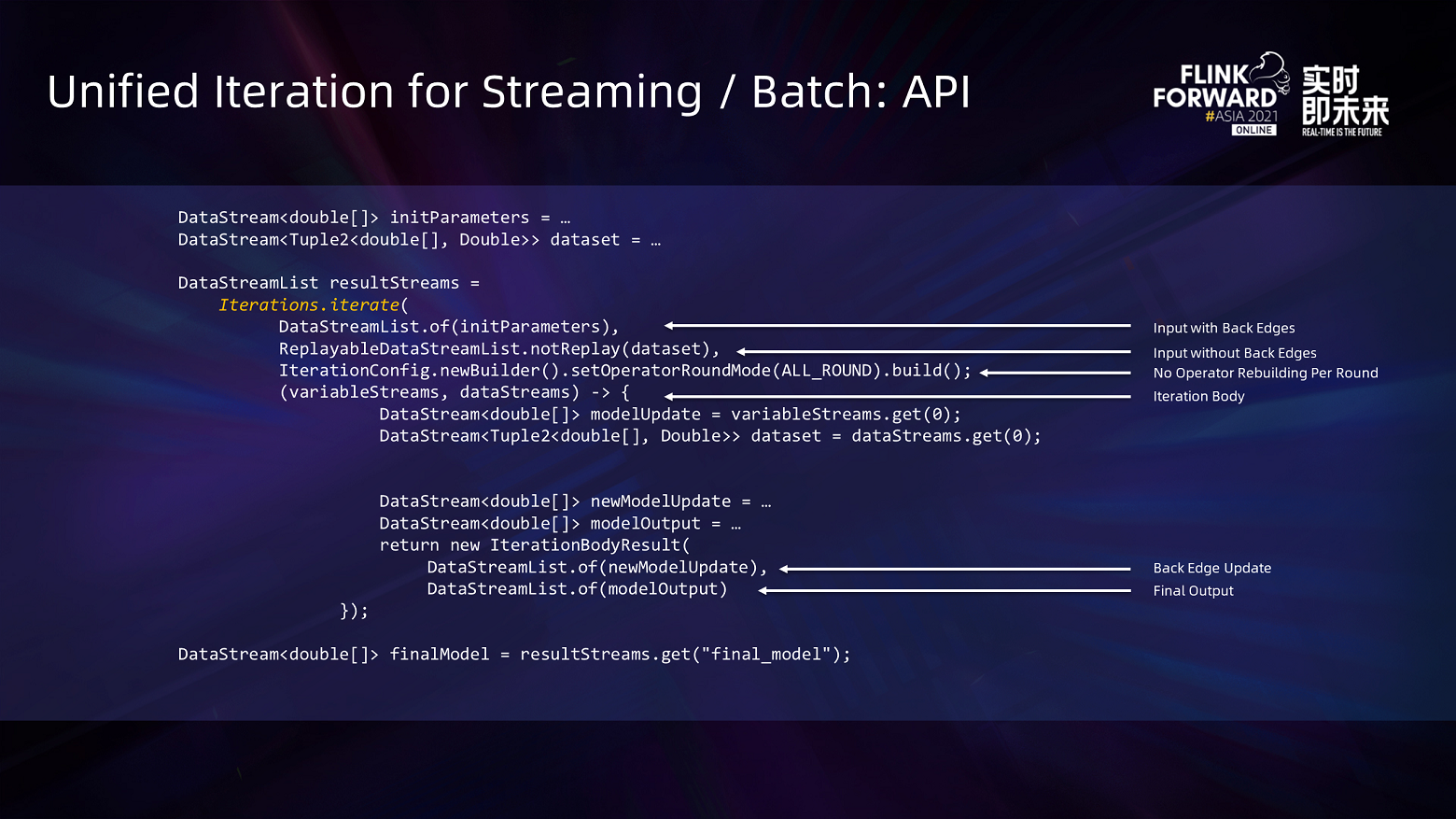

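```java
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
// DataStreamList, ReplayableDataStreamList, Iterations, IterationConfig, and
// IterationBodyResult come from the Flink ML iteration module.

// Input with a back edge: the initial model parameters.
DataStream<double[]> initParameters = …

// Input without a back edge: the training data, not replayed in each round.
DataStream<Tuple2<double[], Double>> dataset = …

DataStreamList resultStreams =
        Iterations.iterate(
                DataStreamList.of(initParameters),
                ReplayableDataStreamList.notReplay(dataset),
                // ALL_ROUND: each operator is created once and processes the data of all rounds.
                IterationConfig.newBuilder().setOperatorRoundMode(ALL_ROUND).build(),
                (variableStreams, dataStreams) -> {
                    DataStream<double[]> modelUpdate = variableStreams.get(0);
                    // The training data as seen inside the iteration body.
                    DataStream<Tuple2<double[], Double>> trainingData = dataStreams.get(0);

                    DataStream<double[]> newModelUpdate = …
                    DataStream<double[]> modelOutput = …

                    // Streams fed back over the back edges, and the final outputs of the iteration.
                    return new IterationBodyResult(
                            DataStreamList.of(newModelUpdate),
                            DataStreamList.of(modelOutput));
                });

// The first output stream of the iteration body holds the final model.
DataStream<double[]> finalModel = resultStreams.get(0);
```

The preceding figure and code show an example of building an iteration with the iteration API. The user specifies the inputs with and without back edges, whether operators need to be rebuilt in each round, and the computational logic of the iteration body. The iteration body returns the data sets for the back edges and the final outputs of the iteration.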

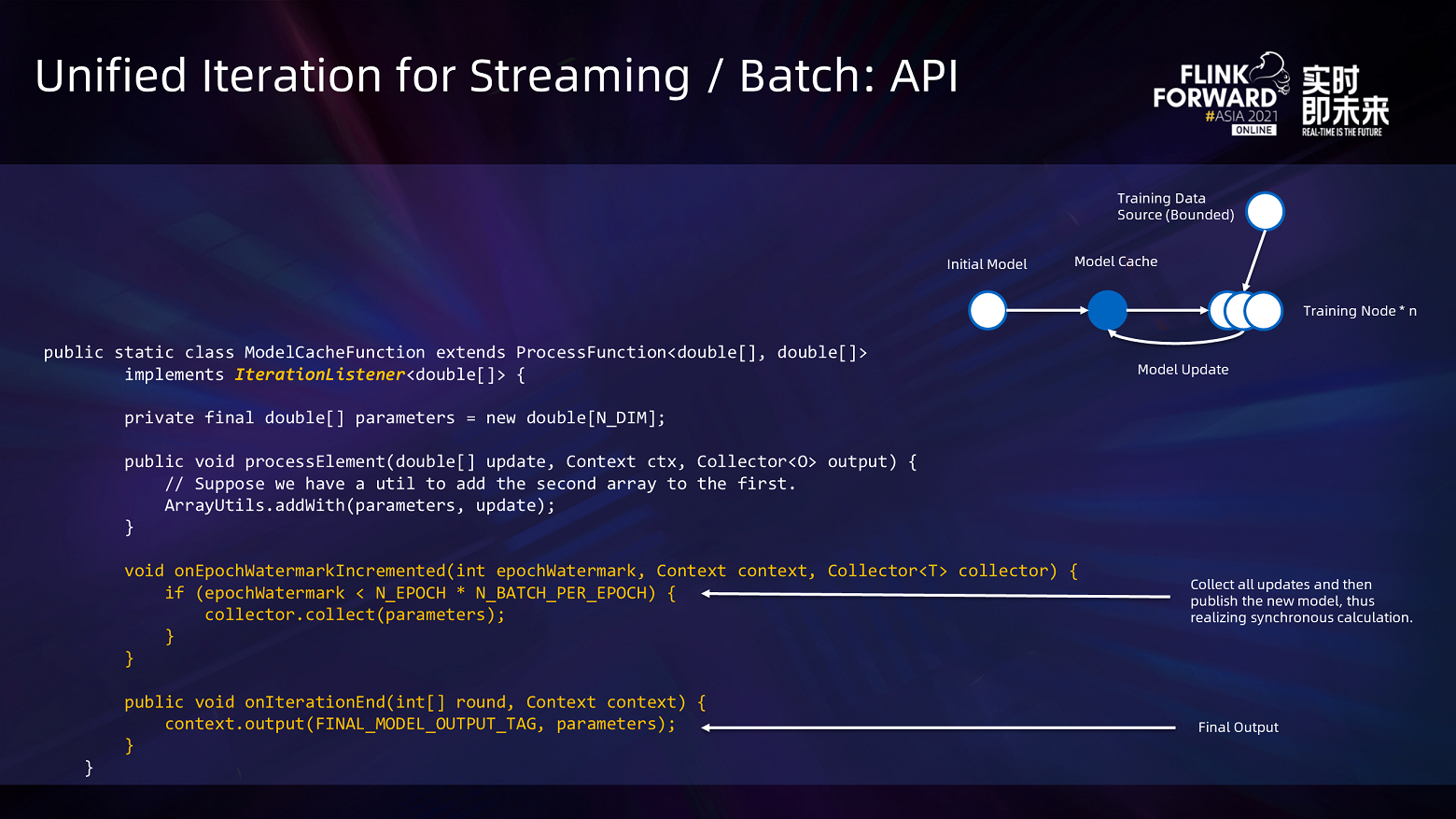

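```java
import org.apache.flink.iteration.IterationListener;
import org.apache.flink.streaming.api.functions.ProcessFunction;
import org.apache.flink.util.Collector;

// Model cache operator inside the iteration: accumulates updates and emits the model once per round.
public static class ModelCacheFunction extends ProcessFunction<double[], double[]>
        implements IterationListener<double[]> {

    private final double[] parameters = new double[N_DIM];

    @Override
    public void processElement(
            double[] update, ProcessFunction<double[], double[]>.Context ctx, Collector<double[]> out) {
        // Suppose we have a util to add the second array to the first.
        ArrayUtils.addWith(parameters, update);
    }

    // Called after all data of a round has been processed (the epoch watermark advanced).
    @Override
    public void onEpochWatermarkIncremented(
            int epochWatermark, IterationListener.Context context, Collector<double[]> collector) {
        if (epochWatermark < N_EPOCH * N_BATCH_PER_EPOCH) {
            // Feed a copy of the model back for the next round; parameters keeps changing.
            collector.collect(parameters.clone());
        }
    }

    // Called once when the entire iteration terminates.
    @Override
    public void onIterationTerminated(IterationListener.Context context, Collector<double[]> collector) {
        context.output(FINAL_MODEL_OUTPUT_TAG, parameters);
    }
}
```

If an operator inside the iteration implements the IterationListener interface, the iteration system notifies it through onEpochWatermarkIncremented after all data of a round has been processed, and through onIterationTerminated when the entire iteration terminates. Users can implement the required computational logic in these two callbacks.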

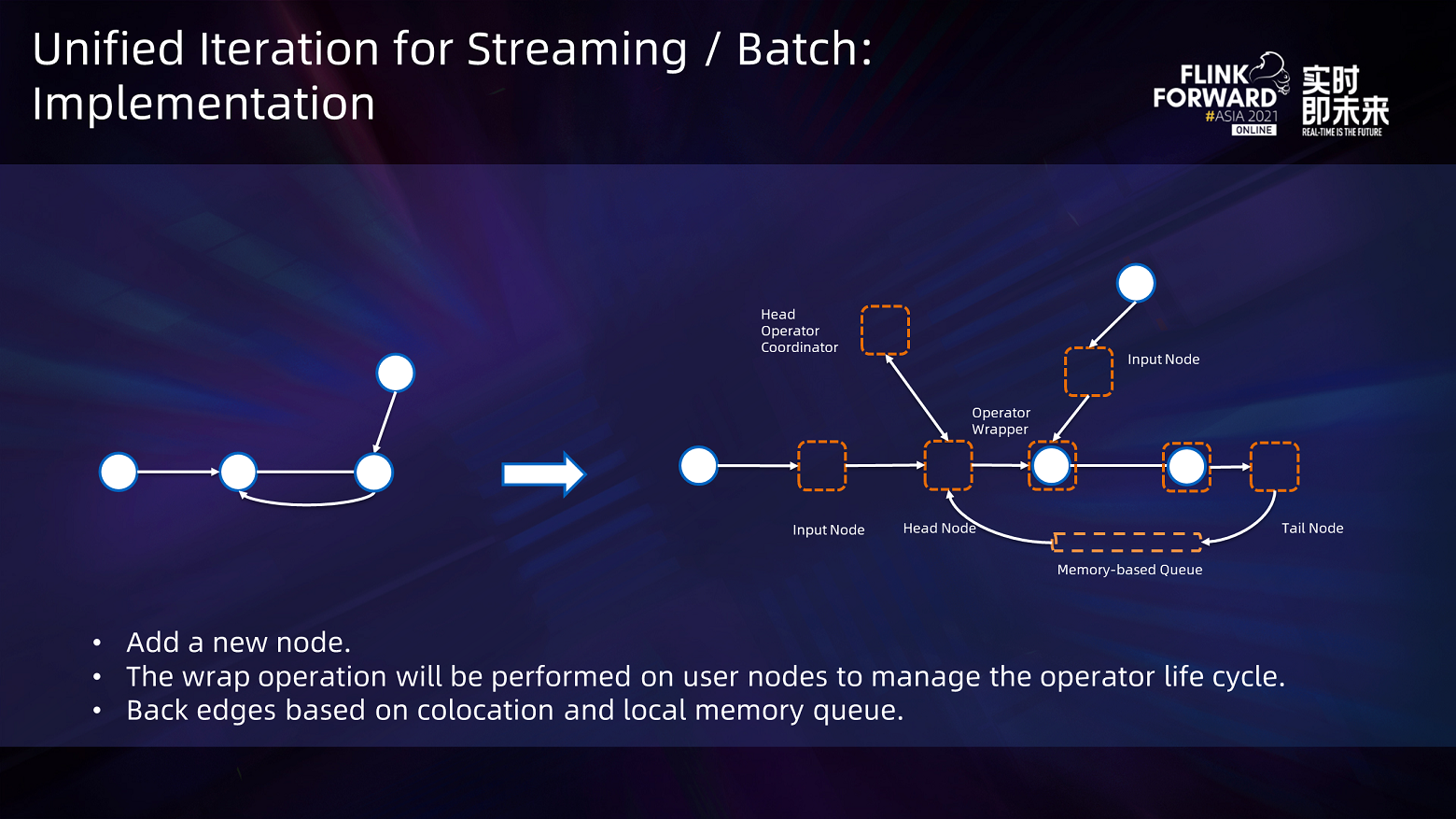

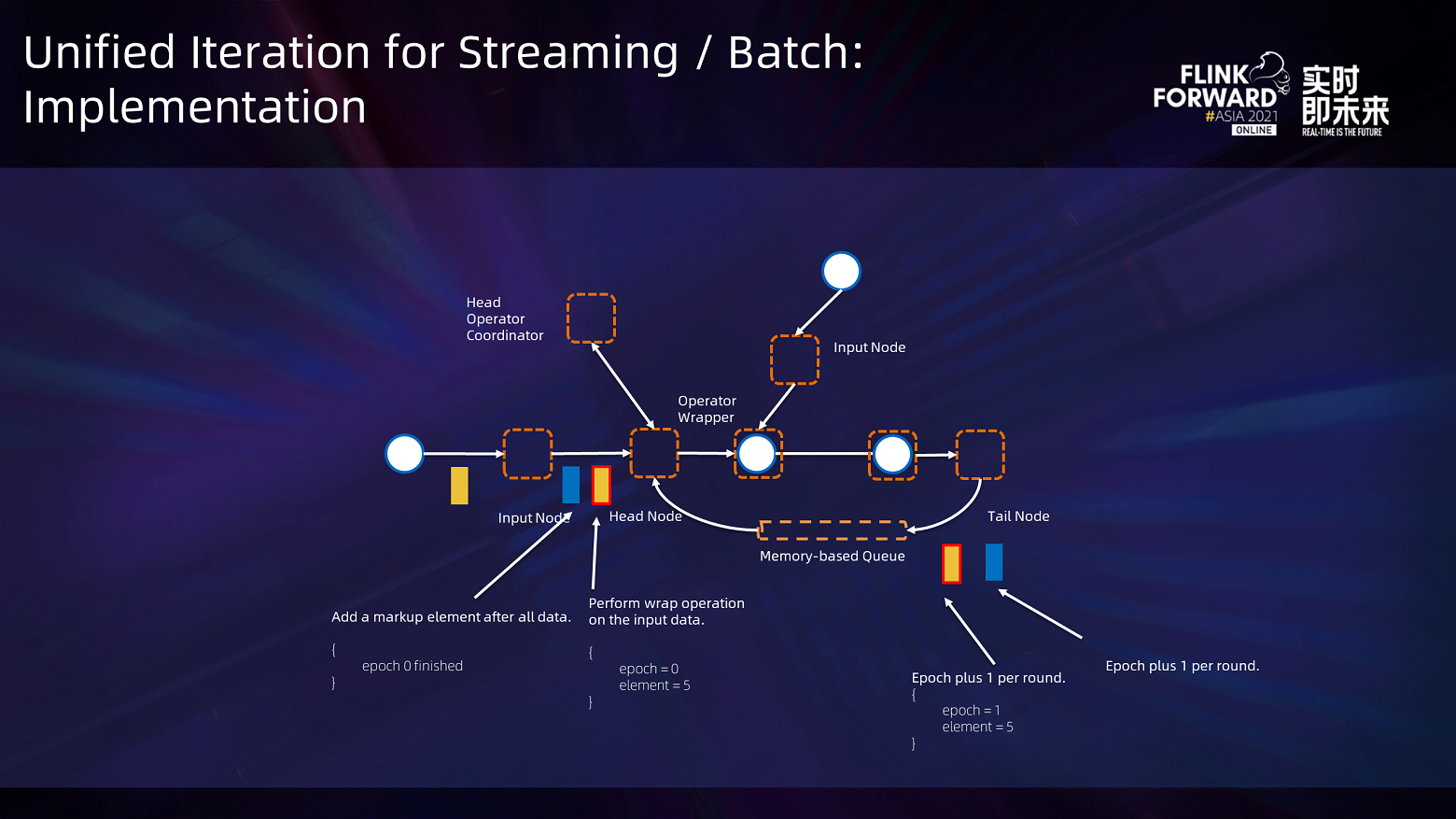

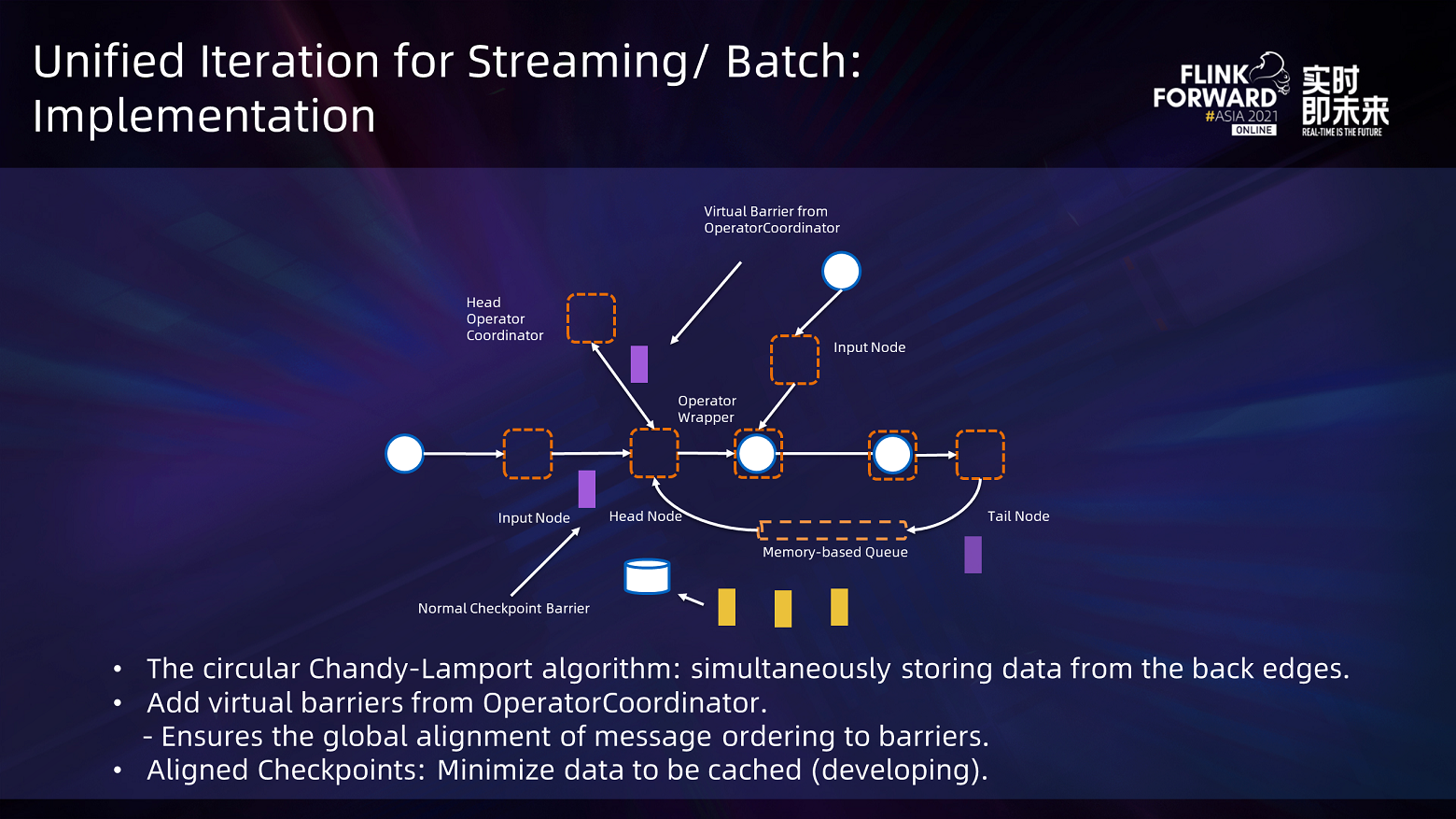

When users build an iteration in code, the framework inserts dedicated nodes into the iteration and wraps every processing node, both to manage operator lifecycles and to convert data types. The back edges are implemented with colocation and local memory, so the scheduler still sees the overall job as a DAG, and the existing scheduling logic can be reused directly.

The dedicated operators inserted into the iteration are mainly the input, output, head, and tail operators. The input and output operators convert data types: when external data enters the iteration, each record is given a header that records the round in which it is processed. The wrapper of each operator strips this header and hands the data to the original operator for processing.
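The record wrapping can be sketched with a small envelope type. `RoundTagged` and `RecordWrapping` are hypothetical names, and a real implementation may carry additional metadata. The input operator tags records with round 0, each wrapper strips the tag before calling the user function and re-attaches it afterwards, and the tail increments the round before the record goes back over the back edge.

```java
import java.util.function.Function;

/** Hypothetical envelope: the user's value plus the round (epoch) it belongs to. */
final class RoundTagged<T> {
    final int epoch;
    final T value;

    RoundTagged(int epoch, T value) {
        this.epoch = epoch;
        this.value = value;
    }
}

final class RecordWrapping {
    /** Input operator: records entering the iteration belong to round 0. */
    static <T> RoundTagged<T> input(T value) {
        return new RoundTagged<>(0, value);
    }

    /** Operator wrapper: strips the header, calls the original operator, re-attaches the epoch. */
    static <I, O> Function<RoundTagged<I>, RoundTagged<O>> wrap(Function<I, O> userFunction) {
        return rec -> new RoundTagged<>(rec.epoch, userFunction.apply(rec.value));
    }

    /** Tail operator: increments the round before the record is sent back to the head. */
    static <T> RoundTagged<T> tail(RoundTagged<T> rec) {
        return new RoundTagged<>(rec.epoch + 1, rec.value);
    }

    /** Output operator: removes the header before records leave the iteration. */
    static <T> T output(RoundTagged<T> rec) {
        return rec.value;
    }
}
```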

The head and tail operators implement the iterative processing of data and track whether each round has been fully processed. The head operator reads all input from the input operator and appends a special Epoch Watermark event at the end to mark the end of round 0. Because the head operator may run with multiple parallel subtasks, the round-0 event can only be sent after all of its subtasks have finished reading their input.

The head operator uses the Operator Coordinator to synchronize its parallel subtasks. The Operator Coordinator is a global component in the JobManager that can communicate bidirectionally with all head tasks. Once the coordinator has received, from every head subtask, the notification that a round has been processed, it broadcasts a message to all head tasks telling them that this round is complete globally. After the head operator emits the Epoch Watermark event, all operators in the iteration align on it: once an operator has read the Epoch Watermark of a round from all of its inputs, it considers that round complete and invokes the end-of-round callback. When the tail operator receives a record or an Epoch Watermark, it increments the round number by 1 and sends it back to the head through the back edge, which realizes iterative processing.
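Both synchronization steps can be sketched in plain Java. `RoundCoordinator` and `EpochWatermarkAligner` are hypothetical and single-threaded: the coordinator broadcasts the end of a round once every head subtask has reported it, and an operator treats a round as finished once the Epoch Watermark for that round has arrived on all of its inputs, which is where a callback such as onEpochWatermarkIncremented would fire.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical coordinator: announces "round r is done" once every head subtask has reported r. */
final class RoundCoordinator {
    private final int parallelism;
    private final Map<Integer, Set<Integer>> reported = new HashMap<>();
    final List<Integer> broadcastRounds = new ArrayList<>();

    RoundCoordinator(int parallelism) {
        this.parallelism = parallelism;
    }

    void reportRoundEnd(int subtask, int round) {
        Set<Integer> subtasks = reported.computeIfAbsent(round, r -> new HashSet<>());
        subtasks.add(subtask);
        if (subtasks.size() == parallelism) {
            reported.remove(round);
            broadcastRounds.add(round); // global message sent to all head subtasks
        }
    }
}

/** Hypothetical operator-side alignment: round r ends once every input has delivered watermark r. */
final class EpochWatermarkAligner {
    private final int[] inputWatermarks;
    private int alignedWatermark = -1;
    final List<Integer> completedRounds = new ArrayList<>();

    EpochWatermarkAligner(int numInputs) {
        inputWatermarks = new int[numInputs];
        Arrays.fill(inputWatermarks, -1);
    }

    void onEpochWatermark(int input, int epoch) {
        inputWatermarks[input] = epoch;
        int min = Arrays.stream(inputWatermarks).min().getAsInt();
        while (alignedWatermark < min) {
            alignedWatermark++;
            completedRounds.add(alignedWatermark); // the end-of-round callback would fire here
        }
    }
}
```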

Finally, we also support checkpoints within iterations. Flink's default checkpoint mechanism cannot handle cyclic computation, so we extended it following the Chandy-Lamport algorithm, which additionally caches the messages arriving on the back edges. In addition, when the head operator aligns barriers, it waits not only for the normal barriers but also for a special barrier from the Operator Coordinator. Because the end-of-round messages also come from the Operator Coordinator, this ensures that in every checkpoint all operators in the iteration are in the same round, which simplifies later changes to operator parallelism.
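The core of the Chandy-Lamport extension, logging the records in flight on the back edge, can be sketched with a single-threaded loop. This is a simplified assumption, not Flink's implementation: there is one head, the body and tail are stateless, and the special coordinator barrier is omitted. The head snapshots its state when the barrier enters the loop and logs every back-edge record it receives until the barrier returns; restoring that state and replaying the log reproduces the uninterrupted result.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/**
 * Hypothetical single-threaded loop: head -> body (x - 1) -> tail -> back edge -> head.
 * The head's state counts processed records; a snapshot also logs back-edge records in flight.
 */
final class LoopSnapshotSketch {
    private static final Object BARRIER = new Object();

    static final class Snapshot {
        final long headState;
        final List<Integer> backEdgeRecords;

        Snapshot(long headState, List<Integer> backEdgeRecords) {
            this.headState = headState;
            this.backEdgeRecords = backEdgeRecords;
        }
    }

    long headState = 0;
    final Deque<Object> backEdge = new ArrayDeque<>(); // FIFO channel from tail back to head
    Snapshot snapshot;                                  // last completed snapshot
    private long snapshotHeadState;
    private List<Integer> logged;                       // non-null while a snapshot is in progress

    /** Head processes a record; the body decrements it; the tail feeds it back while positive. */
    void process(int value) {
        headState++;
        int next = value - 1;
        if (next > 0) {
            backEdge.add(next);
        }
    }

    /** Head snapshots its own state and sends the barrier around the (stateless) body and tail. */
    void injectBarrier() {
        snapshotHeadState = headState;
        logged = new ArrayList<>();
        backEdge.add(BARRIER);
    }

    /** Head consumes one item from the back edge. */
    void pollBackEdge() {
        Object item = backEdge.poll();
        if (item == BARRIER) {
            // The barrier came back: every record that was in flight on the back edge is logged.
            snapshot = new Snapshot(snapshotHeadState, logged);
            logged = null;
            return;
        }
        int value = (Integer) item;
        if (logged != null) {
            logged.add(value);
        }
        process(value);
    }

    void drain() {
        while (!backEdge.isEmpty()) {
            pollBackEdge();
        }
    }

    /** Recovery: restore the head state and replay the logged back-edge records. */
    static LoopSnapshotSketch restore(Snapshot s) {
        LoopSnapshotSketch restored = new LoopSnapshotSketch();
        restored.headState = s.headState;
        restored.backEdge.addAll(s.backEdgeRecords);
        return restored;
    }
}
```

Without the logged back-edge records, the restored job would lose the record that was circulating when the head took its snapshot.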

We are also developing a further optimization. The Operator Coordinator holds a received barrier and only sends the notification together with the next global alignment message, so the snapshot captures the operators right after a round of data has been fully read. In many synchronous algorithms, operators cache data until all data of a round has arrived and then process it in one go. With this optimization, those caches have already been emptied when the snapshot is taken, which minimizes the amount of cached data stored in the checkpoint.
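Why delaying the barrier to a round boundary shrinks the checkpoint can be shown with a small sketch. `RoundBufferingOperator` and `BarrierTiming` are hypothetical: the operator caches a round's records and processes them when the round ends, so a snapshot taken mid-round must store the cache, while a snapshot taken right after the round boundary finds it empty.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical operator that caches a round's records and processes them at the round boundary. */
final class RoundBufferingOperator {
    private final List<Double> cache = new ArrayList<>();
    double lastRoundSum = 0.0;

    void onRecord(double value) {
        cache.add(value);
    }

    /** Round boundary: process the whole round in one go, then clear the cache. */
    void onEpochWatermarkIncremented() {
        double sum = 0.0;
        for (double v : cache) {
            sum += v;
        }
        lastRoundSum = sum;
        cache.clear();
    }

    /** Number of cached records a snapshot taken now would have to store. */
    int snapshotSize() {
        return cache.size();
    }
}

final class BarrierTiming {
    /**
     * Feeds one round of records. The barrier arrives after {@code barrierAfter} records; the
     * snapshot is taken at that point or, if delayed, right after the round boundary.
     */
    static int snapshotSizeFor(List<Double> round, int barrierAfter, boolean delayToRoundEnd) {
        RoundBufferingOperator op = new RoundBufferingOperator();
        int size = -1;
        for (int i = 0; i < round.size(); i++) {
            op.onRecord(round.get(i));
            if (!delayToRoundEnd && i + 1 == barrierAfter) {
                size = op.snapshotSize();
            }
        }
        op.onEpochWatermarkIncremented();
        if (delayToRoundEnd) {
            size = op.snapshotSize();
        }
        return size;
    }
}
```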

The above introduces Flink's unified stream/batch iteration engine. It can be used both online and offline, supports fault tolerance, and provides exactly-once semantics when failures occur. In the future, we will add support for the batch execution mode and provide more high-level development tools.

We have recently moved the Flink ML code from the Flink core repository to a separate Flink ML repository. This allows Flink ML to iterate quickly and keeps the Flink core code base from becoming bloated.

In addition, we have set up Flink-extended, a neutral organization on GitHub where developers in the Flink community can open-source projects they want to share. Because projects are hosted without the name of any specific company, developers from different companies can easily share and use the code, which fosters joint ecosystem building in the Flink community. We hope this will help the Flink ecosystem grow and thrive.

The neutral organization already hosts several important projects, such as Deep Learning on Flink, an open-source project developed mainly by Alibaba's Big Data team. Its core function is to wrap TensorFlow as a Java operator that runs in Flink, which makes it easy to combine Flink preprocessing programs with TensorFlow deep learning training to form end-to-end training and inference.

Recently, we have developed several common algorithms in Flink ML and will continue providing more out-of-the-box algorithms.

The preceding figure shows the main work we are currently focusing on. The core task is to adapt Alibaba's existing open-source Alink code base so that its algorithms fit the newly designed Flink ML API. The adapted algorithms can then be contributed to the Apache project, giving Flink users more out-of-the-box algorithms.

In addition, we are working with other companies on the Clink project. Its core goal is to run certain operators in Java during offline processing to obtain training results, while the same operators must also perform online inference at very low latency. Low-latency online inference is difficult to achieve in Java and is usually implemented in C++. Clink provides basic wrapper functions so that one algorithm can be used from both Java and C++: algorithm developers can use JNI to wrap C++ operators as Java operators and then use them in Flink.

Finally, we plan to add Python support to Flink ML, allowing algorithm users to compose and use the Java operators in Flink ML from Python programs, to improve the efficiency and experience of machine learning development.

Most of the work above is already open source. The design of the algorithm API is described in FLIP-173 and the design of the iteration engine mainly in FLIP-176, while FLIP-174 and FLIP-175 cover the algorithm parameter API and the GraphBuilder API, respectively. Projects such as Clink and Deep Learning on Flink are also available under Flink-extended. You are welcome to use them.
