By Jingsong Lee

In the computer field, a data warehouse (DW or DWH) is a system for data reporting and data analysis, which is considered a core component of business intelligence. It stores current and historical data, creating data analysis reports for workers across the enterprise. [1]

A typical data warehouse based on Extract-Transform-Load (ETL) relies on ODS, DWD, and DWS to implement its key functions. Data analysts can flexibly query data from each layer in a data warehouse for valuable business information.

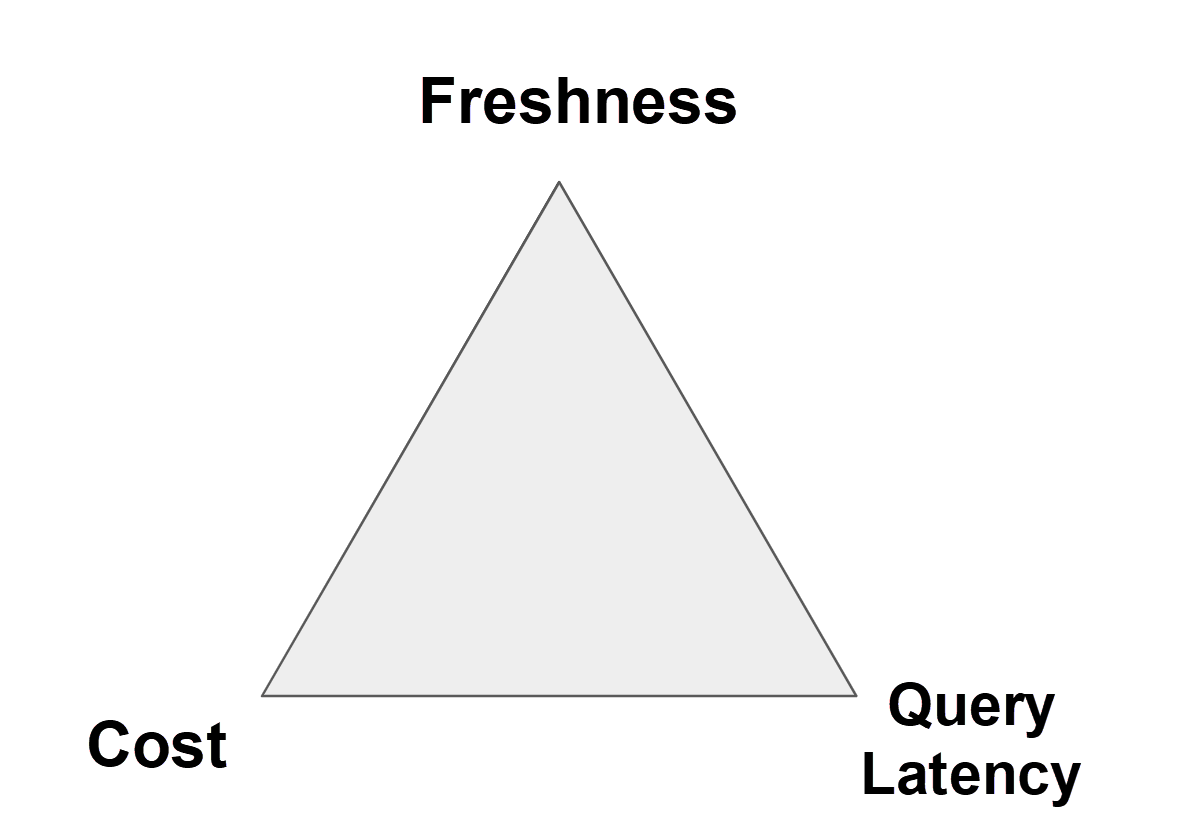

There are three key factors [2] in the data warehouse:

What is the relationship among these three factors?

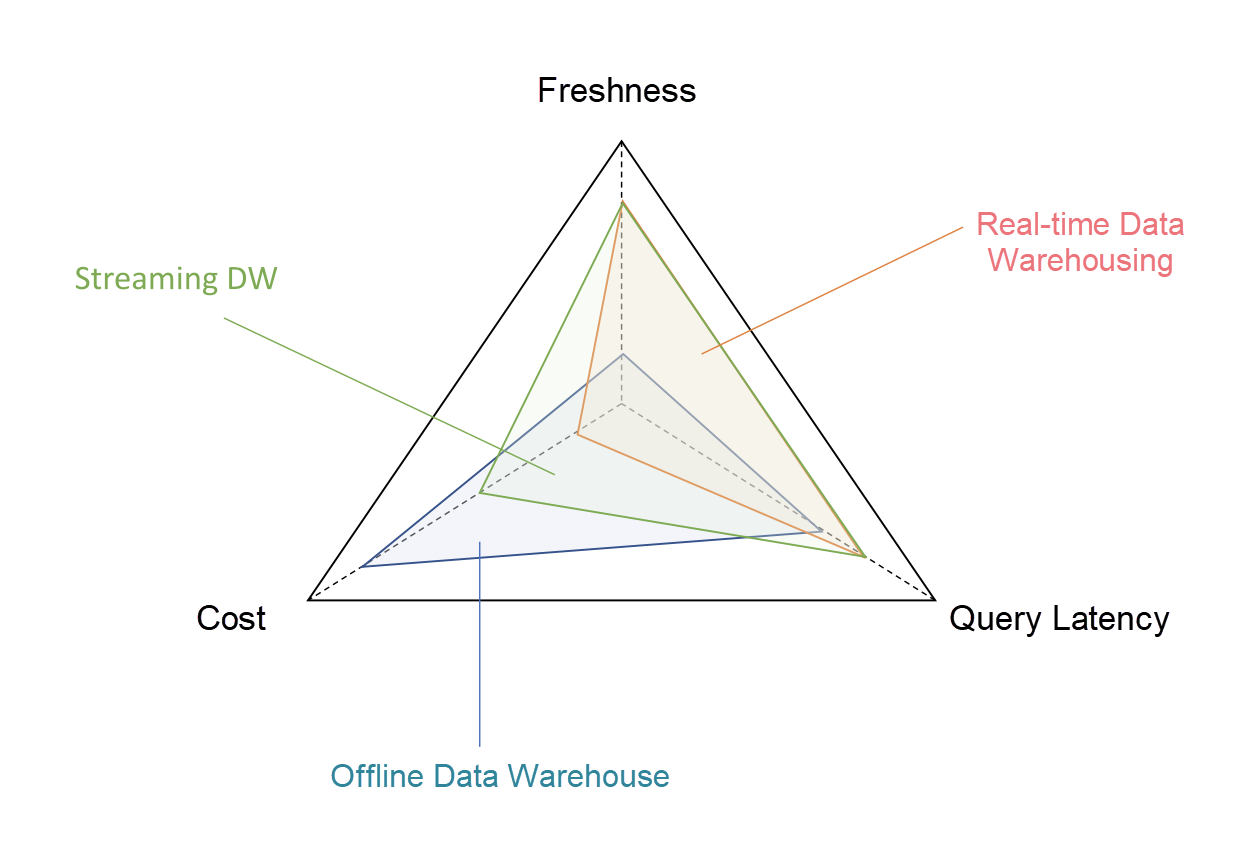

These three constitute a Tradeoff Triangle [2] in the data warehouse:

(Note: In the Tradeoff Triangle, closer to the vertex means better, and farther from the vertex means worse.)

As for this Triangle, what are the Tradeoffs in the field's current mainstream architecture?

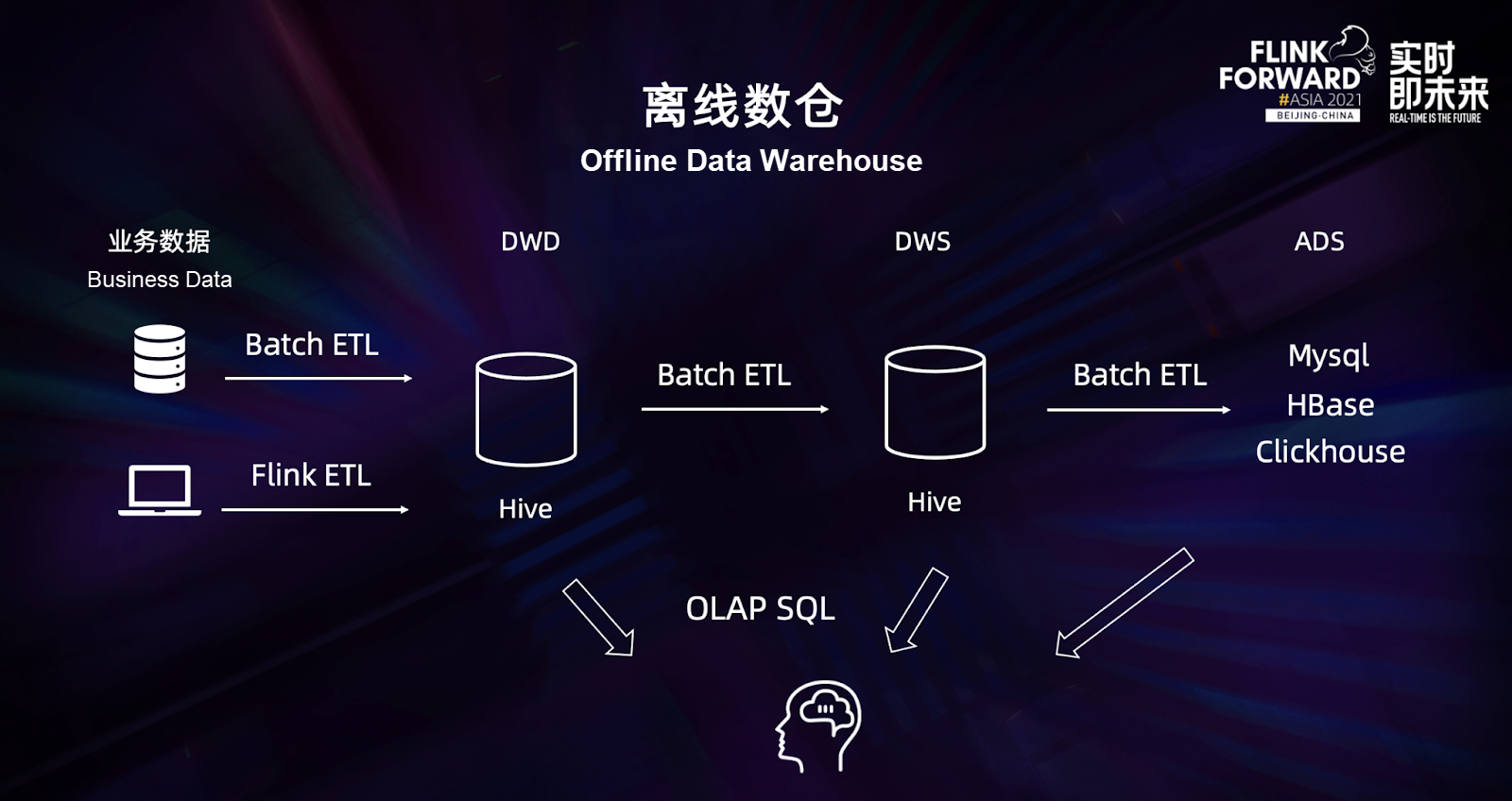

Typical Offline Data Warehouses:

Offline data warehouses use Batch ETL to overwrite data (INSERT OVERWRITE) based on partition granularity, which provides good cost control while processing extremely large data.

However, it has two serious problems:

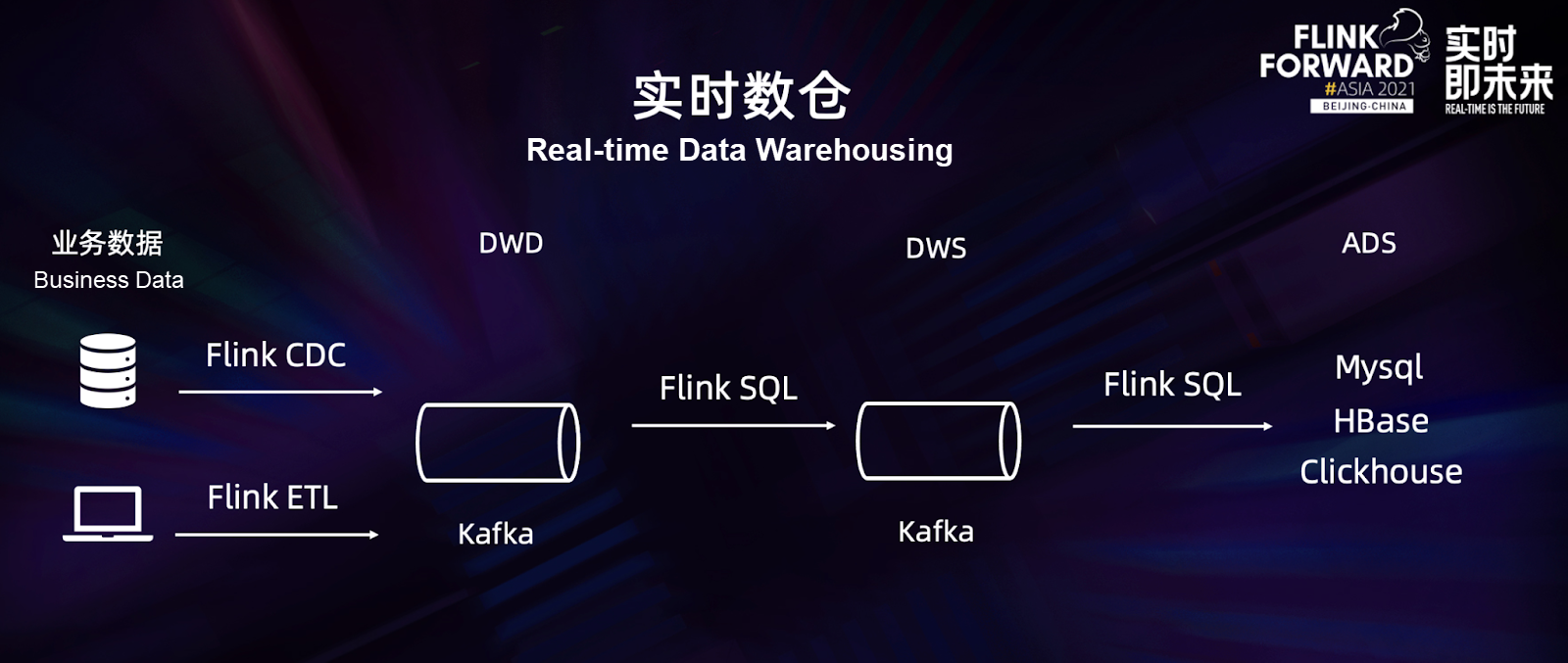

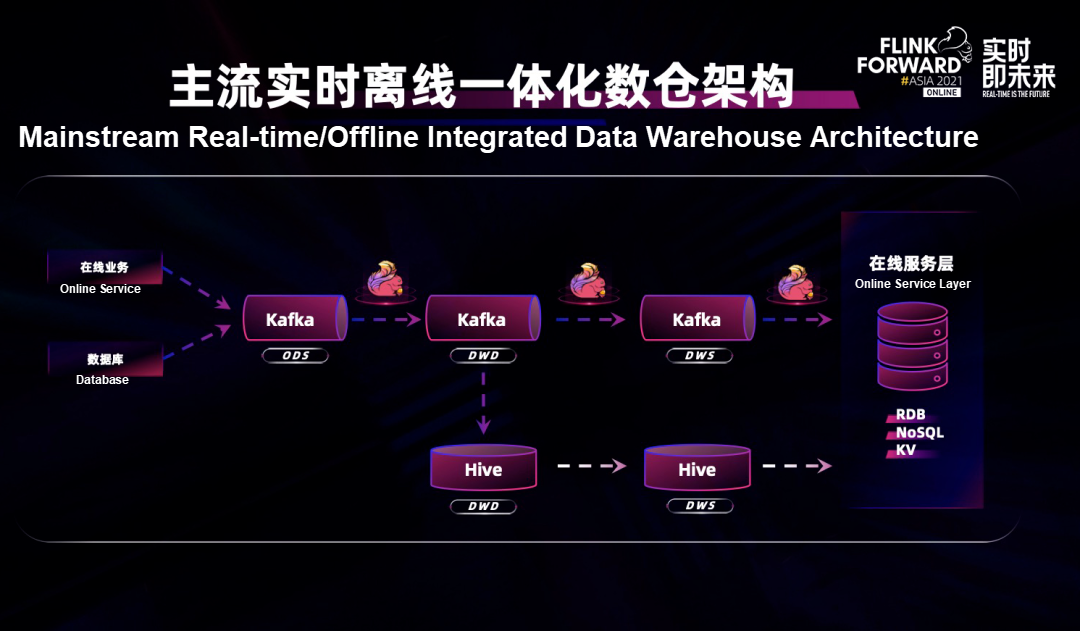

The real-time data warehouse is gradually emerging to solve the problems above. A typical real-time data warehouse uses Flink + Kafka to build an intermediate layer and write it into an online database or analysis system, achieving a comprehensive procedure with second-level latency with good data freshness.

However, it also has some problems.

The first problem is that the intermediate layer cannot be queried.

Data queries in Kafka are limited, OLAP queries cannot be performed flexibly, and long-term historical data is usually not stored. This is very different from the widely used data warehouses. In a mature warehouse system, each data set in a data warehouse should be an abstract implementation of tables that can be queried, while Kafka cannot meet all user requirements for abstract implementation of tables. For example:

In summary, we hope to have a unified architecture to get a real-time data warehouse that can be queried rather than a data warehouse where intermediate data are stuck in all kinds of complex pipelines.

The second problem is the high cost of the real-time procedure.

It is expensive to build a real-time procedure.

Therefore, we hope to have a low-cost real-time data warehouse that supports low operating costs and is compatible with offline toolchains while accelerating the original offline data warehouse.

Summary:

| Offline Data Warehouse | Real-Time Data Warehouse | |

| Cost | Low | High |

| Freshness | Low | High |

| Query Latency of Staging Tables in Data Warehouses | High | N/A |

| Query Latency of Result Tables in Data Warehouses | Low | Low |

Since the current two architectures are oriented to different Tradeoffs and scenarios, users have to maintain the two sets of architectures, which even require different technical teams. This brings high resource costs, high development costs, and high O&M costs.

Is it possible to develop a more balanced data warehouse in terms of freshness, query latency, query capability, and cost? We need to analyze the technical principles behind data freshness and query latency, the different architectures caused by different Tradeoffs, and the technical differences behind them to answer this question.

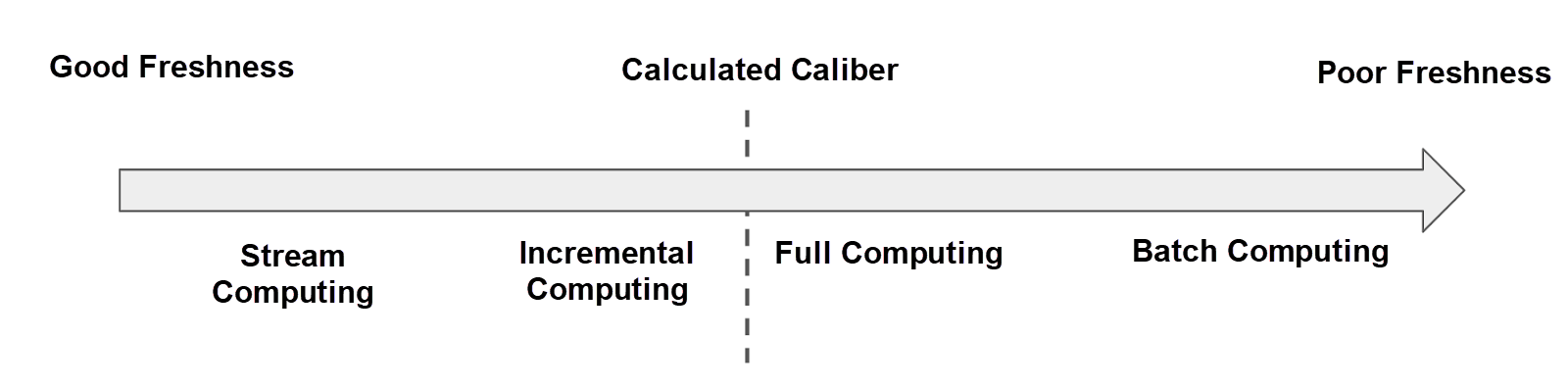

The first thing to think about is data freshness. It is the time that data is available for users to query after a series of processing in the warehouse since its generation. The data is entered into the data warehouse, and after a series of ETL processing, the data will be in an available state.

Traditional batch computing is based on the caliber (day) for ETL processing, so its freshness is the number of days + ETL latency. That means the data freshness of the traditional offline data warehouse is at least one day. According to the computing based on the caliber (day), the input and output data processing are full computing. If the data freshness is less than the caliber (day), the input and output data processing are incremental computing. Typical incremental computing is stream computing, such as Flink Streaming.

However, incremental computing is not the same as stream computing. For example, incremental computing can be performed in small batches. Full computing is not completely equivalent to batch computing. For example, Window can be used to output full data results in stream computing. (The latency of stream computing can also be very high, which can reduce the cost.)

Query latency directly affects the efficiency of data analysis and user experience. The query results are returned to a human. The data ze sees is filtered or aggregated. In traditional offline data warehouses, it may take more than ten minutes to query a large table.

The most effective way to accelerate the return of queries is precomputation. In essence, the ETL processing in data warehouses is precomputation. When the computing time of a project initiated by data analysts is too long, the ETL system will notify the data warehouse personnel to establish the corresponding ETL pipelines. After the data preparation, the analysts can directly query the final result table. This is trading freshness for lower query latency.

However, in traditional offline data warehouses, there are a large number of ad-hoc queries in which users can flexibly select query conditions according to their needs. Queries involving large tables may take more than ten minutes. Major storage systems perform various optimization methods to return the query results as soon as possible.

For example, bring the storage resources closer to the computation resources. The closer the storage resources are to the computation resources, the faster the reading speed is:

For example, data skipping accelerates data search by skipping irrelevant data based on query conditions and fields:

There are many other optimization methods, which are not enumerated one by one here. Storage systems use various methods to cooperate with computing systems to accelerate queries so queries can be more effective.

Through the analysis above, we can see the underlying technologies from different systems are the same.

Theoretically speaking, we can build a unified architecture through the combination of underlying technologies to obtain our desired Tradeoff. This unified architecture may need to meet the following requirements based on different Tradeoffs.

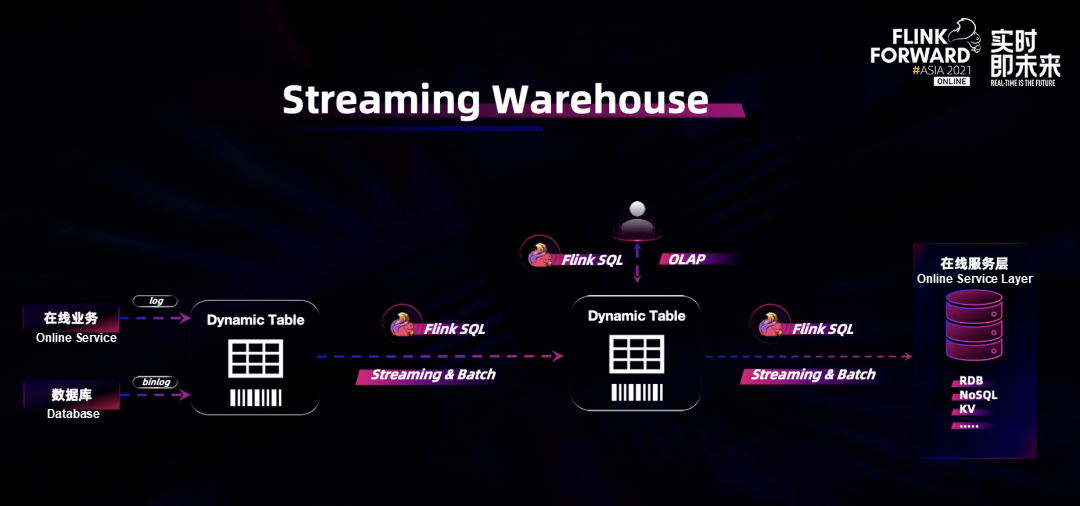

The goal of Streaming Warehouse is to build a unified architecture:

(Note: In the Tradeoff Triangle, closer to the vertex means better, and farther from the vertex means worse.)

In an ideal data warehouse, users can adjust the Tradeoff of cost, freshness, and query latency at will. This requires the ideal data warehouse to be fully equipped with all the functions of offline data warehouses, real-time data warehouses, and OLAP systems. Streaming Data Warehouse takes a step forward based on real-time data warehouses, significantly reducing the cost of real-time data warehouses.

Streaming Data Warehouse provides a real-time computing service and allows users to use the functions of offline data warehouses in the same architecture. Users can make corresponding Tradeoff according to business requirements, thus solving problems in different scenarios.

Before talking about how the Streaming Data Warehouse storage architecture is designed, let's review the two problems of the mainstream real-time data warehouses mentioned earlier. After solving these two problems, the architecture of Streaming Data Warehouses will be clear.

Since the data in Kafka on the intermediate layer is not available, the integration of real-time and offline processing says real-time data processing and offline data processing run in parallel. The business layer does as much encapsulation as possible to show the users a set of abstract implementations of tables.

Many users use Flink + Kafka to process real-time data streams and write the analysis results to the online service layer for display or further analysis. At the same time, they import the data in Kafka in the real-time data warehouse to the asynchronous backstage offline data warehouse to supplement the real-time data. They also regularly do large-scale batch/full processing or correct historical data every day. [3]

However, there are several problems with this architecture:

We hope the data warehouse has a query-oriented unified abstract implementation of tables in the Streaming Data Warehouse. All streaming data can be analyzed without data missing. This requires this unified abstract implementation of tables to provide both services.

In other words, you can both subscribe to Change Logs of this table in a way of Message Queue and directly perform OLAP queries on this table.

Let's talk about the second problem of the classic real-time data warehouse.

Although the unified abstract implementation of tables provided by Streaming Data Warehouse can solve the problems of data freshness and query latency, its cost is higher than offline data warehouses. In many cases, not all business scenarios have high requirements for data freshness and query latency. Therefore, it is still necessary to develop low-cost table storage.

Lake storage is a good choice.

Therefore, while maintaining the real-time flow of comprehensive-procedure data, Streaming Data Warehouse also provides low-cost offline storage and ensures that the architecture does not affect real-time procedures. Generally speaking, the requirements in SLA for real-time procedures are higher than offline procedures. Therefore, the writing and consumption of data queues in the design and implementation of Streaming Data Warehouse storage should be taken as a high priority. The storage of historical data should not affect operations on data queues.

Flink Table Store [4] provides the service of integrated streaming/batch storage designed for Streaming Warehouses.

Over the past few years, with the help of many developers and users, Apache Flink has become one of the best distributed computing engines, especially in large-scale stream processing with the local state. Nevertheless, there are still some challenges when people try to gain inspiration from data analysis. Among these challenges, a prominent one is the lack of storage systems that can meet all computing modes.

Until now, it is common to deploy some storage systems that worked with Flink for different purposes. A typical approach is to deploy a Message Queue for stream processing, a scannable file system/object storage service for batch processing and ad-hoc queries, and a KV storage for queries on nodes. Due to its complexity and heterogeneity, such an architecture faces challenges in terms of data quality and system maintenance. This has become a major problem that undermines the end-to-end user experience of streaming and batch-integrated processing by Apache Flink.

Flink Table Store is developed to solve the problems above. This is an important step for Flink, which extends the services of Flink from computing to storage. As a result, we can provide a better end-to-end experience for our users.

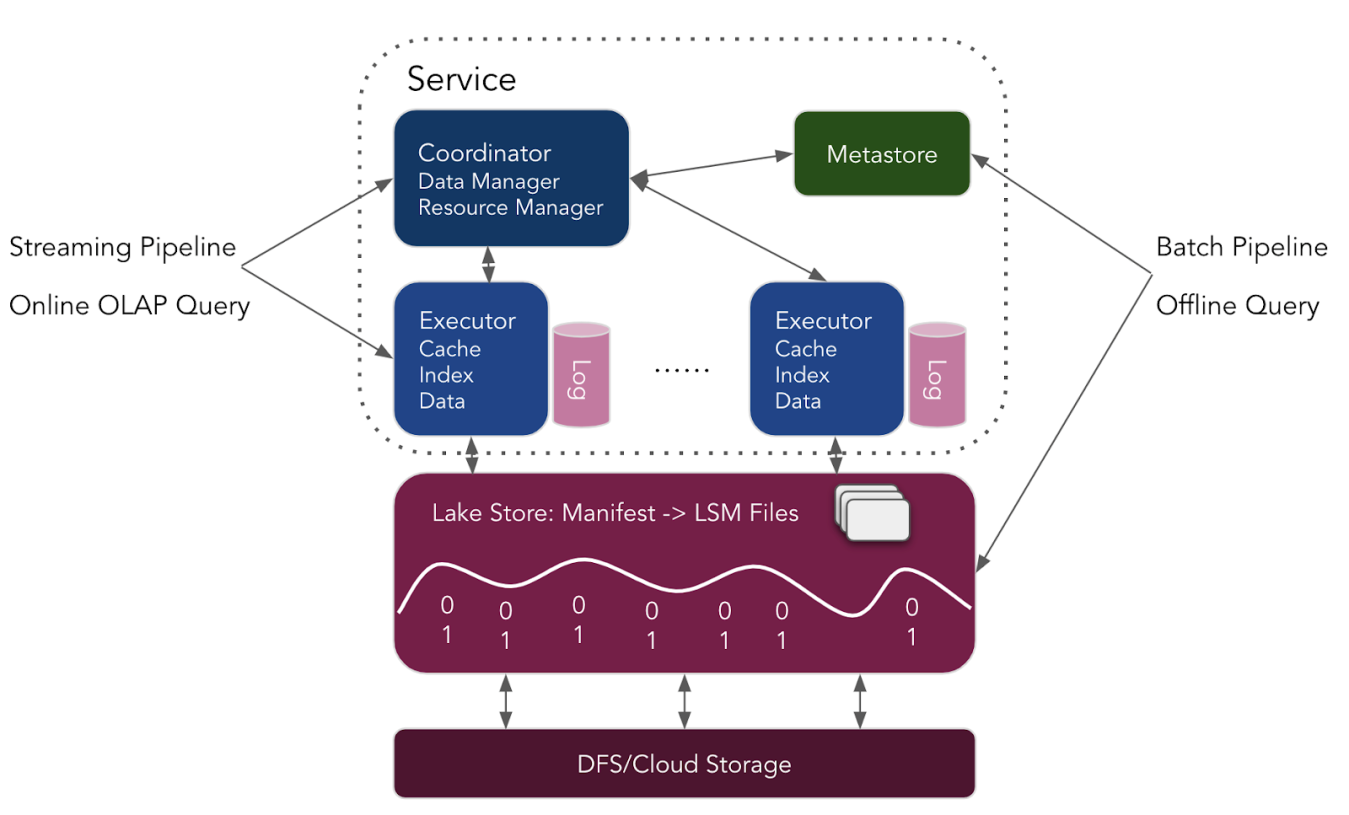

The coordinator is the control node of a cluster. It is mainly responsible for managing various executors. Its main responsibilities include:

Data Manager:

Resource Manager:

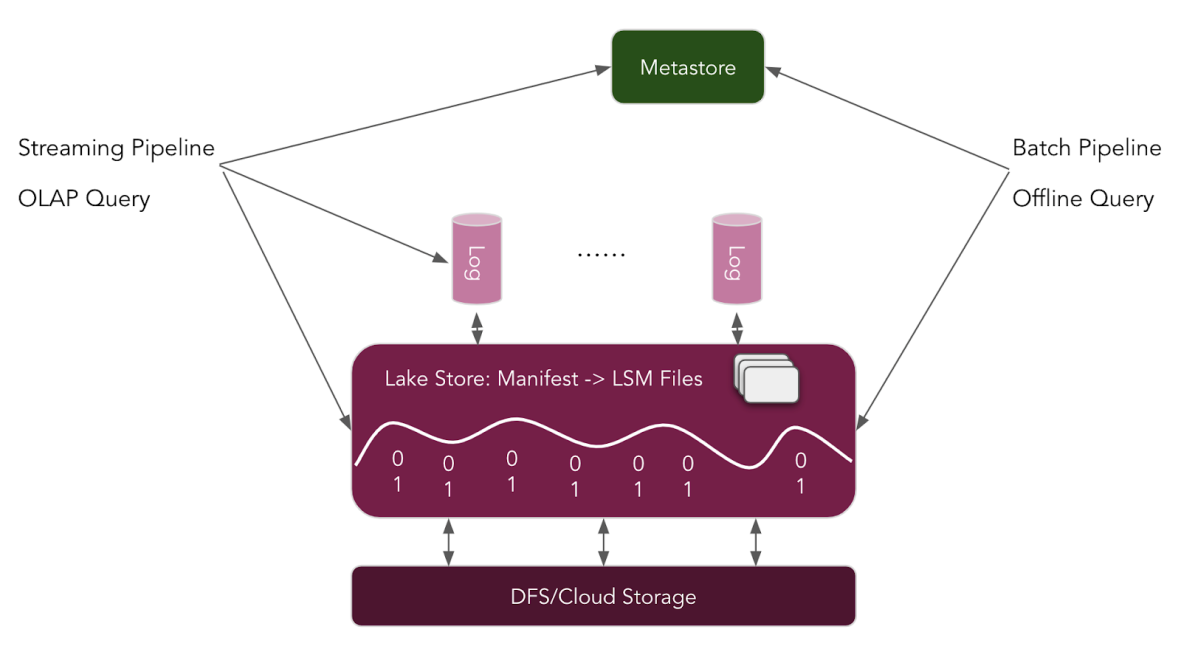

Metastore is an abstract node. It can connect to Hive Metastore, minimize dependencies based on Filesystem, or connect to your Metastore. It stores the most basic table information. You don't have to worry about performance issues because more detailed and complex table information is placed in the lake storage.

The executor is a separate compute node that serves as a cache for storage and an accelerator module for local computing:

Each executor processes one or more buckets. Each bucket has a corresponding change log. These change logs are stored in the Message Queue and are mainly used to:

After the Checkpoint operation on data in Executor, the data falls into the Lake Storage, which is based on the file format of column storage and the file system of shared DFS. Lake Storage provides a complete abstract implementation of the table format. Its main purpose is to support updates and reads at a lower cost:

The read and write paths are divided into two:

The data from the Service is up-to-date. It synchronized to Lake Storage after minutes of checkpoint operations. That's why the data the user reads from the lake storage is not exactly the same as the real-time data. Essentially, the data from both the Service and Lack Storage sides are the same.

Service and Lake Storage have these differences:

Therefore, storage needs to rely on lake storage to deal with these tasks. Then, how can we determine which data should be processed in Service and which data should be processed in lake storage?

INSERT OVERWRITE operation for Batch Pipeline can only be performed on archived partitions.

The overall change in Streaming Data Warehouse is huge. There are outstanding players in the fields of OLAP, Queue, Lake Storage, Stream Computing, and Batch Computing. Currently, it is impossible to produce a perfect solution in a short term.

However, we are moving forward. In Apache Flink Table Store, we developed LSM-based lake storage and natively integrated Kafka as the Log System.

Compared with the complete architecture in the preceding section, the short-term architecture does not include Coordinators and Executors, which means:

We will start from the bottom and lay a solid foundation. First, we propose a complete unified abstraction, accelerate storage, and provide real-time OLAP.

The current architecture provides two core values.

Table Store supports query service to the hierarchical storage Kafka of the original real-time data warehouse, and intermediate data can be queried.

Table Store still supports streaming real-time pipelines. It natively integrated Log, supports the integration of Kafka, and weakens the concept of streaming and batch. Users only see the abstraction of Table.

However, it is important to note that data writing into storage should not affect the stability of Kafka.

Table Store accelerates data processing of offline data warehouses and provides incremental data updates while being compatible with Hive offline data warehouses.

Table Store provides a comprehensive table format of lake storage and quasi-real-time OLAP queries. The structure of LSM is conducive to updating performance and helpful for better Data Skipping, thus accelerating OLAP queries.

The community is currently working to strengthen core functions, stabilize storage formats, and optimize the remaining parts to prepare Flink Table Store for production.

In the upcoming version of 0.2.0, we hope to provide a Table Format with the function of integrated streaming/batch processing and gradually improve the integrated streaming/batch lake storage. You can expect the following additional functions:

In the medium term, you can also expect:

Please try the preview version of 0.1.0 and share your feedback on the Flink mailing list, contributing to the project optimization.

Apache Flink Table Store project [4] is under development, and the first version has been released. You are welcome to try it and give feedback.

[1] Data Warehouse Wiki: https://en.wikipedia.org/wiki/Data_warehouse

[2] Napa: Powering Scalable Data Warehousing with Robust Query Performance at Google: http://vldb.org/pvldb/vol14/p2986-sankaranarayanan.pdf

[3] Flink Next: Beyond Stream Processing: https://mp.weixin.qq.com/s/CxHzYGf2dg8amivPJzLTPQ

Flink ML API: The Algorithmic Interface and Iterative Engine Designed for Real-Time Machine Learning

206 posts | 54 followers

FollowApache Flink Community China - April 13, 2022

Alibaba Cloud Community - October 17, 2025

Hologres - May 31, 2022

Alibaba EMR - September 2, 2022

Apache Flink Community China - June 8, 2021

Alibaba Cloud MaxCompute - January 22, 2021

206 posts | 54 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Data Lake Storage Solution

Data Lake Storage Solution

Build a Data Lake with Alibaba Cloud Object Storage Service (OSS) with 99.9999999999% (12 9s) availability, 99.995% SLA, and high scalability

Learn MoreMore Posts by Apache Flink Community