By Su Kunhui (Fuyue), an Apache HDFS Committer and an EMR Technical Expert from the Alibaba Computing Platform Division. Currently, he is engaged in the storage and optimization of open-source big data.

Apache Hadoop Distributed File System (HDFS) is a widely used big data storage solution. Its core metadata service, NameNode, stores all metadata in memory, so the amount of metadata it can hold is limited by the memory size. In practice, a single instance can hold about 0.4 billion files at most.

JindoFS block storage mode is a storage optimization system developed by Alibaba Cloud on top of Object Storage Service (OSS). It supports efficient data read/write acceleration and metadata optimization. By design, it avoids the memory limit of NameNode.

Unlike HDFS, the JindoFS metadata service uses RocksDB for the underlying metadata storage. RocksDB keeps its data on large-capacity, high-speed local disks, which removes the memory capacity bottleneck. With 10% to 40% of hot file metadata held in an in-memory cache, the service maintains stable, high read/write performance. Using the Raft protocol, the JindoFS metadata service runs as a group of three instances in primary and standby roles to ensure high availability. To verify the performance of JindoFS at this scale, stress tests were conducted with one billion files. Comparative tests of some key metadata operations were also run between JindoFS and HDFS.
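The caching arrangement described above can be pictured with a small sketch. This is not JindoFS code: the class name `CachedMetadataStore`, the plain dict standing in for RocksDB, and the LRU eviction policy are all assumptions made for illustration, since the article does not say how the hot-metadata cache is managed.

```python
from collections import OrderedDict


class CachedMetadataStore:
    """Hypothetical sketch: a bounded LRU cache in front of a disk-backed store."""

    def __init__(self, backing_store, cache_capacity):
        self.backing_store = backing_store  # stand-in for RocksDB (a dict here)
        self.cache_capacity = cache_capacity
        self.cache = OrderedDict()          # hot entries, least recent first
        self.hits = 0
        self.misses = 0

    def get(self, path):
        if path in self.cache:
            self.cache.move_to_end(path)    # mark as most recently used
            self.hits += 1
            return self.cache[path]
        self.misses += 1
        value = self.backing_store[path]    # "disk" read; KeyError if absent
        self._remember(path, value)
        return value

    def put(self, path, value):
        self.backing_store[path] = value    # every write reaches the store
        self._remember(path, value)

    def _remember(self, path, value):
        self.cache[path] = value
        self.cache.move_to_end(path)
        if len(self.cache) > self.cache_capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry


# Ten metadata entries on "disk", with room for 30% of them in memory.
disk = {f"/warehouse/t/part-{i}": {"size": i * 1024} for i in range(10)}
store = CachedMetadataStore(disk, cache_capacity=3)

for _ in range(5):
    for hot_path in ("/warehouse/t/part-0", "/warehouse/t/part-1"):
        store.get(hot_path)
store.get("/warehouse/t/part-9")            # a cold path

print(f"hits={store.hits} misses={store.misses}")
```

In this toy run the two hot paths are served from memory after their first lookup, while the cold path costs one read from the backing store.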

Limited by the memory size, a single HDFS NameNode instance can store about 0.4 billion files. In addition, as the number of files increases, so does the volume of block reports from DataNodes that NameNode must process, which causes severe performance jitter. File information is persisted in a large fsimage file that is loaded at the next startup. Because this file is so large, NameNode takes more than ten minutes to start.

JindoFS solves the preceding problems by using RocksDB to store metadata. Compared with NameNode, JindoFS can store a larger number of files because it is not limited by memory. It also avoids the performance jitter because worker nodes do not need to report block information. The JindoFS metadata service starts within one second, and the switch between primary and standby nodes completes in milliseconds. The tests below run JindoFS with 0.1 billion to one billion files to check whether its performance remains stable.

| Item | Primary Instance Group (MASTER) | Core Instance Group (CORE) |
| --- | --- | --- |
| Number of hosts | 3 | 4 |
| Model | ecs.g5.8xlarge | ecs.i2g.8xlarge |
| CPU | 32 cores | 32 cores |
| Memory | 128 GB | 128 GB |
| Configuration of data disks | 640 GB ESSD × 1 | 1,788 GB local disk × 2 |

Four datasets are prepared to test the JindoFS metadata service at different metadata scales: an initial state with no files, 0.1 billion files, 0.5 billion files, and one billion files. To build them, an actual HDFS fsimage file, anonymized by the user, is restored to the JindoFS metadata service. Block information is created at a one-to-one ratio according to the file size and saved to the JindoFS metadata service together with the files. The generated datasets are listed below:

Metadata Disk Space Usage

| No files (initial state) | 0.1 billion files | 0.5 billion files | 1 billion files |
| --- | --- | --- | --- |
| 50 MB | 17 GB | 58 GB | 99 GB |
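As a rough reading of these figures, the disk usage can be divided by the file count to get an average metadata footprint per file. The sketch below assumes decimal gigabytes, which the article does not specify, and the helper name `bytes_per_file` is only illustrative.

```python
GB = 10**9  # assumption: decimal gigabytes; the article does not say GB vs GiB

# (number of files, metadata bytes on disk), taken from the table above
usage = {
    "0.1 billion": (100_000_000, 17 * GB),
    "0.5 billion": (500_000_000, 58 * GB),
    "1 billion": (1_000_000_000, 99 * GB),
}


def bytes_per_file(num_files, disk_bytes):
    """Average on-disk metadata footprint per file, block info included."""
    return disk_bytes / num_files


per_file = {label: bytes_per_file(n, b) for label, (n, b) in usage.items()}

for label, value in per_file.items():
    print(f"{label} files: about {value:.0f} bytes of metadata per file")
```

Under that assumption the average falls from about 170 bytes per file at 0.1 billion files to about 99 bytes at one billion.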

Distribution of File Size (Using one billion files as an example):

| File size | Ratio |
| --- | --- |
| 0 (directory) | 1.47% |
| 0 (file) | 2.40% |
| Greater than 0, less than or equal to 128 KB | 33.66% |
| Greater than 128 KB, less than or equal to 1 MB | 16.71% |
| Greater than 1 MB, less than or equal to 8 MB | 15.49% |
| Greater than 8 MB, less than or equal to 64 MB | 18.18% |
| Greater than 64 MB, less than or equal to 512 MB | 9.26% |
| Greater than 512 MB | 2.82% |
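A quick tally of the distribution shows how heavily the dataset leans toward small entries. The grouping into "at most 1 MB" and "larger than 64 MB" is chosen here for illustration and is not a classification used by the article.

```python
# (size bucket, share of entries in percent), copied from the table above
distribution = [
    ("0 (directory)", 1.47),
    ("0 (file)", 2.40),
    ("(0, 128 KB]", 33.66),
    ("(128 KB, 1 MB]", 16.71),
    ("(1 MB, 8 MB]", 15.49),
    ("(8 MB, 64 MB]", 18.18),
    ("(64 MB, 512 MB]", 9.26),
    ("> 512 MB", 2.82),
]

total_pct = sum(share for _, share in distribution)
small_pct = sum(share for _, share in distribution[:4])   # entries of at most 1 MB
large_pct = sum(share for _, share in distribution[-2:])  # entries above 64 MB

print(f"total: {total_pct:.2f}%")
print(f"at most 1 MB: {small_pct:.2f}%")
print(f"above 64 MB: {large_pct:.2f}%")
```

The shares add up to 99.99% because of rounding, and a little over half of all entries are 1 MB or smaller.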

In addition, directory depth is concentrated at levels five to seven. The file size distribution and directory depth distribution of the datasets are broadly similar to those of a production environment.

NNBench is short for NameNode benchmark. It is an official tool provided by HDFS to test the performance of NameNode. Since it uses the standard filesystem interface, it can also be used to test the performance of the JindoFS server. The execution parameters of NNBench are listed below:

Test Write Performance

-operation create_write -maps 200 -numberOfFiles 5000 -bytesToWrite 512

Test Read Performance

-operation open_read -maps 200 -numberOfFiles 5000 -bytesToWrite 512

Start 200 Map tasks, each of which writes or reads 5,000 files, for a total of one million files. Due to the limited size of the test cluster, 128 Map tasks run simultaneously.
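The size of this workload follows directly from the parameters. The sketch below rebuilds the option strings quoted above and works out the totals. The helper name `nnbench_args` is hypothetical, and the idea that the 200 Map tasks run in fixed "waves" of 128 is a simplifying assumption about scheduling.

```python
import math

MAPS = 200
FILES_PER_MAP = 5000
BYTES_TO_WRITE = 512
CONCURRENT_MAPS = 128  # limited by the size of the test cluster


def nnbench_args(operation):
    """Return the NNBench options quoted in the article as an argument list."""
    return [
        "-operation", operation,
        "-maps", str(MAPS),
        "-numberOfFiles", str(FILES_PER_MAP),
        "-bytesToWrite", str(BYTES_TO_WRITE),
    ]


total_files = MAPS * FILES_PER_MAP
# Assumption: Map tasks are scheduled in full waves of CONCURRENT_MAPS.
waves = math.ceil(MAPS / CONCURRENT_MAPS)
last_wave_maps = MAPS - (waves - 1) * CONCURRENT_MAPS

print(" ".join(nnbench_args("create_write")))
print(f"total files: {total_files:,}")
print(f"waves: {waves} (last wave runs {last_wave_maps} Map tasks)")
```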

Test Results

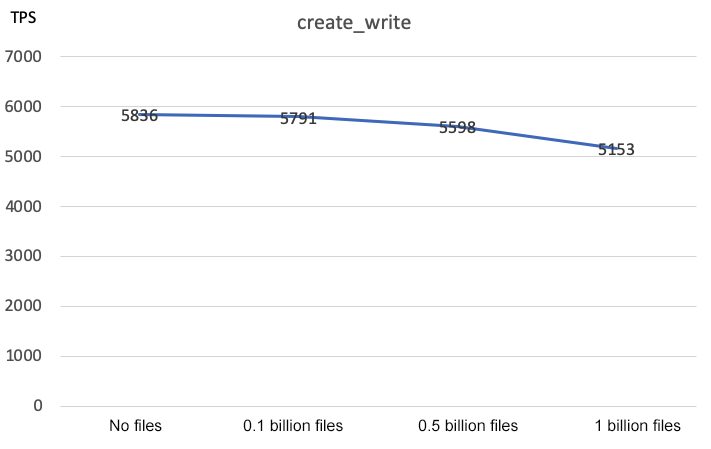

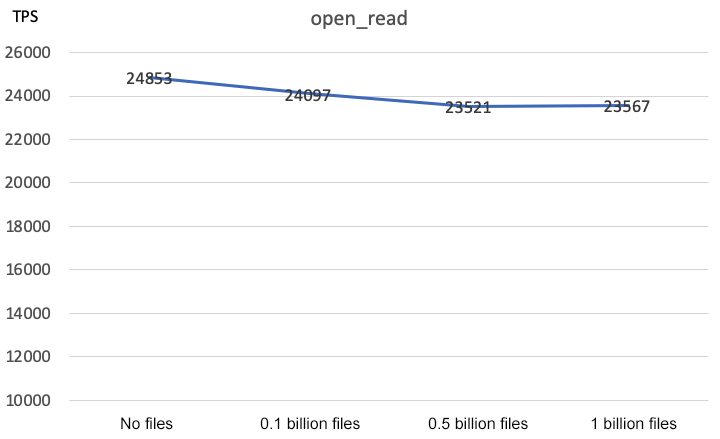

| Operation | No files | 0.1 billion files | 0.5 billion files | 1 billion files |
| --- | --- | --- | --- | --- |
| create_write | 5,836 | 5,791 | 5,598 | 5,153 |
| open_read | 24,853 | 24,097 | 23,521 | 23,567 |

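The NNBench results show how the metadata service performance changes as the metadata size increases. According to the table above, create_write falls from 5,836 with no files to 5,153 at one billion files, which is about 88% of the baseline. open_read stays at about 95% of the baseline (23,567 compared with 24,853).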
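The NNBench figures in the table can be normalized against the empty-cluster baseline to make the trend easier to read. This is a small calculation over the published numbers only, and the function name `relative_to_baseline` is illustrative.

```python
scales = ["No files", "0.1 billion", "0.5 billion", "1 billion"]

# NNBench results copied from the table above
results = {
    "create_write": [5836, 5791, 5598, 5153],
    "open_read": [24853, 24097, 23521, 23567],
}


def relative_to_baseline(values):
    """Express each value as a percentage of the first (no-files) value."""
    baseline = values[0]
    return [round(100 * v / baseline, 1) for v in values]


relative = {op: relative_to_baseline(vals) for op, vals in results.items()}

for op, percentages in relative.items():
    row = ", ".join(f"{s}: {p}%" for s, p in zip(scales, percentages))
    print(f"{op} -> {row}")
```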

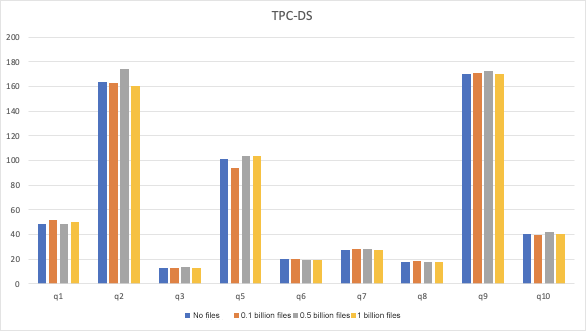

The test uses the official TPC-DS dataset, with 5 TB of data stored in the ORC file format. Spark is used as the execution engine.

The test time is listed below (in seconds):

Comparison of the total time consumed by the 99 queries:

| Query | No files | 0.1 billion files | 0.5 billion files | 1 billion files |
| --- | --- | --- | --- | --- |
| Total | 13,032.51 | 13,015.226 | 13,022.914 | 13,052.728 |

Allowing for measurement error, the TPC-DS results are nearly unaffected as the metadata size increases from no files to one billion files.
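To put a number on "nearly unaffected", the totals can be compared with the no-files run. The sketch below only reuses the four published totals, and the variable names are illustrative.

```python
# Total time for the 99 TPC-DS queries, in seconds, from the table above
totals = {
    "No files": 13032.51,
    "0.1 billion files": 13015.226,
    "0.5 billion files": 13022.914,
    "1 billion files": 13052.728,
}

baseline = totals["No files"]

# Signed deviation from the baseline, in percent
deviation_pct = {
    scale: 100 * (seconds - baseline) / baseline
    for scale, seconds in totals.items()
}
max_abs_deviation = max(abs(d) for d in deviation_pct.values())

for scale, d in deviation_pct.items():
    print(f"{scale}: {d:+.3f}%")
print(f"largest deviation: {max_abs_deviation:.3f}%")
```

Every run stays within 0.2% of the baseline, and two of the three loaded runs are marginally faster than the empty one, which is consistent with run-to-run noise.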

The preceding NNBench test mainly measures single-point write and query performance of the metadata service under high concurrency. However, the ls -R command for exporting file lists and the du and count commands for collecting file size statistics are also frequently used. The execution time of these commands reflects how efficiently the metadata service performs traversal operations.

Two samples are tested:

1. Run the ls -R command on a table that holds half a year of data in 154 partitions and 2.7 million files, and measure the execution time. The command is listed below:

time hadoop fs -ls -R jfs://test/warehouse/xxx.db/tbl_xxx_daily_xxx > /dev/null

2. Run the count command on a database with 500,000 directories and 18 million files, and measure the execution time. The command is listed below:

time hadoop fs -count jfs://test/warehouse/xxx.db

Test Results (in seconds):

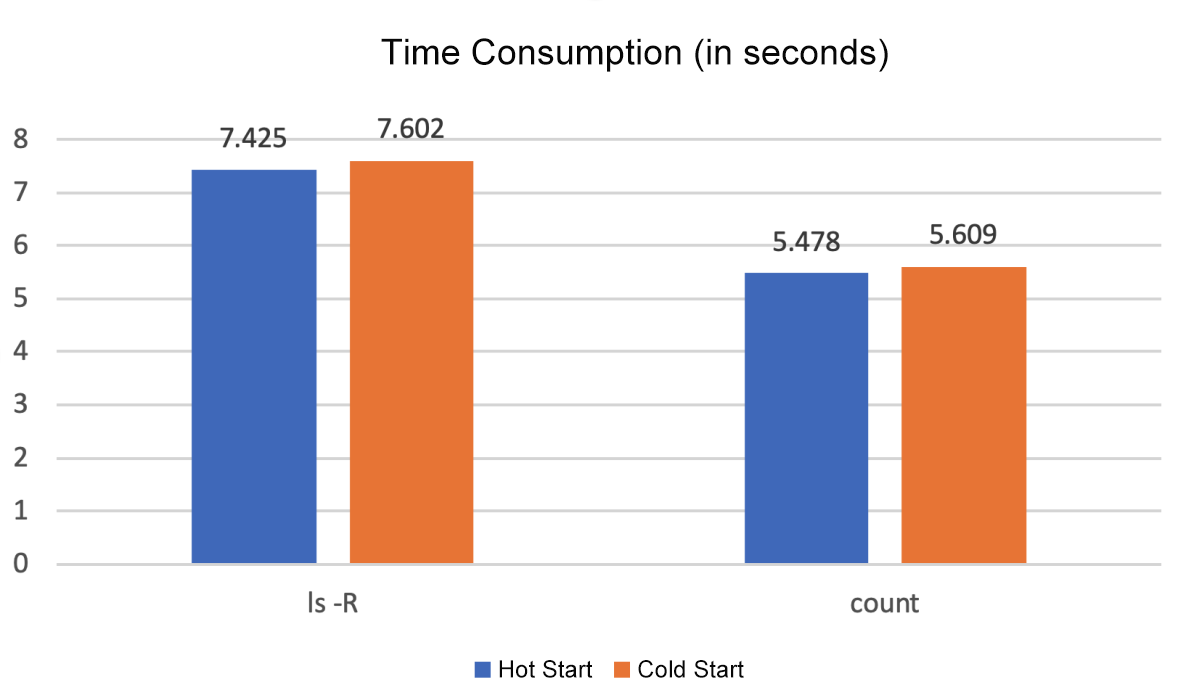

| Operation | 0.1 billion files | 0.5 billion files | 1 billion files |
| --- | --- | --- | --- |
| ls -R | 7.321 | 7.322 | 7.425 |
| count | 5.425 | 5.445 | 5.478 |

The test results show that the ls -R and count commands take nearly the same time to traverse the same set of files and directories at every scale. In other words, traversal performance does not change as the total amount of metadata increases.
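The timings can also be turned into traversal rates. The sketch below counts only the 2.7 million files for ls -R and the 18 million files plus 500,000 directories for count. Treating those as the number of entries visited is an assumption, since the article does not give exact entry counts.

```python
# Execution times in seconds at 0.1, 0.5, and 1 billion files (table above)
timings = {
    "ls -R": [7.321, 7.322, 7.425],
    "count": [5.425, 5.445, 5.478],
}

# Assumed number of entries traversed by each command
entries = {
    "ls -R": 2_700_000,             # files in the sampled table
    "count": 18_000_000 + 500_000,  # files plus directories in the database
}


def entries_per_second(num_entries, seconds):
    return num_entries / seconds


def slowdown_pct(times):
    """Increase in time from the smallest to the largest metadata scale."""
    return 100 * (times[-1] - times[0]) / times[0]


rates = {op: entries_per_second(entries[op], timings[op][0]) for op in timings}
slowdowns = {op: round(slowdown_pct(timings[op]), 1) for op in timings}

for op in timings:
    print(f"{op}: about {rates[op]:,.0f} entries/s, "
          f"{slowdowns[op]}% slower at 1 billion files")
```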

The metadata for one billion files takes up nearly 100 GB of disk space, while the JindoFS metadata service caches only part of the hot file metadata. Does the page cache for the JindoFS metadata files affect its performance? We conducted tests to find the answer:

Run the echo 3 > /proc/sys/vm/drop_caches command to clear the cache, and restart the metadata service.

The test results are listed below (on a dataset with one billion files):

In the cold-start case, these operations were only slightly affected: the time consumed increased by 0.2 seconds.

According to the preceding tests, JindoFS remains stable even with one billion files. Additional tests were conducted to compare JindoFS with HDFS. Since HDFS requires machines with extremely high specifications to store one billion files, this comparison was mainly conducted on 0.1 billion files. It focused on the performance differences between JindoFS and HDFS by comparing common operations side by side, such as list, du, and count.

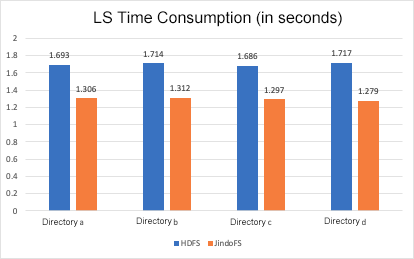

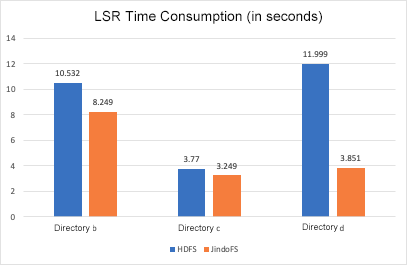

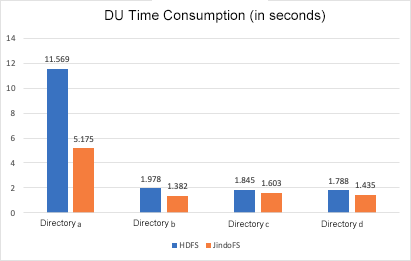

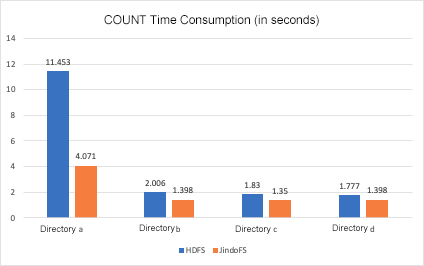

Four sets of directories (a, b, c, and d) are sampled.

List the contents of a single directory. The sampling method is time hadoop dfs -ls [DIR] > /dev/null.

Recursively list the directory. The sampling method is time hadoop dfs -ls -R [DIR] > /dev/null.

Calculate the storage space occupied by the directory. The sampling method is time hadoop dfs -du [DIR] > /dev/null.

Calculate the number and capacity of the files or folders of the directory. The sampling method is time hadoop dfs -count [DIR] > /dev/null.

The preceding test results show that JindoFS is faster than HDFS in common operations such as list, du, and count. The reason is that HDFS NameNode uses a global read/write lock for its in-memory namespace. Every query must take the read lock, which matters most for recursive queries on directories. While holding the lock, NameNode executes the recursive query serially in a single thread, which is relatively slow and in turn delays other remote procedure call (RPC) requests. JindoFS is designed to solve these problems. Its recursive operations on directories run concurrently on multiple threads, which makes recursive operations on directory trees faster. Moreover, it adopts a different directory tree storage structure with fine-grained locks, which reduces the interference between requests.
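The contrast between the two traversal styles can be sketched on a toy in-memory tree. This is purely illustrative and is neither HDFS nor JindoFS code: the tree layout and function names are hypothetical, a plain `threading.Lock` stands in for the NameNode read/write lock, and the fine-grained locking mentioned above is not modeled. In CPython the threads also share the interpreter lock, so the sketch shows the structure of the fan-out rather than a real speedup.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Toy namespace: directories are dicts, files are integer sizes in bytes.
tree = {
    "a": {"f1": 10, "f2": 20, "sub": {"f3": 30}},
    "b": {"f4": 40},
    "c": {"d": {"e": {"f5": 50}}},
}


def count_serial(node):
    """Return (directories, files, bytes) under node by plain recursion."""
    dirs, files, size = 1, 0, 0
    for child in node.values():
        if isinstance(child, dict):
            d, f, s = count_serial(child)
            dirs, files, size = dirs + d, files + f, size + s
        else:
            files, size = files + 1, size + child
    return dirs, files, size


global_lock = threading.Lock()  # stand-in for a namespace-wide lock


def count_with_global_lock(root):
    # Global-lock style: the whole walk runs in one thread while the
    # namespace lock is held, so other requests wait until it finishes.
    with global_lock:
        return count_serial(root)


def count_concurrent(root, workers=4):
    # Fan-out style: each top-level subdirectory is walked by its own
    # worker and the partial results are merged at the end.
    subdirs = [c for c in root.values() if isinstance(c, dict)]
    top_files = [c for c in root.values() if not isinstance(c, dict)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_serial, subdirs))
    dirs = 1 + sum(p[0] for p in partials)
    files = len(top_files) + sum(p[1] for p in partials)
    size = sum(top_files) + sum(p[2] for p in partials)
    return dirs, files, size


print(count_with_global_lock(tree))
print(count_concurrent(tree))
```

Both functions return the same totals. The difference lies in how long any single lock is held and how much of the walk can proceed in parallel.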

JindoFS block storage mode can easily store over one billion files while processing read/write requests efficiently. Compared with HDFS NameNode, it offers better performance and simpler O&M while using less memory. JindoFS can be used as the storage engine to store the underlying data on an object storage service, such as OSS. Combined with its local cache acceleration capabilities, JindoFS provides a stable, reliable, high-performance big data storage solution that supports upper-layer computing and analysis engines.

In addition, the JindoFS SDK can be used on its own to replace the OSS client implementation from the Hadoop community. Compared with that implementation, the JindoFS SDK includes many performance optimizations for reading from and writing to OSS. To download and use it, visit the GitHub repository.
