By Junbao, Alibaba Cloud Technical Expert

It is impossible to ignore the significance of storage services. They are an important part of the computer system and the foundation on which we run all applications, maintain application state, and persist data. As storage services have evolved, each new business model or technology has put forward new requirements for storage architecture, performance, availability, and stability. With the current rise of cloud-native technology, we must ask: what types of storage services will be needed to support cloud-native applications?

This is the first in a series of articles that explore the concepts, characteristics, requirements, principles, and usage of cloud-native storage services. These articles will also introduce case studies and discuss the new opportunities and challenges posed by cloud-native storage technology.

"There is no such thing as a 'stateless' architecture" - Jonas Boner

This article focuses on the basic concepts of cloud-native storage and common storage solutions.

To understand cloud-native storage, we must first familiarize ourselves with cloud-native technology. According to the definition given by the Cloud Native Computing Foundation (CNCF):

Cloud-native technology empowers organizations to build and run scalable applications in modern, dynamic environments, such as public, private, and hybrid clouds. Examples of cloud-native technology include containers, service meshes, microservices, immutable infrastructure, and declarative APIs.

These techniques enable us to build loosely coupled systems that are highly fault-tolerant and easy to manage and observe. When used together with robust automation solutions, cloud-native technology allows engineers to make high-impact changes frequently, predictably, and effortlessly.

In a nutshell, there is no clear dividing line between cloud-native applications and traditional applications. Cloud-native technology describes a technological tendency. Basically, the more of the features below an application offers, the more cloud-native it is:

A cloud-native application is a collection of application features and capabilities. Once realized, these features and capabilities can greatly improve the application's core qualities, such as availability, stability, scalability, and performance, and strengthening them is the current technological trend. Cloud-native applications are transforming various application fields and profoundly changing all aspects of application services. Storage is one of the most basic conditions for running an application, so many new storage requirements have emerged as services have become cloud native.

The concept of cloud-native storage originated from cloud-native applications. Cloud-native applications require storage with matching cloud-native capabilities, or in other words, storage with cloud-native tendencies.

The availability of a storage system represents users' ability to access data in the event of system failure caused by storage media, transmission, controllers, or other system components. Availability defines how data can still be accessed when a system fails and how access can be rerouted through other accessible nodes when some nodes become unavailable.

It also defines the recovery time objective (RTO) in the event of failure, which is the target amount of time between the occurrence of a failure and the recovery of services. Availability is typically expressed as a percentage: the proportion of total running time during which the application is available (for example, 99.9%). It is commonly derived from MTTF (mean time to failure) and MTTR (mean time to repair).
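As a worked example, steady-state availability is commonly computed as A = MTTF / (MTTF + MTTR). The sketch below, using illustrative numbers only, computes A and the yearly downtime that a 99.9% target allows (leap years are ignored).

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def availability(mttf_hours: float, mttr_hours: float) -> float:
    """Steady-state availability: the fraction of time the service is up."""
    return mttf_hours / (mttf_hours + mttr_hours)


def yearly_downtime_hours(availability_ratio: float) -> float:
    """Downtime budget per year implied by an availability target."""
    return (1 - availability_ratio) * HOURS_PER_YEAR


if __name__ == "__main__":
    # A component that fails on average every 999 hours and takes 1 hour to repair.
    a = availability(mttf_hours=999.0, mttr_hours=1.0)
    print(f"availability = {a:.3%}")
    print(f"99.9% allows about {yearly_downtime_hours(0.999):.2f} h of downtime per year")
```

Read the other way round, "three nines" leaves a budget of under nine hours of downtime per year, which is why shortening MTTR (faster failover and rerouting) matters as much as lengthening MTTF.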

Storage scalability primarily represents the ability to:

Storage performance is usually measured by two metrics:

Cloud-native applications are widely used in scenarios such as big data analysis and AI. These high-throughput, large-I/O scenarios impose demanding requirements on storage. In addition, features of cloud-native applications such as rapid resizing and extreme scaling test the ability of storage services to cope with sudden traffic peaks.
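The two performance metrics are not named above; the pair most often quoted is IOPS (I/O operations per second) and throughput (bytes per second), and this sketch assumes that pair. For a fixed I/O size they are linked by throughput = IOPS × I/O size, which is why small random-I/O workloads and large-I/O workloads stress storage so differently. The numbers are illustrative only.

```python
def throughput_mib_s(iops: float, io_size_kib: float) -> float:
    """Throughput (MiB/s) delivered at a given IOPS and fixed I/O size (KiB)."""
    return iops * io_size_kib / 1024


def iops_needed(target_mib_s: float, io_size_kib: float) -> float:
    """IOPS required to reach a target throughput at a fixed I/O size."""
    return target_mib_s * 1024 / io_size_kib


if __name__ == "__main__":
    # Small random I/O, typical of databases: many operations, modest bandwidth.
    print(throughput_mib_s(iops=20_000, io_size_kib=4))    # 78.125
    # Large sequential I/O, typical of big data and AI: fewer operations, far more bandwidth.
    print(throughput_mib_s(iops=2_000, io_size_kib=1024))  # 2000.0
    print(iops_needed(target_mib_s=500, io_size_kib=128))  # 4000.0
```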

For storage services, consistency refers to the ability to access new data after it is submitted or existing data is updated. Based on the latency of data consistency, storage consistency can be divided into two types: eventual consistency and strong consistency.

Services vary in their sensitivity to storage consistency. Applications such as databases, which have strict requirements for the accuracy and timeliness of underlying data, require strong consistency.
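The difference between the two consistency models can be shown with a toy primary/replica store in which replication to the replica is delayed until an explicit sync. The class is hypothetical and ignores failures and concurrency; it only illustrates what a reader may observe under each model.

```python
from __future__ import annotations


class ReplicatedStore:
    """Toy primary/replica key-value store (hypothetical, for illustration only).

    Writes land on the primary immediately and are queued for the replica;
    the queue is applied only when sync() is called, which simulates replication lag.
    """

    def __init__(self) -> None:
        self.primary: dict[str, str] = {}
        self.replica: dict[str, str] = {}
        self.pending: list[tuple[str, str]] = []

    def write(self, key: str, value: str) -> None:
        self.primary[key] = value
        self.pending.append((key, value))  # replicated asynchronously

    def read_strong(self, key: str) -> str | None:
        # Strong consistency: always served from the up-to-date primary.
        return self.primary.get(key)

    def read_eventual(self, key: str) -> str | None:
        # Eventual consistency: served from the replica, which may lag behind.
        return self.replica.get(key)

    def sync(self) -> None:
        # Apply queued writes in order; afterwards the replica has converged.
        for key, value in self.pending:
            self.replica[key] = value
        self.pending.clear()


if __name__ == "__main__":
    store = ReplicatedStore()
    store.write("balance", "100")
    print(store.read_strong("balance"))    # 100
    print(store.read_eventual("balance"))  # None (stale read before replication)
    store.sync()
    print(store.read_eventual("balance"))  # 100
```

A database that must never return the stale `None` above needs the strong read path, at the cost of waiting for (or routing to) the authoritative copy.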

Multiple factors can influence data persistence:

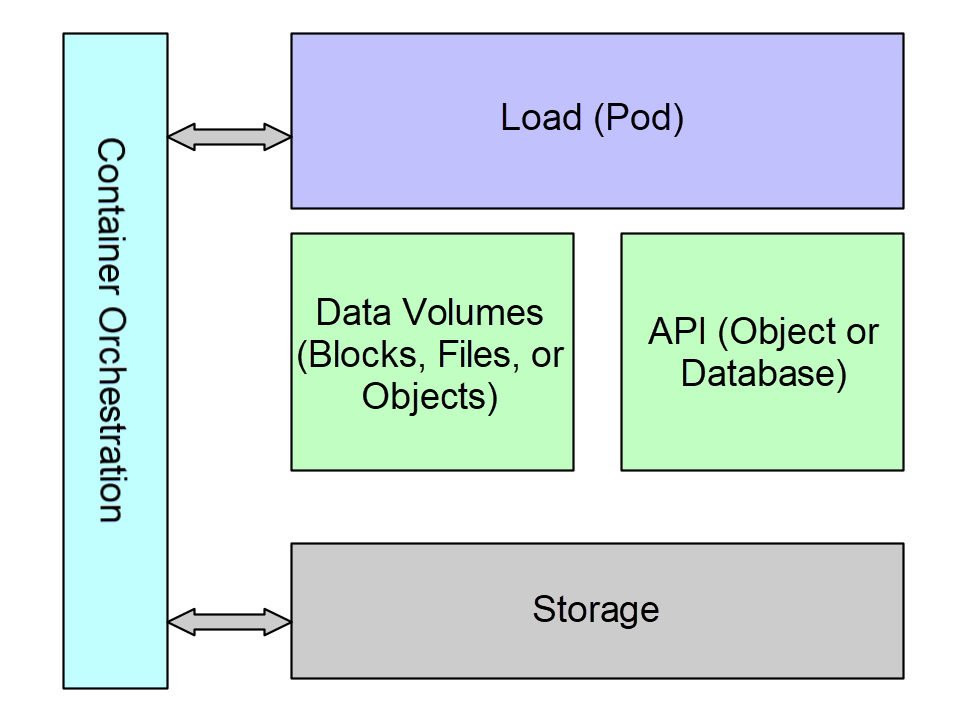

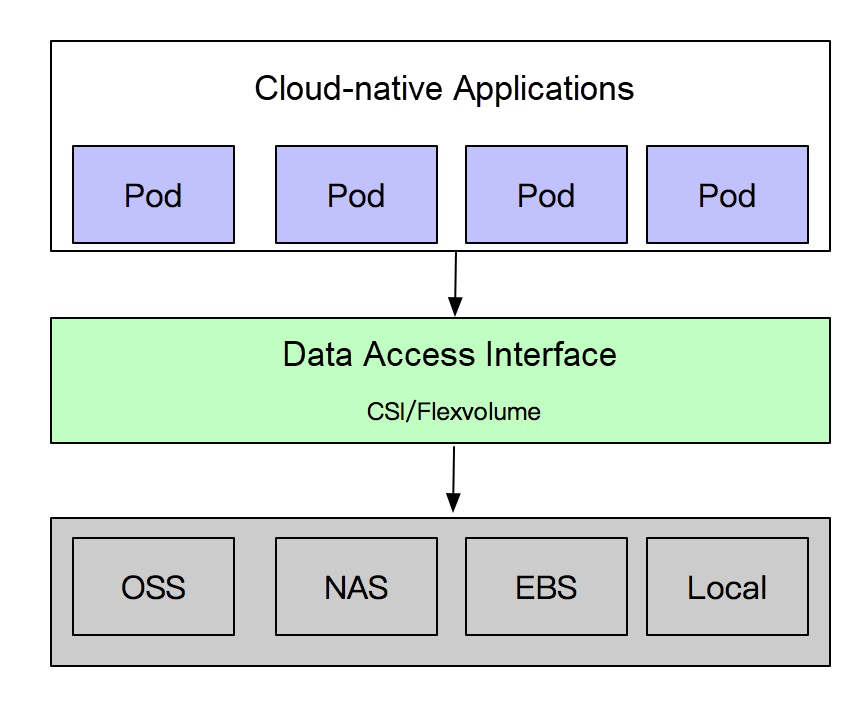

In cloud-native application systems, applications can access storage services in various ways. Storage access can be divided into two modes based on interface type: data volume and API.

Data volume: The storage services are mapped to blocks or a file system for direct access by applications. Applications consume them much as they read and write files in a local directory of the operating system. For example, you can mount block storage or file storage on the local host and access the data volume just like local files.
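Because a data volume looks like a local directory, the application uses nothing but ordinary file I/O. A minimal sketch, assuming the volume is mounted at some path inside the container; a temporary directory stands in for that mount point here, and the file name is hypothetical.

```python
import os
import tempfile


def save_report(mount_path: str, name: str, content: str) -> str:
    """Write a file into a mounted data volume using ordinary file I/O."""
    path = os.path.join(mount_path, name)
    with open(path, "w", encoding="utf-8") as f:
        f.write(content)
    return path


def load_report(mount_path: str, name: str) -> str:
    """Read the file back; the application never sees the storage behind the mount."""
    with open(os.path.join(mount_path, name), encoding="utf-8") as f:
        return f.read()


if __name__ == "__main__":
    # In a pod this would be the volume's mount path; a temp dir stands in for it.
    with tempfile.TemporaryDirectory() as mount_path:
        save_report(mount_path, "daily.txt", "ok\n")
        print(load_report(mount_path, "daily.txt"), end="")
```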

API: Some types of storage cannot be mounted and accessed as data volumes and must instead be accessed through API operations. For example, database storage, KV storage, and Object Storage Service (OSS) are read and written through APIs.

Note: OSS generally provides file read/write capabilities to external users through RESTful APIs. However, it can also be mounted as a user-space file system and used just like block or file storage mounted as data volumes.
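API access, by contrast, addresses each object by bucket and key over HTTP. The sketch below only builds the requests with Python's standard `urllib.request`; it does not send them. The bucket name and endpoint are hypothetical, and real OSS requests must also carry an authorization signature (usually computed by an SDK), which is omitted here.

```python
import urllib.parse
import urllib.request


def object_url(bucket: str, endpoint: str, key: str) -> str:
    # Virtual-hosted style URL: https://<bucket>.<endpoint>/<key>
    return f"https://{bucket}.{endpoint}/{urllib.parse.quote(key)}"


def build_put(bucket: str, endpoint: str, key: str, data: bytes) -> urllib.request.Request:
    """Build (but do not send) an HTTP PUT that uploads an object."""
    return urllib.request.Request(
        object_url(bucket, endpoint, key),
        data=data,
        method="PUT",
        headers={"Content-Type": "text/plain"},
    )


def build_get(bucket: str, endpoint: str, key: str) -> urllib.request.Request:
    """Build (but do not send) an HTTP GET that downloads an object."""
    return urllib.request.Request(object_url(bucket, endpoint, key), method="GET")


if __name__ == "__main__":
    # Hypothetical bucket and endpoint; signing is required before sending for real.
    req = build_put("examplebucket", "oss-cn-hangzhou.aliyuncs.com", "reports/daily.txt", b"ok")
    print(req.get_method(), req.full_url)
```

The key `reports/daily.txt` looks like a path, but object storage treats it as a flat name; there is no directory to open or lock, which is part of why the two access modes suit different workloads.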

The following table lists the advantages and disadvantages of each storage interface:

| Interface | Advantages | Disadvantages |
| --- | --- | --- |
| Block | High availability, low latency, and high throughput for individual applications | Weak elastic scaling and poor data sharing |
| File system | Multi-load data sharing and high multi-load throughput | Poor file lock performance when sharing data |
| OSS | High availability, large capacity, multi-load data sharing, and high multi-load throughput | High latency |

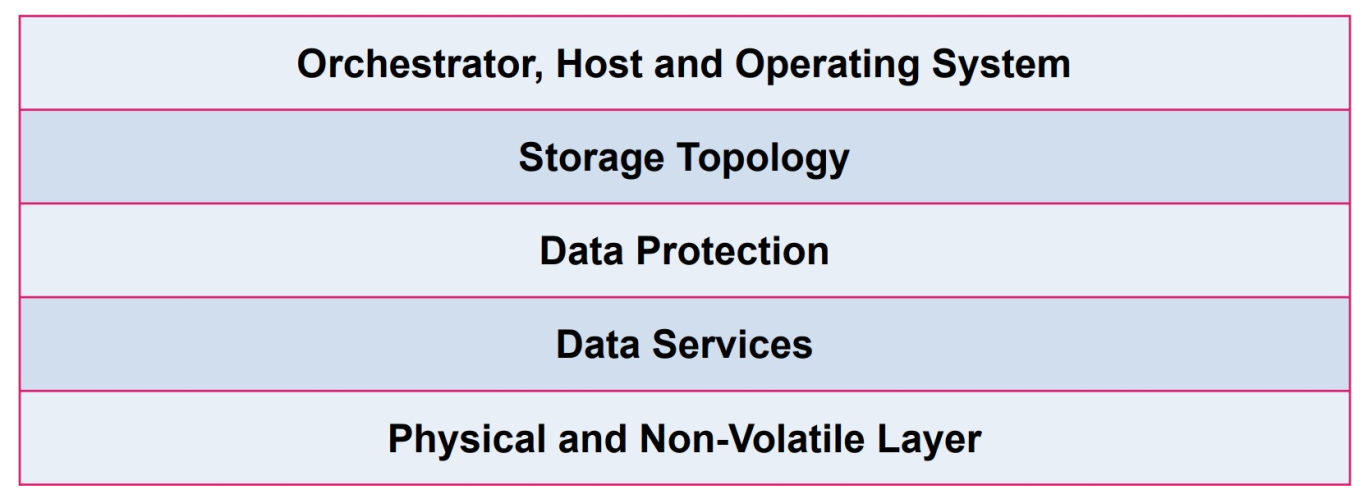

This layer defines the interfaces for external access to the stored data, namely, the form in which storage is presented when applications access data. As described in the preceding section, access methods can be divided into data volume access and API access. This layer is the key to storage orchestration when developing container services. The agility, operability, and scalability of cloud-native storage are implemented on this layer.

This layer defines the topology and architectural design of the storage system. It indicates how different parts of the system, such as storage devices, computing nodes, and data, are related and connected. The topology affects many properties of the storage system, so its design requires careful thought.

The storage topology can be centralized, distributed, or hyper-converged.

This layer defines how to protect data through redundancy. Redundancy is essential because it allows a storage system to recover data in the event of a failure. Generally, users can choose from several data protection solutions:
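One widely used redundancy technique, chosen here for illustration since the specific solutions are not listed, is XOR parity as used in RAID 5: one parity block lets the system rebuild any single lost data block. A minimal sketch with hypothetical function names:

```python
from __future__ import annotations

from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if not blocks or len({len(b) for b in blocks}) != 1:
        raise ValueError("need one or more blocks of equal size")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(data_blocks: list[bytes]) -> bytes:
    """Parity block, stored on a different disk from the data blocks it protects."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """XOR of all surviving data blocks and the parity yields the lost block."""
    return xor_blocks(surviving + [parity])


if __name__ == "__main__":
    data = [b"AAAA", b"BBBB", b"CCCC"]
    parity = make_parity(data)
    # Simulate losing the disk that held b"BBBB".
    print(rebuild_missing([data[0], data[2]], parity))  # b'BBBB'
```

Parity costs one extra block per stripe instead of full copies, but it tolerates only one lost block per stripe; multi-replica designs trade more capacity for simpler and faster recovery.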

Data services supplement core storage capabilities with additional storage services, such as storage snapshots, data recovery, and data encryption.

The supplementary capabilities provided by data services are precisely what cloud-native storage requires. Cloud-native storage achieves agility, stability, and scalability by integrating a variety of data services.

This layer defines the actual physical hardware that stores the data. The choice of physical hardware affects the overall system performance and the continuity of stored data.

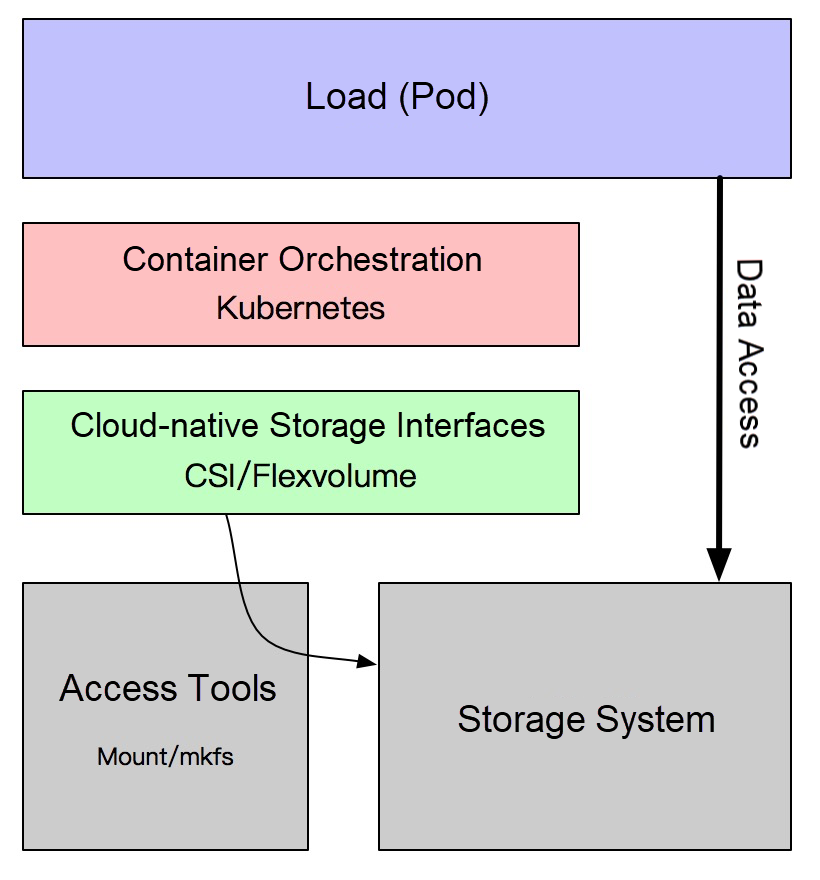

Cloud-native storage must be containerized. In container service scenarios, a management or application orchestration system is usually required, and the interaction between the orchestration system and the storage system establishes the correlation between workloads and stored data.

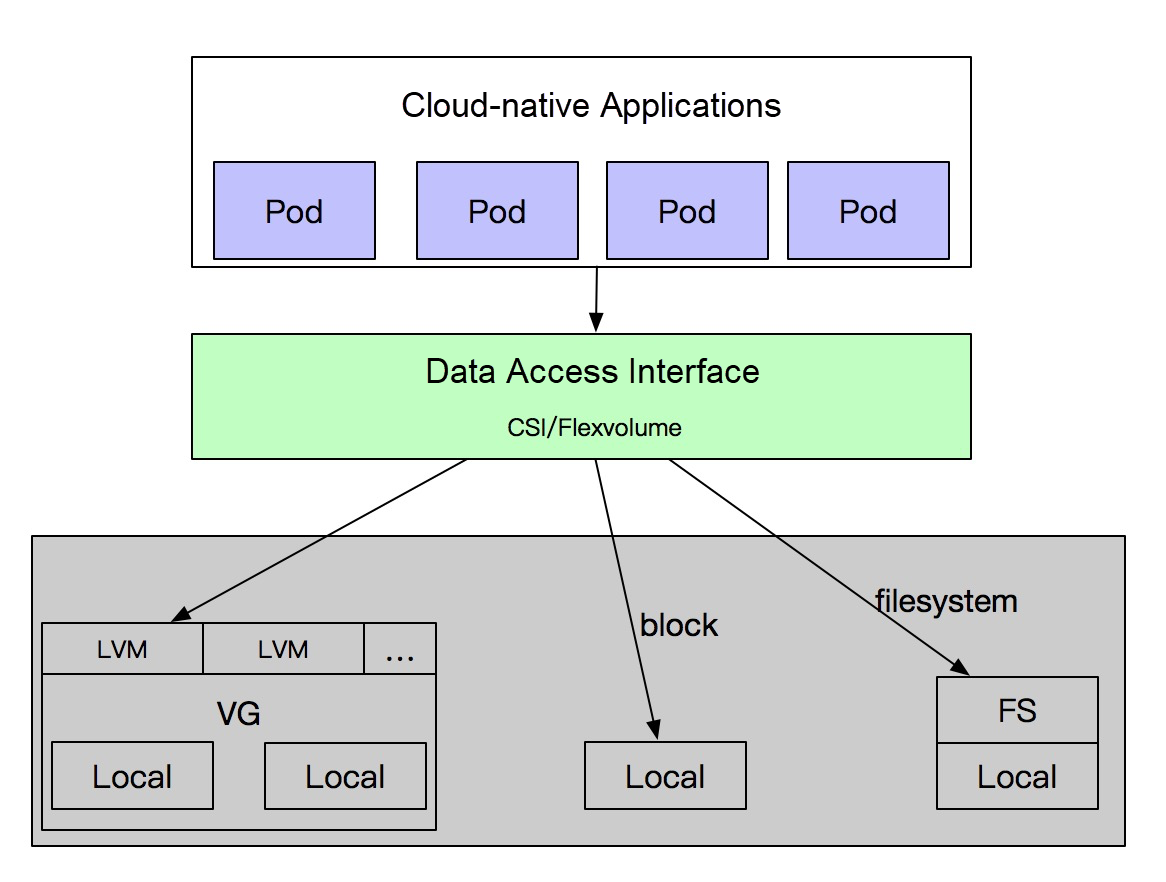

In the above diagram:

The orchestration system prepares the storage resources defined by the application load. It calls the control-plane interfaces of the storage system to perform the operations that connect application loads to, and disconnect them from, the storage services. Once connected to the storage system, an application load accesses its data directly through the data plane.
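The interface between the orchestration system and the storage system is not named above; in Kubernetes it is typically CSI, whose calls include CreateVolume and ControllerPublishVolume on the control plane and NodeStageVolume and NodePublishVolume on the node. The sketch below models only the ordering of those phases as a state machine. The class names are hypothetical, and a real driver exposes these steps as gRPC services.

```python
from enum import Enum


class VolumeState(Enum):
    CREATED = "created"      # control plane: CreateVolume
    PUBLISHED = "published"  # control plane: ControllerPublishVolume (attach to a node)
    STAGED = "staged"        # node: NodeStageVolume (e.g. format and global mount)
    MOUNTED = "mounted"      # node: NodePublishVolume (mount into the pod)


# Allowed forward transitions, in the order an orchestrator drives them.
_NEXT = {
    None: VolumeState.CREATED,
    VolumeState.CREATED: VolumeState.PUBLISHED,
    VolumeState.PUBLISHED: VolumeState.STAGED,
    VolumeState.STAGED: VolumeState.MOUNTED,
}


class VolumeLifecycle:
    """Hypothetical model of the order in which an orchestrator calls a storage driver."""

    def __init__(self, volume_id: str) -> None:
        self.volume_id = volume_id
        self.state = None
        self.history = []

    def advance(self, target: VolumeState) -> None:
        expected = _NEXT.get(self.state)
        if target is not expected:
            raise RuntimeError(f"cannot move {self.volume_id} from {self.state} to {target}")
        self.state = target
        self.history.append(target.value)

    def data_plane_ready(self) -> bool:
        # Only after the last control step can the workload read and write data directly.
        return self.state is VolumeState.MOUNTED


if __name__ == "__main__":
    vol = VolumeLifecycle("vol-1")
    for step in VolumeState:  # definition order: CREATED, PUBLISHED, STAGED, MOUNTED
        vol.advance(step)
    print(vol.history, vol.data_plane_ready())
```

Disconnecting a workload runs the same steps in reverse (NodeUnpublishVolume, NodeUnstageVolume, ControllerUnpublishVolume, DeleteVolume), which the sketch leaves out.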

Every public cloud service provider offers a variety of cloud resources, including various cloud storage services. Take Alibaba Cloud for example. It provides basically any type of storage required by business applications, including object storage, block storage, file storage, and databases, just to name a few. Public cloud storage benefits from economies of scale: public cloud providers operate on a large scale, allowing them to keep prices low while making gigantic investments in R&D and O&M. In addition, public cloud storage can easily meet the needs of businesses for stability, performance, and scalability.

With the development of cloud-native technology, public cloud providers are competing to transform and adapt their cloud services for the cloud-native environment and offer more agile and efficient services to meet the needs of cloud-native applications. Alibaba Cloud's storage services are also optimized in many ways for compatibility with cloud-native applications. The CSI storage driver implemented by Alibaba Cloud's container service team seamlessly connects data interfaces across cloud-native applications and the storage services. The underlying storage is imperceptible to users when they consume the storage resources, which allows them to focus on business development.
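From the application's side, consuming cloud disks through a CSI driver usually comes down to a StorageClass and a PersistentVolumeClaim. The sketch below generates both as JSON, which `kubectl apply -f` accepts as well as YAML. The provisioner name and the `type` value are assumptions based on Alibaba Cloud's disk CSI plugin and should be checked against its documentation; the object names and size are illustrative.

```python
import json

# Assumed provisioner name of the Alibaba Cloud disk CSI plugin; verify before use.
DISK_PROVISIONER = "diskplugin.csi.alibabacloud.com"


def disk_storage_class(name: str, disk_type: str) -> dict:
    """StorageClass that asks the CSI driver to create cloud disks on demand."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": DISK_PROVISIONER,
        "parameters": {"type": disk_type},  # e.g. "cloud_essd" (assumed value)
        # Create the disk only once a pod is scheduled, so it lands in the pod's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


def volume_claim(name: str, storage_class: str, size: str) -> dict:
    """PersistentVolumeClaim through which an application requests storage."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # mounted read-write by a single node
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            disk_storage_class("essd-demo", "cloud_essd"),
            volume_claim("data-pvc", "essd-demo", "20Gi"),
        ],
    }
    print(json.dumps(manifest, indent=2))
```

The application only names the claim; which disk backs it, and where, is decided by the driver, which is what keeps the underlying storage out of the developer's way.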

Advantages:

Disadvantage:

In many private cloud environments, business users purchase commercial storage services to achieve high data reliability. These solutions provide highly available, efficient, and convenient storage services, along with guaranteed O&M services and post-production support. As private cloud storage providers recognize the growing popularity of cloud-native applications, they are providing users with comprehensive and mature cloud-native storage interface implementations.

Advantages:

Disadvantages:

Many companies choose to build their own storage services for business data with low service level requirements. Business users can choose among currently available open-source storage solutions based on their business needs.

Advantages:

Disadvantages:

Some businesses do not need highly available distributed storage services. Instead, they prefer local storage solutions due to their high performance.

Database services: If users need to achieve high storage I/O performance and low access latency, common block storage services cannot effectively satisfy their needs. In addition, if their applications are designed for high data availability and do not need to retain multiple replicas at the underlying layer, the multi-replica design of distributed storage is a waste of resources.

Storage as a cache: Some applications store unimportant data that can be discarded after the program finishes running. Such workloads also need high storage performance; in essence, the storage serves as a cache. The high availability of cloud disks brings little benefit to such services, and cloud disks have no advantage over local storage in performance or cost.

Therefore, although local disk storage is much weaker than distributed block storage in many key aspects, it still maintains a competitive edge in specific scenarios. Alibaba Cloud provides a local disk storage solution based on NVMe. Its superior performance and lower pricing make it popular among users for specific scenarios.

Alibaba Cloud CSI drivers allow cloud-native applications to access local storage through multiple methods, such as LVM volumes, raw device access to local disks, and local directory mapping. The drivers can also provide high-performance data access, quota management, and IOPS configuration, among other capabilities.

Advantages:

Disadvantages:

With the development of cloud-native technology, the developer community has released some open-source cloud-native storage solutions.

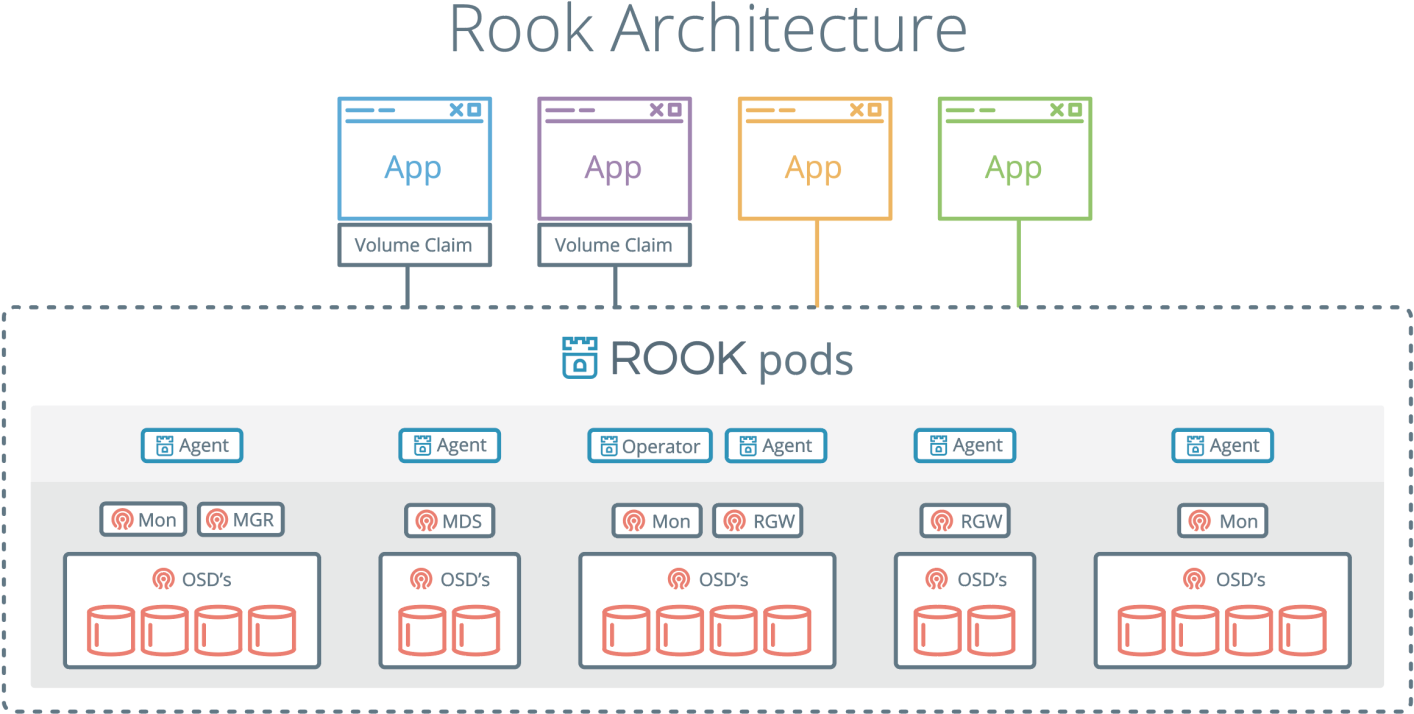

Rook, the first CNCF storage project, is a cloud-native storage solution that integrates distributed storage systems such as Ceph and MinIO. It is designed for simple deployment and management, is deeply integrated with the container service ecosystem, and provides various features that adapt it to cloud-native applications. In terms of implementation, Rook can be seen as an operator that provides Ceph cluster management capabilities. It uses custom resource definitions (CRDs) to deploy and manage storage resources such as Ceph and MinIO.

Rook components:

Rook deploys the Ceph storage service as a service in Kubernetes. The daemon processes, such as MON, OSD, and MGR, are deployed as pods in Kubernetes, while the core components of Rook perform O&M and management operations on the Ceph cluster.

Through Ceph, Rook provides comprehensive storage capabilities and supports object, block, and file storage, allowing users to obtain multiple storage services from one system. The default implementation of the cloud-native storage interface in Rook uses the CSI/Flexvolume driver to connect application services to the underlying storage. Because Rook was initially designed for the Kubernetes ecosystem, it adapts well to containerized applications.

Official Rook documentation: https://rook.io/

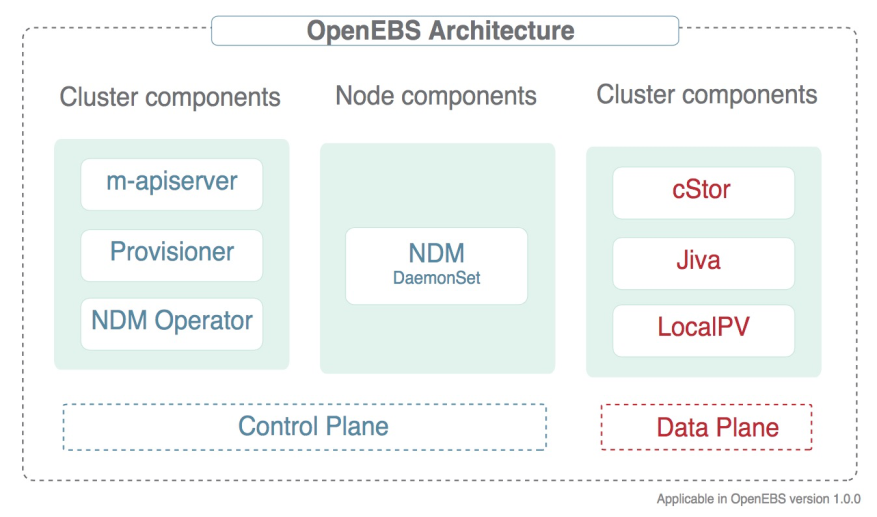

OpenEBS is an open-source implementation that emulates block storage services such as AWS EBS and Alibaba Cloud disks. It is based on container attached storage (CAS): storage follows the same microservices model as applications and is orchestrated through Kubernetes. Architecturally, the controller of each volume is a separate pod that runs on the same node as the application pod, and the volume data is managed by multiple pods.

The architecture comprises the data plane and control plane.

Data plane:

OpenEBS persistent volumes (PVs) are built on Kubernetes PVs and exposed through iSCSI. Data is stored on nodes or in cloud storage. Like Kubernetes PVs, OpenEBS volumes are managed independently of the application life cycle.

OpenEBS volumes provide persistent storage for containers, resilience against system failures, faster access to storage, and snapshot and backup capabilities. OpenEBS also provides a mechanism to monitor usage and enforce QoS policies.

Control plane:

The OpenEBS control plane, Maya, implements hyper-converged OpenEBS and can extend the storage functions provided by a specific container orchestration system, for example by hooking into the Kubernetes scheduling engine.

The OpenEBS control plane is also based on microservices and implements different features, such as storage management, monitoring, and container orchestration plug-ins, through different components.

For more information on OpenEBS, see: https://openebs.io/

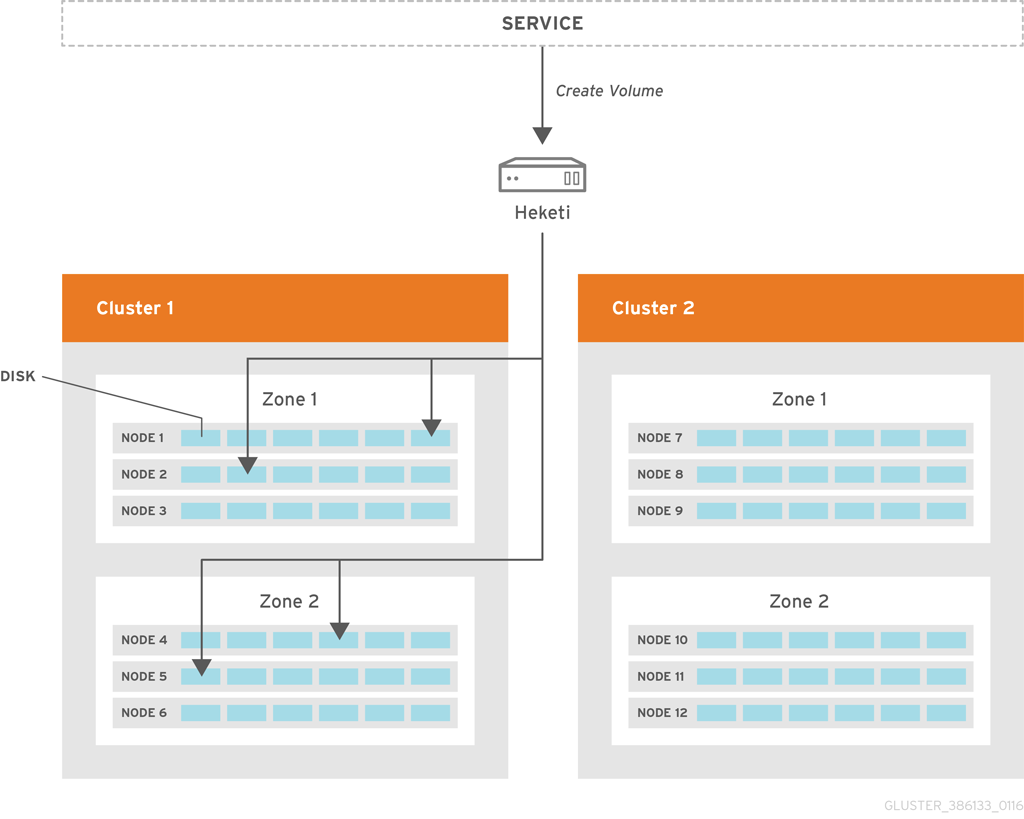

Just as Rook implements the Ceph open-source storage system on a cloud-native orchestration platform (Kubernetes), GlusterFS also has a cloud-native implementation: Heketi. Heketi provides a RESTful API for managing the life cycles of GlusterFS volumes. With Heketi, Kubernetes can dynamically provision any type of persistent volume supported by GlusterFS. Heketi automatically determines the location of each brick in the cluster and ensures that a brick and its replicas are placed in different failure domains. Heketi also supports any number of GlusterFS storage clusters to provide network file storage for cloud services.

With Heketi, administrators no longer need to manage or configure blocks, disks, or storage pools. The Heketi service manages all the system hardware and allocates storage as needed. Any physical storage registered with Heketi must be provided as raw devices, which Heketi then manages by using LVM.
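The failure-domain rule described above can be illustrated with a small placement routine. This is a hypothetical greedy sketch, not Heketi's actual allocation algorithm: it prefers devices with the most free space and never puts two replicas of the same brick in the same zone (failure domain).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Device:
    name: str
    zone: int      # failure domain the device belongs to
    free_gib: int  # free capacity on the raw device


def place_replicas(devices: list[Device], size_gib: int, replicas: int) -> list[Device]:
    """Pick one device per replica so that no two replicas share a failure domain."""
    chosen: list[Device] = []
    used_zones: set[int] = set()
    # Greedy: try the emptiest devices first to spread capacity usage.
    for dev in sorted(devices, key=lambda d: d.free_gib, reverse=True):
        if dev.zone in used_zones or dev.free_gib < size_gib:
            continue
        chosen.append(dev)
        used_zones.add(dev.zone)
        if len(chosen) == replicas:
            return chosen
    raise ValueError("not enough failure domains with free capacity")


if __name__ == "__main__":
    devices = [Device("a1", 1, 500), Device("a2", 1, 800), Device("b1", 2, 300), Device("c1", 3, 100)]
    print([d.name for d in place_replicas(devices, size_gib=200, replicas=2)])  # ['a2', 'b1']
```

Device `a1` has plenty of space but is skipped because zone 1 already holds a replica; losing that zone can then take out at most one copy.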

For more details, see: https://github.com/heketi/heketi

Cloud-native application scenarios impose rigorous requirements for service agility and flexibility. Many require fast container startup and flexible scheduling. To meet these requirements, volumes must be adjusted quickly as pods change.

These requirements demand the following improvements:

Most storage services already have monitoring capabilities at the underlying file system level. However, monitoring for cloud-native data volumes needs to be enhanced. Currently, PV monitoring is inadequate in terms of data dimensions and intensity.

Specific requirements:

Provide more fine-grained monitoring capabilities at the directory level.

Provide more monitoring metrics, including read/write latency, read/write frequency, and I/O distribution.
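Directory-level monitoring amounts to aggregating I/O events by directory rather than by volume. A minimal sketch, assuming some agent already supplies (path, operation, latency) events; the event format and class names are hypothetical.

```python
from __future__ import annotations

import posixpath
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class DirStats:
    reads: int = 0
    writes: int = 0
    latencies_ms: list[float] = field(default_factory=list)

    def mean_latency_ms(self) -> float:
        return sum(self.latencies_ms) / len(self.latencies_ms) if self.latencies_ms else 0.0


def aggregate(events: list[tuple[str, str, float]]) -> dict[str, DirStats]:
    """Group (path, op, latency_ms) events by the directory containing each file."""
    stats: defaultdict[str, DirStats] = defaultdict(DirStats)
    for path, op, latency_ms in events:
        s = stats[posixpath.dirname(path)]
        if op == "read":
            s.reads += 1
        elif op == "write":
            s.writes += 1
        else:
            raise ValueError(f"unknown operation {op!r}")
        s.latencies_ms.append(latency_ms)
    return dict(stats)


if __name__ == "__main__":
    events = [("/vol/a/x", "read", 2.0), ("/vol/a/y", "write", 4.0), ("/vol/b/z", "read", 1.0)]
    for directory, s in sorted(aggregate(events).items()):
        print(directory, s.reads, s.writes, s.mean_latency_ms())
```

Counts per directory give read/write frequency, and keeping the raw latencies allows percentiles or histograms (I/O distribution) to be computed later.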

Big data computing scenarios usually involve a large number of applications accessing storage concurrently, which makes storage performance a key bottleneck that determines the efficiency of application operations.

Specific requirements:

Shared storage allows multiple pods to share data, which ensures unified data management and access by different applications. However, in multi-tenant scenarios, it is imperative to isolate the storage of different tenants.

The underlying storage provides strong isolation between directories, which makes file-system-level isolation possible among different tenants of a shared file system.

The container orchestration layer implements orchestration isolation based on namespaces and PSP policies. This prevents tenants from accessing the volume services of other tenants during application deployment.
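At the path level, isolating tenants on a shared file system means every tenant-relative path must resolve inside that tenant's own directory. The sketch below shows that check with `posixpath`; the directory layout (`<share_root>/<tenant>`) is an assumption, and because it works on strings only (symlinks are not resolved), it complements rather than replaces the isolation enforced by the underlying storage.

```python
import posixpath


def resolve_tenant_path(share_root: str, tenant: str, requested: str) -> str:
    """Map a tenant-relative path onto the shared file system, refusing escapes."""
    tenant_root = posixpath.normpath(posixpath.join(share_root, tenant))
    # Treat the request as relative to the tenant root, then collapse "." and "..".
    candidate = posixpath.normpath(posixpath.join(tenant_root, requested.lstrip("/")))
    # commonpath compares whole path components, so "/share/tenant-ab" does not match "/share/tenant-a".
    if posixpath.commonpath([tenant_root, candidate]) != tenant_root:
        raise PermissionError(f"{requested!r} escapes the directory of tenant {tenant!r}")
    return candidate


if __name__ == "__main__":
    print(resolve_tenant_path("/share", "tenant-a", "logs/app.log"))  # /share/tenant-a/logs/app.log
    try:
        resolve_tenant_path("/share", "tenant-a", "../tenant-b/secret")
    except PermissionError as err:
        print("denied:", err)
```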
