
Written by Ziguan. Edited by Yang Zhongbao, server development engineer at Beijing Haizhixingtu Technology Co., Ltd., big data enthusiast, and Spark Chinese community volunteer.

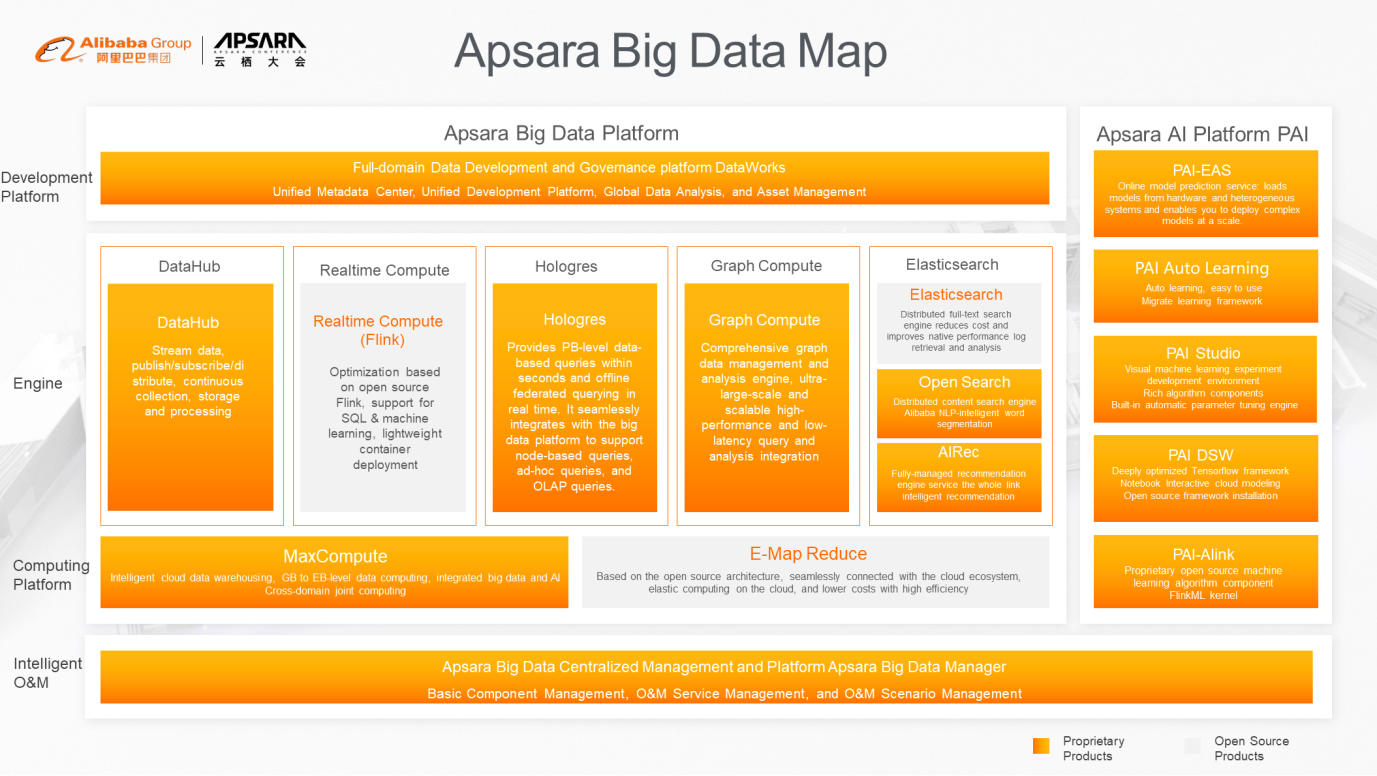

First, let's have a quick look at the Apsara big data platform, hereinafter referred to as the Apsara platform. The Apsara platform is composed of AI-PAI (a machine learning and deep learning platform) and the big data platform. In addition to E-MapReduce (EMR), engines such as MaxCompute, DataHub, Realtime Compute, and Graph Compute are also available.

As shown in the preceding figure, the orange parts indicate computing engines or platforms developed by Alibaba Cloud, and the gray parts indicate open-source computing engines or platforms. EMR is an important open-source component of the Apsara system.

This article has three parts:

1) Data lakes

2) EMR data lake solution

3) Customer case studies

The data lake concept was proposed 15 years ago and has become extremely popular in the last two or three years. According to Gartner's Magic Quadrant, data lake technology has great investment and exploration value.

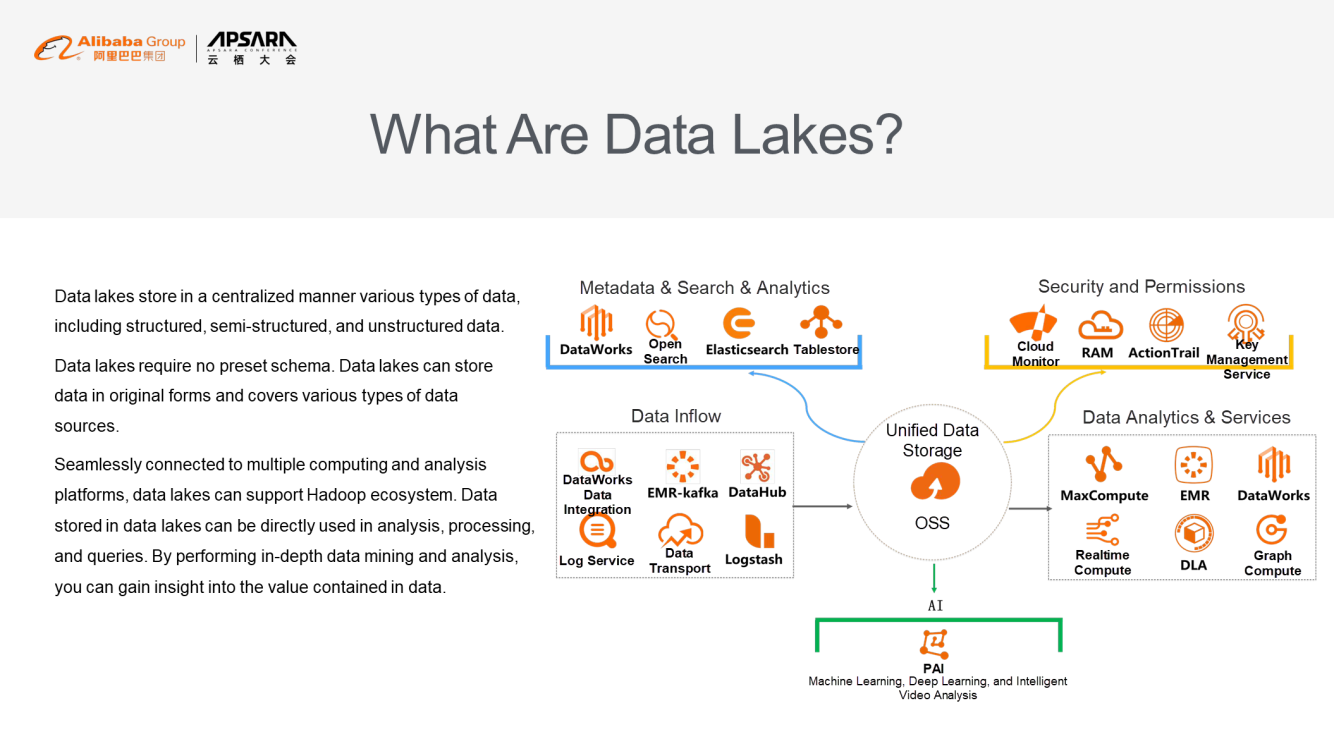

What is a data lake? Previously, we used data warehouses to manage structured data. After the rise of Hadoop, large amounts of structured and unstructured data were stored in HDFS. However, as data accumulates, some of it has no suitable application scenario at the time it is collected. We therefore store the data first and consider development and mining when business needs arise.

As data volume grows, we may use storage systems such as OSS (object storage) and HDFS for unified storage. We then choose among different computing scenarios, such as ad hoc queries, offline computing, real-time computing, machine learning, and deep learning, and within each scenario we may still select different engines. These scenarios in turn need unified operations, such as monitoring, authorization, auditing, and account management.

The first part is data acquisition (the leftmost section in the figure), which mainly acquires data from relational databases. User data flows into unified storage, and different computing services process and compute on it. The computing results are then fed to the AI analysis platform for machine learning or deep learning, and finally the results are used for business purposes. Capabilities such as search and metadata management add value to the data. In addition to computing and storage, a series of control and audit measures are required.

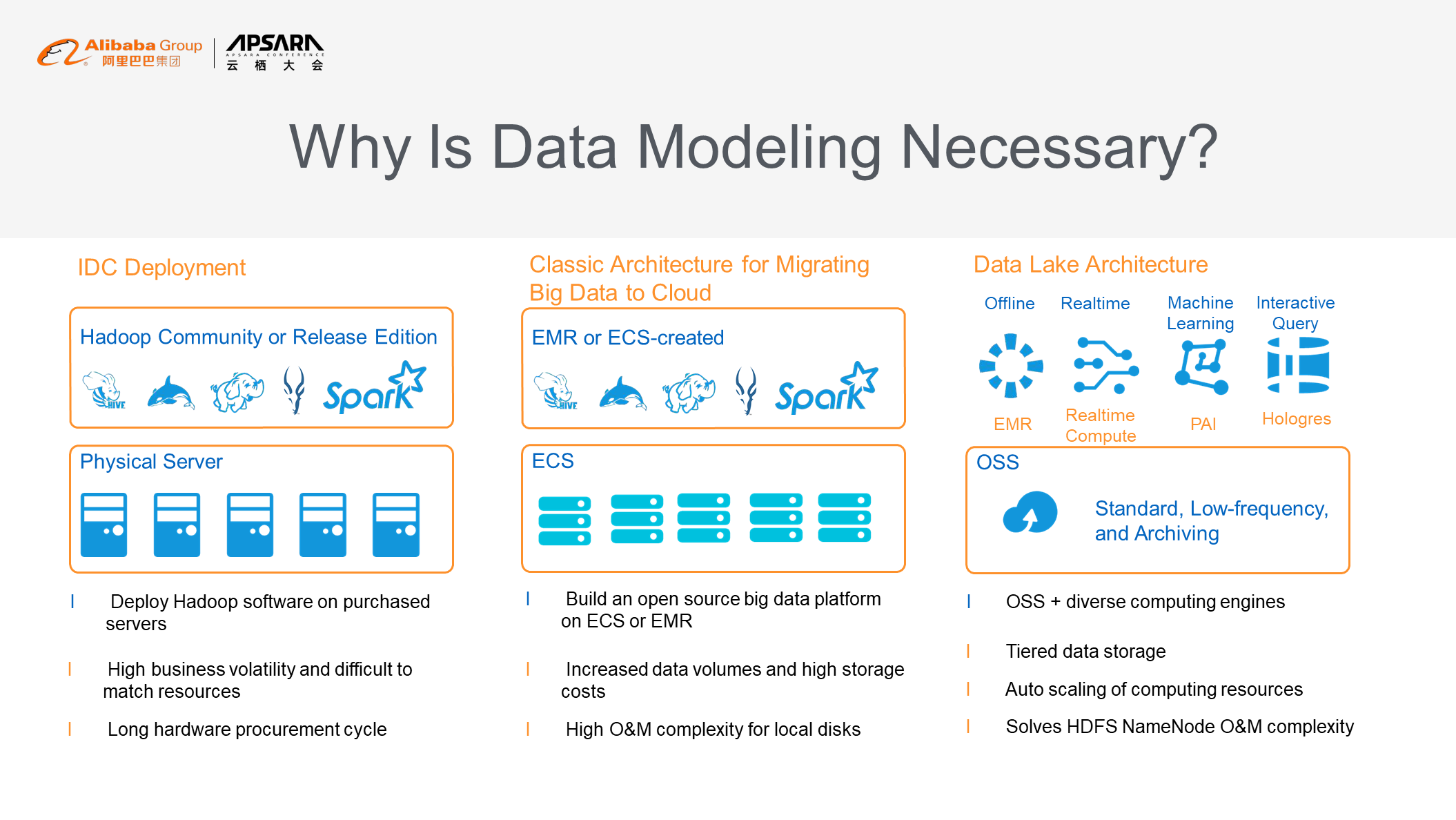

It has been more than 10 years since the birth of big data technology. In the beginning, everyone built open-source software in their own IDCs. With the continuous growth of the business and the rapid accumulation of data, business traffic fluctuates very quickly and may even surge explosively.

The procurement cycle of on-premises IDCs is too long to meet the needs of rapid business growth. During the day, most of the computing tasks are ad hoc queries. At night, you may need to scale up some resources for offline report computing. These scenarios make it difficult to match computing power in the IDC model.

About five or six years ago, a large number of enterprises began to migrate their business to the cloud. As business data continues to grow, enterprises can quickly add instances through the supply chain capabilities of the cloud. However, user-created Hadoop clusters and EMR clusters on the cloud also cause some problems. In essence, they still use Hadoop Distributed File System (HDFS), so the storage cost increases linearly with data growth. When you also use local disks on the cloud, the O&M process becomes complicated.

In large-scale clusters (clusters with hundreds or thousands of servers), disk failures are common, and dealing with them is quite challenging. Therefore, the data lake architecture is gradually becoming centered on OSS. The tiered storage capability of OSS allows storing data in different ways and at different costs. At the same time, the O&M of the HDFS NameNode is very complicated in HA scenarios. When more than 100 clusters are involved, keeping the NameNode stable becomes a great challenge that can take a lot of effort and manpower, and maintaining an HA architecture may not solve this problem in the long run. OSS is another option: its cloud storage architecture avoids these problems of the HDFS architecture.

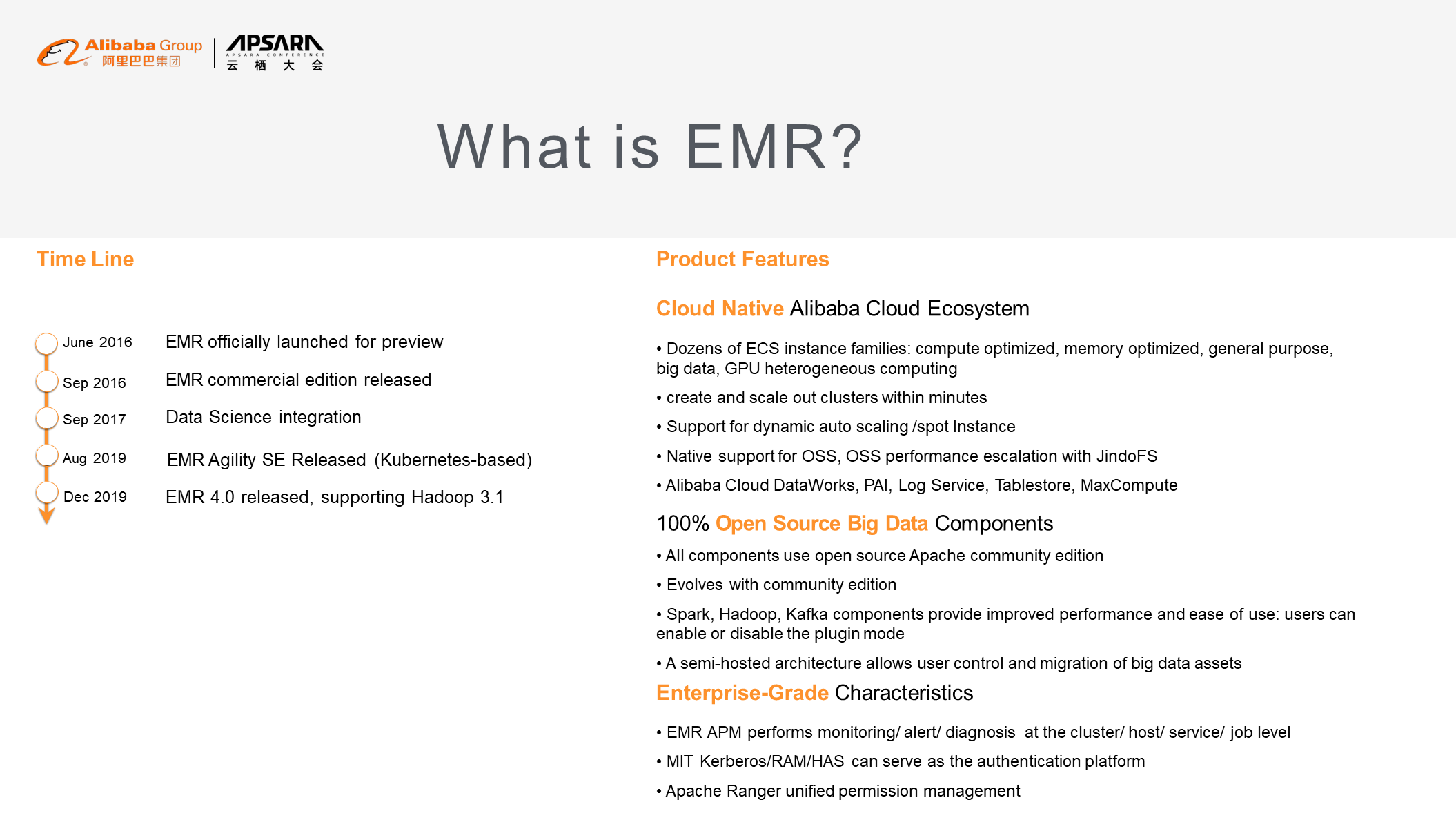

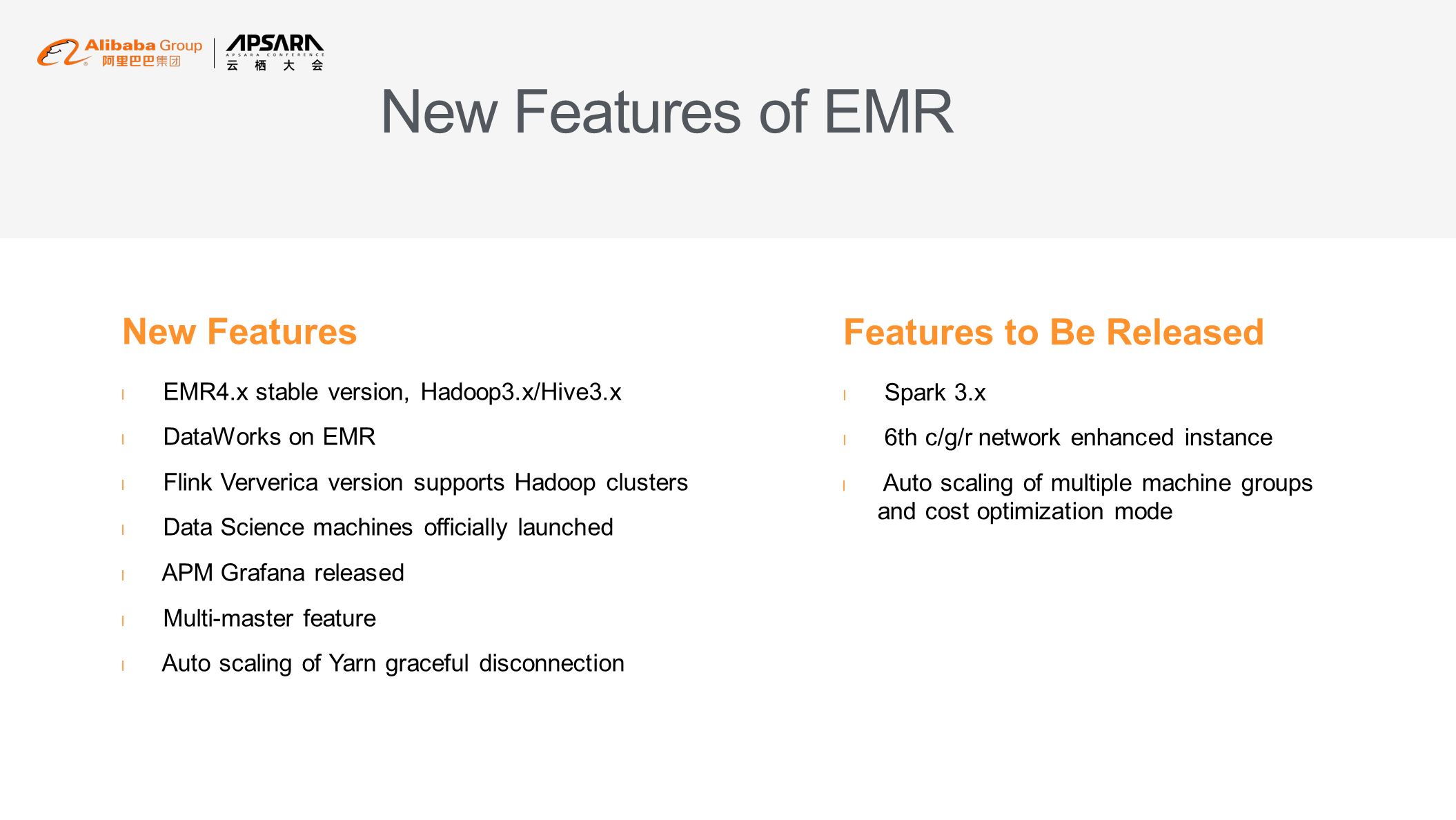

Alibaba Cloud E-MapReduce is built on the Alibaba Cloud ecosystem, uses 100% open-source components, and provides enterprises with stable and highly reliable big data services. EMR was initially released in June 2016, and its latest version is 4.4. With EMR, you can choose from more than 10 types of ECS instances and create auto scaling clusters in minutes.

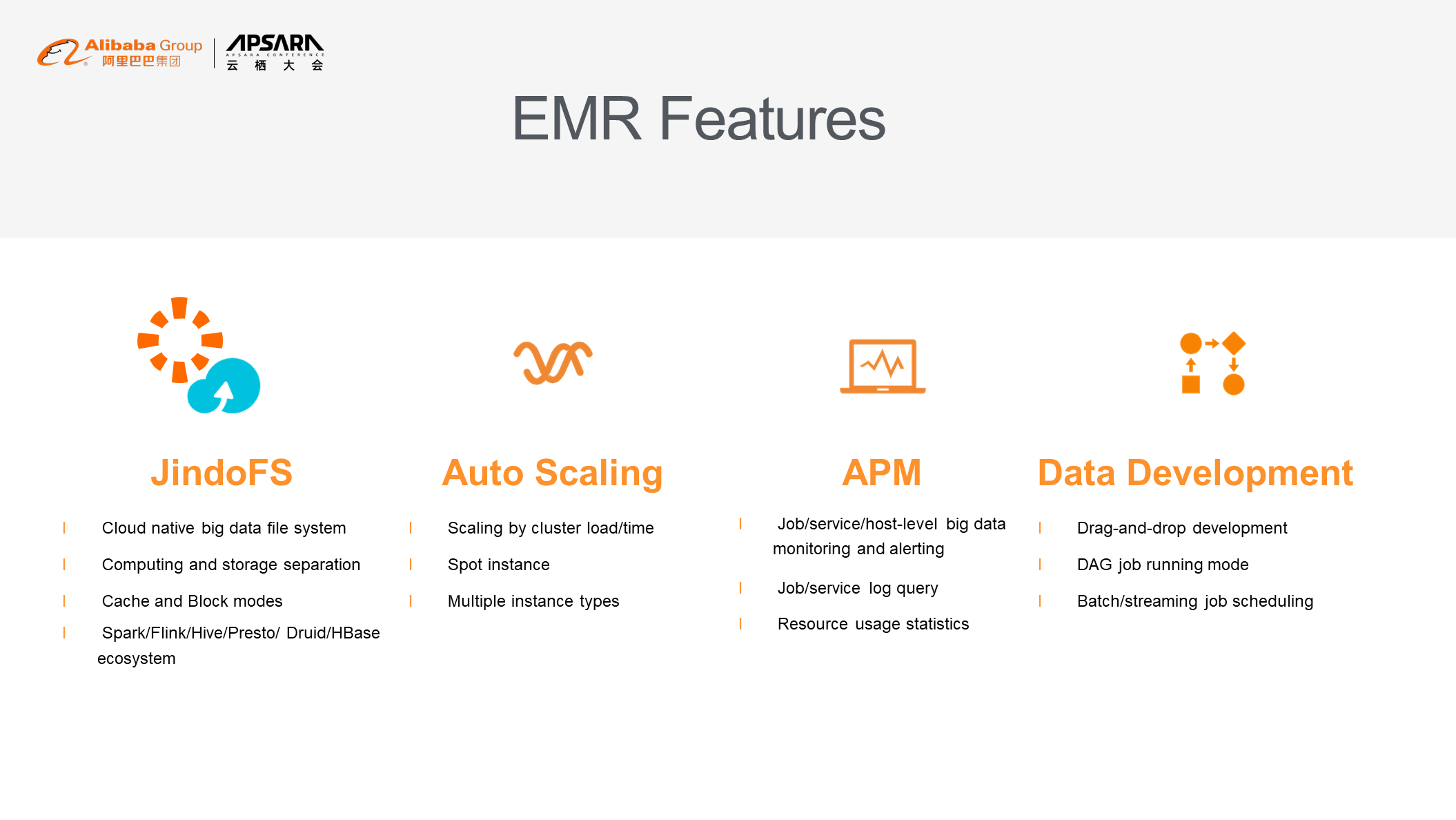

EMR supports OSS, and its self-developed JindoFS greatly improves the performance of OSS. At the same time, EMR integrates with the Alibaba Cloud ecosystem. For example, DataWorks and PAI can be seamlessly connected to EMR. You can also use EMR as a computing engine to compute on data held in storage services such as Log Service and MaxCompute. All EMR components are Apache open-source versions. As the community versions are upgraded, the EMR team makes a series of optimizations and improvements in application and performance for components such as Spark, Hadoop, and Kafka.

EMR adopts a semi-hosted architecture. Users can log on to the ECS nodes in the cluster and manage these servers themselves, which provides an experience very similar to that of an on-premises data center. EMR also offers a series of enterprise-grade features, including APM-style alerting and diagnosis at the host, service, and job levels. It supports MIT Kerberos, RAM, and HAS as authentication platforms, and uses Ranger as a unified permission management platform.

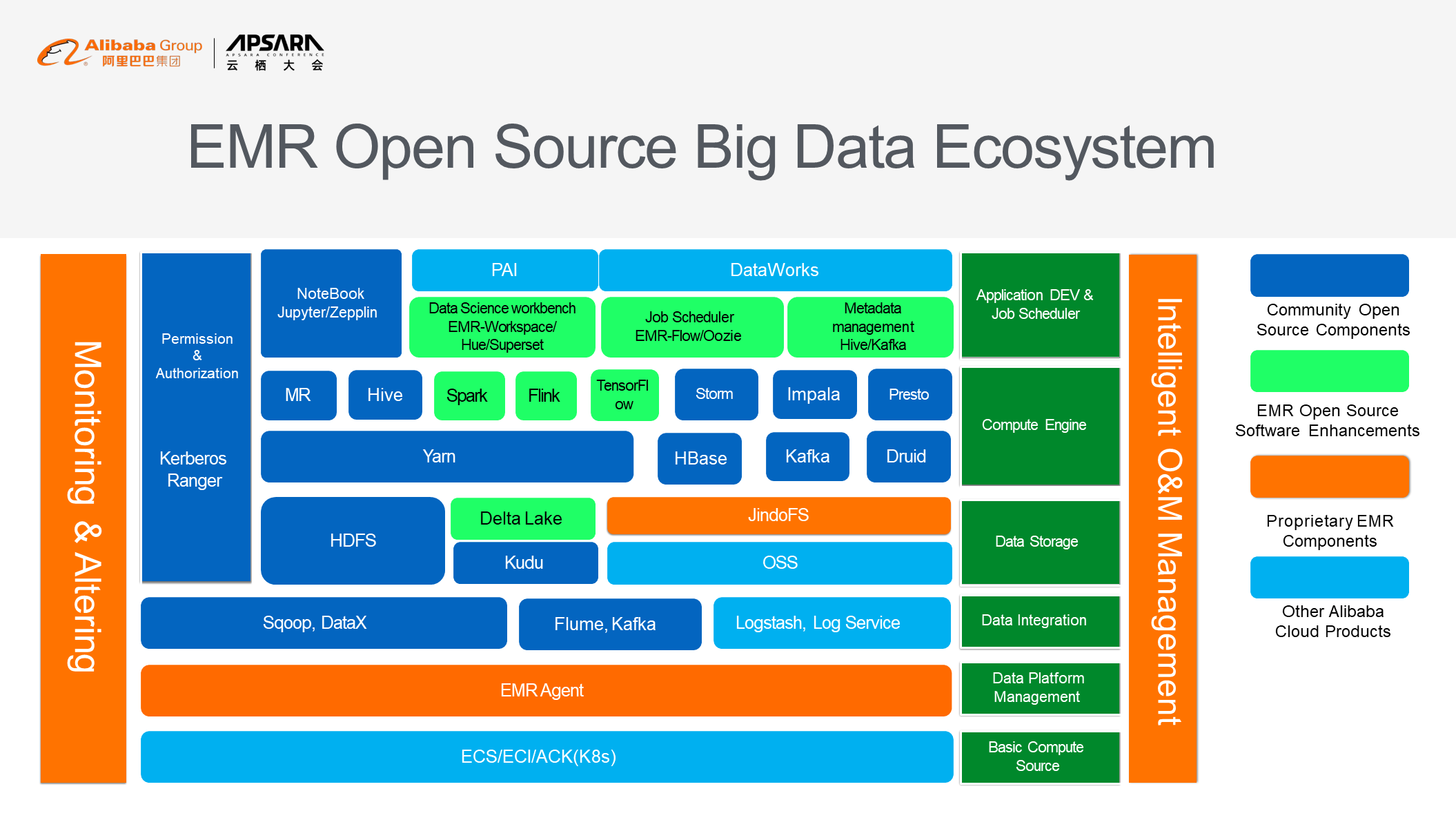

The preceding figure shows the overall open-source big data ecosystem of EMR, which covers both software and hardware.

Several layers are involved here.

For example, JindoFS is built on the storage layer (OSS).

JindoFS is a set of components developed by the EMR team to accelerate reading and computing on OSS data. In actual comparative tests, the performance of JindoFS is much better than that of offline HDFS.

Delta Lake is an open-source storage technology for data lakes. The EMR team made a series of optimizations to the integration of Delta Lake with Presto, Kudu, and Hive, and significantly improved performance compared to the open-source version. Flink in EMR is the enterprise version from Ververica and provides better performance, management, and maintainability.

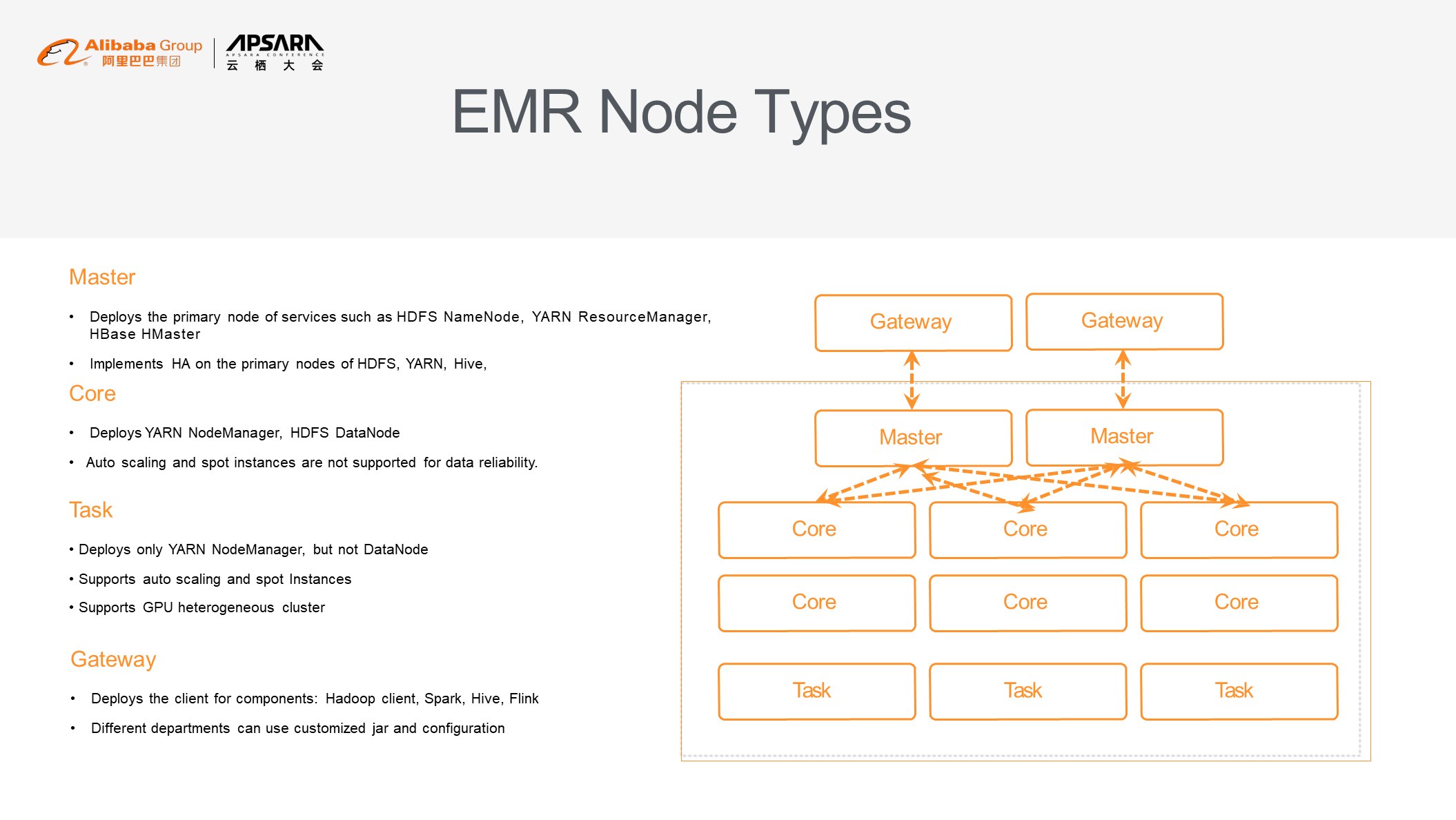

EMR consists of four node types: master, core, task, and gateway.

Services such as the HDFS NameNode, the YARN ResourceManager, and the HBase HMaster are deployed on master nodes to achieve centralized cluster management. When you create a production cluster, you can enable the high availability (HA) feature so that an HA cluster is created automatically.

Core nodes mainly run the YARN NodeManager and the HDFS DataNode, so they perform both computing and storage. To protect data reliability, core nodes do not support auto scaling or preemptible (spot) instances.

Task nodes run only a NodeManager, which makes them well suited to elastic scaling in a data lake. When all user data is stored in OSS, you can use the auto scaling feature of task nodes to respond quickly to business changes and flexibly scale computing resources. You can also use ECS preemptible instances to reduce costs. Task nodes also support GPU instances. In many machine learning or deep learning scenarios, the computing period is very short and occurs only once every few days or weeks. Because GPU instances are expensive, scaling them manually as needed greatly reduces costs.

Gateway nodes are used to hold various client components, such as Spark, Hive, and Flink. Departments can use different clients or client configurations for isolation. This also prevents users from frequently logging on to the cluster and performing operations.
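The four node types can be summarized as a small lookup table. The following Python sketch is only an illustration of the roles described above: the class and field names are hypothetical, and the `elastic` flag is set to `True` only where the article explicitly recommends auto scaling and preemptible instances.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    """Illustrative summary of one EMR node type (names are hypothetical)."""
    name: str
    services: tuple         # example services the article places on this node type
    stores_hdfs_data: bool  # True if an HDFS DataNode runs here
    elastic: bool           # True only where the article recommends auto scaling


NODE_TYPES = {
    "master": NodeType("master", ("NameNode", "ResourceManager", "HMaster"), False, False),
    "core": NodeType("core", ("NodeManager", "DataNode"), True, False),
    "task": NodeType("task", ("NodeManager",), False, True),
    "gateway": NodeType("gateway", ("Spark client", "Hive client", "Flink client"), False, False),
}


def elastic_groups(node_types):
    """Return the names of node types that are safe to scale in and out freely."""
    return sorted(n.name for n in node_types.values() if n.elastic)


def holds_data(node_types):
    """Return the names of node types whose removal would put HDFS data at risk."""
    return sorted(n.name for n in node_types.values() if n.stores_hdfs_data)
```

Here `elastic_groups(NODE_TYPES)` returns only the task group, and `holds_data(NODE_TYPES)` returns only the core group, which mirrors the reasoning above: nodes that hold HDFS blocks are kept stable, while compute-only nodes absorb the fluctuations.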

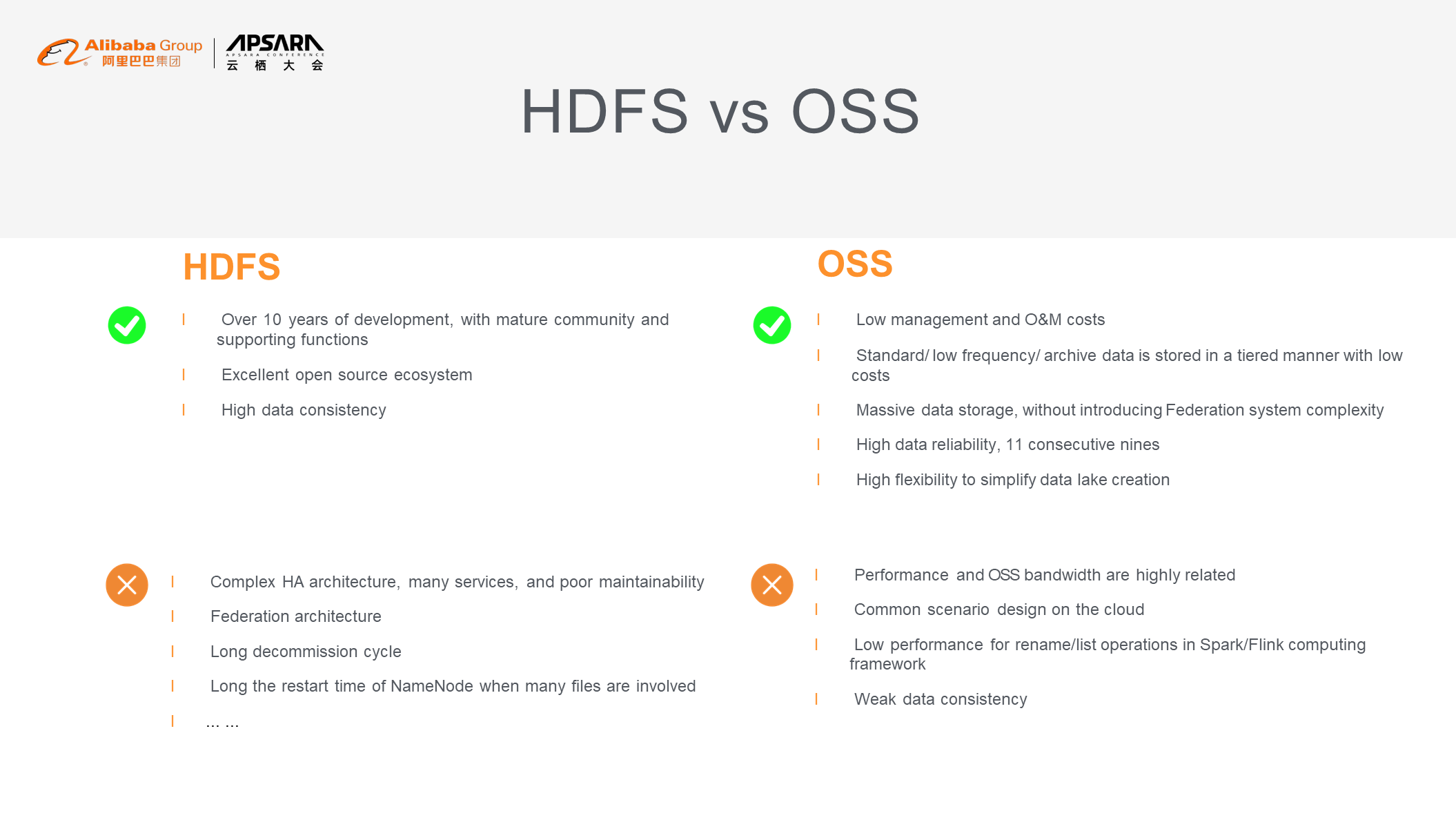

It has been more than 10 years since HDFS was launched, and its community and supporting features are relatively mature. However, it has some drawbacks. For example, the HA architecture is complex: JournalNode and ZKFC must be deployed. When a cluster grows too large, HDFS Federation must be used. At large scale, decommissioning a DataNode also takes a long time. If a host or disk fails, taking the node offline can take up to 1 to 2 days and may even require dedicated personnel to manage the decommissioning. Restarting a NameNode may take half a day.

What are the advantages of OSS? OSS is the object storage service of Alibaba Cloud, with very low management and O&M costs. OSS provides multiple storage tiers (such as standard, infrequent access, and archive storage), which effectively reduces user costs. Because OSS is delivered as a service, users do not need to manage a NameNode or Federation, and data reliability is very high (11 nines). Therefore, many customers use OSS to build enterprise data lakes. OSS is also highly open: almost all cloud products support OSS as backend storage.

OSS also has some problems. In the beginning, OSS was mainly used to store data in big data scenarios in conjunction with business systems. Because OSS is designed for general scenarios, performance problems arise when it is adapted to big data computing engines such as Spark and Flink. A rename operation is actually a move, and the file is physically copied, unlike in a Linux file system, where a rename completes almost instantly. A list operation requests all objects, so it becomes extremely slow when there are too many objects. The eventual consistency window is also relatively long, so data inconsistency may occur when data is read or written.
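To make the cost effect of storage tiers concrete, here is a minimal arithmetic sketch in Python. The relative prices and the split of data across tiers are placeholders chosen for easy arithmetic; they are not real OSS prices and not figures from this article.

```python
# Placeholder relative prices per TB per month. These are NOT real OSS prices.
RELATIVE_PRICE = {"standard": 1.0, "infrequent_access": 0.5, "archive": 0.25}


def monthly_cost(tb_by_tier, price=RELATIVE_PRICE):
    """Sum the cost of each tier: terabytes stored times that tier's unit price."""
    return sum(tb * price[tier] for tier, tb in tb_by_tier.items())


# Hypothetical 1,000 TB data set, first kept entirely in the standard tier ...
all_standard = monthly_cost({"standard": 1000})

# ... then split so that only the hot 10% stays in the standard tier.
tiered = monthly_cost({"standard": 100, "infrequent_access": 300, "archive": 600})

# Fraction of the storage bill saved by tiering, under the placeholder prices.
saving = 1 - tiered / all_standard
```

Under these placeholder numbers the tiered layout costs 400 units instead of 1,000. The sketch ignores retrieval fees and minimum storage durations, which colder tiers typically carry, so a real estimate needs the current price list.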
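The rename and list costs described above follow from the flat key space of an object store. The toy in-memory model below is a sketch for illustration only, not the OSS API: the class and method names are hypothetical. It shows why renaming a "directory" means copying and deleting every object under a prefix.

```python
class FlatObjectStore:
    """Toy object store: a flat map from full key to bytes, with no directories."""

    def __init__(self):
        self.objects = {}
        self.bytes_copied = 0  # counts the data physically copied by renames

    def put(self, key, data):
        self.objects[key] = data

    def list(self, prefix):
        # There is no directory tree, so listing scans every key for the prefix.
        return sorted(k for k in self.objects if k.startswith(prefix))

    def rename_prefix(self, src, dst):
        # A "rename" is a copy followed by a delete, repeated for every object.
        for key in self.list(src):
            data = self.objects[key]
            self.objects[dst + key[len(src):]] = data
            self.bytes_copied += len(data)
            del self.objects[key]


store = FlatObjectStore()
for i in range(3):
    store.put(f"tmp/job-1/part-{i}", b"x" * 1000)

# Committing job output by renaming the temporary directory copies all 3,000 bytes.
store.rename_prefix("tmp/job-1/", "warehouse/t1/")
```

On a local file system the same commit step would be a single metadata update regardless of data size, which is why engines that commit output by renaming directories slow down on plain object storage.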

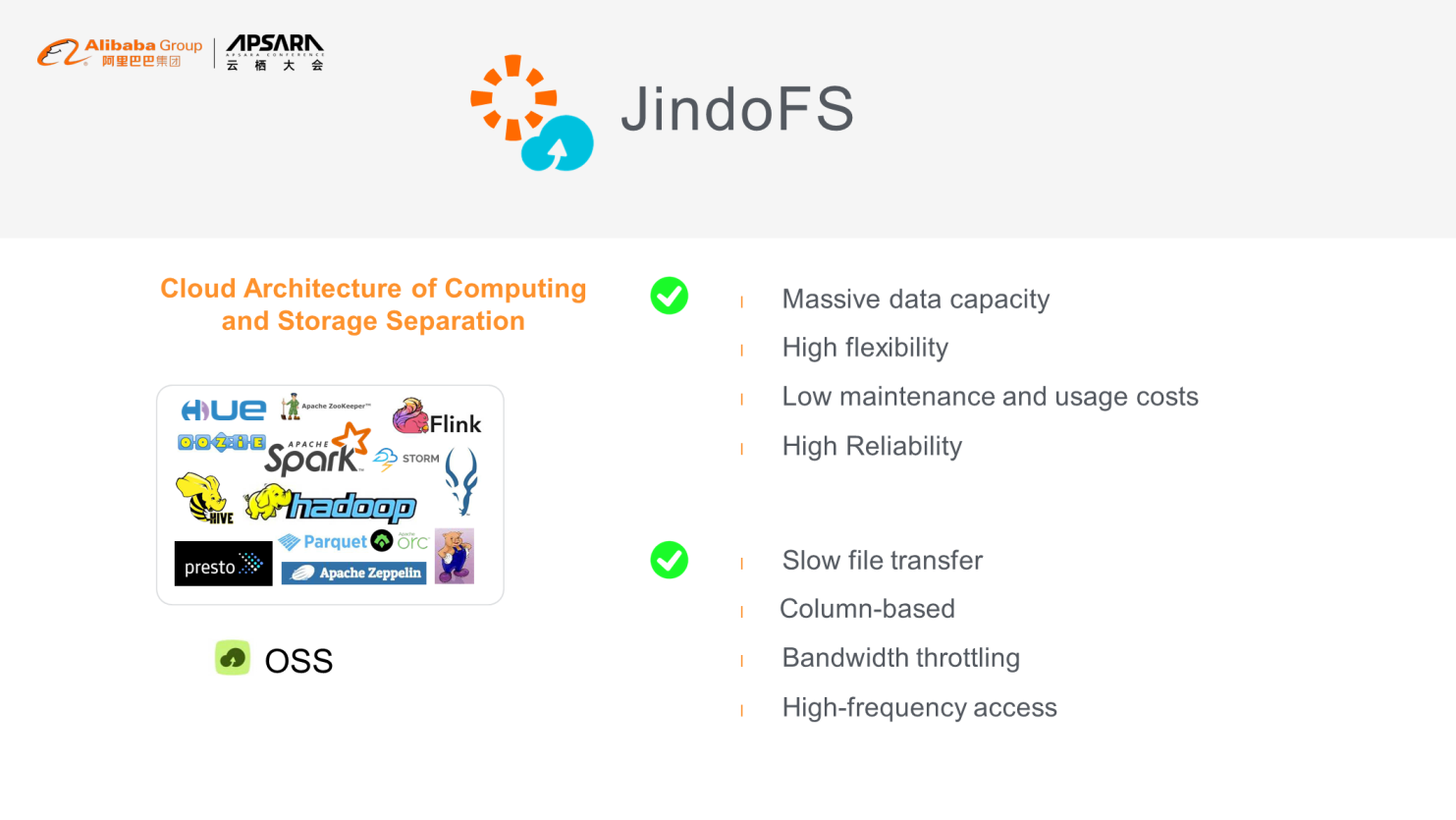

JindoFS is developed based on the open-source ecosystem. You can use JindoFS to read data from OSS and query it in almost all computing engines. JindoFS retains the advantages of OSS, such as EB-level data storage. It also offers high flexibility: when you use OSS semantics, all computing engines, as well as other computing services and BI report tools, can obtain data quickly. In this sense, JindoFS is a generic API.

JindoFS is widely used in the cloud. When processing data in HDFS and OSS, it avoids the performance problems of rename, list, and other file operations.

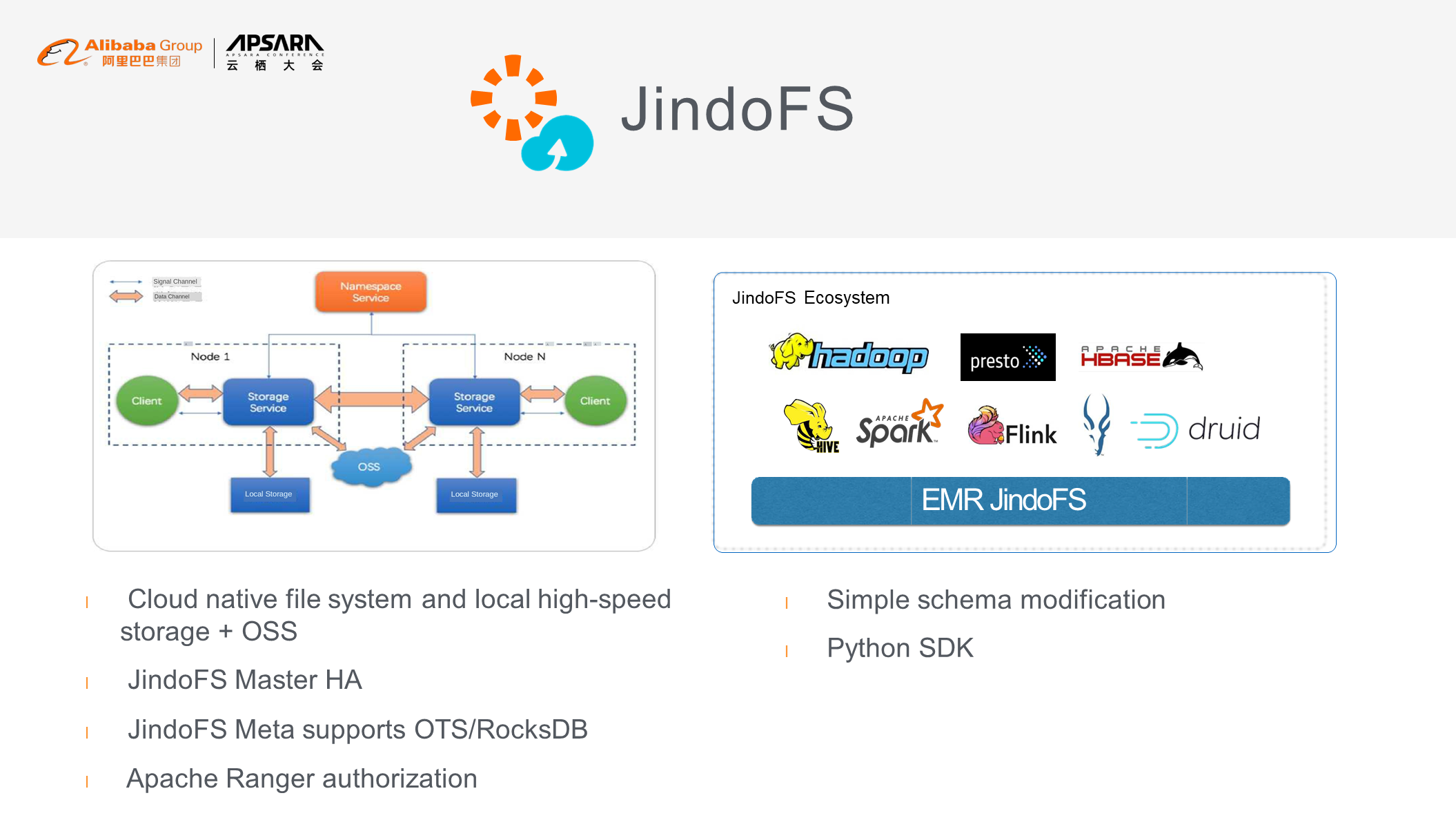

The preceding figure shows the architecture of JindoFS. The namespace service is the master service, and the storage service is the slave service. The master service is deployed on one or more nodes, the slave service is deployed on every node, and the client service is deployed on each EMR machine. When data is read or written, the system first sends a request to the master service through the slave service to obtain the location of the file. If the file does not exist locally, it is obtained from OSS and cached locally. JindoFS implements an HA architecture: local HA is implemented through RocksDB, and remote HA is implemented through OTS. Therefore, JindoFS achieves both performance and reliability. JindoFS uses Ranger for permission management. You can use the JindoFS SDK to migrate data from on-premises HDFS to OSS for archiving or further use.
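The read path described above (ask the master for the file location, fall back to OSS on a local miss, then cache) can be sketched as a read-through cache. This Python model is a simplified assumption for illustration and does not reflect the actual JindoFS implementation or SDK. All class names, method names, and paths are hypothetical, and a plain dictionary stands in for OSS.

```python
class NamespaceService:
    """Stand-in for the master service: remembers which node caches each path."""

    def __init__(self):
        self.locations = {}  # path -> name of the storage node holding a copy

    def locate(self, path):
        return self.locations.get(path)

    def record(self, path, node_name):
        self.locations[path] = node_name


class StorageService:
    """Stand-in for the per-node storage service with a local cache."""

    def __init__(self, name, namespace, remote):
        self.name = name
        self.namespace = namespace
        self.remote = remote   # dict standing in for OSS
        self.cache = {}
        self.remote_reads = 0  # how many times OSS had to be contacted

    def read(self, path):
        # Step 1: ask the master service where the file lives.
        location = self.namespace.locate(path)
        if location == self.name and path in self.cache:
            return self.cache[path]
        # Step 2: local miss, so fetch from remote storage and cache the result.
        data = self.remote[path]
        self.remote_reads += 1
        self.cache[path] = data
        self.namespace.record(path, self.name)
        return data


oss = {"oss://bucket/logs/a.txt": b"hello"}
namespace = NamespaceService()
node = StorageService("worker-1", namespace, oss)

first = node.read("oss://bucket/logs/a.txt")   # miss: goes to remote storage
second = node.read("oss://bucket/logs/a.txt")  # hit: served from the local cache
```

Only the first read touches remote storage. The sketch leaves out everything that makes a real system hard, such as block splitting, eviction, and keeping the namespace consistent across failures.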

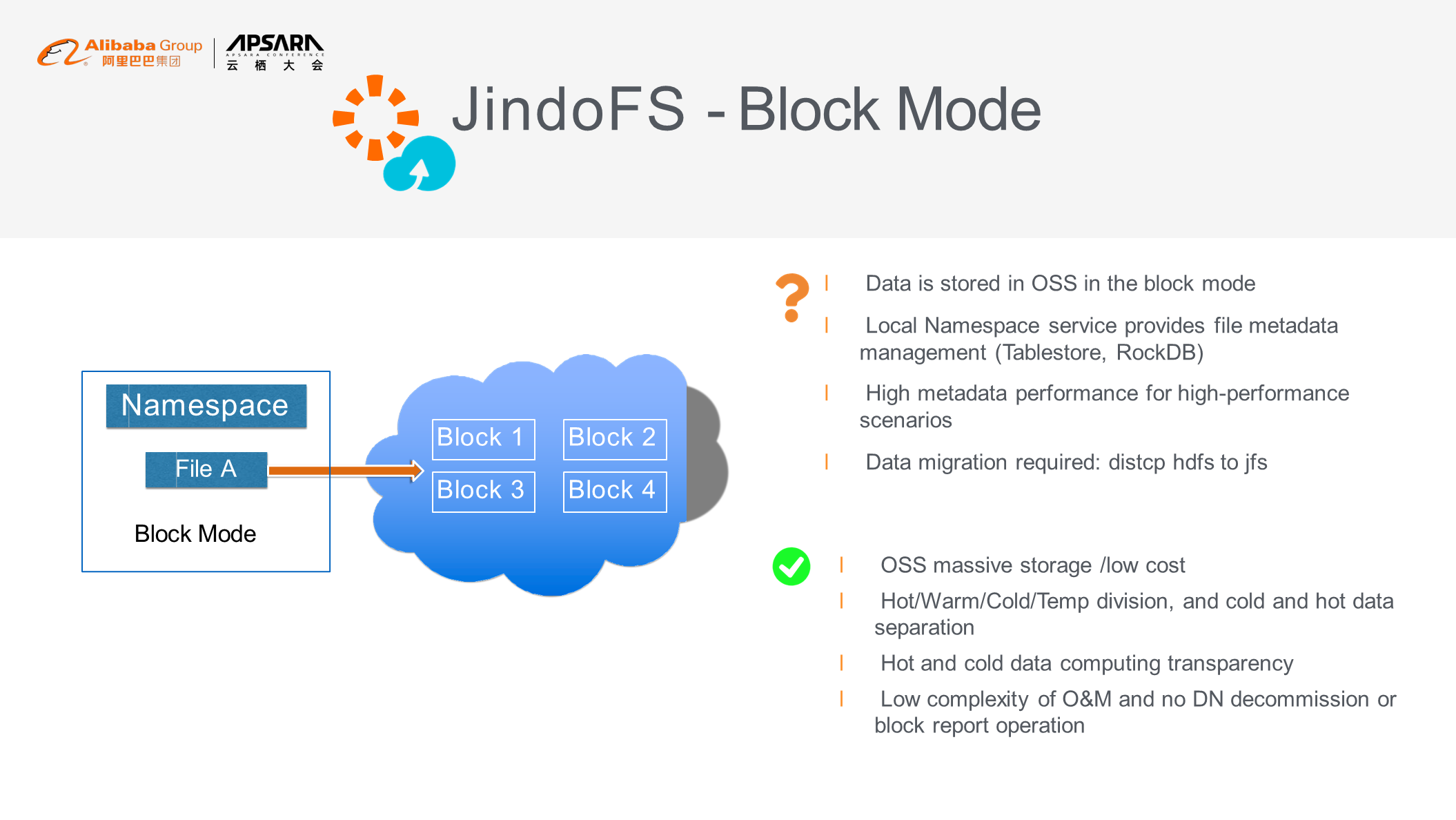

JindoFS supports block storage and cache modes. In block storage mode, JindoFS stores its metadata in the local RocksDB and in remote OTS. Block storage mode delivers better performance but is less universal, because the location and details of file blocks can be obtained only through the JindoFS metadata. Block storage mode also allows you to designate data as hot, warm, or cold. Moreover, JindoFS can effectively simplify O&M.

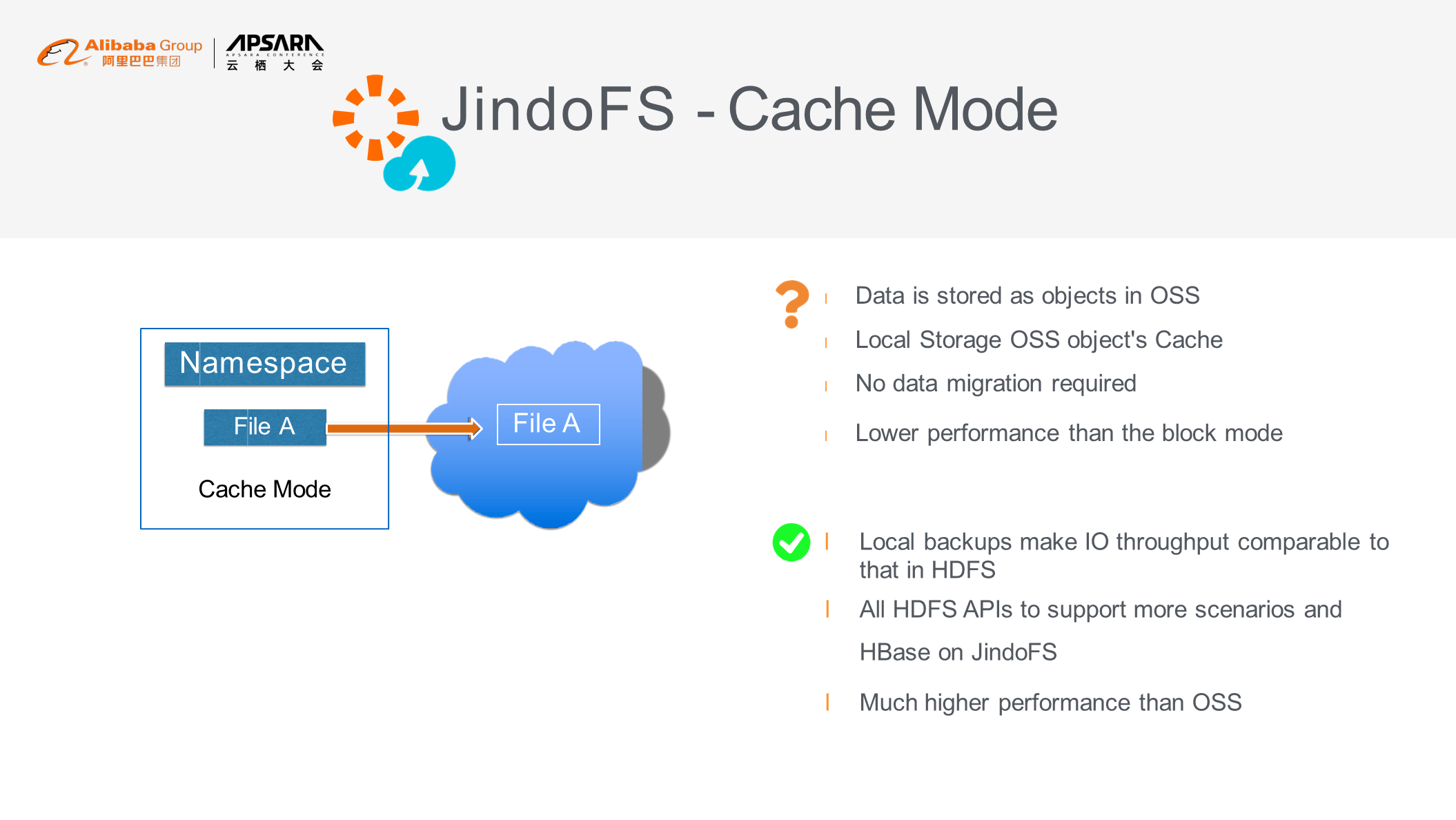

The cache mode uses local storage as a cache, and its semantics are based on OSS, with paths such as oss://bucket/path. The advantage of the cache mode is its universality: it can be used not only in EMR but also with other computing engines. Its disadvantage is performance. With large amounts of data, it performs relatively poorly compared to the block storage mode.

You may select the modes based on business requirements.

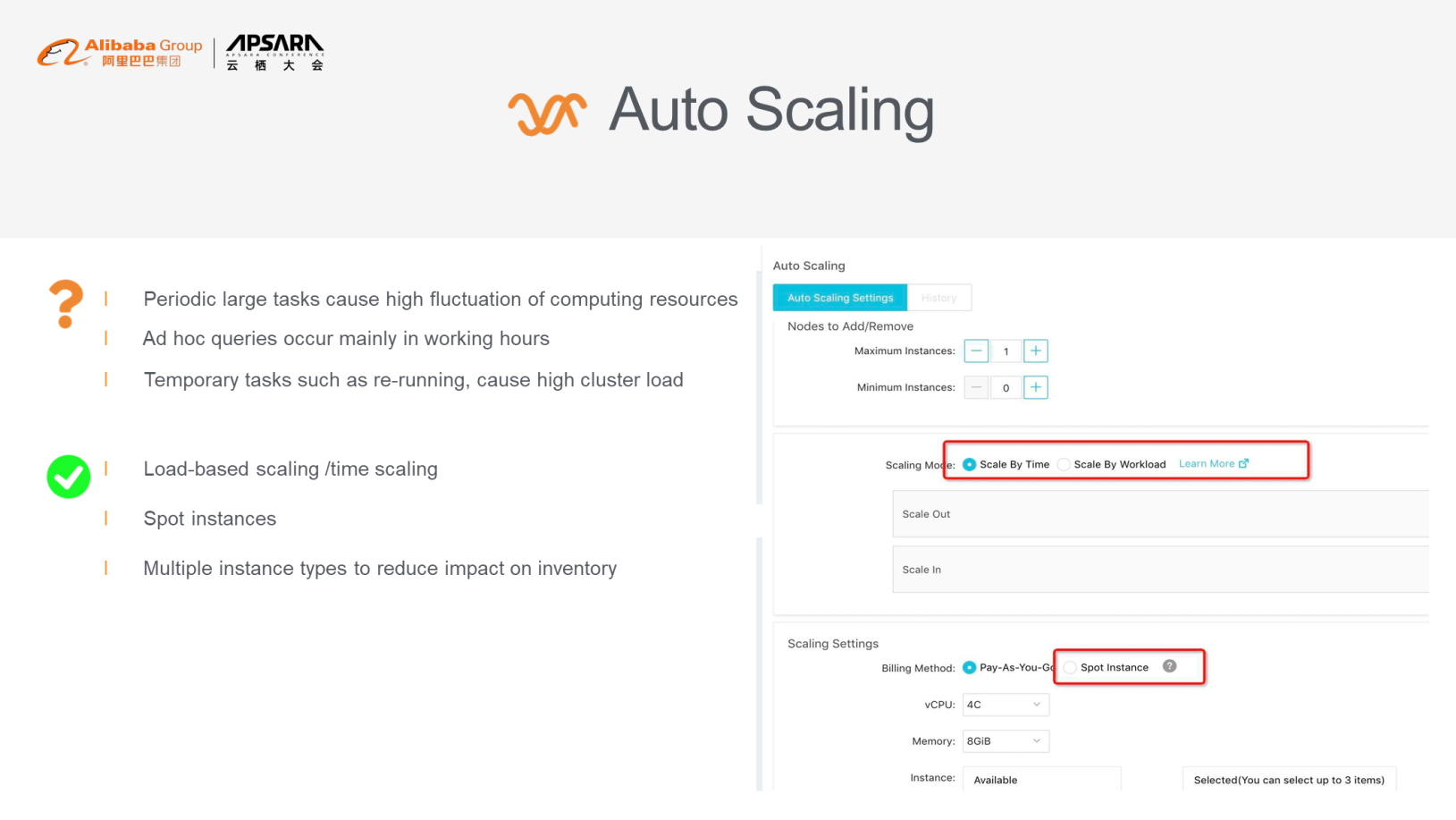

EMR supports auto scaling based on time and on cluster load (YARN metrics are collected, and the thresholds can be specified manually). When you use auto scaling, select multiple instance types to avoid job failures caused by insufficient resources. You can also use preemptible instances to reduce costs.
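The combination of time-based and load-based rules can be sketched as a single decision function. The Python example below is a hypothetical model, not the EMR auto scaling API: the rule structure, the use of pending YARN containers as the load metric, and every threshold and default value are assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class TimeRule:
    """Keep at least `target_nodes` task nodes between `start` and `end`."""
    start: time
    end: time
    target_nodes: int


def in_window(now, start, end):
    """Return True if `now` is inside the window, including windows past midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def desired_task_nodes(now, current, pending_containers, time_rules,
                       min_nodes=0, max_nodes=50, scale_out_pending=20, step=5):
    """Return the task node count after applying time rules and a load rule."""
    # Time-based rules set a floor for the current time of day.
    floor = min_nodes
    for rule in time_rules:
        if in_window(now, rule.start, rule.end):
            floor = max(floor, rule.target_nodes)

    # Load-based rule: grow when work is queued, shrink when nothing is waiting.
    target = current
    if pending_containers >= scale_out_pending:
        target = current + step
    elif pending_containers == 0:
        target = current - step

    # Clamp between the time-based floor and the configured maximum.
    return max(floor, min(max_nodes, target))


# Hypothetical nightly window for offline report computing.
night_batch = [TimeRule(time(22, 0), time(6, 0), 20)]
```

With these assumed rules, `desired_task_nodes(time(23, 30), 4, 0, night_batch)` keeps 20 nodes for the nightly window even though nothing is queued, while `desired_task_nodes(time(14, 0), 4, 35, night_batch)` adds one step of 5 nodes in response to daytime load.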

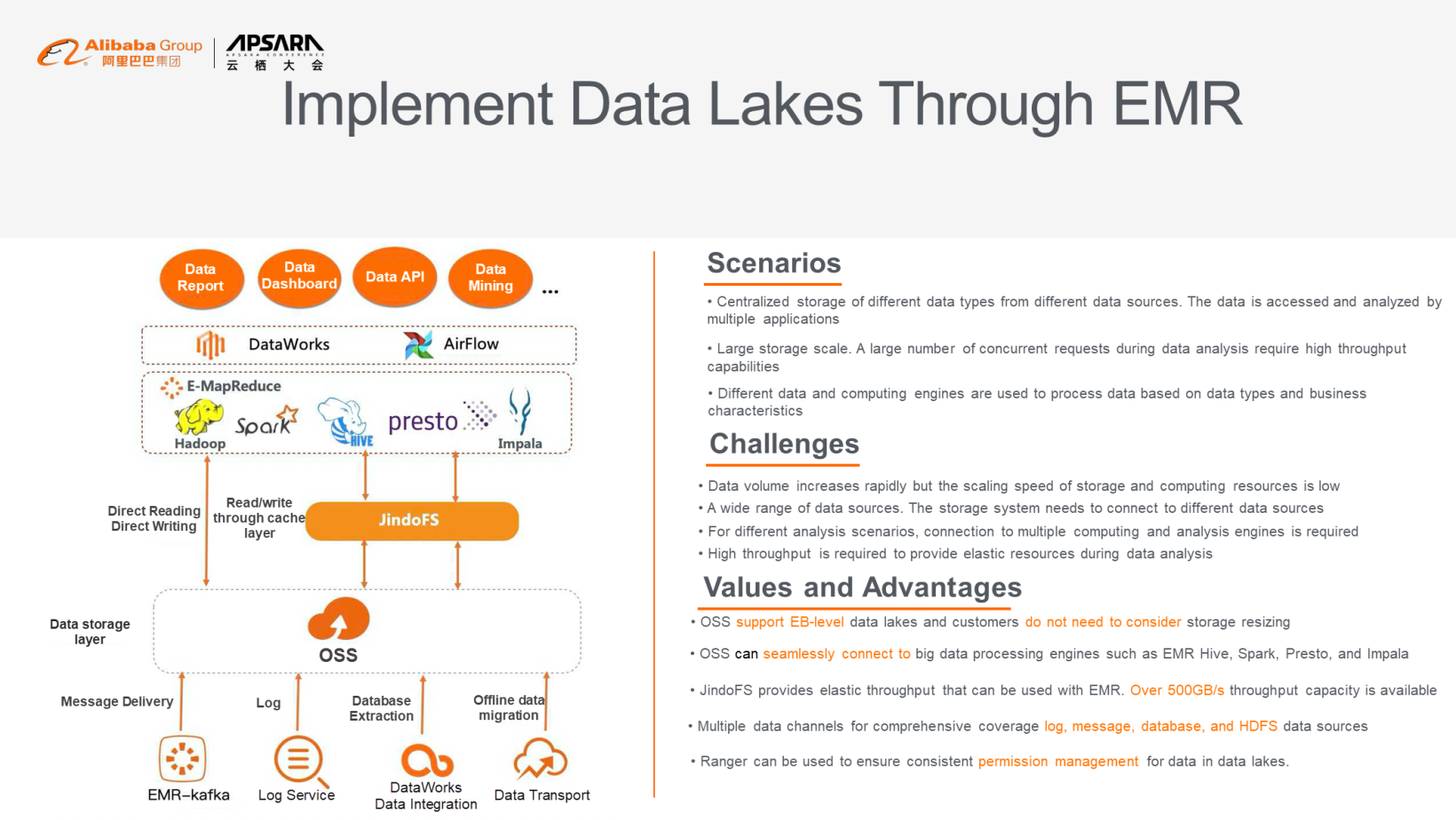

The preceding figure shows an offline computing architecture. Data can be migrated to OSS with Kafka, Log Service, Data Integration (in DataWorks), or Lightning Cube (the Alibaba Cloud offline migration service), and EMR then reads and computes on it directly. You can use DataWorks or Airflow to schedule workflows. The data applications at the top include data reports, dashboards, and APIs.

The advantage of this offline architecture is EB-level data storage. OSS can be connected to the big data computing engines in EMR while still providing excellent performance.

The capabilities of OSS ensure high-performance data reading. You can implement unified permission management for all open-source components in Ranger.

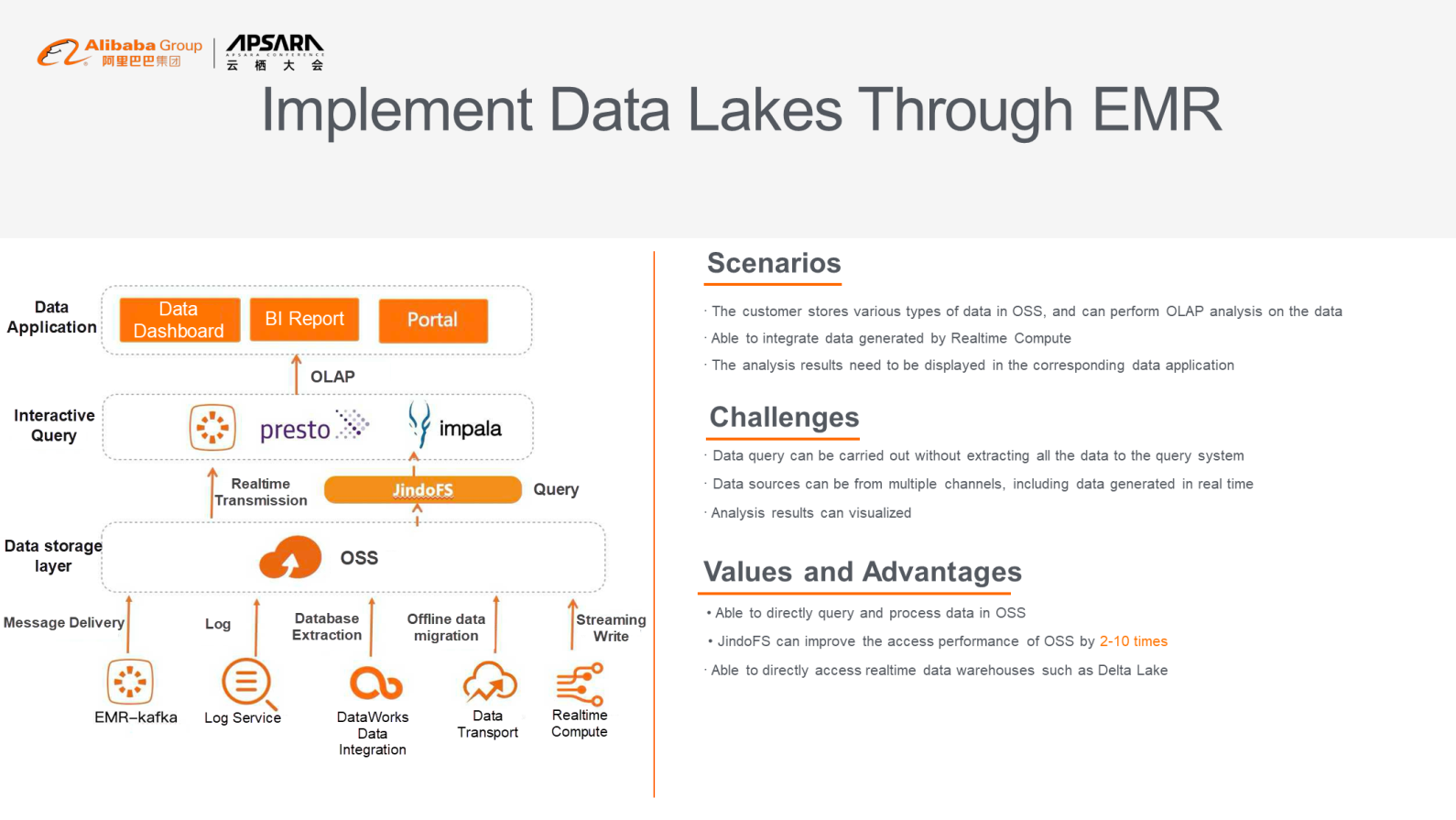

The preceding figure shows an ad hoc scenario using EMR that involves the inflow of data from Realtime Compute. The data is accelerated using JindoFS, and open-source computing engines such as Presto and Impala are used for real-time data presentation such as dashboards and BI reports. This scenario is more common in real-time data warehousing services.

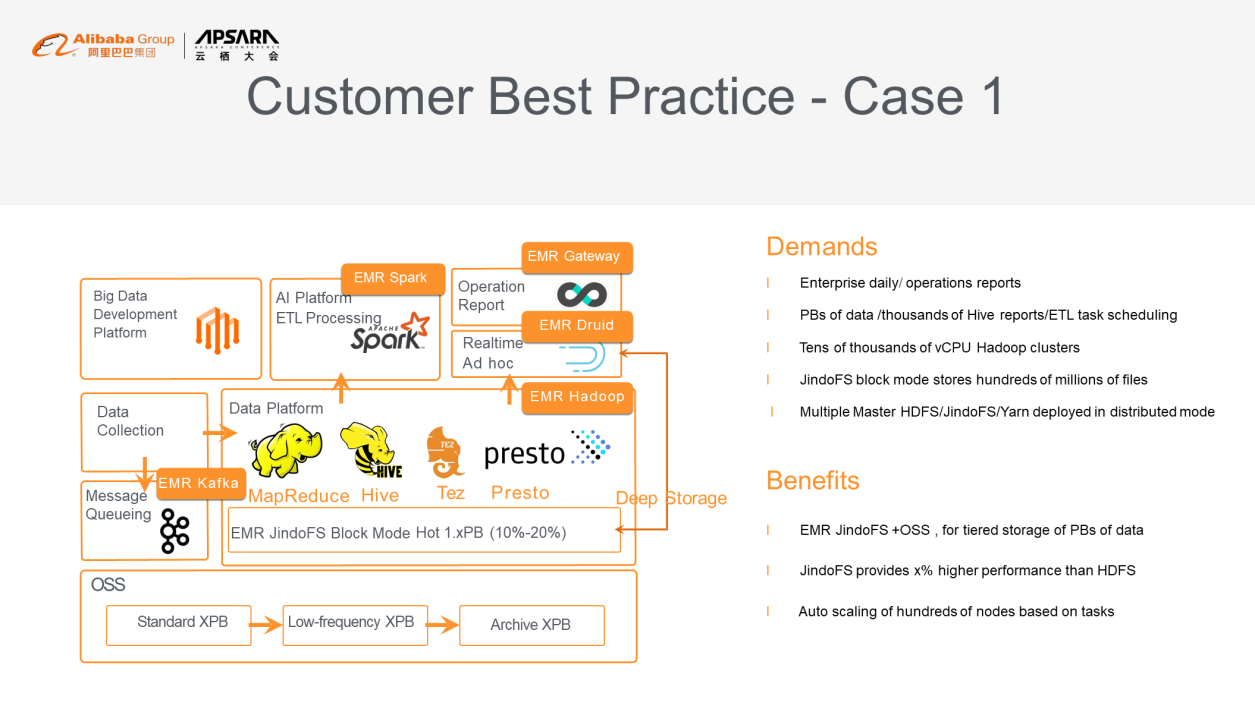

The customer has PBs of data to be migrated. After the data was migrated to the cloud, we found that truly hot data accounts for more than 10% of the total data, so the tiered storage of OSS effectively reduces the cost. When a cluster is large, its master nodes carry heavy loads. EMR can scale master nodes up or down based on the size and load of the cluster, and you can also customize the number of master nodes for computing and cluster deployment. The customer uses the auto scaling feature and has a relatively independent Spark cluster, which also supports auto scaling, to serve AI and ETL. In addition, the customer uses DataWorks for big data development.

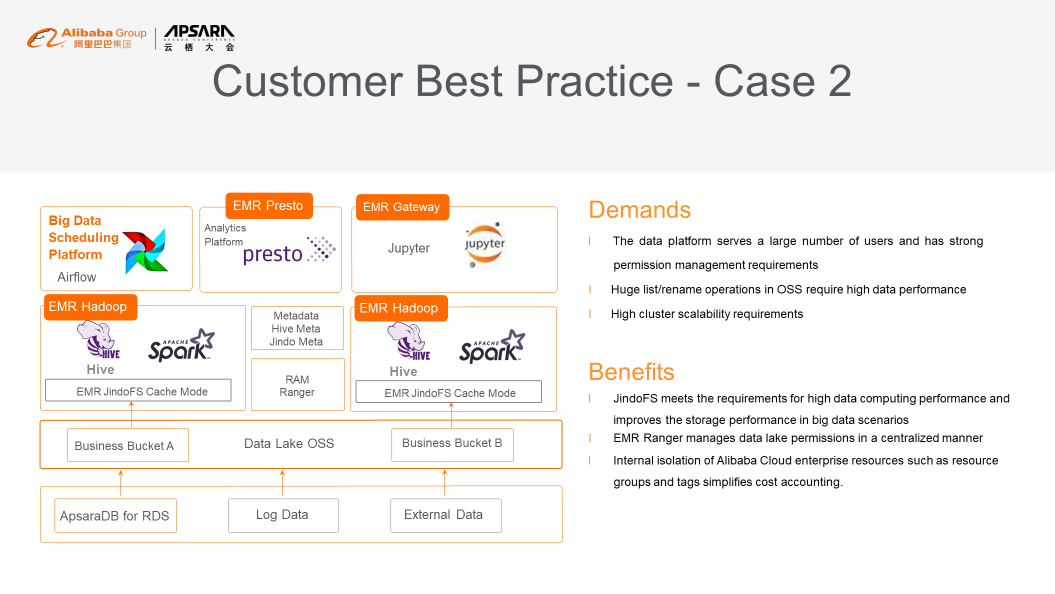

A large number of clusters are involved in multi-computing scenarios. The customer manages cluster permissions and metadata centrally. For example, Hive metadata and JindoFS metadata are shared by multiple clusters. During the day, the numbers of clusters and nodes are small. During nighttime peak hours, the clusters are scaled out to provide computing capacity. Airflow is used for workflow scheduling.

Learn more about Alibaba Cloud E-MapReduce at https://www.alibabacloud.com/products/emapreduce
