By Alibaba Cloud MaxCompute Team

This series details the migration journey of a leading Southeast Asian technology group from Google BigQuery to MaxCompute, highlighting key challenges and technical innovations. As the first installment, this article describes the innovations made by MaxCompute in unified storage formats to support this cross-border data warehouse migration.

Note: The customer is a leading Southeast Asian technology group, referred to as GoTerra in this article.

When GoTerra decided to migrate its data warehouse from Google BigQuery to Alibaba Cloud MaxCompute, the group weighed three factors: the regional compliance requirements of its global business, the cost-optimization goal of localized deployment in the Asia-Pacific market, and the pursuit of top-tier PB-level data processing capabilities.

As a world-leading cloud data warehouse service, BigQuery is widely regarded as a benchmark for building large-scale analytical cloud data warehouses, thanks to its serverless architecture, scalability, and high-concurrency processing performance. Its core advantages include the following:

• Fully managed serverless services: BigQuery hides underlying technical details and eliminates the need to maintain infrastructure, so you can focus only on data logic and business requirements.

• Seamless integration with the Google ecosystem: You can use tools such as Dataflow and Vertex AI to implement a closed-loop process that integrates data processing and AI models.

• Standard SQL and low-latency queries: BigQuery supports complex analysis scenarios and is suitable for small and medium-sized enterprises that need to quickly start big data projects.

• Pay-as-you-go: This billing method avoids wasting pre-provisioned resources and naturally adapts to sudden data growth.

This is a complex cross-border migration between heterogeneous technology stacks, and it poses multiple technical and business challenges:

• Differences in underlying storage formats: BigQuery and MaxCompute differ significantly in their underlying storage formats and architectures. To ensure that upper-layer services can be migrated smoothly and keep working seamlessly, the underlying storage architecture must be modified and optimized.

• SQL compatibility: MaxCompute SQL and BigQuery SQL differ in the syntax, function library, and execution engine. An automated conversion tool must be developed.

• Data consistency: During cross-platform migration, it is critical to prevent data loss, version conflicts, and extract, transform, and load (ETL) process interruptions.

• Performance optimization: The partitioned tables and resource group scheduling mechanism of MaxCompute and the columnar storage optimization policy of BigQuery are suited to different business scenarios, so performance tuning cannot simply be carried over from one platform to the other.

• Organizational collaboration: The cross-border migration team must maintain system availability throughout the migration and design a canary release strategy.

This article focuses on the differences in underlying storage formats and how MaxCompute restructured them. It analyzes the technological evolution and innovations in MaxCompute storage formats during GoTerra's nine-month migration.

As a world-leading data warehouse service, BigQuery builds on Google's extensive technology and has been refined over a long period in diverse customer scenarios to provide comprehensive, flexible, O&M-free, and high-performance data warehouse services. In particular, on top of a single underlying storage format, BigQuery provides multiple capabilities to manage stored data, including streaming ingestion, atomicity, consistency, isolation, and durability (ACID) transactions, indexing, time travel, and automatic clustering. You can build a data architecture based on actual business requirements and use various data warehouse features without understanding the underlying storage principles, which simplifies the use of the service.

In contrast, MaxCompute provides four table types to meet customer requirements in diverse scenarios: standard tables, range-clustered and hash-clustered tables, transactional tables, and Delta tables. To use these table types well, you must understand the features and limits of each one. Because of these limits, a table's capabilities cannot adapt dynamically as scenarios change, and you must create new tables for new scenarios. This increases learning and maintenance costs. For example:

• You can use standard tables for high-throughput data writes and general scenarios.

• You can use range-clustered or hash-clustered tables in scenarios that pursue ultimate query performance, especially JOIN and filtering. Specific limits are imposed on data writes and data structures.

• You can use transactional tables or Delta tables for row-level data updates.

• You can use Delta tables for data lake scenarios that require complex data updates and time travel queries.

In GoTerra's migration project, MaxCompute faces significant technological challenges because of the high complexity of GoTerra's business. GoTerra uses a large number of tables in BigQuery. Across its Internet business scenarios, GoTerra uses completely different real-time and batch methods for writing and consuming data, with widely varying performance requirements. GoTerra expects a unified table format that combines the advantages and features of different table types, so that a single table type can support all business scenarios. To use the four table types provided by MaxCompute, GoTerra would need to learn these table types and analyze its data usage patterns to map them onto existing business scenarios. This would require training a large number of staff across business departments and analyzing a massive number of data scenarios in depth. These heavy training and analysis workloads could slow down the migration.

To efficiently migrate large amounts of data and extremely complex business scenarios, MaxCompute must meet the following goals:

• Unified: provides a unified underlying storage format that integrates multiple data capabilities and features to eliminate the fragmentation of underlying storage capabilities.

• Real-time: uses streaming data writes, incremental data processing, and incremental computing pipelines to improve the real-time capabilities of data warehouses.

• Intelligent: enhances dynamic and auto scaling capabilities to further improve adaptive O&M-free capabilities.

Building on existing storage technologies and the future evolution plans for its storage formats, MaxCompute re-engineered its storage formats as a whole and introduced Append Delta tables. This new table format provides the following core technologies and features:

• Uses a unified and scalable data organization structure to implement multiple data writing, access, organization, and indexing capabilities, including dynamic cluster bucketing, ACID transactions, data appends, streaming data writes, time travel, incremental data reads, and secondary indexes.

• Allows you to adjust and modify the data organization structure and features of tables based on your business requirements.

• Provides data access, data writing, and data consumption methods that are compatible with existing table formats to simplify the use of new tables and facilitate data migration.

• Ensures the basic data read, write, and access performance that is consistent with that of existing table formats to maintain competitive advantages in costs and performance.

Append Delta tables integrate the capabilities of existing table formats, including ACID transactions, data appends, streaming data writes, and time travel. This way, you can combine multiple capabilities within the same table type and adapt tables to different business scenarios.

| Capability | MaxCompute Append Delta table | BigQuery |
|---|---|---|
| Data insertion by using SQL statements | Supported | Supported |
| Data updates and deletion | Supported | Supported |
| Data import channel | Data writes by using Batch Tunnel or Streaming Tunnel. Streaming data writes: Data can be queried immediately after it is written. | Data writes to standard tables by using the Storage Write API. Data can be queried at a minute-level latency after it is written. |
| Time travel | Supported | Supported |
| Incremental data access | Incremental data reads by using streams | Not supported |
| Automatic clustering | Supported | Supported |

• MaxCompute Append Delta table: an incremental data table format provided by MaxCompute in 2025 based on the range clustering structure.

• data bucketing: an optimization technology that divides data into buckets by the values of specified columns. It helps avoid data shuffle, which often consumes a lot of time and resources in parallel computing. MaxCompute first introduced buckets in range-clustered and hash-clustered tables.

• data clustering: a basic technology used to manage and optimize data storage and retrieval in data warehouses. It physically stores related data together and uses data pruning to improve query efficiency.

• range-clustered table: a table type that sorts data based on key values and stores the sorted data in ranges based on one or more columns. Range clustering is a new data clustering method that distributes data in a globally sorted order.

• hash-clustered table: a table type that allows you to configure the shuffle and sort properties to distribute data to different buckets and store the data based on the hash results of key values.
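
A minimal Python sketch of hash bucketing shows how bucketing avoids shuffle. It assumes that two tables are bucketed on the same key with the same bucket count. The helpers `bucket_of`, `bucketize`, and `bucketed_join` are hypothetical, and CRC32 stands in for whatever hash function MaxCompute actually uses.

```python
import zlib
from collections import defaultdict


def bucket_of(key: str, num_buckets: int) -> int:
    # Deterministic hash (CRC32 as a stand-in) so every writer maps a key to the same bucket.
    return zlib.crc32(key.encode("utf-8")) % num_buckets


def bucketize(rows, num_buckets):
    """Group (key, value) rows into buckets by the hash of the key."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[bucket_of(row[0], num_buckets)].append(row)
    return buckets


def bucketed_join(left, right, num_buckets):
    """Inner-join two tables bucketed on the same key with the same bucket count.

    Equal keys always land in the same bucket ID on both sides, so each bucket pair
    is joined locally and no rows move between buckets (no shuffle).
    """
    left_buckets = bucketize(left, num_buckets)
    right_buckets = bucketize(right, num_buckets)
    result = []
    for b in range(num_buckets):
        # Build a small hash index over the right-hand bucket only.
        index = defaultdict(list)
        for key, value in right_buckets.get(b, []):
            index[key].append(value)
        for key, value in left_buckets.get(b, []):
            for other in index.get(key, []):
                result.append((key, value, other))
    return result


orders = [("u1", 10), ("u2", 20), ("u3", 5)]
users = [("u1", "ID"), ("u2", "SG")]
print(sorted(bucketed_join(orders, users, num_buckets=4)))  # [('u1', 10, 'ID'), ('u2', 20, 'SG')]
```

Matching keys are already co-located. Each bucket pair can therefore be handled by a separate worker with no data exchanged between workers, and that exchange is the shuffle that bucketing avoids.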

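In the data migration scenario of GoTerra, MaxCompute relies on three core features of Append Delta tables to significantly improve the storage and computing efficiency of large amounts of data and reduce business migration costs. These features are automatic data governance by Storage Service, dynamic bucket allocation, and incremental clustering, and they are described below.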

Storage Service is a core component of the self-developed distributed storage engine in MaxCompute. It provides intelligent data governance services for the high-reliability storage and high-throughput reads and writes of large amounts of data. As cloud data warehouse scenarios evolve from large-scale data processing to AI-native intelligent architectures, Storage Service needs to tackle the following key challenges:

• Storage efficiency: supports automatic storage tiering for petabytes of data, compression ratio optimization, and defragmentation.

• Computing collaboration: supports dynamic partitioning, incremental data reads, and conversion from row-oriented storage to column-oriented storage for Append Delta tables.

• Scalability: adapts to the dynamic load fluctuations of cross-region clusters, such as daily PB-level data migration during sales promotions.

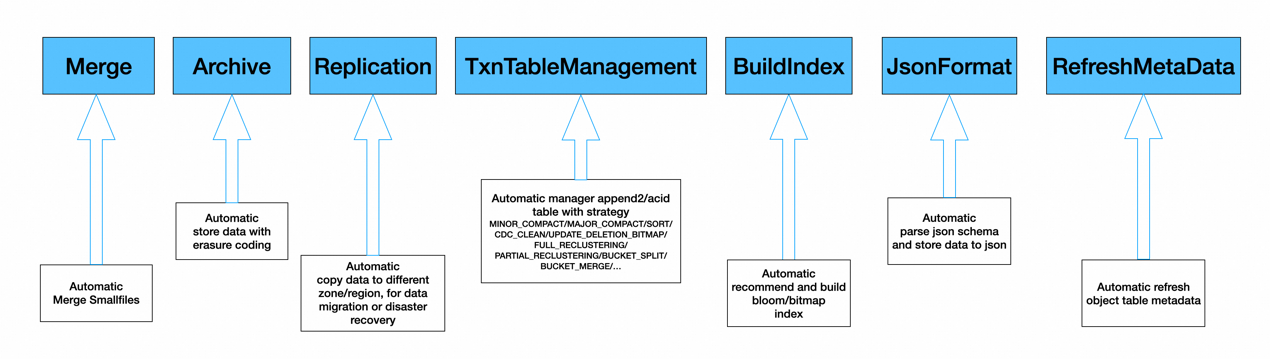

Storage Service supports background data tasks that enable the self-O&M, self-optimization, and self-healing of data, which significantly reduces the need for manual intervention. Automatic data governance services include, but are not limited to, the following tasks:

• File merging and rewriting: a basic merge task that merges small files to reduce the pressure on underlying storage resources. This task also supports rewriting data with compression and encoding algorithms that achieve higher compression ratios. It also supports Redundant Array of Independent Disks (RAID) to provide data archiving capabilities.

• Automatic storage tiering: a task that migrates petabytes of data between hot and cold storage every day, powered by the computing capacity of the cross-region, ultra-large storage clusters in MaxCompute.

• Minor compaction and major compaction: tasks that merge base files with their delta files to improve storage efficiency. The storage layer provides two modes. A minor compaction task merges each base file with all of its delta files and then deletes the delta files. A major compaction task does the same and then also merges small base files.

• Index building: a task that builds data indexes such as Bloom filter indexes and bitmap indexes.

• Streaming compaction: a task that merges row-oriented files or converts row-oriented files into column-oriented AliORC files in scenarios where data is written by using Streaming Tunnel.

• Data reclustering: a task that partitions and sorts the unordered data appended to a range-clustered table in streaming mode, and then merges the sorted data into the range-clustered table.

• Data backup: a task that enables geo-redundancy by using cross-region data replication.
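
The difference between the two compaction modes can be sketched in Python. This is a simplified model, not the MaxCompute implementation. `BaseFile`, `merge_deltas`, `minor_compaction`, and `major_compaction` are hypothetical names. Deltas are modeled as key-to-value maps in which `None` marks a delete, and a row count stands in for the file-size threshold that decides what counts as a small file.

```python
from dataclasses import dataclass, field


@dataclass
class BaseFile:
    rows: dict                                   # primary key -> row value
    deltas: list = field(default_factory=list)  # delta files in commit order; value None marks a delete


def merge_deltas(base: BaseFile) -> BaseFile:
    """Apply every delta file to its base file and drop the deltas."""
    merged = dict(base.rows)
    for delta in base.deltas:
        for key, value in delta.items():
            if value is None:
                merged.pop(key, None)   # delete
            else:
                merged[key] = value     # insert or update
    return BaseFile(rows=merged)        # no delta files remain after compaction


def minor_compaction(files):
    # Minor: merge each base file with all of its delta files.
    return [merge_deltas(f) for f in files]


def major_compaction(files, small_file_rows):
    # Major: run a minor compaction, then merge small base files into one.
    # Assumes each primary key lives in exactly one base file.
    compacted = minor_compaction(files)
    kept = [f for f in compacted if len(f.rows) >= small_file_rows]
    small = [f for f in compacted if len(f.rows) < small_file_rows]
    if small:
        combined = {}
        for f in small:
            combined.update(f.rows)
        kept.append(BaseFile(rows=combined))
    return kept


files = [
    BaseFile({1: "a", 2: "b"}, [{2: "b2"}, {1: None}]),
    BaseFile({3: "c"}, [{4: "d"}]),
    BaseFile({5: "e"}),
]
print([f.rows for f in minor_compaction(files)])  # [{2: 'b2'}, {3: 'c', 4: 'd'}, {5: 'e'}]
print([f.rows for f in major_compaction(files, small_file_rows=2)])  # [{3: 'c', 4: 'd'}, {2: 'b2', 5: 'e'}]
```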

Storage Service supports the preceding background data tasks to provide automatic data governance services, and continuously optimizes data computing and storage efficiency by using multiple methods such as data resorting, redistribution, and replication. Storage Service consists of Service Control and Service Runtime. Service Control receives, queues, and routes requests, and forwards requests to compute clusters. Service Runtime converts each request into a specific execution plan and submits a service job to process the request. In other words, Service Control collects background data task requirements and distributes the requirements to compute clusters. Then, Service Runtime converts the requirements into specific execution plans and submits service jobs to run background data tasks.
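
The division of labor between Service Control and Service Runtime can be sketched as a queue-and-route loop. Everything in this sketch is hypothetical and for illustration only. The class names, the least-loaded routing rule, and the plan templates are assumptions, because the article does not describe how routing decisions or execution plans are actually produced.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical plan templates; the real execution plans are not described in the article.
PLAN_TEMPLATES = {
    "compaction": ["read_base_and_deltas", "merge", "write_base_file", "commit"],
    "recluster": ["read_new_buckets", "sort_by_cluster_key", "write_buckets", "commit"],
    "index_build": ["scan_columns", "build_index", "write_index", "commit"],
}


@dataclass
class TaskRequest:
    table: str
    task_type: str  # a key of PLAN_TEMPLATES


class ServiceRuntime:
    """Runs in one compute cluster: turns a request into a plan and submits a job."""

    def __init__(self, cluster: str):
        self.cluster = cluster
        self.jobs = []

    def run(self, request: TaskRequest) -> dict:
        job = {"cluster": self.cluster, "table": request.table,
               "plan": list(PLAN_TEMPLATES[request.task_type])}
        self.jobs.append(job)  # stands in for submitting a service job
        return job


class ServiceControl:
    """Receives and queues background-task requests, then routes them to compute clusters."""

    def __init__(self, runtimes):
        self.queue = deque()
        self.runtimes = runtimes

    def submit(self, request: TaskRequest) -> None:
        self.queue.append(request)

    def dispatch(self) -> list:
        jobs = []
        while self.queue:
            request = self.queue.popleft()
            # Route to the least-loaded cluster (ties go to the first one listed).
            runtime = min(self.runtimes, key=lambda r: len(r.jobs))
            jobs.append(runtime.run(request))
        return jobs


control = ServiceControl([ServiceRuntime("cluster-a"), ServiceRuntime("cluster-b")])
for table in ["orders", "trips", "payments"]:
    control.submit(TaskRequest(table, "compaction"))
print([job["cluster"] for job in control.dispatch()])  # ['cluster-a', 'cluster-b', 'cluster-a']
```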

When you create a range-clustered or hash-clustered table, you must estimate your business data volume in advance and specify a cluster key and an appropriate number of buckets. After the table is created, MaxCompute uses a clustering algorithm to route data to buckets based on the cluster key. Because the number of buckets is fixed, this can lead to data skew or data fragmentation. If the data volume is large and the number of buckets is too small, each bucket holds oversized data, which reduces the effectiveness of data pruning during queries. If the number of buckets far exceeds what the data volume requires, each bucket holds too little data, which creates many small, fragmented files and harms query performance. Therefore, you must understand both the data usage of your business and the underlying table formats of MaxCompute. Only then can you choose a bucket count that lets clustering maximize query performance.
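
The bucket-count trade-off comes down to simple arithmetic: the average bucket size is the table size divided by the number of buckets. The following Python sketch makes this concrete. The 64 MB and 2 GB thresholds and the function names are hypothetical. They were chosen only to illustrate the two failure modes and are not MaxCompute limits.

```python
# Hypothetical thresholds for illustration only; the article gives no exact limits.
SMALL_BUCKET_MB = 64
LARGE_BUCKET_MB = 2048


def avg_bucket_size_mb(table_size_gb: float, num_buckets: int) -> float:
    return table_size_gb * 1024 / num_buckets


def assess(table_size_gb: float, num_buckets: int) -> str:
    size = avg_bucket_size_mb(table_size_gb, num_buckets)
    if size > LARGE_BUCKET_MB:
        return "oversized"   # few, huge buckets: pruning skips little data
    if size < SMALL_BUCKET_MB:
        return "fragmented"  # many tiny buckets: lots of small files
    return "ok"


# The same fixed bucket count can be right today and wrong later.
print(assess(128, 256))        # 512 MB per bucket -> ok
print(assess(4 * 1024, 256))   # 16 GB per bucket -> oversized
print(assess(1, 1024))         # 1 MB per bucket -> fragmented
```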

However, the static allocation of buckets may bring the following issues:

• In large-scale data migration scenarios, you must evaluate the potential data amount of each table. This evaluation is manageable for a small number of tables but becomes very difficult for thousands of tables.

• Even if you accurately evaluate the current data amount of a table, the actual data amount will change as the business evolves. The number of buckets specified for a table may not be suitable in the future.

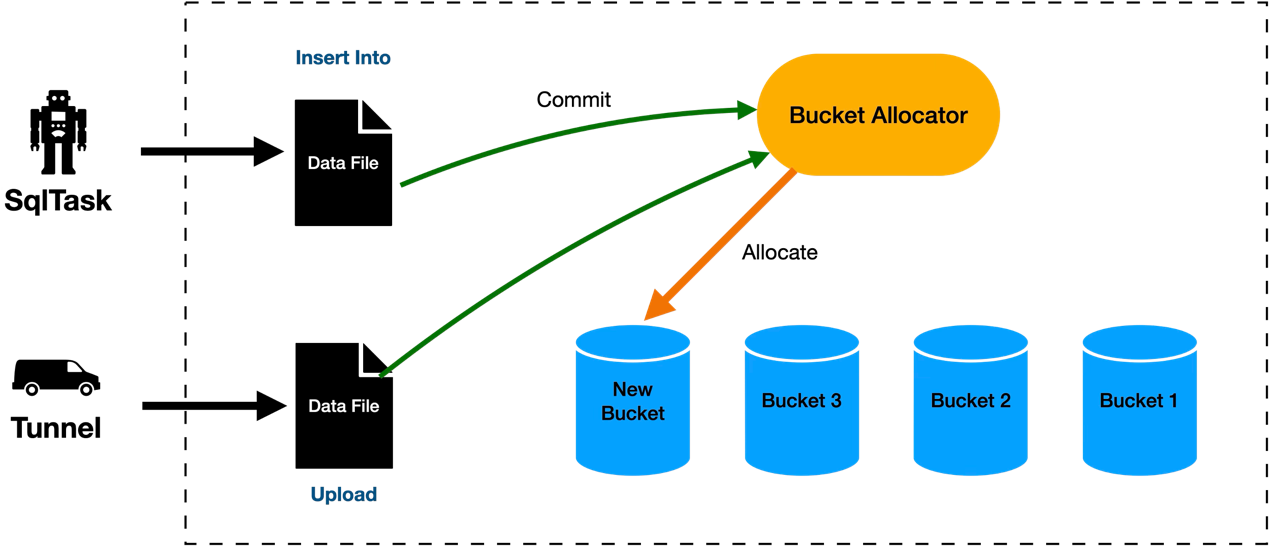

To sum up, the static allocation of buckets cannot effectively support large-scale data migration scenarios or rapidly changing business environments. A better way is to dynamically allocate buckets based on the actual data amount. This frees you from managing the number of underlying buckets, which greatly reduces learning costs and simplifies O&M. This also better adapts to changing data amounts.

The Append Delta table format is designed to support the dynamic allocation of buckets. All data in a table is automatically divided into buckets. Each bucket is a logically contiguous storage unit that contains about 500 MB of data. Before you create a table and write data, you do not need to specify the number of buckets for the table. As data is continuously written, buckets are automatically created as needed. This way, you do not need to worry about data skew or data fragmentation caused by large or small amounts of data in each bucket as the amount of business data increases or decreases.
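
Dynamic allocation works as if a new bucket is opened on demand whenever the current one reaches the target size. The following sketch uses the figure of about 500 MB from the article. The class and method names are hypothetical. The sketch models only bucket sizing and does not show how MaxCompute assigns key ranges to buckets or splits and merges them.

```python
TARGET_BUCKET_MB = 500  # approximate bucket size stated in the article


class DynamicBucketAllocator:
    """Hypothetical sketch: buckets are opened on demand as data arrives,
    so no bucket count is chosen at table-creation time."""

    def __init__(self, target_mb: int = TARGET_BUCKET_MB):
        self.target_mb = target_mb
        self.bucket_sizes_mb = []

    def write(self, size_mb: int) -> None:
        remaining = size_mb
        while remaining > 0:
            # Open a new bucket when there is none yet or the last one is full.
            if not self.bucket_sizes_mb or self.bucket_sizes_mb[-1] >= self.target_mb:
                self.bucket_sizes_mb.append(0)
            room = self.target_mb - self.bucket_sizes_mb[-1]
            chunk = min(room, remaining)
            self.bucket_sizes_mb[-1] += chunk
            remaining -= chunk


alloc = DynamicBucketAllocator()
alloc.write(1200)
print(alloc.bucket_sizes_mb)        # [500, 500, 200]
alloc.write(10 * 1024)              # the table grows by 10 GB
print(len(alloc.bucket_sizes_mb))   # 23
```

The bucket count follows the data volume (here, 11,440 MB in total gives 23 buckets), so it never has to be estimated up front.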

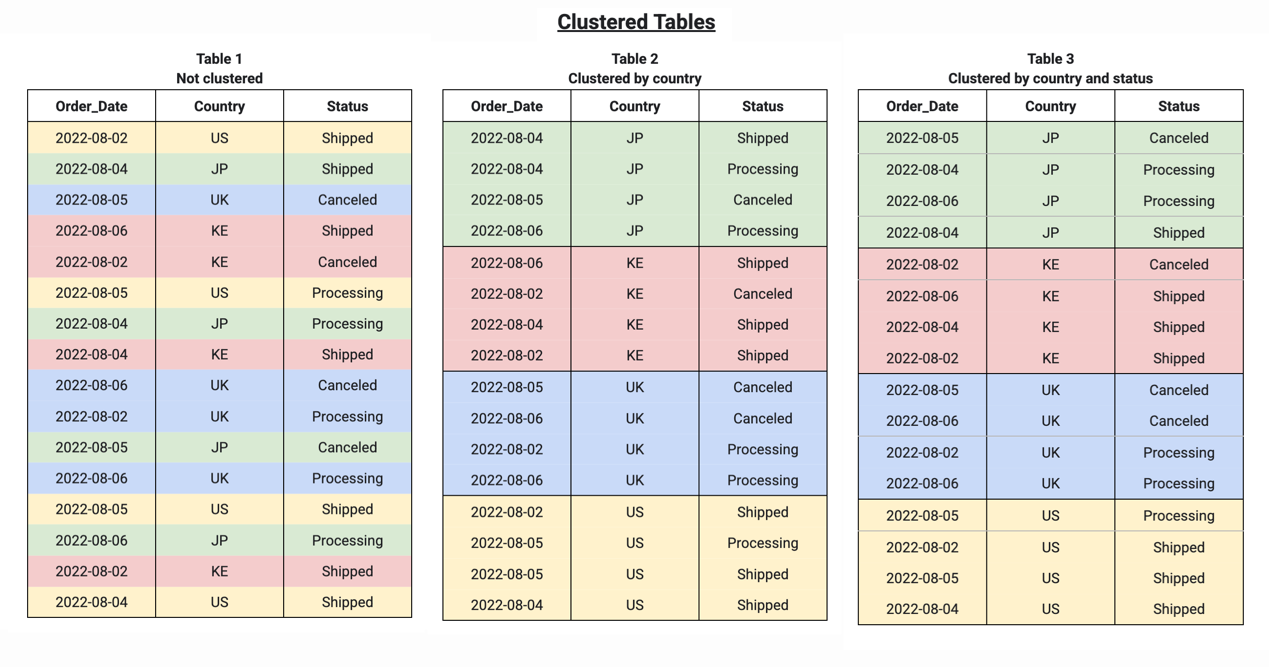

Clustering is a common data optimization method. A cluster key is a user-specified table property: data is sorted by the specified fields and stored contiguously. When a query filters on the cluster key, optimizations such as pushdown and pruning narrow the range of data that is scanned, which improves query efficiency.
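
The following Python sketch shows why sorting by a cluster key enables pruning. After the data is sorted and cut into contiguous buckets, the minimum and maximum keys of each bucket are enough to skip buckets that cannot match a range filter. The helper names are hypothetical.

```python
import random


def build_clustered_buckets(keys, rows_per_bucket):
    """Sort rows by cluster key, cut them into contiguous buckets, and keep min/max per bucket."""
    ordered = sorted(keys)
    buckets = []
    for start in range(0, len(ordered), rows_per_bucket):
        chunk = ordered[start:start + rows_per_bucket]
        buckets.append({"min": chunk[0], "max": chunk[-1], "keys": chunk})
    return buckets


def prune(buckets, lo, hi):
    """Keep only buckets whose [min, max] range can hold keys in [lo, hi]."""
    return [b for b in buckets if b["max"] >= lo and b["min"] <= hi]


keys = list(range(1000))
random.Random(42).shuffle(keys)              # unsorted input, as it arrives from writers
buckets = build_clustered_buckets(keys, rows_per_bucket=100)
survivors = prune(buckets, lo=250, hi=320)   # e.g. WHERE key BETWEEN 250 AND 320
print(len(buckets), len(survivors))          # 10 2
```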

As shown in the preceding figure, MaxCompute provides two clustering capabilities: range clustering and hash clustering. You can use range-clustered or hash-clustered tables to split data into buckets and sort the data within each bucket. This accelerates queries by pruning buckets and pruning data within buckets during the query process. However, in range-clustered or hash-clustered tables, data must be bucketed and sorted during the writing process to achieve a globally sorted order. This restricts how data can be written. Data must be written by using a single INSERT OVERWRITE operation. After data is written to a table, to append more data, you must read all existing data from the table, combine the existing data with new data by using a UNION operation, and then rewrite the entire dataset. This process makes appending data very costly and inefficient.
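
A quick cost model shows why the read-UNION-rewrite pattern is expensive. Each append rewrites the entire table, so the total amount of data written grows quadratically with the number of appends, whereas plain appends grow linearly. The batch sizes and function names below are hypothetical. The model counts only writes, not the additional reads of existing data.

```python
def rewrite_cost_gb(batch_sizes_gb):
    """GB written if every append must read the table, UNION the new batch, and INSERT OVERWRITE it all."""
    table_gb, written_gb = 0, 0
    for batch in batch_sizes_gb:
        table_gb += batch
        written_gb += table_gb   # the whole table is rewritten on each append
    return written_gb


def append_cost_gb(batch_sizes_gb):
    """GB written if new data can simply be appended."""
    return sum(batch_sizes_gb)


daily_batches = [100] * 30  # hypothetical: 100 GB appended once a day for 30 days
print(rewrite_cost_gb(daily_batches), append_cost_gb(daily_batches))  # 46500 3000
```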

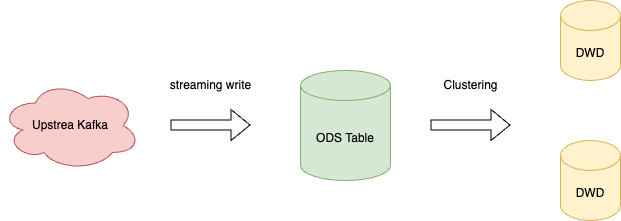

As shown in the preceding figure, clustering is not performed on tables at the operational data store (ODS) layer. Data at the ODS layer is close to raw business data and is often imported continuously from external data sources, which demands high import performance. The costly write path of traditional clustered tables cannot meet these low-latency, high-throughput requirements. Instead, a cluster key is specified for each table at the data warehouse (DW) layer. Data for the previous data timestamp in ODS tables is cleansed and then imported into the more stable DW layer, which accelerates subsequent queries.

However, this approach delays data freshness at the DW layer. Repeatedly updating the DW layer causes read and write amplification. To avoid it, DW data is usually updated only after ODS data has stabilized, so queries against the DW layer may not return up-to-date data. GoTerra has extremely high requirements for both query performance and data freshness. It therefore needs clustering at the ODS layer to accelerate queries on ODS tables and obtain real-time information.

Therefore, the original MaxCompute approach of clustering data synchronously during writes cannot meet real-time requirements. The incremental clustering capability of Append Delta tables instead uses a background data service to perform clustering incrementally and asynchronously. This achieves an optimal balance among data import performance, data freshness, and query performance.

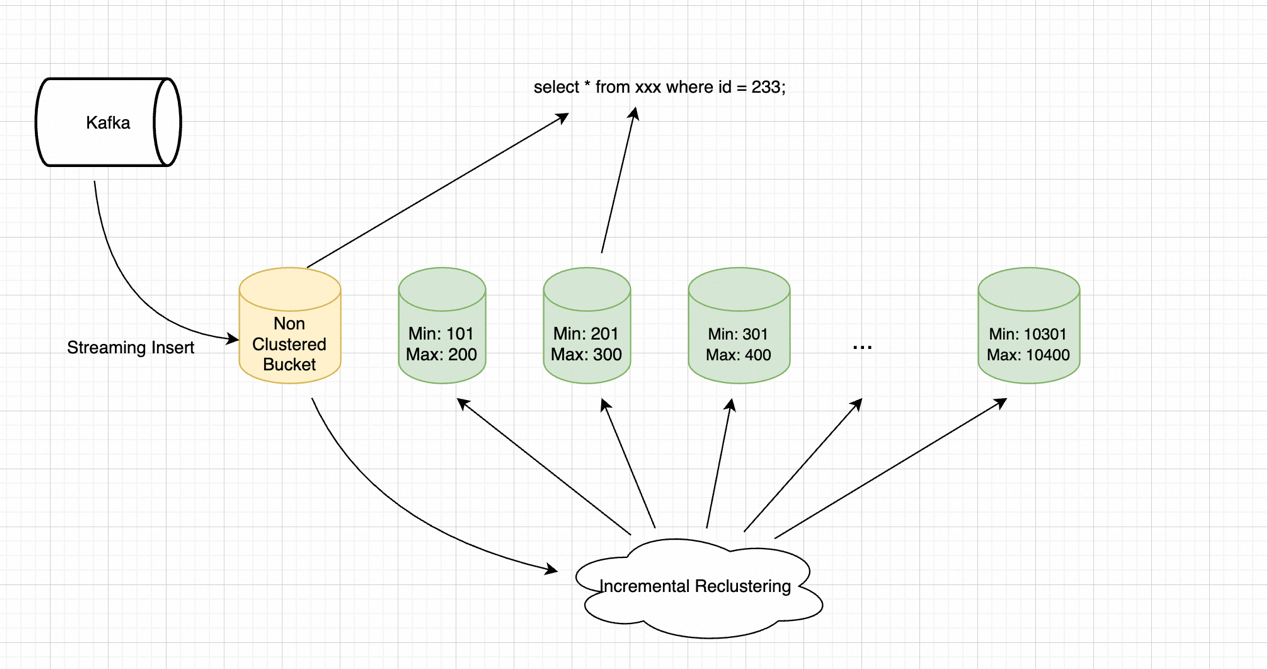

As shown in the preceding figure, data is imported into MaxCompute in streaming mode. During the writing process, unsorted data is directly written to disks and allocated to new buckets. This method maximizes write throughput and minimizes latency. In this case, the newly written data is not clustered. The data ranges of the new buckets overlap with those of existing clustered buckets. When a query runs, the SQL engine prunes the clustered buckets and scans the incremental buckets.
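
The query-time behavior described above can be sketched as a scan planner that prunes clustered buckets by key range but still reads every incremental bucket. `Bucket` and `plan_scan` are hypothetical names. The sketch follows the article's description rather than the actual MaxCompute planner.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    name: str
    min_key: int
    max_key: int
    clustered: bool  # False for newly written, not-yet-reclustered buckets


def plan_scan(buckets, lo, hi):
    """Return the buckets that a range query on [lo, hi] must read."""
    to_scan = []
    for b in buckets:
        if not b.clustered:
            # Following the article: incremental buckets are scanned rather than pruned.
            to_scan.append(b.name)
        elif b.max_key >= lo and b.min_key <= hi:
            # Clustered bucket whose key range overlaps the predicate.
            to_scan.append(b.name)
    return to_scan


buckets = [
    Bucket("c0", 0, 99, True),
    Bucket("c1", 100, 199, True),
    Bucket("c2", 200, 299, True),
    Bucket("i0", 5, 290, False),   # streamed-in data spans almost the whole key space
    Bucket("i1", 50, 250, False),
]
print(plan_scan(buckets, 150, 160))  # ['c1', 'i0', 'i1']
```

The more incremental buckets accumulate, the more data every query must read. This is why the incremental buckets are eventually reclustered.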

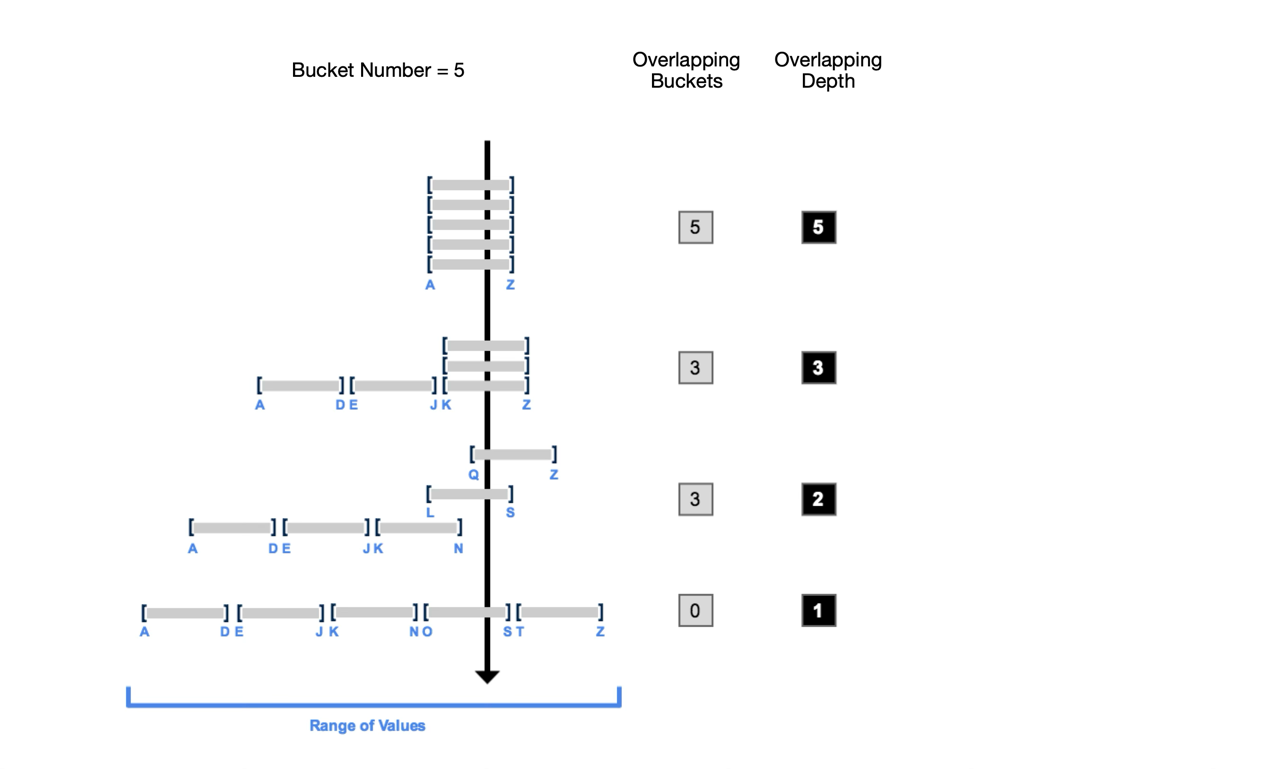

As shown in the preceding figure, the background data service of MaxCompute continuously monitors the overlap depth of buckets. When the depth reaches a specific threshold, incremental reclustering is triggered to recluster the newly written buckets. This keeps the data in buckets ordered and provides stable overall query performance.
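
The trigger logic can be sketched in Python. The sketch relies on one assumption that the article does not spell out: "overlap depth" is taken to mean the largest number of buckets whose key ranges cover any single key, a common definition of clustering depth. The function names and the merge strategy are hypothetical illustrations. The merge re-sorts the new rows together with the clustered buckets that they overlap.

```python
def key_range(bucket):
    return min(bucket), max(bucket)


def overlap_depth(buckets):
    """Largest number of buckets whose closed [min, max] key ranges cover one key."""
    events = []
    for bucket in buckets:
        lo, hi = key_range(bucket)
        events.append((lo, 0))  # 0 = range opens; sorts before a close at the same key
        events.append((hi, 1))  # 1 = range closes
    depth = deepest = 0
    for _, kind in sorted(events):
        if kind == 0:
            depth += 1
            deepest = max(deepest, depth)
        else:
            depth -= 1
    return deepest


def recluster(clustered, incremental, rows_per_bucket):
    """Merge new rows into the clustered buckets they overlap, re-sort, and re-cut.

    Assumes distinct key values; a real implementation would keep equal keys together.
    """
    new_keys = [k for bucket in incremental for k in bucket]
    lo, hi = min(new_keys), max(new_keys)
    untouched, affected = [], list(new_keys)
    for bucket in clustered:
        b_lo, b_hi = key_range(bucket)
        if b_hi < lo or b_lo > hi:
            untouched.append(bucket)   # outside the new data's key range: left as is
        else:
            affected.extend(bucket)
    affected.sort()
    rebuilt = [affected[i:i + rows_per_bucket] for i in range(0, len(affected), rows_per_bucket)]
    return sorted(untouched + rebuilt, key=lambda bucket: bucket[0])


def maybe_recluster(clustered, incremental, threshold, rows_per_bucket):
    """Background check: recluster only when the overlap depth reaches the threshold."""
    if incremental and overlap_depth(clustered + incremental) >= threshold:
        return recluster(clustered, incremental, rows_per_bucket), []
    return clustered, incremental


clustered = [list(range(0, 20, 2)), list(range(20, 40, 2)), list(range(40, 60, 2))]
incremental = [[45, 3, 27], [25, 41, 7]]        # unsorted, streamed-in rows
print(overlap_depth(clustered + incremental))    # 3
clustered, incremental = maybe_recluster(clustered, incremental, threshold=3, rows_per_bucket=10)
print(overlap_depth(clustered), incremental)     # 1 []
```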

The innovative Append Delta table format delivers outstanding performance in complex business scenarios. This reflects the core value of optimizing data storage formats in big data analysis scenarios. This innovative table format has the following technological benefits and performance optimizations:

1. Data autonomy:

• Achieves a dynamic balance between storage efficiency and query performance by using background data tasks such as merging, compaction, and reclustering tasks.

2. Scalability:

• Supports seamless scaling from terabytes to exabytes of data based on dynamic bucketing and auto-split/merge policies.

3. Real-time clustering:

• Uses incremental reclustering to provide millisecond-level data freshness and accelerate queries at the ODS layer.

| Scenario | Before optimization | After optimization | Improvement |
|---|---|---|---|
| Query latency | It takes 500s to scan tables without clustering performed, and 250 GB of data is scanned. | It takes less than 10s to scan tables with incremental clustering performed, and 3 GB of data is scanned. | The latency is reduced by 98%, and the amount of scanned data is reduced by 99%. |
| Compaction latency | The response time of compaction is within 24 hours. | The response time of compaction is within 10 minutes. | The query efficiency is improved by more than six times. |
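
The percentages in the query latency row follow directly from the reported figures. The calculation takes 10 s as the upper bound of the optimized latency, so the latency reduction is at least 98%:

```latex
\frac{500\,\mathrm{s} - 10\,\mathrm{s}}{500\,\mathrm{s}} = 98\%,
\qquad
\frac{250\,\mathrm{GB} - 3\,\mathrm{GB}}{250\,\mathrm{GB}} = 98.8\% \approx 99\%
```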

Append Delta tables eliminate the fragmentation of MaxCompute features by providing a unified underlying storage format that is much easier to understand and use. This table format inherits the excellent performance of MaxCompute and greatly improves flexibility, timeliness, and the range of supported scenarios. In GoTerra's data migration project, Append Delta tables significantly improve the overall migration efficiency. MaxCompute uses this unified table format to migrate all of GoTerra's data from BigQuery, more than 60 PB across 550,000 tables. This project demonstrates that the architecture design and implementation of Append Delta tables can make MaxCompute competitive with world-leading services such as BigQuery across the board.

The successful implementation of Append Delta tables marks a major breakthrough in the storage architecture of MaxCompute. It also reflects the evolution of cloud-native data platforms towards larger scale, real-time performance, and intelligence. Append Delta tables keep the high-throughput batch processing capabilities of MaxCompute while improving the timeliness of traditional data warehouses. This table format lets GoTerra seamlessly carry over its historical batch processing jobs while meeting emerging real-time analysis requirements after the migration. For example, it reduces the processing latency of behavioral logs from minutes to seconds. This supports GoTerra in making real-time decisions in scenarios such as dynamic pricing and risk control model iteration in the Southeast Asian market.

From a broader perspective, the innovative Append Delta table format reflects the forward-looking layout of a data + AI integration architecture on Alibaba Cloud. The underlying column-oriented storage and vectorized engine provide a natural data acceleration path for feature engineering in machine learning. Alibaba Cloud will continue to develop the near real-time data processing and multimodal data storage capabilities. The unified storage capability of multimodal data, such as text, images, and time series data, lays a foundation for enterprises to build cross-modal analysis pipelines. The massive data migration project of GoTerra is a key step for Chinese enterprises to move towards global data governance standards. It shows that China's self-developed data infrastructure has complete capabilities to support the complex business of cross-border enterprises. In the future, MaxCompute will deeply integrate Append Delta tables with its native real-time computing components, and provide the new Delta Live materialized view (MV) capability. MaxCompute will further unlock the values of data assets throughout their lifecycle and provide a Chinese solution for the data revolution in the cloud-native era.
