By Alibaba Cloud MaxCompute Team

This series details the migration journey of a leading Southeast Asian technology group from Google BigQuery to MaxCompute, highlighting key challenges and technical innovations. This third installment analyzes the performance optimization technologies that MaxCompute uses to support this cross-border data warehouse migration.

Note: The customer is a leading Southeast Asian technology group, referred to as GoTerra in this article.

As a core participant in the digital economy of Southeast Asia, GoTerra requires its data architecture to support transactions, high-concurrency risk control, and cross-region logistics scheduling for hundreds of millions of users. Although the original BigQuery data warehouse has powerful analysis capabilities, the costs prominently increase as the business scale exceeds specific thresholds. To migrate its data warehouse from BigQuery to MaxCompute, GoTerra expects to reduce costs and improve efficiency by remodeling the underlying architecture and optimizing performance. After technical issues such as SQL syntax conversion and storage formats are resolved, performance optimization has become a critical bottleneck of the migration project and presents three core challenges:

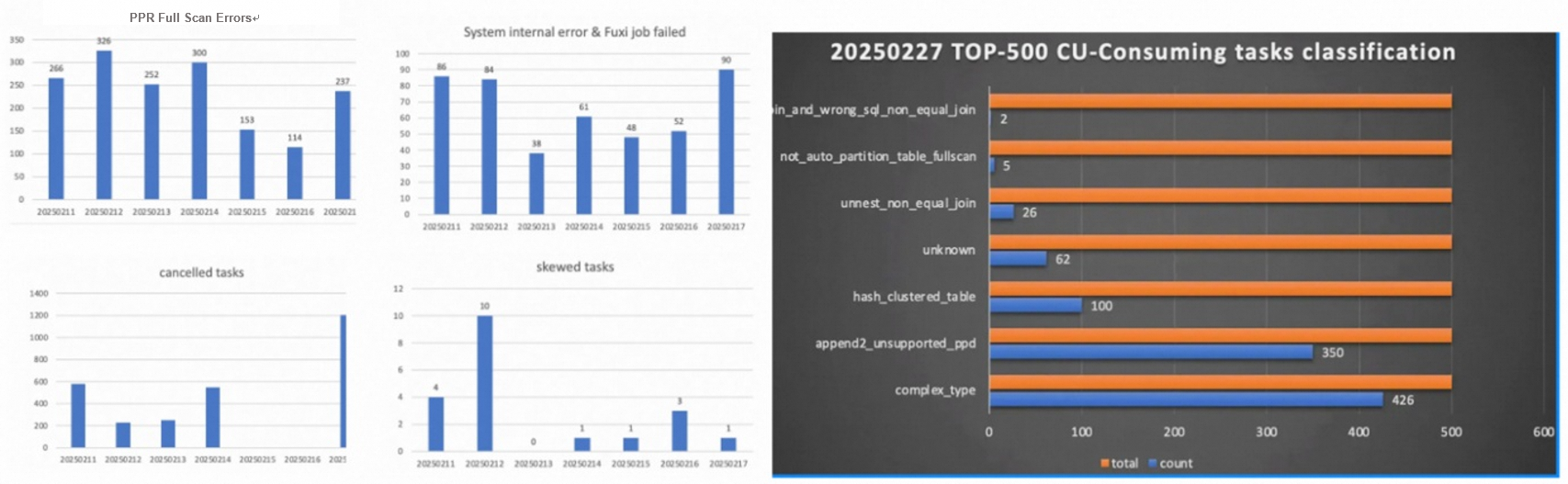

In response to the preceding challenges, the migration team abandons the traditional extensive optimization strategy and establishes a data-driven, hierarchical governance-based optimization framework. The core logic is to invest limited resources in critical bottlenecks by using intelligent classification. Based on the Pareto principle, 80% of issues or resources are generated or consumed by 20% of queries, which are high-frequency or inefficient queries. To accurately identify issues, an automated classification tool is used to classify queries into the following types:

In addition, the following methods are used:

The following figure shows an example of query classification in the initial stage of performance optimization. Partition pruning, complex data types, Append 2.0, and UNNEST are the major issues that need to be resolved.

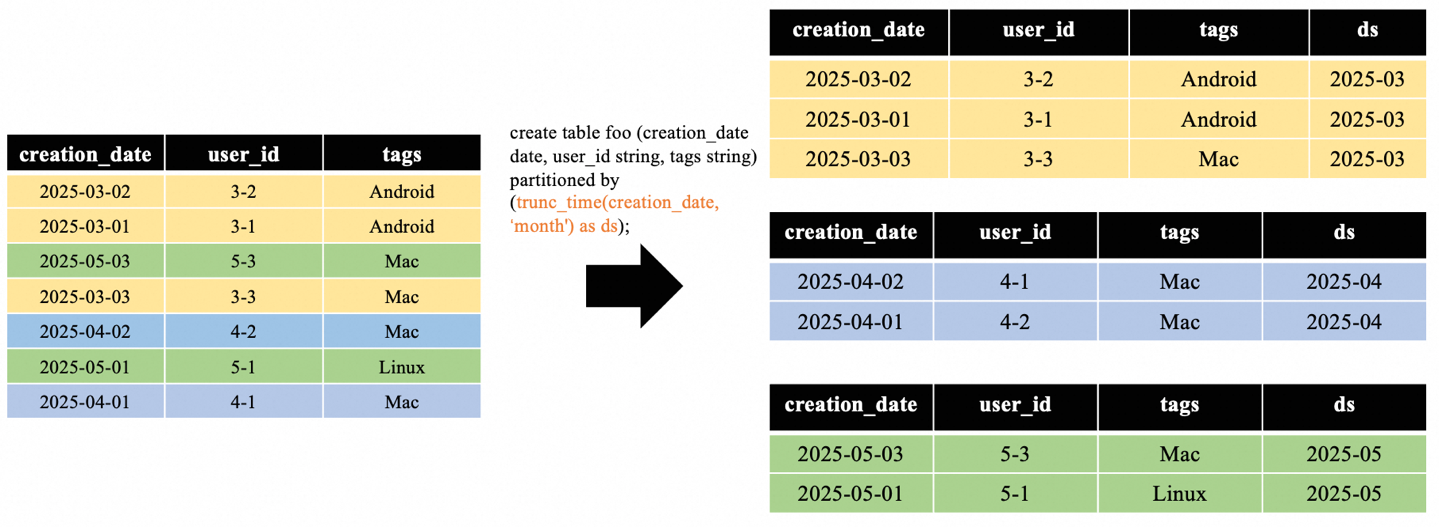

MaxCompute uses auto-partitioned tables to implement a feature similar to the time-unit column partitioning feature of BigQuery. Compared with traditional static partitioned tables, auto-partitioned tables dynamically generate partition key columns by using the trunc_time function, as shown in the following figure.

The automatic partitioning feature effectively improves the flexibility of partition management. However, the partition pruning process of static partitioned tables cannot be directly reused because partition key columns cannot be directly mapped to query conditions. This makes partition pruning ineffective and causes two core issues:

To support the partition pruning feature of auto-partitioned tables, MaxCompute designs a solution to perform dynamic partition pruning based on expressions. The purpose is to minimize the amount of scanned data while ensuring the correctness of query semantics. For example, execute the following statements to create an auto-partitioned table and query data from the table:

create table auto_partition_table

(

key string,

value bigint,

ts timestamp

)

partitioned by trunc_time(ts, 'day') as pt

;

SELECT * FROM auto_partition_table

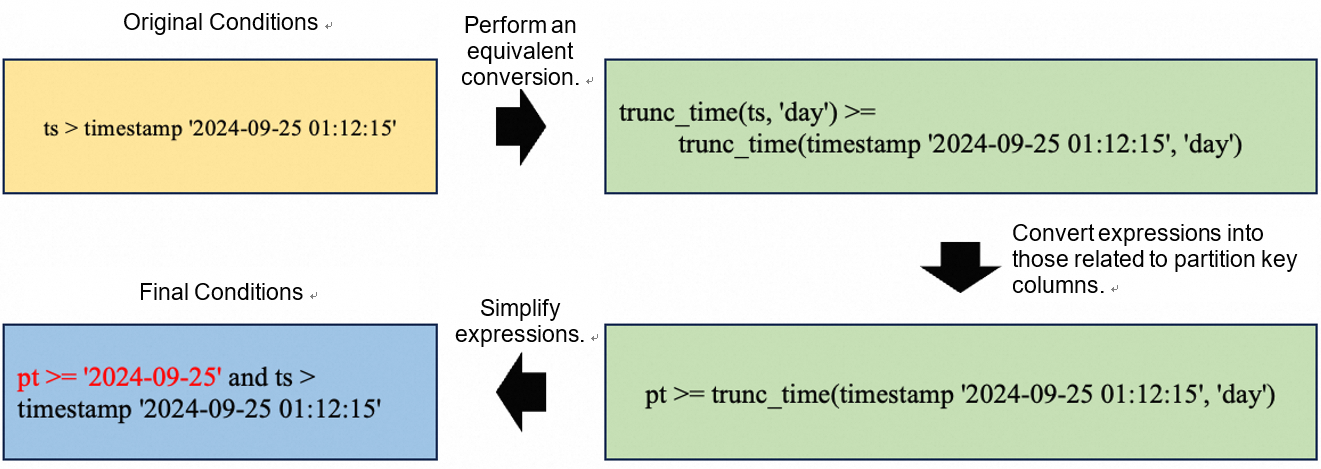

WHERE ts > timestamp'2024-09-25 01:12:15';The essence of partition pruning based on the filter conditions of the ts column is to convert the expressions related to the ts column into the expressions related to the pt column. Both equivalent conversion and non-equivalent conversion are supported, but the filtering results before and after conversion must have an "inclusive" relationship. The following three scenarios are supported:

If the filter conditions of the ts column do not include functions, expressions can be directly converted. However, the filter ranges of expressions before and after conversion are not equivalent. Therefore, the original expressions must be retained. The following figure shows the derivation process.

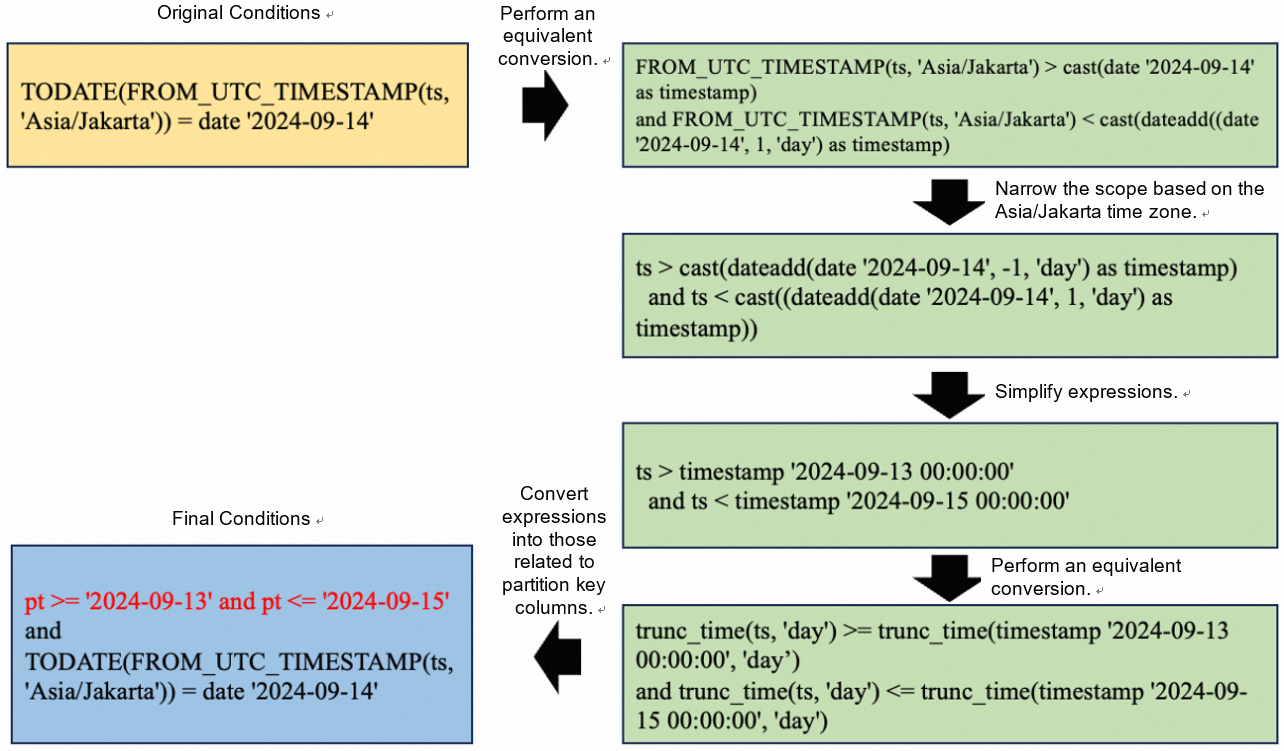

If the filter conditions of the ts column include functions such as time zone conversion or date format conversion, expressions cannot be directly converted. In this case, built-in functions are used to fold the functions in expressions. For example, execute the following statement to query data:

select * from auto_partition_table

where TODATE(FROM_UTC_TIMESTAMP(ts, 'Asia/Jakarta')) = date '2024-09-14'The following figure shows the derivation process during partition pruning.

In this case, the results of the original filter conditions are a subset of the results of partition pruning. Therefore, the derivation process applies only to partition pruning, and the original filter conditions are retained.

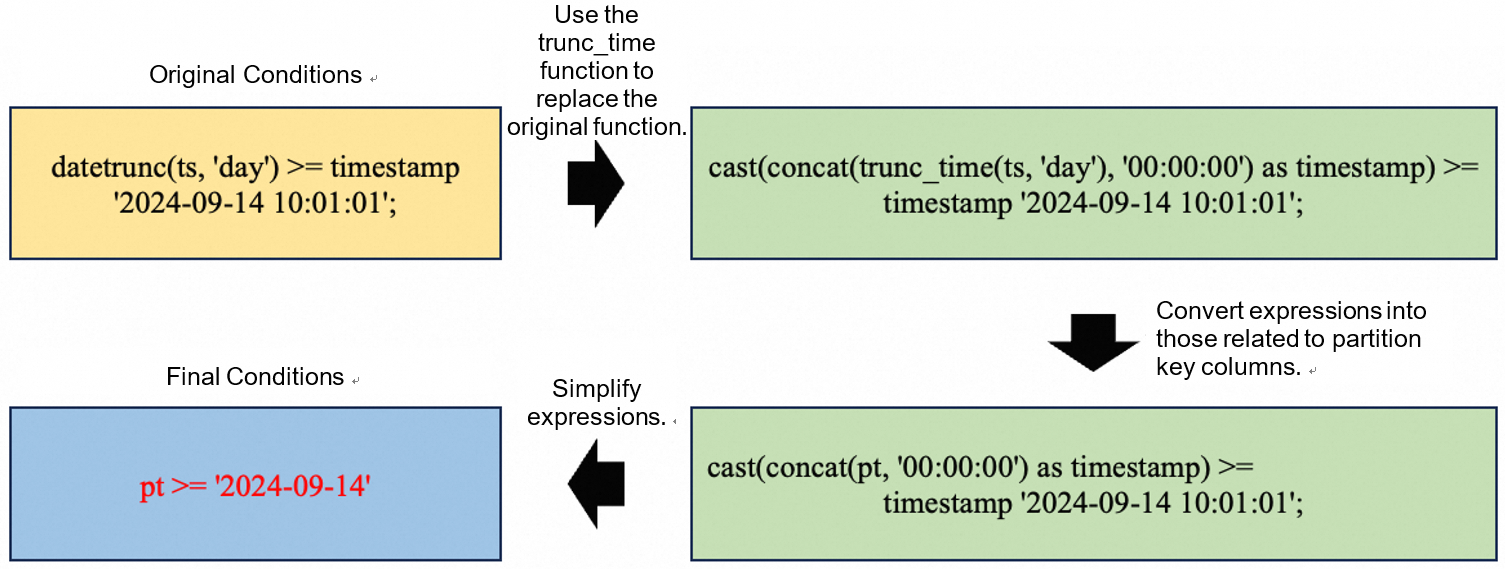

If the functions in the filter conditions of the ts column are semantically equivalent to the functions that define the partition key, such as datetrunc(ts, 'day') and trunc_time(ts, 'day'), expressions can be equivalently converted. In this case, built-in functions are also used.

select * from auto_partition_table

where datetrunc(ts, 'day') >= timestamp '2024-09-14 10:01:01';In the preceding query statement, datetrunc(ts, 'day') is semantically equivalent to trunc_time(ts, 'day'). The following figure shows the derivation process.

In this case, the original expression is converted into an equivalent expression. Therefore, after partition pruning is complete, the original filter conditions are not retained.

MaxCompute uses the expression-based dynamic partition pruning solution to achieve the high-performance and cost-effective data processing capabilities of static partitioned tables, and also ensures the flexibility of partition management.

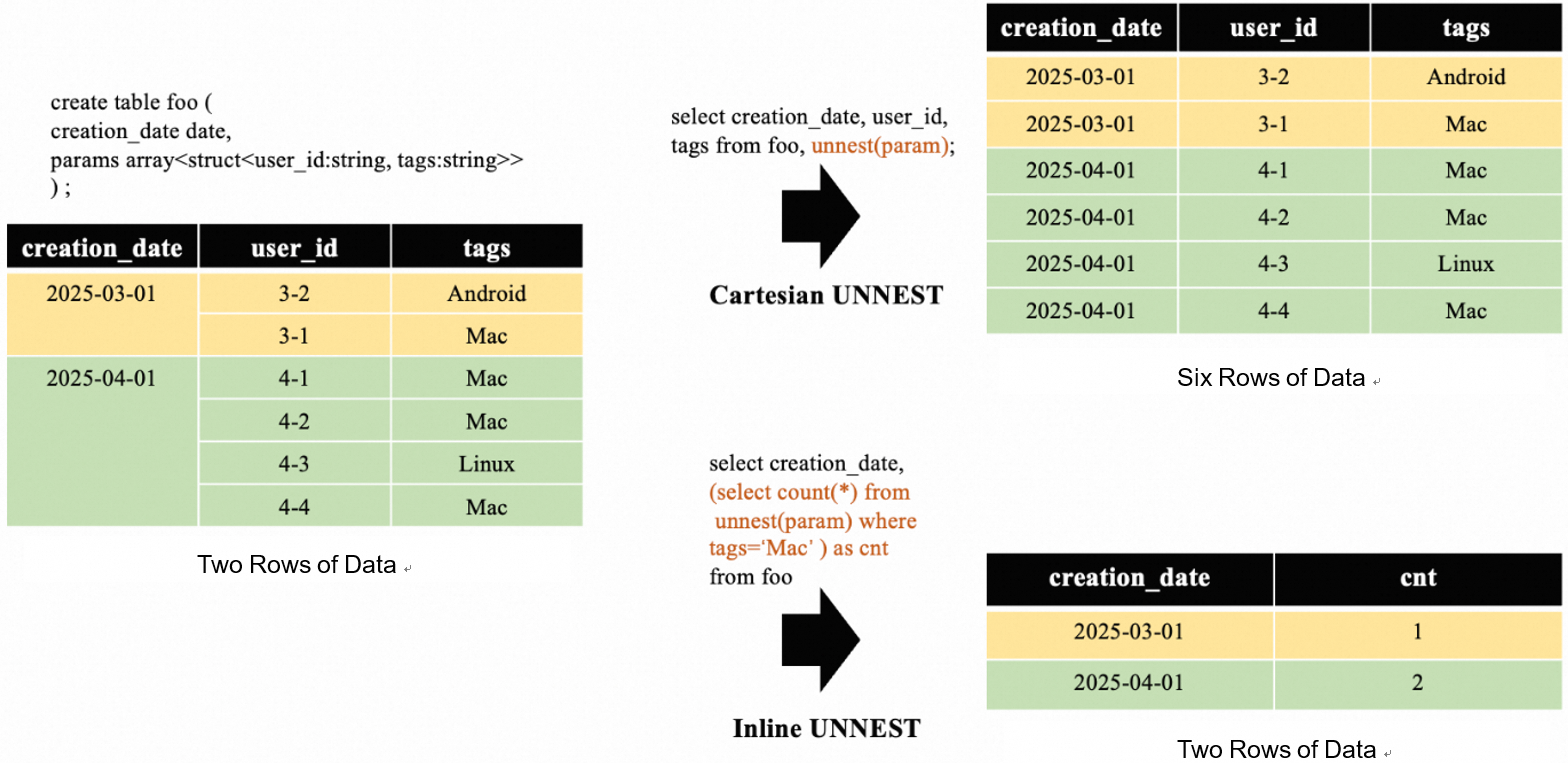

In Google BigQuery, UNNEST is a core operation used to process arrays. It is used to display the elements of a nested array in multiple rows, as show in the following figure.

GoTerra extensively uses UNNEST in queries. After these queries are migrated to MaxCompute, MaxCompute simulates the feature of UNNEST by using LATERAL VIEW + EXPLODE or CROSS JOIN. This causes the following performance issues:

The following statements provide a simplified example:

create table foo (

creation_date date,

params array<struct<user_id:string, tags:string>>

) ;

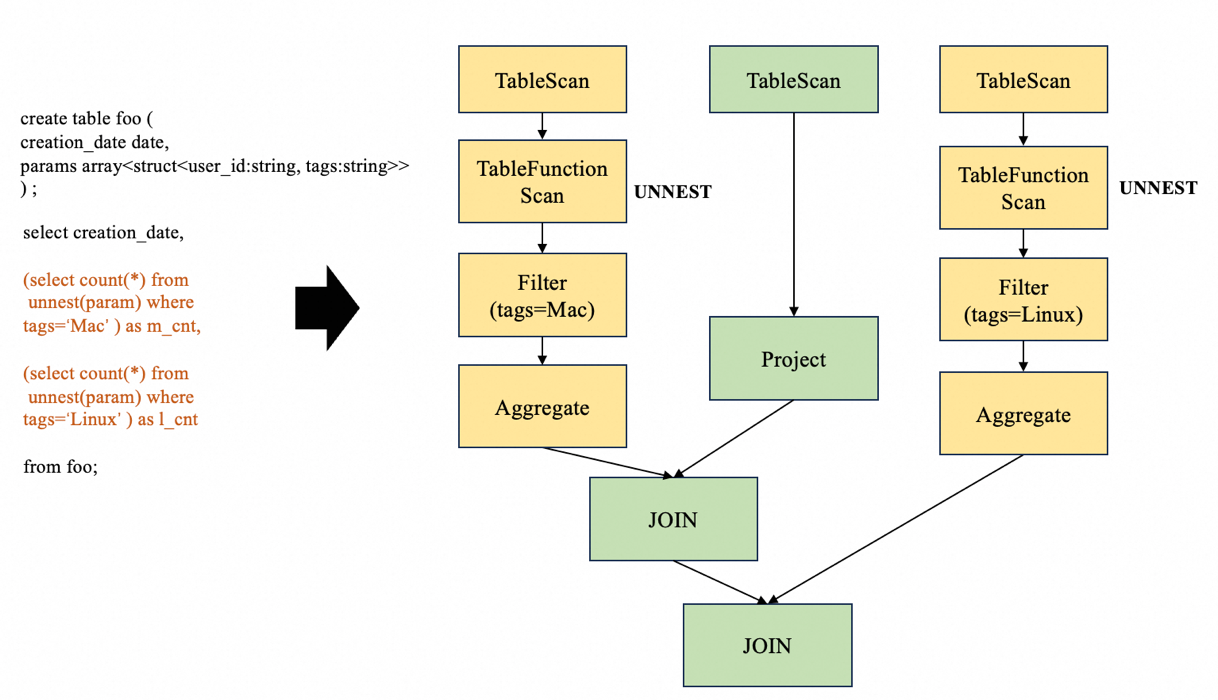

select creation_date,

(select count(*) from

unnest(param) where tags='Mac' ) as m_cnt,

(select count(*) from

unnest(param) where tags='Linux' ) as l_cnt

from foo;The following figure shows the execution plan.

As shown in the preceding figure, the execution plan has the following issues:

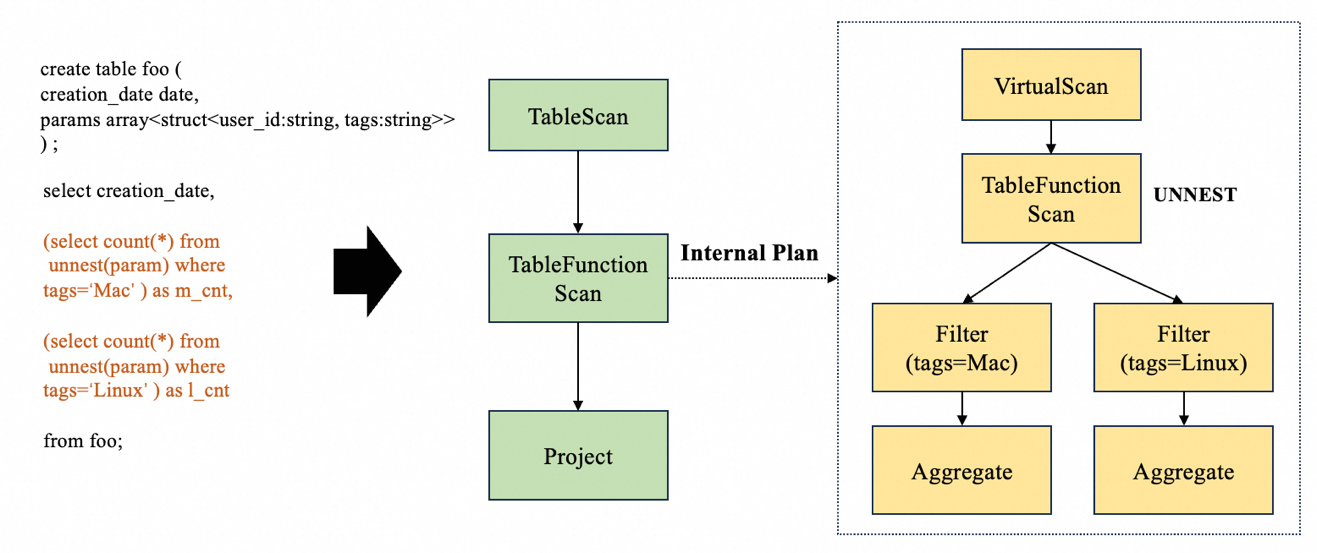

To resolve the preceding issues, MaxCompute redesigns the execution framework of UNNEST and uses a general-purpose framework to run sub-plans by using the APPLY operator. This framework provides common subquery execution capabilities and higher extensibility. The following figure shows the optimized execution plan for UNNEST in the new architecture.

The optimization process consists of the following items:

The optimizer performs further optimizations based on the following items:

After the execution framework of UNNEST is reconstructed and upgraded, MaxCompute improves the performance and stability of UNNEST: The performance is improved by up to 10 times. Out-of-memory (OOM) errors can be prevented in scenarios where a large number of UNNEST operations are performed, which lays a solid foundation for complex data analysis scenarios.

GoTerra uses a large number of ultra-large queries. The execution plans of these ultra-large queries are much larger than those of regular large queries. The former usually contains a large number of operators, deeply nested subqueries, and complex data types such as thousands of columns in a single STRUCT column.

| Number of data types processed in a step | Number of relational operators of an execution plan | Nesting depth of subqueries | Number of relational operators after an execution plan is expanded | |

|---|---|---|---|---|

| Large query | < 1,024 | < 10,000 | < 50 | < 100,000 |

| Ultra-large query | > 5,000 | > 100,000 | > 100 | > 100,000,000 |

When the optimizer processes the execution plans of ultra-large queries, issues such as high memory usage and slow graph traversal may occur.

Each execution plan is a directed acyclic graph (DAG) composed of relational operators. The traversal direction of the graph is from the output to the input. The optimizer matches the operator modes in the graph and replaces the old operator structure with the new operator structure.

| Technical point | Before optimization | After optimization |

|---|---|---|

| Graph reuse among multiple stages | A graph is built separately in multiple optimization stages, which is costly. | Only one graph is built and shared among multiple optimization stages. |

| Bidirectional graph structure | The traversal direction from the input has O(n) complexity. | Bidirectional traversal has O(1) complexity. |

| Graph garbage cleaning | Subgraphs that need to be deleted after replacement are still scanned and computed, with O(n) complexity. | Unreferenced subgraphs are deleted, with O(1) complexity. |

| Traversal iterator for large graphs | Iterator construction requires the pre-traversal of child nodes, with O(n) complexity. | Iterator construction does not require pre-traversal, with O(1) complexity. |

| Traversal after deduplication | A single node is traversed multiple times in scenarios where subqueries are deeply nested. | Traversal after deduplication eliminates amplification effects. |

A digest expresses the complete information about any part of a relational operator. The optimizer identifies a relational operator based on the digest. Each time a relational operator is generated, a relational operator digest must be constructed, including a type information digest and a scalar function digest.

MaxCompute supports the caching and partial reuse of these three types of digests. In most cases, execution plans contain a large number of similar information structures. During optimization, complexity is calculated based on the hash calculation and equality comparison of related objects, and the required memory space is also reduced.

In this example, a type information digest is used.

| Memory space | Nested type information is globally registered and reused. For multiple similar structures, only distinct parts are stored for future reference. |

|---|---|

| Equality comparison | Only references are compared for equality, with O(1) complexity. |

| Hash calculation | The hash value of each layer of a nested type is calculated and cached for future use, with O(1) complexity. |

Based on the optimization of ultra-large queries, MaxCompute breaks through the bottlenecks of the optimizer. It supports the efficient processing of execution plans that contain deeply nested subqueries, complex data types, and millions of operators. In typical large queries, the duration is reduced from more than 15 minutes to 1 minute, and the maximum memory usage is reduced from more than 5 GB to 1 GB.

Big data computing scenarios may contain issues such as the lack of statistics, a large number of user-defined function (UDF) optimization black boxes, and complex jobs that involve thousands of lines of SQL code. It is difficult for the optimizer to generate an optimal execution plan based on an accurate cardinality or make an ideal resource allocation plan for each stage in advance.

As a result, the system misses lots of optimization opportunities. In addition, important jobs must be manually optimized, such as adjusting stage parallelism and adding tuning flags. Manual optimization is time-consuming and labor-intensive. Adjustment is also required if the data and jobs change.

Intelligent tuning resolves the preceding pain points. Recurring jobs with similar computing logic can make full use of historical execution information to guide future execution. This enables automatic system optimization and improves overall job performance.

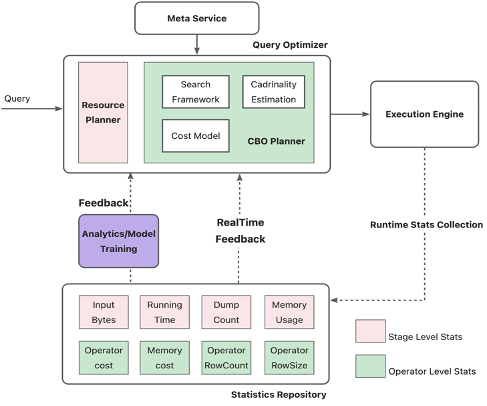

MaxCompute collects various job statistics. After real-time feedback or offline training and analysis, the optimizer can learn the historical execution status of jobs and more intelligently understand the data and jobs. The optimizer injects historical statistics into the cost-based optimizer (CBO) framework to generate a globally optimal execution plan. Also, the optimizer intelligently adjusts stage parallelism based on the calculation amount and running speed of each stage to reduce the consumption of worker resources, and automatically accelerates the stages with low parallelism.

Architecture

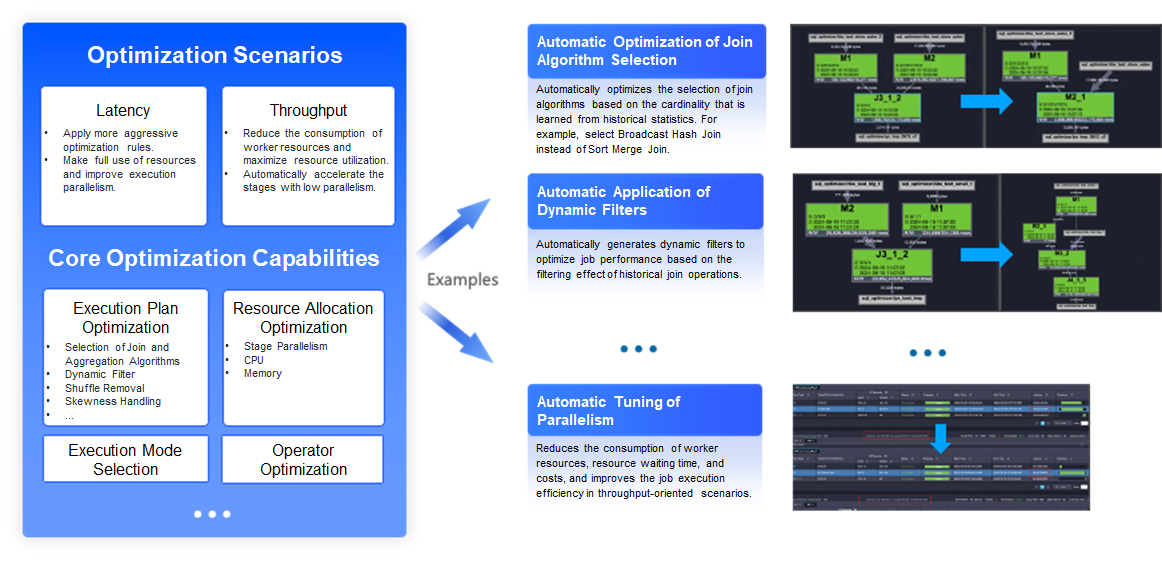

The following figure shows the optimization capabilities of intelligent tuning, including the optimization of execution plans, resource allocation, execution mode selection, and runtime operator execution. Intelligent tuning has framework-based optimization and extension capabilities. It can automatically use the CBO framework to apply some optimization rules, and also continuously optimize all aspects of job operations.

As the second growth curve of performance optimization, intelligent tuning greatly reduces manual job optimization and enhances automatic optimization. By using intelligent tuning, GoTerra optimizes resource allocation to save up to 87% of resources. GoTerra also uses more intelligent execution mode selection to prevent online jobs from rolling back to offline jobs, accelerating job execution by up to 45%. The optimization capabilities of intelligent tuning will be continuously extended to provide better engine performance and ease of use.

For MaxCompute, the data migration project of GoTerra is not only a benchmark practice for a leading Asian-Pacific customer, but also a performance limit test under ultra-large loads. During the four-month performance test, MaxCompute makes paradigm-level breakthroughs in resource efficiency and computing performance based on kernel-level reconstruction and upgrades.

1. Resource utilization

2. Technologies

In the data migration project of GoTerra, MaxCompute continuously optimizes performance by stage and scenario to effectively improve query performance in BI and ETL scenarios, and successfully achieve migration goals. In the future, MaxCompute will take into account the business requirements of GoTerra and technology development trends in the industry, and continue to improve efficiency, cost-effectiveness, and stability.

Faster: continuous unlocking of performance potential to achieve ultimate optimization

More cost-effective: fine-grained resource management and cost optimization

More stable: future-oriented high availability and observability

The cross-border data warehouse migration project of GoTerra not only sets a technological benchmark for the upgrade and performance optimization of data platforms in the industry, but also lays a solid foundation for MaxCompute to deliver world-class performance. Throughout the project, the cross-border migration team has accumulated performance optimization methodologies, automated toolchains, and general practical experience for large-scale and complex scenarios. This provides a mature template and reusable experience for the subsequent migration of cross-region, ultra-large data warehouse systems. Looking to the future, MaxCompute will stick to performance-driven technological innovations. On the one hand, MaxCompute will focus on AI-driven intelligent optimization and automated O&M, continuously improve the adaptive resource scheduling, performance monitoring, and exception self-healing capabilities, and further improve the development and O&M efficiency. On the other hand, MaxCompute will actively explore new directions such as the integration of data lakes and data warehouses, native geospatial data types and unstructured data types, and incremental computing to continuously expand the boundaries of performance optimization. Based on continuous technological evolution, MaxCompute will provide stronger performance assurance and higher O&M efficiency for enterprises in large-scale data scenarios, accelerate the unlocking of business value, and help customers cope with more complex big data challenges in the future.

1,313 posts | 463 followers

FollowAlibaba Cloud Community - October 17, 2025

Alibaba Cloud Community - October 20, 2025

Alibaba Cloud Community - October 17, 2025

Apache Flink Community - May 30, 2025

Hologres - May 31, 2022

Apache Flink Community - August 14, 2025

1,313 posts | 463 followers

Follow Cloud Migration Solution

Cloud Migration Solution

Secure and easy solutions for moving you workloads to the cloud

Learn More Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn MoreMore Posts by Alibaba Cloud Community