By Rohit Kumar, Alibaba Cloud Solutions Architect

Alibaba Cloud provides Function Compute as a service on its platform which can be used for event-driven computing. This is a fully-managed service and helps you quickly build any type of event-driven application or service without the headache of server management and operations. Function Compute runs your code on your behalf based on the event setup and you only pay for the resources consumed while running the code.

Table Store is Alibaba Cloud's NoSQL database, which we will use to store the object name and its metadata.

Here we will use Object Storage Service (OSS) to upload new media files. For each upload, we want to add a row to a Table Store table, keyed by the object name, that holds the file's metadata for further processing.

Mediainfo is a free, open-source program that extracts technical information (metadata) and tag information from many audio and video files. By using Mediainfo, an organisation can gather insight on each file from its own metadata. This data can then inform upstream processes about what to do with the file and how it should be processed.

In the media industry, audio/video metadata helps drive decisions in the upstream steps of the media workflow. It also helps with indexing and searching a large database so that files can be retrieved quickly. What does this metadata include? A video file's metadata can include the file format, bitrate, frame rate, file size, width, height, aspect ratio, video and audio codecs, and a variety of other technical information about the file. Once you have the file and its metadata, you can use this information to transcode the file, fix its aspect ratio, and so on, to make it available on the different types of devices your viewers use.

Gathering this metadata by hand increases the workload for media asset companies that maintain and manage these assets. To solve this problem, we can automate the process using OSS triggers and Function Compute. However, if the files are stored in OSS and are large, extracting the metadata would normally mean downloading each file in full, and downloading large files can become a problem even though a Function Compute function can run for up to 600 seconds.

To automate the process with only FC and OSS, we can use Mediainfo, which was created for exactly this purpose: extracting technical metadata from media files. Mediainfo works well with FC because it is available as a DLL (Dynamic Link Library) for easy integration with other programs. It can also be built as a single executable file, so it is easy to compile it with all its dependencies on a separate machine and then use it with FC. Mediainfo is available in CLI and GUI forms on different operating systems. Given just a URL, Mediainfo can extract the metadata without downloading the full file. It downloads only small parts of the file, which can be processed in the function's memory, saving the download time and space the function would otherwise need to extract the full metadata.
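As a minimal sketch of the URL-based extraction described above, the snippet below builds the Mediainfo command line and wraps the call in a helper. The `--full` and `--output=XML` flags are the ones used by the function code in this article; the helper names, the `./mediainfo` default path, and the example URL are assumptions made for illustration only.

```python
import subprocess


def build_mediainfo_command(url, executable="./mediainfo"):
    """Return the argument list for a full XML report on a remote file."""
    # --full and --output=XML are the flags used by the function code in this article.
    return [executable, "--full", "--output=XML", url]


def extract_metadata(url, executable="./mediainfo"):
    """Run Mediainfo against a URL and return its raw output."""
    # Raises subprocess.CalledProcessError if Mediainfo exits with a non-zero status.
    return subprocess.check_output(build_mediainfo_command(url, executable))


# Hypothetical URL, used only to show the resulting command line.
command = build_mediainfo_command("https://example.com/video.mp4")
print(" ".join(command))
```

Only `build_mediainfo_command` runs here; `extract_metadata` needs a Mediainfo executable built with libcurl support, as described later in the article.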

When a customer uploads a media file to OSS, the upload raises an OSS event. This event can trigger a Function Compute function, which calls the Mediainfo executable to extract the metadata from the file on OSS. Once the metadata is extracted, the function writes it as a new row to an existing table in Table Store.

In this section, I will describe how OSS, FC with Mediainfo, and Table Store can be used together to automatically extract and store the metadata of files uploaded to OSS.

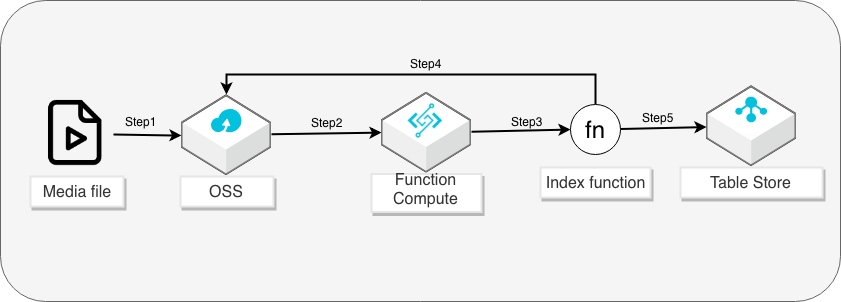

Here are the workflow steps:

Step 1: User uploads a media file to an OSS bucket

Step 2: OSS triggers an event which in turn triggers Function Compute

Step 3: Function Compute executes the index function.

Step 4: The FC index function calls an OSS API to generate a signed URL for the uploaded object and passes it to the Mediainfo executable, which downloads only the necessary parts of the object from OSS and extracts the metadata

Step 5: The FC index function then stores the technical metadata in Table Store in XML format
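The data flow of steps 4 and 5 can be sketched with plain Python callables. This is an illustrative outline rather than the actual function code: `process_upload` and its three parameters are hypothetical names, and the lambdas are stand-ins for the OSS, Mediainfo, and Table Store calls so the flow can be exercised locally.

```python
def process_upload(object_key, sign_url, run_mediainfo, store_row):
    """Run steps 4 and 5 for one uploaded object and return its metadata."""
    signed_url = sign_url(object_key)      # Step 4: signed URL for the object
    metadata = run_mediainfo(signed_url)   # Step 4: Mediainfo reads via the URL
    store_row(object_key, metadata)        # Step 5: row keyed by the object name
    return metadata


# Stand-ins so the flow can be exercised without any cloud access.
table = {}
result = process_upload(
    "videos/sample.mp4",
    sign_url=lambda key: "https://example.com/" + key + "?signature=placeholder",
    run_mediainfo=lambda url: "<MediaInfo/>",
    store_row=lambda key, metadata: table.update({key: metadata}),
)
print(table)
```

Passing the three operations in as parameters keeps the orchestration testable on its own; the real handler later in the article performs the same sequence inline.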

Here is the architecture diagram to explain the scenario.

In this example implementation, I am going to use a test bucket in the Frankfurt region to upload the media files, create a Mediainfo executable, create a Table Store instance and table, and create a Function Compute function that is executed by an OSS trigger on object upload and then extracts the file metadata and puts it into the table.

Next, we need to create a Mediainfo executable to be called by the Function Compute function. As the Mediainfo executable is a third-party program, we need to place this dependency in the folder where we define our function and upload it to Function Compute together with the code. It is important to note that executable files compiled from C, C++, or Go code must be compatible with the runtime environment of Function Compute. As we are going to write our function in Python, we need to match the Python runtime environment supported in Function Compute - Linux kernel version: Linux 4.4.24-2.al7.x86_64. This kernel is available as part of the Aliyun Linux public image in the console.

1. First, we need to create an ECS instance, using Aliyun Linux 17.1 64-bit public image (alinux_17_01_64_20G_cloudinit_20171222.vhd).

You can find the latest image information in the 'install third-party dependency' section of Function Compute. We use this instance to download and compile Mediainfo. A low-configuration general purpose instance (e.g. ecs.t5-lc1m2.large) is sufficient for this purpose and can be terminated once the Mediainfo executable has been transferred to your local machine.

Once the ECS instance is created and you have logged in over SSH, use the following commands to download and compile Mediainfo.

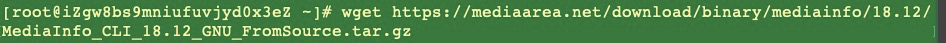

2. Download the Mediainfo file. Link to the latest binary can be taken from this page.

3. Untar the Mediainfo download file and cd into the directory

4. Install 'Development Tools' and dependencies needed to compile Mediainfo. Libcurl is required to support URL input with Mediainfo executable, which would be used inside the FC function to pass the OSS object URL.

5. Compile Mediainfo with libcurl

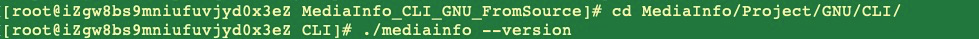

6. Once the build completes, run the following command to confirm that Mediainfo was built successfully.

If you get the following message on the CLI, then it means your independent static Mediainfo executable is ready to be copied to your local machine and used with FC function.

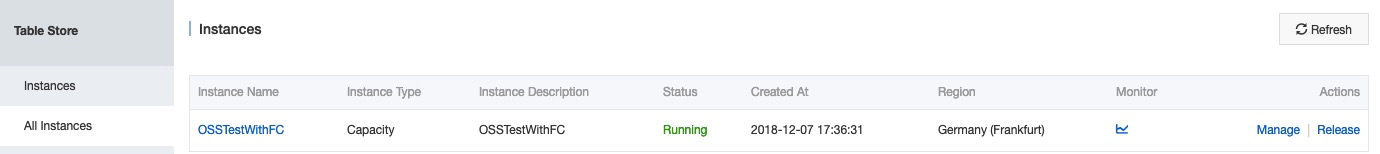

1. First, create a Table Store instance. I have named it OSSTestwithFC. Here is how it should look in your console.

2. Next, create a table in the Table Store instance. I have named it Meta_table_new. Note the Table Store instance name and the table name, as we will use them in the next step when creating the function.

1. Go to the FC console and create a service. You can skip the advanced configuration for this service.

2. Next, click on the service name and create a function. For our case, you can select the language as Python 2.7 and an Empty Function template.

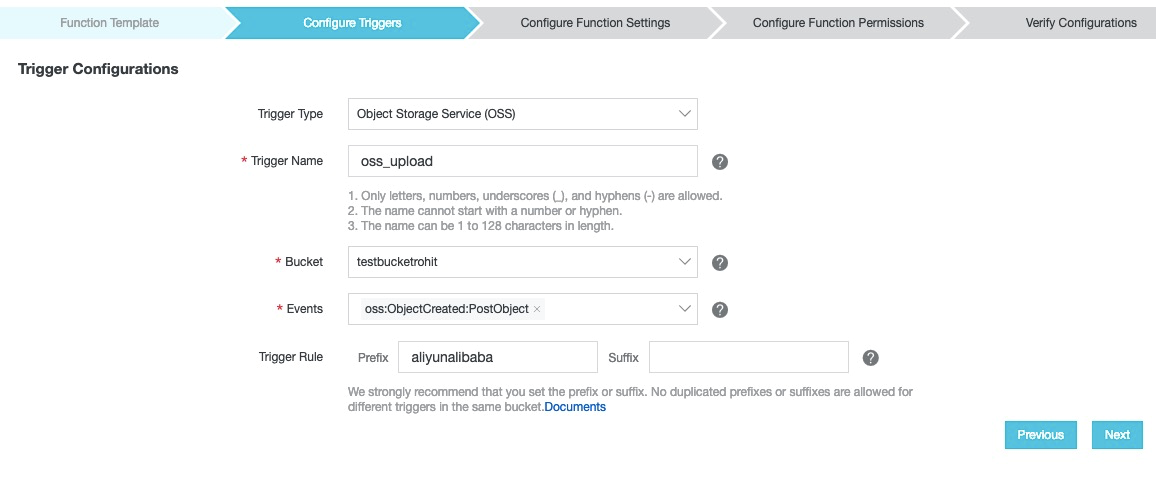

3. On the Configure Triggers page, under Trigger Configurations do the following steps:

i) In the 'Trigger Type', select Object Storage Service

ii) Enter a 'Trigger Name' of your choice

iii) Select the bucket we created in Step 1.

iv) In the Events, select 'oss:ObjectCreated:PostObject'

v) For Trigger Rule, you can just name it anything you choose and then click next.

Once done, it should look like this:

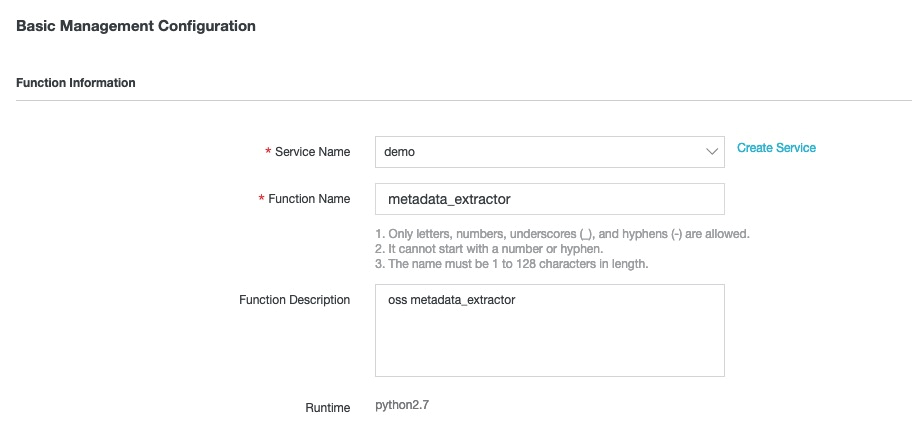

4. On the 'Configure Function Settings' page, under Function Information, select the service created in the first step (selected by default), give this function a name and description and click next. No need to change anything in other configuration parts as we would be writing function code in index.py file separately and adding to this page later. Under Runtime Environment, you'd see Function Handler as index.handler which basically tells you that index.py file contains a method called "handler". So, if you change the handler name in index.py file, you'd need to edit function handler name as well.

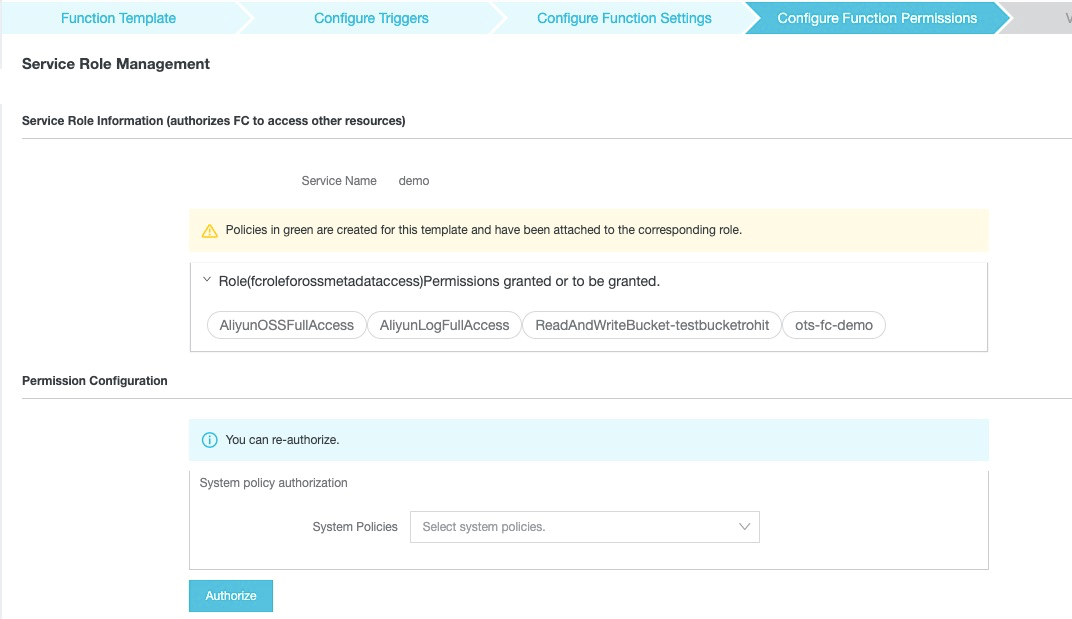

5. Next, on the 'Configure Function Permissions' page, under 'Service Role Management', add policies to the service RAM role. Here I have assigned three policies to the role, for accessing the OSS bucket, the Table Store table, and Log Service. These can be selected from the system policy drop-down menu under 'Permission configuration' or assigned to the RAM role in the RAM console.

i) ReadAndWriteBucket-'yourbucket': This policy allows the service (function) to access the OSS bucket. Please replace 'yourbucket' with the name of your bucket.

    {
      "Version": "1",
      "Statement": [
        {
          "Action": [
            "oss:GetObject",
            "oss:PutObject"
          ],
          "Resource": [
            "acs:oss:*:*:'yourbucket'/*"
          ],
          "Effect": "Allow"
        }
      ]
    }

ii) ots-fc-demo: This policy allows the service (function) to access the Table Store table. To use this policy, please edit it with the name of your Table Store instance.

    {
      "Version": "1",
      "Statement": [
        {
          "Action": [
            "ots:PutRow",
            "ots:GetRow"
          ],
          "Resource": [
            "acs:ots:*:*:instance/'YourTableStoreInstance'/table/*"
          ],
          "Effect": "Allow"
        }
      ]
    }

iii) AliyunLogFullAccess: You can select this system policy in the 'Permission Configuration' drop-down menu to allow FC to write logs to your Log Service project.

On the same page, under 'Invocation Role Management', you can assign a role that authorizes OSS to invoke Function Compute when an event occurs.

    {
      "Version": "1",
      "Statement": [
        {
          "Action": [
            "fc:InvokeFunction"
          ],
          "Resource": "acs:fc:*:*:services/demo/functions/*",
          "Effect": "Allow"
        }
      ]
    }

6. Click next to go to 'Verify Configurations', then click create to create the function.
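If you prefer not to edit the placeholders in the policy documents by hand, they can be generated from your own names. The following is only a sketch: the actions and resource patterns are copied from the policies above, while the helper names and the example bucket and instance names are hypothetical.

```python
import json


def bucket_policy(bucket_name):
    """Build the OSS read/write policy for one bucket."""
    return {
        "Version": "1",
        "Statement": [
            {
                "Action": ["oss:GetObject", "oss:PutObject"],
                "Resource": ["acs:oss:*:*:%s/*" % bucket_name],
                "Effect": "Allow",
            }
        ],
    }


def tablestore_policy(instance_name):
    """Build the Table Store row read/write policy for one instance."""
    return {
        "Version": "1",
        "Statement": [
            {
                "Action": ["ots:PutRow", "ots:GetRow"],
                "Resource": ["acs:ots:*:*:instance/%s/table/*" % instance_name],
                "Effect": "Allow",
            }
        ],
    }


# Hypothetical names; replace them with your own bucket and instance.
print(json.dumps(bucket_policy("my-media-bucket"), indent=2))
print(json.dumps(tablestore_policy("my-instance"), indent=2))
```

The printed JSON can be pasted into the policy editor of the RAM console.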

Now that we have everything else ready, we can write our function code and then test it. Here is the function code.

    import oss2
    import logging
    import json
    import subprocess
    from tablestore import OTSClient, Row, Condition, RowExistenceExpectation

    TS_INSTANCE_NAME = 'Your_Instance_Name'
    TABLE_NAME = 'Your_table_name'

    def my_handler(event, context):
        # event is json string, parse it
        evt = json.loads(event)
        logger = logging.getLogger()
        endpoint = 'oss-eu-central-1.aliyuncs.com'
        creds = context.credentials
        auth = oss2.StsAuth(creds.accessKeyId, creds.accessKeySecret, creds.securityToken)
        bucket = oss2.Bucket(auth, endpoint, evt['events'][0]['oss']['bucket']['name'])

        # get object key from event when post object
        object_name = evt['events'][0]['oss']['object']['key']

        # get signed url of the object (valid for 60 seconds)
        signed_url = bucket.sign_url('GET', object_name, 60)

        # get the output of mediainfo by passing it signed url
        # and store the output in meta_data_output directly using check_output.
        # output meta_data_output would be returned in xml format
        meta_data_output = subprocess.check_output(["./mediainfo", "--full", "--output=XML", signed_url])
        logger.info("Signed URL: %s", signed_url)
        logger.info("Output: {}".format(meta_data_output))

        # Table Store endpoint and client for access
        otsendpoint = 'http://' + TS_INSTANCE_NAME + '.eu-central-1.ots.aliyuncs.com'
        client = OTSClient(
            otsendpoint, creds.access_key_id, creds.access_key_secret, TS_INSTANCE_NAME,
            sts_token=creds.security_token)

        # put a row
        primary_key = [('ObjectKey', object_name)]
        attr_columns = [('Meta_data', meta_data_output)]
        row = Row(primary_key, attr_columns)
        condition = Condition(RowExistenceExpectation.IGNORE)
        consumed, return_row = client.put_row(TABLE_NAME, row, condition)

        # get the row
        consumed, return_row, next_token = client.get_row(TABLE_NAME, primary_key)
        return {
            'primary_key': return_row.primary_key,
            'attr_columns': return_row.attribute_columns,
        }

The function has a handler named my_handler, which takes event and context as parameters. Because the name is 'my_handler', make sure the function handler in the console is 'index.my_handler'. Alternatively, rename the handler in the code to 'handler' if your console function handler is index.handler.
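To make the naming convention concrete, the sketch below splits a handler string into the file and function it refers to. It only illustrates the `file.function` convention described in this article, not how Function Compute loads code internally, and `split_handler` is a hypothetical helper name.

```python
def split_handler(handler):
    """Split a 'module.function' handler string into (file name, function name)."""
    module_name, _, function_name = handler.rpartition(".")
    if not module_name or not function_name:
        raise ValueError("expected 'module.function', got %r" % handler)
    return module_name + ".py", function_name


print(split_handler("index.handler"))     # ('index.py', 'handler')
print(split_handler("index.my_handler"))  # ('index.py', 'my_handler')
```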

The function first reads the temporary access credentials from the context and parses the event. It takes the bucket name and object key from the upload event and generates a signed URL for the object. The Mediainfo executable uses this signed URL to access the OSS object securely. Mediainfo then downloads only the bytes it needs to read the technical metadata of the media object. This metadata is written to meta_data_output in XML format.
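The event parsing can be tried locally before deploying. The payload below is a trimmed, hypothetical example that contains only the two fields the handler reads; a real OSS event carries more fields, and the bucket name and object key here are made up.

```python
import json

# Trimmed, hypothetical event payload: only the fields read by the handler are shown.
SAMPLE_EVENT = json.dumps({
    "events": [
        {
            "oss": {
                "bucket": {"name": "my-media-bucket"},
                "object": {"key": "videos/sample.mp4"},
            }
        }
    ]
})


def parse_oss_event(event):
    """Return (bucket_name, object_key) for the first record of an OSS event."""
    evt = json.loads(event)
    record = evt["events"][0]["oss"]
    return record["bucket"]["name"], record["object"]["key"]


bucket_name, object_key = parse_oss_event(SAMPLE_EVENT)
print(bucket_name, object_key)
```

Like the handler in this article, the sketch reads only the first entry of the `events` list.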

This metadata is then inserted into a Table Store table after getting the target instance endpoint. This stored data in the table can be used for quick queries, retrieval and management of media object files stored in OSS as shown by getting the row details.
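Once the XML is stored, a later workflow step can read individual fields from it. The sketch below assumes a simplified, hand-written sample; real Mediainfo reports contain many more fields, and their exact layout depends on the Mediainfo version, so treat the element names and the `tracks_by_type` helper as illustrative assumptions.

```python
import xml.etree.ElementTree as ET

# Simplified, hand-written sample; not actual Mediainfo output.
SAMPLE_XML = """<MediaInfo>
  <media>
    <track type="General">
      <Format>MPEG-4</Format>
      <FileSize>1024</FileSize>
    </track>
    <track type="Video">
      <Format>AVC</Format>
      <Width>1280</Width>
      <Height>720</Height>
    </track>
  </media>
</MediaInfo>"""


def local_name(tag):
    """Strip an XML namespace such as '{uri}track' down to 'track'."""
    return tag.rsplit("}", 1)[-1]


def tracks_by_type(xml_text):
    """Map each track type to a dict of its child element names and text."""
    result = {}
    for element in ET.fromstring(xml_text).iter():
        if local_name(element.tag) == "track":
            fields = {local_name(child.tag): child.text for child in element}
            result[element.get("type")] = fields
    return result


tracks = tracks_by_type(SAMPLE_XML)
print(tracks["Video"]["Width"], tracks["Video"]["Height"])
```

In this sketch, a later track of the same type or a repeated field name overwrites the earlier one, so a file with several audio tracks would need a list per type instead.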

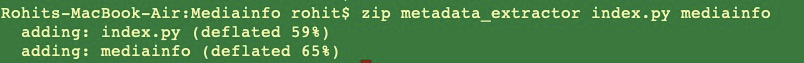

Save this code as index.py and then zip this file with Mediainfo executable file we created in step 2 of section 5. You can do that through a command-line tool on your system, something like this (on macOS):
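As a cross-platform alternative to a shell command, the packaging step can also be sketched with Python's standard `zipfile` module. The `build_package` helper and the `code.zip` file name used in the note below are hypothetical.

```python
import os
import zipfile


def build_package(zip_path, files):
    """Write the given files into a zip archive, all at the archive root."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in files:
            # arcname drops any directory prefix so that index.py and the
            # executable sit at the top level of the archive.
            archive.write(path, arcname=os.path.basename(path))
    return zip_path
```

Calling `build_package("code.zip", ["index.py", "mediainfo"])` from the function folder produces an archive with both files at its root. `ZipFile.write` records each file's permission bits in the archive entry, so make sure the Mediainfo file is executable before packaging it.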

Once done, go to the FC console and upload the zip file through the code management tool.

To test whether the deployment is able to get the technical metadata information of an uploaded object, upload a video file to the target OSS bucket. Here I have used the freely available video file 'Big buck bunny' which has rich metadata information.

Once the file is uploaded successfully, OSS triggers the FC function and the Mediainfo executable extracts the technical metadata.

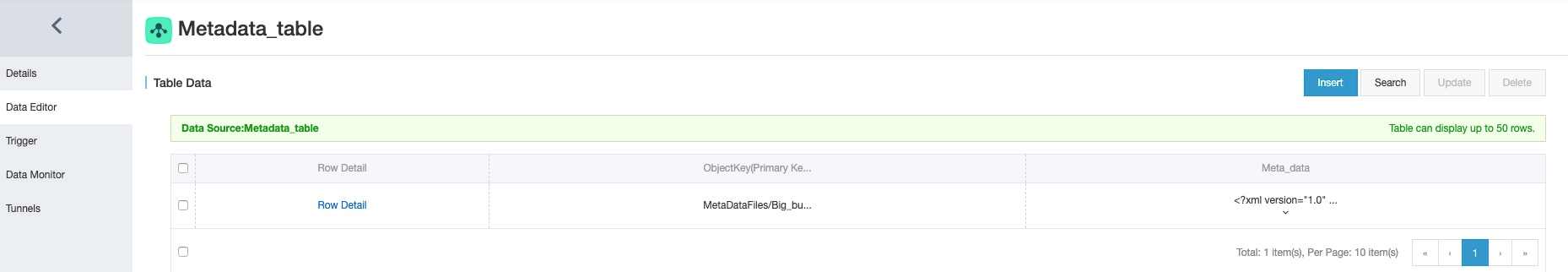

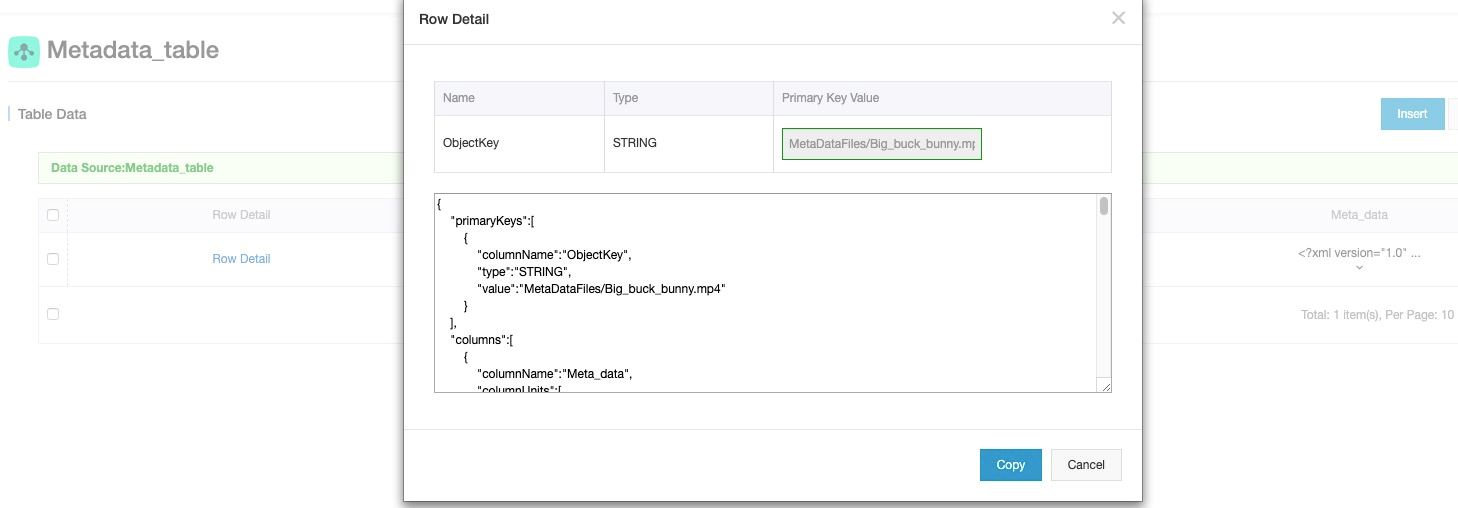

As Mediainfo downloads only a small part of the whole file and the function executes very quickly, you can see the result as soon as the file is uploaded. Here is a screenshot of the resulting entry in the Table Store table.

In this article, I introduced a simplified process for extracting the technical metadata of media files. I introduced Mediainfo and showed how it can be used with Alibaba Cloud Function Compute to automate metadata extraction, which can be quite time-consuming and resource-intensive when done in a traditional, manual fashion. We also stored the extracted metadata in Table Store for further use, such as query and retrieval. Once the data is in Table Store, other media workflow steps can use it to perform operations on the file, such as image resizing, watermarking, and video transcoding, based on the file's metadata.
