This is Technical Insights Series by Perry Ma | Product Lead, Real-time Compute for Apache Flink at Alibaba Cloud.

In distributed systems, data scalability is a crucial feature. Imagine a busy restaurant kitchen: if only one chef is responsible for all dishes, they'll be overwhelmed when orders increase. But if you can increase or decrease the number of chefs and workstations as needed, and each chef can take over others' work, the entire kitchen can operate efficiently.

In Flink, states are primarily divided into two types: partitioned state and non-partitioned state.

Partitioned State is state associated with specific keys. For example, when processing user shopping behavior, each user's shopping cart state is partitioned state, naturally distributed to different tasks based on user ID. Redistributing this type of state is relatively simple because the keys themselves provide a natural basis for redistribution.
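As a rough illustration of why keys make redistribution straightforward, the sketch below assigns each key to a task by hashing it. The class name `KeyedAssignmentSketch` and the plain `hashCode` modulo rule are assumptions made for illustration only; Flink's real assignment logic is more involved.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: assigns keys to tasks by hash. Not Flink's actual logic.
class KeyedAssignmentSketch {

    // Maps a key to a task index in [0, parallelism).
    static int taskFor(String key, int parallelism) {
        return Math.floorMod(key.hashCode(), parallelism);
    }

    // Groups keys by the task that would own them at the given parallelism.
    static Map<Integer, List<String>> assign(List<String> keys, int parallelism) {
        Map<Integer, List<String>> byTask = new TreeMap<>();
        for (int task = 0; task < parallelism; task++) {
            byTask.put(task, new ArrayList<>());
        }
        for (String key : keys) {
            byTask.get(taskFor(key, parallelism)).add(key);
        }
        return byTask;
    }

    public static void main(String[] args) {
        // Made-up user IDs standing in for shopping cart keys.
        List<String> users = Arrays.asList("alice", "bob", "carol", "dave");
        System.out.println("parallelism 2: " + assign(users, 2));
        System.out.println("parallelism 4: " + assign(users, 4));
    }
}
```

Because the owner of each key is recomputed from the key itself, changing the parallelism never leaves a key without a task.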

Non-Partitioned State is state attached to a task instance as a whole, independent of any key. For example, a Kafka source task keeps the read offsets of the Kafka partitions it is responsible for.

This type of state exists as a single unit within each task, so when the task count is increased or decreased, the system has to decide how to split or merge it.

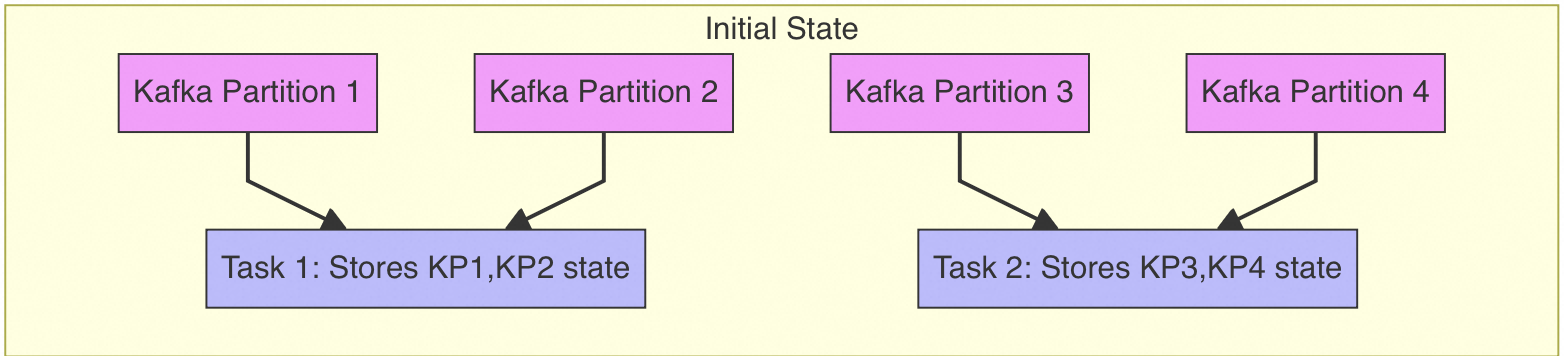

Let's first illustrate the current situation with a specific example. Suppose we're using a Kafka source that's responsible for reading from 4 Kafka partitions and using 2 Flink tasks to process:

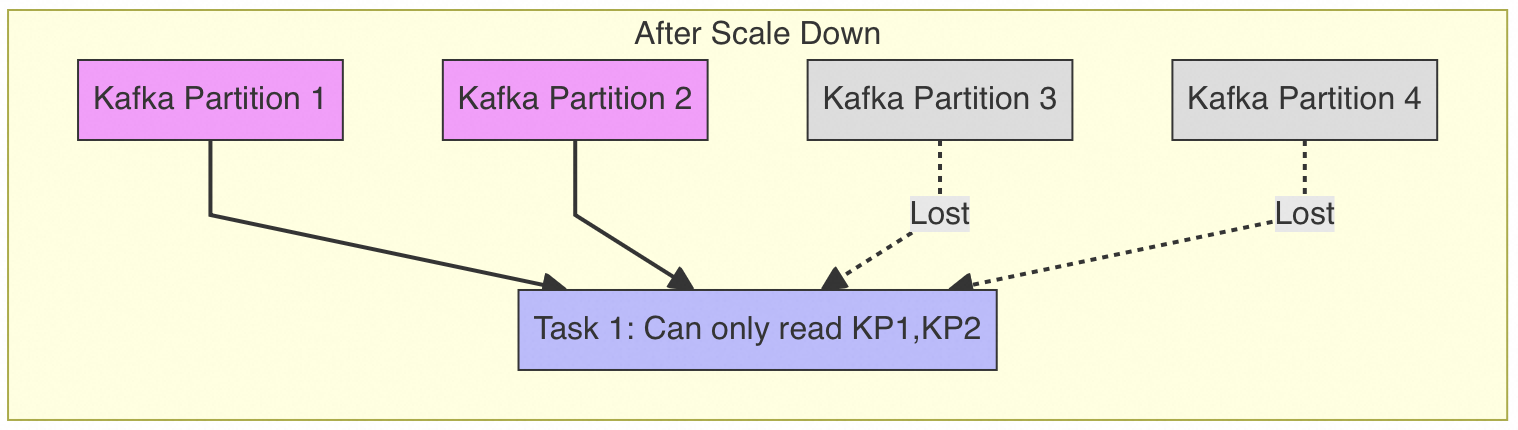

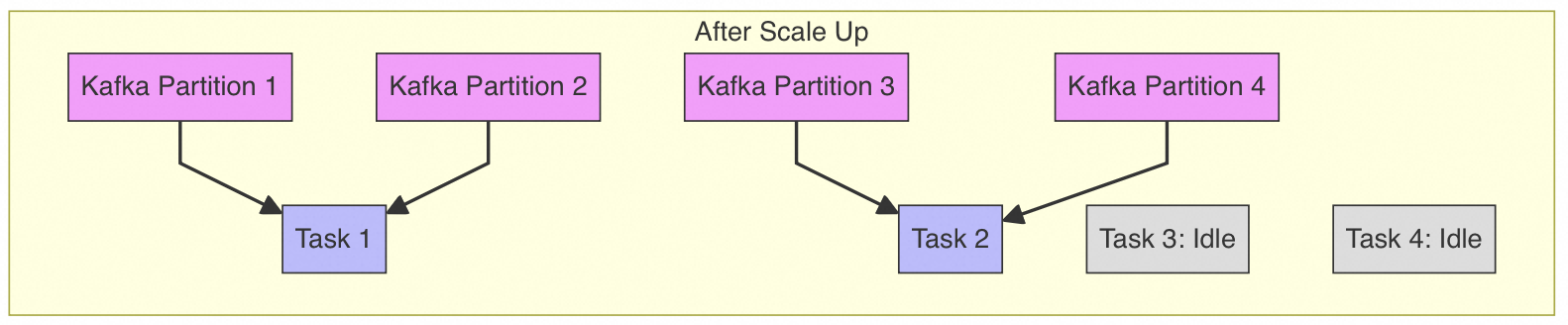

In previous versions, two major problems occurred when changing task count:

When reducing tasks from 2 to 1:

State belonging to Task 2 (offsets for KP3, KP4, etc.) would be lost, preventing continued processing of these partitions.

When increasing tasks from 2 to 4:

New Tasks 3 and 4 would remain idle because the system didn't know how to redistribute existing state to these new tasks.
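The two problems above can be reproduced with a toy model in which state is restored purely by task index. This is only a sketch of the behavior described here, not code from any Flink version; the class name `LegacyRestoreSketch` and the partition labels are illustrative assumptions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical toy model of index-based restore, as described in the text.
class LegacyRestoreSketch {

    // New task i receives only what old task i stored; nothing is split or merged.
    static List<List<String>> restore(List<List<String>> oldStates, int newParallelism) {
        List<List<String>> restored = new ArrayList<>();
        for (int i = 0; i < newParallelism; i++) {
            restored.add(i < oldStates.size()
                    ? oldStates.get(i)
                    : Collections.<String>emptyList());
        }
        return restored;
    }

    public static void main(String[] args) {
        // Task 1 owns KP1 and KP2, Task 2 owns KP3 and KP4.
        List<List<String>> old = Arrays.asList(
                Arrays.asList("KP1", "KP2"),
                Arrays.asList("KP3", "KP4"));

        // Scale down 2 -> 1: the state of Task 2 is dropped.
        System.out.println(restore(old, 1)); // prints [[KP1, KP2]]

        // Scale up 2 -> 4: the two new tasks start with nothing to do.
        System.out.println(restore(old, 4)); // prints [[KP1, KP2], [KP3, KP4], [], []]
    }
}
```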

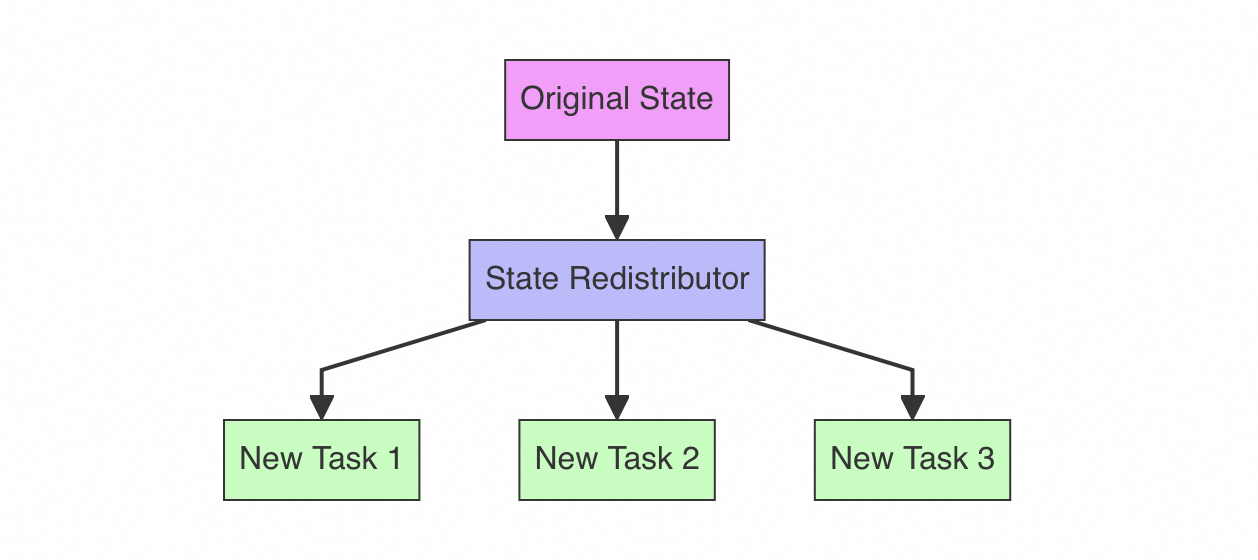

FLIP-8 introduces a new mechanism allowing non-partitioned state to flexibly migrate between tasks. This improvement includes three core components:

FLIP-8 introduced a new state management interface, OperatorStateStore, that stores state as lists. It's like distributing kitchen tasks among multiple chefs, each responsible for preparing certain ingredients and dishes.

```java
public interface OperatorStateStore {

    // Can register multiple state lists, each redistributable between tasks
    <T> ListState<T> getListState(String stateName,
                                  TypeSerializer<T> serializer)
            throws Exception;
}
```

FLIP-8 also designed flexible state redistribution strategy interfaces.

This mechanism allows users to customize how state is distributed between tasks. The system currently provides a round-robin distribution strategy by default, like evenly distributing a pot of food among multiple chefs' workstations.
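A round-robin strategy of this kind can be sketched in a few lines. The class and method names below are hypothetical and the code does not use Flink's own interfaces; it only shows the idea of collecting every list element from the old tasks and dealing them out one by one to the new tasks.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of round-robin redistribution of list state.
class RoundRobinRedistributionSketch {

    // Deals all elements of the old per-task lists to newParallelism tasks in turn.
    static <T> List<List<T>> redistribute(List<List<T>> oldStates, int newParallelism) {
        if (newParallelism < 1) {
            throw new IllegalArgumentException("newParallelism must be at least 1");
        }
        List<List<T>> result = new ArrayList<>();
        for (int i = 0; i < newParallelism; i++) {
            result.add(new ArrayList<T>());
        }
        int next = 0;
        for (List<T> taskState : oldStates) {
            for (T element : taskState) {
                result.get(next % newParallelism).add(element);
                next++;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<List<String>> old = Arrays.asList(
                Arrays.asList("KP1", "KP2"),
                Arrays.asList("KP3", "KP4"));
        System.out.println(redistribute(old, 1)); // prints [[KP1, KP2, KP3, KP4]]
        System.out.println(redistribute(old, 4)); // prints [[KP1], [KP2], [KP3], [KP4]]
    }
}
```

Storing state as a list of independent elements is what makes this possible: each element can move to another task without the system needing to understand its contents.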

To make this feature easier for developers to use, two levels of interfaces are provided:

// Simplified interface example

public interface ListCheckpointed<T extends Serializable> {

// Put data in list when saving state

List<T> snapshotState(long checkpointId) throws Exception;

// Read data from list when restoring state

void restoreState(List<T> state) throws Exception;

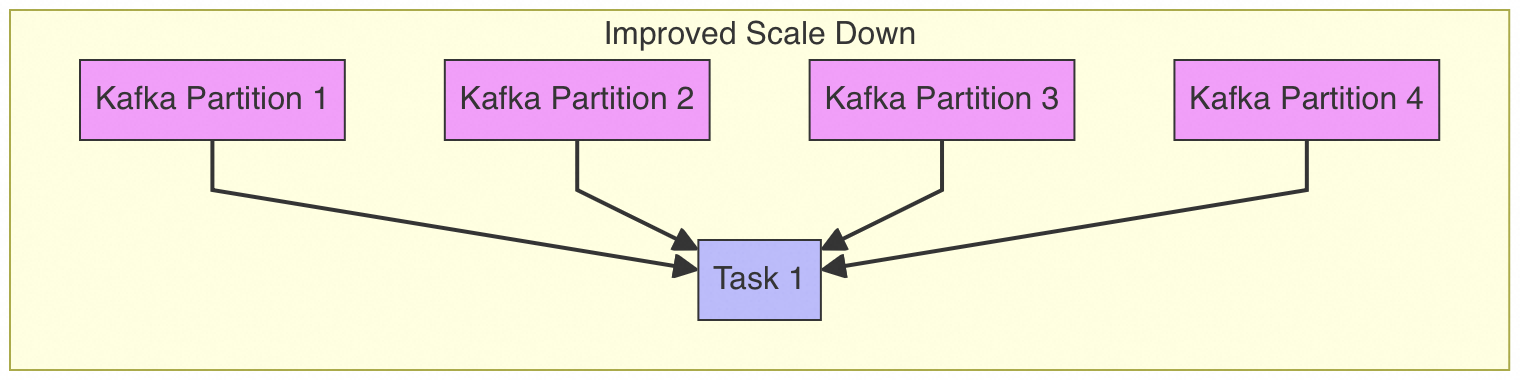

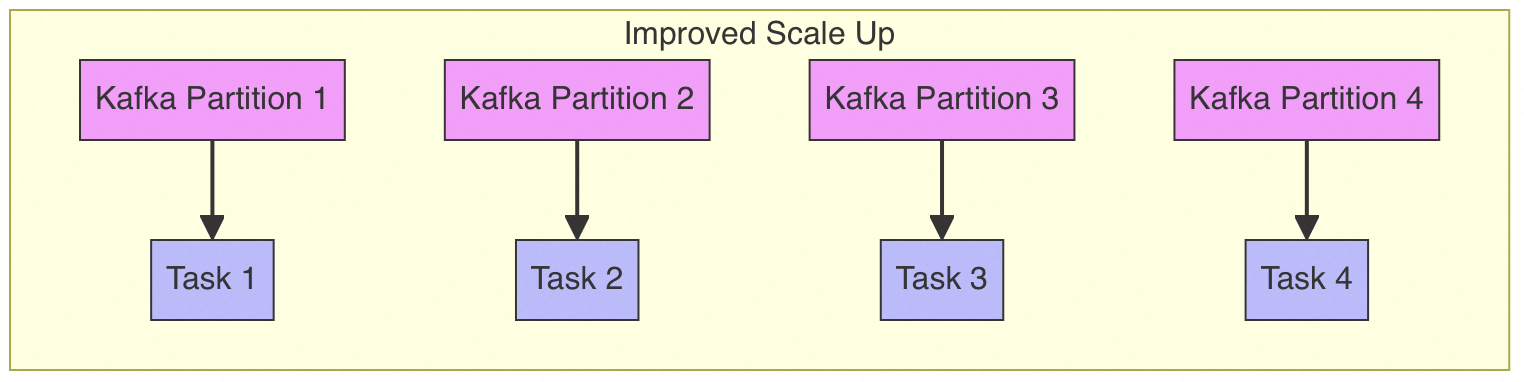

}Let's look at the improved behavior of the Kafka source:

All states merge into remaining tasks, ensuring no data loss.

States are evenly distributed among all tasks, fully utilizing new computing resources.
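To make the scale-down case concrete, here is a minimal sketch of a source-like task that implements the simplified `ListCheckpointed` interface from this article. The interface is redeclared locally so the example compiles on its own; `PartitionOffset`, `OffsetTracker`, and the offset values are hypothetical and are not taken from the Kafka connector.

```java
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ListCheckpointedSketch {

    // Local copy of the simplified interface shown in the article (not Flink's class).
    interface ListCheckpointed<T extends Serializable> {
        List<T> snapshotState(long checkpointId) throws Exception;
        void restoreState(List<T> state) throws Exception;
    }

    // Hypothetical state element: one partition and its current offset.
    static final class PartitionOffset implements Serializable {
        private static final long serialVersionUID = 1L;
        final String partition;
        final long offset;

        PartitionOffset(String partition, long offset) {
            this.partition = partition;
            this.offset = offset;
        }
    }

    // Hypothetical task that tracks offsets for the partitions it reads.
    static final class OffsetTracker implements ListCheckpointed<PartitionOffset> {
        final Map<String, Long> offsets = new TreeMap<>();

        void advance(String partition, long offset) {
            offsets.put(partition, offset);
        }

        @Override
        public List<PartitionOffset> snapshotState(long checkpointId) {
            // One list element per partition, so elements can be moved independently.
            List<PartitionOffset> out = new ArrayList<>();
            for (Map.Entry<String, Long> e : offsets.entrySet()) {
                out.add(new PartitionOffset(e.getKey(), e.getValue()));
            }
            return out;
        }

        @Override
        public void restoreState(List<PartitionOffset> state) {
            offsets.clear();
            for (PartitionOffset po : state) {
                offsets.put(po.partition, po.offset);
            }
        }
    }

    // Scale down 2 -> 1: the single remaining task receives both snapshot lists.
    static OffsetTracker scaleDownToOne(OffsetTracker a, OffsetTracker b) {
        List<PartitionOffset> merged = new ArrayList<>(a.snapshotState(1L));
        merged.addAll(b.snapshotState(1L));
        OffsetTracker single = new OffsetTracker();
        single.restoreState(merged);
        return single;
    }

    public static void main(String[] args) {
        OffsetTracker task1 = new OffsetTracker();
        task1.advance("KP1", 10L);
        task1.advance("KP2", 20L);
        OffsetTracker task2 = new OffsetTracker();
        task2.advance("KP3", 30L);
        task2.advance("KP4", 40L);

        System.out.println(scaleDownToOne(task1, task2).offsets);
        // prints {KP1=10, KP2=20, KP3=30, KP4=40}
    }
}
```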

This improvement was implemented in Flink 1.2.0. In practice, it not only solved the scaling issues for Kafka connectors but also provided a good solution for other scenarios requiring non-partitioned state management.

To ensure this feature's reliability and performance, the implementation includes these key designs:

Each state has its own serializer, ensuring that even if state data format changes, old checkpoints and savepoints can still be correctly restored. It's like giving each chef standardized recipes, ensuring dish taste and quality remain consistent even when chefs change.

During state redistribution, the system ensures:

The system uses a lazy deserialization strategy, deserializing state only when it is actually needed, which reduces unnecessary performance overhead.
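The general idea of lazy deserialization can be sketched as a holder that keeps the serialized bytes and decodes them on first access. This is an illustrative assumption about the technique, not Flink's implementation; `LazyStateSketch` and its methods are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.function.Function;

// Hypothetical holder that defers deserialization until the value is requested.
class LazyStateSketch<T> {
    private final byte[] serialized;
    private final Function<byte[], T> deserializer;
    private T value;
    private boolean loaded;
    private int deserializeCalls;

    LazyStateSketch(byte[] serialized, Function<byte[], T> deserializer) {
        this.serialized = serialized;
        this.deserializer = deserializer;
    }

    // Deserializes on the first call only; later calls reuse the cached value.
    T get() {
        if (!loaded) {
            value = deserializer.apply(serialized);
            loaded = true;
            deserializeCalls++;
        }
        return value;
    }

    // Exposed so the example can show how often deserialization actually ran.
    int deserializeCalls() {
        return deserializeCalls;
    }

    public static void main(String[] args) {
        byte[] bytes = "KP1=10".getBytes(StandardCharsets.UTF_8);
        LazyStateSketch<String> state =
                new LazyStateSketch<>(bytes, b -> new String(b, StandardCharsets.UTF_8));

        System.out.println(state.deserializeCalls()); // prints 0
        System.out.println(state.get());              // prints KP1=10
        state.get();
        System.out.println(state.deserializeCalls()); // prints 1
    }
}
```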

FLIP-8's implementation makes Flink more flexible and powerful in handling non-partitioned state. It's like equipping a restaurant with an intelligent task scheduling system that can dynamically adjust chef workload distribution based on dining peaks, making the entire kitchen operate more efficiently and reliably. This improvement not only enables better Kafka connector operation but also provides a general solution for other scenarios requiring dynamic scaling.
