In 2025, Java celebrates its 30th anniversary. Over the past three decades, Java has solidified its position as the bedrock of enterprise applications. In today's AI era, Java increasingly carries heavy AI workloads, such as retrieval-augmented generation (RAG) engines based on Elasticsearch and large-scale data analysis and feature engineering based on Spark.

However, traditional Java virtual machines (JVMs) often struggle with massive, compute-intensive AI workloads. Vectorization support at the Java level remains limited, which makes complex parallel computation hard to implement efficiently. Switching to native code for vectorization introduces Java Native Interface (JNI) overhead as well as additional development complexity. Meanwhile, JVMs expose numerous parameters that allow extensive customization of core modules such as garbage collection (GC), Just-In-Time (JIT) compilation, and the runtime, but the sheer number of combinations and the high barrier to entry make performance tuning time-consuming and laborious. These dilemmas highlight a critical tension facing traditional JVMs in the AI era: the difficulty of achieving both development efficiency and system performance. Breaking through performance bottlenecks while preserving Java's high productivity has become a crucial subject in JVM evolution.

To address these challenges, JVMs themselves must evolve. With the rise of next-generation AI technologies such as large language models (LLMs), artificial intelligence for IT operations (AIOps) is becoming the future of enterprise IT operations. However, traditional JVMs remain a "black box" to AI and O&M systems. Their internal status is hard to observe, and their complex parameters make automated tuning nearly impossible. Enterprises urgently need a smart JVM that is more AI-aware and adaptable to AIOps. It must offer stronger native computing acceleration capabilities, deeper runtime insights, and more advanced self-adaptive optimization. This way, the JVM can integrate deeply with AIOps platforms to realize capabilities previously unimaginable, such as intelligent capacity assessment, root cause analysis of anomalies, and self-healing of performance bottlenecks, ultimately helping enterprises unify development efficiency with system performance in the AI era.

Recognizing this need, Alibaba Cloud introduces Alibaba Dragonwell 21 AI Extension, built on Dragonwell 21 Extended Edition. As a production-grade downstream distribution of OpenJDK, Dragonwell 21 incorporates a broad set of optimizations that put its overall performance ahead of OpenJDK 21. Building on this foundation, the AI Extension is optimized specifically for AI applications, with the goal of resolving the challenges described above and helping enterprises maximize the return on investment (ROI) of their Java applications in AI scenarios.

On traditional JVMs, frequently executed Java methods are compiled by the JIT compiler into highly optimized machine code to boost performance. However, the JIT compiler often lacks support for the latest CPU features, such as AVX-512 and AMX. Consequently, it fails to generate sufficiently efficient code for many compute-intensive AI workloads. An even more significant limitation is that the JIT compiler cannot use GPU resources, which is a major drawback in the AI era.

Alibaba Dragonwell 21 AI Extension introduces a native acceleration feature, which replaces hotspot methods found in common AI workloads with precompiled, heavily optimized native code implementations. This is like upgrading the "engine" of a Java application with "Formula 1 core components", enabling a JVM to unleash the full potential of CPU and GPU resources along critical execution paths.

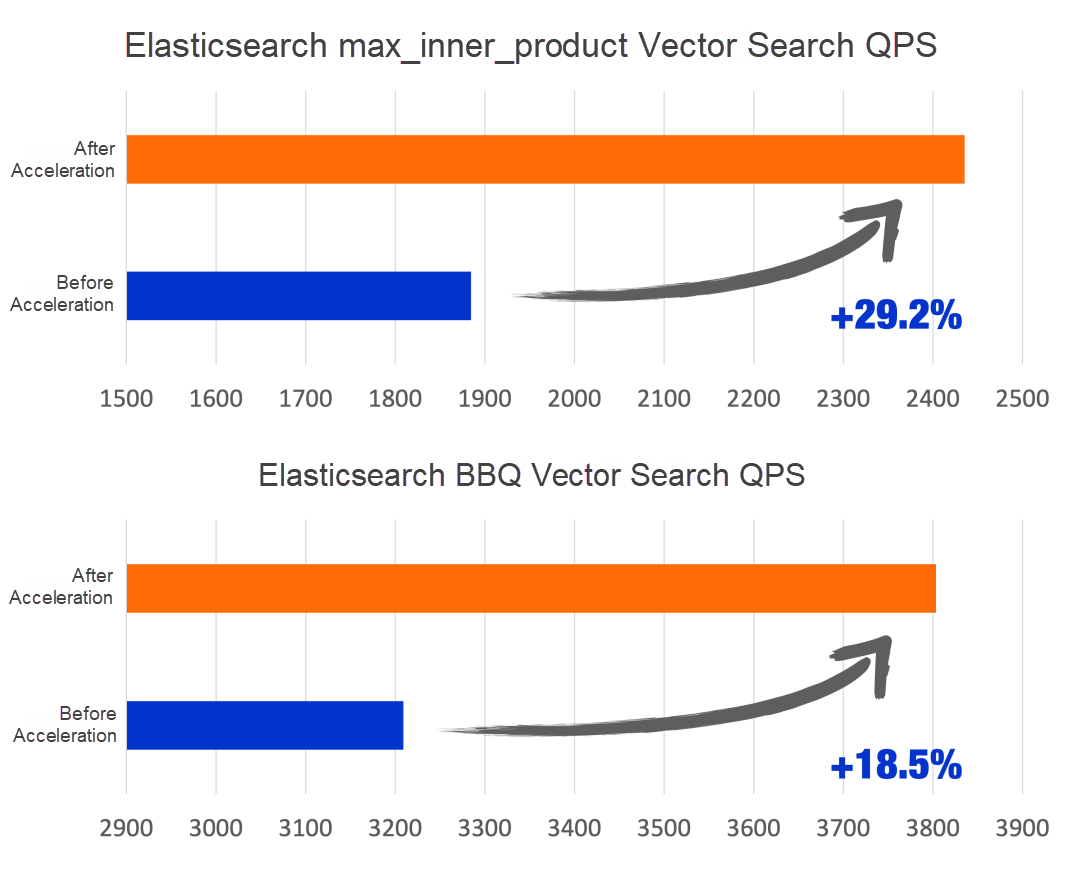

Amid the current wave of LLMs, RAG has emerged as a core technology for enterprises that are building intelligent Q&A systems and knowledge-base applications. These applications typically rely on vector databases such as Elasticsearch for efficient search, and their performance directly affects user experience. The native acceleration feature specifically targets the compute-intensive core of vector search, significantly reducing latency and improving RAG responsiveness. Benchmarks on the GIST dataset show that the end-to-end performance of Elasticsearch max_inner_product and BBQ vector search improved by 29.2% and 18.5%, respectively, as shown in the following figure.
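To make "the compute-intensive core of vector search" concrete, the following sketch shows the scalar loop behind inner-product scoring. It is illustrative only: the class and method names are hypothetical, and the score scaling assumes Elasticsearch's documented max_inner_product formula (dot + 1 for a non-negative dot product, 1 / (1 - dot) otherwise). It says nothing about how the AI Extension's native code is implemented, and BBQ, which scores quantized vectors, is not modeled.

```java
/**
 * Hypothetical sketch of max_inner_product scoring.
 * Assumption: the scaling mirrors Elasticsearch's documented formula.
 */
final class MaxInnerProductSketch {

    // Per-dimension multiply-add: the hot loop that SIMD or native code can speed up.
    static float dotProduct(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        float sum = 0f;
        for (int i = 0; i < a.length; i++) {
            sum += a[i] * b[i];
        }
        return sum;
    }

    // Map an unbounded inner product onto a positive score.
    static float maxInnerProductScore(float[] query, float[] doc) {
        float dot = dotProduct(query, doc);
        return dot < 0 ? 1f / (1f - dot) : dot + 1f;
    }

    public static void main(String[] args) {
        float[] query = {0.5f, -1.0f, 2.0f};
        float[] doc = {1.0f, 0.5f, 0.25f};
        // dot = 0.5 - 0.5 + 0.5 = 0.5, so the score is 1.5
        System.out.println(maxInnerProductScore(query, doc));
    }
}
```

Every document candidate passes through a loop like `dotProduct`, once per dimension, which is why this path dominates search latency for high-dimensional datasets such as GIST.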

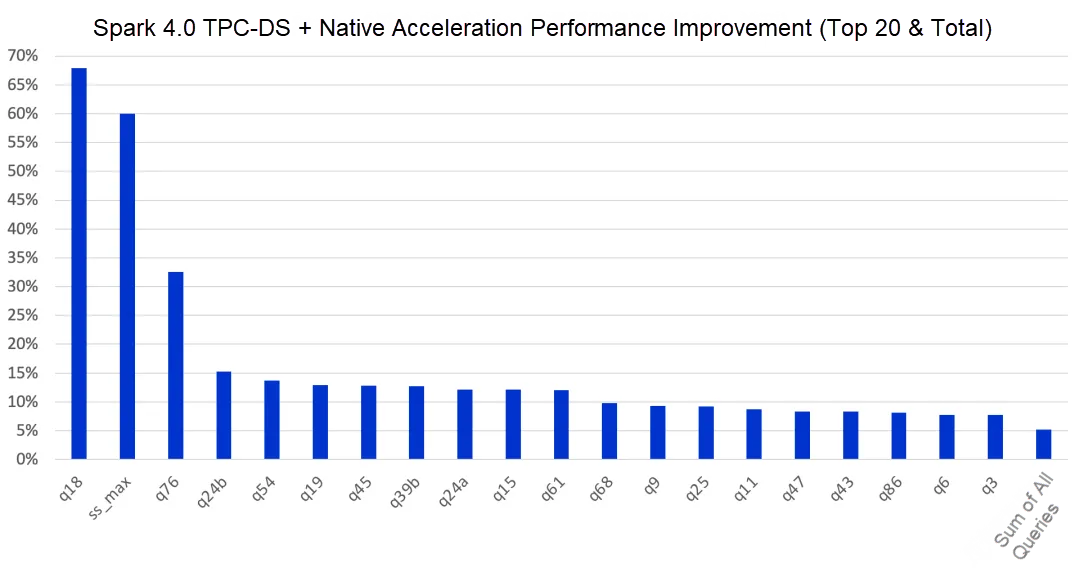

Similarly, in the realm of large-scale data processing, enterprises rely on distributed computing frameworks such as Spark for complex data cleansing, feature engineering, and model training. These tasks involve intensive computation and data exchange, so even modest performance gains translate into significant cost savings and efficiency improvements. The native acceleration feature provides native-code optimizations for core parts of Spark's implementation, speeding up the entire data processing pipeline. With acceleration enabled, Spark 4.0 shows an overall 5.2% performance improvement on the TPC-DS benchmark, with some key queries gaining more than 60%, as shown in the following figure.

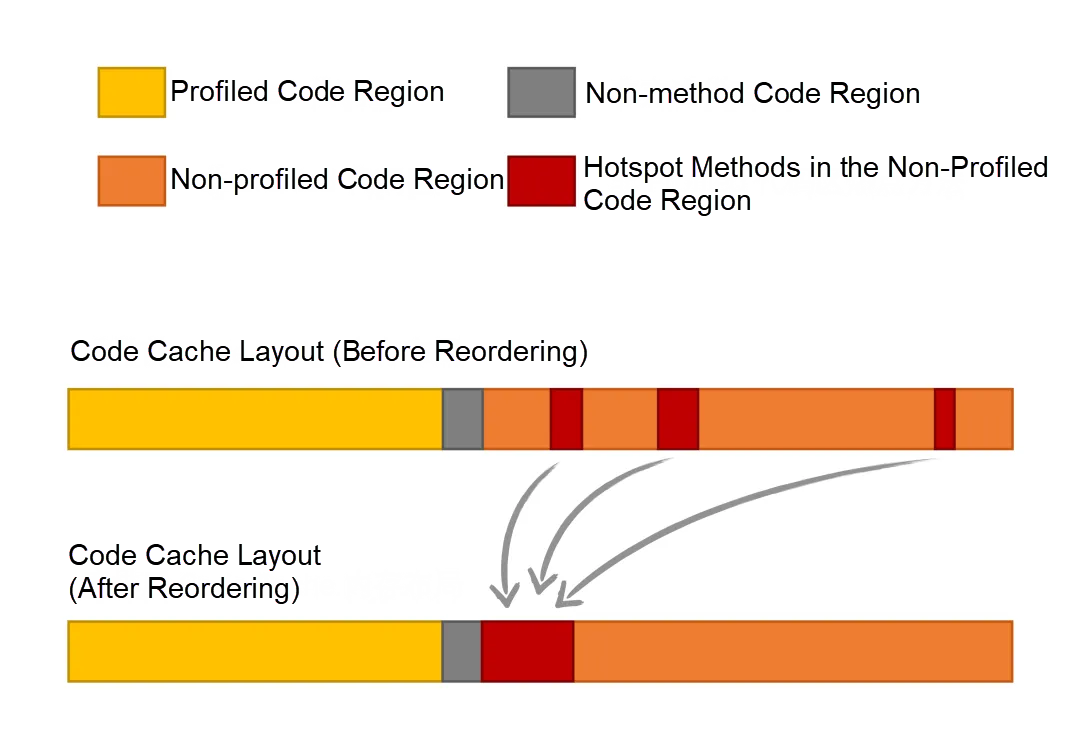

At runtime, JVMs store the JIT-compiled code of Java methods in the code cache. However, traditional JVMs simply append compiled code sequentially as methods are compiled, which can scatter hotspot methods across the cache. That scattering forces the CPU to jump between distant cache regions during execution, degrading instruction cache (I-Cache) locality and hurting performance.

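To address this issue, Alibaba Dragonwell 21 AI Extension introduces hot code reordering, which operates in two phases:

• Training Run: the application runs in a representative environment, such as a canary release environment, while the JVM collects hotspot data.

• Production Run: the JVM uses the collected hotspot data to reorganize the code cache layout so that hot code is placed together.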

Under large workloads, traditional JVMs waste substantial CPU time waiting on poorly localized code and data. Hot code reordering greatly reduces this idle time, so CPU cycles are spent on useful work. After hot code reordering is enabled, a JVM automatically reorganizes the code cache layout to improve locality. This is especially effective when code cache usage is high and an application has complex logic with a concentrated hotspot set.
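The effect of reordering can be illustrated with a toy model. The sketch below is not Dragonwell's implementation: it assumes methods are placed back to back, treats a method as hot when its Training Run invocation count reaches a hypothetical threshold, and measures locality as the number of bytes spanned by the hot set. All method names and sizes are made up.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Toy model of hot code reordering; names, sizes, and the threshold are hypothetical. */
final class HotCodeLayoutSketch {

    record CompiledMethod(String name, int sizeBytes, long invocations) {}

    // Bytes from the start of the first hot method to the end of the last hot
    // method when methods are placed contiguously in the given order.
    static long hotSpanBytes(List<CompiledMethod> layout, long hotThreshold) {
        long offset = 0;
        long first = -1;
        long last = -1;
        for (CompiledMethod m : layout) {
            if (m.invocations() >= hotThreshold) {
                if (first < 0) {
                    first = offset;
                }
                last = offset + m.sizeBytes();
            }
            offset += m.sizeBytes();
        }
        return first < 0 ? 0 : last - first;
    }

    // Production Run idea: hot methods first (hottest first), cold methods after.
    static List<CompiledMethod> reorder(List<CompiledMethod> layout, long hotThreshold) {
        List<CompiledMethod> hot = new ArrayList<>();
        List<CompiledMethod> cold = new ArrayList<>();
        for (CompiledMethod m : layout) {
            (m.invocations() >= hotThreshold ? hot : cold).add(m);
        }
        hot.sort(Comparator.comparingLong(CompiledMethod::invocations).reversed());
        hot.addAll(cold);
        return hot;
    }

    public static void main(String[] args) {
        // Emission order: hot methods interleaved with cold startup code.
        List<CompiledMethod> emitted = List.of(
                new CompiledMethod("parseRequest", 400, 90_000),
                new CompiledMethod("initConfig", 2_000, 1),
                new CompiledMethod("lookupPrice", 300, 80_000),
                new CompiledMethod("logStartup", 1_500, 2),
                new CompiledMethod("renderResponse", 500, 70_000));
        long threshold = 10_000;
        System.out.println("sequential hot span: " + hotSpanBytes(emitted, threshold));
        System.out.println("reordered hot span:  " + hotSpanBytes(reorder(emitted, threshold), threshold));
    }
}
```

With the sample data, the hot set spans 4,700 bytes in emission order and 1,200 bytes after reordering, so the CPU touches far fewer cache lines while running the hot path.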

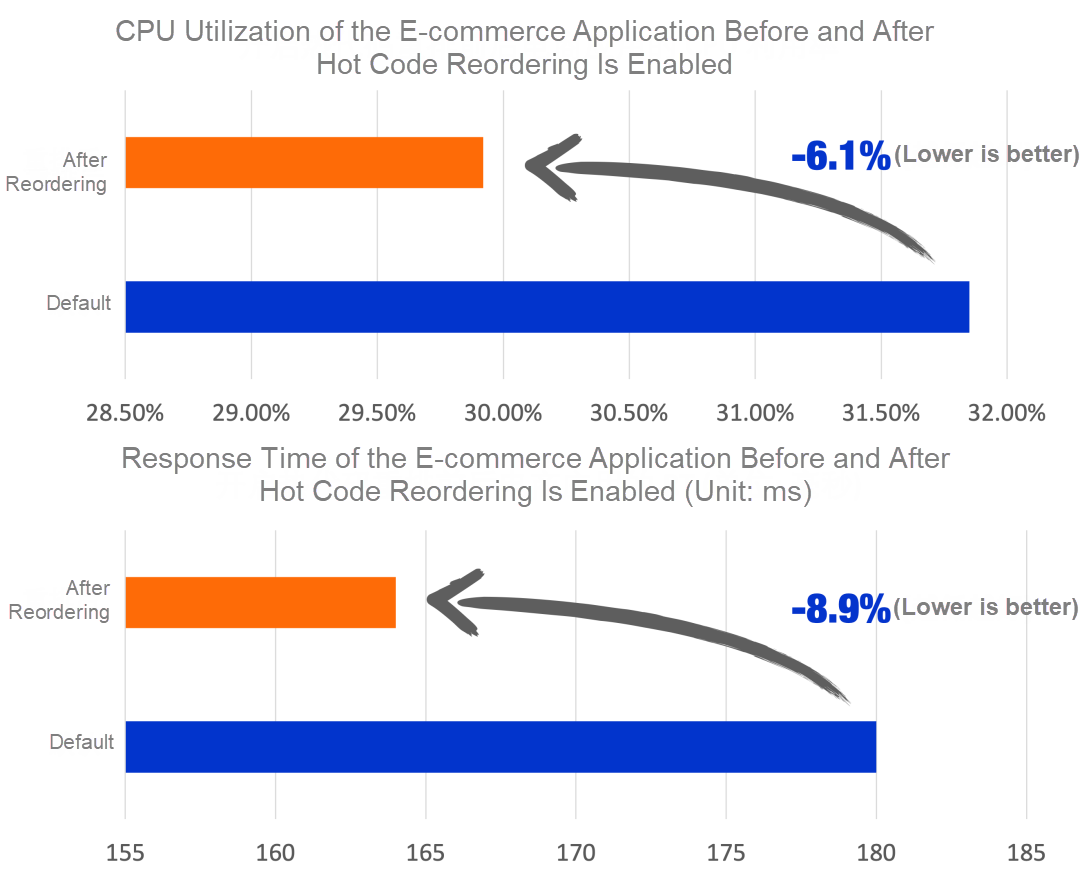

In a test on a large Alibaba e-commerce application, the team performed a Training Run in a canary release environment to collect hotspot data, and then enabled the Production Run optimizations in production. The result is that CPU utilization dropped by 6.1% and response time improved by 8.9%, delivering both lower service costs and better user experience, as shown in the following figure.

JVM parameter tuning has always been a daunting challenge, particularly when it comes to GC parameters. For a single Java application, deriving a robust configuration typically requires multiple runs, deep expertise, and considerable manual effort. For many small and medium-sized enterprises, this is effectively unmanageable.

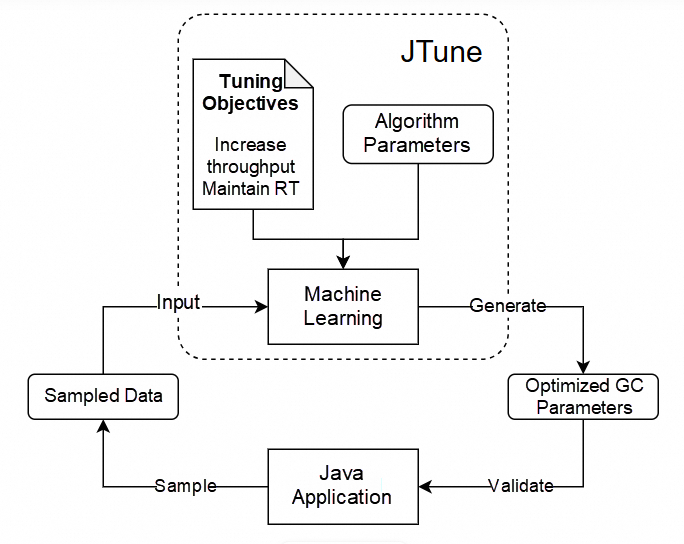

Alibaba Dragonwell 21 AI Extension offers a powerful tool: JTune. Unlike traditional rule-based tuners, JTune embeds machine learning models that automatically explore a JVM's vast configuration space. By using a sample-adjust-validate feedback loop, JTune finds the best parameter combination for your specific application and hardware environment.
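The article does not describe JTune's models or search strategy. As a rough illustration of what a sample-adjust-validate loop means, the sketch below runs a plain greedy neighbor search over the two G1 young-generation percentages that JTune tunes. The objective function is made up and stands in for a real throughput measurement; all names are hypothetical.

```java
import java.util.function.ToDoubleBiFunction;

/**
 * Hypothetical sample-adjust-validate loop. This is a greedy neighbor search,
 * NOT JTune's algorithm; "measure" stands in for a real benchmark run.
 */
final class TuningLoopSketch {

    /** Returns {newSizePercent, maxNewSizePercent} once no neighbor improves. */
    static int[] tune(ToDoubleBiFunction<Integer, Integer> measure,
                      int newSize, int maxNewSize, int maxRounds) {
        double current = measure.applyAsDouble(newSize, maxNewSize);
        int[][] steps = {{1, 0}, {-1, 0}, {0, 1}, {0, -1}};
        for (int round = 0; round < maxRounds; round++) {
            int bestN = newSize;
            int bestM = maxNewSize;
            double bestScore = current;
            // Sample: try each neighboring configuration.
            for (int[] s : steps) {
                int n = newSize + s[0];
                int m = maxNewSize + s[1];
                // Skip invalid pairs: require 0 <= n <= m <= 100.
                if (n < 0 || m > 100 || n > m) {
                    continue;
                }
                // Validate: measure the candidate.
                double score = measure.applyAsDouble(n, m);
                if (score > bestScore) {
                    bestN = n;
                    bestM = m;
                    bestScore = score;
                }
            }
            if (bestScore <= current) {
                break; // converged: no neighbor is better
            }
            // Adjust: move to the best neighbor.
            newSize = bestN;
            maxNewSize = bestM;
            current = bestScore;
        }
        return new int[] {newSize, maxNewSize};
    }

    public static void main(String[] args) {
        // Made-up objective peaking at (20, 50); real tuning measures throughput.
        ToDoubleBiFunction<Integer, Integer> synthetic =
                (n, m) -> -Math.pow(n - 20, 2) - Math.pow(m - 50, 2);
        // Start from HotSpot's G1 defaults (5 and 60).
        int[] result = tune(synthetic, 5, 60, 1_000);
        System.out.println("G1NewSizePercent=" + result[0] + ", G1MaxNewSizePercent=" + result[1]);
    }
}
```

On this synthetic objective the loop prints G1NewSizePercent=20, G1MaxNewSizePercent=50. Real measurements are noisy and each validation run is expensive, which is why a tuner benefits from a model that predicts promising candidates instead of probing neighbors blindly.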

JTune turns JVM tuning from "black magic" into a practical technique. Instead of weeks of manual tuning by JVM experts, you run a few commands and JTune performs the optimization automatically. This saves time and often uncovers configurations that outperform those chosen by human experts.

Currently, JTune intelligently tunes two G1 GC parameters, G1NewSizePercent and G1MaxNewSizePercent, optimizing the young-generation ratio to increase throughput while keeping response time stable. JTune also recommends the Java heap size (-Xmx) based on observed GC behavior, resolving a long-standing operational challenge. With JTune, your service can run faster without wasting memory, maximizing hardware utilization.

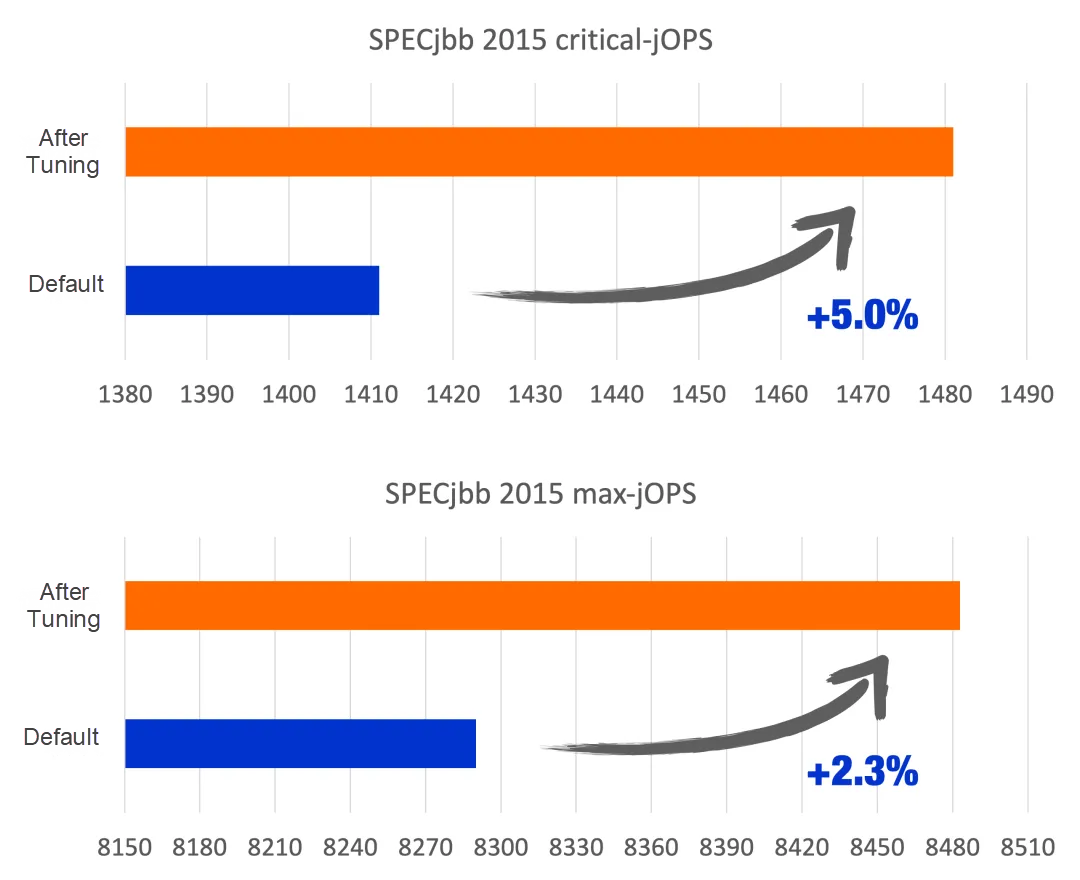

In the industry-standard SPECjbb2015 benchmark, JTune delivered clear gains. As shown in the preceding figure, after AI-powered tuning, JTune recommended G1NewSizePercent=21 and G1MaxNewSizePercent=51 as the optimal pair. As a result, critical throughput under response-time constraints (critical-jOPS) increased by 5.0% and maximum throughput (max-jOPS) rose by 2.3%. This means that your application can process more business requests with lower latency, directly translating into higher system capacity and better user experience.
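Applying a recommendation such as the SPECjbb2015 pair above means passing it to the JVM. In HotSpot, G1NewSizePercent and G1MaxNewSizePercent are experimental flags, so they require -XX:+UnlockExperimentalVMOptions. The helper below is a hypothetical sketch (JTune's output format is not described) that validates a pair and renders the flags together with an -Xmx value; the 4096 MiB heap is an arbitrary placeholder, not a JTune recommendation.

```java
import java.util.List;

/** Hypothetical helper (not part of JTune) that renders G1 recommendations as JVM flags. */
final class G1FlagsSketch {

    static List<String> g1Flags(int newSizePercent, int maxNewSizePercent, long heapMiB) {
        // Both values are percentages of the heap; the minimum must not exceed the maximum.
        if (newSizePercent < 0 || maxNewSizePercent > 100 || newSizePercent > maxNewSizePercent) {
            throw new IllegalArgumentException(
                    "expected 0 <= G1NewSizePercent <= G1MaxNewSizePercent <= 100");
        }
        if (heapMiB <= 0) {
            throw new IllegalArgumentException("heap size must be positive");
        }
        return List.of(
                "-XX:+UseG1GC",
                // Must precede the experimental G1 young-generation flags.
                "-XX:+UnlockExperimentalVMOptions",
                "-XX:G1NewSizePercent=" + newSizePercent,
                "-XX:G1MaxNewSizePercent=" + maxNewSizePercent,
                "-Xmx" + heapMiB + "m");
    }

    public static void main(String[] args) {
        // 21/51 is the SPECjbb2015 recommendation quoted above; 4096 MiB is a placeholder.
        System.out.println(String.join(" ", g1Flags(21, 51, 4096)));
    }
}
```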

Alibaba Dragonwell 21 AI Extension is well suited for the following scenarios:

• AI vector search and RAG workloads: For teams that are building enterprise-grade intelligent knowledge bases, document assistants, or Q&A systems by using RAG, the AI Extension combines multiple optimizations to substantially improve the performance of underlying components such as Elasticsearch. It reduces search latency, increases stability under high concurrency, and helps ensure smooth user experience.

• Big data analysis and feature engineering: For enterprises that are processing massive datasets or performing complex feature engineering and data analysis, the AI Extension systematically addresses performance bottlenecks in frameworks such as Spark. It shortens data processing cycles and lowers hardware consumption without requiring changes to application code.

• Large, complex systems (such as smart driving): For systems with large codebases and dispersed runtime hotspots, such as smart driving stacks and high-definition mapping, the AI Extension improves code cache efficiency, reduces CPU overhead and response time, and enhances overall system stability.

• Extreme O&M efficiency: For enterprises that aim to reduce JVM tuning costs and raise O&M automation levels, the AI Extension integrates with AIOps ecosystems to transform a JVM from a passive "black box" into an introspective runtime that interacts intelligently with external systems. This lays a solid foundation for deeper automated performance tuning and capacity management.

Alibaba Dragonwell 21 AI Extension is distributed as an RPM package. On Alibaba Cloud Linux, you can easily install it by using the yum install command.

After installation, simply add the AI enhancement parameters to your Java startup commands to enable the corresponding AI features. For example, to accelerate Elasticsearch 8.17 by using AI enhancements, use the following parameters:

```shell
java -XX:+UnlockExperimentalVMOptions -XX:+UseAIExtension \
     -XX:AIExtensionUnit=es_8.17 ...
```

The -XX:AIExtensionUnit= parameter can be specified multiple times, allowing you to enable several AI enhancements at a time. For example, you can also enable Spark 4.0 acceleration:

```shell
java -XX:+UnlockExperimentalVMOptions -XX:+UseAIExtension \
     -XX:AIExtensionUnit=es_8.17 -XX:AIExtensionUnit=spark_4.0 ...
```

Currently, Alibaba Dragonwell 21 AI Extension supports the following enhancements:

• es_8.17: accelerates Elasticsearch 8.17.x with AI enhancements.

• spark_4.0: accelerates Spark 4.0 with AI enhancements.

• hotcode_1.0: enables hot code reordering to optimize code cache performance.
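When the JVM is launched from a Java-based launcher or test harness, the repeated -XX:AIExtensionUnit= option can be assembled from a list. The sketch below is hypothetical: it only builds the argument list shown in the commands above, accepts the three unit names listed, and does not check whether a unit matches the installed Elasticsearch or Spark version.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical launcher helper that assembles AI Extension flags. */
final class AiExtensionArgsSketch {

    // Unit names as listed for the AI Extension; update if the release differs.
    static final Set<String> KNOWN_UNITS = Set.of("es_8.17", "spark_4.0", "hotcode_1.0");

    static List<String> jvmArgs(List<String> units) {
        List<String> flags = new ArrayList<>();
        flags.add("-XX:+UnlockExperimentalVMOptions");
        flags.add("-XX:+UseAIExtension");
        for (String unit : units) {
            if (!KNOWN_UNITS.contains(unit)) {
                throw new IllegalArgumentException("unknown AI Extension unit: " + unit);
            }
            // The option is repeated once per enabled enhancement.
            flags.add("-XX:AIExtensionUnit=" + unit);
        }
        return flags;
    }

    public static void main(String[] args) {
        List<String> command = new ArrayList<>();
        command.add("java");
        command.addAll(jvmArgs(List.of("es_8.17", "spark_4.0")));
        // Application arguments (main class, options) would follow here.
        System.out.println(String.join(" ", command));
    }
}
```

The resulting list can be handed directly to `java.lang.ProcessBuilder`, which avoids shell quoting issues when several units are enabled.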

The JTune feature is provided as a standalone command-line tool. It supports both online and offline collection of Java application performance data for intelligent analysis. Because the underlying machine learning algorithms consume CPU resources, we recommend offline collection:

First, use the jcmd command to sample performance data while the application runs. Then run JTune on the resulting recording:

```shell
jtune output.jfr --feature=G1GC
```

Wait a few minutes to receive GC parameter recommendations tailored to your specific application and hardware environment.

Detailed documentation and usage instructions for these AI enhancements will be publicly available upon the official release of Alibaba Dragonwell 21 AI Extension. Stay tuned.
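The exact jcmd invocation for the offline collection step is not spelled out here. If you would rather capture data from inside the application (for example, in a test harness), the standard `jdk.jfr` API can also write a .jfr file. This is an assumption-laden sketch: the article does not say which JFR events JTune reads or whether it accepts recordings made this way, so the GC events enabled below are a guess, and the workload is a placeholder.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import jdk.jfr.Recording;

/** Hypothetical in-process alternative to sampling with jcmd. */
final class JfrCaptureSketch {

    static Path record(Path output, Runnable workload) throws IOException {
        try (Recording recording = new Recording()) {
            // GC-related events; whether JTune needs others is an open assumption.
            recording.enable("jdk.GarbageCollection");
            recording.enable("jdk.GCHeapSummary");
            recording.enable("jdk.G1HeapSummary");
            recording.start();
            workload.run();
            recording.stop();
            // A stopped (but not closed) recording can still be dumped to disk.
            recording.dump(output);
        }
        return output;
    }

    public static void main(String[] args) throws IOException {
        Path out = record(Path.of("output.jfr"), () -> {
            // Placeholder workload: allocate short-lived garbage, then request a GC.
            for (int i = 0; i < 200_000; i++) {
                byte[] chunk = new byte[1024];
                chunk[0] = (byte) i;
            }
            System.gc();
        });
        System.out.println("wrote " + Files.size(out) + " bytes to " + out);
    }
}
```

The file name output.jfr matches the JTune command above; for a long-running production service, the jcmd route avoids changing application code.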

Alibaba Dragonwell 21 AI Extension is developed on top of Dragonwell 21 Extended Edition. Because Dragonwell 21 Extended Edition is a downstream distribution of OpenJDK 21, you can run your existing JDK 21 applications directly on the AI Extension without any code changes or migration effort.

Alibaba Dragonwell 21 AI Extension offers long-term support (LTS), with a maintenance schedule aligned with OpenJDK. Updates are released quarterly, with support guaranteed at least until November 2029. During this period, Alibaba Cloud will continue to provide performance optimizations and security updates for Dragonwell 21, ensuring that you can use it securely and reliably.

Alibaba Dragonwell 21 AI Extension uses three core technological innovations to deliver an ideal combination of extreme performance and ease of use for enterprise customers in the AI era: native acceleration, hot code reordering, and JTune. Together, these features optimize native computing power, execution efficiency, and runtime configurations, simplifying the complex process of performance tuning. Without deep knowledge of JVM fundamentals, you can efficiently handle AI workloads and gain instant performance improvements out of the box.

These optimizations are grounded in the extensive experience of Alibaba across massive production workloads. Technologies that have been rigorously validated in real-world environments are transformed into standardized, easy-to-use product features, helping enterprise customers confidently address AI-era challenges. This is only the beginning. In the future, we plan to extend native acceleration to support more mainstream AI computing frameworks and core components, further simplify the code cache optimization workflow, introduce more advanced AI-driven algorithms for adaptive parameter tuning, and deliver additional internal optimizations to continuously enhance product intelligence and performance.

Looking ahead, AI-enhanced JVMs will become an indispensable component of the AIOps ecosystem. We envision a next-generation Java runtime that is no longer a passive code execution engine, but an intelligent entity capable of perception, decision-making, and self-optimization. Native acceleration will continue to extend, covering more mainstream AI frameworks to provide applications with abundant computing power. Hot code reordering and JTune will integrate deeply, enabling JVMs to adapt autonomously and dynamically adjust runtime status based on real-time workloads, thus achieving "self-driving" performance optimization. Ultimately, a highly intelligent JVM will proactively expose rich runtime information to AIOps platforms, enabling more precise anomaly detection, troubleshooting, and capacity forecasting, ensuring that the Java technology stack remains robust and resilient in the AI era.

The first release of Alibaba Dragonwell 21 AI Extension will soon be available as a "Taster Edition", and will later be officially launched alongside the standard Dragonwell release. We sincerely invite developers and enterprises in the Java and AI domains to download and try it, and experience firsthand the next-generation JVM's powerful capabilities for AI applications.

Finally, join our Dragonwell customer support group on DingTalk (ID 35434688) to provide feedback. Together, let's continue to drive Java's evolution and excellence in the AI era.

More Posts by OpenAnolis