By Yu Lei, nicknamed Liangxi at Alibaba.

Created some twenty years ago, Java is an object-oriented programming (OOP) language backed by a large number of excellent enterprise-level frameworks. It provides stability and high performance under rigorous, long-running operating conditions. On the cloud, however, language simplicity is important for fast iteration and delivery, which makes Java, a rather heavyweight language, seem like an inappropriate choice. Yet Java is still an important tool.

This article was prepared by Yu Lei, a technical expert from the Alibaba JVM team. In this article, Yu Lei describes how the JVM team deals with the challenge of serving a large number of complex services within Alibaba using Java.

One of Java's major weaknesses is its high resource consumption, particularly its heap memory usage. Even when no requests are being processed and no objects are being allocated, a Java process still reserves the full heap memory space so that memory can be allocated and moved quickly during garbage collection.
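To make the gap between reserved and used heap concrete, here is a minimal sketch that uses only the standard `Runtime` API. The class name `HeapReport` is an illustrative assumption and is not part of AJDK. On a JVM started with equal `-Xms` and `-Xmx` values, the committed figure would typically stay near the maximum even when the application is idle.

```java
// Minimal sketch: compare the heap the JVM has committed with what is actually in use.
class HeapReport {
    /** Heap memory currently reserved (committed) by the JVM, in bytes. */
    static long committedBytes() {
        return Runtime.getRuntime().totalMemory();
    }

    /** Heap memory currently occupied by objects (live or not yet collected), in bytes. */
    static long usedBytes() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    /** Upper bound the heap may grow to (for example, set with -Xmx), in bytes. */
    static long maxBytes() {
        return Runtime.getRuntime().maxMemory();
    }

    public static void main(String[] args) {
        long mb = 1024 * 1024;
        System.out.printf("committed=%d MB, used=%d MB, max=%d MB%n",
                committedBytes() / mb, usedBytes() / mb, maxBytes() / mb);
    }
}
```

The difference between the committed and used values is memory that the process holds but is not currently using, which is the kind of memory a mechanism such as ElasticHeap aims to give back.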

AJDK is Alibaba's custom Java Development Kit. Its ZenGC and ElasticHeap features have supported hundreds of applications and hundreds of thousands of instances on core links during the Double 11 Shopping Festival, the largest online shopping promotion in the world.

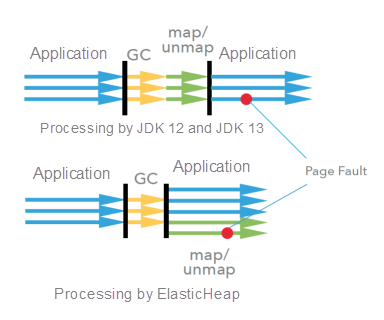

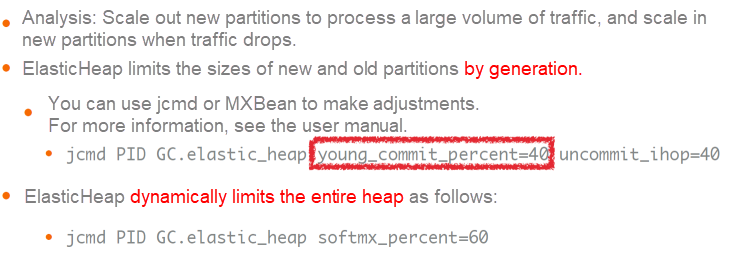

JDK 12 and later versions can trigger concurrent marking at regular intervals and shrink the Java heap during the remark phase, returning the freed memory to the operating system. However, this does not solve the problem of stop-the-world (STW) pauses, and heap memory cannot be returned during young garbage collection cycles. In ElasticHeap, concurrent, asynchronous threads absorb the overhead of repeatedly mapping and unmapping memory and of handling page faults. As a result, heap memory can be promptly returned to the operating system, or made available for use again, during each young garbage collection cycle.

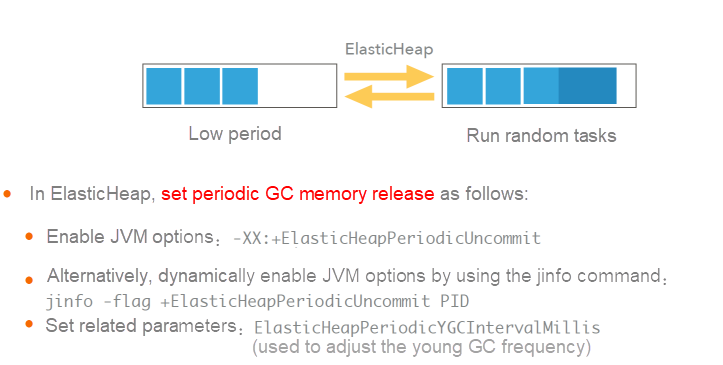

When multiple Java instances receive random traffic and their traffic peaks do not overlap, returning idle heap memory reduces the overall memory usage of the instances during idle times and improves deployment density.

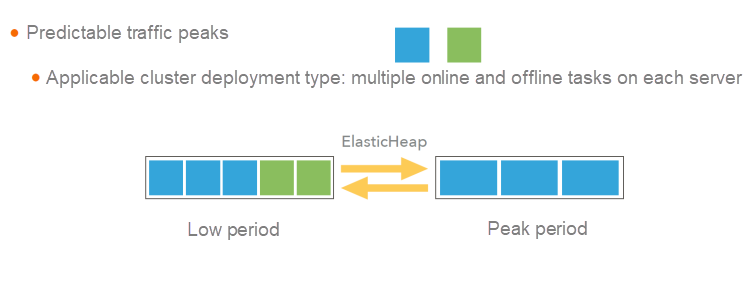

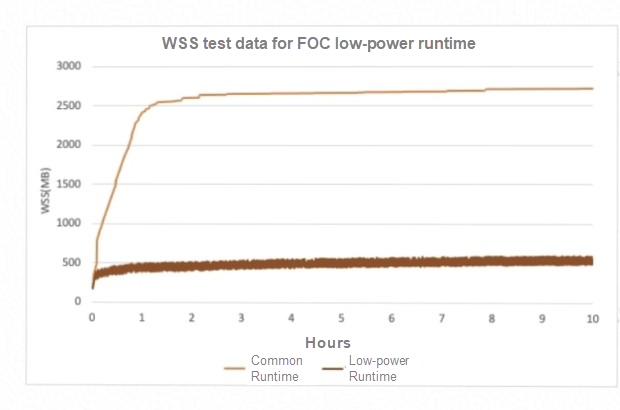

During Alibaba's e-commerce platform's biggest promotion, Double 11, our core transaction system used ElasticHeap to run in low-power mode, greatly reducing the working set size (WSS) of instances.

Many new applications on the cloud are developed in Go to avoid runtime dependencies. Statically compiled applications start up quickly, without a just-in-time (JIT) compilation ramp-up period. So how do we bring these advantages of Go to the Java code used at Alibaba?

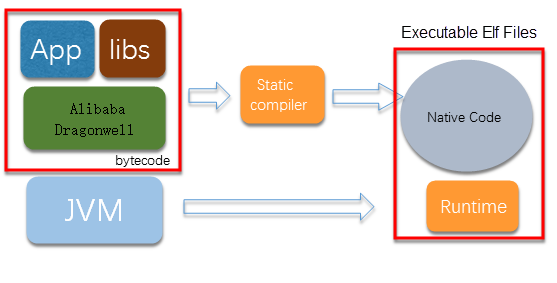

Java static compilation is an advanced form of ahead-of-time (AOT) compilation. Java programs are compiled into native code in an independent compilation phase and do not require a traditional JVM or runtime environment. They only need the support of operating system libraries. The accompanying figure shows a schematic of Java static compilation. Static compilation turns Java programs into self-bootstrapping native programs that retain Java behavior. Such programs combine the advantages of Java programs and native programs.

The JVM team has worked closely with the SOFAStack team to develop middleware applications through static compilation. Static compilation reduces the startup duration of an application from 60 seconds to 3.8 seconds. During Double 11, statically compiled applications ran stably and without faults. The garbage collection pause was 100 milliseconds, which is acceptable for services. The memory usage and response time were the same as those of traditional Java applications.

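Statically compiled applications are on an equal footing with traditional Java applications in terms of stability, resource usage, and response time, but start far faster: the drop from 60 seconds to 3.8 seconds described above is a reduction of about 94%, or a startup that is roughly 16 times faster.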

Imagine that you develop a simple web service by using Vert.X, and a quality assurance engineer asks you to stress test it in a 1-core container with 2 GB of memory. You may find that your web service cannot match applications compiled with Go. This is because the coroutine model performs much better when the number of cores is small. But is this problem caused by Java?

Wisp2, a feature of Alibaba's customized JDK (AJDK), lets you develop applications with high-performance coroutines in Java. Rolled out on a large scale this year, Wisp2 supports coroutine scheduling in the Java runtime and converts thread blocking, such as a blocking Socket.getInputStream().read() call, into lightweight coroutine switches.

Wisp2 is fully compatible with the Thread API. In a Wisp2-enabled JDK, Thread.start() creates a coroutine, that is, a lightweight thread. By comparison, Go provides only the "go" keyword for creating coroutines and does not expose a thread API. Likewise, Wisp2 provides only a way to create coroutines, which lets existing applications switch to coroutines transparently.
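Because the compatibility is at the level of the Thread API, the application code itself does not change. The sketch below is ordinary thread-per-task Java that uses only standard JDK classes; the class name `ThreadPerTask` and the queue-based blocking call are illustrative assumptions, and nothing in it is specific to Wisp2. On a stock JDK each `Thread.start()` creates an operating system thread, whereas the article states that a Wisp2-enabled JDK would create a coroutine for the same call.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;

// Ordinary Thread API code; nothing here is Wisp2-specific.
class ThreadPerTask {
    /** Starts one thread per task; each blocks until a request arrives, then counts it. */
    static int runTasks(int taskCount) {
        BlockingQueue<String> requests = new LinkedBlockingQueue<>();
        AtomicInteger handled = new AtomicInteger();
        Thread[] workers = new Thread[taskCount];

        for (int i = 0; i < taskCount; i++) {
            workers[i] = new Thread(() -> {
                try {
                    requests.take();              // blocking call: waits until a request arrives
                    handled.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            workers[i].start();                   // per the article, a coroutine on a Wisp2-enabled JDK
        }

        try {
            for (int i = 0; i < taskCount; i++) {
                requests.put("request-" + i);     // unblock one waiting worker per request
            }
            for (Thread worker : workers) {
                worker.join();                    // wait for every worker to finish
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return handled.get();
    }

    public static void main(String[] args) {
        System.out.println("handled " + runTasks(100) + " requests");
    }
}
```

The point of the design is that blocking-style code like this stays readable, while the cost of each blocked task depends on what the runtime creates behind `Thread.start()`.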

Wisp2 supports work stealing and a scheduling policy suitable for web scenarios to minimize scheduling overhead under heavy workloads.
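For readers unfamiliar with work stealing, the following sketch shows the general idea using the work-stealing pool that ships with the standard JDK (`Executors.newWorkStealingPool()`). It is not Wisp2's scheduler, and the class name `WorkStealingDemo` is an illustrative assumption. In a work-stealing pool, each worker keeps its own task queue, and idle workers take tasks from busy ones instead of contending on a single shared queue.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustration of work stealing with the standard JDK pool; not Wisp2's scheduler.
class WorkStealingDemo {
    /** Computes 1^2 + 2^2 + ... + n^2 by submitting one small task per term. */
    static long sumOfSquares(int n) {
        // Parallelism defaults to the number of available processors.
        ExecutorService pool = Executors.newWorkStealingPool();
        try {
            List<Callable<Long>> tasks = new ArrayList<>();
            for (int i = 1; i <= n; i++) {
                final long value = i;
                tasks.add(() -> value * value);
            }
            long total = 0;
            // invokeAll returns once every task has completed.
            for (Future<Long> result : pool.invokeAll(tasks)) {
                total += result.get();
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(100));
    }
}
```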

During the 2019 Double 11 Shopping Festival, Wisp supported hundreds of applications and hundreds of thousands of containers. Currently, 90% of Alibaba's containers have been updated to Wisp2.

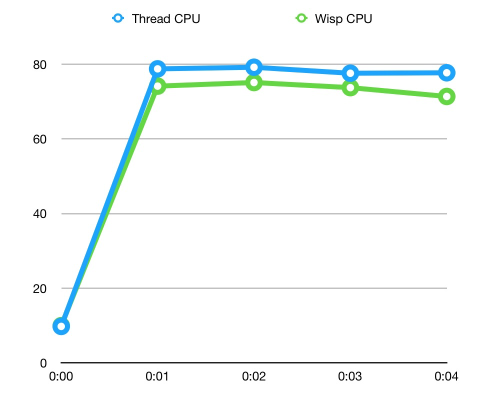

Near peak load, the CPU usage of Wisp2-enabled servers is about 7% lower, and Wisp1-enabled servers have even lower CPU usage. This is because Wisp2 is specially designed to reduce response times. The reduction mainly comes from the system CPU time saved by lightweight scheduling. The CPU usage is the same at midnight, the start of Double 11, where the highest peaks in traffic are seen. This indicates that Wisp2 addresses scheduling overhead and shows little performance advantage when CPU usage is low and there is no scheduling burden.

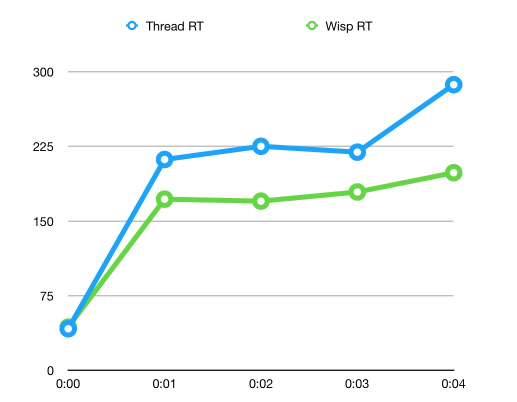

The response times of the Wisp2-enabled server are about 20% lower due to coroutine scheduling under heavy workloads on the CPU. Coroutine scheduling pushes up the resource usage limit and prevents system crashes due to a high response time.

During Double 11, CPU usage peaked in the first several minutes after midnight, when the big promotion began. Data analysis indicates that the main cause was JIT compilation triggered at midnight. Consider program logic such as the following: if (is1111(LocalDate.now())) { branch1 } else { branch2 }. If only branch2 is executed during the ramp-up period, JIT compilation does not cover branch1. When branch1 is first entered at midnight, deoptimization is triggered and the method must be recompiled. Now, let's look at how AJDK solves the problem of deoptimization through profiling.

When the JDK runs code, it dynamically compiles Java methods through tiered compilation. To achieve optimal peak performance, the highest compilation tier makes some optimistic assumptions based on the profiling data collected so far. Deoptimization occurs when these assumptions do not hold. For example, if a code snippet in a hotspot method is only executed during the Double 11 Shopping Festival, this code snippet is not compiled during the ramp-up period. Deoptimization is triggered for the method when the code snippet is executed during Double 11.
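The pattern described above can be sketched in a few lines of Java. The class `PromotionPricing`, the method `priceInCents`, and the 50% discount rule are hypothetical and only serve to give the two branches something to do; `is1111` follows the name used in the article's example. During warm-up only the else branch runs, so a JIT compiler may compile the method on the assumption that the promotion branch is never taken.

```java
import java.time.LocalDate;
import java.time.Month;

// Hypothetical example of a branch that stays cold until the promotion starts.
class PromotionPricing {
    /** True only on November 11 (the Double 11 Shopping Festival). */
    static boolean is1111(LocalDate date) {
        return date.getMonth() == Month.NOVEMBER && date.getDayOfMonth() == 11;
    }

    /** Hot method: before the promotion, every call takes the else branch. */
    static long priceInCents(long listPriceInCents, LocalDate today) {
        if (is1111(today)) {
            // branch1: never executed during ramp-up, so the compiler has no profile for it
            return listPriceInCents / 2;          // hypothetical promotion rule: 50% off
        } else {
            // branch2: the only path seen during ramp-up
            return listPriceInCents;
        }
    }

    public static void main(String[] args) {
        LocalDate warmUpDay = LocalDate.of(2019, 11, 10);
        long total = 0;
        for (int i = 0; i < 200_000; i++) {
            total += priceInCents(1000, warmUpDay);   // warms up the method on branch2 only
        }
        // The first call on the promotion day enters the branch that was never profiled.
        long promoPrice = priceInCents(1000, LocalDate.of(2019, 11, 11));
        System.out.println(total + " " + promoPrice);
    }
}
```

Running a program like this on HotSpot with `-XX:+PrintCompilation` may show the compiled method being marked "made not entrant" when the cold branch is first taken, although whether that happens depends on the JVM version and its inlining decisions.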

Deoptimization hurts performance in two ways. First, the affected method falls back from efficient compiled code to interpretation, which can lower its running speed by a factor of over 100. Second, a method that is deoptimized during a traffic peak is rapidly recompiled, and the compilation threads consume CPU resources. The adverse impact of deoptimization is all the more obvious in scenarios where the traffic volume increases rapidly in a short period of time compared with the traffic during the ramp-up period. The Double 11 Shopping Festival is precisely such a scenario.

Feedback-directed optimization (FDO) improves compilation in the current run based on information from previous JVM runs. Information about deoptimization at run time is recorded in a file, which is read on the next run. The recorded information is then used to decide whether to make optimistic assumptions, which reduces the probability of deoptimization. The recorded information shows that deoptimization occurs in more than half of if-else statements. Therefore, our team provided a way to disable all related optimistic assumptions on a path based on information about past occurrences of deoptimization in if-else statements.
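The record-then-reuse cycle described above can be illustrated with a small conceptual sketch. This is not AJDK's implementation: the class `DeoptProfile`, its methods, the one-site-per-line file format, and the site name are all hypothetical, and a real JVM makes this decision inside the JIT compiler rather than in application code.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Set;
import java.util.TreeSet;

// Conceptual sketch only: all names are hypothetical and none of this is an AJDK API.
class DeoptProfile {
    // Code locations (for example "Class.method@branch") that deoptimized in a past run.
    private final Set<String> deoptimizedSites = new TreeSet<>();

    /** Called when an optimistic assumption at this site turned out to be wrong. */
    void recordDeoptimization(String site) {
        deoptimizedSites.add(site);
    }

    /** Speculation is allowed only at sites with no recorded deoptimization. */
    boolean allowOptimisticAssumption(String site) {
        return !deoptimizedSites.contains(site);
    }

    /** Persists the recorded sites, one per line, at the end of a run. */
    void save(Path file) {
        try {
            Files.write(file, deoptimizedSites);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Loads the sites recorded by a previous run; a missing file yields an empty profile. */
    static DeoptProfile load(Path file) {
        DeoptProfile profile = new DeoptProfile();
        try {
            if (Files.exists(file)) {
                profile.deoptimizedSites.addAll(Files.readAllLines(file));
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return profile;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("deopt-profile", ".txt");
        String site = "PromotionPricing.priceInCents@branch1";   // hypothetical site name

        DeoptProfile firstRun = load(file);       // nothing recorded yet
        firstRun.recordDeoptimization(site);      // the assumption failed during this run
        firstRun.save(file);

        DeoptProfile secondRun = load(file);      // next run reads the previous run's record
        System.out.println(secondRun.allowOptimisticAssumption(site));   // prints false
        Files.delete(file);
    }
}
```

The trade-off in this kind of scheme is that disabling an assumption gives up some peak performance on that path in exchange for avoiding a deoptimization and recompilation at the worst possible moment.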

Feedback-directed optimization was applied during the 2019 Double 11 Shopping Festival to solve the following two problems:

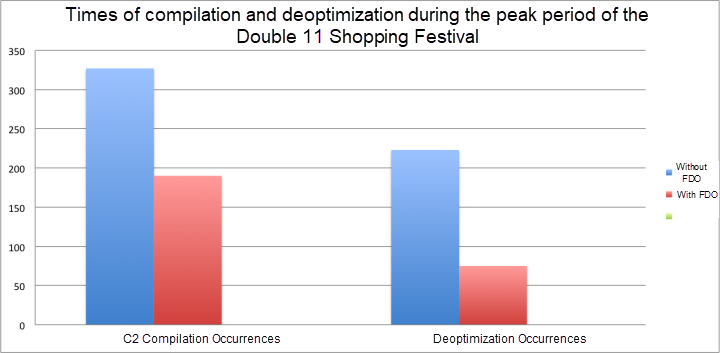

To address the first problem, we collected CPU usage data and counted the occurrences of deoptimization and C2 compilation at one minute after midnight during the Double 11 Shopping Festival when a traffic peak occurred.

With FDO enabled, the occurrences of C2 compilation fell by about 45%, and the occurrences of deoptimization fell by about 70%.

With FDO enabled, CPU usage during the first minute of the peak period dropped from about 67.5% to 63.1%, which is about 4.4 percentage points, or a relative reduction of roughly 6.5%.

To address the second problem, we verified the CPU usage during the first minute of the stress test.

With FDO enabled, the CPU usage during the first minute of the stress test dropped from 66.19% to 60.33%, which is about 5.9 percentage points, or a relative reduction of roughly 9%.
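Because a drop in CPU usage can be quoted either in percentage points or as a relative reduction, the short sketch below recomputes both from the before and after values given above. The class and method names are illustrative assumptions.

```java
// Recomputes the CPU usage reductions from the before/after figures quoted in the text.
class CpuReduction {
    /** Absolute drop, in percentage points. */
    static double percentagePointDrop(double before, double after) {
        return before - after;
    }

    /** Relative drop, as a percentage of the starting value. */
    static double relativeReductionPercent(double before, double after) {
        return (before - after) / before * 100.0;
    }

    public static void main(String[] args) {
        // Peak period: about 67.5% before, 63.1% after.
        System.out.printf("peak: %.2f points, %.2f%% relative%n",
                percentagePointDrop(67.5, 63.1), relativeReductionPercent(67.5, 63.1));
        // Stress test: 66.19% before, 60.33% after.
        System.out.printf("stress test: %.2f points, %.2f%% relative%n",
                percentagePointDrop(66.19, 60.33), relativeReductionPercent(66.19, 60.33));
    }
}
```

With these inputs, the relative reductions come out at roughly 6.5% for the peak period and roughly 8.9% for the stress test.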

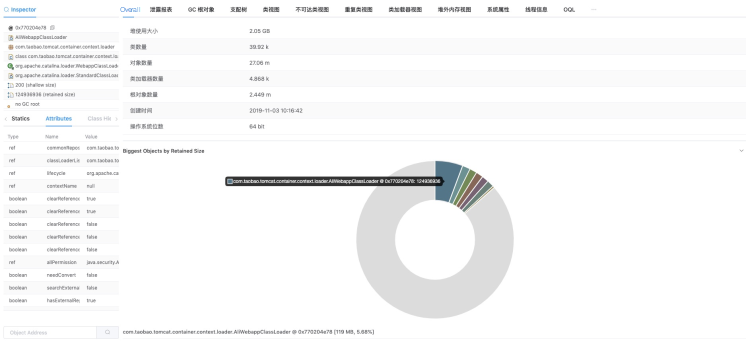

ZProfiler is used to troubleshoot problems with Java applications at Alibaba. Grace, the optimized and platform-based successor to ZProfiler, replaces the standalone architecture with a master/worker architecture and introduces a task queuing mechanism to relieve heavily loaded worker threads. Grace is more maintainable and scalable and delivers a better user experience than ZProfiler. This lays a solid foundation for migrating tools and platforms to the cloud and developing open-source projects.

Grace is integrated with the Heap Dump function and optimizes the functions of ZProfiler. It provides an advanced version of the parsing engine to fully support OQL syntax.

JDK 8 is a widely used classic version. Migration from JDK 6 and JDK 7 is complex but provides many benefits.

OpenJDK 11 is the next stable, long-term support release after OpenJDK 8. The JVM team will pay close attention to the updates of OpenJDK 11. Currently, AJDK 11 supports the Wisp2 and multi-tenant features of AJDK 8. The clusters that were migrated to JDK 11 performed stably during the 2019 Double 11 Shopping Festival.

Will upgrading to JDK 11 bring as many benefits as upgrading to JDK 8 did? JDK 11 introduces the Z Garbage Collector (ZGC).

ZGC is an important feature of JDK 11. It can keep pause times within 10 ms on heaps ranging from dozens of gigabytes to several terabytes in size. Java developers favor ZGC because it avoids the latency caused by the long pauses typical of other garbage collectors.

Currently, Z Garbage Collector is still an experimental feature of OpenJDK. JDK 11 is not yet widely used in the industry, and it supports ZGC only on Linux. ZGC support for macOS and Windows is planned for JDK 14, which is scheduled for release in March 2020. Java developers will have to wait a bit longer.
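Since ZGC is experimental in JDK 11, it has to be switched on explicitly with `-XX:+UnlockExperimentalVMOptions -XX:+UseZGC`. A simple way to check which collector a JVM is actually running is to list its garbage collector MXBeans, as in the sketch below. It uses only the standard `java.lang.management` API; the class name `ActiveCollectors` is an illustrative assumption, and the exact bean names depend on the JDK version and the selected collector.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Lists the garbage collectors that the running JVM reports through JMX.
class ActiveCollectors {
    /** Returns the names of the collectors active in this JVM. */
    static List<String> collectorNames() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            // getCollectionTime() is the approximate accumulated collection time in milliseconds.
            System.out.printf("%s: %d collections, %d ms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
    }
}
```

Comparing this output with and without the ZGC flags on a Linux JDK 11 build is a quick way to confirm that the flags took effect before running any latency measurements.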

However, bold steps have been made. The Alibaba JVM team and database team have begun to run database applications on Z Garbage Collector and improve Z Garbage Collector based on the running results, such as by optimizing the Z Garbage Collector page caching mechanism and Z Garbage Collector trigger timing.

The two teams have stably run online database applications on Z Garbage Collector since September 2019. These applications withstood the traffic burden during the Double 11 Shopping Festival. Online feedback has been very positive.

AJDK, Alibaba's custom JDK, has evolved from a traditional managed runtime. AJDK will continue to improve the development experience for cloud applications and provide more possibilities for upper-layer applications through underlying innovation.

Alibaba Dragonwell 8 is a free distribution of OpenJDK. It features long-term support, including performance enhancements and security fixes, and currently supports the x86-64/Linux platform. It can significantly improve stability, efficiency, and performance for large-scale Java applications deployed in data centers. Alibaba Dragonwell 8 is a "friendly fork" under the same licensing terms as the OpenJDK project and is compatible with the Java SE standard, so you can use it to develop and run Java applications. It is the open-source version of AJDK, the custom version of OpenJDK used within Alibaba. AJDK is optimized for e-commerce, finance, and logistics and runs in the ultra-large Alibaba data center with more than 1,000,000 servers.

We are currently preparing to release Alibaba Dragonwell 11. Dragonwell 11 is a release version of Dragonwell based on OpenJDK 11. It provides a range of features such as cloud-based enablement, clear modularization, and long-term support. We recommend that you pay attention to this upcoming release and upgrade to Dragonwell 11 when it becomes available.
