By Yi Li, nicknamed Weiyuan at Alibaba. Yi Li is a senior technical expert at Alibaba Cloud.

What does the cloud-native era mean to Java developers? Some people say that cloud native is simply not meant for Java. I disagree: I believe Java will remain a major player in the cloud-native era. In this article, I use a series of experiments to back up this view.

In many ways, Java is still the king of enterprise software, yet developers both love and hate it. Its rich ecosystem and mature tooling greatly improve development efficiency. At run time, however, Java is notorious as a "memory eater" and a "CPU shredder", so it is continuously challenged by newer and older languages such as Node.js, Python, and Golang.

In the tech community, we often see people dismiss Java, arguing that it cannot keep up with the cloud-native trend. Let's set these views aside for the moment and consider what cloud native requires of an application runtime.

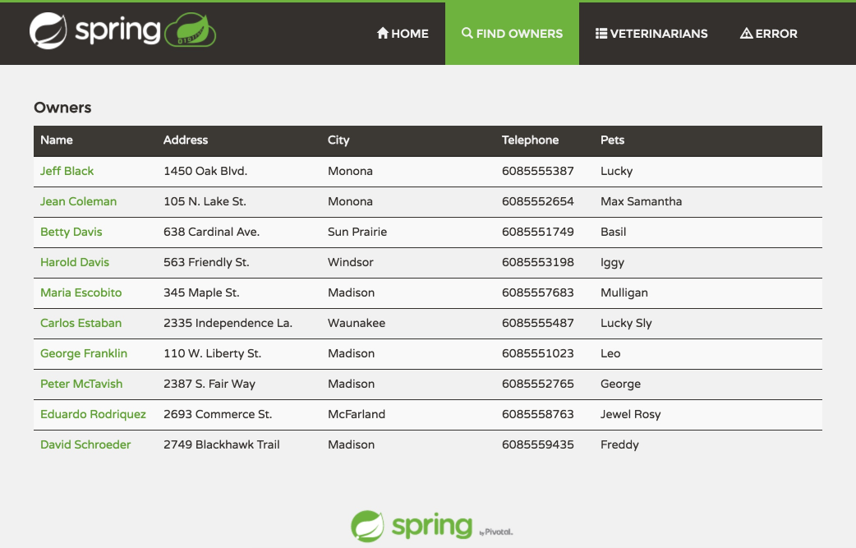

Most developers familiar with the Spring framework know Spring PetClinic. This article uses this well-known sample application to show how to make Java applications smaller, faster, lighter, and more powerful!

We forked the examples from Michael Thompson of IBM and made a few adjustments.

```shell
$ git clone https://github.com/denverdino/adopt-openj9-spring-boot
$ cd adopt-openj9-spring-boot
```

First, we build a Docker image for the PetClinic application. In the Dockerfile, we use OpenJDK as the base image, install Git and Maven, download, compile, and package the Spring PetClinic application, and set the image's startup command.

```shell
$ cat Dockerfile.openjdk
FROM adoptopenjdk/openjdk8
RUN sed -i 's/archive.ubuntu.com/mirrors.aliyun.com/' /etc/apt/sources.list
RUN apt-get update
RUN apt-get install -y \
    git \
    maven
WORKDIR /tmp
RUN git clone https://github.com/spring-projects/spring-petclinic.git
WORKDIR /tmp/spring-petclinic
RUN mvn install
WORKDIR /tmp/spring-petclinic/target
CMD ["java","-jar","spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar"]
```

Run the following commands to build and run the image:

```shell
$ docker build -t petclinic-openjdk-hotspot -f Dockerfile.openjdk .
$ docker run --name hotspot -p 8080:8080 --rm petclinic-openjdk-hotspot
...
2019-09-11 01:58:23.156 INFO 1 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 8080 (http) with context path ''
2019-09-11 01:58:23.158 INFO 1 --- [ main] o.s.s.petclinic.PetClinicApplication : Started PetClinicApplication in 7.458 seconds (JVM running for 8.187)
```

You can visit http://localhost:8080/ to open the application page. Now, inspect the built Docker image. The petclinic-openjdk-hotspot image is 871 MB, while the base image adoptopenjdk/openjdk8 is only 300 MB, a huge difference.

```shell
$ docker images petclinic-openjdk-hotspot
REPOSITORY                  TAG      IMAGE ID       CREATED        SIZE
petclinic-openjdk-hotspot   latest   469f73967d03   26 hours ago   871MB
```

Why is the image so large? To build the Spring application, we installed build-time dependencies such as Git and Maven in the image and generated a large number of temporary files. None of this content is needed at run time.

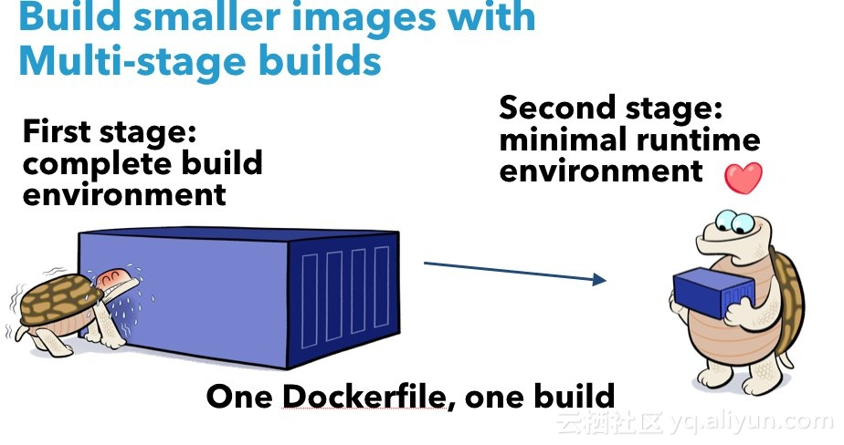

The fifth factor of the well-known 12-Factor App methodology states: "Strictly separate build and run stages." Strictly separating the two stages improves application traceability and keeps application delivery consistent. It also reduces the size of the distributed application and minimizes security risks.

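To reduce the image size, Docker provides multi-stage builds.

We can divide the image build process into two stages: the build stage compiles and packages the application with the full JDK, Git, and Maven, and the runtime stage starts from a slim Alpine-based JRE image and copies in only the resulting JAR file: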

```shell
$ cat Dockerfile.openjdk-slim
FROM adoptopenjdk/openjdk8 AS build
RUN sed -i 's/archive.ubuntu.com/mirrors.aliyun.com/' /etc/apt/sources.list
RUN apt-get update
RUN apt-get install -y \
    git \
    maven
WORKDIR /tmp
RUN git clone https://github.com/spring-projects/spring-petclinic.git
WORKDIR /tmp/spring-petclinic
RUN mvn install

FROM adoptopenjdk/openjdk8:jre8u222-b10-alpine-jre
COPY --from=build /tmp/spring-petclinic/target/spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar
CMD ["java","-jar","spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar"]
```

As you can see, the size of the new image has decreased from 871 MB to 167 MB.

```shell
$ docker build -t petclinic-openjdk-hotspot-slim -f Dockerfile.openjdk-slim .
...
$ docker images petclinic-openjdk-hotspot-slim
REPOSITORY                       TAG      IMAGE ID       CREATED        SIZE
petclinic-openjdk-hotspot-slim   latest   d1f1ca316ec0   26 hours ago   167MB
```

After the image size is reduced, application distribution is greatly accelerated. Next, we need to speed up application startup.

To overcome Java's startup bottleneck, we first need to understand how the JVM works. To achieve "write once, run anywhere," Java programs are compiled into platform-independent bytecode. At run time, the JVM translates this bytecode into native machine code for execution. How this translation is done determines how fast a Java application starts and runs.

To improve execution efficiency, JVMs introduced just-in-time (JIT) compilers. Java HotSpot VM, released by Sun and later maintained by Oracle, is the best-known JVM built around a JIT compiler. Its adaptive optimizer dynamically profiles running code, identifies hot paths, and compiles and optimizes them. HotSpot significantly improves the execution efficiency of Java applications and has been the default VM implementation since Java 1.3.

However, HotSpot compiles bytecode only at run time, after the application has started. As a result, code runs slowly in the interpreter during startup, and compilation and optimization consume substantial CPU resources, which further slows startup. The natural question is: can we optimize this process to speed up startup?
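A minimal Java sketch can make this warm-up effect visible. The class `JitWarmupDemo` and its methods are hypothetical names chosen for illustration: the program calls the same CPU-bound method several times and records how long each round takes. On a JIT-based VM such as HotSpot, the earliest rounds, which start in the interpreter, typically take longer than later rounds that run compiled code. The exact numbers depend on the machine, the VM, and its flags, so treat the output as indicative only.

```java
import java.util.ArrayList;
import java.util.List;

class JitWarmupDemo {
    // Written on every round so the JIT cannot discard the computation as dead code.
    static volatile long blackhole;

    // A CPU-bound method that a JIT compiler will eventually compile to native code.
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    // Calls sumOfSquares(n) once per round and records each round's duration in nanoseconds.
    static List<Long> timeRounds(int rounds, int n) {
        List<Long> durations = new ArrayList<>();
        for (int r = 0; r < rounds; r++) {
            long start = System.nanoTime();
            blackhole = sumOfSquares(n);
            durations.add(System.nanoTime() - start);
        }
        return durations;
    }

    public static void main(String[] args) {
        List<Long> durations = timeRounds(10, 1_000_000);
        for (int r = 0; r < durations.size(); r++) {
            System.out.printf("round %d: %d us%n", r, durations.get(r) / 1_000);
        }
    }
}
```

A micro-benchmark like this is only a rough illustration; dedicated harnesses such as JMH exist for reliable measurements.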

If you are familiar with the history of Java, you may know IBM J9 VM. An enterprise-grade, high-performance JVM, J9 helped IBM dominate the middleware market for commercial application platforms. In September 2017, IBM donated J9 to the Eclipse Foundation, where it was renamed Eclipse OpenJ9 and became an open-source project.

Eclipse OpenJ9 provides shared class cache (SCC) and ahead-of-time (AOT) compilation technologies, which significantly reduce the startup time of Java applications.

An SCC is a memory-mapped file that stores class data, profiling information collected by the J9 VM, and AOT-compiled native code. With AOT compilation enabled, the JVM stores compilation results in the SCC so that subsequent JVM startups can reuse them directly. Loading precompiled code from the SCC is faster and consumes fewer resources than JIT-compiling the same code at run time, so startup time can be shortened significantly.
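Because `-Xshareclasses` is an OpenJ9 option, it can be useful to confirm inside a container which VM is actually running and which options it received. The following sketch, with the hypothetical class name `VmInfo`, reads the standard `java.vm.name` system property and the JVM input arguments reported by `RuntimeMXBean`. It only shows that an option was passed, not that the cache was populated or used; OpenJ9's own `-Xshareclasses:printStats` reporting is the better tool for inspecting the cache itself.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

class VmInfo {
    // Returns the VM name reported by the running JVM, for example
    // "Eclipse OpenJ9 VM" on OpenJ9 or "OpenJDK 64-Bit Server VM" on HotSpot.
    static String vmName() {
        return System.getProperty("java.vm.name");
    }

    // Returns the JVM options this process was started with (not the program arguments).
    static List<String> jvmOptions() {
        return ManagementFactory.getRuntimeMXBean().getInputArguments();
    }

    // True if any JVM option starts with -Xshareclasses, i.e. the SCC was requested.
    static boolean sharedClassesRequested() {
        for (String option : jvmOptions()) {
            if (option.startsWith("-Xshareclasses")) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("VM: " + vmName());
        System.out.println("JVM options: " + jvmOptions());
        System.out.println("-Xshareclasses requested: " + sharedClassesRequested());
    }
}
```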

Let's start to build a Docker application image that incorporates AOT compilation optimization.

```shell
$ cat Dockerfile.openj9.warmed-slim
FROM adoptopenjdk/openjdk8-openj9 AS build
RUN sed -i 's/archive.ubuntu.com/mirrors.aliyun.com/' /etc/apt/sources.list
RUN apt-get update
RUN apt-get install -y \
    git \
    maven
WORKDIR /tmp
RUN git clone https://github.com/spring-projects/spring-petclinic.git
WORKDIR /tmp/spring-petclinic
RUN mvn install

FROM adoptopenjdk/openjdk8-openj9:jre8u222-b10_openj9-0.15.1-alpine
COPY --from=build /tmp/spring-petclinic/target/spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar
# Start and stop the JVM to pre-warm the class cache
RUN /bin/sh -c 'java -Xscmx50M -Xshareclasses -Xquickstart -jar spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar &' ; sleep 20 ; ps aux | grep java | grep petclinic | awk '{print $1}' | xargs kill -1
CMD ["java","-Xscmx50M","-Xshareclasses","-Xquickstart", "-jar","spring-petclinic-2.1.0.BUILD-SNAPSHOT.jar"]
```

The -Xshareclasses option enables the SCC, -Xscmx50M sets the cache size to 50 MB, and -Xquickstart enables AOT compilation. In the Dockerfile, we use a trick to warm up the SCC: at build time, we start the JVM with SCC and AOT enabled, let it load the application, and then stop the JVM. As a result, the Docker image contains a pre-generated SCC file. Now, let's build the image and start the test application.

```shell
$ docker build -t petclinic-openjdk-openj9-warmed-slim -f Dockerfile.openj9.warmed-slim .
$ docker run --name hotspot -p 8080:8080 --rm petclinic-openjdk-openj9-warmed-slim
...
2019-09-11 03:35:20.192 INFO 1 --- [ main] o.s.b.w.embedded.tomcat.TomcatWebServer : Tomcat started on port(s): 8080 (http) with context path ''
2019-09-11 03:35:20.193 INFO 1 --- [ main] o.s.s.petclinic.PetClinicApplication : Started PetClinicApplication in 3.691 seconds (JVM running for 3.952)
...
```

As you can see, the startup time has decreased by approximately 50%, from 8.2 seconds to 4 seconds.
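The roughly 50% figure can be checked against the "JVM running for" times in the two log excerpts. A tiny sketch (the class name `StartupComparison` is illustrative):

```java
class StartupComparison {
    // Relative reduction from `before` to `after`, as a percentage of `before`.
    static double percentReduction(double before, double after) {
        return (before - after) / before * 100.0;
    }

    public static void main(String[] args) {
        // "JVM running for" times from the HotSpot and warmed OpenJ9 logs, in seconds.
        double hotspotSeconds = 8.187;
        double openj9WarmedSeconds = 3.952;
        System.out.printf("Startup reduction: %.1f%%%n",
                percentReduction(hotspotSeconds, openj9WarmedSeconds));
    }
}
```

With these inputs it prints a reduction of about 51.7%, consistent with the approximate figure in the text.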

This solution trades space for time: the costly compilation and optimization work moves to the build stage, and the precompiled shared class cache (SCC) is stored in the Docker image. When the container starts, the JVM loads the SCC directly as a memory-mapped file, which speeds up startup and reduces resource usage.

Another advantage of this method is that Docker images are stored in layers, so multiple application containers on the same host can memory-map and share the same SCC. This can greatly reduce memory consumption when many instances are densely deployed on a single host.
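The sharing described here rests on ordinary file memory mapping. The sketch below is not how OpenJ9 implements its cache; it only illustrates the OS-level mechanism with the standard `FileChannel.map` API by writing a small file, mapping it read-only, and reading the bytes back through the mapping. The class name `MappedFileDemo` and the file contents are hypothetical. Because a read-only file mapping is backed by the operating system's page cache, several processes that map the same file can typically share the same physical pages instead of each holding a private copy.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

class MappedFileDemo {
    // Writes `content` to a temporary file, maps the file read-only, and decodes it back.
    static String roundTripThroughMapping(String content) {
        try {
            Path file = Files.createTempFile("mapped-demo", ".bin");
            try {
                Files.write(file, content.getBytes(StandardCharsets.UTF_8));
                try (FileChannel channel = FileChannel.open(file, StandardOpenOption.READ)) {
                    // Read-only, file-backed mapping: the OS serves it from the page cache,
                    // so other processes mapping the same file can share those pages.
                    MappedByteBuffer buffer =
                            channel.map(FileChannel.MapMode.READ_ONLY, 0, channel.size());
                    byte[] bytes = new byte[buffer.remaining()];
                    buffer.get(bytes);
                    return new String(bytes, StandardCharsets.UTF_8);
                }
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTripThroughMapping("precompiled data"));
    }
}
```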

Next, let's compare resource usage. Start four application containers from the HotSpot-based image, wait 30 seconds, and then check resource usage by running docker stats.

```shell
$ ./run-hotspot-4.sh
...
Wait a while ...
CONTAINER ID   NAME        CPU %   MEM USAGE / LIMIT     MEM %    NET I/O     BLOCK I/O   PIDS
0fa58df1a291   instance4   0.15%   597.1MiB / 5.811GiB   10.03%   726B / 0B   0B / 0B     33
48f021d728bb   instance3   0.13%   648.6MiB / 5.811GiB   10.90%   726B / 0B   0B / 0B     33
a3abb10078ef   instance2   0.26%   549MiB / 5.811GiB     9.23%    726B / 0B   0B / 0B     33
6a65cb1e0fe5   instance1   0.15%   641.6MiB / 5.811GiB   10.78%   906B / 0B   0B / 0B     33
...
```

Then, start four application containers from the OpenJ9-based image and check resource usage again.

```shell
$ ./run-openj9-warmed-4.sh
...
Wait a while ...
CONTAINER ID   NAME        CPU %   MEM USAGE / LIMIT     MEM %   NET I/O       BLOCK I/O        PIDS
3a0ba6103425   instance4   0.09%   119.5MiB / 5.811GiB   2.01%   1.19kB / 0B   0B / 446MB       39
c07ca769c3e7   instance3   0.19%   119.7MiB / 5.811GiB   2.01%   1.19kB / 0B   16.4kB / 120MB   39
0c19b0cf9fc2   instance2   0.15%   112.1MiB / 5.811GiB   1.88%   1.2kB / 0B    22.8MB / 23.8MB  39
95a9c4dec3d6   instance1   0.15%   108.6MiB / 5.811GiB   1.83%   1.45kB / 0B   102MB / 414MB    39
...
```

Comparing the two results, average memory usage per application instance drops from about 600 MB with HotSpot to about 120 MB with OpenJ9.
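The averages can be computed directly from the MEM USAGE columns of the two `docker stats` outputs. In this sketch (the class name `MemoryComparison` is illustrative), the values are typed in by hand:

```java
class MemoryComparison {
    // Arithmetic mean of the given values.
    static double mean(double... values) {
        double sum = 0;
        for (double v : values) {
            sum += v;
        }
        return sum / values.length;
    }

    public static void main(String[] args) {
        // MEM USAGE values (MiB) from the HotSpot and OpenJ9 docker stats outputs.
        double hotspot = mean(597.1, 648.6, 549.0, 641.6);
        double openj9 = mean(119.5, 119.7, 112.1, 108.6);
        System.out.printf("HotSpot average: %.1f MiB%n", hotspot);
        System.out.printf("OpenJ9 average:  %.1f MiB%n", openj9);
        System.out.printf("Reduction: %.1f%%%n", (hotspot - openj9) / hotspot * 100.0);
    }
}
```

This works out to roughly 609 MiB per HotSpot instance versus roughly 115 MiB per OpenJ9 instance, a reduction of about 81%.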

Generally, HotSpot's JIT compilation optimizes execution paths more broadly and deeply than AOT compilation does, so JIT-compiled code runs more efficiently. To balance this trade-off, OpenJ9 uses the AOT code in the SCC only during startup; during subsequent execution, the JIT compiler performs deeper optimizations such as branch prediction and code inlining.

For more information about the OpenJ9 SCC and AOT technologies, see the following:

Java HotSpot VM has made great progress in class data sharing (CDS) and AOT, but IBM J9 VM is still more mature in this regard. We hope that Alibaba Dragonwell will also provide corresponding optimizations.

Some food for thought: unlike statically compiled languages such as C, C++, Golang, and Rust, Java runs on a VM, which improves application portability at the cost of some performance. Can we take AOT to the extreme and eliminate the runtime translation of bytecode into native code altogether?

To compile Java applications into native executables, we must first deal with the dynamic runtime behavior of the JVM and application frameworks. The JVM provides a flexible class-loading mechanism, Spring's dependency injection (DI) loads and binds classes dynamically at run time, and the Spring framework also makes heavy use of reflection and runtime annotation processing. These dynamic features make application architectures more flexible and easier to use, but they slow down startup and make AOT native compilation and optimization very complex.
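To see why these dynamic features are hard for an ahead-of-time compiler, consider a deliberately tiny, hypothetical container that wires beans the way a reflection-based DI framework conceptually does: it looks up an implementation class by name at run time and instantiates it with reflection. This is not Spring's implementation; `GreetingService`, `EnglishGreetingService`, and `ReflectiveContainer` are names invented for this sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical service types used only for this illustration.
interface GreetingService {
    String greet(String name);
}

class EnglishGreetingService implements GreetingService {
    public EnglishGreetingService() {}

    @Override
    public String greet(String name) {
        return "Hello, " + name;
    }
}

class ReflectiveContainer {
    // Maps an interface name to an implementation class name, as a config file might.
    private final Map<String, String> bindings = new HashMap<>();

    void bind(String interfaceName, String implementationName) {
        bindings.put(interfaceName, implementationName);
    }

    // Loads and instantiates the bound implementation by name at run time.
    // Nothing in this code says statically which class will be loaded.
    <T> T get(Class<T> type) {
        String implName = bindings.get(type.getName());
        if (implName == null) {
            throw new IllegalStateException("No binding for " + type.getName());
        }
        try {
            Class<?> impl = Class.forName(implName);
            return type.cast(impl.getDeclaredConstructor().newInstance());
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot create " + implName, e);
        }
    }

    public static void main(String[] args) {
        ReflectiveContainer container = new ReflectiveContainer();
        // In a real framework, this class name would come from configuration or classpath scanning.
        container.bind(GreetingService.class.getName(), EnglishGreetingService.class.getName());
        System.out.println(container.get(GreetingService.class).greet("PetClinic"));
    }
}
```

Since the class to load is in general only known when `get` runs, a native-image compiler cannot reliably discover it by analyzing the code alone; such classes usually have to be declared ahead of time, typically through configuration.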

The community is exploring ingenious ways to meet these challenges, and Micronaut is an excellent example. Unlike Spring, Micronaut performs dependency injection and AOP processing at compile time and minimizes the use of reflection and dynamic proxies, so Micronaut applications start faster and use less memory. Even more interesting, Micronaut can work with Graal VM to compile Java applications into native executables that run at full speed. (Graal VM is a new general-purpose VM released by Oracle. It supports multiple languages and can compile Java applications into native executables.)
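By contrast, compile-time DI resolves the wiring during the build. Micronaut does this by generating bean definition classes through annotation processing; the hand-written factory below is only a simplified stand-in for that kind of generated code, not Micronaut's actual output. `OwnerRepository`, `OwnerService`, and `GeneratedBeanFactory` are hypothetical names. Every dependency is created with an ordinary constructor call, so a static compiler can see exactly which classes are used.

```java
// Hypothetical application types for this illustration.
class OwnerRepository {
    String findNameById(int id) {
        return id == 1 ? "Alice" : "unknown";
    }
}

class OwnerService {
    private final OwnerRepository repository;

    OwnerService(OwnerRepository repository) {
        this.repository = repository;
    }

    String describeOwner(int id) {
        return "Owner " + id + ": " + repository.findNameById(id);
    }
}

// Stand-in for code a compile-time DI framework would generate during the build.
// Dependencies are wired with plain constructor calls: no lookup by name, no reflection.
final class GeneratedBeanFactory {
    private OwnerRepository ownerRepository;
    private OwnerService ownerService;

    // Lazily creates the singleton repository.
    synchronized OwnerRepository ownerRepository() {
        if (ownerRepository == null) {
            ownerRepository = new OwnerRepository();
        }
        return ownerRepository;
    }

    // Lazily creates the singleton service, injecting the repository via its constructor.
    synchronized OwnerService ownerService() {
        if (ownerService == null) {
            ownerService = new OwnerService(ownerRepository());
        }
        return ownerService;
    }

    public static void main(String[] args) {
        GeneratedBeanFactory factory = new GeneratedBeanFactory();
        System.out.println(factory.ownerService().describeOwner(1));
    }
}
```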

Next, let's explore this approach using a Micronaut PetClinic sample project provided by Mitz, with some adjustments for Graal VM 19.2.

```shell
$ git clone https://github.com/denverdino/micronaut-petclinic
$ cd micronaut-petclinic
```

The Dockerfile is as follows:

```shell
$ cat Dockerfile
FROM maven:3.6.1-jdk-8 as build
COPY ./ /micronaut-petclinic/
WORKDIR /micronaut-petclinic
RUN mvn package

FROM oracle/graalvm-ce:19.2.0 as graalvm
RUN gu install native-image
WORKDIR /work
COPY --from=build /micronaut-petclinic/target/micronaut-petclinic-*.jar .
RUN native-image --no-server -cp micronaut-petclinic-*.jar

FROM frolvlad/alpine-glibc
EXPOSE 8080
WORKDIR /app
COPY --from=graalvm /work/petclinic .
CMD ["/app/petclinic"]
```

The Dockerfile has three stages:

- In the build stage, we use Maven to build the Micronaut PetClinic application.
- In the graalvm stage, we run native-image to convert the application's JAR file into a native executable.
- In the final stage, we copy the executable into a lightweight frolvlad/alpine-glibc image and run it directly.

Build the application:

```shell
$ docker-compose build
```

Start the test database:

```shell
$ docker-compose up db
```

Start the application to be tested:

```shell
$ docker-compose up app
micronaut-petclinic_db_1 is up-to-date
Starting micronaut-petclinic_app_1 ... done
Attaching to micronaut-petclinic_app_1
app_1 | 04:57:47.571 [main] INFO org.hibernate.dialect.Dialect - HHH000400: Using dialect: org.hibernate.dialect.PostgreSQL95Dialect
app_1 | 04:57:47.649 [main] INFO org.hibernate.type.BasicTypeRegistry - HHH000270: Type registration [java.util.UUID] overrides previous : org.hibernate.type.UUIDBinaryType@5f4e0f0
app_1 | 04:57:47.653 [main] INFO o.h.tuple.entity.EntityMetamodel - HHH000157: Lazy property fetching available for: com.example.micronaut.petclinic.owner.Owner
app_1 | 04:57:47.656 [main] INFO o.h.e.t.j.p.i.JtaPlatformInitiator - HHH000490: Using JtaPlatform implementation: [org.hibernate.engine.transaction.jta.platform.internal.NoJtaPlatform]
app_1 | 04:57:47.672 [main] INFO io.micronaut.runtime.Micronaut - Startup completed in 159ms. Server Running: http://1285c42bfcd5:8080
```

The application now starts in 159 milliseconds, about 1/50 of the startup time with HotSpot VM.

Micronaut and Graal VM are still evolving rapidly, and there are many things to consider when migrating a Spring application. In addition, the toolchains, including Graal VM debugging and monitoring, are still immature. Still, we can see a new dawn: Java applications and serverless architectures are not that far apart. We cannot go into detail here. If you are interested in Graal VM and Micronaut, visit the following pages:

Java, a giant that keeps moving forward, continues to evolve in the cloud-native era. Starting with JDK 10, and in JDK 8 from update 191 onward, the JVM is aware of the resource limits of Docker containers.
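A quick way to observe this container awareness is to print what the JVM believes it may use. The class name `ContainerResources` is illustrative; `availableProcessors()` and `maxMemory()` are standard `Runtime` methods. Running the program in containers started with different `docker run --cpus` and `--memory` limits should, on a container-aware JVM (HotSpot enables `-XX:+UseContainerSupport` by default from JDK 10 and 8u191), show values derived from those limits rather than from the host's resources.

```java
class ContainerResources {
    // Number of CPUs the JVM believes it may use; a container-aware JVM
    // derives this from the container's CPU limit rather than the host's CPU count.
    static int cpus() {
        return Runtime.getRuntime().availableProcessors();
    }

    // Maximum heap size in MiB; by default a container-aware JVM sizes the heap
    // from the container's memory limit instead of the host's physical memory.
    static long maxHeapMiB() {
        return Runtime.getRuntime().maxMemory() / (1024 * 1024);
    }

    public static void main(String[] args) {
        System.out.println("Available processors: " + cpus());
        System.out.println("Max heap (MiB): " + maxHeapMiB());
    }
}
```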

The community is exploring the boundaries of the Java technology stack in different directions. OpenJ9, a conventional JVM, maintains high compatibility with existing Java applications while greatly speeding up startup and reducing memory usage, so it works well with existing microservice frameworks such as Spring.

Micronaut and Graal VM, by contrast, break new ground. By changing the programming model and the compilation process, they handle applications' dynamic features at compile time, which significantly shortens application startup time. This makes Micronaut and Graal VM promising technologies for serverless architectures. All of these design ideas are worth learning from.

In the cloud-native era, we must effectively split and reorganize the development, delivery, and maintenance processes in the horizontal application development lifecycle to improve R&D collaboration efficiency. In addition, we must optimize systems in terms of programming models, application runtime, and infrastructure throughout the vertical software technology stack to implement radical simplification and improve system efficiency.
