Abstract: This article describes the backup and restoration principles involved with Tunnel Service and provides a step-by-step guide to building a data backup and restoration solution.

By Wang Zhuoran, nicknamed Zhuoran at Alibaba.

In information technology and data management, a backup is a copy of the data in a file system or database system, kept so that the data and the routine operation of the system can be restored after a disaster or an error. In actual backup scenarios, it is recommended that you keep three or more replicas of important data and store them in different locations for geo-redundancy.

Backup fundamentally serves two purposes. The first is to restore data lost to accidental deletion or corruption, and the second is to restore older data according to a custom data retention policy. The scope of replication is generally configured in the backup application.

Another important point is that a backup system may have high storage requirements, because it contains at least one replica of all the data worth storing. Organizing this storage space and managing backups can be complex. Many types of data storage devices are currently available for backup, and they can be configured in several ways to provide geo-redundancy, data security, and portability.

Before data is sent to a storage location, it generally has to be selected, extracted, and processed. You can optimize the backup process with a variety of technologies, including open file processing, real-time data source optimization, data compression, encryption, and deduplication. Every backup solution must also provide for drills, both to verify the reliability of the backed-up data and, more importantly, to reveal the limits and human factors involved in the solution.

Now that we are on the same page about data backup and restoration, let's discuss the backup and restoration principles involved with Tunnel Service, which is part of Alibaba Cloud Tablestore. This article also provides a step-by-step guide to building a data backup and restoration solution using Tablestore and Tunnel Service.

Storage systems must give top priority to data security and reliability. As such, they must provide the following guarantees:

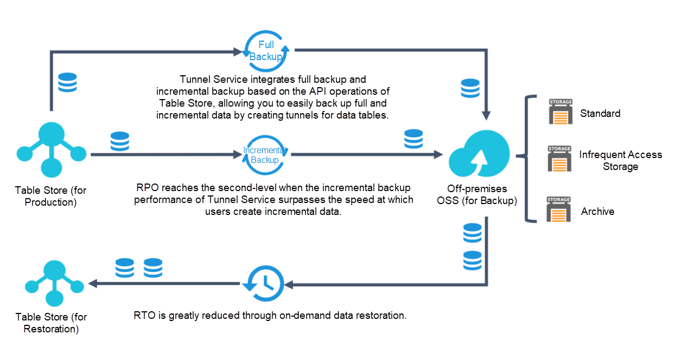

The following figure shows the logical structure of backup and restoration based on the Tunnel Service of Alibaba Cloud Tablestore. Tunnel Service integrates the full mode and the incremental mode, which makes it easier to build a complete data backup and restoration solution with real-time incremental backup and second-level restoration capabilities. Once the backup and restoration plan is configured, the entire backup and restoration system is fully automated.

Currently, Alibaba Cloud Tablestore does not provide official backup and restoration functions. So, let's take a look at how to design a custom backup and restoration solution for Tablestore based on the Tunnel Service of Tablestore. The actual procedure is divided into two parts: backup and recovery.

1. Backup Preparation: Determine the data source to back up and the destination location. In this case study, the data source to back up is tables in Alibaba Cloud Tablestore, and the destination location is buckets in Alibaba Cloud OSS. For this case study, we will be doing the following:

2. Recovery: The last step is recovery. For this step, all we need to do is restore the backed-up files.

A backup policy specifies the backup content, backup time, and backup method. Common backup policies are as follows:

Note that this case study uses the following backup plan and policy. You can customize a backup plan and policy as needed and use the Tunnel Service SDK to write the related code.

This step is described based on code snippets. The detailed code will be open-sourced later (links will be updated in this article). It's recommended that you read the user manual of the Tunnel Service SDK in advance.

1. Create a tunnel of the full-incremental type using the SDK or within the console.

```java
private static void createTunnel(TunnelClient client, String tunnelName) {
    // TableName is assumed to be a constant, defined elsewhere, that names the table to back up.
    // TunnelType.BaseAndStream requests a tunnel of the full-incremental type.
    CreateTunnelRequest request = new CreateTunnelRequest(TableName, tunnelName, TunnelType.BaseAndStream);
    CreateTunnelResponse resp = client.createTunnel(request);
    System.out.println("RequestId: " + resp.getRequestId());
    System.out.println("TunnelId: " + resp.getTunnelId());
}
```

2. Familiarize yourself with the automatic data processing framework of Tunnel Service

Implement the process function and the shutdown function for automatic data processing based on the instructions in the Quick Start Guide of Tunnel Service. The input parameters of the process function contain a list of StreamRecord objects. Each StreamRecord encapsulates the data of one row in Tablestore, including the record type, the primary key columns, the attribute columns, and the timestamp at which the record was written.
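To make the shape of these two callbacks concrete, here is a minimal sketch. It deliberately avoids the real SDK classes so that it compiles on its own: `SketchRecord`, `SketchProcessor`, and `BufferingProcessor` are hypothetical stand-ins invented for this illustration, not SDK types. In a real worker you would implement the processor interface described in the Quick Start Guide and receive actual StreamRecord objects.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for StreamRecord: one row change with the four
// pieces of information described above.
final class SketchRecord {
    final long timestamp;      // when the record was written
    final String recordType;   // PUT, UPDATE, or DELETE
    final String primaryKey;   // primary key columns, already serialized
    final String columns;      // attribute columns, already serialized

    SketchRecord(long timestamp, String recordType, String primaryKey, String columns) {
        this.timestamp = timestamp;
        this.recordType = recordType;
        this.primaryKey = primaryKey;
        this.columns = columns;
    }
}

// Hypothetical stand-in for the processor contract: one callback per batch
// of records, and one callback when the worker stops.
interface SketchProcessor {
    void process(List<SketchRecord> records);
    void shutdown();
}

// A processor that only buffers a short description of each record.
// A backup processor would persist the records instead.
final class BufferingProcessor implements SketchProcessor {
    private final List<String> buffered = new ArrayList<>();
    private boolean closed = false;

    @Override
    public void process(List<SketchRecord> records) {
        if (closed) {
            throw new IllegalStateException("processor already shut down");
        }
        for (SketchRecord r : records) {
            buffered.add(r.timestamp + "|" + r.recordType + "|" + r.primaryKey);
        }
    }

    @Override
    public void shutdown() {
        closed = true; // release resources here in a real implementation
    }

    List<String> buffered() {
        return buffered;
    }

    public static void main(String[] args) {
        BufferingProcessor processor = new BufferingProcessor();
        processor.process(Arrays.asList(
            new SketchRecord(0L, "PUT", "{\"id\":\"1\"}", "{}")));
        processor.shutdown();
        System.out.println(processor.buffered());
    }
}
```

The point of the split is that all per-batch work lives in `process`, while `shutdown` is the single place to flush and release resources when the worker stops.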

3. Design the persistence format for each row of data in Tablestore

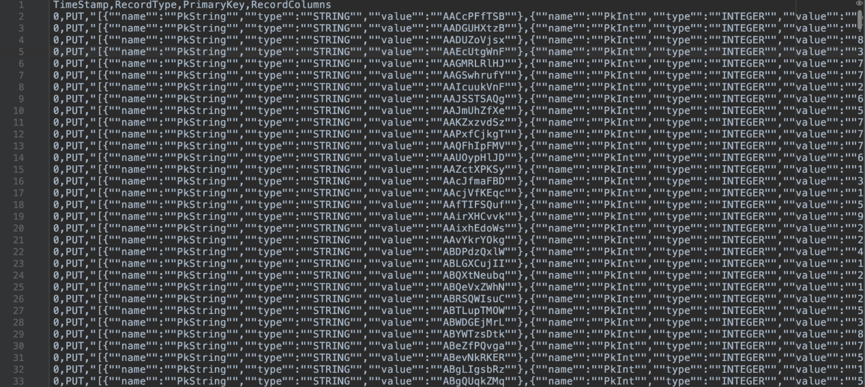

This case study uses the CSV file format, which keeps the data fully accessible. You may also use PB (Protocol Buffers) or other formats. Each data row corresponds to a row in a CSV file. The persistence format is shown in the accompanying figure. A CSV file has four columns. The TimeStamp column contains the timestamp at which the data was written to Tablestore (the value is 0 for a full backup, or a specific timestamp for an incremental backup). RecordType indicates the action type (PUT, UPDATE, or DELETE) of the data row. PrimaryKey is the JSON string of the primary key columns. RecordColumns is the JSON string of the attribute columns.

The following snippet is the core conversion code, which uses uniVocity-parsers (CSV) and Gson. Note the following points:
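As a rough illustration of this four-column layout, the sketch below assembles one CSV line by hand. It is not the article's implementation: `BackupRowFormat`, `quote`, and `toCsvLine` are hypothetical names, and the JSON shapes used for the primary key and the columns are made up for the example. It mainly shows why the two JSON fields must be quoted, since JSON text contains commas and double quotes.

```java
import java.util.StringJoiner;

final class BackupRowFormat {

    // Quote a CSV field in the usual way: wrap it in double quotes and
    // double every embedded double quote.
    static String quote(String field) {
        return "\"" + field.replace("\"", "\"\"") + "\"";
    }

    // Build one line in the order TimeStamp, RecordType, PrimaryKey, RecordColumns.
    static String toCsvLine(long timestamp, String recordType,
                            String primaryKeyJson, String columnsJson) {
        StringJoiner line = new StringJoiner(",");
        line.add(Long.toString(timestamp)); // 0 for a full backup row
        line.add(recordType);               // PUT, UPDATE, or DELETE
        line.add(quote(primaryKeyJson));
        line.add(quote(columnsJson));
        return line.toString();
    }

    public static void main(String[] args) {
        // The JSON strings below are illustrative only.
        System.out.println(toCsvLine(0L, "PUT", "{\"id\":\"u1\"}", "{\"name\":\"a,b\"}"));
    }
}
```

Running `main` prints `0,PUT,"{""id"":""u1""}","{""name"":""a,b""}"`. In practice a CSV library handles this quoting for you, which is one reason to prefer a library over hand-written string concatenation.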

```java
// Gson setup: byte[] values are handled by ByteArrayToBase64TypeAdapter,
// and long values are serialized as strings.
this.gson = new GsonBuilder().registerTypeHierarchyAdapter(byte[].class, new ByteArrayToBase64TypeAdapter())
    .setLongSerializationPolicy(LongSerializationPolicy.STRING).create();

// Going from ByteArrayOutputStream to ByteArrayInputStream incurs one array copy;
// consider using a pipe or an NIO channel instead.
public void streamRecordsToOSS(List<StreamRecord> records, String bucketName, String filename, boolean isNewFile) {
    if (records.isEmpty()) {
        LOG.info("No stream records, skip it!");
        return;
    }
    try {
        CsvWriterSettings settings = new CsvWriterSettings();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        CsvWriter writer = new CsvWriter(out, settings);
        if (isNewFile) {
            // Only a new file gets the header row.
            LOG.info("Write csv header, filename {}", filename);
            List<String> headers = Arrays.asList(RECORD_TIMESTAMP, RECORD_TYPE, PRIMARY_KEY, RECORD_COLUMNS);
            writer.writeHeaders(headers);
            System.out.println(writer.getRecordCount());
        }
        List<String[]> totalRows = new ArrayList<String[]>();
        LOG.info("Write stream records, num: {}", records.size());
        for (StreamRecord record : records) {
            // One CSV row per record: timestamp, record type, primary key JSON, columns JSON.
            String timestamp = String.valueOf(record.getSequenceInfo().getTimestamp());
            String recordType = record.getRecordType().name();
            String primaryKey = gson.toJson(
                TunnelPrimaryKeyColumn.genColumns(record.getPrimaryKey().getPrimaryKeyColumns()));
            String columns = gson.toJson(TunnelRecordColumn.genColumns(record.getColumns()));
            totalRows.add(new String[] {timestamp, recordType, primaryKey, columns});
        }
        writer.writeStringRowsAndClose(totalRows);
        // Write the CSV bytes to the OSS file.
        ossClient.putObject(bucketName, filename, new ByteArrayInputStream(out.toByteArray()));
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```

1. Run the automatic data processing framework of Tunnel Service with the backup policy code designed in the previous step mounted on it.

```java
public class TunnelBackup {
    private final ConfigHelper config;
    private final SyncClient syncClient;
    private final CsvHelper csvHelper;
    private final OSSClient ossClient;

    public TunnelBackup(ConfigHelper config) {
        this.config = config;
        syncClient = new SyncClient(config.getEndpoint(), config.getAccessId(), config.getAccessKey(),
            config.getInstanceName());
        ossClient = new OSSClient(config.getOssEndpoint(), config.getAccessId(), config.getAccessKey());
        csvHelper = new CsvHelper(syncClient, ossClient);
    }

    public void working() {
        TunnelClient client = new TunnelClient(config.getEndpoint(), config.getAccessId(), config.getAccessKey(),
            config.getInstanceName());
        OtsReaderConfig readerConfig = new OtsReaderConfig();
        // The processor carries the backup logic; the worker drives it.
        TunnelWorkerConfig workerConfig = new TunnelWorkerConfig(
            new OtsReaderProcessor(csvHelper, config.getOssBucket(), readerConfig));
        TunnelWorker worker = new TunnelWorker(config.getTunnelId(), client, workerConfig);
        try {
            worker.connectAndWorking();
        } catch (Exception e) {
            // On failure, stop the worker and release the client.
            e.printStackTrace();
            worker.shutdown();
            client.shutdown();
        }
    }

    public static void main(String[] args) {
        TunnelBackup tunnelBackup = new TunnelBackup(new ConfigHelper());
        tunnelBackup.working();
    }
}
```

2. Monitor the backup process

Monitor the backup process in the Tablestore console or use the DescribeTunnel operation to obtain client information (customizable) about each channel of the current tunnel, the total number of consumed rows, and the consumer offset.

Assume that you need to back up the data written between 2:00 pm and 3:00 pm. If the DescribeTunnel operation returns a consumer offset of 2:30 pm, the data written up to 2:30 pm, that is, the first half of the period, has been backed up. If it returns a consumer offset of 3:00 pm, all data from 2:00 pm to 3:00 pm has been backed up.
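The arithmetic in this example can be written down as a small helper. This is only a sketch under stated assumptions: `BackupProgress` and `fractionDone` are hypothetical names, the consumer offset is assumed to have already been read and converted to a timestamp, and the result measures how much of the time window is covered, which matches the share of rows only if writes are spread evenly over the window.

```java
import java.time.Duration;
import java.time.Instant;

final class BackupProgress {

    // Fraction of the window [windowStart, windowEnd] that lies at or before
    // the consumer offset, clamped to the range 0.0 to 1.0.
    static double fractionDone(Instant windowStart, Instant windowEnd, Instant consumerOffset) {
        long total = Duration.between(windowStart, windowEnd).toMillis();
        if (total <= 0) {
            throw new IllegalArgumentException("window end must be after window start");
        }
        long done = Duration.between(windowStart, consumerOffset).toMillis();
        double fraction = (double) done / total;
        return Math.max(0.0, Math.min(1.0, fraction));
    }

    public static void main(String[] args) {
        // The date is arbitrary; only the times of day matter for the example.
        Instant start = Instant.parse("2019-03-05T14:00:00Z");
        Instant end = Instant.parse("2019-03-05T15:00:00Z");
        Instant offset = Instant.parse("2019-03-05T14:30:00Z");
        System.out.println(fractionDone(start, end, offset)); // prints 0.5
    }
}
```

An offset before the window yields 0.0 and an offset at or after 3:00 pm yields 1.0, so the value can be reported directly as backup progress for that window.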

After backing up the data, you can perform a full or partial restoration as needed to reduce the recovery time objective (RTO). While restoring your data, you'll also want to take the following measures to optimize restoration performance:

```java
public class TunnelRestore {
    private final ConfigHelper config;
    private final SyncClient syncClient;
    private final CsvHelper csvHelper;
    private final OSSClient ossClient;

    public TunnelRestore(ConfigHelper config) {
        this.config = config;
        syncClient = new SyncClient(config.getEndpoint(), config.getAccessId(), config.getAccessKey(),
            config.getInstanceName());
        ossClient = new OSSClient(config.getOssEndpoint(), config.getAccessId(), config.getAccessKey());
        csvHelper = new CsvHelper(syncClient, ossClient);
    }

    // Restore the rows stored in the given backup file into the given table.
    public void restore(String filename, String tableName) {
        csvHelper.parseStreamRecordsFromCSV(filename, tableName);
    }

    public static void main(String[] args) {
        TunnelRestore restore = new TunnelRestore(new ConfigHelper());
        restore.restore("FullData-1551767131130.csv", "testRestore");
    }
}
```

To conclude, this article has described the following items:
