In the cloud-native era, container images have become a standard artifact for software development and deployment. The custom container runtime gives developers a simpler experience and makes development and delivery more efficient: developers can package their functions as container images and have them interact with Alibaba Cloud Function Compute over HTTP.

As described on its official website, KEDA is a Kubernetes-based event-driven autoscaler that automatically scales containers in Kubernetes based on the number of events that need to be processed. In this blog, we show how to combine the two: deploying Alibaba Cloud Function Compute functions in Kubernetes and scaling them with Kubernetes Event Driven Autoscaler (KEDA).

Alibaba Cloud Function Compute provides a function-oriented computing service with two key capabilities: a runtime that executes functions and an event-driven system that schedules and scales them.

The runtime is a container that holds the function's runtime dependencies plus everything needed for monitoring, logging, and so on. Such a container can be deployed in any Docker or OCI-compliant environment, including Kubernetes. The event-driven system in Alibaba Cloud Function Compute is complex, but Kubernetes together with KEDA can handle a subset of its scenarios.

KEDA can scale Kubernetes Deployments and other scalable objects to and from zero (0 ↔ 1). Beyond that, it works with the Horizontal Pod Autoscaler (HPA): by exposing rich metrics to the HPA controller, it lets the HPA scale Deployments between one and n replicas (1 ↔ n).
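To make the two phases concrete, here is a rough bash sketch of the replica count they produce. The 1 ↔ n part uses the scaling rule documented for the Kubernetes HPA, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric); the 0 ↔ 1 part models KEDA activating or idling the workload. The helper name `desired_replicas` and its argument order are hypothetical, and the HPA tolerance, stabilization windows and KEDA's cooldown period are deliberately left out.

```bash
#!/usr/bin/env bash
# Sketch: replica count under KEDA (0 <-> 1) plus HPA (1 <-> n).
# desired_replicas is a hypothetical helper; inputs are integers (e.g. CPU in %).
# HPA tolerance, stabilization windows and KEDA's cooldownPeriod are ignored.

# Usage: desired_replicas CURRENT METRIC TARGET MIN MAX ACTIVE
#   ACTIVE is 1 if any KEDA trigger is active, 0 otherwise.
desired_replicas() {
  local current=$1 metric=$2 target=$3 min=$4 max=$5 active=$6

  # KEDA phase: no active trigger and scale-to-zero allowed -> 0 replicas.
  if [ "$active" -eq 0 ] && [ "$min" -eq 0 ]; then
    echo 0
    return
  fi

  # KEDA phase: activation from zero -> 1 replica; the HPA takes over afterwards.
  if [ "$current" -eq 0 ]; then
    echo 1
    return
  fi

  # HPA phase: ceil(current * metric / target) with integer arithmetic.
  local desired=$(( (current * metric + target - 1) / target ))

  # Clamp to [max(min, 1), max]; the HPA normally does not go below 1.
  local floor=$(( min > 1 ? min : 1 ))
  if [ "$desired" -lt "$floor" ]; then desired=$floor; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}
```

For example, `desired_replicas 2 90 50 1 10 1` models two pods at 90% CPU against a 50% target, which gives ceil(3.6) = 4 replicas.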

A function can be any custom container that responds to HTTP requests. We have provided a demo and a tutorial in the repository below.
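The build-and-push commands that follow spell out the registry host twice, once inside `FC_DEMO_IMAGE` and once in `docker login`, and the two have to agree for the push to be authorized. A small bash sketch can derive one from the other. It assumes the usual Docker reference rule (the first path component is a registry host only if it contains a `.` or `:` or equals `localhost`, otherwise Docker Hub is implied); `registry_host` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: derive the registry host from a full image reference so that
# `docker login` and `docker push` target the same registry.
# registry_host is a hypothetical helper, not part of the demo repository.

registry_host() {
  local image=$1
  # No "/" at all (e.g. "nginx:1.25"): a Docker Hub short name.
  if [[ $image != */* ]]; then
    echo "docker.io"
    return
  fi
  local first=${image%%/*}   # text before the first "/"
  # The first component is a registry host only if it looks like one.
  case "$first" in
    *.*|*:*|localhost) echo "$first" ;;
    *) echo "docker.io" ;;
  esac
}
```

With the image name used in this tutorial, `docker login "$(registry_host "${FC_DEMO_IMAGE}")"` logs in to `registry.cn-shenzhen.aliyuncs.com`.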

```bash
git clone git@github.com:awesome-fc/fc-kubernetes-keda-example.git
cd fc-kubernetes-keda-example

# Customize your own image name, e.g. registry.cn-shenzhen.aliyuncs.com/my-fc-demo/java-springboot:1.0
export FC_DEMO_IMAGE="registry.cn-shenzhen.aliyuncs.com/my-fc-demo/java-springboot:1.0"
docker build -t "${FC_DEMO_IMAGE}" .

# Log in before pushing. Replace the registry with your own ACR registry,
# e.g. registry.cn-shenzhen.aliyuncs.com.
# You can also push the image to your own dedicated registry;
# just make sure your Kubernetes cluster can pull from it.
docker login registry.cn-shenzhen.aliyuncs.com

# Push the image
docker push "${FC_DEMO_IMAGE}"
```

Next, we create a stateless, horizontally scalable Deployment and expose it to the Internet through a Service.
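The repository's `deploy_to_kubernetes.sh` is not reproduced in this post. Assuming it creates a plain Deployment plus a Service of type `LoadBalancer` (which would match the external IP used for verification below), a hypothetical bash sketch that prints equivalent manifests might look like this. The function name `render_demo_manifests`, the single starting replica and the `250m` CPU request are illustrative assumptions, not values taken from the repository.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical, not the repository's script): print a Deployment and
# a LoadBalancer Service for the demo image. Nothing is applied here.

# Usage: render_demo_manifests NAME IMAGE CONTAINER_PORT SERVICE_PORT
render_demo_manifests() {
  local name=$1 image=$2 container_port=$3 service_port=$4
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
        - name: ${name}
          image: ${image}
          ports:
            - containerPort: ${container_port}
          resources:
            # A CPU request is needed for CPU-utilization-based scaling later.
            requests:
              cpu: 250m
---
apiVersion: v1
kind: Service
metadata:
  name: ${name}-svc
spec:
  type: LoadBalancer
  selector:
    app: ${name}
  ports:
    - port: ${service_port}
      targetPort: ${container_port}
EOF
}
```

With the variables defined below, `render_demo_manifests "${DEPLOYMENT_NAME}" "${FC_DEMO_IMAGE}" "${CONTAINER_PORT}" "${SERVICE_PORT}" | kubectl apply -f -` would create both objects; the `-svc` suffix mirrors `SERVICE_NAME`.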

```bash
export DEPLOYMENT_NAME=demo-java-springboot  # Customize your own deployment name
export CONTAINER_PORT=8080                   # In this case, the container port must be 8080
export SERVICE_PORT=80                       # Customize the service port, e.g. 80
export SERVICE_NAME=${DEPLOYMENT_NAME}-svc

# Create the Deployment and expose it through a Service.
# WARNING: exposing the Service to the Internet will incur some cost.
/bin/bash ./hack/deploy_to_kubernetes.sh

# Verify that your deployment is available.
# EXTERNAL-IP is the 4th column of `kubectl get svc`; it may show <pending> for a while.
curl -L "http://$(kubectl get svc "${SERVICE_NAME}" --no-headers | awk '{print $4}'):${SERVICE_PORT}/2016-08-15/proxy/CustomContainerDemo/java-springboot-http/"
```

The KEDA community provides a wide range of scalers, and you can extend KEDA further by implementing its scaler interface.

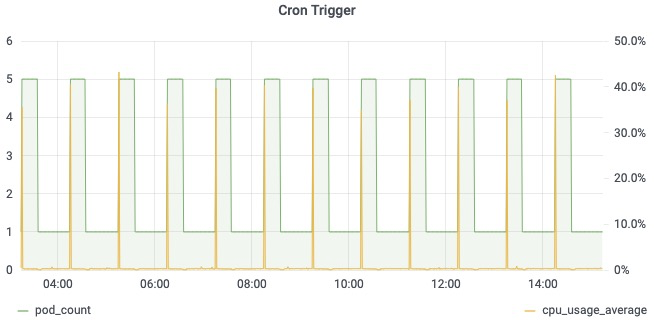

In this section, we use the cron trigger and the CPU trigger as examples.
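The helper scripts in the repository are not reproduced in this post. As a rough sketch of what `create_cron_scaleobject.sh` might apply, the bash function below prints a KEDA `ScaledObject` whose `cron` trigger asks for 5 replicas from minute 15 to minute 30 of every hour, using the cron scaler's `timezone`, `start`, `end` and `desiredReplicas` fields. The function name, the `Asia/Shanghai` default time zone and the `minReplicaCount`/`maxReplicaCount` values are assumptions; setting `minReplicaCount: 0` would instead let the Deployment scale to zero outside the window.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical, not the repository's script): print a KEDA ScaledObject
# with a cron trigger: 5 replicas from minute 15 to minute 30 of every hour.

# Usage: render_cron_scaledobject NAME DEPLOYMENT [TIMEZONE]
render_cron_scaledobject() {
  local name=$1 deployment=$2 timezone=${3:-Asia/Shanghai}
  cat <<EOF
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ${name}
spec:
  scaleTargetRef:
    name: ${deployment}
  minReplicaCount: 1
  maxReplicaCount: 10
  triggers:
    - type: cron
      metadata:
        timezone: ${timezone}
        start: "15 * * * *"
        end: "30 * * * *"
        desiredReplicas: "5"
EOF
}
```

Piping `render_cron_scaledobject "${SCALED_OBJECT_NAME}" "${DEPLOYMENT_NAME}"` into `kubectl apply -f -` would create the object, assuming KEDA is already installed in the cluster.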

```bash
export SCALED_OBJECT_NAME=cron-scaled-obj  # Customize your own ScaledObject name

# Create a ScaledObject with a cron trigger
/bin/bash ./hack/create_cron_scaleobject.sh

## The Deployment will have 5 replicas between minute 15 and minute 30 of every hour
kubectl get deployments.apps "${DEPLOYMENT_NAME}"
```

The cron trigger works as shown below.

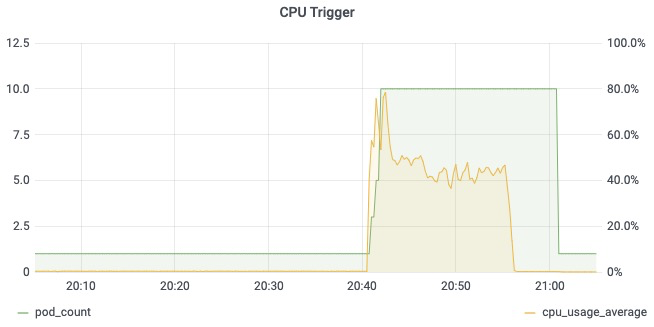
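The repository's `create_cpu_scaledobject.sh` is not reproduced in this post. A rough bash sketch of an equivalent `ScaledObject` with a `cpu` trigger follows. It uses the trigger-level `metricType: Utilization` form with the target percentage in `metadata.value` (older KEDA releases put `type: Utilization` under `metadata` instead), and, as we read the KEDA documentation, the CPU scaler cannot scale to zero on its own, so `minReplicaCount` stays at 1. Utilization is measured against the pods' CPU requests, so the Deployment must set them. The function name and the default values are hypothetical. KEDA also expects a single `ScaledObject` per workload, so in this sketch the cron object would be deleted first or both triggers combined in one object.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical, not the repository's script): print a KEDA ScaledObject
# that scales a Deployment on average CPU utilization.

# Usage: render_cpu_scaledobject NAME DEPLOYMENT [TARGET_PERCENT] [MAX_REPLICAS]
render_cpu_scaledobject() {
  local name=$1 deployment=$2 target_percent=${3:-50} max_replicas=${4:-10}
  cat <<EOF
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ${name}
spec:
  scaleTargetRef:
    name: ${deployment}
  # The CPU scaler does not scale to zero by itself, so keep at least 1 replica.
  minReplicaCount: 1
  maxReplicaCount: ${max_replicas}
  triggers:
    - type: cpu
      metricType: Utilization
      metadata:
        value: "${target_percent}"
EOF
}
```

Under this sketch, the "limit" that the pod count reaches during the stress test is `maxReplicaCount`.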

```bash
# Create a ScaledObject with a CPU trigger
/bin/bash ./hack/create_cpu_scaledobject.sh

## Put some stress on the Deployment: about 30 QPS for 120 seconds.
## Send both stdout and stderr to /dev/null and run in the background.
/bin/bash ./hack/put_stress_on_deployment.sh > /dev/null 2>&1 &

## CPU usage of all pods should increase,
## and the pod count will reach the limit after a while
kubectl top pod | grep "${DEPLOYMENT_NAME}"
```

The CPU trigger works as shown below.
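The stress script itself is not shown in this post. If you want to generate similar load by hand, a minimal bash sketch of a fixed-rate load generator is below. It assumes `curl` is available, fires each second's requests in a burst rather than spacing them evenly, and prints how many requests it started; `put_stress` is a hypothetical name. Dedicated load-testing tools give much finer control over the request rate.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical, not the repository's script): send about QPS requests
# per second to URL for DURATION seconds, then print how many were started.
# Response bodies and errors are discarded.

# Usage: put_stress URL QPS DURATION_SECONDS
put_stress() {
  local url=$1 qps=$2 duration=$3
  local sent=0 second i
  for (( second = 0; second < duration; second++ )); do
    # Fire one second's worth of requests concurrently.
    for (( i = 0; i < qps; i++ )); do
      curl -s -o /dev/null --max-time 2 "$url" &
      sent=$(( sent + 1 ))
    done
    sleep 1
  done
  wait   # let in-flight requests finish before reporting
  echo "$sent"
}
```

To mirror the tutorial's 30 QPS for 120 seconds, you could run `put_stress "http://<EXTERNAL-IP>:${SERVICE_PORT}/2016-08-15/proxy/CustomContainerDemo/java-springboot-http/" 30 120 > /dev/null 2>&1 &`, substituting the Service's external IP.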

Alibaba Cloud functions can be deployed virtually anywhere: in Docker-only environments, in Kubernetes, and on other cloud providers with standard infrastructure. Combined with KEDA, functions in Kubernetes have great potential in hybrid cloud scenarios for addressing security, cost, stability, and scalability concerns. The Alibaba Cloud Function Compute team will continue working on cloud-native computing to offer our customers value beyond computing.
